Introductory course on machine learning, pattern recognition, neural nets,
and statistical modeling for undergraduates.

Instructor: Yann LeCun, 715 Broadway, Room 1220, x83283, yann [ a t ] cs.nyu.edu (note: new room number).

Teaching Assistant: Raia Hadsell, 715 Broadway, 10th floor, raia [ a t ] cs.nyu.edu.

Classes: Tuesdays and Thursdays, 3:30-4:45 PM, Room 102, Warren Weaver Hall.

Computer Lab: Room 512, Warren Weaver Hall.

Office Hours for Prof. LeCun: Wednesdays 2:00-4:00 PM

Office Hours for Raia: TBA

Click here for schedule and course material >>>

This course will cover a wide variety of topics in machine learning,

pattern recognition, statistical modeling, and neural computation.

The course will cover the mathematical methods, theoretical

aspects, algorithms, implementation issues, and applications.

Machine Learning and Pattern Recognition methods are at the core of

many recent advances in "intelligent computing". Current applications

include machine perception (vision, audition, speech recognition),

control (process control, robotics), data mining, time-series

prediction (e.g. in finance), natural language processing, text mining

and text classification, bio-informatics, neural modeling,

computational models of biological processes, and many other areas.

| Who Can Take This Course? |

This course can be useful to all students who want to use or

develop statistical modeling methods. This includes students in CS

(AI, Vision, Graphics), Math (System Modeling), Neuroscience (Computational

Neuroscience, Brain Imaging), Finance (Financial modeling and

prediction), Psychology (Vision), Linguistics, Biology (Computational

Biology, Genomics, Bio-informatics), and Medicine (Bio-Statistics,

Epidemiology).

The only formal pre-requisites are familiarity with computer

programming and linear algebra, but the course relies heavily on such

mathematical tools as probability and statistics, multi-variate

calculus, and function optimization. The basic mathematical

concepts will be introduced when needed, but the students will be

expected to assimilate a non-trivial number of new mathematical

concepts in a fairly short time.

Therefore, the course is primarily for students at the senior and

junior levels.

The topics studied in the course include:

- the basics of inductive inference, learning, and generalization.
- linear classifiers: Perceptron, LMS, logistic regression.
- non-linear classifiers with linear parameterizations: basis-function methods, boosting, Support Vector Machines.
- multilayer neural networks, backpropagation.
- heterogeneous learning systems.
- energy-based models, loss functions.
- optimization methods in learning: gradient-based methods, second-order methods.
- probabilistic models, Bayesian methods, the Expectation-Maximization algorithm.
- graph-based models for sequences: Hidden Markov Models, finite-state transducers, recurrent networks.
- unsupervised learning: density estimation, clustering, and dimensionality reduction methods.
- introduction to graphical models and factor graphs.
- approximate inference, sampling.
- learning theory, the bias-variance dilemma, regularization, model selection.
- applications in computer vision, handwriting recognition, speech recognition, robotics, natural language processing, financial forecasting, biological modeling...

By the end of the course, students will be able to understand,

implement, and apply all the major machine learning methods.
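To give a flavor of the first family of methods in the topic list (linear classifiers), here is a minimal sketch of the classic Perceptron learning rule in plain Python. It is only an illustration and not course material: the function names `train_perceptron` and `predict`, the learning rate, the epoch limit, and the toy data set (logical AND) are all assumptions made for this example.

```python
def train_perceptron(samples, labels, epochs=100, lr=1.0):
    """Train a perceptron on samples with labels in {-1, +1}.

    Returns the weight vector and bias. Stops early once a full pass
    over the data produces no mistakes.
    """
    w = [0.0] * len(samples[0])
    b = 0.0
    for _ in range(epochs):
        mistakes = 0
        for x, y in zip(samples, labels):
            activation = sum(wi * xi for wi, xi in zip(w, x)) + b
            # A sample is misclassified (or on the boundary) when
            # the label and the activation disagree in sign.
            if y * activation <= 0:
                w = [wi + lr * y * xi for wi, xi in zip(w, x)]
                b += lr * y
                mistakes += 1
        if mistakes == 0:
            break
    return w, b


def predict(w, b, x):
    """Classify x as +1 or -1 using the learned hyperplane."""
    return 1 if sum(wi * xi for wi, xi in zip(w, x)) + b > 0 else -1


# Hypothetical toy data: logical AND, which is linearly separable.
X = [(0, 0), (0, 1), (1, 0), (1, 1)]
Y = [-1, -1, -1, 1]

w, b = train_perceptron(X, Y)
predictions = [predict(w, b, x) for x in X]
print(predictions == Y)
```

Because the toy data is linearly separable, the update rule stops making mistakes after a finite number of passes; on non-separable data the loop would simply run until the epoch limit.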

This course will be an adapted version of the graduate course
G22-3033-002 taught in Fall 2004. Please visit the site
of the Fall 2004 graduate-level version of this course to have a look at the
schedule and source material.

The best way (some would say the only way) to understand an algorithm

is to implement it and apply it. Building working systems is also a

lot more fun, more creative, and more relevant than pen-and-paper problems.

Therefore, students will be evaluated primarily on programming projects
assigned on a two-week cycle. There will also be a final exam and a final
project.

Linear algebra and good programming skills are the only formal

pre-requisites, but the course will use such mathematical concepts

as vector calculus (multi-dimensional surfaces, gradients, Jacobians),

elementary statistics and probability theory (Bayes rule,

multi-variate Gaussian).

Good programming ability is a must: many assignments will
consist of implementing algorithms studied in class.
For most programming assignments, skeleton code
in the Lush language will be provided.
Lush is an interpreted language that makes it easy
to quickly implement numerical algorithms.
The course will include a short tutorial on Lush.

Lush can be downloaded and installed on Linux,

Mac, and Windows (under Cygwin).

See Chris Poultney's

notes on installing Lush under Cygwin.

Lush is available on the CIMS Sun

workstations.

Programming projects may be implemented in any language (C, C++,
Java, Matlab, Lisp, Python, ...), but the use of a high-level
interpreted language with good numerical support and good support
for vector/matrix algebra is highly recommended (Lush, Matlab,
Octave, ...). Some assignments require the use of an object-oriented
language.

Register for the course's mailing list.

Richard O. Duda, Peter E. Hart, David G. Stork: "Pattern Classification"

Wiley-Interscience; 2nd edition, October 2000.

I will not follow this book very closely. In particular, much of the

material covered in the second half of the course cannot be found in

the above book. I will refer to research papers and lecture notes

for those topics.

Either one of the following books is also recommended, but not

absolutely required (you can get a copy from the library):

- T. Hastie, R. Tibshirani, and J. Friedman: "Elements of Statistical Learning", Springer-Verlag, 2001.
- C. Bishop: "Neural Networks for Pattern Recognition", Oxford University Press, 1996. [quite good, if a little dated].

Other Books of Interest

- S. Haykin: "Neural Networks, a comprehensive foundation", Prentice Hall, 1999 (second edition).

- Tom Mitchell: "Machine Learning", McGraw Hill, 1997.

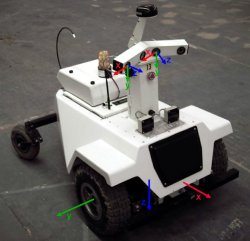

| Machine Learning Research at NYU |

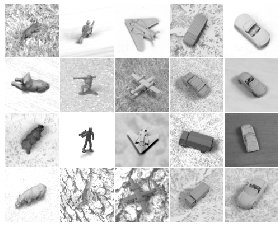

Please have a look at the research project page of the
Computational and Biological Learning Lab for a few examples
of machine learning research at NYU.

There are opportunities for undergraduate research projects.

Contact Prof. LeCun for details.

Code

- Lush: A simple language for quick

implementation of, and experimentation with, numerical algorithms

(for Linux, Mac, and Windows/Cygwin). Many algorithms described in this

course are implemented in the Lush library. Lush is available on the

department's Sun machines that are freely accessible to students

in the class (WWH Room 512).

See Chris Poultney's

notes on installing Lush under Cygwin.

- Torch: A C++ library for machine learning.

Papers

Some of these papers are available in the DjVu format.

The viewer/plugins for Windows, Linux, Mac, and various Unix flavors are

available here.

Many papers are available from Prof. LeCun's publications page.

Here are a few papers that are relevant to the course.

- Y. LeCun, L. Bottou, Y. Bengio, and P. Haffner, "Gradient-Based Learning Applied to Document Recognition," Proceedings of the IEEE, vol. 86, no. 11, pp. 2278-2324, Nov. 1998. [PS.GZ] [DjVu]

- Y. LeCun, L. Bottou, G. Orr, and K. Muller, "Efficient BackProp," in Neural Networks: Tricks of the Trade (G. Orr and K. Muller, eds.), 1998. [PS.GZ] [DjVu]

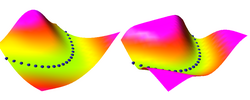

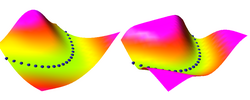

- P. Simard, Y. LeCun, J. Denker, and B. Victorri, "Transformation Invariance in Pattern Recognition, Tangent Distance and Tangent Propagation," in Neural Networks: Tricks of the Trade (G. Orr and K. Muller, eds.), 1998. [PS.GZ] [DjVu]

Publications, Journals

Conference Sites

Datasets

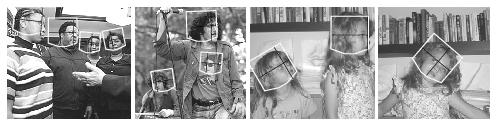

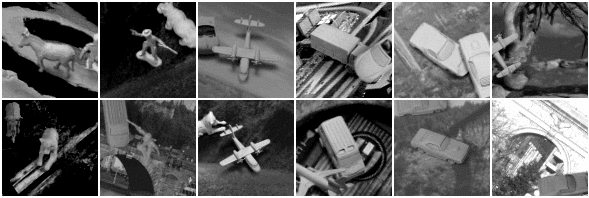

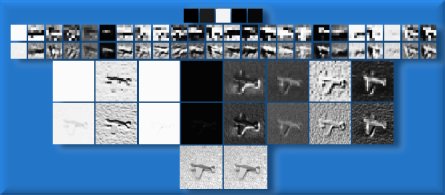

| Demos and Pretty Pictures |

More demos are available here.

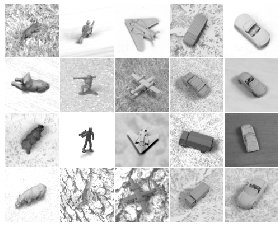

Object Recognition with Convolutional Neural Nets
