
G22-3033-014, Spring 2004:

Machine Learning and Pattern Recognition



Graduate course on machine learning, pattern recognition, neural nets, and statistical modeling.

Instructor: Yann LeCun, 715 Broadway, Room 706, x83283, yann [ a t ] cs.nyu.edu

Teaching Assistant: Fu Jie Huang, 715 Broadway, Room 705, jhuangfu [ a t ] cs.nyu.edu

Classes: Wednesdays 5:00-6:50 PM, Room 101, Warren Weaver Hall

Office Hours for Prof. LeCun: Thursdays 2:00-4:00 PM

Office Hours for TA Fu Jie Huang: Tuesdays 2:00-4:00 PM

This course will cover a wide variety of topics in machine learning, pattern recognition, statistical modeling, and neural computation. The course will cover the mathematical methods and theoretical aspects, but will primarily focus on algorithmic and practical issues. Machine Learning and Pattern Recognition methods are at the core of many recent advances in "intelligent computing". Current applications include machine perception (vision, audition), control (process control, robotics), data mining, time-series prediction (e.g. in finance), natural language processing, text mining and text classification, bio-informatics and computational models of biological processes, and many other areas. The topics studied in the course include:

Prerequisites: linear algebra, vector calculus, elementary statistics, and probability theory. Good programming ability is a must: many assignments will involve implementing algorithms studied in class. The course will include a short tutorial on the Lush language, a simple interpreted language for numerical applications. Programming projects may be implemented in any language (C, C++, Java, Matlab, Lisp, Python, ...), but the use of a high-level interpreted language with good numerical support and good support for vector/matrix algebra (Lush, Matlab, Octave, ...) is highly recommended.

Register for the course's mailing list.

T. Hastie, R. Tibshirani, and J. Friedman: "The Elements of Statistical Learning", Springer-Verlag, 2001. I will not follow this book very closely. In particular, much of the material covered in the second half of the course cannot be found in it; for those topics I will refer to research papers and lecture notes. Either of the following books is also recommended, but not absolutely required (you can get a copy from the library):

Other Books of Interest

Code

Lush is installed on the department's PCs. It will soon be available on the Sun network as well.

Papers

Some of these papers are available in the DjVu format. Viewers and plugins for Windows, Linux, Mac, and various Unix flavors are available here.

Publications, Journals

Conference Sites

Datasets

Demo of Convolutional Nets for handwriting recognition. More demos are available here.

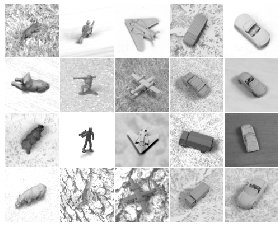

Object Recognition with Convolutional Neural Nets
