DAVE: Autonomous Off-Road Vehicle Control using End-to-End Learning

The purpose of the DAVE project was to serve as a proof of concept in preparation for the LAGR project ("Learning Applied to Ground Robots"), sponsored by the US government. The success of the DAVE project contributed to the decision to launch the LAGR project, which started in December 2004.

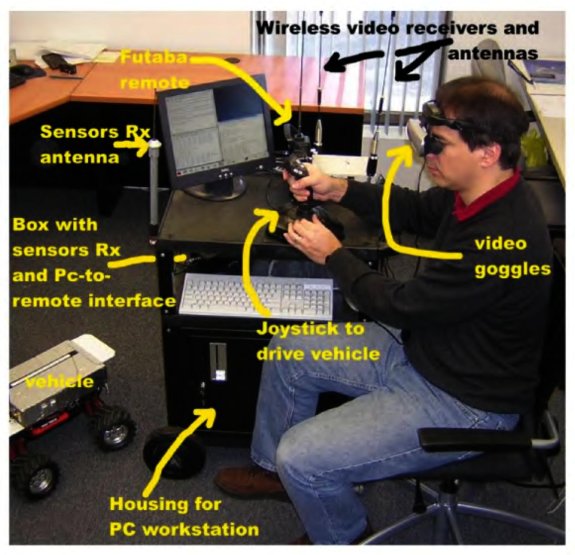

We built a small off-road robot that uses an end-to-end learning system to avoid obstacles solely from visual input. The DAVE robot has two cameras with analog video transmitters. The video is transmitted to a remote computer that collects the data, runs the automatic driving system, and controls the robot by radio. A convolutional network takes the left and right camera images (YUV components) and is trained to directly predict the steering angle chosen by a human driver.

Several hours of binocular video, in the form of 1,500 short video sequences, were collected together with the steering angle of a human driver who navigated the robot around obstacles in a wide variety of outdoor settings. From these sequences, roughly 95,000 frames were selected for training, and 31,800 frames (from different sequences) for independent testing. The convolutional network was trained end-to-end in supervised mode to map raw YUV image pairs to the steering angles provided by the human driver. The learning procedure converged after 11 passes through the training set, which took approximately 4 days of CPU time on a 3.0 GHz Xeon machine running Red Hat Linux 8.

The input to the network was a stereo pair of images from the left and right cameras in YUV (luminance/chrominance) representation, at a resolution of 149 by 58 pixels. The convolutional network had a total of 3.15 million connections and 71,900 trainable parameters. The entire software system was implemented in Lush, a LISP-like language. Once trained, the driving system runs at roughly 10 frames per second on a 1.4 GHz AMD Athlon processor.
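The paragraphs above describe the network only by its input (a stereo pair of 149 by 58 YUV images), its output (a steering angle), its size, and the fact that it was trained end-to-end on human steering. The sketch below is a hypothetical, much smaller stand-in written in plain Python, meant only to illustrate that idea: the Y, U and V planes of the left and right images are stacked into six input channels, one convolution kernel is shared across all image positions (weight sharing is why a convolutional net can have far more connections than trainable parameters), and a linear readout produces a single steering angle. The single layer, the 3 by 3 kernel, the tanh nonlinearity, the squared-error loss, plain SGD and the names `stack_stereo` and `ToySteeringConvNet` are all assumptions for illustration, not details of the DAVE network.

```python
import math
import random


def stack_stereo(left_yuv, right_yuv):
    """Stack the left (Y, U, V) and right (Y, U, V) planes into 6 input channels."""
    return list(left_yuv) + list(right_yuv)


class ToySteeringConvNet:
    """Hypothetical toy model: one shared-kernel conv layer + linear readout.

    NOT the DAVE architecture; it only illustrates end-to-end regression
    from raw stereo YUV planes to a single steering angle.
    """

    def __init__(self, channels, height, width, k=3, seed=0):
        rng = random.Random(seed)
        self.c, self.k = channels, k
        self.oh, self.ow = height - k + 1, width - k + 1
        # One k x k kernel per input channel, shared across all positions.
        self.kernel = [[[rng.uniform(-0.1, 0.1) for _ in range(k)]
                        for _ in range(k)] for _ in range(channels)]
        self.bias = 0.0
        # Linear readout from the feature map to the steering angle.
        self.out_w = [[rng.uniform(-0.1, 0.1) for _ in range(self.ow)]
                      for _ in range(self.oh)]
        self.out_b = 0.0

    def forward(self, x):
        """Return (steering angle, tanh feature map) for a 6-channel input."""
        fmap = [[0.0] * self.ow for _ in range(self.oh)]
        for i in range(self.oh):
            for j in range(self.ow):
                s = self.bias
                for c in range(self.c):
                    for a in range(self.k):
                        for b in range(self.k):
                            s += self.kernel[c][a][b] * x[c][i + a][j + b]
                fmap[i][j] = math.tanh(s)
        y = self.out_b + sum(self.out_w[i][j] * fmap[i][j]
                             for i in range(self.oh) for j in range(self.ow))
        return y, fmap

    def train_step(self, x, target, lr=0.01):
        """One SGD step on the assumed loss 0.5 * (y - target) ** 2."""
        y, fmap = self.forward(x)
        err = y - target
        # Backpropagate through the readout and the tanh (before updating out_w).
        grad_pre = [[0.0] * self.ow for _ in range(self.oh)]
        for i in range(self.oh):
            for j in range(self.ow):
                grad_pre[i][j] = err * self.out_w[i][j] * (1.0 - fmap[i][j] ** 2)
                self.out_w[i][j] -= lr * err * fmap[i][j]
        self.out_b -= lr * err
        # Shared weights: each kernel gradient sums over every output position.
        for c in range(self.c):
            for a in range(self.k):
                for b in range(self.k):
                    g = sum(grad_pre[i][j] * x[c][i + a][j + b]
                            for i in range(self.oh) for j in range(self.ow))
                    self.kernel[c][a][b] -= lr * g
        self.bias -= lr * sum(sum(row) for row in grad_pre)
        return 0.5 * err * err


if __name__ == "__main__":
    # Tiny random "stereo frame": Y, U, V planes per camera, 5 x 6 pixels each.
    rng = random.Random(1)

    def plane():
        return [[rng.random() for _ in range(6)] for _ in range(5)]

    x = stack_stereo([plane(), plane(), plane()], [plane(), plane(), plane()])
    net = ToySteeringConvNet(channels=6, height=5, width=6)
    for step in range(201):
        loss = net.train_step(x, target=0.3)  # 0.3: made-up human steering angle
        if step % 50 == 0:
            print(step, round(loss, 6))
```

In this toy, a 5 by 6 input gives a 3 by 4 feature map, so the convolution layer has 12 × 54 = 648 connections but only 55 trainable parameters (54 kernel weights plus a bias); the same sharing, applied over many layers and a 149 by 58 input, is what lets a real network reach millions of connections with tens of thousands of parameters. A real system would train over many frames and sequences rather than one synthetic frame.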

The DAVE robot

Videos (note: these videos are all compatible with mplayer, Kaffeine, and other media players on Linux):

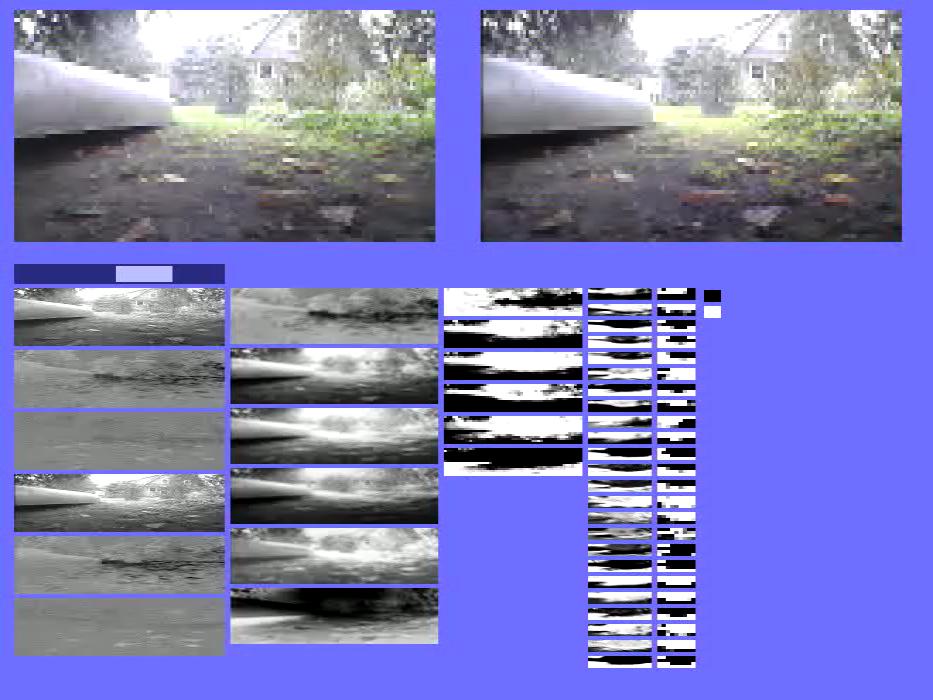

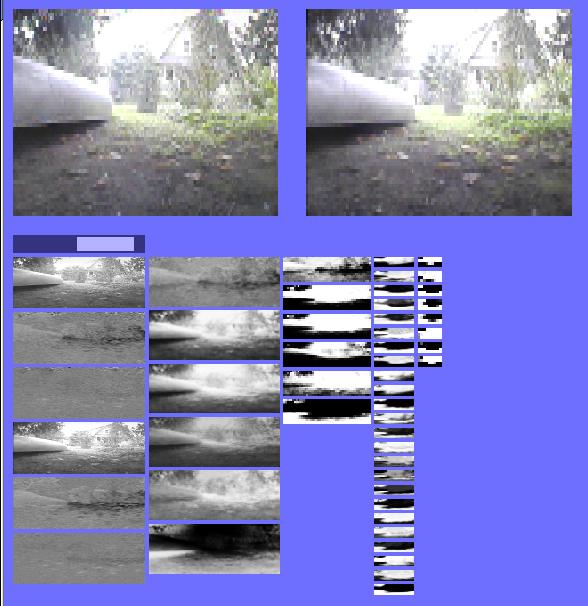

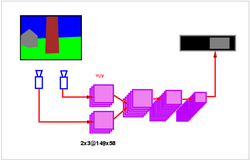

The picture below shows the left and right images, the steering angle predicted by the system, and the states of the various layers of the convolutional network.

The data collection setup.

The pictures below show samples of input images, together with the steering angles produced by the human driver and by the DAVE system.
