NYU Depth V1

Nathan Silberman, Rob Fergus

Indoor Scene Segmentation using a Structured Light Sensor

ICCV 2011 Workshop on 3D Representation and Recognition

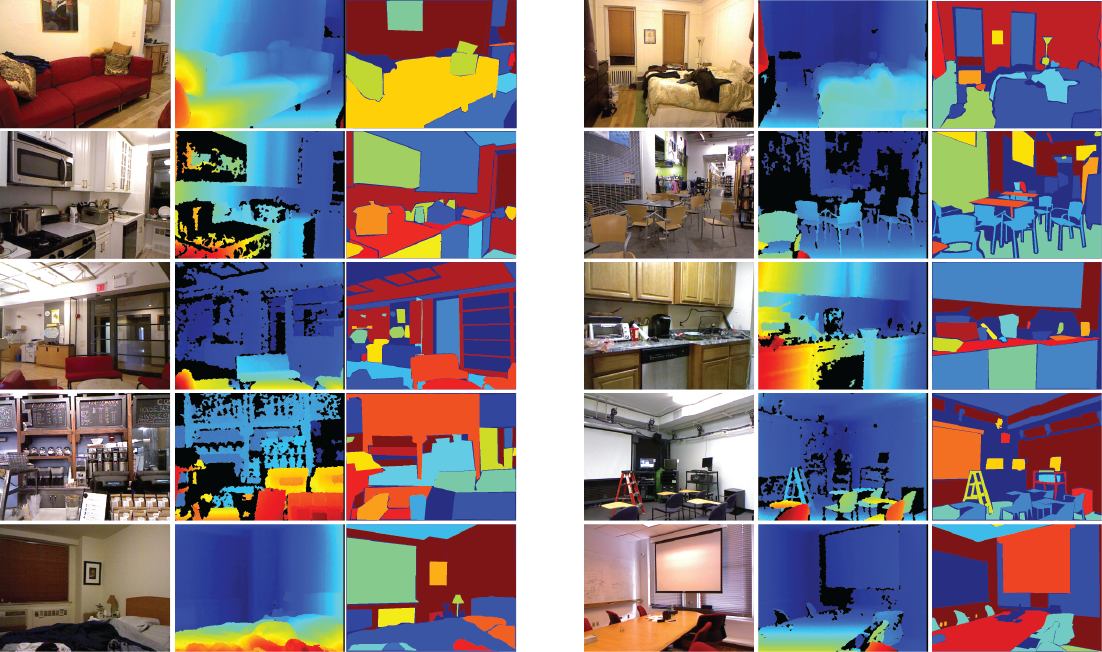

Samples of the RGB image, the raw depth image, and the class labels from the dataset.

Overview

The NYU-Depth data set consists of video sequences from a variety of indoor scenes, recorded by both the RGB and depth cameras of the Microsoft Kinect. The dataset has several components:

- Labeled: A subset of the video data accompanied by dense multi-class labels. This data has also been preprocessed to fill in missing depth values.

- Raw: The raw RGB, depth and accelerometer data as provided by the Kinect.

- Toolbox: Useful functions for manipulating the data and labels.

- The train/test splits used for evaluation.

Downloads

- Labeled dataset (~4 GB)

- Raw dataset (~90 GB)

- Toolbox

- Original raw RGB and Depth filenames for each of the labeled images

- Train/test splits for multi-class segmentation

- Train/test splits for classification

Labeled Dataset

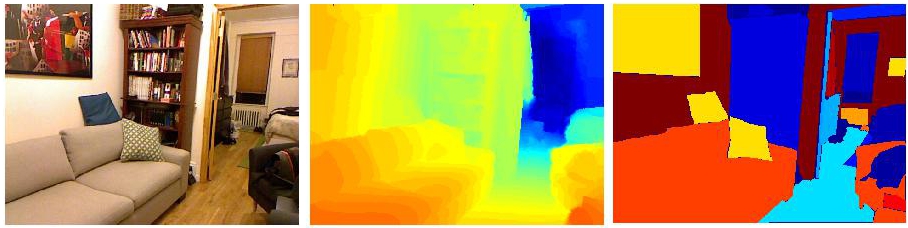

Output from the RGB camera (left), preprocessed depth (center) and a set of labels (right) for the image.

The labeled dataset is a subset of the Raw Dataset. It consists of pairs of RGB and depth frames that have been synchronized and annotated with dense labels for every image. In addition to the projected depth maps, we have included a set of preprocessed depth maps whose missing values have been filled in using the colorization scheme of Levin et al. Unlike the Raw dataset, the labeled dataset is provided as a MATLAB .mat file with the following variables:

- accelData – Nx4 matrix of accelerometer values recorded when each frame was taken. The columns contain the roll, yaw, pitch and tilt angle of the device.

- depths – HxWxN matrix of depth maps, where H and W are the height and width, respectively, and N is the number of images. The depth values are in meters.

- images – HxWx3xN matrix of RGB images where H and W are the height and width, respectively, and N is the number of images.

- labels – HxWxN matrix of label masks, where H and W are the height and width, respectively, and N is the number of images. The labels range from 1 to C, where C is the total number of classes. If a pixel’s label value is 0, then that pixel is ‘unlabeled’.

- names – Cx1 cell array of the English names of each class.

- namesToIds – map from English label names to IDs (with C key-value pairs).

- rawDepths – HxWxN matrix of depth maps, where H and W are the height and width, respectively, and N is the number of images. These depth maps are the raw output from the Kinect.

- scenes – Nx1 cell array of the name of the scene from which each image was taken.
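The label convention above (0 for unlabeled, 1 to C indexing into names) can be illustrated with a short Python sketch that computes the fraction of labeled pixels belonging to each class in one image. It assumes the HxW label mask for a single image has already been read from the .mat file with a MAT-file reader (not shown), and the tiny mask and class names below are hypothetical. Note that label k corresponds to names[k - 1] in Python because the MATLAB cell array is 1-indexed.

```python
from collections import Counter

def class_pixel_fractions(label_mask, names):
    """Return {class name: fraction of labeled pixels} for one HxW label mask.

    Pixels with value 0 are 'unlabeled' and are excluded from the total.
    Label k (1..C) maps to names[k - 1], since the MATLAB cell array is 1-indexed.
    """
    counts = Counter(v for row in label_mask for v in row if v != 0)
    total = sum(counts.values())
    if total == 0:
        return {}  # nothing labeled in this image
    return {names[k - 1]: n / total for k, n in sorted(counts.items())}

# Hypothetical 3x4 mask and class list, for illustration only.
names = ["wall", "floor", "table"]
mask = [
    [1, 1, 1, 0],
    [1, 3, 3, 0],
    [2, 2, 2, 2],
]
print(class_pixel_fractions(mask, names))
# prints {'wall': 0.4, 'floor': 0.4, 'table': 0.2}
```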

Raw Dataset

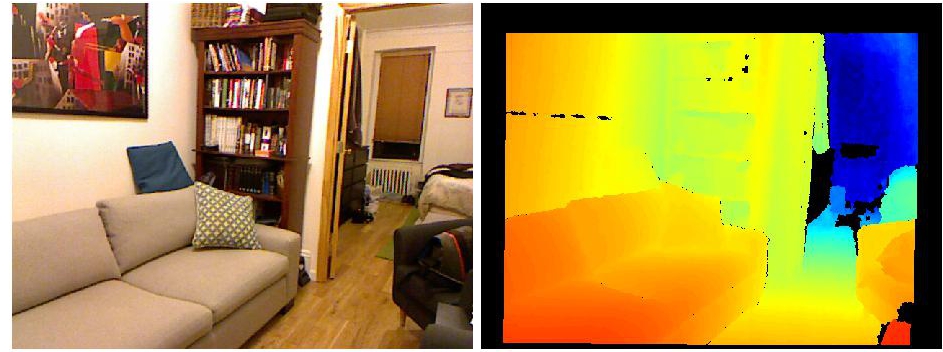

Output from the RGB camera (left) and depth camera (right). Missing values in the depth image are a result of (a) shadows caused by the disparity between the infrared emitter and camera, (b) random missing or spurious values caused by specular or low-albedo surfaces, or (c) the projection of the depth coordinate frame.

The raw dataset contains the raw image and accelerometer dumps from the Kinect. The sampling rate of the RGB and depth cameras varies over time between 20 and 30 FPS. While the frames are not synchronized, the timestamps for each of the RGB, depth and accelerometer files are included as part of each filename.
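Because the frames are not synchronized, one simple way to pair them is to match each depth frame with the RGB frame whose filename timestamp is closest. The Python sketch below does this with a binary search over sorted RGB timestamps. It assumes that the first number after the prefix is the capture time in seconds, as the example filenames suggest, and it ignores the second number, whose meaning is not documented here. This is an illustration of nearest-timestamp matching, not necessarily how get_synched_frames.m works.

```python
import bisect
import re

# Assumed filename layout: <prefix>-<seconds.micros>-<second number>.<ext>
# e.g. d-1294886362.665769-3143255701.pgm; the second number is ignored here.
FRAME_RE = re.compile(r"^([adr])-(\d+\.\d+)-(\d+)\.(dump|pgm|ppm)$")

def frame_time(filename):
    """Return (prefix, timestamp in seconds) parsed from a raw-dataset filename."""
    m = FRAME_RE.match(filename)
    if m is None:
        raise ValueError(f"unexpected filename: {filename}")
    return m.group(1), float(m.group(2))

def match_nearest(depth_files, rgb_files):
    """Pair each depth frame with the RGB frame whose timestamp is closest."""
    if not rgb_files:
        return []
    rgb = sorted(rgb_files, key=lambda f: frame_time(f)[1])
    rgb_times = [frame_time(f)[1] for f in rgb]
    pairs = []
    for d in depth_files:
        t = frame_time(d)[1]
        i = bisect.bisect_left(rgb_times, t)
        # Candidates: the RGB frames just before and just after time t.
        candidates = [j for j in (i - 1, i) if 0 <= j < len(rgb)]
        best = min(candidates, key=lambda j: abs(rgb_times[j] - t))
        pairs.append((d, rgb[best]))
    return pairs

depth = ["d-1294886362.665769-3143255701.pgm", "d-1294886362.793814-3151264321.pgm"]
rgb = ["r-1294886362.238178-3118787619.ppm", "r-1294886362.814111-3152792506.ppm"]
for d, r in match_nearest(depth, rgb):
    print(d, "->", r)
```

Matching on the nearest timestamp means one RGB frame may be paired with several depth frames (as happens with the two example depth frames here); a stricter scheme could also reject pairs whose time gap exceeds some threshold.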

The dataset is divided into different folders which correspond to each ‘scene’ being filmed, such as ‘living_room_0012’ or ‘office_0014’. The file hierarchy is structured as follows:

/

../bedroom_0001/

../bedroom_0001/a-1294886363.011060-3164794231.dump

../bedroom_0001/a-1294886363.016801-3164794231.dump

...

../bedroom_0001/d-1294886362.665769-3143255701.pgm

../bedroom_0001/d-1294886362.793814-3151264321.pgm

...

../bedroom_0001/r-1294886362.238178-3118787619.ppm

../bedroom_0001/r-1294886362.814111-3152792506.ppm

Files that begin with the prefix a- are the accelerometer dumps. These dumps are written to disk in binary and can be read with get_accel_data.mex. Files that begin with the prefix r- and d- are the frames from the RGB and depth cameras, respectively. Since no preprocessing has been performed, the raw depth images must be projected onto the RGB coordinate space in order to align the images.
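Projecting a depth pixel onto the RGB image amounts to a change of camera. The Python sketch below shows the standard pinhole-model mapping for a single pixel: back-project with the depth camera intrinsics, apply a rigid transform (R, t) into the RGB camera frame, and project with the RGB intrinsics. It ignores lens distortion, assumes the raw depth has already been converted to meters, and uses made-up calibration values; the real parameters live in get_projected_depth.m and may include additional terms, so this illustrates the geometry rather than porting that file.

```python
from dataclasses import dataclass

@dataclass
class Intrinsics:
    fx: float  # focal length in pixels (x)
    fy: float  # focal length in pixels (y)
    cx: float  # principal point (x)
    cy: float  # principal point (y)

def depth_pixel_to_rgb(u, v, z, depth_k, rgb_k, R, t):
    """Map depth pixel (u, v) with depth z (meters) to RGB pixel coordinates.

    Pinhole model without lens distortion. Returns None if the point ends up
    behind the RGB camera.
    """
    # Back-project into 3D depth-camera coordinates.
    x = (u - depth_k.cx) * z / depth_k.fx
    y = (v - depth_k.cy) * z / depth_k.fy
    p = (x, y, z)
    # Rigid transform into RGB-camera coordinates: q = R p + t.
    q = [sum(R[i][j] * p[j] for j in range(3)) + t[i] for i in range(3)]
    if q[2] <= 0:
        return None
    # Perspective projection onto the RGB image plane.
    return (rgb_k.fx * q[0] / q[2] + rgb_k.cx,
            rgb_k.fy * q[1] / q[2] + rgb_k.cy)

# Hypothetical calibration values, NOT the ones in get_projected_depth.m.
depth_k = Intrinsics(fx=580.0, fy=580.0, cx=320.0, cy=240.0)
rgb_k = Intrinsics(fx=520.0, fy=520.0, cx=320.0, cy=240.0)
R = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]  # no rotation
t = [0.025, 0.0, 0.0]  # hypothetical 2.5 cm offset along x
print(depth_pixel_to_rgb(320, 240, 2.0, depth_k, rgb_k, R, t))
```

Applying this to every valid depth pixel and writing z into the nearest RGB pixel yields a depth map in RGB coordinates; pixels that receive no depth value correspond to the projection-induced gaps described in the caption above.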

Toolbox

The MATLAB toolbox has several useful functions for handling the data. To run the unit tests, you must have MATLAB xUnit on your path.

- demo_synched_projected_frames.m – Demonstrates synchronization of the raw RGB and depth images as well as alignment of the RGB and raw depth images.

- eval_seg.m – Evaluates the predicted segmentation against the ground truth label map.

- get_projected_depth.m – Projects the raw depth image onto the RGB image plane. This file contains the calibration parameters that are specific to the Kinect used to gather the data.

- get_accel_data.m – Extracts the accelerometer data from the binary files in the raw dataset.

- get_projection_mask.m – Returns a mask that crops the image regions whose depth had to be inferred rather than directly projected from the Kinect signal.

- get_synched_frames.m – Returns a list of synchronized RGB and depth frames from a given scene in the raw dataset.

- get_train_test_split.m – Splits the data in a way that ensures that images from the same scene are not found in both train and test sets.
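The idea behind get_train_test_split.m, keeping every scene entirely on one side of the split, can be sketched in Python by assigning whole scenes rather than individual images. The greedy fill, the test_fraction parameter and the seed below are assumptions made for illustration; the toolbox may choose its split differently, and the published train/test splits listed under Downloads should be used to reproduce reported results.

```python
import random
from collections import defaultdict

def split_by_scene(scenes, test_fraction=0.5, seed=0):
    """Return (train_idx, test_idx) so that no scene appears in both sets.

    scenes[i] is the scene name of image i. Whole scenes are shuffled and
    added to the test set until it holds at least test_fraction of the images;
    the remaining scenes go to the training set.
    """
    by_scene = defaultdict(list)
    for i, s in enumerate(scenes):
        by_scene[s].append(i)
    scene_names = sorted(by_scene)  # sort first so the shuffle is reproducible
    random.Random(seed).shuffle(scene_names)
    target = test_fraction * len(scenes)
    train, test = [], []
    for name in scene_names:
        if len(test) < target:
            test.extend(by_scene[name])
        else:
            train.extend(by_scene[name])
    return sorted(train), sorted(test)

# Hypothetical per-image scene names, for illustration only.
scenes = ["bedroom_0001", "bedroom_0001", "living_room_0012",
          "office_0014", "office_0014", "office_0014"]
train, test = split_by_scene(scenes, test_fraction=0.5)
print("train:", train, "test:", test)
```

Because scenes vary in size, the resulting test fraction is only approximately the requested one; splitting at the image level would hit the target exactly but would let near-duplicate frames from the same scene leak across the split.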