G22.2110 Programming Languages

2009-10 Fall

Allan Gottlieb

Wednesday 5-6:50pm Room 109 Ciww

Start Lecture #1

Chapter 0: Administrivia

I start at 0 so that when we get to chapter 1, the numbering will

agree with the text.

0.1: Contact Information

- my-last-name AT nyu DOT edu (best method)

- http://cs.nyu.edu/~gottlieb

- 715 Broadway, Room 712

- 212 998 3344

0.2: Course Web Page

There is a web site for the course. You can find it from my home

page, which is http://cs.nyu.edu/~gottlieb

- You can also find these lecture notes on the course home page.

Please let me know if you can't find it.

- The notes are updated as bugs are found or improvements made.

- I will also produce a separate page for each lecture after the

lecture is given.

These individual pages might not get updated as quickly as the large

page.

0.3: Textbook

The course text is Scott, "Programming Language Pragmatics", Third

Edition (3e).

- We will proceed largely in order, but with some exceptions and

occasional outside sources.

- The 2e had been the primary text for this course for several

semesters.

Since the course has not changed radically, I advise looking at

the on line material for previous semesters; I certainly have.

In particular, I have adapted some material, with permission,

from Clark Barrett's notes for the 2008-09 Fall course.

Those notes were in turn influenced by Ed Osinski's notes from

2007-08 Summer.

0.4: Computer Accounts and Mailman Mailing List

- You are entitled to a computer account on one of the NYU

sun machines.

If you do not have one already, please get it asap.

- Sign up for the Mailman mailing list for the course.

You can do so by clicking

here.

- If you want to send mail just to me, use my-last-name AT nyu DOT edu not

the mailing list.

- Questions on the labs should go to the mailing list.

You may answer questions posed on the list as well.

Note that replies are sent to the list.

- I will respond to all questions; if another student has answered the

question before I get to it, I will confirm if the answer given is

correct.

- Please use proper mailing list etiquette.

- Send plain text messages rather than (or at least in

addition to) html.

- Use

Reply

to contribute to the current thread,

but NOT to start another topic.

- If quoting a previous message, trim off irrelevant parts.

- Use a descriptive Subject: field when starting a new topic.

- Do not use one message to ask two unrelated questions.

- Do NOT make the mistake of sending your

completed lab assignment to the mailing list.

This is not a joke; several students have made this mistake in

past semesters.

- As a favor to me, please do NOT

top post

.

That is, when replying, I ask that you either place your reply

after the original text or interspersed with it.

- I know there are differing opinions on top posting, but I

find it very hard to follow conversations that way.

- Exception: I realize Blackberry users

must

top post.

0.5: Grades

Grades are based on the labs and the final exam, with each

very important.

The weighting will be approximately

30%*LabAverage + 30%*MidTermExam + 40%*FinalExam

(but see homeworks below).

0.6: The Upper Left Board

I use the upper left board for lab/homework assignments and

announcements.

I should never erase that board.

Viewed as a file it is group readable (the group is those in the

room), appendable by just me, and (re-)writable by no one.

If you see me start to erase an announcement, let me know.

I try very hard to remember to write all announcements on the upper

left board and I am normally successful.

If, during class, you see that I have forgotten to record something,

please let me know.

HOWEVER, if I forgot and no one reminds me, the

assignment has still been given.

0.7: Homeworks and Labs

I make a distinction between homeworks and labs.

Labs are

- Required.

- Due several lectures later (date given on assignment).

- Graded and form part of your final grade.

- Penalized for lateness.

(added for 2009-10 spring:

The penalty is 1 point per day up to 30 days; then 2 points per day).

- Computer programs you must write.

Homeworks are

- Optional.

- Due the beginning of Next lecture or recitation

(whichever comes first).

You can bring your homework to class/recitation or can email it to me

before the class/recitation starts.

- Not accepted late.

- Mostly from the book.

- Collected and returned.

- Able to help, but not hurt, your grade.

0.7.1: Homework Numbering

Homeworks are numbered by the class in which they are assigned.

So any homework given today is homework #1.

Even if I do not give homework today, the homework assigned next

class will be homework #2.

Unless I explicitly state otherwise, all homeworks assignments can

be found in the class notes.

So the homework present in the notes for lecture #n is homework #n

(even if I inadvertently forgot to write it to the upper left

board).

0.7.2: Doing Labs on non-NYU Systems

You may solve lab assignments on any system you wish, but ...

- You are responsible for any non-nyu machine.

I extend deadlines if the nyu machines are down, not if yours are.

- Be sure to test your assignments on the nyu

systems.

In an ideal world, a program written in a high level language like

Java, C, or C++ that works on your system would also work on the

NYU system used by the grader.

Sadly, this ideal is not always achieved despite marketing claims

that it is achieved.

So, although you may develop your lab on any system, you

must ensure that it runs on the nyu system assigned to

the course.

- If somehow your assignment is misplaced by me and/or a grader,

we need a to have some timestamp ON AN NYU SYSTEM

that can be used to verify the date the lab was completed.

- When you complete a lab and have it on an nyu system, email the

lab to the grader and copy yourself on that message.

Please use one of the following two methods of mailing the lab.

- Send the mail from your CIMS account.

(Not all students have a CIMS account.)

- Use the

request receipt

feature from home.nyu.edu

or mail.nyu.edu and select the when delivered

option.

Keep the copy until you have received your grade on the

assignment.

I realize that I am being paranoid about this.

It is rare for labs to get misplaced, but they sometimes do and I

really don't want to be in the middle of an

I sent it ... I never received it

debate.

Thank you.

0.7.3: Obtaining Help with the Labs

Good methods for obtaining help include

- Asking me during office hours (see web page for my hours).

- Asking the mailing list.

- Asking another student, but ...

Your lab must be your own.

That is, each student must submit a unique lab.

Naturally, simply changing comments, variable names, etc. does

not produce a unique lab.

0.8: A Grade of Incomplete

The new rules set by GSAS state:

3.6. Incomplete Grades: An unresolved grade, I, reverts to F one

year after the beginning of the semester in which the course

was taken unless an extension of the incomplete grade has been

approved by the Vice Dean.

3.6.1. At the request of the departmental DGS and with the

approval of the course instructor, the Vice Dean will

review requests for an extension of an incomplete grade.

3.6.2. A request for an extension of incomplete must be

submitted before the end of one year from the beginning

of the semester in which the course was taken.

3.6.3. An extension of an incomplete grade may be requested for

a period of up to, but not exceeding, one year

3.6.4. Only one one-year extension of an incomplete may be granted.

3.6.5. If a student is approved for a leave of absence (See 4.4)

any time the student spends on that leave of absence will

not count toward the time allowed for completion of the

coursework.

0.9 Academic Integrity Policy

This email from the assistant director, describes the policy.

Dear faculty,

The vast majority of our students comply with the

department's academic integrity policies; see

www.cs.nyu.edu/web/Academic/Undergrad/academic_integrity.html

www.cs.nyu.edu/web/Academic/Graduate/academic_integrity.html

Unfortunately, every semester we discover incidents in

which students copy programming assignments from those of

other students, making minor modifications so that the

submitted programs are extremely similar but not identical.

To help in identifying inappropriate similarities, we

suggest that you and your TAs consider using Moss, a

system that automatically determines similarities between

programs in several languages, including C, C++, and Java.

For more information about Moss, see:

http://theory.stanford.edu/~aiken/moss/

Feel free to tell your students in advance that you will be

using this software or any other system. And please emphasize,

preferably in class, the importance of academic integrity.

Rosemary Amico

Assistant Director, Computer Science

Courant Institute of Mathematical Sciences

Chapter 1: Introduction

Brief History

Very early systems were programmed in machine language.

- Since most computers are binary oriented, early programs

consisted of large strings of ones and zeros.

Exception: The IBM 1620 (circa 1965) was a decimal machine.

- Programming in machine language is tedious and error prone.

- No 10 million line programs were written.

(Today, large programs compile into millions of lines of code.)

Next came assembly language where the programmer uses mnemonics for

the opcode, but still writes one line for every machine instruction.

Simple translators, called assemblers, translate this language into

machine code.

Assembly language, like machine language is completely non-portable.

Later assemblers supported macros, which helped the tedium, but did

not increase portability.

Today high-level languages dominate and are translated by

sophisticated software called compilers.

The 3e has much material on compilers, but I will de-emphasize that

portion since we give a course on the subject (G22-2130).

My extensive lecture notes for 2130 can be found off my home page.

1.1: The Art of Language Design

What is a Program?

At the most primitive level, a program is a sequence of characters

chosen from an alphabet.

But not all sequences are legal: there are syntactic

restrictions (e.g., identifiers in C cannot begin with a digit).

We also ascribe meaning to programs using the semantics of

the language, which further restricts the legal sequences (e.g.,

declarations must precede uses in Ada).

A Programming Language specifies what character sequences

are legal and what these sequences mean (i.e., it specifies

the syntax and semantics).

Why So Many Languages?

Desirable Traits

Why are some languages so much more popular/successful than others?

- Expressive.

Formally, essentially all programming languages are equally

expressive in that they can all compute the same class of

algorithms.

However, it is easier with some than with others.

Abstraction is often a key component in this regard.

- Short Learning Curve.

- Ease (and Ready Availability) of Implementation.

Good examples include Pascal and Java.

Bad examples include Algol-68 and Ada (initially).

- Standardization.

Requires the existence of a tight definition and standard

libraries.

- Precise Semantics.

Important for verification.

- Open Source Compiler.

- High Performance Compiler.

This refers to the performance of the compiled code.

The designers of Fortran, the first high-level language, worried

much more about compiler performance than about syntax.

- Economics, Patronage, and Inertia.

Major sponsors help.

Dod supported Ada, but nearly killed it by forbidding subsets,

which resulted in no inexpensive compilers for a long time.

1.2: The Programming Language Spectrum

Imperative vs. Declarative Languages

An imperative language programmer essentially tells the computer

exactly how to solve the problem at hand.

In contrast a declarative language is higher-level

: The

programmer describes only what is to be done.

For example, malloc/free (especially free) is imperative, but

garbage collection is normally found in declarative languages.

The Prolog example below illustrates the declarative style.

There are broad spectrum languages like Ada that try to provide

both low-level and high-level features.

By necessity, such languages are large and complex.

von Neumann: Fortran, Pascal, C, Ada 83

This is the most common programming paradigm and largely

subsumes the Object-Oriented class described next.

It is the prototypical imperative language style.

The defining characteristic is that the state of a program

(very roughly the values of the variables) changes

during execution.

Very often the change is the result of executing an assignment

statement.

Recently, the term von Neumann is used to refer to serial

execution of a program (i.e., no concurrency).

Object-Oriented: Simula 67, Smalltalk, Ada 95, Java, C#, C++

Languages that emphasize information hiding and (especially)

inheritance.

Data structures are bundled together with the operators using them.

Most are von Neumann, but pure

object-oriented languages like

Smalltalk have a different viewpoint of computation, which is viewed

as occurring at the objects themselves.

Functional (a.k.a. Applicative): Scheme, ML, Haskell

These languages are based on the lambda calculus.

They emphasize functions (without side effects) and discourage

assignment statements and other forms of mutable state.

Functions are first-class objects; new functions can be constructed

while the program is running.

This will be emphasized when we study Scheme.

Here is a taste.

(define sumtwo

(lambda (A B)

(+ A B)))

> (sumtwo 5 8)

13

(define sumthree

(lambda (A B C)

(+ (+ A B) C)))

> (sumthree 5 8 2)

15

(define oosumtwo ; "object oriented" sum

(lambda (A B)

( (lambda (X) (+ X B)) ; this is the anonymous "add B" function

A))) ; which we apply to A

> (oosumtwo 5 8) ; the anonymous "add 8" temporarily existed

13

Logic (Declarative, Constraint Based): Prolog

A program is a set of constraints and rules.

The following example is a fixed version of Figure 1.14.

gcd(A,B,G) :- A=B, G=A.

gcd(A,B,G) :- A>B, C is A-B, gcd(C,B,G)

gcd(A,B,G) :- B>A, C is B-A, gcd(C,A,G)

Homework: 1.4.

Unless otherwise stated numbered homework problems are from the

Exercises

section of the current chapter.

For example this problem can be found in section 1.8.

Scripting (Shell, Perl, Python)

Often used as glue to connect other programs together.

Mixtures

Many languages contain aspects of several of these classes.

As mentioned C++, Java, and C# are both von Neumann and

object-oriented.

The language OHaskell is object-oriented and functional.

Concurrency

Concurrency is not normally considered a separate class.

It is usually obtained via extensions to languages in the above

classes.

These extensions can be in the form of libraries.

A few languages have concurrency features, but the bulk of the

language is devoted to serial execution.

For example, threads in Java and rendezvous in Ada.

(High-Level vs Low-Level) High-Level Languages

In this course we are considering only high-level languages, i.e.,

we exclude machine and assembly languages.

However, the class of high-level languages is quite broad and some

are considered higher level than others.

Thus when comparing languages, we often call C and Fortran

low-level since the programmer has more control and must expend more

effort.

In contrast, languages like Scheme and Haskell are considered

high-level.

They require less effort for a given task but give the programmer

less control and the run-time performance is not as easy to predict.

Perhaps we should call C a low-level, high-level language, but that

suggestion is too awkward to take seriously.

There are wide spectrum

languages like Ada and C++ that

provide both low-level control when desired, in addition to

high-level features such as garbage-collection and array

manipulation.

The cost of this is a large, complex language.

Homework: Page 16 CYU (check your understanding)

#2.

Characteristics of Modern Languages

Modern general-purpose languages such as Ada, C++, and Java share

many characteristics.

- Large and complex: Hugh manuals and grammars.

- Complex type system.

- Procedures and functions.

- Object-oriented facilities.

- Abstraction mechanisms with information hiding.

- Multiple storage-allocation mechanisms.

- Support for concurrency (not in C++).

- Support for generic programming (new in Java).

- Large, standard libraries.

1.3: Why Study Programming Languages

Become Familiar with Various Idioms

Learning several languages exposes you to multiple techniques for

problem solving, some of which are idioms in the various languages.

Your exposure to the different idioms enlarges your toolbox and

increases your programming power, even if you only use a very few,

closely related, languages.

Elegance, Beauty and Coolness

The automatic pattern matching and rule application of Prolog, once

understood, is real neat.

It is certainly not something I would have thought of had I never

seen Prolog.

If you encounter problems for which Prolog is well suited and for

which a Prolog based solution is permitted, the programming effort

saved is substantial.

A very primitive form of the Prolog unification is the

automatic dependency checking, topological sorting, and evaluation

performed by spreadsheets.

I have never written a serious program using

continuation passing

, but nonetheless appreciate the elegance

and (mathematical-like) beauty the technique offers.

I hope you will too when we study Scheme.

Applying Programming Language / Compiler Techniques outside the Domain

Very few of you will write a compiler or be part of a programming

language design effort.

Nonetheless, the techniques used in these areas can be applied to

many other problems.

For example, lexing and especially parsing can be used to produce a

front end for many systems.

Brian Kernighan refers to them as little languages

; others

call them domain-specific languages

or application-specific languages

.

1.4: Compilation and Interpretation

The standard reference for compilers is the Dragon Book

now in its third incarnation (gaining an author with each new life).

My notes for our compiler course using this text can be found off my

home page (click on previous courses).

A Compiler is a translator from one language, the input

or source language, to another language, the output

or target language.

Often, but not always, the target language is an assembler language

or the machine language for a computer processor.

Note that using a compiler requires a two step process to run a

program.

- Execute the compiler (and possibly an assembler) to translate

the source program into a machine language program.

- Execute the resulting machine language program, supplying

appropriate input.

This should be compared with an interpreter, which accepts

the source language program and the appropriate

input, and itself produces the program output.

Sometimes both compilation and interpretation are used.

For example, consider typical Java implementations.

The (Java) source code is translated (i.e., compiled)

into bytecodes, the machine language for an

idealized virtual machine

, the Java Virtual Machine or JVM.

Then an interpreter of the JVM (itself normally called a JVM)

accepts the bytecodes and the appropriate input,

and produces the output.

This technique was quite popular in academia some time ago with the

Pascal programming language and P-code.

1.5: Programming Environments

The Compilation Tool Chain

This section of 3e is quite clear, but rather abbreviated.

It is enough for this class, but if you are interested in further

details you can look

here

at the corresponding section from the compilers course (G22.2130).

Other Parts of the Programming Environment

In addition to the compilation tool chain, the programming

environment includes such items as pretty printers

,

debuggers, and configuration managers.

These aids may be standalone or integrated in an IDE or

sophisticated editing system like emacs.

1.6: An Overview of Compilation

The material in 1.6 of the 3e, including the subsections below, is briefly

covered in section 1.2 of my compilers notes.

The later chapters of those notes naturally cover the material in

great depth.

However, we will not be emphasizing compilation aspects of

programming languages in this course so will be content with the

following abbreviated coverage.

Homework: 1.1 (a-d).

1.6.1: Lexical and Syntax Analysis

1.6.2: Semantic Analysis and Intermediate Code Generation

1.6.3: Target Code Generation

1.6.4: Code Improvement

1.7: Summary

Read.

Every chapter ends with these same four sections.

I will not be repeating them in the notes.

You should always read the summary.

1.8: Exercises

1.9: Explorations

1.10: Bibliographic Notes

Chapter 2: Programming Language Syntax

We cover this material (in much more depth) in the compilers

course.

For the programming language course we are content with the very

brief treatment in the previous chapter.

Homework: 2.1(a), 2.3, 2.9(a,b)

Start Lecture #2

Chapter 3: Names, Scopes, and Bindings

Advantages of High-level Programming Languages

They are Machine Independent.

This is clear.

They are easier to use and understand.

This is clearly true but the exact reasons are not clear.

Many studies have shown that the number ofbugs per line of code is

roughly constant between low- and high-level languages.

Since low-level languages need more lines of code for the same

functionality, writing a programs using these languages results in

more bugs.

Studies also support the statement that programs can be written

quicker in high-level languages (comparable number of documented

lines of code per day for high- and low-level languages).

What is not clear is exactly what aspects of high-level

languages are the most important and hence how should such

languages be designed.

There has been some convergence, which will be reflected in

the course, but this is not a dead issue.

Names

A name is an identifier, i.e., a string of

characters (with some restrictions) that represents something else.

Many different kinds of things can be named, for example.

- Variables

- Constants

- Functions/Procedures

- Types

- Classes

- Labels (i.e., execution points)

- Continuations (i.e., execution points with environments)

- Packages/Modules

Names are an important part of abstraction.

- Abstraction eases programming by supporting

information hiding, that is by enabling the suppression

of details.

- Naming a procedure gives a control abstraction.

- Naming a class or type gives a data abstraction.

Consider the following Ada program.

procedure Demo is

type Color is (Red, Blue, Green, Purple, White, Black);

type ColorMatrix is array (Integer range <>, Integer range <>) of Color;

subtype ColorMatrix10 is ColorMatrix(0..9,0..9);

A : ColorMatrix10;

B : ColorMatrix(3..8, 6..12);

begin

A(2,3) := Red;

B(4,11) := Blue;

end Demo;

3.1: The Notion of Binding Time

In general a binding is as association of two things.

We will be interesting in binding a name to the

thing it names and will see that a key question

is when does this binding occur.

The answer to that question is called the

binding time.

There is quite a range of possibilities.

The following list is ordered from early binding to late binding.

- Language design time: The designers bind keywords to

their meaning.

- Language implementation time: Some parts of a

language are normally left for the implementer to decide (i.e.,

they are

not the same across implementations).

One common example is the number of bits in an integer; another

is the order in which the additions are performed

in

A+B+C

.

- Program writing time: Clearly, programmers choose

many names.

- Compile time: The compiler binds high-level

constructs, such as control structures (if, while, etc.) to

machine code and also may bind static objects (e.g., global

data) to specific machine locations.

- Link time: The linker combines separately compiled

object modules into a final load module ready to be loaded and

run.

During this process, the overall layout of the modules is

determined and inter-module references are resolved.

The technical terminology is that relative addresses are

relocated and external references are

resolved.

- Load time: In older operating systems, jobs did not

move once started.

With such systems the loader bound virtual addresses (i.e., the

addresses in the program) to physical addresses (i.e., addresses

in the machine.

Today it is more complicated: Very little of the job need be

loaded prior to execution and once loaded, parts of the job can

be moved.

That is, today the binding of virtual address to physical

addresses changes during execution.

- Run time: This is a large class and actually covers a

range of times, for example

- Program start time.

- Module entry time.

- Declaration elaboration time, when a declaration is first

encountered.

- Function/procedure call time.

- Block entry time.

- Statement execution time.

Let's look at an example from ada

procedure Demo is

X : Integer;

begin

X := 3;

declare

type Color is (Red, Blue, Green, Purple, White, Black);

type ColorMatrix is array (Integer range <>, Integer range <>) of Color;

subtype ColorMatrix10 is ColorMatrix(0..9,0..9);

A : ColorMatrix10;

B : ColorMatrix(X..8, 6..12); -- could not be bound at start

begin

A(2,3) := Red;

A(2,X) := Red; -- Same as previous statement

B(4,11) := Blue;

end;

X := 4;

end Demo;

Static binding refers to binding performed

prior to run time.

Dynamic binding refers to binding performed

during run time.

These terms are not very precise since there are many times

prior to run time, and run time itself covers several times

.

Trade-offs for Early vs. Late Binding

Run time is generally reduced if we can compile, rather than

interpret, the program.

It is typically further reduced if the compiler can make more

decisions rather than deferring them to run time, i.e., if static

binding can be used.

Summary: The earlier (binding time) decisions are made, the better

the code that the compiler can produce.

Early binding times are typically associated with compiled

languages while late binding times are typically associated with

interpreted languages.

The compiler is easier to implement if there are bindings done even

earlier than compile time

We shall see, however, that dynamic binding gives added flexibility.

For one example, late-binding languages

like Smalltalk, APL,

and most scripting languages permit polymorphism: The same code can

be executed on objects of different types.

Moreover, late-binding gives control to the programmer since they

control run time.

This gives increased flexibility when compared to early-binding,

which is done by the compiler or language designer.

3.2: Object Lifetime and Storage Management

Lifetimes

We use the term lifetime to refer to the interval between

creation and destruction.

For example, the interval between the binding's creation and

destruction is the binding's lifetime.

For another example, the interval between the creation and

destruction of an object is the object's lifetime.

How can the binding lifetime differ from the object lifetime?

- Pass-by-reference calling semantics.

At the time of the call the parameter in the called procedure is

bound to the object corresponding to the called argument.

Thus the binding of the parameter in the called procedure has a

shorter lifetime that the object it is bound to.

- Dangling References.

Assume there are two pointers P and Q.

An object is created using P; then P is assigned to Q; and

finally the object is destroyed using Q.

Pointer P is still bound to the object after the latter is

destroyed, and hence the lifetime of the binding to P exceeds the

lifetime of the object it is bound to.

Dangling references like this are nearly always a bug and argue

against languages permitting explicit object de-allocation

(rather than automatic deallocation via garbage collection).

Storage Allocation Mechanisms

There are three primary mechanisms used for storage allocation:

- Static objects maintain the same (virtual) address

throughout program execution.

- Stack objects are allocated and deallocated in a

last-in, first-out (LIFO, or stack-like) order.

The allocations/deallocations are normally associated with

procedure or block entry/exit.

- Heap objects are allocated/deallocated at arbitrary

times.

The price for this flexibility is that the memory management

operations are more expensive.

We study these three in turn.

3.2.1: Static Allocation

This is the simplest and least flexible of the allocation

mechanisms.

It is designed for objects whose lifetime is the entire program

execution.

The obvious examples are global variables.

These variables are bound once at the beginning and remain bound

until execution ends; that is their object and binding lifetimes are

the entire execution.

Static binding permits slightly better code to be compiled (for

some architectures and compilers) since the addresses are computable

at compile time.

Using Static Allocation for all Objects

In a (perhaps overzealous) attempt to achieve excellent run time

performance, early versions of the Fortran language were designed to

permit static allocation of all objects.

The price of this decision was high.

- Recursion was not supported.

- Arrays had to be declared of a fixed size.

Before condemning this decision, one must remember that, at the

time Fortran was introduced (mid 1950s), it was believed by many to

be impossible for a compiler to turn out

high-quality machine code.

The great achievement of Fortran was to provide the first

significant counterexample to this mistaken belief.

Local Variables

For languages supporting recursion (which includes recent versions

of Fortran), the same local variable name can correspond to multiple

objects corresponding to the multiple instantiations of the

recursive procedure containing the variable.

Thus a static implementation is not feasible and stack-based

allocation is used instead.

These same considerations apply to compiler-generated temporaries,

parameters, and the return value of a function.

Constants

If a constant is constant throughout execution (what??, see below),

then it can be stored statically, even if used recursively or by

many different procedures.

These constants are often called manifest constants or

compile time constants.

In some languages a constant is just an object whose value doesn't

change (but whose lifetime can be short).

In ada

loop

declare

v : integer;

begin

get(v); -- input a value for v

declare

c : constant integer := v; -- c is a "constant"

begin

v := 5; -- legal; c unchanged.

c := 5; -- illegal

end;

end;

end loop;

For these constants static allocation is again not feasible.

3.2.2: Stack-Based Allocation

This mechanism is tailored for objects whose lifetime is the same

as the block/procedure in which it is declared.

Examples include local variables, parameters, temporaries, and

return values.

The key observation is that the lifetimes of such objects obey a

LIFO (stack-like) discipline:

If object A is created prior to object B, then A will be

destroyed no earlier than B

.

When procedure P is invoked the local variables, etc for P are

allocated together and are pushed on a stack.

This stack is often called the control stack and the data

pushed on the stack for a single invocation of a procedure/block is

called the activation record or frame of the

invocation.

When P calls Q, the frame for Q is pushed on to the stack, right

after the frame for P and the LIFO lifetime property guarantees that

we will remove frames from the stack in the safe order (i.e., will

always remove (pop) the frame on the top of the stack.

This scheme is very effective, but remember it is only for objects

with LIFO lifetimes.

For more information, see any compiler book or

my compiler notes.

3.2.3: Heap-Based Allocation

What if we don't have LIFO lifetimes and thus cannot use

stack-based allocation methods?

Then we use heap-based allocation, which just means we can allocate

and destroy objects in any order and with lifetimes unrelated to

program/block entry and exit.

A heap is a region of memory from which

allocations and destructions can be performed at arbitrary times.

Remark: Please do not confuse

these heaps with the heaps you may have learned in a data

structures course.

Those (data-structure) heaps are used to implement priority queues;

they are not used to implement our heaps.

What objects are heap allocated

?

- Objects allocated with malloc() in C, or new in Java, or cons

in Scheme.

- Objects whose size may change during execution, for example,

extendable arrays and strings.

Implementing Heaps

The question is how do respond to allocate/destroy commands?

Looked at from the memory allocators viewpoint, the question is how

to implement requests and returns of memory blocks (typically, the

block returned must be one of those obtained by a request, not just

a portion of a requested block).

Note that, since blocks are not necessarily

returned in LIFO order, the heap will have not simply be a region of

allocated memory and another region of available memory.

Instead it will have free regions interleaved with allocated

regions.

So the first question becomes, when a request arrives, which region

should be (partially) uses to satisfy it.

Each algorithmic solution to this question (e.g., first fit, best

fit, worst fit, circular first fit, quick fit, buddy, Fibonacci)

also includes a corresponding algorithm for processing the return of

a block.

- First Fit: Choose the first free block big enough to

satisfy the request.

A right-size piece of this block is used to satisfy the request

and the remaining piece is a new, smaller free block.

If the entire block was used to satisfy the request, the

unnecessary portion is wasted.

This is called internal fragmentation since

the wasted space is inside (internal to) an allocated block.

- Best Fit: Similar, but return the

smallest free block that is big enough.

- Worst Fit: Similar, but return the

largest free block.

- Circular First Fit: The same as first fit, but start

the next search where the previous one left off.

- Quick Fit: Keep, in addition, lists of blocks of

commonly needed size.

For example, cons cells in Scheme are might all be the same size

(two pointers).

- Buddy and Fibonacci are more complicated.

What happens when the user no longer needs the heap-allocated

space?

- Manual deallocation: The user issues a command like free or

delete to return the space (C, Pascal).

When a block (including any internal wasted space) is returned,

it is coalesced, if possible, with any adjacent free blocks.

- Automatic deallocation via garbage collection (Java, C#,

Scheme, ML, Perl).

- Semi-automatic deallocation using destructors (C++, Ada).

The destructor is called automatically by the system, but the

programmer writes the destructor code.

Poorly done manual deallocation is a common programming error.

- If an object is deallocated and subsequently used, we get a

dangling reference.

- If an object is never deallocated, we get a

memory leak.

We can run out of heap space for at least three different reasons.

- What if there is not enough free space in total for the new

request and all the currently allocated space is needed?

Solution: Abort.

- What if we have several, non-contiguous free blocks, none of

which are big enough for the new request, but in total they are

big enough?

This is called external fragmentation since

the wasted space is outside (external to) all allocated

blocks.

Solution: Compactify.

- What if some of the allocated blocks are no longer accessible

by the user, but haven't been returned?

Solution: Garbage Collection (next section).

3.2.4: Garbage Collection

The 3e covers garbage collection twice.

It is covered briefly here in 3.2.4 and in more depth in 7.7.3.

My coverage here contains much of 7.7.3.

A garbage collection algorithm is one that

automatically deallocates heap storage when it is no longer needed.

It should be compared to manual deallocation functions such as

free().

There are two aspects to garbage collection: first, determining

automatically what portions of heap allocated storage will

(definitely) not be used in the future, and second making this

unneeded storage available for reuse.

After describing the pros and cons of garbage collection, we

describe several of the algorithms used.

Advantages and Disadvantages of Garbage Collection

We start with the negative.

Providing automatic reclamation of unneeded storage is an extra

burden for the language implementer.

More significantly, when the garbage collector is running, machine

resources are being consumed.

For some programs the garbage collection overhead can be a

significant portion of the total execution time.

If, as is often the case, the programmer can easily tell when the

storage is no longer needed, it is normally much quicker for the

programmer to free it manually than to have a garbage collector do

it.

Homework: What would characterizes programs for

which garbage collection causes significant overhead?

The advantages of garbage collection are quite significant (perhaps

they should be considered problems with manual deallocation).

Unfortunately, there are often times when it seems obvious that

storage is no longer needed; but it fact it used later.

The mistaken use of previously freed storage is called a

dangling reference.

One possible cause is that the program is changed months later and a

new use is added.

Another problem with manual deallocation is that the user may

forget to do it.

This bug, called a storage leak might only turn up in

production use when the program is run for an extended period.

That is if the program leaks 1KB/sec, you might well not notice any

problem during test runs of 5 minutes, but a production run may

crash (or begin to run intolerably slowly) after a month.

The balance is swinging in favor of garbage collection.

Reference Counting

This is perhaps the simplest scheme, but misses some of the

garbage.

- Initialize the reference count to 1 for each newly created

object.

- Increment the reference count by 1 when an additional pointer

to the object is created (e.g., newP:=oldP increments

the count of the object pointed to by oldP).

- Decrement the reference count by 1 when we remove a pointer to

the object (e.g., newP:=OldP decrements the count of

the object, if any, formerly pointed to by newP).

- If the count is decremented to zero, the object is garbage

(i.e., it can no longer be referenced) and thus is freed.

Remarks:

- Assume L is the only pointer to a circular

list and we assign a new value to L.

The circular list now cannot be accessed, but every reference

count is 1 so nothing will be freed.

Storage has leaked.

- C programmers can fairly easily implement the reference

counting algorithm for for their heap storage using the above

algorithm.

However, in C it is hard to tell when new pointers are being

created because the type system is loose and pointers are really

just integers.

Tracing Collectors

The idea is to find the objects that are live and then

reclaim all dead objects.

A heap object is live if it can be reached starting from a non-heap

object and following pointers.

The remaining heap objects are dead.

That is, we start at machine registers, stack variables and

constants, and static variables and constants that point to heap

objects.

These starting places are called roots.

It is assumed that pointers to heap objects can be distinguished

from other objects (e.g., integers).

The idea is that for each root

we preform a graph traversal

following all pointers.

Everything we find this way is live; the rest is dead.

Mark-and-Sweep

This is a two phase algorithm as the name suggests and basically

follows the idea just given: We mark live objects during the mark

phase and reclaim dead ones during the sweep phase.

It is assumed that each object has an extra mark

bit.

The code below defines a procedure mark(p), which uses the mark bit.

Please don't confuse the uses of the name mark as both a procedure

and a bit.

Procedure GC is

for each root pointer p

mark(p)

sweep

for each root pointer p

p.mark := false

Procedure mark(p) is

if not p.mark -- i.e. if the mark bit is not set

p.mark := true

for each pointer p.x -- i.e. each ptr in obj pointed to by p

mark(p.x) -- i.e. invoke the mark procedure on x recursively

Procedure sweep is

for each object x in the heap

if not x.mark

insert(x,free_list)

else

x.mark := false

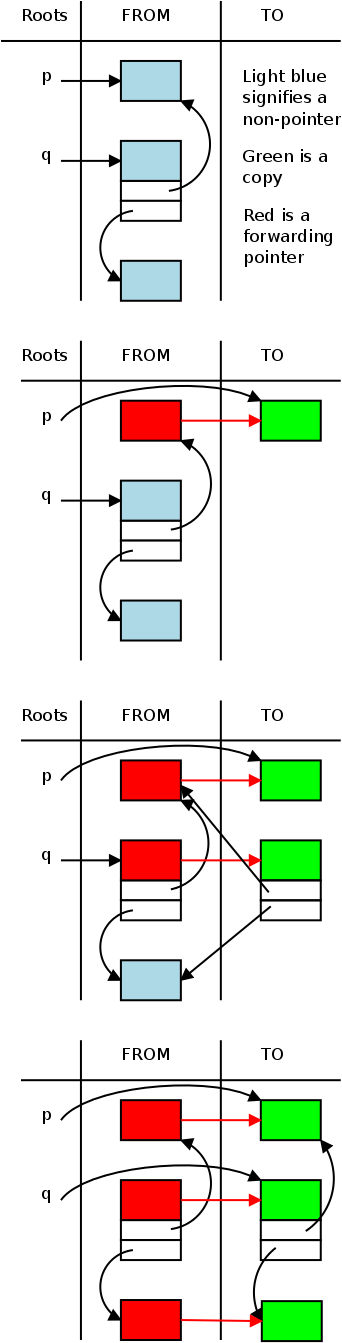

Copying (a.k.a. Stop-and-Copy)

A performance problem with mark-and-sweep is that it moves each

dead object (i.e., each piece of garbage).

Since experimental data from LISP indicates that, when garbage

collection is invoked, about 2/3 of the heap is garbage, it would be

better to leave the garbage alone and move the live data instead.

That is the motivation for stop-and-copy.

Divide the heap into two equal size pieces, often called

FROM

and TO

.

Initially, all allocations are performed using FROM; TO is unused.

When FROM is (nearly) full, garbage collection is performed.

During the collection, all the live data is moved from FROM to TO.

Naturally the pointers must now point to TO.

The tricky part is that live data may move while there are still

pointers to it.

For this reason a forwarding address

is left in FROM.

Once, all the data is moved, we flip the names FROM and TO and

resume program execution.

Procedure GC is

for each root pointer p

p := traverse(p)

Procedure traverse is

if p.ALL is a forwarding address

p := the forwarding address in p.ALL

return p

else

newP := copy(p,TO)

p.ALL := newP -- write forwarding address

for each pointers x in newP.ALL

newP.x := traverse(newP.x)

return newP

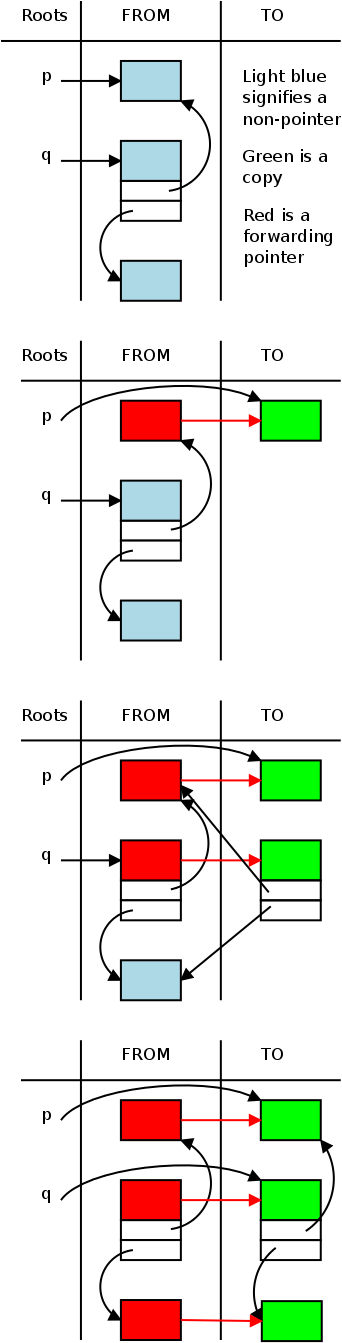

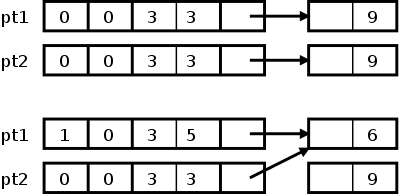

The movie on the right illustrates stop and copy in action.

- The top frame of the movie is the initial state.

There are two root pointers p and q, and three heap objects.

Initially p points to the first object, q points to the second.

The second object contains pointers to the other two.

- In the second frame we see the state after traverse has been

called with argument p and the assignment statement in GC has

been executed.

Note that traverse(p) executes the else arm of the if.

The object has been copied and the forwarding pointer set.

In this diagram I assumed that the forwarding pointer is the

same size as the original object.

It is required that an object is at least as large as a pointer.

The previous contents of the object have been overwritten by

this forwarding pointer, but no information is lost since the

copy (in TO space) contains the original data.

There are no internal pointers so the for loop is empty.

When traverse completes, p is set to point to the new object.

- The third frame shows the state while traverse(q) is in

progress.

The first two statements of the else branch have been executed.

The middle object has been copied and the forwarding pointer has

been set.

This forwarding pointer will never be used since there are no

other pointers to q.ALL.

Note that the two internal pointers refer to the old

(FROM-space) versions of the first and third objects.

Indeed, those pointers might have been overwritten by the

forwarding pointer, but again no information is lost since the

TO-space copy has the original values.

- The last frame shows the final state.

A great deal has happened since the previous frame.

The traverse(q) execution continues reaches the for loop.

This time there are two pointers in the copied block so traverse

will be called recursively two times.

The first pointer refers to an already-moved block so the then

branch is taken and, when traverse returns, the pointer is

updated.

The second pointer points to the original version of the third

block so the else branch is taken.

As in the top frame, the block is copied and the forwarding

pointer is set.

Again, when traverse returns, the pointer in the to-space copy

of the second block is updated.

Finally, traverse(q) returns and q is updated.

We are done.

The FROM space is superfluous, TO space is now what FROM space

was at the start.

Remarks

- Stop-and-copy compactifies with no additional code.

Specifically, the copies into TO space are contiguous so when

garbage collection ends, there is no external fragmentation.

- With mark-and-sweep, the garbage is moved, but the live

objects are not.

Thus, when collection ends, we have the in-use and free blocks

intersperses, i.e., external fragmentation.

Extra effort is needed to compactify.

- It has been observed that most garbage is

fresh, i.e., newly created.

Generational collectors separate out in-use blocks that

have survived collections into a separate region, which is

garbage collected less frequently.

- As described above, both mark-and-sweep and

stop-and-copy assume that no activity occurs during a pass of

the collector.

With multiprocessors (e.g., multicore CPUs) it is intriguing to

consider have the collector run simultaneous with the user

program (the so-called mu tater)

procedure f is

x : integer;

begin

x := 4;

declare

x : float;

begin

x := 21.75;

end;

end f;

Homework: CYU p. 121 (2, 4, 9)

Homework: Give a program in C that would not work

if local variables were statically.

3.3: Scope Rules

The region of program text where a binding is active is called the

scope of the binding.

Note that this can be different from the lifetime.

The lifetime of the outer x in the example on the right is all of

procedure f, but the scope of that x has a hole where the inner x

hides the binding.

We shall see below that in some languages, the hole can be filled.

Static vs Dynamic Scoping

procedure main is

x : integer := 1;

procedure f is

begin

put(x);

end f;

procedure g is

x : integer := 2;

begin

f;

end g;

begin

g;

end main;

Before we begin in earnest, I thought a short example might be

helpful.

What is printed when the procedure main on the right is run?

That looks pretty easy, main just calls g, g just calls f, and f

just prints (put is ada-speak for print) x.

So x is printed.

Sure, but which x?

There are, after all, two of them.

Is is ambiguous, i.e., erroneous?

Since this section about scope, we see that the question is which x

is in scope at the put(x) call?

Is it the one declared in main, inside of which f is defined, or is

the one inside g, which calls f, or is it both, or neither?

For some languages, the answer is the x declared in main and for

others it is the x declared in g.

The program is actually written in Ada, which is statically scoped

(a.k.a. lexically scoped) and thus gives the answer 1.

How about Scheme, a dialect of lisp?

(define x 1)

(define f (lambda () x))

(define g (lambda () (let ((x 2)) (f))))

We get the same result: when g is evaluated, 1 is printed.

Scheme, like, ada, C, Java, C++, C#, ... is statically scoped.

Is every language statically scoped?

No, some dialects of Lisp are dynamically scoped, as is

Snobol, Tex, and APL.

In Perl the programmer gets to choose.

(setq x 1)

(defun f () x)

(defun g () (let ((x 2)) (f)))

In particular, the last program on the right, which is written in

emacs lisp, gives 2 when g is evaluated.

The two Lisps are actually more similar that they might appear on

the right:

The emacs Lisp defun (which stands for "define function") is

essentially a combination of Scheme's define and lambda.

3.3.1: Static Scoping

In static scoping, the binding of a name can be determined by

reading the program text; it does not depend on the execution.

Thus it can be determined at compile time.

The simplest situation is the one in early versions of Basic: There

is only one scope, the whole program.

Recall, that early basic was intended for tiny programs.

I believe variable names were limited to two characters, a letter

optionally follow by a digit.

For large programs a more flexible approach is needed, as given in

the next section.

3.3.2: Nested Subroutines, i.e., Nested Scopes

The typical situation is that the relevant binding for a name is

the one that occurs in the smallest containing block and the scope

of the binding is that block.

So the rule for finding the relevant binding is to look in the

current block (I consider a procedure/function definition to be a

block).

If the name is found, that is the binding.

If the name is not found, look in the immediately enclosing scope

and repeat until the name is found.

If the name is not found at all, the program is erroneous.

What about built in names such as type names (Integer, etc),

standard functions (sin, cos, etc), or I/O routines (Print, etc)?

It is easiest to consider these as defined in an invisible scope

enclosing the entire program.

Given a binding B in scope S, the above rules can be summarized by

the following two statements.

- B is available in scopes nested inside S, unless B is

overridden, in which case it is hidden.

- B is not available in scopes enclosing S.

Some languages have facilities that enable the programmer to

reference bindings that would otherwise be hidden by statement 1

above.

For example

- In Ada a reference S.Y can be used to access the binding of Y

declared in scope S even if there is a lexically closer scope.

- In C++ S::Y can be used to access the binding of Y is class S.

Similarly ::Y can be used to refer to the binding of Y in the

global scope (outside all classes).

procedure outer is procedure outer is

x : integer := 6; x : integer := 6;

procedure inner is procedure inner is

begin x : integer := 88;

put(x); begin

end inner; put(x,outer.x);

begin end inner;

inner; begin

end outer; inner;

end outer2;

Access to Nonlocal Objects

Consider first two easy cases of nested scopes illustrated on the

right with procedures outer and inner.

How does the left execution of inner find the binding to x?

How does the right execution of inner find both bindings of x?

We need a link from the activation record of the inner to the

activation record of outer.

This is called the static link or the

access link.

But it is actually more difficult than that.

The static link must point to the most recent

invocation of the surrounding subroutine.

Of course the nesting can be deeper; but that just means

following a series of static links from one scope to the next

outer one.

Moreover, finding the most recent invocation of outer is not

trivial; for example, inner may have been called by a routine

nested inside inner.

For the details see a compilers book or

my course notes.

3.3.3: Declaration Order

There are several questions here.

- Must all declarations for a block precede all other

statements?

In Ada the answer is yes.

Indeed, the declarations are set off by the syntax.

procedure <declarations> begin <statements> end;

declare <declarations> begin <statements> end;

In C, C++, and java the answer is no.

The following is legal.

int z; z=4; int y;

- Can one declaration refer to a previous declaration in the

same block?

Yes for Ada, C, C++, Java.

int z=4; int y=z;

- Do declarations take affect where the block begins (i.e., they

apply to the entire block except for holes due to inner scopes)

or do they start only at the declaration itself?

int y; y=z; int z=4;

In Java, Ada, C, C++ they start at the declaration so the

example above is illegal.

In JavaScript and Modula3 they start at the beginning of the

block so the above is legal.

In Pascal the declaration starts at block beginning, but can't

be used before it is declared.

This has a weird effect:

In inner declaration hides an outer declaration but can't be

used in earlier statements of the inner.

Scheme

Scheme uses let, let* and letrec to introduce a nested scope.

The variables named are given initial values.

In the simple case where the initial values are manifest constants,

the three let's are the same.

(let ( (x 2)(y 3) ) body) ; eval body using new variables x=2 & y=3

We will study the three when we do scheme.

For now, I just add that for letrec the scope of each declaration is

the whole block (including the preceding declarations, so x and y

can depend on each other), for let* the scope starts at the

declaration (so y can depend on x, but not the reverse) and for let

the declarations start at the body (so neither y nor x can depend on

the other).

The above is somewhat oversimplified.

Homework: 5, 6(a).

Declarations and Definitions

Many languages (e.g., C, C++, Ada) require names to be declared

before they are used, which causes problems for recursive

definitions.

Consider a payroll program with employees and managers.

procedure payroll is

type Employee;

type Manager is record

Salary : Float;

Secretary : access Employee; -- access is ada-speak for pointer

end record;

type Employee is record

Salary : Float;

Boss : access Manager;

end record;

end payroll;

These languages introduce a distinction between a

declaration, which simply introduces the name

and indicates its scope, and a definition,

which fully describes the object to which the name is bound.

Nested Blocks

Essentially all the points made above about nested procedures

applies to nested blocks as well.

For example the code on the right, using nested blocks, exhibits the

same hole in the scope

as does the code on the left, using

nested procedures.

procedure outer is declare

x : integer; x : integer;

procedure inner is begin

x : float: -- start body of outer x is integer

begin declare

-- body of inner x : float;

end inner; begin

begin -- body of inner, x is float

-- body of outer end;

end outer; -- more of body of outer. x again integer

end;

Redeclaration

Some (mostly interpreted) languages permit a redeclaration

in which a new binding is created for a name already bound in the

scope.

Does this new binding start at the beginning of the scope or just

where the redeclaration is written?

int f (int x) { return x+10; }

int g (int x) { return f(x); }

g(0)

int f (int x) { return x+20; }

g(5);

Consider the code on the right.

The evaluation of g(0) uses the first definition of f and returns

10.

Does the evaluation of g(5) use the first f, the one in effect when

g was defined, or does it use the second f, the one in effect when

g(5) was invoked.

The answer is: it depends.

For most languages supporting redeclaration, the second f is used;

but in ML it is the first.

In other words for most languages the redeclaration replaces the old

in all contexts; in ML it replaces the old only in later uses, not

previous ones.

This has some of the flavor of static vs. dynamic scoping.

3.3.4: Modules

Will be done later.

3.3.5: Module Types and Classes

Will be done later.

3.3.6: Dynamic Scoping

We covered dynamic scoping already.

3.4: Implementing Scope

3.5: The Meaning of Names within a Scope

3.6: The Binding of Referencing Environments

3.7: Macro Expansion

This section will be covered later.

3.8: Separate Compilation

Chapter 4: Semantic Analysis

Chapter 5: Target Machine Architecture

Start Lecture #3

Chapter 6: Control Flow

Remark: We will have a midterm.

It will be 1 hour, during recitation.

It will not be either next monday or the monday

after.

6.1: Expression Evaluation

Most languages use infix notation for built in operators.

Scheme is an exception (+ 2 (/ 9 x)).

function "*" (A, B: Vector) return Float is

Result: Float :=0.0;

begin

for I in A'Range loop

Result := Result + A(I)*B(I);

end loop;

return Result;

end "*";

Some languages permit the programmer to extend the infix operators.

An example from Ada is at right.

This example assumes Vector has already been declared as a

one-dimensional array of floats.

A'R gives the range of the legal subscripts, i.e. the bounds of the

array.

With loops like this it is not possible to access the array out of

bounds.

6.1.1: Precedence and Associativity

Most languages agree on associativity, but APL is strictly right to

left (no precedence, either).

Normal is for most binary operators to be left associative, but

exponentiation is right associative (also true in math).

Why?

Languages differ considerably in precedence (see figure 6.1 in 3e).

The safest rule is to use parentheses unless certain.

Homework: 1.

We noted in Section 6.1.1 that most binary arithmetic operators are

left-associative in most programming languages.

In Section 6.1.4, however, we also noted that most compilers are

free to evaluate the operands of a binary operator.

Are these statements contradictory?

Why or why not?

6.1.2: Assignments

Assignment statements are the dominant example of side effects,

where execution does more that compute values.

Side effects change the behavior of subsequent statements and

expressions.

References and Values

There is a distinction between the container for a value

(the memory location

) and the value itself.

Some values, e.g., 3+4, do not have corresponding containers and,

among other things, cannot appear on the left hand side of an

assignment.

Otherwise said 3+4

is a legal r-value, but not a legal

l-value.

Given an l-value, the corresponding r-value can be obtained by

dereferencing.

In most languages, this dereferencing is automatic.

Consider

a := a + 1;

the first a gives the l-value; the second the r-value.

Boxing

begin

a := if b < c then d else c;

a := begin f(b); g(c) end;

g(d);

2+3

end

Orthogonality

The goal of orthogonality of features is to permit the features in

any combination possible.

Algol 68 emphasized orthogonality.

Since it was an expression-oriented language, expressions could

appear almost anywhere.

There was no separate notion of statement.

An example appears on the right.

I have heard and read that this insistence on orthogonality lead to

significant implementation difficulties.

Combination Assignment Operators

I suspect you all know the C syntax

a += 4;

It is no doubt often convenient, but its major significance is that

in statements like

a[f(i)] += 4;

it guarantees that f(i) is evaluated only once.

The importance of this guarantee becomes apparent if f(i) has side

effects, e.g., if f(i) modifies i or prints.

Multiway Assignment (and Tuples)

Some languages, e.g., Perl and Python, permit tuples to be assigned

and returned by functions, giving rise to code such as

a, b := b, a -- swapping w/o an explicit temporary

x, y := f(5); -- this f takes one argument and returns two results

6.1.3: Initialization

Avoids the problem where an uninitialized variable has different

values in different runs causing non-reproducible results.

Some systems provide default initialization, for example C

initializes external variables to zero.

To initialize aggregates requires a way to specify aggregate

constants.

Dynamic Checks

An alternative to have the system check during execution if a

variable is uninitialized.

For IEEE floating point this is free, since there are invalid bit

patterns (NaN) that are checked by conforming hardware.

To do this in general needs special hardware or expensive software

checking.

Definite Assignment

Some languages, e.g., Java and C#, specify that the compiler must

be able to show that no variable is used before given a value.

If the compiler cannot confirm this, a warning/error message is

produced.

Constructors

Some languages, e.g., Java, C++, and C# permit the program to

provide constructors that automatically initialize dynamically

allocated variables.

6.1.4: Ordering within Expressions

Which addition is done first when you evaluate X+Y+Z?

This is not trivial: one order might overflow or give a less precise

result.

For expressions such as f(x)+g(x) the evaluation order matters if

the functions have side effects.

Applying Mathematical Identities

6.1.5: Short-Circuit Evaluation

Sometimes the value of the first operand determines the value of

the entire binary operation.

For example 0*x is 0 for any x; True or X

is True for any X.

Not evaluating the second operand in such cases is called a

short-circuit evaluation.

Note that this is not just a performance improvement; short-circuit

evaluation changes the meaning of some programs: Consider 0*f(x),

when f has side effects (e.g. modifying x or printing).

We treat this issue in 6.4.1 (short-circuited conditions) when

discussing control statements for selection.

Homework: CYU 7.

What is an l-value? An r-value?

CYU 11.

What are the advantages of updating a variable with an

assignment operator, rather than with a regular assignment

in which the variable appears on both the left- and right-hand

sides?

6.2: Structured and Unstructured Flow

Early languages, in particular, made heavy use of unstructured

control flow, especially goto's.

Much evidence was accumulated to show that the great reliance on

goto's both increased the bug density and decreased the readability

(these are not unrelated consequences) so today

structured alternatives dominate.

A famous article (actually a letter to the editor) by Dijkstra

entitled

Go To Statement Considered Harmful

put the case before the

computing public.

Common today are

- Selection

- if statement

- case statement

- Iteration

- while and repeat loops

- for loops

- iterators (looping over a collection)

- Other

- goto: Only when necessary.

- call/return: Accomplishes more than flow control

- exceptions

- continuations: normally in functional languages

6.2.1: Structured Alternatives to goto

Common uses of goto have been captured by newer control statements.

For example, Fortran had a DO loop (similar to C's for), but had no

way other that GOTO to exit the loop early.

C has a break statement for that purpose.

Homework: 24.

Rubin [Rub87] used the following example (rewritten here in C) to

argue in favor of a goto statement.

int first_zero_row = -1 /* none */

int i, j;

for (i=0; i<n; i++) {

for (j=0; j<n; j++) {

if (A[i][j]) goto next;

}

first_zero_row = i;

break;

next:;

}

The intent of the code is to find the first all-zero row, if any, of

an n×n matrix.

Do you find the example convincing?

Is there a good structured alternative in C?

In any language?

Multilevel Returns

Errors and Other Exceptions

begin begin

x := y/z; x := y/z;

z := F(y,z); -- function call z := F(y,z);

z := G(y,z); -- array ref z := G(y,z);

end; exception -- handlers

when Constraint_Error =>

-- do something

end;

The Ada code on the right illustrates exception handling.

An Ada constraint error

signifies using an incorrect value,

e.g., dividing by zero, accessing an array out of bounds, assigning

a negative value to a variable declared positive

, etc.

Ada is statically scoped but constraints have a dynamic flavor.

If G raises a constraint error and does not have its own handler,

then the constraint error is propagated back to the caller of G, in

our case the anonymous block on the right.

Note that G is not lexically inside the block so the constraint

error is propagating via the call chain not scoping.

(define f

(lambda (c)

(c)))

(define g

(lambda ()

;; beginning of g

(call-with-current-continuation f)

;; more of g

))

6.2.2: Continuations

A continuation encapsulates the current context, including the

current location counter and bindings.

Scheme contains a function

(call-with-current-continuation f)

that invokes f, passing to it the current continuation c.

The simplest case occurs if a function g executes the invocation

above and then f immediately calls c.

The result is the same as if f returned to g.

This example is shown on the right.

However, the continuation is a object that can be further passed

on and can be saved and reused.

Hence one can get back to a given context multiple times.

Moreover, if f calls h giving it c and h calls c, we are back in g

without having passed through f.

One more subtlety: The binding that contained in c

is writable

.

That is, the binding is from name to memory-location-storing-the

name, not just from name to current-value.

So in the example above, if f changes the value of a name in the

binding, and then calls c, g will see the change.

Continuations are actually quite powerful and can be used to

implement quite a number of constructs, but the power comes with a

warning: It is easy to (mis-)use continuations to create extremely

obscure programs.

6.3: Sequencing

for I in 1..N loop

-- loop body

end loop;

Many languages have a means of grouping several statements together

to form a compound statement

.

Pascal used begin-end pairs to form compound statements, C shortened

it to {}.

Ada doesn't need {} because the language syntax already contains

bracketing constructs.

As an example, the code on the right is the Ada version of a simple

C for loop.

Note that the end

is part of the for statement and not a

separate begin-end compound.

When declarations are combined with a sequence the result is a

block.

A block is written

{ declarations / statements } declare declarations begin statements end

in C and Ada respectively.

As usual C is more compact, Ada more readable.

Another alternative is make indentation significant.

I often use this is pseudo-code; B2/ABC/Python and Haskell use it

for real.

if condition then statements

if (condition) statement

if condition then

statements_1

else

statements_2

end if

if condition_1 {

statements_1 }

else if condition_2 {

statements_2}

else {

statements_3}

if condition_1 then

statements_1

elsif condition_2 then

statements_2

...

elsif condition_n then

statements_n

end if

6.4: Selection

Essentially every language has an if statement to use for selection.

The simplest case is shown in the first two lines on the right.

Something is needed to separate the condition from the statement.

In Pascal and Ada it is the then keyword;

in C/C++/Java it is the parentheses surrounding the condition.

The next step up is if-then-else, introduced in Algol 60.

We have already seen the infamous dangling else

problem that

can occur if statements_1 is an if.

If the language uses end markers like end if, the

problem disappears; Ada uses this approach, which is shown on the

right.

Another solution, used by Algol 60, is to forbid a

then to be followed immediately by

an if (you can follow then by a

begin block starting with if).

When there are more than 2 possibilities, it is common to employ

else if.

The C/C++/Java syntax is shown on the right.

This same idea could be used in languages featuring

end if, but the result would be a bunch

of end if statements at the end.

To avoid requiring this somewhat ugly code, languages like Ada

include an elsif which continues the

existing if rather than starting a new one.

Thus only one end if is required as we see in the

bottom right example.

6.4.1: Short-Circuit Conditions (and Evaluation)

Can if (y==0 || 1/y < 100)

ever divide by zero?

Not in C which requires short-circuit evaluation.

In addition to divide by zero cases, short-circuit evaluation is

helpful when searching a null-terminated list in C.

In that situation the idiom is

while (ptr && ptr->val != value)

The short circuit evaluation of && guarantees that we will

never execute null->val.

But sometimes we do not want to short-circuit the evaluation.

For example, consider f(x)&&g(x) where g(x) has a desirable

side effect that we want to employ even when f(x) is false.

In C this cannot be done directly.

if C1 and C2 -- always eval C2

if C1 and then C2 -- not always

Ada has both possibilities.

The operator or always evaluates both arguments;

whereas, the operator or else does a short circuit

evaluation.

Homework: 12.

Neither Algol 60 nore Algol 68 employs short-circuit evaluation for

Boolean expressions.

In both languages, however, an

if...then...else construct can be used as an expression.

Show how to use if...then...else to achieve the effect of

short-circuit evaluations.

case nextChar is

when 'I' => Value := 1;

when 'V' => Value := 5;

when 'X' => Value := 10;

when 'C' => Value := 100;

when 'D' => Value := 500;

when 'M' => Value := 1000;

when others => raise InvalidRomanNumeral;

end case;

6.4.2 Case/Switch Statements

Most modern languages have a case or switch statement where the

selection is based on the value of a single expression.

The code on the right was written in Ada by a student of ancient

Rome.

The raise instruction causes an exception to be raised and thus

control is transferred to the

appropriate handler.

A case statement can always be simulated by a sequence of if

statements, but the case statement is clearer.

(Alternative) Implementations

In addition to increased clarity over multiple if statements, the

case statement can, in many situations be compiled into better code.

Most languages having this construct require that the tested for

values ('I', 'V', 'X', 'C', 'D', and 'M' above) must be computable

at compile time.

For example in Ada, they must be manifest constants, or ranges such

as 5..8 composed of manifest constants, or a disjunction of these

(e.g., 6 | 9..20 | 8).

In the simplest case where each choice is a constants, a jump

table can be constructed and just two jumps are executed,

independent of the number of cases.

The size of the jump table is the range of choices so would be

impractical if the case expression was an arbitrary integer.

If the when arms formed consecutive integers, the implementation

would jump to others if out of range (using comparison operators)

and then use a jump table for the when arms.

Hashing techniques are also used (see 3e).

In the general case the code produced is a bunch of tests and

tables.

Syntax and Label Semantics

The languages differ in details.

The words case

and when

in Ada become switch

and case

in C.

Ada requires that all possible values of the case expression be

accounted for (the others construct makes this

easy).

C and Fortran 90 simply do nothing when the expression does not

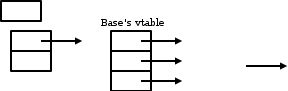

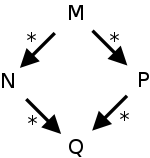

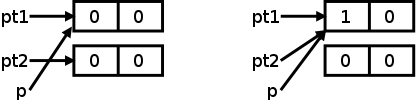

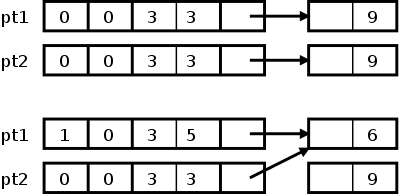

match any arm even though C does support a default