================ Start Lecture #1 ================

V22.0202: Operating Systems

(Computer System Organization II)

2004-05 Fall

Allan Gottlieb

Tues Thurs 11-12:15 Room 102 CIWW

Chapter -1: Administrivia

I start at -1 so that when we get to chapter 1, the numbering will

agree with the text.

(-1).1: Contact Information

- gottlieb@nyu.edu (best method)

- http://cs.nyu.edu/~gottlieb

- 715 Broadway, Room 712

- 212 998 3344

(-1).2: Course Web Page

There is a web site for the course. You can find it from my home

page, which is http://cs.nyu.edu/~gottlieb

- You can also find these lecture notes on the course home page. Please let me know if you can't find it.

- The notes are updated as bugs are found or improvements made.

- I will also produce a separate page for each lecture after the lecture is given. These individual pages might not get updated as quickly as the large page.

(-1).3: Textbook

The course text is Tanenbaum, "Modern Operating Systems", 2nd Edition

- The first edition is not adequate as there have been many changes.

- Available in bookstore.

- We will cover nearly all of the first 7 chapters.

(-1).4: Computer Accounts and Mailman Mailing List

- You all have i5.nyu.edu accounts; please confirm that they are set up correctly.

- Sign up for the Mailman mailing list for the course. You can do so by clicking here

- If you want to send mail just to me, use gottlieb@nyu.edu, not the mailing list.

- Questions on the labs should go to the mailing list. You may answer questions posed on the list as well. Note that replies are sent to the list.

- I will respond to all questions; if another student has answered the question before I get to it, I will confirm whether the answer given is correct.

- Please use proper mailing list etiquette.

  - Send plain text messages rather than (or at least in addition to) html.

  - Use Reply to contribute to the current thread, but NOT to start another topic.

  - If quoting a previous message, trim off irrelevant parts.

  - Use a descriptive Subject: field when starting a new topic.

  - Do not use one message to ask two unrelated questions.

(-1).5: Grades

Grades will be computed as

(30%)*MidtermExam + (30%)*LabAverage + (40%)*FinalExam

(but see homeworks below).

(-1).6: The Upper Left Board

I use the upper left board for lab/homework assignments and

announcements. I should never erase that board.

Viewed as a file it is group readable (the group is those in the

room), appendable by just me, and (re-)writable by no one.

If you see me start to erase an announcement, let me know.

I try very hard to remember to write all announcements on the upper

left board and I am normally successful. If, during class, you see

that I have forgotten to record something, please let me know.

HOWEVER, if I forgot and no one reminds me, the

assignment has still been given.

(-1).7: Homeworks and Labs

I make a distinction between homeworks and labs.

Labs are

- Required.

- Due several lectures later (date given on assignment).

- Graded and form part of your final grade.

- Penalized for lateness.

- Computer programs you must write.

Homeworks are

- Optional.

- Due at the beginning of the next lecture.

- Not accepted late.

- Mostly from the book.

- Collected and returned.

- Able to help, but not hurt, your grade.

(-1).7.1: Homework Numbering

Homeworks are numbered by the class in which they are assigned. So

any homework given today is homework #1. Even if I do not give homework today,

the homework assigned next class will be homework #2. Unless I

explicitly state otherwise, all homework assignments can be found in

the class notes. So the homework present in the notes for lecture #n

is homework #n (even if I inadvertently forgot to write it to the

upper left board).

(-1).7.2: Doing Labs on non-NYU Systems

You may solve lab assignments on any system you wish, but ...

- You are responsible for any non-NYU machine. I extend deadlines if the NYU machines are down, not if yours are.

- Be sure to upload your assignments to the NYU systems.

- In an ideal world, a program written in a high level language like Java, C, or C++ that works on your system would also work on the NYU system used by the grader. Sadly this ideal is not always achieved despite marketing claims that it is achieved. So, although you may develop your lab on any system, you must ensure that it runs on the NYU system assigned to the course.

- If somehow your assignment is misplaced by me and/or a grader, we need to have a copy ON AN NYU SYSTEM that can be used to verify the date the lab was completed.

- When you complete a lab (and have it on an NYU system), do not edit those files. Indeed, put the lab in a separate directory and keep out of that directory. You do not want to alter the dates.

(-1).7.3: Obtaining Help with the Labs

Good methods for obtaining help include

- Asking me during office hours (see web page for my hours).

- Asking the mailing list.

- Asking another student, but ...

Your lab must be your own.

That is, each student must submit a unique lab.

Naturally, simply changing comments, variable names, etc. does

not produce a unique lab.

(-1).7.4: Computer Language Used for Labs

The department wishes to reinforce the knowledge of C learned in

201. As a result, lab #2 must be written in C.

The other labs may be written in C or Java.

C++ is permitted (and counts as C for lab2); however, C++ is a

complicated language and I advise against using it unless you are already

quite comfortable with the language.

(-1).8: A Grade of “Incomplete”

The rules for incompletes and grade changes are set by the school

and not the department or individual faculty member.

The rules set by CAS can be found in the CAS Bulletin.

As I understand them, these rules state:

- A student's request for an incomplete will be granted only in exceptional circumstances and only if applied for in advance. The application must be before the final exam; the only exception is if a student, after the final, brings a note from a doctor or documentation of a death in the family.

- If a student receives a grade other than incomplete, the student may not simply audit the course in the future and have his/her grade changed. Instead, the student should enroll in the course again and be treated as a first time student. The grade obtained the second time replaces the original grade.

Chapter 0: Interlude on Linkers

Originally called a linkage editor by IBM.

A linker is an example of a utility program included with an

operating system distribution. Like a compiler, the linker is not

part of the operating system per se, i.e. it does not run in supervisor mode.

Unlike a compiler it is OS dependent (what object/load file format is

used) and is not (normally) language dependent.

0.1: What does a Linker Do?

Link of course.

When the compiler and assembler have finished processing a module,

they produce an object module

that is almost runnable.

There are two remaining tasks to be accomplished before object modules

can be run.

Both are involved with linking (that word, again) together multiple

object modules.

The tasks are relocating relative addresses

and resolving external references.

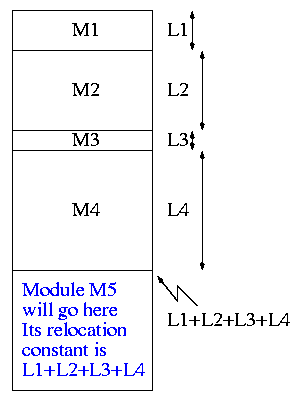

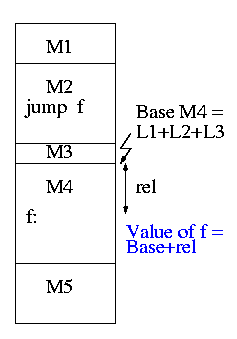

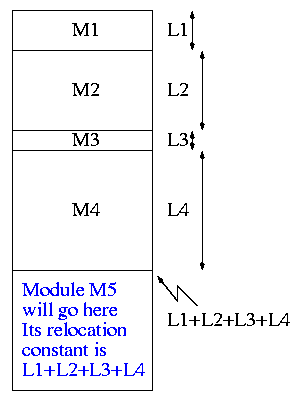

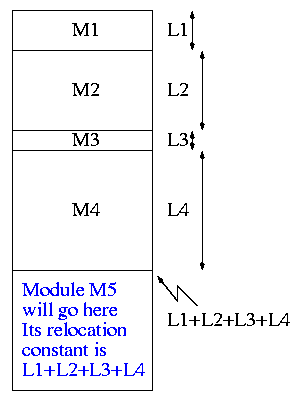

0.1.1: Relocating Relative Addresses

- Each module is (mistakenly) treated as if it will be loaded at

location zero.

- For example, the machine instruction

jump 100

is used to indicate a jump to location 100 of

the current module.

- To convert this relative address to an

absolute address,

the linker adds the base address of the module

to the relative address.

The base address is the address at which

this module will be loaded.

- Example: Module A is to be loaded starting at location 2300 and

contains the instruction

jump 120

The linker changes this instruction to

jump 2420

- How does the linker know that Module A is to be loaded starting at

location 2300?

- It processes the modules one at a time. The first module is

to be loaded at location zero.

So relocating the first module is trivial (adding zero).

We say that the relocation constant is zero.

- After processing the first module, the linker knows its length

(say that length is L1).

- Hence the next module is to be loaded starting at L1, i.e.,

the relocation constant is L1.

- In general the linker keeps the sum of the lengths of

all the modules it has already processed; this sum is the

relocation constant for the next module.
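The bookkeeping described in this list can be sketched in C. This is only an illustration under my own assumptions: the function names `base_addresses` and `relocate` are hypothetical and are not part of any lab specification.

```c
#include <stddef.h>

/* Fill base[i] with the relocation constant of module i:
 * the sum of the lengths of all modules processed before it. */
void base_addresses(const int length[], int base[], size_t nmodules)
{
    int sum = 0;                      /* the first module loads at 0 */
    for (size_t i = 0; i < nmodules; i++) {
        base[i] = sum;
        sum += length[i];
    }
}

/* Convert a module-relative address to an absolute address. */
int relocate(int relative_addr, int base)
{
    return relative_addr + base;
}
```

For modules of lengths 5, 6, 2, and 3 the computed bases are 0, 5, 11, and 13, and `relocate(120, 2300)` gives 2420, matching the jump example in the list.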

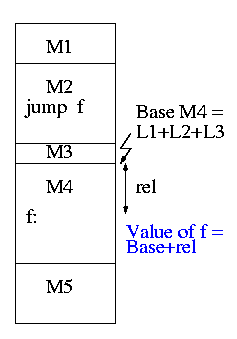

0.1.2: Resolving External References

- If a C (or Java, or Pascal) program contains a function call

f(x)

to a function f() that is compiled separately, the resulting

object module must contain some kind of jump to the beginning of

f.

- But this is impossible!

- When the C program is compiled, the compiler and assembler do not know the location of f() so there is no way they can supply the starting address.

- Instead a dummy address is supplied and a notation made that this address needs to be filled in with the location of f(). This is called a use of f.

- The object module containing the definition of f() contains a notation that f is being defined and gives the relative address of the definition, which the linker converts to an absolute address (as above).

- The linker then changes all uses of f() to the correct absolute address.

The output of a linker is called a load module

because it is now ready to be loaded and run.
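One possible way to keep track of definitions and uses is a small symbol table. The sketch below is an assumption-laden illustration, not a prescribed design: the types `struct symbol` and `struct symtab`, the capacity `MAX_SYMS`, and the functions `define_symbol` and `lookup_symbol` are all hypothetical names.

```c
#include <string.h>

#define MAX_SYMS 16   /* assumed small fixed capacity */

struct symbol {
    char name[16];
    int  abs_addr;
};

struct symtab {
    struct symbol sym[MAX_SYMS];
    int count;
};

/* Record a definition: the module gives a relative address,
 * the linker stores the absolute one (relative + module base).
 * Returns 0 on success, -1 if the table is full. */
int define_symbol(struct symtab *t, const char *name, int rel_addr, int base)
{
    if (t->count >= MAX_SYMS)
        return -1;
    strncpy(t->sym[t->count].name, name, sizeof t->sym[0].name - 1);
    t->sym[t->count].name[sizeof t->sym[0].name - 1] = '\0';
    t->sym[t->count].abs_addr = rel_addr + base;
    t->count++;
    return 0;
}

/* Look up a use; returns the absolute address, or -1 if undefined. */
int lookup_symbol(const struct symtab *t, const char *name)
{
    for (int i = 0; i < t->count; i++)
        if (strcmp(t->sym[i].name, name) == 0)
            return t->sym[i].abs_addr;
    return -1;
}
```

A symbol defined at relative address 2 in a module whose base is 13 is recorded with absolute address 15; every use of that symbol is then patched with the value returned by `lookup_symbol`.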

To see how a linker works let's consider the following example,

which is the first dataset from lab #1. The description in lab1 is

more detailed.

The target machine is word addressable and has a memory of 250

words, each consisting of 4 decimal digits. The first (leftmost)

digit is the opcode and the remaining three digits form an address.

Each object module contains three parts, a definition list, a use

list, and the program text itself. Each definition is a pair (sym,

loc). Each entry in the use list is a symbol and a list of uses of

that symbol.

The program text consists of a count N followed by N pairs (type, word),

where word is a 4-digit instruction described above and type is a

single character indicating if the address in the word is

Immediate,

Absolute,

Relative, or

External.

Input set #1

1 xy 2

2 z xy

5 R 1004 I 5678 E 2000 R 8002 E 7001

0

1 z

6 R 8001 E 1000 E 1000 E 3000 R 1002 A 1010

0

1 z

2 R 5001 E 4000

1 z 2

2 xy z

3 A 8000 E 1001 E 2000

The first pass simply finds the base address of each module and

produces the symbol table giving the values for xy and z (2 and 15

respectively). The second pass does the real work using the symbol

table and base addresses produced in pass one.

Symbol Table

xy=2

z=15

Memory Map

+0

0: R 1004 1004+0 = 1004

1: I 5678 5678

2: xy: E 2000 ->z 2015

3: R 8002 8002+0 = 8002

4: E 7001 ->xy 7002

+5

0 R 8001 8001+5 = 8006

1 E 1000 ->z 1015

2 E 1000 ->z 1015

3 E 3000 ->z 3015

4 R 1002 1002+5 = 1007

5 A 1010 1010

+11

0 R 5001 5001+11= 5012

1 E 4000 ->z 4015

+13

0 A 8000 8000

1 E 1001 ->z 1015

2 z: E 2000 ->xy 2002

The output above is more complex than I expect you to produce; it is there to help me explain what the linker is doing. All I would expect from you is the symbol table and the rightmost column of the memory map.
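The per-word work of the second pass can be sketched as follows. This is a hedged illustration, not the lab solution: the function name `resolve_word` and its parameters are hypothetical, and it assumes (as the sample input and memory map suggest) that the address field of an E word is an index into the current module's use list.

```c
/* Resolve one (type, word) pair from the program text.
 * word is a 4-digit decimal instruction: opcode * 1000 + address.
 * base      - relocation constant of the current module
 * use_addr  - absolute addresses of the symbols in this module's use list
 * nuses     - number of entries in use_addr
 * Returns the resolved word, or -1 for a bad type or use index. */
int resolve_word(char type, int word, int base, const int use_addr[], int nuses)
{
    int opcode  = word / 1000;
    int address = word % 1000;

    switch (type) {
    case 'I':                 /* immediate: unchanged */
    case 'A':                 /* absolute: unchanged */
        return word;
    case 'R':                 /* relative: add the module's base address */
        return opcode * 1000 + address + base;
    case 'E':                 /* external: address field selects a use-list entry */
        if (address < 0 || address >= nuses)
            return -1;
        return opcode * 1000 + use_addr[address];
    default:
        return -1;
    }
}
```

With the first module's use list (z, xy) resolved to the addresses 15 and 2, the call `resolve_word('E', 7001, 0, uses, 2)` yields 7002, and `resolve_word('R', 8001, 5, ...)` for the second module yields 8006, as in the memory map.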

You must process each module separately, i.e. except for the symbol

table and memory map your space requirements should be proportional to the

largest module not to the sum of the modules.

This does NOT make the lab harder.

(Unofficial) Remark:

It is faster (less I/O) to do a one pass approach, but is harder

since you need “fix-up code” whenever a use occurs in a module that

precedes the module with the definition.

The linker on unix was mistakenly called ld (for loader), which is

unfortunate since it links but does not load.

Historical remark: Unix was originally

developed at Bell Labs; the seventh edition of unix was made

publicly available (perhaps earlier ones were somewhat available).

The 7th ed man page for ld begins (see http://cm.bell-labs.com/7thEdMan).

.TH LD 1

.SH NAME

ld \- loader

.SH SYNOPSIS

.B ld

[ option ] file ...

.SH DESCRIPTION

.I Ld

combines several

object programs into one, resolves external

references, and searches libraries.

By the mid 80s the Berkeley version (4.3BSD) man page referred to ld as

"link editor" and this more accurate name is now standard in unix/linux

distributions.

During the 2004-05 fall semester a student wrote to me

“BTW - I have meant to tell you that I know the lady who

wrote ld. She told me that they called it loader, because they just

really didn't

have a good idea of what it was going to be at the time.”

Lab #1:

Implement a two-pass linker. The specific assignment is detailed on

the class home page.

================ Start Lecture #2 ================

End of Interlude on Linkers

Chapter 1: Introduction

Homework: Read Chapter 1 (Introduction)

Levels of abstraction (virtual machines)

- Software (and hardware, but that is not this course) is often implemented in layers.

- The higher layers use the facilities provided by lower layers.

- Alternatively said, the upper layers are written using a more powerful and more abstract virtual machine than the lower layers.

- Alternatively said, each layer is written as though it runs on the virtual machine supplied by the lower layer and in turn provides a more abstract (pleasant) virtual machine for the higher layer to run on.

- Using a broad brush, the layers are:

  - Applications and utilities

  - Compilers, Editors, Command Interpreter (shell, DOS prompt)

  - Libraries

  - The OS proper (the kernel, runs in privileged/kernel/supervisor mode)

  - Hardware

- Compilers, editors, shell, linkers, etc. run in user mode.

- The kernel is itself normally layered, e.g.

  - Filesystems

  - Machine independent I/O

  - Machine dependent device drivers

- The machine independent I/O part is written assuming “virtual (i.e. idealized) hardware”. For example, the machine independent I/O portion simply reads a block from a “disk”. But in reality one must deal with the specific disk controller.

- Often the machine independent part is more than one layer.

- The term OS is not well defined. Is it just the kernel? How about the libraries? The utilities? All these are certainly system software, but it is not clear how much is part of the OS.
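The split between machine independent I/O and machine dependent drivers can be illustrated with a table of function pointers. The sketch below is only a toy under stated assumptions: `struct disk_ops`, the RAM-backed "driver" `ram_read_block`, the 512-byte `BLOCK_SIZE`, and `first_byte_of_block` are all hypothetical names invented for this example.

```c
#include <string.h>

#define BLOCK_SIZE 512   /* assumed block size */

/* The "virtual disk" interface seen by machine-independent code. */
struct disk_ops {
    int (*read_block)(void *dev, unsigned block_no, unsigned char *buf);
};

/* A hypothetical machine-dependent driver: a disk kept in RAM. */
struct ram_disk {
    unsigned char *data;
    unsigned nblocks;
};

static int ram_read_block(void *dev, unsigned block_no, unsigned char *buf)
{
    struct ram_disk *d = dev;
    if (block_no >= d->nblocks)
        return -1;
    memcpy(buf, d->data + (size_t)block_no * BLOCK_SIZE, BLOCK_SIZE);
    return 0;
}

const struct disk_ops ram_disk_ops = { ram_read_block };

/* Machine-independent layer: knows nothing about the device. */
int first_byte_of_block(const struct disk_ops *ops, void *dev, unsigned block_no)
{
    unsigned char buf[BLOCK_SIZE];
    if (ops->read_block(dev, block_no, buf) != 0)
        return -1;
    return buf[0];
}
```

The upper layer calls only `ops->read_block`; supporting a different controller would mean supplying another `struct disk_ops`, leaving `first_byte_of_block` unchanged.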

1.1: What is an operating system?

The kernel itself raises the level of abstraction and hides details.

For example a user (of the kernel) can write to a file (a concept not

present in hardware) and ignore whether the file resides on a floppy,

a CD-ROM, or a hard disk.

The user can also ignore issues such as whether the file is stored

contiguously or is broken into blocks.

The kernel is a resource manager (so users don't

conflict).

How is an OS fundamentally different from a compiler (say)?

Answer: Concurrency! Per Brinch Hansen, in Operating Systems Principles (Prentice Hall, 1973), writes:

“The main difficulty of multiprogramming is that concurrent activities can interact in a time-dependent manner, which makes it practically impossible to locate programming errors by systematic testing. Perhaps, more than anything else, this explains the difficulty of making operating systems reliable.”

Homework: 1, 2. (Unless otherwise stated, problem numbers are from the end of the chapter in Tanenbaum.)

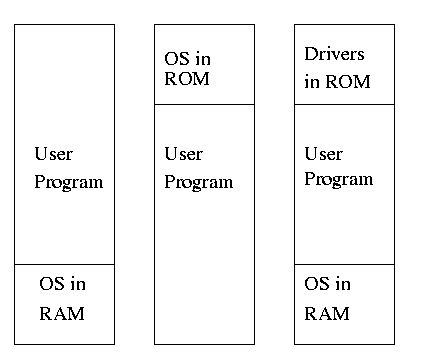

1.2 History of Operating Systems

- Single user (no OS).

- Batch, uniprogrammed, run to completion.

- The OS now must be protected from the user program so that it is

capable of starting (and assisting) the next program in the batch.

- Multiprogrammed

- The purpose was to overlap CPU and I/O

- Multiple batches

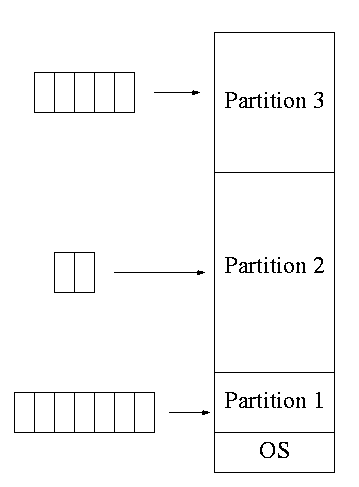

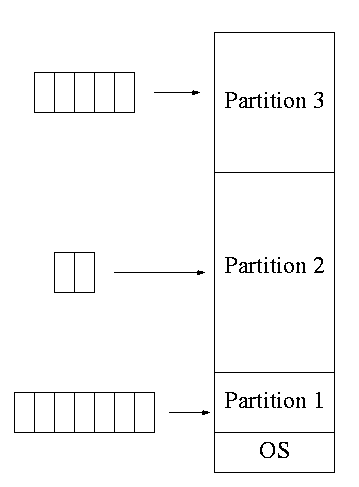

- IBM OS/MFT (Multiprogramming with a Fixed number of Tasks)

- OS for IBM system 360.

- The (real) memory is partitioned and a batch is

assigned to a fixed partition.

- The memory assigned to a

partition does not change.

- Jobs were spooled from cards into the

memory by a separate processor (an IBM 1401).

Similarly output was

spooled from the memory to a printer (a 1403) by the 1401.

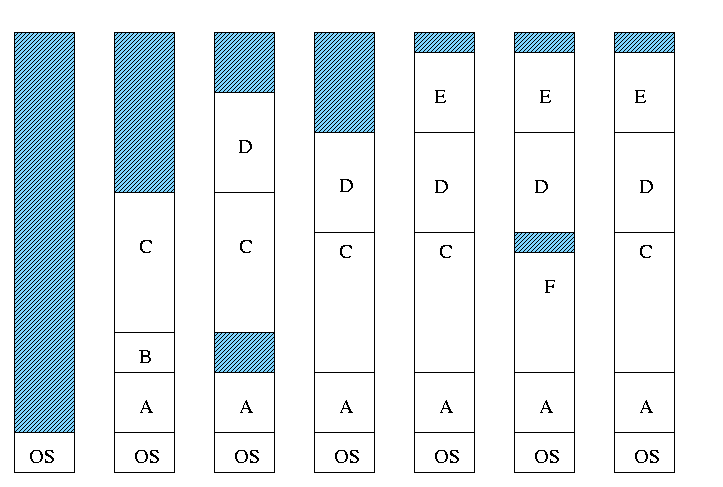

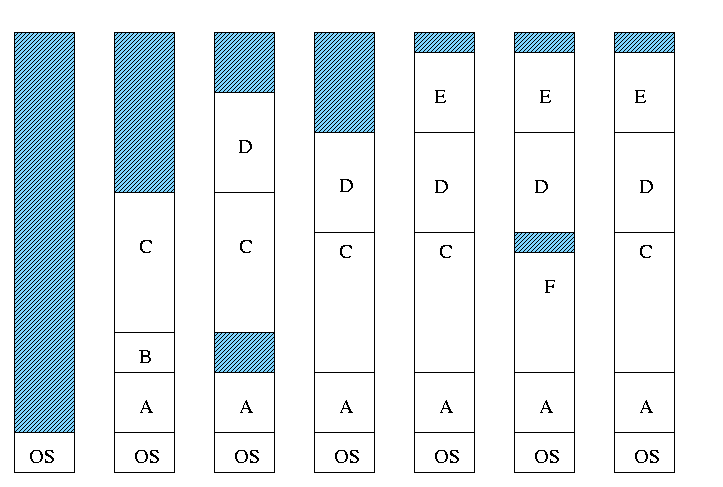

- IBM OS/MVT (Multiprogramming with a Variable number of Tasks)

(then other names)

- Each job gets just the amount of memory it needs. That

is, the partitioning of memory changes as jobs enter and leave.

- MVT is a more “efficient” user of resources, but is

more difficult.

- When we study memory management, we will see that, with

varying size partitions, questions like compaction and

“holes” arise.

- Time sharing

- This is multiprogramming with rapid switching between jobs (processes). Deciding when to switch and which process to switch to is called scheduling.

- We will study scheduling when we do processor management.

- Personal Computers

- Serious PC Operating systems such as linux, Windows NT/2000/XP and (the newest) MacOS are multiprogrammed OSes.

- GUIs have become important. Debate as to whether it should be part of the kernel.

- Early PC operating systems were uniprogrammed and their direct descendants in some sense still are (e.g. Windows ME).

Homework: 3.

1.3: OS Zoo

There is not as much difference between mainframe, server, multiprocessor, and PC OSes as Tanenbaum suggests. For example Windows NT/2000/XP, Unix and Linux are used on all.

1.3.1: Mainframe Operating Systems

Used in data centers, these systems offer tremendous I/O

capabilities and extensive fault tolerance.

1.3.2: Server Operating Systems

Perhaps the most important servers today are web servers.

Again I/O (and network) performance are critical.

1.3.3: Multiprocessor Operating systems

These existed almost from the beginning of the computer

age, but are no longer exotic.

1.3.4: PC Operating Systems (client machines)

Some OSes (e.g. Windows ME) are tailored for this application. One

could also say they are restricted to this application.

1.3.5: Real-time Operating Systems

- Often are Embedded Systems.

- Soft vs hard real time. In the latter missing a deadline is a

fatal error--sometimes literally.

- Very important commercially, but not covered much in this course.

1.3.6: Embedded Operating Systems

- The OS is “part of” the device. For example, PDAs,

microwave ovens, cardiac monitors.

- Often are real-time systems.

- Very important commercially, but not covered much in this course.

1.3.7: Smart Card Operating Systems

Very limited in power (both meanings of the word).

Multiple computers

- Network OS: Make use of the multiple PCs/workstations on a LAN.

- Distributed OS: A “seamless” version of above.

- Not part of this course (but often in G22.2251).

Homework: 5.

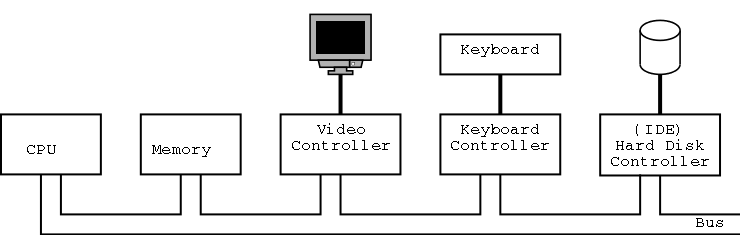

1.4: Computer Hardware Review

Tanenbaum's treatment is very brief and superficial. Mine is even

more so.

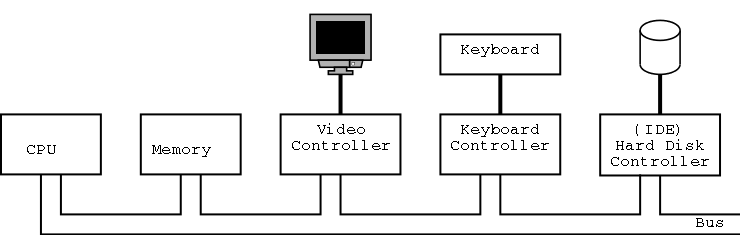

The picture above is very simplified.

(For one thing, today separate buses are used to Memory and Video.)

A bus is a set of wires that connect two or more devices.

Only one message can be on the bus at a time.

All the devices “receive” the message:

There are no switches in between to steer the message to the desired

destination, but often some of the wires form an address that

indicates which devices should actually process the message.

1.4.1: Processors

We will ignore processor concepts such as program

counters and stack pointers. We will also ignore

computer design issues such as pipelining and

superscalar. We do, however, need the notion of a

trap, that is an instruction that atomically

switches the processor into privileged mode and jumps to a pre-defined

physical address.

1.4.2: Memory

We will ignore caches, but will (later) discuss demand paging,

which is very similar (although demand paging and caches use

completely disjoint terminology).

In both cases, the goal is to combine large slow memory with small

fast memory to achieve the effect of large fast memory.

The central memory in a system is called RAM

(Random Access Memory). A key point is that it is volatile, i.e. the

memory loses its data if power is turned off.

Disk Hardware

I don't understand why Tanenbaum discusses disks here instead of in

the next section entitled I/O devices, but he does. I don't.

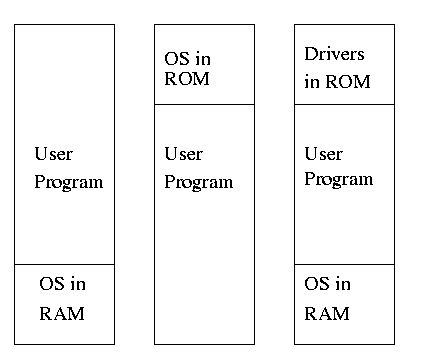

ROM / PROM / EPROM / EEPROM / Flash RAM

ROM (Read Only Memory) is used to hold data that will not change, e.g. the serial number of a computer or the program used in a microwave. ROM is non-volatile. A modern, familiar ROM is CD-ROM (or the denser DVD).

But often this unchangeable data needs to be changed (e.g., to fix bugs). This gives rise first to PROM (Programmable ROM), which, like a CD-R, can be written once (as opposed to being mass produced already written like a CD-ROM), and then to EPROM (Erasable PROM; not Erasable ROM as in Tanenbaum), which is like a CD-RW. An EPROM is especially convenient if it can be erased with a normal circuit (EEPROM, Electrically EPROM or Flash RAM).

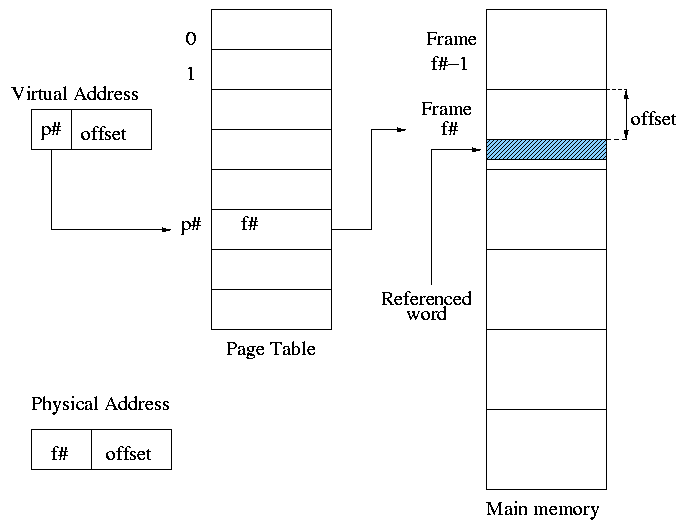

Memory Protection and Context Switching

As mentioned above when discussing OS/MFT and OS/MVT, multiprogramming requires that we protect one process from another. That is, we need to translate the virtual addresses of each program into distinct physical addresses. The hardware that performs this translation is called the MMU or Memory Management Unit.

When context switching from one process to

another, the translation must change, which can be an expensive

operation.
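As a rough illustration of such a translation, here is a sketch of the simplest scheme I can think of, a base and limit pair per process. This is an assumption chosen for brevity and is not claimed to be how any particular MMU works; `struct mmu_context` and `translate` are hypothetical names.

```c
/* Per-process translation state, loaded into the (simulated) MMU
 * on each context switch. */
struct mmu_context {
    unsigned base;    /* physical address where the process starts */
    unsigned limit;   /* size of the process's address space */
};

/* Translate a virtual address; returns 0 and stores the physical
 * address on success, -1 if the address is out of bounds. */
int translate(const struct mmu_context *ctx, unsigned vaddr, unsigned *paddr)
{
    if (vaddr >= ctx->limit)
        return -1;            /* protection violation */
    *paddr = ctx->base + vaddr;
    return 0;
}
```

Two processes with bases 1000 and 4000 can both use virtual address 100 and reach the distinct physical addresses 1100 and 4100. In this toy model a context switch amounts to handing `translate` a different `struct mmu_context`.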

1.4.3: I/O Devices

When we do I/O for real, I will show a real disk opened up and

illustrate the components

- Platter

- Surface

- Head

- Track

- Sector

- Cylinder

- Seek time

- Rotational latency

- Transfer time

Devices are often quite complicated to manage and a separate

computer, called a controller, is used to translate simple commands

(read sector 123456) into what the device requires (read cylinder 321,

head 6, sector 765). Actually the controller does considerably more,

e.g. calculates a checksum for error detection.
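The flavor of this translation can be shown with the classic mapping from a linear sector number to cylinder, head, and sector. The sketch is illustrative only: the geometry passed in is made up, sectors are numbered from 0 for simplicity, and `struct chs` and `sector_to_chs` are hypothetical names. It is not meant to reproduce the specific numbers quoted above.

```c
struct chs {
    unsigned cylinder, head, sector;
};

/* Map a linear sector number to (cylinder, head, sector) for a disk
 * with the given number of heads and sectors per track.
 * Sectors are numbered from 0 here for simplicity. */
struct chs sector_to_chs(unsigned long sector_no, unsigned heads,
                         unsigned sectors_per_track)
{
    struct chs r;
    r.sector   = (unsigned)(sector_no % sectors_per_track);
    r.head     = (unsigned)((sector_no / sectors_per_track) % heads);
    r.cylinder = (unsigned)(sector_no / ((unsigned long)heads * sectors_per_track));
    return r;
}
```

With an assumed geometry of 8 heads and 63 sectors per track, sector 123456 maps to cylinder 244, head 7, sector 39.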

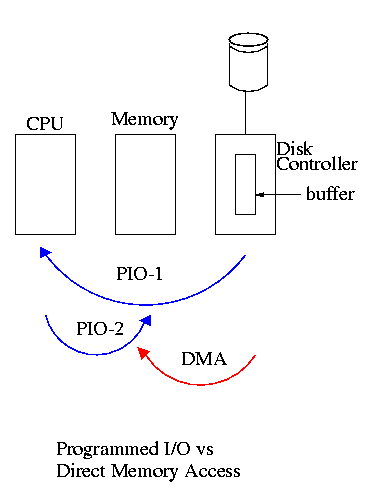

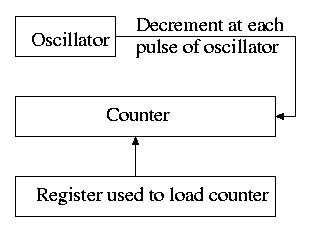

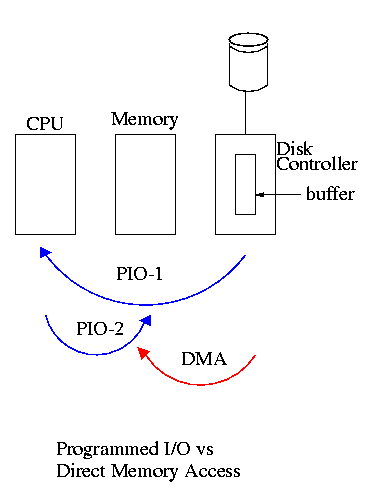

How does the OS know when the I/O is complete?

- It can busy wait, constantly asking the controller if the I/O is complete. This is the easiest (by far) but has low performance. This is also called polling or PIO (Programmed I/O).

- It can tell the controller to start the I/O and then switch to other tasks. The controller must then interrupt the OS when the I/O is done. Less waiting, but harder (concurrency!). Also, on modern processors a single interrupt is rather costly: much more than a single memory reference, but much, much less than a disk I/O.

- Some controllers can do DMA (Direct Memory Access), in which case they deal directly with memory after being started by the CPU. This takes work from the CPU and halves the number of bus accesses.

We discuss this more in chapter 5. In particular, we explain the last

point about halving bus accesses.

================ Start Lecture #3 ================

Note: Everyone has an acct on i5.nyu.edu; please confirm that

your account is set up correctly.

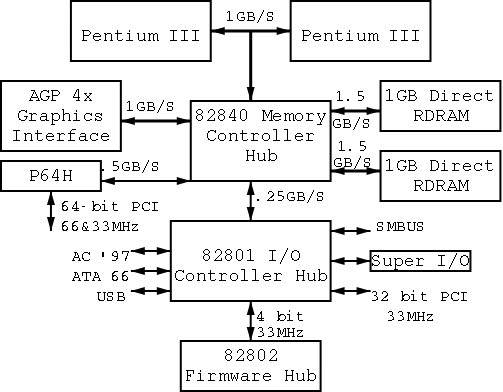

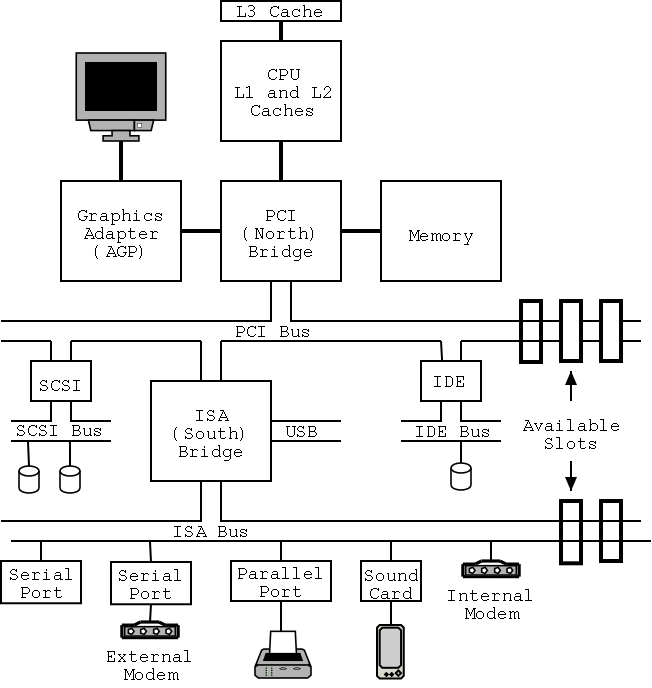

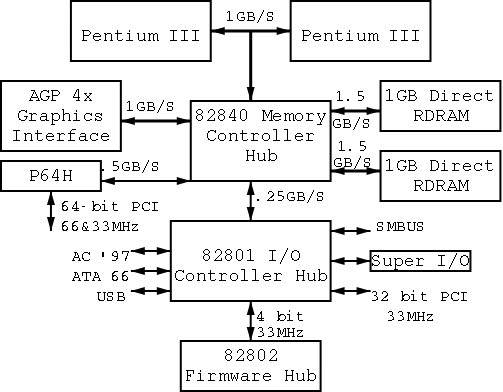

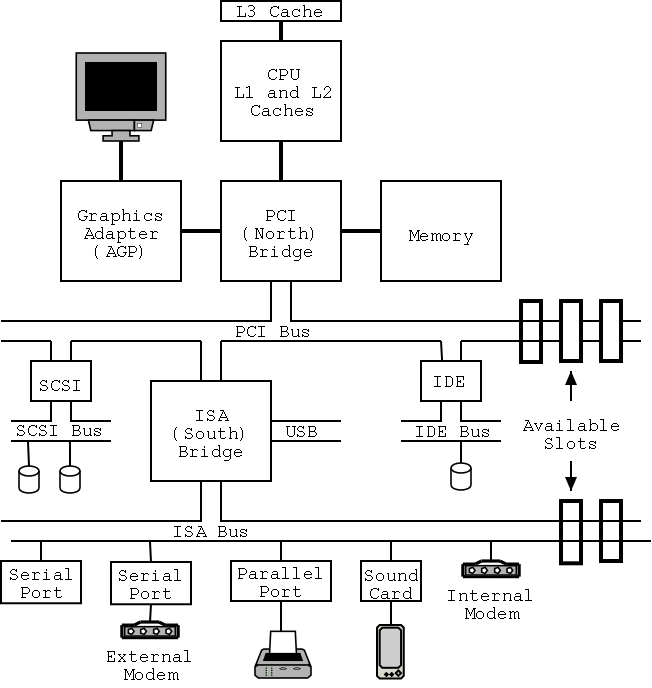

1.4.4: Buses

I don't care very much about the names of the buses, but the diagram

given in the book doesn't show a modern design. The one below

does. On the right is a figure showing the specifications for a modern chip

set (introduced in 2000). The chip set has two different width PCI

buses, which is not shown below. Instead of having the chip set

supply USB, a PCI USB controller may be used. Finally, the use of ISA

is decreasing. Indeed my last desktop didn't have an ISA bus and I

had to replace my ISA sound card with a PCI version.

1.5: Operating System Concepts

This will be very brief. Much of the rest of the course will consist in

“filling in the details”.

1.5.1: Processes

A program in execution. If you run the same program twice, you have

created two processes. For example if you have two editors running in

two windows, each instance of the editor is a separate process.

Often one distinguishes the state or context (memory image, open

files) from the thread of control. Then if one has many

threads running in the same task, the result is a

“multithreaded process”.

The OS keeps information about all processes in the process

table.

Indeed, the OS views the process as the entry.

This is an example of an active entity being viewed as a data structure (cf. discrete event simulations), an observation made by Finkel in his (out of print) OS textbook.

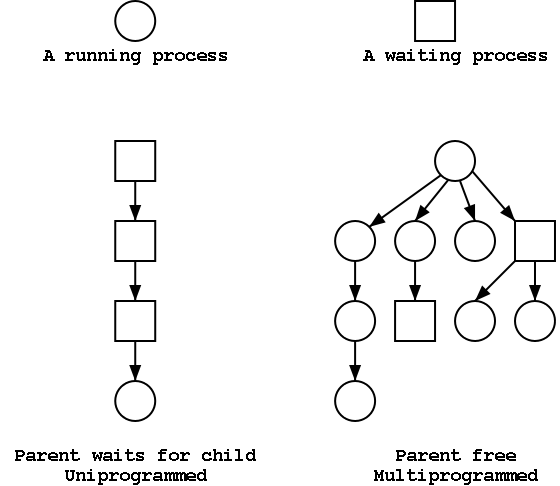

The Process Tree

The set of processes forms a tree via the fork system call. The

forker is the parent of the forkee, which is called a

child. If the system blocks the parent until

the child finishes, the “tree” is quite simple, just a line. But

the parent (in many OSes) is free to continue executing and in

particular is free to fork again producing another child.
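A minimal sketch of the parent/child relationship using the Unix calls `fork` and `waitpid`. The wrapper name `spawn_and_wait` is hypothetical, and the child here does nothing except exit with a chosen status.

```c
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

/* Fork a child that exits with the given code; the parent waits
 * for it and returns the child's exit status (or -1 on error). */
int spawn_and_wait(int exit_code)
{
    pid_t pid = fork();
    if (pid < 0)
        return -1;                 /* fork failed */
    if (pid == 0)
        _exit(exit_code);          /* child: terminate immediately */

    int status;                    /* parent: block until the child ends */
    if (waitpid(pid, &status, 0) < 0)
        return -1;
    return WIFEXITED(status) ? WEXITSTATUS(status) : -1;
}
```

Here the parent chooses to wait right away, so the "tree" is just a line. Nothing prevents the parent from calling `fork` again before `waitpid`, which would give it a second child.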

A process can send a signal to another process to

cause the latter to

execute a predefined function (the signal handler).

This can be tricky to program since the programmer does not know when

in his “main” program the signal handler will be invoked.

Each user is assigned a User IDentification (UID)

and all processes created by that user have this UID.

One UID is special (the superuser or

administrator) and has extra privileges.

A child has the same UID as its parent. It is sometimes possible to

change the UID of a running process. A group of users can be formed

and given a Group IDentification, GID.

Access to files and devices can be limited to a given UID or GID.

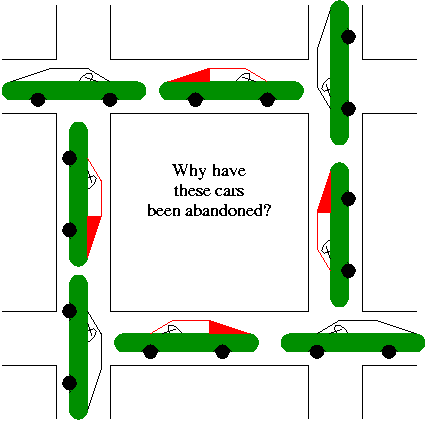

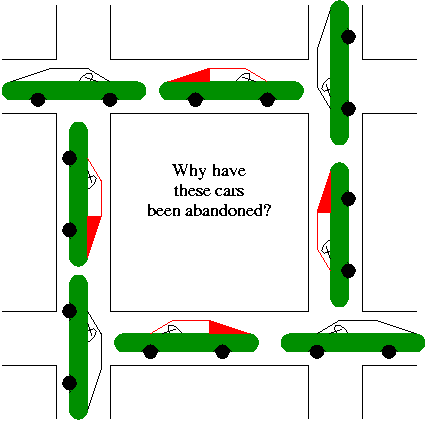

1.5.2: Deadlocks

A set of processes each of which is blocked by a process in the

set. The automotive equivalent, shown at right, is gridlock.

1.5.3: Memory Management

Each process requires memory. The linker produces a load module

that assumes the process is loaded at location 0. The operating

system ensures that the processes are actually given disjoint

memory. Current operating systems permit each process to be

given more (virtual) memory than the

total amount of (real) memory on the

machine.

1.5.4: Input/Output

There are a wide variety of I/O devices that the OS must manage.

For example, if two processes are printing at the same time, the OS

must not interleave the output. The OS contains device

specific code (drivers) for each device as well as device-independent

I/O code.

1.5.5: Files

Modern systems have a hierarchy of files. A file system tree.

- In MSDOS the hierarchy is a forest not a tree. There is no file or directory that is an ancestor of both a:\ and c:\.

- In recent versions of Windows, “My Computer” is the parent of a:\ and c:\.

- In unix the existence of (hard) links weakens the tree to a DAG (directed acyclic graph).

- Unix also has symbolic links, which when used indiscriminately, permit directed cycles (i.e., the result is not a DAG).

- Windows has shortcuts, which are similar to symbolic links.

You can name a file via an absolute path starting

at the root directory or via a relative path starting

at the current working directory.

In addition to regular files and directories, Unix also uses the file system namespace for devices (called special files), which are typically found in the /dev directory. Often utilities that are normally applied to (ordinary) files can be applied as well to some special files.

For example, when

you are accessing a unix system using a mouse and do not have anything

serious going

on (e.g., right after you log in), type the following command

cat /dev/mouse

and then move the mouse. You kill the cat by typing cntl-C. I tried

this on my linux box and no damage occurred. Your mileage may vary.

Before a file can be accessed, it must be opened

and a file descriptor obtained.

Subsequent I/O system calls (e.g., read and write) use the file

descriptor rather than the file name.

This is an optimization that enables the OS to find the file once and

save the information in a file table accessed by the file descriptor.

Many systems have standard files that are automatically made available

to a process upon startup. These (initial) file descriptors are fixed.

- standard input: fd=0

- standard output: fd=1

- standard error: fd=2
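The open-then-use-the-descriptor pattern looks roughly like this with the Unix calls `open`, `read`, and `close`. The helper name `count_bytes` and the 4096-byte buffer are my own choices for the sketch.

```c
#include <fcntl.h>
#include <unistd.h>

/* Open a file once, then use only the descriptor for the reads.
 * Returns the number of bytes in the file, or -1 on error. */
long count_bytes(const char *path)
{
    int fd = open(path, O_RDONLY);     /* name lookup happens here */
    if (fd < 0)
        return -1;

    char buf[4096];
    long total = 0;
    ssize_t n;
    while ((n = read(fd, buf, sizeof buf)) > 0)   /* descriptor, not name */
        total += n;

    close(fd);
    return n < 0 ? -1 : total;
}
```

The file name appears only in the `open` call. A process could apply the same loop to descriptor 0 without opening anything, since standard input is already available at startup.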

A convenience offered by some command interpreters is a pipe or

pipeline. The pipeline

dir | wc

which pipes the output of dir into a character/word/line counter,

will give the number of files in the directory (plus other info).
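Underneath, a shell on Unix can build such a pipeline from the `pipe` and `fork` system calls. The sketch below is a simplified stand-in, not how any particular shell is written: a child plays the producer by writing a fixed string, and the parent plays a line counter. The name `pipe_line_count` is hypothetical.

```c
#include <string.h>
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

/* A child writes text into a pipe; the parent reads the other end
 * and counts newlines, roughly what "producer | wc -l" does.
 * Returns the line count, or -1 on error. */
long pipe_line_count(const char *text)
{
    int fds[2];                       /* fds[0] = read end, fds[1] = write end */
    if (pipe(fds) < 0)
        return -1;

    pid_t pid = fork();
    if (pid < 0) {
        close(fds[0]);
        close(fds[1]);
        return -1;
    }
    if (pid == 0) {                   /* child: the producer */
        close(fds[0]);
        size_t len = strlen(text);
        while (len > 0) {
            ssize_t w = write(fds[1], text, len);
            if (w <= 0)
                _exit(1);
            text += w;
            len -= (size_t)w;
        }
        _exit(0);
    }

    close(fds[1]);                    /* parent: the consumer */
    char buf[256];
    long lines = 0;
    ssize_t n;
    while ((n = read(fds[0], buf, sizeof buf)) > 0)
        for (ssize_t i = 0; i < n; i++)
            if (buf[i] == '\n')
                lines++;
    close(fds[0]);
    waitpid(pid, NULL, 0);
    return n < 0 ? -1 : lines;
}
```

The parent closes its copy of the write end before reading; otherwise `read` would never see end of file.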

1.5.6: Security

Files and directories have associated permissions.

- Most systems supply at least rwx (readable, writable, executable).

- Separate permissions for user, group, and world.

- A more general mechanism is an access control list.

- Often files have “attributes” as well. For example the linux ext2 and ext3 file systems support a “d” attribute that is a hint to the dump program not to back up this file.

- When a file is opened, permissions are checked and, if the open is permitted, a file descriptor is returned that is used for subsequent operations.
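The rwx bits for user, group, and world are conventionally packed into nine bits, often written in octal. The following sketch assumes that Unix-style layout; the function names `mode_string` and `permitted` are hypothetical.

```c
/* Render the nine user/group/world permission bits of a mode
 * (as in Unix, e.g. octal 0754) into a string such as "rwxr-xr--".
 * out must have room for 10 characters. */
void mode_string(unsigned mode, char out[10])
{
    const char letters[] = "rwx";
    for (int i = 0; i < 9; i++) {
        unsigned bit = 1u << (8 - i);          /* highest bit is user-read */
        out[i] = (mode & bit) ? letters[i % 3] : '-';
    }
    out[9] = '\0';
}

/* May the given class (0 = user, 1 = group, 2 = world) perform
 * the access requested in want (4 = r, 2 = w, 1 = x, or a sum)? */
int permitted(unsigned mode, int who, unsigned want)
{
    unsigned bits = (mode >> (3 * (2 - who))) & 7u;
    return (bits & want) == want;
}
```

For mode 0754 the string is "rwxr-xr--": the user may write, the world may not, and the group may read and execute.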

Security has of course sadly become a very serious concern.

The topic is very serious and I do not feel that the necessarily

superficial coverage that time would permit is useful so we are not

covering the topic at all.

1.5.7: The Shell or Command Interpreter (DOS Prompt)

The command line interface to the operating system. The shell

permits the user to

- Invoke commands

- Pass arguments to the commands

- Redirect the output of a command to a file or device

- Pipe one command to another (as illustrated above via dir | wc)

Homework: 8

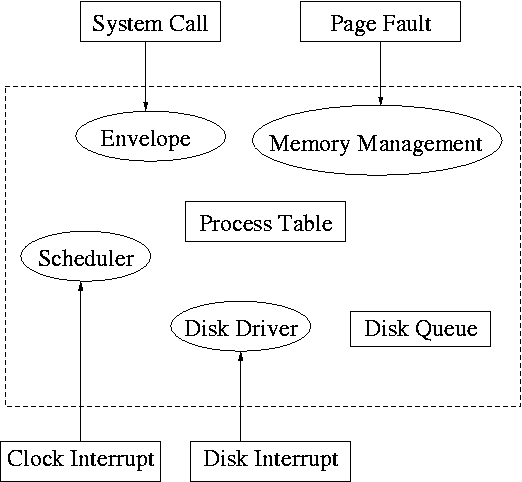

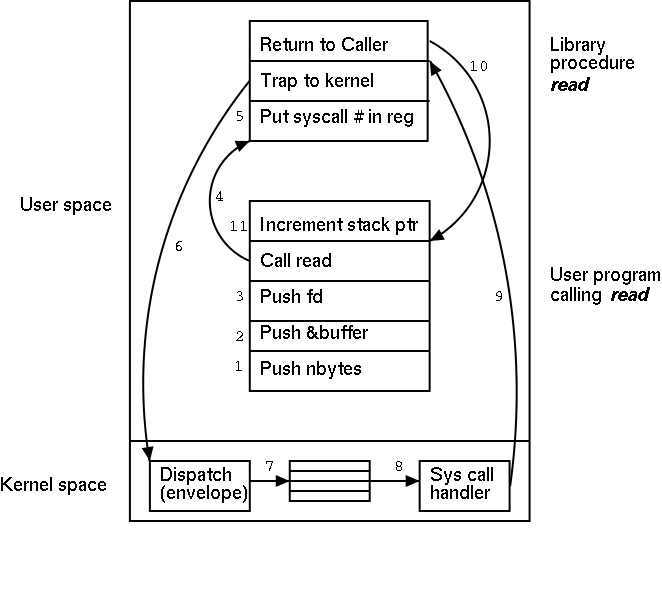

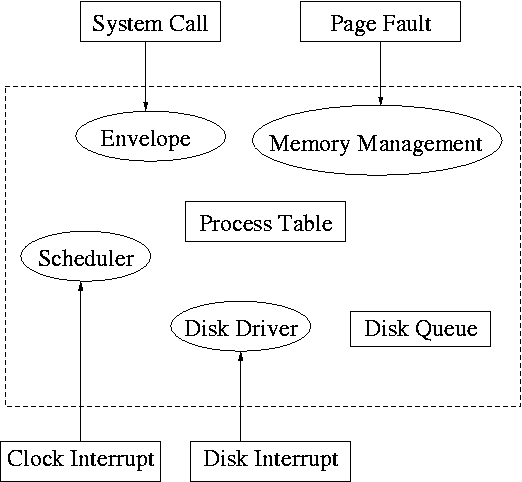

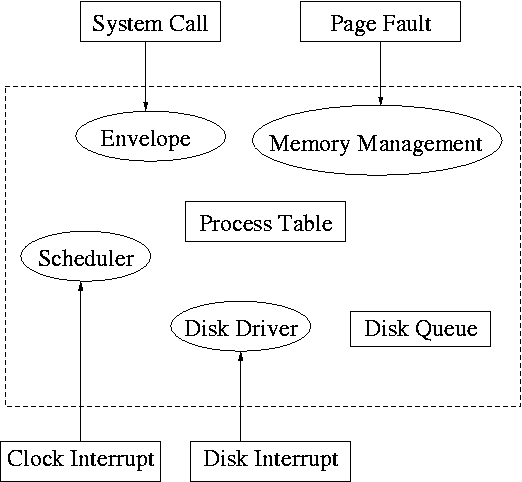

1.6: System Calls

System calls are the way a user (i.e., a program)

directly interfaces with the OS. Some textbooks use the term

envelope for the component of the OS responsible for fielding

system calls and dispatching them. On the right is a picture showing

some of the OS components and the external events for which they are

the interface.

Note that the OS serves two masters.

The hardware (below) asynchronously sends interrupts and the

user synchronously invokes system calls and generates page faults.

Homework: 14

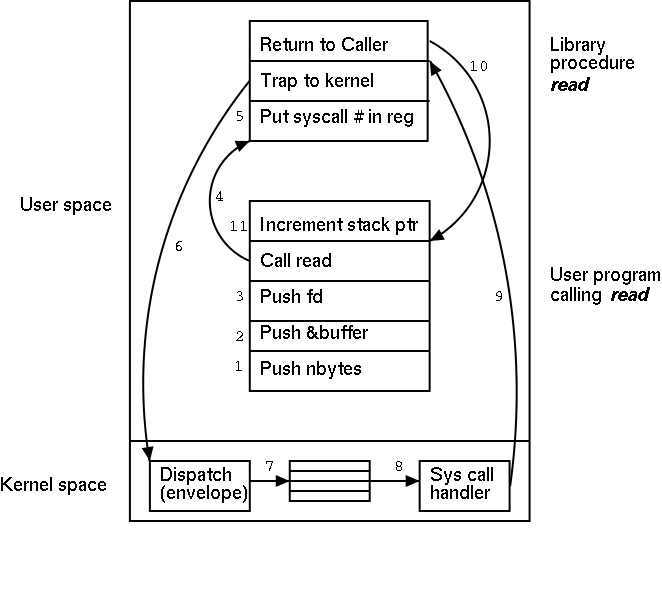

What happens when a user executes a system call such as read()?

We show a more detailed picture below, but at a high level what

happens is

- Normal function call (in C, Ada, Pascal, Java, etc.).

- Library routine (probably in C).

- Small assembler routine.

- Move arguments to predefined place (perhaps registers).

- Poof (a trap instruction) and then the OS proper runs in supervisor mode.

- Fix up result (move to correct place).

The following actions occur when the user executes the (Unix)

system call

count = read(fd,buffer,nbytes)

which reads up to

nbytes from the file described by fd into buffer. The actual number

of bytes read is returned (it might be less than nbytes if, for

example, an eof was encountered).

-

Push third parameter on to the stack.

-

Push second parameter on to the stack.

-

Push first parameter on to the stack.

-

Call the library routine, which involves pushing the return

address on to the stack and jumping to the routine.

-

Machine/OS dependent actions. One is to put the system call

number for read in a well defined place, e.g., a specific

register. This requires assembly language.

-

Trap to the kernel (assembly language). This enters the operating

system proper and shifts the computer to privileged mode.

Assembly language is again used.

-

The envelope uses the system call number to access a table of

pointers to find the handler for this system call.

-

The read system call handler processes the request (see below).

-

Some magic instruction returns to user mode and jumps to the

location right after the trap.

-

The library routine returns (there is more; e.g., the count must

be returned).

-

The stack is popped (ending the function call read).
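The table lookup done by the envelope can be sketched in a few lines. This is only a toy model in Python, not how any real kernel is written: the call numbers, the handler names `sys_read` and `sys_write`, and the function `envelope` are all made up for illustration.

```python
# Toy model of the envelope: a table of handlers indexed by system call number.
# The call numbers and handler names are hypothetical.

def sys_read(fd, nbytes):
    return f"read {nbytes} bytes from fd {fd}"

def sys_write(fd, data):
    return f"wrote {len(data)} bytes to fd {fd}"

# The "table of pointers": position i holds the handler for call number i.
SYSCALL_TABLE = [sys_read, sys_write]

def envelope(call_number, *args):
    """Check the call number, then dispatch to the matching handler."""
    if not isinstance(call_number, int) or not 0 <= call_number < len(SYSCALL_TABLE):
        return -1                      # unknown system call: report an error
    return SYSCALL_TABLE[call_number](*args)
```

The user-level library routine only has to know the number; which code actually runs is decided entirely by the table inside the OS.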

A major complication is that the system call handler may block.

Indeed for read it is likely that a block will occur. In that case a

switch occurs to another process. This is far from trivial and is

discussed later in the course.
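The "up to nbytes" behavior of read is easy to observe. The sketch below uses Python's `os.read`, a thin wrapper around the Unix call that returns the bytes read rather than a count; the helper name `read_twice` is hypothetical, and the data is assumed to be small enough to fit in the pipe's buffer so that the write does not block.

```python
import os

def read_twice(data, nbytes):
    """Write data into a pipe, close the write end, then call read twice."""
    rfd, wfd = os.pipe()
    os.write(wfd, data)
    os.close(wfd)                   # no more data can arrive, so EOF follows the data
    first = os.read(rfd, nbytes)    # returns at most nbytes bytes
    second = os.read(rfd, nbytes)   # returns what is left (empty at EOF)
    os.close(rfd)
    return first, second
```

Asking for 100 bytes when only 5 are available returns just those 5, and the next read returns an empty result to signal eof.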

================ Start Lecture #4 ================

Process Management

| Posix | Win32 | Description |
|---|---|---|
| fork | CreateProcess | Clone current process |
| exec(ve) | | Replace current process |
| waitpid | WaitForSingleObject | Wait for a child to terminate |
| exit | ExitProcess | Terminate current process & return status |

File Management

| Posix | Win32 | Description |
|---|---|---|
| open | CreateFile | Open a file & return descriptor |
| close | CloseHandle | Close an open file |
| read | ReadFile | Read from file to buffer |
| write | WriteFile | Write from buffer to file |
| lseek | SetFilePointer | Move file pointer |
| stat | GetFileAttributesEx | Get status info |

Directory and File System Management

| Posix | Win32 | Description |
|---|---|---|
| mkdir | CreateDirectory | Create new directory |
| rmdir | RemoveDirectory | Remove empty directory |
| link | (none) | Create a directory entry |
| unlink | DeleteFile | Remove a directory entry |
| mount | (none) | Mount a file system |
| umount | (none) | Unmount a file system |

Miscellaneous

| Posix | Win32 | Description |
|---|---|---|
| chdir | SetCurrentDirectory | Change the current working directory |
| chmod | (none) | Change permissions on a file |
| kill | (none) | Send a signal to a process |
| time | GetLocalTime | Elapsed time since 1 Jan 1970 |

A Few Important Posix/Unix/Linux and Win32 System Calls

The table above shows some system calls; the descriptions

are accurate for Unix and close for Win32. To show how the four

process management calls enable much of process management, consider

the following highly simplified shell. (The fork() system call

returns a nonzero value, the child's process id, in the parent and zero in the child; that is, it returns true in the parent and false in the child.)

    while (true)
        display_prompt()
        read_command(command)
        if (fork() != 0)
            waitpid(...)
        else
            execve(command)
        endif
    endwhile

Simply removing the waitpid(...) gives background jobs.
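The simplified shell can be tried out almost verbatim in Python, whose `os` module exposes fork, the exec family, and waitpid. This is a sketch under assumptions, not a real shell: the names `run_command` and `shell` are hypothetical, `execvp` is used in place of execve so that the command is searched for on the PATH, and the exit status 127 for a failed exec is just a common convention.

```python
import os

def run_command(argv, background=False):
    """Fork a child that execs argv; the parent optionally waits for it."""
    pid = os.fork()
    if pid != 0:
        # Parent: fork returned the (nonzero) pid of the child.
        if background:
            return pid                     # no waitpid: a background job
        _, status = os.waitpid(pid, 0)     # block until the child terminates
        return os.WEXITSTATUS(status) if os.WIFEXITED(status) else -1
    # Child: fork returned 0. Replace this process with the command.
    try:
        os.execvp(argv[0], argv)
    finally:
        os._exit(127)                      # reached only if the exec failed

def shell():
    """The loop from the pseudocode: prompt, read a command, run it."""
    while True:
        try:
            line = input("$ ")             # display_prompt + read_command
        except EOFError:
            break
        if line.strip():
            run_command(line.split())
```

A background job started this way should still be waited for eventually; otherwise the terminated child lingers as a zombie.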

Homework: 18.

1.6A: Addendum on Transfer of Control

The transfer of control between user processes and the operating

system kernel can be quite complicated, especially in the case of

blocking system calls, hardware interrupts, and page faults.

Before tackling these issues later, we begin with the familiar example

(discussed at length in 101 and 102)

of a procedure call within a user-mode process.

An important OS objective is that, even in the more complicated

cases of page faults and blocking system calls requiring device

interrupts, simple procedure call semantics are observed

from a user process viewpoint.

The complexity is hidden inside the kernel itself, yet another example

of the operating system providing a more abstract, i.e., simpler,

virtual machine to the user processes.

More details will be added when we study memory management (and know

officially about page faults)

and more again when we study I/O (and know officially about device

interrupts).

A number of the points below are far from standardized.

Such items as where parameters are placed, which routine saves the

registers, exact semantics of trap, etc., vary as one changes

language/compiler/OS.

Indeed some of these are referred to as “calling conventions”,

i.e. their implementation is a matter of convention rather than

logical requirement.

The presentation below is, we hope, reasonable, but must be viewed as

a generic description of what could happen instead of an exact

description of what does happen with, say, C compiled by the Microsoft

compiler running on Windows NT.

1.6A.1: User-mode procedure calls

Procedure f calls g(a,b,c) in process P.

Actions by f prior to the call:

1. Save the registers by pushing them onto the stack (in some
implementations this is done by g instead of f).

2. Push arguments c,b,a onto P's stack.
Note: Stacks usually grow downward from the top of P's segment, so pushing
an item onto the stack actually involves decrementing the stack
pointer, SP.
Note: Some compilers store arguments in registers, not on the stack.

Executing the call itself:

3. Execute PUSHJ <start-address of g>.
This instruction pushes the program counter PC onto the stack, and then jumps to
the start address of g.
The value pushed is actually the updated program counter, i.e.,
the location of the next instruction (the instruction to be
executed by f when g returns).

Actions by g upon being called:

4. Allocate space for g's local variables by suitably decrementing SP.

5. Start execution from the beginning of g, referencing the
parameters as needed.
The execution may involve calling other procedures, possibly
including recursive calls to f and/or g.

Actions by g when returning to f:

6. If g is to return a value, store it in the conventional place.

7. Undo step 4: Deallocate local variables by incrementing SP.

8. Undo step 3: Execute POPJ, i.e., pop the stack and set PC to the
value popped, which is the return address pushed in step 3.

Actions by f upon the return from g:

9. We are now at the step in f immediately following the call to g.
Undo step 2: Remove the arguments from the stack by incrementing
SP.

10. (Sort of) undo step 1: Restore the registers by popping the
stack.

11. Continue the execution of f, referencing the returned value of g,
if any.

Properties of (user-mode) procedure calls:

-

Predictable (often called synchronous) behavior: The author of f

knows where and hence when the call to g will occur. There are no

surprises, so it is relatively easy for the programmer to ensure

that f is prepared for the transfer of control.

-

LIFO (“stack-like”) structure of control transfer: we

can be sure that control will return to f when this call

to g exits. The above statement holds even if, via recursion, g

calls f. (We are ignoring language features such as

“throwing” and “catching” exceptions, and

the use of unstructured assembly coding; in the latter case all

bets are off.)

-

Entirely in user mode and user space.
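The bookkeeping for f calling g(a,b,c) can be traced with a toy machine. The following Python sketch is only a model under stated assumptions: the class `Machine`, the addresses 100 and 200, and the two local variables are invented for illustration, and register saving is left out.

```python
class Machine:
    """Toy machine: a downward-growing stack in a list, plus SP and PC."""

    def __init__(self, size=16):
        self.mem = [None] * size
        self.sp = size              # empty stack: SP is just past the top of memory
        self.pc = 0

    def push(self, value):
        self.sp -= 1                # pushing decrements SP
        self.mem[self.sp] = value

    def pop(self):
        value = self.mem[self.sp]
        self.sp += 1                # popping increments SP
        return value

    def pushj(self, target):
        self.push(self.pc + 1)      # save the address of the next instruction
        self.pc = target            # jump to the callee

    def popj(self):
        self.pc = self.pop()        # return to the saved address


def call_sequence(a, b, c, call_site=100, g_start=200, n_locals=2):
    m = Machine()
    sp_at_start = m.sp
    for arg in (c, b, a):           # f pushes the arguments c, b, a
        m.push(arg)
    m.pc = call_site
    m.pushj(g_start)                # push the return address and jump to g
    pc_in_g = m.pc
    m.sp -= n_locals                # g allocates its local variables
    first_arg = m.mem[m.sp + n_locals + 1]   # a sits just above the return address
    m.sp += n_locals                # g deallocates its local variables
    m.popj()                        # g returns to f
    m.sp += 3                       # f removes the three arguments
    return pc_in_g, first_arg, m.pc, m.sp == sp_at_start
```

The last component of the result checks the LIFO property: once the call is over, the stack pointer is back where it started.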

1.6A.2: Kernel-mode procedure calls

We mean one procedure running in kernel mode calling another

procedure, which will also be run in kernel mode. Later, we will

discuss switching from user to kernel mode and back.

There is not much difference between the actions taken during a

kernel-mode procedure call and during a user-mode procedure call. The

procedures executing in kernel-mode are permitted to issue privileged

instructions, but the instructions used for transferring control are

all unprivileged so there is no change in that respect.

One difference is that a different stack is used in kernel

mode, but that simply means that the stack pointer must be set to the

kernel stack when switching from user to kernel mode. But we are

not switching modes in this section; the stack pointer already points

to the kernel stack.

1.6A.3: The Trap instruction

The trap instruction, like a procedure call, is a synchronous

transfer of control:

We can see where, and hence when, it is executed; there are no

surprises.

Although not surprising, the trap instruction does have an

unusual effect: processor execution is switched from user-mode to

kernel-mode. That is, the trap instruction itself is executed in

user-mode (it is naturally an UNprivileged instruction) but

the next instruction executed (which is NOT the instruction

written after the trap) is executed in kernel-mode.

Process P, running in unprivileged (user) mode, executes a trap.

The code being executed was written in assembler since there are no

high level languages that generate a trap instruction.

There is no need to name the function that is executing.

Compare the following example to the explanation of “f calls g”

given above.

Actions by P prior to the trap:

1. Save the registers by pushing them onto the stack.

2. Store any arguments that are to be passed.
The stack is not normally used to store these arguments since the
kernel has a different stack.
Often registers are used.

Executing the trap itself:

3. Execute TRAP <trap-number>.
This instruction switches the processor to kernel (privileged) mode, jumps to a
location in the OS determined by trap-number, and saves the return
address.
For example, the processor may be designed so that the next
instruction executed after a trap is at physical address 8 times the
trap-number.
The trap-number should be thought of as the “name” of the
code-sequence to which the processor will jump rather than as an
argument to trap.
Indeed arguments to trap are established before the trap is executed.

Actions by the OS upon being TRAPped into:

4. Jump to the real code.
Recall that trap instructions with different trap numbers jump to
locations very close to each other.
There is not enough room between them for the real trap handler.
Indeed one can think of the trap as having an extra level of
indirection; it jumps to a location that then jumps to the real
start address. If you learned about writing jump tables in
assembler, this is very similar.

5. Check all arguments passed. The kernel must be paranoid and
assume that the user mode program is evil and written by a
bad guy.

6. Allocate space by decrementing the kernel stack pointer.
The kernel and user stacks are separate.

7. Start execution from the jumped-to location, referencing the
parameters as needed.

Actions by the OS when returning to user mode:

8. Undo step 6: Deallocate space by incrementing the kernel stack
pointer.

9. Undo step 3: Execute (in assembler) another special instruction,
RTI or ReTurn from Interrupt, which returns the processor to user
mode and transfers control to the return location saved by the trap.

Actions by P upon the return from the OS:

10. We are now at the instruction right after the trap.
Undo step 1: Restore the registers by popping the stack.

11. Continue the execution of P, referencing the returned value(s) of
the trap, if any.

Properties of TRAP/RTI:

- Synchronous behavior: The author of the assembly code in P

knows where and hence when the trap will occur. There are no

surprises, so it is relatively easy for the programmer to prepare

for the transfer of control.

- Trivial control transfer when viewed from P:

The next instruction of P that will be executed is the

one following the trap.

As we shall see later, other processes may execute between P's

trap and the next P instructions.

- Starts and ends in user mode and user space, but executed in

kernel mode and kernel space in the middle.

Remark:

A good way to use the material in the addendum is to compare the first

case (user-mode f calls user-mode g) to the TRAP/RTI case line by line

so that you can see the similarities and differences.

Homework solutions posted, give passwd.

TAs assigned

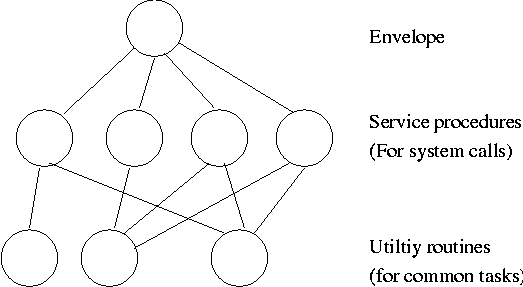

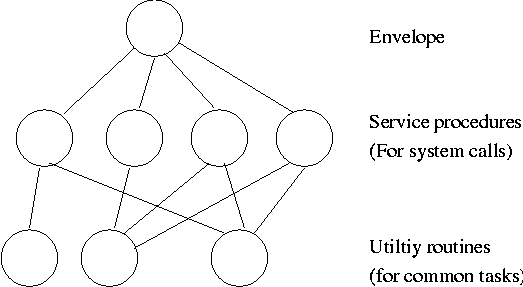

1.7: OS Structure

I must note that Tanenbaum is a big advocate of the so called

microkernel approach in which as much as possible is moved out of the

(supervisor mode) kernel into separate processes. The (hopefully

small) portion left in supervisor mode is called a microkernel.

In the early 90s this was popular. Digital Unix (now called Tru64)

and Windows NT/2000/XP are examples. Digital Unix is based on Mach, a

research OS from Carnegie Mellon University. Lately, the growing

popularity of Linux has called into question the belief that “all new

operating systems will be microkernel based”.

1.7.1: Monolithic approach

The previous picture: one big program

The system switches from user mode to kernel mode during the poof and

then back when the OS does a “return” (an RTI or return

from interrupt).

But of course we can structure the system better, which brings us to.

1.7.2: Layered Systems

Some systems have more layers and are more strictly structured.

An early layered system was “THE” operating system by Dijkstra. The

layers were:

- The operator

- User programs

- I/O mgt

- Operator-process communication

- Memory and drum management

The layering was done by convention, i.e., there was no enforcement by

hardware and the entire OS was linked together as one program. This is

true of many modern operating systems as well (e.g., Linux).

The Multics system was layered in a more formal manner. The hardware

provided several protection layers and the OS used them. That is,

arbitrary code could not jump to or access data in a more protected layer.

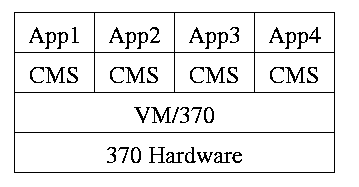

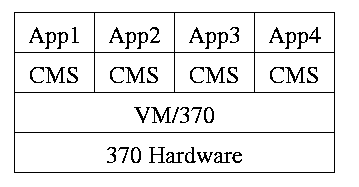

1.7.3: Virtual Machines

Use a “hypervisor” (beyond supervisor, i.e. beyond a normal OS) to

switch between multiple Operating Systems. Made popular by

IBM's VM/CMS

-

Each App/CMS runs on a virtual 370.

-

CMS is a single user OS.

-

A system call in an App (application) traps to the corresponding CMS.

-

CMS believes it is running on the machine so issues I/O

instructions but ...

-

... I/O instructions in CMS trap to VM/370.

-

This idea is still used.

A modern version (used to “produce” a multiprocessor from many

uniprocessors) is “Cellular Disco”, ACM TOCS, Aug. 2000.

- Another modern usage is the JVM, the “Java Virtual Machine”.

1.7.4: Exokernels (unofficial)

Similar to VM/CMS but the virtual machines have disjoint resources

(e.g., distinct disk blocks) so less remapping is needed.

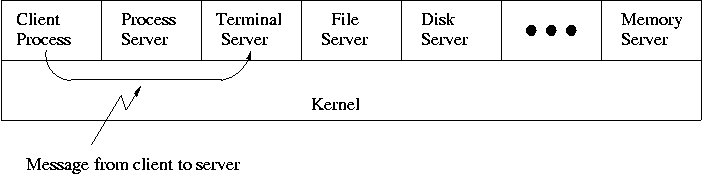

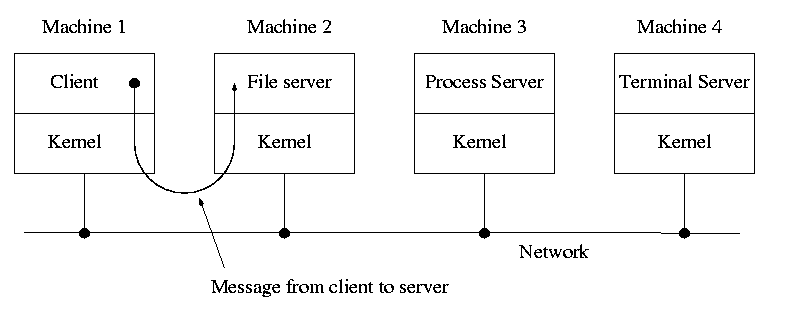

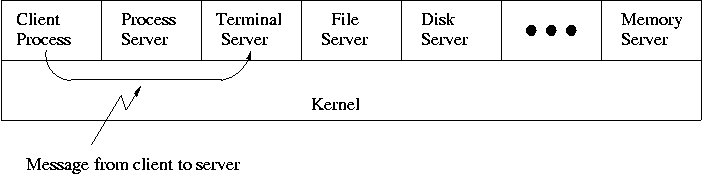

1.7.5: Client-Server

When implemented on one computer, a client-server OS uses the

microkernel approach in which the microkernel just handles

communication between clients and servers, and the main OS functions

are provided by a number of separate processes.

This does have advantages. For example an error in the file server

cannot corrupt memory in the process server. This makes errors easier

to track down.

But it does mean that when a (real) user process makes a system call

there are more process switches. These are

not free.

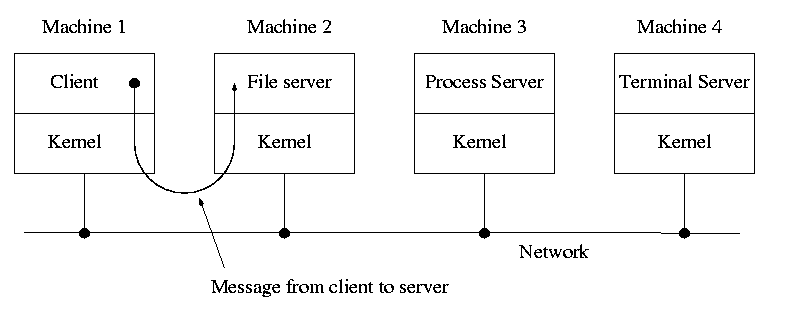

A distributed system can be thought of as an extension of the

client server concept where the servers are remote.

Today with plentiful memory, each machine would have all the

different servers. So the only reason a message would go to another

computer is if the originating process wished to communicate with a

specific process on that computer (for example wanted to access a

remote disk).

Homework: 23

Microkernels Not So Different In Practice

Dennis Ritchie, the inventor of the C programming language and

co-inventor, with Ken Thompson, of Unix was interviewed in February

2003. The following is from that interview.

What's your opinion on microkernels vs. monolithic?

Dennis Ritchie: They're not all that different when you actually

use them. "Micro" kernels tend to be pretty large these days, and

"monolithic" kernels with loadable device drivers are taking up more

of the advantages claimed for microkernels.

Chapter 2: Process and Thread Management

Tanenbaum's chapter title is “Processes and Threads”.

I prefer to add the word management. The subject matter is processes,

threads, scheduling, interrupt handling, and IPC (InterProcess

Communication--and Coordination).

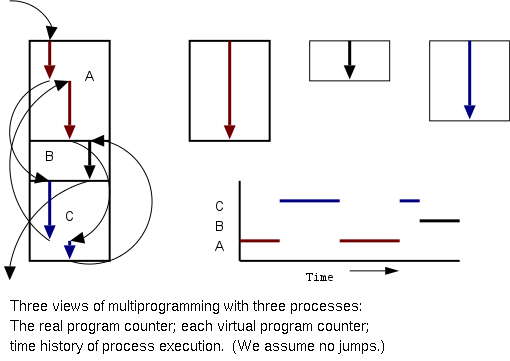

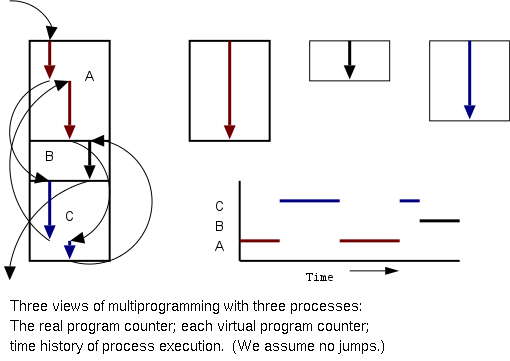

2.1: Processes

Definition: A process is a

program in execution.

-

We are assuming a multiprogramming OS that

can switch from one process to another.

-

Sometimes this is

called pseudoparallelism since one has the illusion of a

parallel processor.

-

The other possibility is real

parallelism in which two or more processes are actually running

at once because the computer system is a parallel processor, i.e., has

more than one processor.

-

We do not study real parallelism (parallel

processing, distributed systems, multiprocessors, etc) in this course.

2.1.1: The Process Model

Even though in actuality there are many processes running at once, the

OS gives each process the illusion that it is running alone.

-

Virtual time: The time used by just this

process. Virtual time progresses at

a rate independent of other processes. Actually, this is false; the

virtual time is

typically incremented a little during the system calls used for process

switching, so if there are more other processes, more “overhead”

virtual time occurs.

-

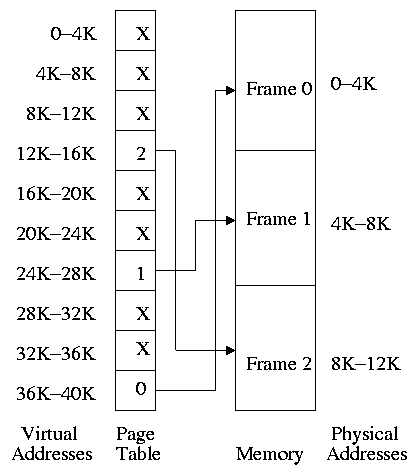

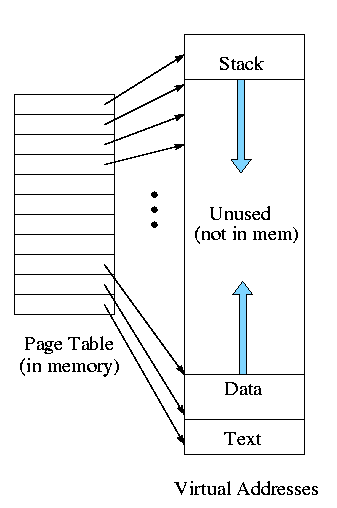

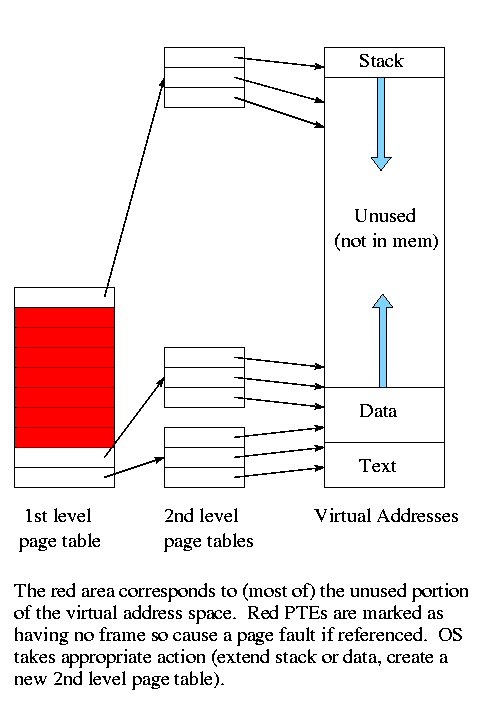

Virtual memory:

The memory as viewed by the

process. Each process typically believes it has a contiguous chunk of

memory starting at location zero. Of course this can't be true of all

processes (or they would be using the same memory) and in modern

systems it is actually true of no processes (the memory assigned is

not contiguous and does not include location zero).

Think of the individual modules that are input to the linker.

Each numbers its addresses from zero;

the linker eventually translates these relative addresses into

absolute addresses.

That is, the linker provides to the assembler a virtual memory in which

addresses start at zero.

Virtual time and virtual memory are examples of abstractions

provided by the operating system to the user processes so that the

latter “see” a more pleasant virtual machine than actually exists.

================ Start Lecture #5 ================

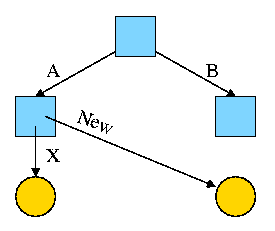

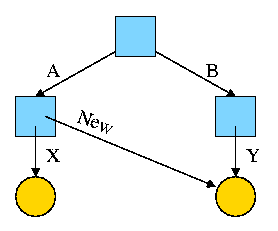

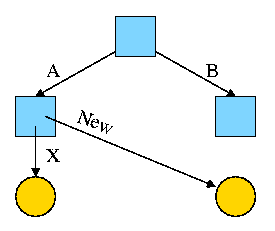

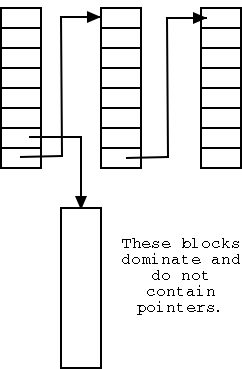

2.1.2: Process Creation

From the user's or external viewpoint there are several mechanisms

for creating a process.

-

System initialization, including daemon (see below) processes.

-

Execution of a process creation system call by a running process.

-

A user request to create a new process.

-

Initiation of a batch job.

But looked at internally, from the system's viewpoint, the second

method dominates. Indeed in unix only one process is created at

system initialization (the process is called init); all the

others are children of this first process.

Why have init? That is, why not have all processes created via

method 2?

Ans: Because without init there would be no running process to create

any others.

Definition of daemon

Many systems have daemon processes lurking around to perform

tasks when they are needed.

I was pretty sure the terminology was

related to mythology, but didn't have a reference until

a student found

“The {Searchable} Jargon Lexicon”

at http://developer.syndetic.org/query_jargon.pl?term=demon

daemon: /day'mn/ or /dee'mn/ n. [from the mythological meaning, later

rationalized as the acronym `Disk And Execution MONitor'] A program

that is not invoked explicitly, but lies dormant waiting for some

condition(s) to occur. The idea is that the perpetrator of the

condition need not be aware that a daemon is lurking (though often a

program will commit an action only because it knows that it will

implicitly invoke a daemon). For example, under {ITS}, writing a file

on the LPT spooler's directory would invoke the spooling daemon, which

would then print the file. The advantage is that programs wanting (in

this example) files printed need neither compete for access to nor

understand any idiosyncrasies of the LPT. They simply enter their

implicit requests and let the daemon decide what to do with

them. Daemons are usually spawned automatically by the system, and may

either live forever or be regenerated at intervals. Daemon and demon

are often used interchangeably, but seem to have distinct

connotations. The term `daemon' was introduced to computing by CTSS

people (who pronounced it /dee'mon/) and used it to refer to what ITS

called a dragon; the prototype was a program called DAEMON that

automatically made tape backups of the file system. Although the

meaning and the pronunciation have drifted, we think this glossary

reflects current (2000) usage.

2.1.3: Process Termination

Again from the outside there appear to be several termination

mechanisms.

-

Normal exit (voluntary).

-

Error exit (voluntary).

-

Fatal error (involuntary).

-

Killed by another process (involuntary).

And again, internally the situation is simpler. In Unix

terminology, there are two system calls kill and

exit that are used. Kill (poorly named in my view) sends a

signal to another process. If this signal is not caught (via the

signal system call) the process is terminated. There

is also an “uncatchable” signal. Exit is used for self termination

and can indicate success or failure.
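Both termination paths can be observed from a parent process. The sketch below is a minimal illustration using Python's `os.fork`, `os.kill`, and `os.waitpid`; the function names are hypothetical, `os._exit` stands in for the exit system call, and SIGKILL (the uncatchable signal) is chosen as the default so that the outcome does not depend on any handlers the child might have.

```python
import os
import signal
import time

def describe(status):
    """Decode the status word returned by waitpid."""
    if os.WIFEXITED(status):
        return ("exit", os.WEXITSTATUS(status))
    if os.WIFSIGNALED(status):
        return ("signal", os.WTERMSIG(status))
    return ("other", status)

def child_exits(code):
    """The child terminates itself with exit; the parent collects the status."""
    pid = os.fork()
    if pid == 0:
        os._exit(code)                 # child: self termination
    _, status = os.waitpid(pid, 0)
    return describe(status)

def child_killed(sig=signal.SIGKILL):
    """The parent sends a signal with kill; an uncaught signal ends the child."""
    pid = os.fork()
    if pid == 0:
        try:
            while True:                # child: idle until a signal arrives
                time.sleep(1)
        finally:
            os._exit(1)
    os.kill(pid, sig)
    _, status = os.waitpid(pid, 0)
    return describe(status)
```

The status word tells the parent which of the two mechanisms ended the child, and either the exit code or the signal number.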

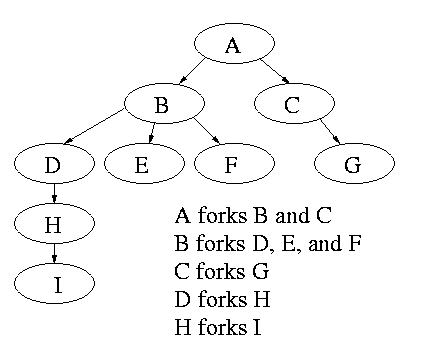

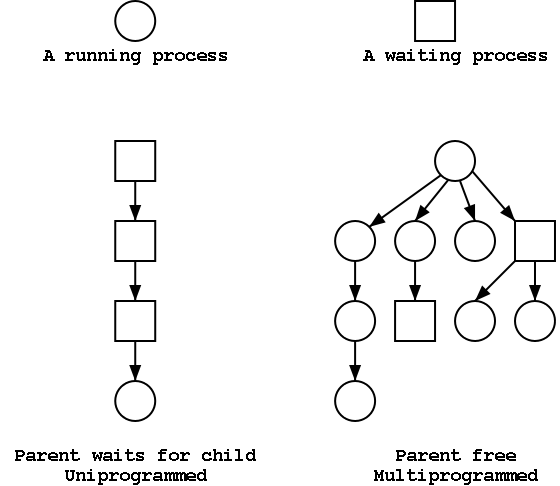

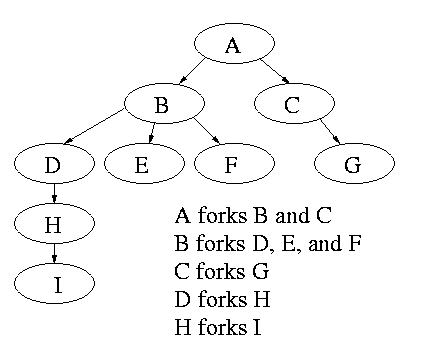

2.1.4: Process Hierarchies

Modern general purpose operating systems permit a user to create and

destroy processes.

-

In unix this is done by the fork

system call, which creates a child process, and the

exit system call, which terminates the current

process.

-

After a fork both parent and child keep running (indeed they

have the same program text) and each can fork off other

processes.

-

A process tree results. The root of the tree is a special

process created by the OS during startup.

-

A process can choose to wait for children to terminate.

For example, if C issued a wait() system call it would block until G

finished.

Old or primitive operating systems like MS-DOS are not fully

multiprogrammed, so when one process starts another, the first process

is automatically blocked and waits until the second is

finished.

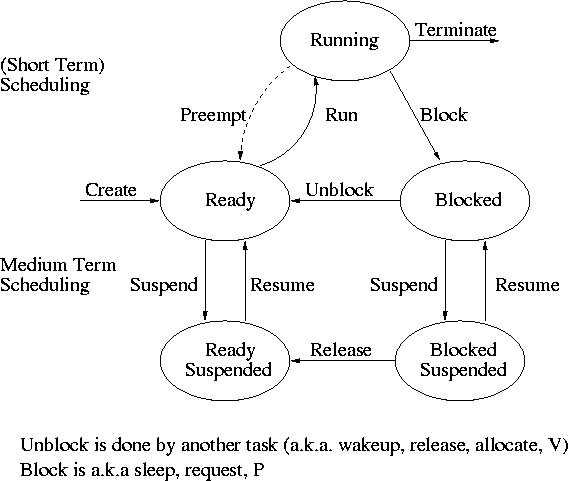

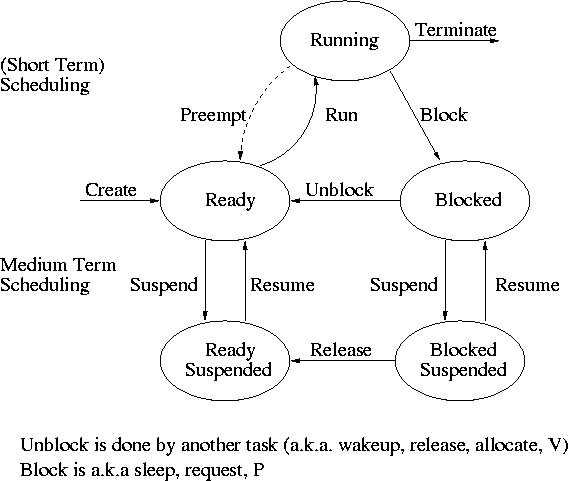

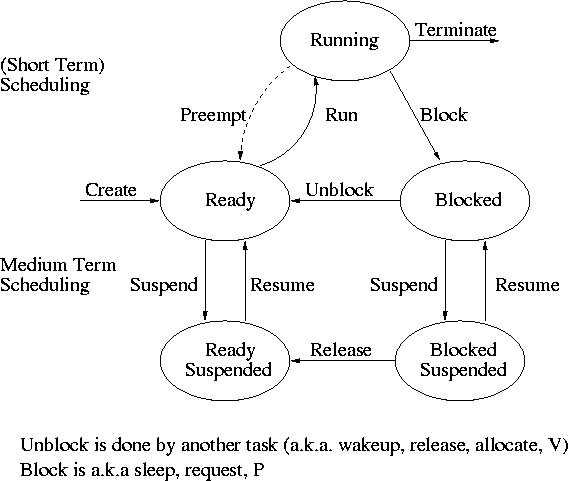

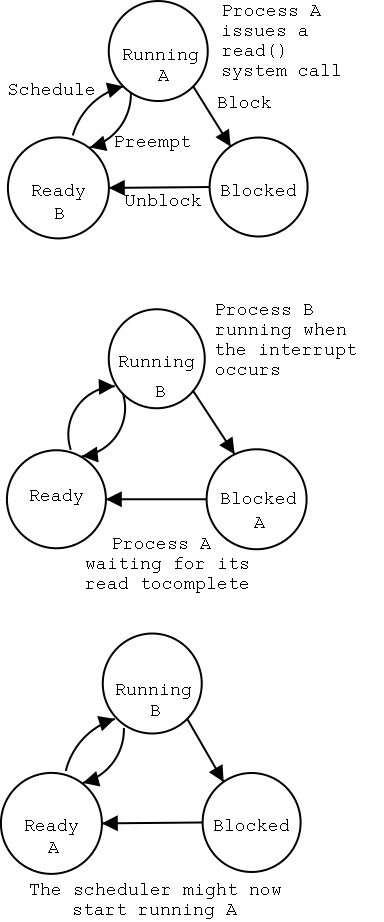

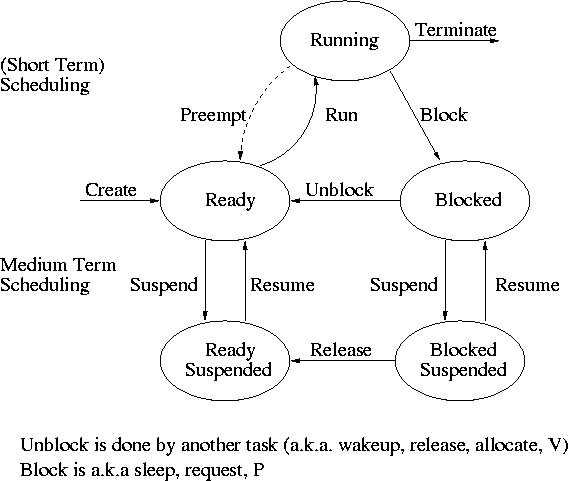

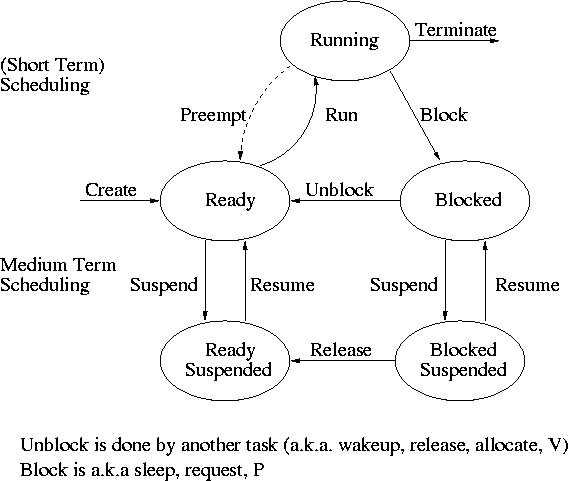

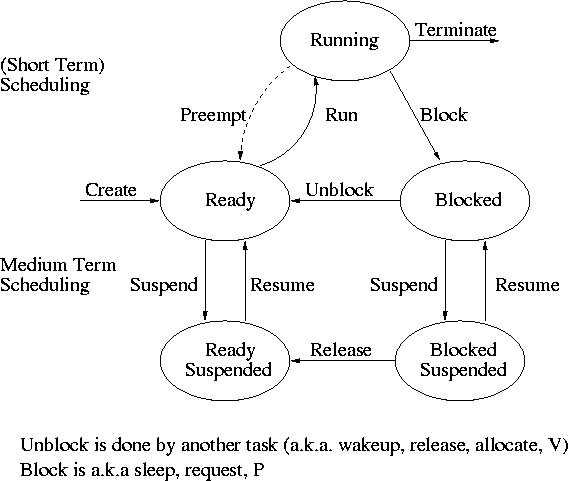

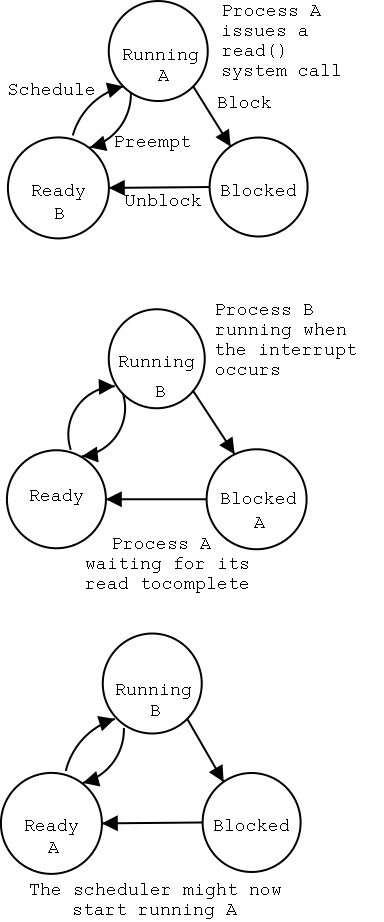

2.1.5: Process States and Transitions

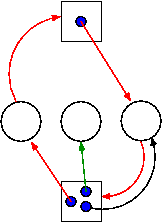

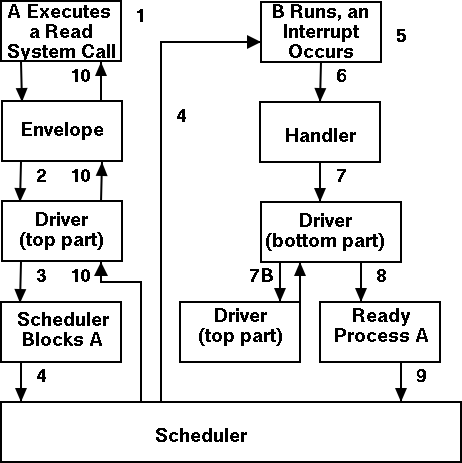

The diagram on the right contains much information.

-

Consider a running process P that issues an I/O request

-

The process blocks

-

At some later point, a disk interrupt occurs and the driver

detects that P's request is satisfied.

-

P is unblocked, i.e. is moved from blocked to ready

-

At some later time the operating system scheduler looks for a

ready job to run and picks P.

-

A preemptive scheduler has the dotted line preempt;

a non-preemptive scheduler doesn't.

-

The number of processes changes only for two arcs: create and

terminate.

-

Suspend and resume are medium term scheduling

-

Done on a longer time scale.

-

Involves memory management as well.

As a result we study it later.

-

Sometimes called two level scheduling.
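The three basic states and the arcs between them form a small state machine, which can be written down as a table. In this Python sketch the arc names `block`, `unblock`, `run`, and `preempt` are labels chosen for illustration (create, terminate, suspend, and resume are left out), and `next_state` and `follow` are hypothetical helper names.

```python
# Allowed transitions among the three basic states.
TRANSITIONS = {
    ("running", "block"): "blocked",     # P issues an I/O request
    ("blocked", "unblock"): "ready",     # the interrupt handler sees the I/O is done
    ("ready", "run"): "running",         # the scheduler picks P
    ("running", "preempt"): "ready",     # only with a preemptive scheduler
}

def next_state(state, arc, preemptive=True):
    """Return the state reached by following arc out of state."""
    if arc == "preempt" and not preemptive:
        raise ValueError("a non-preemptive scheduler has no preempt arc")
    try:
        return TRANSITIONS[(state, arc)]
    except KeyError:
        raise ValueError(f"no arc {arc!r} out of state {state!r}") from None

def follow(state, arcs, preemptive=True):
    """Follow a sequence of arcs starting from state."""
    for arc in arcs:
        state = next_state(state, arc, preemptive)
    return state
```

Note that there is no arc from blocked directly to running: an unblocked process must first become ready and then be chosen by the scheduler.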

Homework: 1.

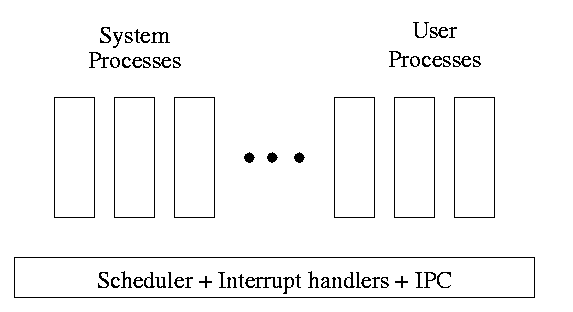

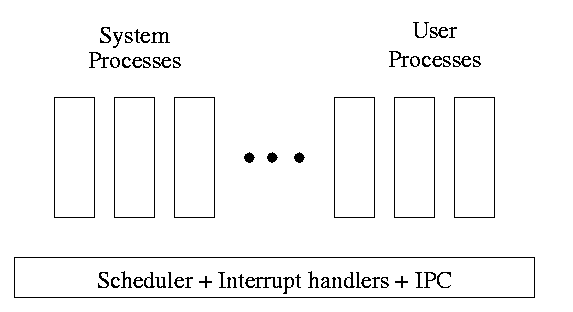

One can organize an OS around the scheduler.

-

Write a minimal “kernel” (a micro-kernel) consisting of the

scheduler, interrupt handlers, and IPC (interprocess

communication).

-

The rest of the OS consists of kernel processes (e.g. memory,

filesystem) that act as servers for the user processes (which of

course act as clients).

-

The system processes also act as clients (of other system processes).

-

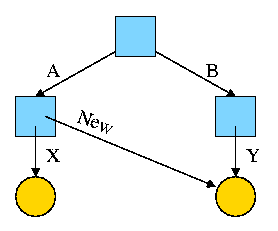

The above is called the client-server model and is one Tanenbaum likes.

His “Minix” operating system works this way.

-

Indeed, there was reason to believe that the client-server model

would dominate OS design.

But that hasn't happened.

-

Such an OS is sometimes called server based.

-

Systems like traditional unix or linux would then be

called self-service since the user process serves itself.

-

That is, the user process switches to kernel mode and performs

the system call.

-

To repeat: the same process changes back and forth from/to

user<-->system mode and services itself.

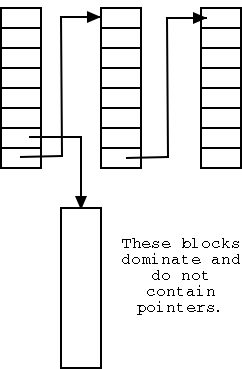

2.1.6: Implementation of Processes

The OS organizes the data about each process in a table naturally

called the process table.

Each entry in this table is called a

process table entry (PTE) or

process control block.

-

One entry per process.

-

The central data structure for process management.

-

A process state transition (e.g., moving from blocked to ready) is

reflected by a change in the value of one or more

fields in the PTE.

-

We have converted an active entity (process) into a data structure

(PTE). Finkel calls this the level principle “an active

entity becomes a data structure when looked at from a lower level”.

-

The PTE contains a great deal of information about the process.

For example,

-

Saved value of registers when process not running

-

Program counter (i.e., the address of the next instruction)

-

Stack pointer

-

CPU time used

-

Process id (PID)

-

Process id of parent (PPID)

-

User id (uid and euid)

-

Group id (gid and egid)

-

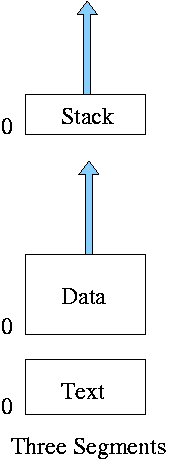

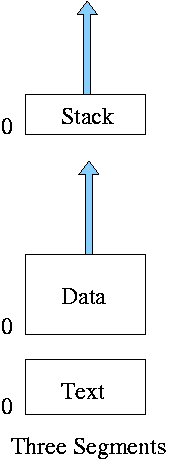

Pointer to text segment (memory for the program text)

-

Pointer to data segment

-

Pointer to stack segment

-

UMASK (default permissions for new files)

-

Current working directory

-

Many others
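A process table entry is just a record, so it can be sketched as a data structure. The Python below is an illustration under assumptions, not the layout used by any particular OS: the field names, the default values (such as a umask of 022), and the helpers `create` and `unblock` are hypothetical, and the segment pointers are omitted.

```python
from dataclasses import dataclass, field

@dataclass
class PTE:
    """One process table entry; fields follow the list above."""
    pid: int
    ppid: int
    uid: int = 0
    euid: int = 0
    gid: int = 0
    egid: int = 0
    state: str = "ready"                            # ready, running, or blocked
    program_counter: int = 0                        # address of the next instruction
    stack_pointer: int = 0
    registers: dict = field(default_factory=dict)   # saved while not running
    cpu_time_used: float = 0.0
    umask: int = 0o022                              # default permissions for new files
    cwd: str = "/"                                  # current working directory

process_table = {}                                  # pid -> PTE, one entry per process

def create(pid, ppid):
    """Add an entry for a new process and return it."""
    process_table[pid] = PTE(pid=pid, ppid=ppid)
    return process_table[pid]

def unblock(pid):
    """A state transition is just a change to a field of the PTE."""
    entry = process_table[pid]
    if entry.state != "blocked":
        raise ValueError(f"process {pid} is not blocked")
    entry.state = "ready"
```

Seen from this level the process is passive data: moving it from blocked to ready is a single assignment.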

2.1.6A: An addendum on Interrupts

This should be compared with the addendum on

transfer of control.

In a well defined location in memory (specified by the hardware) the

OS stores an interrupt vector, which contains the

address of the (first level) interrupt handler.

-

Tanenbaum calls the interrupt handler the interrupt service routine.

-

Actually one can have different priorities of interrupts and the

interrupt vector contains one pointer for each level. This is why

it is called a vector.

Assume a process P is running and a disk interrupt occurs for the

completion of a disk read previously issued by process Q, which is

currently blocked.

Note that disk interrupts are unlikely to be for the currently running

process (because the process that initiated the disk access is likely

blocked).

Actions by P prior to the interrupt:

-

Who knows??

This is the difficulty of debugging code depending on interrupts:

the interrupt can occur (almost) anywhere. Thus, we do not

know what happened just before the interrupt.

Executing the interrupt itself:

-

The hardware saves the program counter and some other registers

(or switches to using another set of registers, the exact mechanism is

machine dependent).

-

Hardware loads new program counter from the interrupt vector.

-

Loading the program counter causes a jump.

-

Saving the old program counter and loading a new one is similar to a procedure call.

But the interrupt is asynchronous.

-

As with a trap, the hardware automatically switches the system

into privileged mode.

(It might have been in supervisor mode already; that is, an

interrupt can occur in supervisor mode).

Actions by the interrupt handler (et al) upon being activated

-

An assembly language routine saves registers.

-

The assembly routine sets up a new stack.

(These last two steps are often called setting up the C environment.)

-

The assembly routine calls a procedure in a high level language,

often the C language (Tanenbaum forgot this step).

-

The C procedure does the real work.

-

Determines what caused the interrupt (in this case a disk

completed an I/O)

- How does it figure out the cause?

-

It might know the priority of the interrupt being activated.

-

The controller might write information in memory

before the interrupt

-

The OS can read registers in the controller

- Mark process Q as ready to run.

-

That is move Q to the ready list (note that again

we are viewing Q as a data structure).

-

The state of Q is now ready (it was blocked before).

-

The code that Q needs to run initially is likely to be OS

code. For example, Q probably needs to copy the data just

read from a kernel buffer into user space.

-

Now we have at least two processes ready to run, namely P and

Q.

There may be arbitrarily many others.

-

The scheduler decides which process to run (P or Q or

something else).

This loosely corresponds to g calling other procedures in the

simple f calls g case we discussed previously.

Eventually the scheduler decides to run P.

Actions by P when control returns

-

The C procedure (that did the real work in the interrupt

processing) continues and returns to the assembly code.

-

Assembly language restores P's state (e.g., registers) and starts

P at the point it was when the interrupt occurred.

Properties of interrupts

-

Phew.

-

Unpredictable (to an extent).

We cannot tell what was executed just before the interrupt

occurred.

That is, the control transfer is asynchronous; it is difficult to

ensure that everything is always prepared for the transfer.

-

The user code is unaware of the difficulty and cannot

(easily) detect that it occurred.

This is another example of the OS presenting the user with a

virtual machine environment that is more pleasant than reality (in

this case synchronous rather than asynchronous behavior).

-

Interrupts can also occur when the OS itself is executing.

This can cause difficulties since both the main line code

and the interrupt handling code are from the same

“program”, namely the OS, and hence might well be

using the same variables.

We will soon see how this can cause great problems even in what

appear to be trivial cases.

-

The interprocess control transfer is neither stack-like

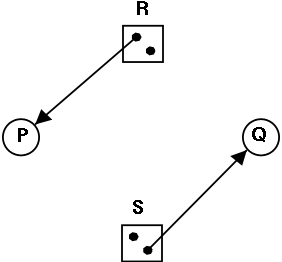

nor queue-like.

That is if first P was running, then Q was running, then R was

running, then S was running, the next process to be run might be

any of P, Q, or R (or some other process).

-

The system might have been in user-mode or supervisor mode when

the interrupt occurred.

The interrupt processing starts in supervisor mode.

================ Start Lecture #6 ================

2.2: Threads

| Per process items | Per thread items |
|---|---|
| Address space | Program counter |
| Global variables | Machine registers |
| Open files | Stack |
| Child processes | |
| Pending alarms | |
| Signals and signal handlers | |
| Accounting information | |

The idea is to have separate threads of control (hence the name)

running in the same address space.

An address space is a memory management concept.

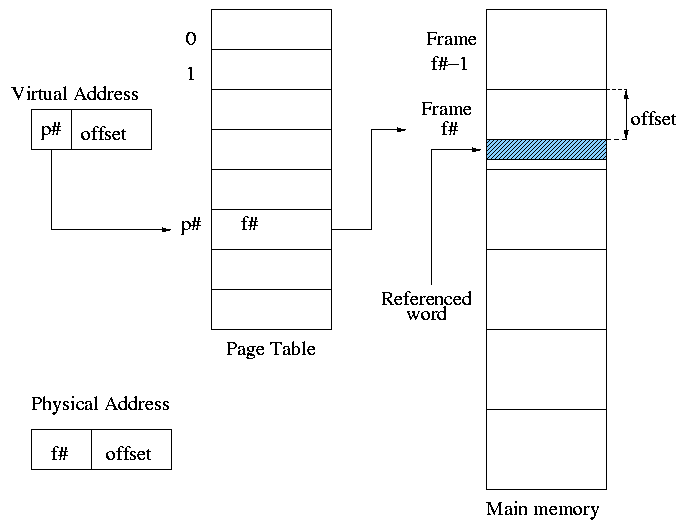

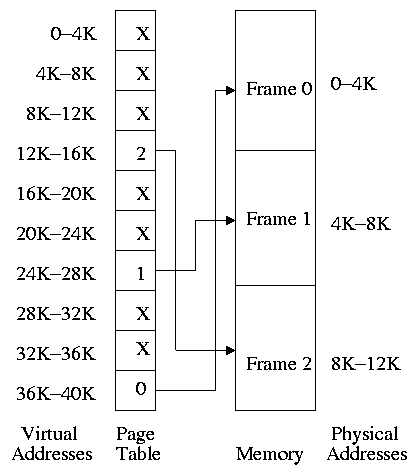

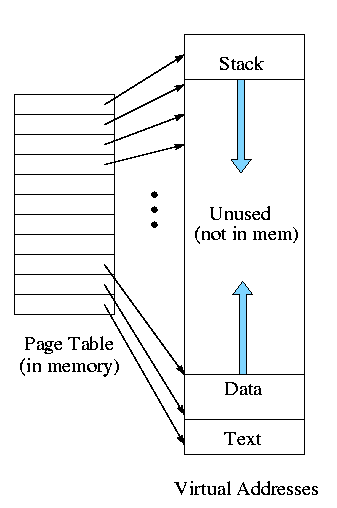

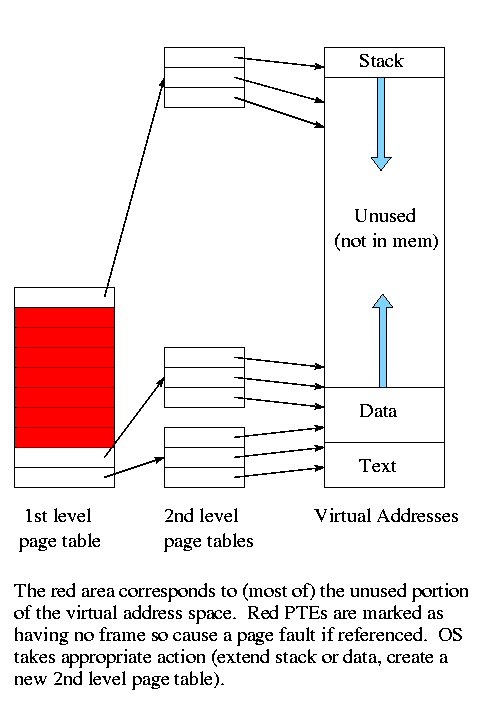

For now think of an address space as the memory in which a process

runs and the mapping from the virtual addresses (addresses in the

program) to the physical addresses (addresses in the machine).

Each thread is somewhat like a

process (e.g., it is scheduled to run) but contains less state

(e.g., the address space belongs to the process in which the thread

runs).

2.2.1: The Thread Model

A process contains a number of resources such as address space,

open files, accounting information, etc. In addition to these

resources, a process has a thread of control, e.g., program counter,

register contents, stack. The idea of threads is to permit multiple

threads of control to execute within one process. This is often

called multithreading and threads are often called

lightweight processes. Because threads in the same

process share so much state, switching between them is much less

expensive than switching between separate processes.

Individual threads within the same process are not completely

independent. For example there is no memory protection between them.

This is typically not a security problem as the threads are

cooperating and all are from the same user (indeed the same process).

However, the shared resources do make debugging harder. For example

one thread can easily overwrite data needed by another and if one thread

closes a file other threads can't read from it.

2.2.2: Thread Usage

Often, when a process A is blocked (say for I/O) there is still

computation that can be done. Another process B can't do this

computation since it doesn't have access to A's memory. But two

threads in the same process do share memory so that problem doesn't

occur.

An important modern example is a multithreaded web server.

Each thread is responding to a single WWW connection.

While one thread is blocked on I/O, another thread can be processing

another WWW connection.

Question: Why not use separate processes, i.e., what is the shared

memory?

Ans: The cache of frequently referenced pages.

A common organization is to have a dispatcher thread that fields

requests and then passes each request on to an idle thread.
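The dispatcher/worker organization with a shared page cache might be sketched as follows. This is only a toy model under stated assumptions: `read_page_from_disk` is a hypothetical stand-in for blocking disk I/O, page names are plain strings, and the lock is held during the "disk read" to keep the example short (a real server would not do that).

```python
import queue
import threading

page_cache = {}                 # the memory shared by all worker threads
cache_lock = threading.Lock()   # protects page_cache
requests = queue.Queue()        # dispatcher -> workers
responses = queue.Queue()       # workers -> whoever collects the replies
disk_reads = []                 # which pages actually had to be read from "disk"

def read_page_from_disk(name):
    # Hypothetical stand-in for a blocking disk read.
    disk_reads.append(name)
    return f"<contents of {name}>"

def worker():
    while True:
        name = requests.get()   # an idle worker blocks here until handed a request
        if name is None:        # sentinel: no more work
            break
        with cache_lock:        # simplification: lock held across the "disk read"
            page = page_cache.get(name)
            if page is None:
                page = read_page_from_disk(name)
                page_cache[name] = page
        responses.put((name, page))

def dispatcher(incoming, num_workers=3):
    workers = [threading.Thread(target=worker) for _ in range(num_workers)]
    for w in workers:
        w.start()
    for name in incoming:       # field each request and pass it on
        requests.put(name)
    for _ in workers:           # one sentinel per worker
        requests.put(None)
    for w in workers:
        w.join()

dispatcher(["index.html", "about.html", "index.html", "index.html"])
```

Four requests are served but only two pages are read from "disk", since the workers share the cache. Separate processes would each need their own copy.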

Another example is a producer-consumer problem

(cf. below)

in which we have 3 threads in a pipeline.

One thread reads data from an I/O device into

a buffer, the second thread performs computation on the input buffer

and places results in an output buffer, and the third thread

outputs the data found in the output buffer.

Again, while one thread is blocked the

others can execute.

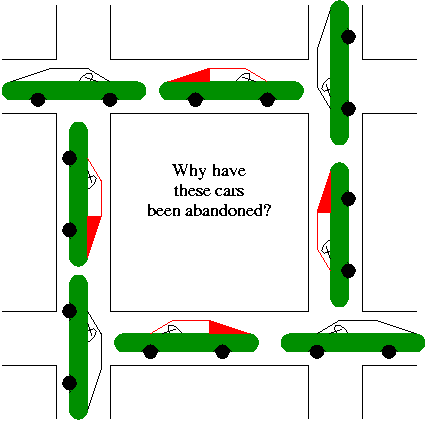

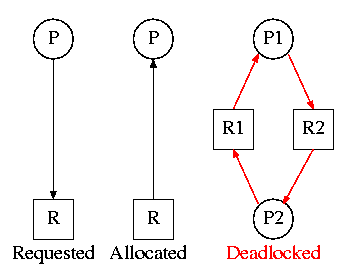

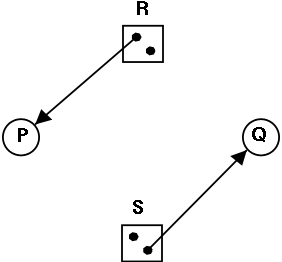

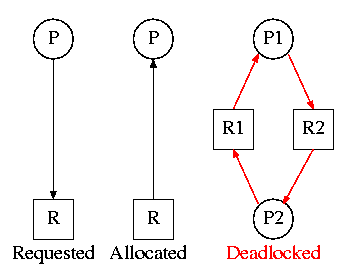

Question: Why does each thread block?

Answer:

-

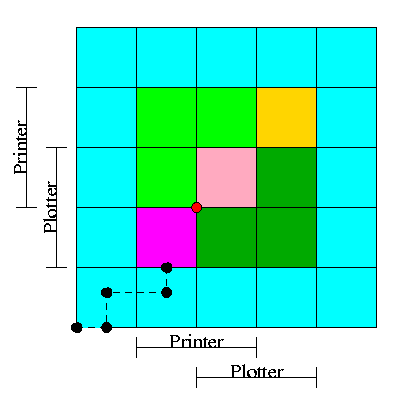

The first thread blocks waiting for the device to finish reading

the data. It also blocks if the input buffer is full.

-

The second thread blocks when either the input buffer is empty or

the output buffer is full.

-

The third thread blocks when the output device is busy (it might

also block waiting for the output request to complete, but this is

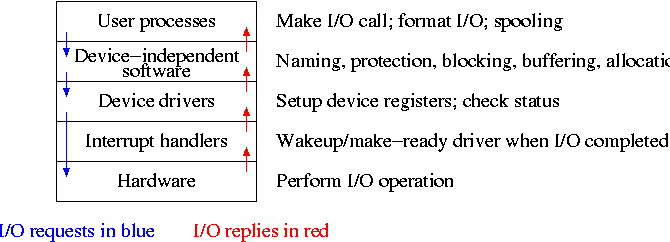

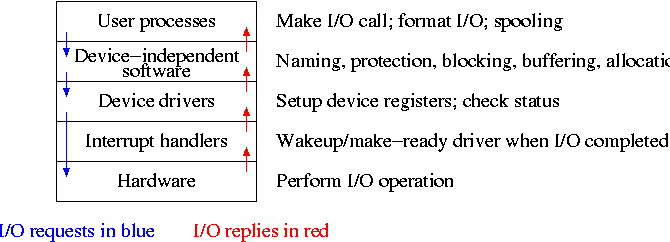

not necessary). It also blocks if the output buffer is empty.
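The three-thread pipeline and its blocking points can be sketched in Python with two bounded queues playing the role of the input and output buffers. The buffer size of 2, the squaring "computation", and the `DONE` marker are assumptions made for this illustration. The list `written` stands in for the output device.

```python
import queue
import threading

input_buffer = queue.Queue(maxsize=2)    # bounded, so put() can block
output_buffer = queue.Queue(maxsize=2)
DONE = object()                          # end-of-data marker (illustrative)
written = []                             # stands in for the output device

def reader(source):
    for item in source:
        input_buffer.put(item)           # blocks while the input buffer is full
    input_buffer.put(DONE)

def computer():
    while True:
        item = input_buffer.get()        # blocks while the input buffer is empty
        if item is DONE:
            output_buffer.put(DONE)
            break
        output_buffer.put(item * item)   # blocks while the output buffer is full

def writer():
    while True:
        item = output_buffer.get()       # blocks while the output buffer is empty
        if item is DONE:
            break
        written.append(item)

stages = [
    threading.Thread(target=reader, args=(range(6),)),
    threading.Thread(target=computer),
    threading.Thread(target=writer),
]
for s in stages:
    s.start()
for s in stages:
    s.join()
```

While any one stage is blocked in `put` or `get`, the other two remain free to run.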

Homework: 9.

A final (related) example is that an application that wishes to

perform automatic backups can have a thread to do just this.

In this way the thread that interfaces with the user is not blocked

during the backup.

However some coordination between threads may be needed so that the

backup is of a consistent state.

2.2.3: Implementing threads in user space

Write a (threads) library that acts as a mini-scheduler and

implements thread_create, thread_exit,

thread_wait, thread_yield, etc. The central data

structure maintained and used by this library is the thread

table, the analogue of the process table in the operating system

itself.
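To make the idea concrete, here is a toy user-level threads "library" in Python that uses generators as threads. It is only a sketch: the function names mirror those above, `yield` plays the role of thread_yield, returning from the function plays the role of thread_exit, and the round-robin policy is my choice for the example. Note that the OS knows nothing about these threads.

```python
from collections import deque

thread_table = {}      # tid -> generator; the library's analogue of the process table
ready_queue = deque()  # tids of runnable threads, in round-robin order
trace = []             # records which thread ran which step
_next_tid = 0

def thread_create(func, *args):
    """Register a new user-level thread and mark it ready."""
    global _next_tid
    tid = _next_tid
    _next_tid += 1
    thread_table[tid] = func(tid, *args)   # creates the generator; nothing runs yet
    ready_queue.append(tid)
    return tid

def run_scheduler():
    """Mini-scheduler: run each ready thread until it yields or exits."""
    while ready_queue:
        tid = ready_queue.popleft()
        try:
            next(thread_table[tid])   # resume the thread until its next yield
            ready_queue.append(tid)   # it yielded, so it is still runnable
        except StopIteration:         # the thread function returned (thread_exit)
            del thread_table[tid]

def counter(tid, steps):
    for i in range(steps):
        trace.append((tid, i))
        yield                         # thread_yield: hand control back to the scheduler

thread_create(counter, 2)
thread_create(counter, 3)
run_scheduler()
```

Switching here is just a function return and a call, which is why it is cheap. It also shows a weakness: a thread that never yields would keep the scheduler from ever running again.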

Advantages

-

Requires no OS modification.

-

Very fast since switching threads requires no trap to the kernel and no full context switch.

-

Can customize the scheduler for each application.

Disadvantages

-

Blocking system calls can't be executed directly since that would block

the entire process. For example, the producer-consumer example, if

implemented in the natural manner, would not work well: whenever

an I/O was issued that caused the process to block, all the

threads would be unable to run (but see just below).

-

Similarly a page fault would block the entire process (i.e., all

the threads).

-

A thread with an infinite loop prevents all other threads in this

process from running.

-

Re-doing the effort of writing a scheduler.

Possible methods of dealing with blocking system calls

-

Perhaps the OS supplies a non-blocking version of the system call,

e.g. non-blocking read.

-

Perhaps the OS supplies another system call that tells whether the

blocking system call will in fact block.

For example, a Unix select() can be used to tell if a read would

block. It might not block if

-

The requested disk block is in the buffer cache (see I/O

chapter).

-

The request was for a keyboard or mouse or network event that

has already happened.
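Python exposes select() through its `select` module, so the check can be sketched as below. The helper name `would_block` is made up for this example, and a local socket pair stands in for a network connection. A timeout of 0 makes select() poll rather than wait.

```python
import select
import socket

def would_block(sock):
    """Return True if a read on sock would block right now (hypothetical helper)."""
    readable, _, _ = select.select([sock], [], [], 0)   # timeout 0: just poll
    return sock not in readable

a, b = socket.socketpair()    # two connected sockets within this process
before = would_block(a)       # nothing has been sent yet
b.sendall(b"x")               # the "network event" now has already happened
after = would_block(a)        # data is waiting, so a read would return at once
data = a.recv(1)
a.close()
b.close()
```

A user-level threads library could make this check before each read and, if the read would block, switch to another thread instead of issuing the call.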

2.2.4: Implementing Threads in the Kernel

Move the thread operations into the operating system itself. This

naturally requires that the operating system itself be (significantly)

modified and is thus not a trivial undertaking.

-

Thread-create and friends are now system calls and hence much

slower than with user-mode threads.

They are, however, still much faster than the corresponding process

operations (creation, switching, etc.) since there is so much shared

state that does not need to be recreated.

-

A thread that blocks causes no particular problem. The kernel can

run another thread from this process or can run another process.

-

Similarly a page fault, or infinite loop in one thread does not

automatically block the other threads in the process.

2.2.5: Hybrid Implementations

One can write a (user-level) thread library even if the kernel also

has threads. This is sometimes called the M:N model since M user-mode threads

are multiplexed onto N kernel threads.

Then each kernel thread can switch between user-level

threads. Thus switching between user-level threads within one kernel

thread is very fast (no context switch) and we maintain the advantage

that a blocking system call or page fault does not block the entire

multi-threaded application since user-level threads running on the other

kernel threads of this application are still runnable.

2.2.6: Scheduler Activations

Skipped

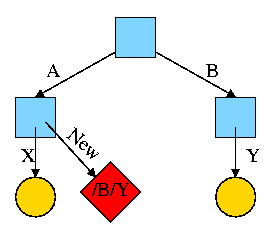

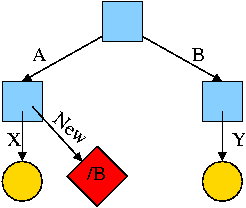

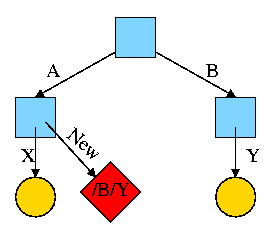

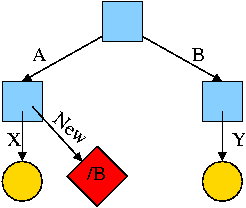

2.2.7: Popup Threads

The idea is to automatically issue a thread-create system call upon

message arrival.

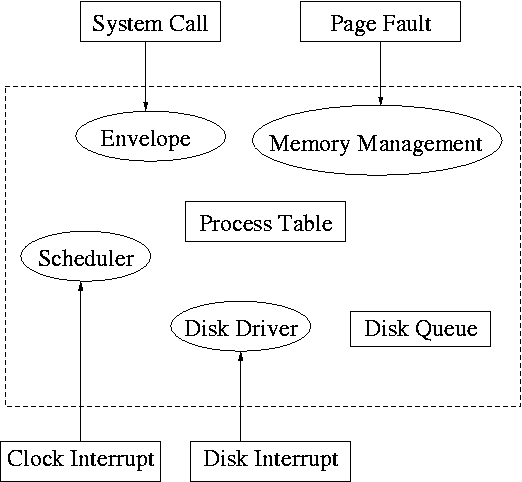

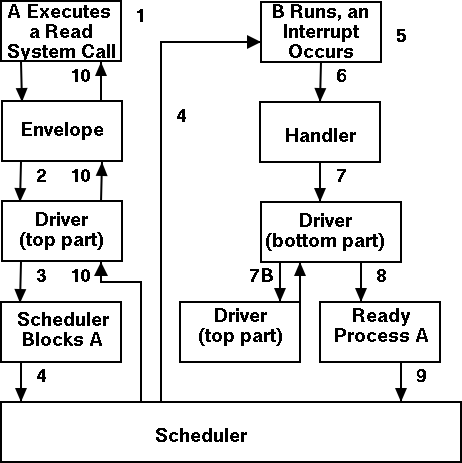

(The alternative is to have a thread or process

blocked on a receive system call.)

If implemented well, the latency between message arrival and thread

execution can be very small since the new thread does not have state

to restore.

2.2.8: Making Single-threaded Code Multithreaded

Definitely NOT for the faint of heart.

-

There often is state that should not be shared.

A well-cited

example is the unix errno variable that contains the error

number (zero means no error) of the error encountered by the last

system call.

Errno is hardly elegant (even in normal,

single-threaded, applications), but its use is widespread.

If multiple threads issue faulty system calls, the errno value of

the second overwrites that of the first, and thus the first errno

value may be lost before the first thread examines it.

-

Much existing code, including many libraries, is not

re-entrant.

-

Managing the shared memory inherent in multi-threaded applications

opens up the possibility of race conditions that we will be

studying next.

-

What should be done with a signal sent to a process? Does it go

to all threads or to just one?

-

How should stack growth be managed? Normally the kernel grows the

(single) stack automatically when needed. What if there are

multiple stacks?

2.3: Interprocess Communication (IPC) and Coordination/Synchronization

2.3.1: Race Conditions

A race condition occurs when two (or more)

processes are about to perform some action. Depending on the exact

timing, one or the other goes first. If one of the processes goes first,

everything works, but if another one goes first, an error, possibly

fatal, occurs.

Imagine two processes both accessing x, which is initially 10.

-

One process is to execute x <-- x+1

-

The other is to execute x <-- x-1

-

When both are finished x should be 10

-

But we might get 9 and might get 11!

-

This can happen because x <-- x+1 is not atomic: it is a load, an add, and a store, and the steps of the two processes can interleave.

-

Tanenbaum shows how this can lead to disaster for a printer

spooler
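The x+1 / x-1 race can be checked by brute force. The sketch below assumes each update is split into two steps, a load of x into a private register followed by a store of the adjusted value, and tries every interleaving of the two processes. The names A and B and the two-step split are assumptions made for the illustration.

```python
from itertools import combinations

DELTA = {"A": +1, "B": -1}   # A executes x <-- x+1, B executes x <-- x-1

def run(schedule, x=10):
    """Execute one interleaving; schedule names which process takes each step."""
    register = {}                  # each process's private register
    step = {"A": 0, "B": 0}        # how many steps each process has completed
    for p in schedule:
        if step[p] == 0:
            register[p] = x                # step 1: load x
        else:
            x = register[p] + DELTA[p]     # step 2: add and store
        step[p] += 1
    return x

def all_schedules():
    """All ways to interleave A's two steps with B's two steps."""
    for a_slots in combinations(range(4), 2):
        yield tuple("A" if i in a_slots else "B" for i in range(4))

outcomes = {s: run(s) for s in all_schedules()}
final_values = sorted(set(outcomes.values()))
```

Only the two schedules in which one process finishes before the other starts give 10. Whenever both loads precede both stores, the later store wins and the result is 9 or 11.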

Homework: 18.

2.3.2: Critical sections

We must prevent interleaving sections of code that need to be atomic with

respect to each other. That is, the conflicting sections need

mutual exclusion. If process A is executing its

critical section, it excludes process B from executing its critical

section. Conversely if process B is executing its critical section, it

excludes process A from executing its critical section.
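As a preview, here is a sketch of mutual exclusion using threads and a lock from Python's `threading` module. This is one ready-made mechanism, not one of the solutions developed in these notes, and the names `adjust` and `x_lock` are made up. The critical section is the single statement that updates x.

```python
import threading

x = 10
x_lock = threading.Lock()   # at most one thread can hold the lock at a time

def adjust(delta, times):
    global x
    for _ in range(times):
        with x_lock:        # entry code: acquire the lock (may block)
            x = x + delta   # critical section: load, add, store with no interleaving
        # exit code: the lock is released on leaving the with block

inc = threading.Thread(target=adjust, args=(+1, 50_000))
dec = threading.Thread(target=adjust, args=(-1, 50_000))
inc.start()
dec.start()
inc.join()
dec.join()
```

With the lock in place x always ends at 10, whatever the scheduling. Without it the final value would depend on timing.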

Requirements for a critical section implementation.

-

No two processes may be simultaneously inside their critical

section.

-

No assumption may be made about the speeds or the number of CPUs.

-

No process outside its critical section (including the entry and

exit code) may block other processes.

-

No process should have to wait forever to enter its critical

section.

-

I do NOT make this last requirement.

-

I just require that the system as a whole make progress (so not

all processes are blocked).

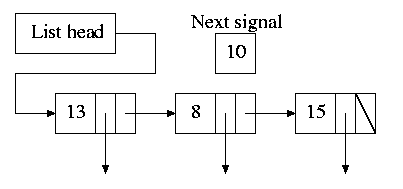

-