Learning

In the previous section, Representation, we described the model which we use to represent an object category. In learning our task is to estimate the parameter values that correspond to a good model of the object category.

We assume that the object instance is the only visually consistent thing across all training the images, with the background of the objects being random in nature. By modeling this consistency across the training images, we therefore model the object itself.

The feature detector outputs many regions on the image (~30), many more than the number of parts in the model (~6), so introduce an assignment variable which selected regions in the image to be placed into the model. Finding the best assignment in each image is a dual problem to finding the best parameter settings: if we know one it is trivial to compute the other.

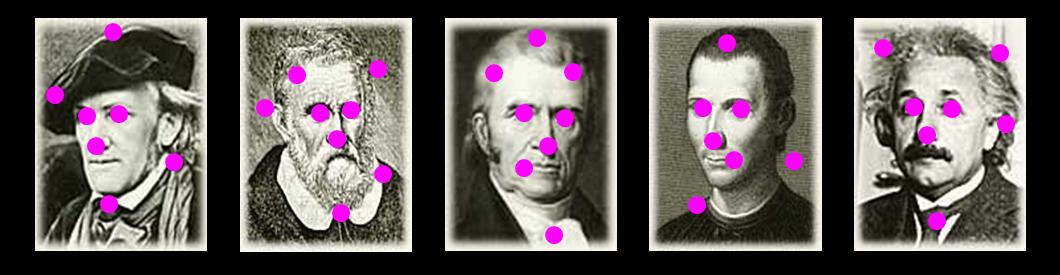

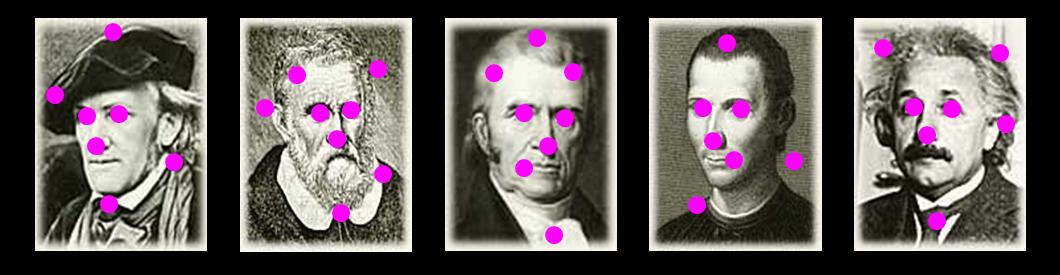

So we need to find both the optimal parameter setting and the best assignment of regions. For this "chicken-and-egg" problem we use the EM algorithm [Dempster]. Below we give a toy example which illustrates the problem. We have 5 images contraining faces (usually we would be using 100's). We have found a number of regions on each one (centres indicated with magenta dots). Initially we have no idea which ones are consistent features on the object and which are part of the background.

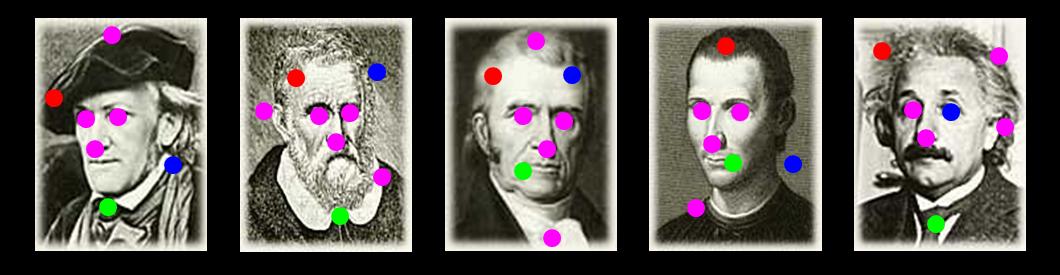

In our toy problem, we use a 3 part model. We have no idea initially, as to the optimal parameter settings, so we start off with a random assignment. In the figure below we show the best assignment in each frame, according these initial parameters. The red dot indicates the best assignment for the first part; green for the second and blue for the third. The remaining regions assigned to the background model are in magenta. Notice that there is no consistency between frames.

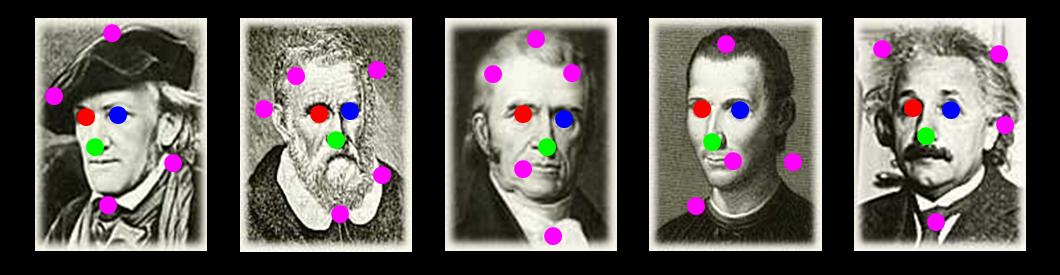

The EM algorithm uses an iterative approach, alternatively optimizing the parameters and then the assignments to find the optimal settings for both. After running it for a few iterations we end up with the assignment shown in the figure below. The model chooses the most stable and consistent configuration (and appearance & scale) of the regions, with the more random regions in the background being assigned to the background model. Note in finding the optimal parameters, we are simultaneously fitting the shape, scale, appearance and occlusion statistics of the model. Many other approaches optimize the different properties separately.

Once we have found to optimal setting of the parameters, we can then use the model to recognize new instances of the category in new images. Read the Recognition section for more details.

References:

A. Dempster, N. Laird, and D. Rubin. Maximum likelihood from incomplete data via the em algorithm. JRSS B, 39:1–38, 1976.