
As I mentioned in class this week, in order to efficiently render fully shaded versions of our animated shapes, we are going to need to paint them as individual triangles into the image, while interpolating color and perspective z.

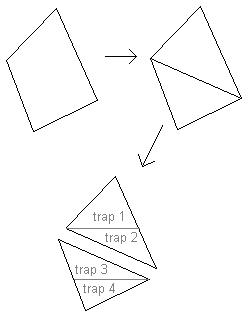

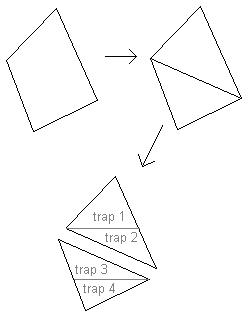

As I said in class, some of your polygons have four vertices. Fortunately, you can always convert a four-sided polygon to two triangles at the moment when you need to render it:

(a,b,c,d) → (a,b,c) and (a,c,d)

The most computationally efficient way to do shading is to apply a shading algorithm (such as the Phong shading algorithm) only at the vertices. This is called vertex shading.

The more flexible and powerful (although slower) way to do shading is to apply a shading algorithm at each pixel, interpolating the surface normals themselves. This is called pixel shading, and the interpolation itself is called Phong interpolation (not to be confused with Phong shading).

In practice, renderers tend to use a combination of vertex shaders and pixel shaders. For now we will just be doing vertex shading, because it is simple and it runs fast. Later, if there is time, we might try pixel shading and Phong interpolation, which can help with things like texturing.
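Vertex shading just means evaluating a lighting model once per vertex. To keep the sketch below short, it assumes a much simpler model than full Phong shading: an ambient term plus a Lambertian diffuse term, with one directional light and no specular highlight. The class and method names are hypothetical. Its output is the per-vertex [red, green, blue] that later gets interpolated across the triangle.

```java
// Minimal vertex-shading sketch. ASSUMPTIONS: ambient + Lambertian diffuse only
// (no Phong specular term), one directional light; names are hypothetical.
class VertexShading {

    // Returns {r, g, b} in [0,1] for a vertex with unit normal n.
    // light is a unit vector pointing from the surface toward the light.
    static double[] shade(double[] n, double[] light, double[] ambient, double[] diffuse) {
        double nDotL = n[0] * light[0] + n[1] * light[1] + n[2] * light[2];
        double d = Math.max(0.0, nDotL);          // surfaces facing away get no diffuse light
        double[] rgb = new double[3];
        for (int i = 0; i < 3; i++)
            rgb[i] = Math.min(1.0, ambient[i] + diffuse[i] * d);  // clamp to [0,1]
        return rgb;
    }

    public static void main(String[] args) {
        double[] light = {0, 0, 1};               // light shining from +z
        double[] ambient = {0.1, 0.1, 0.1};
        double[] diffuse = {0.8, 0.2, 0.2};
        double[] rgb = shade(new double[]{0, 0, 1}, light, ambient, diffuse);
        System.out.println(rgb[0] + " " + rgb[1] + " " + rgb[2]);
    }
}
```

Because the shading runs only at the vertices, its cost scales with the vertex count, not the pixel count.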

When you do vertex shading, you first run the shading algorithm at each vertex of your polygon, so you already have a color at each vertex and now you just need to interpolate those colors. This means that by the time you do scan conversion, the data you have at each vertex is:

[ px , py , pz , red , green , blue ]

You are going to use px and py to figure out which pixel the vertex falls on in the image. First you do the perspective calculation. If you place the camera at the origin, as we did in our ray tracing assignments, then the perspective computation looks like this:

$$p_x \leftarrow \frac{f\,x}{z}, \qquad p_y \leftarrow \frac{f\,y}{z}, \qquad p_z \leftarrow \frac{1}{z}$$

On the other hand, if you place the camera at point (0,0,f) on the positive z axis, looking at the origin, as we did earlier in the semester (so that you can center your geometry around the origin), then the perspective computation looks like this:

$$p_x \leftarrow \frac{f\,x}{f - z}, \qquad p_y \leftarrow \frac{f\,y}{f - z}, \qquad p_z \leftarrow \frac{1}{f - z}$$

Feel free to use either of the above two models. Remember: the first places the camera at the origin, and the second works well if you model your geometry around the origin.

Note: the scale of pz doesn't matter, since pz will be used only to compare the front-to-back order of scan-converted triangles.

After computing the perspective transformation, you just do your standard viewport transformation on px,py to compute which pixel the vertex lands on.
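Here is a small sketch of the second camera model (camera at (0,0,f) looking toward the origin) followed by a viewport transformation. The viewport convention used here is an assumption: p_x in [-1, 1] spans the image width, y points up in the scene, and image rows grow downward. Substitute whatever viewport mapping your own code already uses. The class name `Perspective` and the {x, y, z, r, g, b} vertex layout are hypothetical.

```java
// Sketch: perspective for a camera at (0,0,f), then an ASSUMED viewport mapping.
class Perspective {

    // Input vertex: {x, y, z, r, g, b}. Output: {pixelX, pixelY, pz, r, g, b}.
    static double[] project(double[] v, double f, int width, int height) {
        double w = f - v[2];                  // distance from the camera along the z axis
        double px = f * v[0] / w;
        double py = f * v[1] / w;
        double pz = 1.0 / w;                  // larger pz means closer to the camera
        double pixelX = width / 2.0 + px * width / 2.0;
        double pixelY = height / 2.0 - py * width / 2.0;  // flip y: image rows grow downward
        return new double[]{pixelX, pixelY, pz, v[3], v[4], v[5]};
    }

    public static void main(String[] args) {
        double[] p = project(new double[]{0.5, 0.5, 0, 1, 0, 0}, 4.0, 400, 400);
        System.out.println(p[0] + ", " + p[1] + ", " + p[2]);  // 300.0, 100.0, 0.25
    }
}
```

Note that pz = 1/(f - z) grows as a point moves toward the camera, which is all the front-to-back comparison needs.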

As we discussed in class, you are going to be implementing the Z-buffer algorithm, in which you interpolate the [red, green, blue, pz] values of the triangle's three vertices over all the image pixels covered by the triangle, in order to figure out which triangle is the frontmost one at each pixel of the image, and also what color should be at that pixel.
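The per-pixel test at the heart of the Z-buffer can be sketched as below. This is not the complete algorithm, just the comparison that will sit inside the scan-conversion loop. It assumes pz = 1/(f - z) (or 1/z with the first camera model), so a larger pz is closer and 0 stands for "infinitely far"; if your pz convention runs the other way, flip the comparison. The `ZBuffer` class and its packed 0xRRGGBB color array are hypothetical.

```java
// Sketch of the Z-buffer depth test. ASSUMES larger pz = closer to the camera.
class ZBuffer {
    final int width, height;
    final double[] depth;   // pz of the frontmost surface seen so far at each pixel
    final int[] color;      // packed 0xRRGGBB

    ZBuffer(int width, int height) {
        this.width = width;
        this.height = height;
        depth = new double[width * height];  // Java zero-fills: every pixel starts "infinitely far"
        color = new int[width * height];
    }

    // Writes the pixel only if this fragment is in front of what is already stored there.
    boolean plot(int x, int y, double pz, double r, double g, double b) {
        if (x < 0 || x >= width || y < 0 || y >= height) return false;
        int i = y * width + x;
        if (pz <= depth[i]) return false;    // hidden behind an earlier triangle
        depth[i] = pz;
        color[i] = (toByte(r) << 16) | (toByte(g) << 8) | toByte(b);
        return true;
    }

    // Converts a color channel in [0,1] to an integer in 0..255.
    static int toByte(double c) {
        return (int) Math.round(255 * Math.max(0.0, Math.min(1.0, c)));
    }

    public static void main(String[] args) {
        ZBuffer zb = new ZBuffer(4, 4);
        zb.plot(1, 1, 0.2, 1, 0, 0);   // red, farther away
        zb.plot(1, 1, 0.5, 0, 0, 1);   // blue, closer: replaces red
        zb.plot(1, 1, 0.3, 0, 1, 0);   // green, behind blue: rejected
        System.out.printf("%06X%n", zb.color[1 * 4 + 1]);  // prints 0000FF
    }
}
```

Because the test only compares pz values, the order in which triangles are drawn does not affect the final image.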

Next week I am going to ask you to implement the complete Z-buffer algorithm. This week I just want you to work on the core part of the scan conversion: Interpolating vertex values across image pixels.

As we discussed in class, this scan-conversion step is the key place in your rendering pipeline where things are either going to be fast enough to do interactive graphics, or not. There are two things that are crucial for making this algorithm go fast:


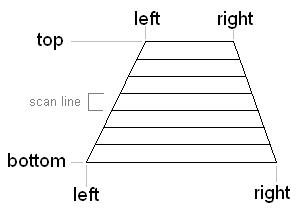

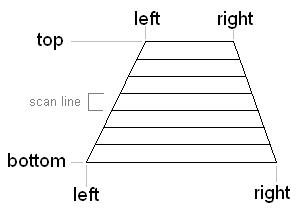
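The homework below asks you to split a triangle into two trapezoids, each with a horizontal top edge and a horizontal bottom edge. One common way to do this is to sort the three vertices by y and cut the triangle with the horizontal line through the middle vertex; the new point D on the long edge gets its values by linear interpolation. The sketch assumes its own data layout: each vertex is a {x, y, r, g, b, pz} array and each trapezoid is {TL, TR, BL, BR}. One edge of each resulting trapezoid collapses to a single point, which the interpolation handles without special cases.

```java
import java.util.Arrays;
import java.util.Comparator;

// Sketch: split a triangle into top and bottom trapezoids.
// ASSUMED layouts: vertex = {x, y, r, g, b, pz}, trapezoid = {TL, TR, BL, BR}.
class TriangleSplit {

    // Componentwise linear interpolation: a + t * (b - a).
    static double[] lerp(double t, double[] a, double[] b) {
        double[] out = new double[a.length];
        for (int i = 0; i < a.length; i++) out[i] = a[i] + t * (b[i] - a[i]);
        return out;
    }

    // Returns {topTrapezoid, bottomTrapezoid}.
    static double[][][] split(double[] p0, double[] p1, double[] p2) {
        double[][] v = {p0, p1, p2};
        Arrays.sort(v, Comparator.comparingDouble(p -> p[1]));  // sort by y, top first
        double[] a = v[0], b = v[1], c = v[2];

        // Point D on the long edge A-C at the same height as the middle vertex B.
        double t = (c[1] == a[1]) ? 0 : (b[1] - a[1]) / (c[1] - a[1]);
        double[] d = lerp(t, a, c);

        double[] left = b[0] <= d[0] ? b : d;
        double[] right = b[0] <= d[0] ? d : b;

        double[][] top = {a, a, left, right};     // top edge collapses to vertex A
        double[][] bottom = {left, right, c, c};  // bottom edge collapses to vertex C
        return new double[][][]{top, bottom};
    }

    public static void main(String[] args) {
        double[][][] t = split(new double[]{0, 0, 1, 0, 0, 1},
                               new double[]{10, 20, 0, 0, 1, 1},
                               new double[]{4, 10, 0, 1, 0, 1});
        System.out.println(Arrays.toString(t[0][2]) + " " + Arrays.toString(t[0][3]));
    }
}
```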

Once you have a trapezoid, then, as we said in class, the only thing you need to do is efficiently interpolate the [r,g,b,pz] values from the four corners down to the pixel level.

To find the interpolated values at any given pixel (x,y), you first find the interpolated values at the left and right edges of each scan line, and then you interpolate those two values to get the value at the pixel. In other words:

```
// COMPUTE TOP-TO-BOTTOM INTERPOLATION FRACTION FOR THIS SCAN LINE
ty = (y - TLy) / (BLy - TLy)

// LINEARLY INTERPOLATE TO GET VALUES AT LEFT AND RIGHT EDGES OF THIS SCAN LINE
L = lerp(ty, TL, BL)
R = lerp(ty, TR, BR)

// COMPUTE LEFT-TO-RIGHT INTERPOLATION FRACTION OF THE PIXEL
tx = (x - Lx) / (Rx - Lx)

// LINEARLY INTERPOLATE TO GET VALUE AT PIXEL
[r,g,b,pz] at (x,y) = lerp(tx, L, R)
```

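where lerp(t, A, B) = A + t (B - A), applied to every component, and L and R are vectors containing the values of x, r, g, b and pz at the left and right ends of the scan line (x is needed to compute tx).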
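A direct Java translation of the per-pixel math above might look like the following. It assumes the same hypothetical layout as elsewhere in this sketch series: corners are {x, y, r, g, b, pz} arrays given in the order {TL, TR, BL, BR}, with TL and TR sharing one y value and BL and BR sharing another. The guards for zero-height trapezoids and zero-width scan lines are additions, not part of the original pseudocode.

```java
// Sketch of direct (non-incremental) trapezoid interpolation.
// ASSUMED corner layout: {x, y, r, g, b, pz}, trapezoid = {TL, TR, BL, BR}.
class TrapezoidLerp {

    static double[] lerp(double t, double[] a, double[] b) {
        double[] out = new double[a.length];
        for (int i = 0; i < a.length; i++) out[i] = a[i] + t * (b[i] - a[i]);
        return out;
    }

    // Returns {r, g, b, pz} at pixel (x, y).
    static double[] valueAt(double[][] trap, double x, double y) {
        double[] TL = trap[0], TR = trap[1], BL = trap[2], BR = trap[3];

        // Top-to-bottom fraction for this scan line (guard a zero-height trapezoid).
        double ty = BL[1] == TL[1] ? 0 : (y - TL[1]) / (BL[1] - TL[1]);
        double[] L = lerp(ty, TL, BL);
        double[] R = lerp(ty, TR, BR);

        // Left-to-right fraction of the pixel (guard a zero-width scan line).
        double tx = R[0] == L[0] ? 0 : (x - L[0]) / (R[0] - L[0]);
        double[] v = lerp(tx, L, R);
        return new double[]{v[2], v[3], v[4], v[5]};
    }

    public static void main(String[] args) {
        double[][] trap = {
            {0, 0, 1, 0, 0, 1},  // TL: red
            {8, 0, 0, 1, 0, 1},  // TR: green
            {0, 4, 0, 0, 1, 1},  // BL: blue
            {8, 4, 1, 1, 1, 1},  // BR: white
        };
        double[] c = valueAt(trap, 4, 2);
        System.out.printf("%.3f %.3f %.3f %.3f%n", c[0], c[1], c[2], c[3]);
    }
}
```

This version does several divisions and full-vector lerps per pixel, which is exactly the cost the speed-ups below are meant to remove.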

Homework due Thursday April 26

For your homework, which is due next Thursday, you should at least demonstrate that you can split a triangle into two trapezoids, and then apply the above math to interpolate [r,g,b,pz] values down to the pixel level. To do this, I suggest you write a Java applet that extends MISApplet (which is what you did for ray tracing), and show that your applet correctly interpolates given any choices of locations [x,y] and colors [r,g,b] at each of the three triangle vertices.

Once you get that much working, try implementing the two kinds of speed-ups, iterative methods and integer arithmetic.

In more detail, here's how you can implement those speed-ups:

```
// COMPUTE TOTAL NUMBER OF SCAN LINES IN TRAPEZOID
int n = BLy - TLy

// PER-SCAN-LINE CHANGE ALONG LEFT AND RIGHT EDGES (SKIP IF n IS 0)
dL_dy = (BL - TL) / n
dR_dy = (BR - TR) / n

L = TL
R = TR

for (int y = TLy ; y <= BLy ; y++) {

   // COMPUTE TOTAL NUMBER OF PIXELS ACROSS THIS SCAN LINE
   int m = Rx - Lx

   // PER-PIXEL CHANGE ACROSS THIS SCAN LINE (SKIP IF m IS 0)
   d_dx = (R - L) / m

   [r,g,b,pz] = L

   for (int x = Lx ; x < Rx ; x++) {

      // USE [r,g,b,pz] FOR PIXEL (x,y), THEN
      // INCREMENTALLY UPDATE VALUE FOR THE NEXT PIXEL
      [r,g,b,pz] += d_dx
   }

   // INCREMENTALLY UPDATE VALUES ALONG LEFT AND RIGHT EDGES FOR THE NEXT SCAN LINE
   L += dL_dy
   R += dR_dy
}
```
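Here is one way the iterative speed-up could look in Java, still in floating point. The {x, y, r, g, b, pz} / {TL, TR, BL, BR} layout is the same assumption as in the earlier sketches, the corner y values are assumed to be whole numbers already, and `PixelSink` is a hypothetical callback standing in for the Z-buffer write. One refinement beyond the pseudocode: each scan line starts at the first whole pixel ceil(Lx), and the starting value is offset to match, which matters once edge x positions are fractional.

```java
// Sketch of incremental (forward-differencing) scan conversion of one trapezoid.
// ASSUMED layout: corner = {x, y, r, g, b, pz}, trapezoid = {TL, TR, BL, BR}.
class IncrementalScan {

    // Hypothetical callback: receives each covered pixel and its interpolated values.
    interface PixelSink { void pixel(int x, int y, double r, double g, double b, double pz); }

    static void fill(double[][] trap, PixelSink out) {
        double[] TL = trap[0], TR = trap[1], BL = trap[2], BR = trap[3];
        int top = (int) TL[1], bottom = (int) BL[1];     // assumes whole-number y values
        int n = Math.max(1, bottom - top);               // scan-line steps (avoid divide by 0)

        double[] L = TL.clone(), R = TR.clone();
        double[] dL = new double[6], dR = new double[6];
        for (int i = 0; i < 6; i++) {
            dL[i] = (BL[i] - TL[i]) / n;
            dR[i] = (BR[i] - TR[i]) / n;
        }

        double[] v = new double[6], dX = new double[6];
        for (int y = top; y <= bottom; y++) {
            double width = R[0] - L[0];
            int x0 = (int) Math.ceil(L[0]), x1 = (int) Math.ceil(R[0]);
            for (int i = 0; i < 6; i++) {
                dX[i] = width == 0 ? 0 : (R[i] - L[i]) / width;
                v[i] = L[i] + (x0 - L[0]) * dX[i];       // value exactly at pixel x0
            }
            for (int x = x0; x < x1; x++) {
                out.pixel(x, y, v[2], v[3], v[4], v[5]);
                for (int i = 2; i < 6; i++) v[i] += dX[i];   // one add per channel per pixel
            }
            for (int i = 0; i < 6; i++) { L[i] += dL[i]; R[i] += dR[i]; }  // step both edges
        }
    }

    public static void main(String[] args) {
        double[][] trap = {
            {0, 0, 0, 0, 0, 1}, {4, 0, 1, 0, 0, 1},
            {0, 2, 0, 0, 0, 1}, {4, 2, 1, 0, 0, 1},
        };
        fill(trap, (x, y, r, g, b, pz) -> System.out.println(x + "," + y + " r=" + r));
    }
}
```

The inner loop now costs one addition per channel per pixel; all divisions happen once per scan line or once per trapezoid.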

If you implement both of the above speed-ups, you'll see a very dramatic improvement in running time.
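The integer-arithmetic speed-up can be sketched for a single scan line using 16.16 fixed-point ints: the top 16 bits hold the whole part, the bottom 16 the fraction, and the inner loop uses only integer adds and shifts. The 16-bit fraction and the 0..255 color range are our own choices, not requirements, and the class name is hypothetical. Since the scale of pz doesn't matter, pz could be scaled into an integer range and stepped the same way.

```java
// Sketch: fixed-point (16.16) interpolation across one scan line.
// ASSUMPTIONS: channels in 0..255, output packed as 0xRRGGBB.
class FixedPointSpan {
    static final int SHIFT = 16;
    static final int ONE = 1 << SHIFT;

    static int toFixed(double v) { return (int) Math.round(v * ONE); }

    // Fills row[x0 .. x1-1] with colors interpolated from (r0,g0,b0) at x0
    // toward (r1,g1,b1) at x1.
    static void span(int[] row, int x0, int x1, double r0, double g0, double b0,
                     double r1, double g1, double b1) {
        int m = x1 - x0;
        if (m <= 0) return;
        // Per-span setup still uses floating point; the loop below does not.
        int r = toFixed(r0), g = toFixed(g0), b = toFixed(b0);
        int dr = toFixed((r1 - r0) / m), dg = toFixed((g1 - g0) / m), db = toFixed((b1 - b0) / m);
        for (int x = x0; x < x1; x++) {
            // >> SHIFT drops the fractional bits to get the 0..255 channel value.
            row[x] = ((r >> SHIFT) << 16) | ((g >> SHIFT) << 8) | (b >> SHIFT);
            r += dr; g += dg; b += db;
        }
    }

    public static void main(String[] args) {
        int[] row = new int[5];
        span(row, 0, 4, 0, 0, 0, 255, 0, 0);
        for (int c : row) System.out.printf("%06X ", c);
        System.out.println();
    }
}
```

A full version would also step the left and right edges in fixed point, so that no floating-point work remains inside either loop.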