Homework 6, due Tuesday, Nov 16

Meshes and Z-buffers

We're going back to mesh objects, but we'll be using the PixApplet method for setting the color at each pixel that you have been using for ray tracing. And this time you'll be able to use a more general mesh structure.

I'll be going over all of the material here in detail in class next Tuesday (Nov 9), and then you'll still have another week after that before you need to hand in this assignment.

As we discussed in class, a more general way to store polyhedral meshes is to use a vertex array and a face array.

Each element of the vertex array contains point/normal values for a single vertex:

    vertices = {
        { x,y,z, nx,ny,nz },   // vertex 0
        { x,y,z, nx,ny,nz },   // vertex 1
        ... }

Each element of the face array contains an ordered list of the vertices in that face:

    faces = {
        { v0,v1,v2,... },   // face 0
        { v0,v1,v2,... },   // face 1
        ... }
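Here is a minimal Java sketch of this vertex-array/face-array layout. The class name `Mesh` and its helper methods are hypothetical and not part of the course code. Each vertex row is assumed to be `{x, y, z, nx, ny, nz}`, as in the cube example.

```java
// Hypothetical mesh holder for the vertex-array / face-array layout.
class Mesh {
    final double[][] vertices; // vertices[i] = {x, y, z, nx, ny, nz}
    final int[][] faces;       // faces[j] = ordered vertex indices {v0, v1, v2, ...}

    Mesh(double[][] vertices, int[][] faces) {
        this.vertices = vertices;
        this.faces = faces;
    }

    // Location (x, y, z) of vertex i.
    double[] position(int i) {
        double[] v = vertices[i];
        return new double[] { v[0], v[1], v[2] };
    }

    // Normal (nx, ny, nz) of vertex i.
    double[] normal(int i) {
        double[] v = vertices[i];
        return new double[] { v[3], v[4], v[5] };
    }

    // Location of the k-th vertex of face j, following that face's index list.
    double[] facePosition(int j, int k) {
        return position(faces[j][k]);
    }
}
```

A face never stores coordinates itself. It only refers to rows of `vertices`, so two faces can share a vertex simply by listing the same index.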

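A vertex is specified by both its location and its normal vector direction. As I said in class, if their normals are different, then two vertices are not the same, even if they have the same location. That is why the cube below has 24 vertices rather than 8: each corner appears three times, once for each face that meets there.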

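For example, here is a declaration for the vertices and the faces of a cube centered at the origin, with corners at ±1 along each axis:

    int[][] faces = {
        {  0,  1,  2,  3 },   // x = -1 side
        {  4,  5,  6,  7 },   // x = +1 side
        {  8,  9, 10, 11 },   // y = -1 side
        { 12, 13, 14, 15 },   // y = +1 side
        { 16, 17, 18, 19 },   // z = -1 side
        { 20, 21, 22, 23 }    // z = +1 side
    };

    // each row is { x, y, z, nx, ny, nz }
    double[][] vertices = {
        // x = -1 side, normal (-1, 0, 0)
        {-1,-1,-1,-1, 0, 0 },
        {-1,-1, 1,-1, 0, 0 },
        {-1, 1, 1,-1, 0, 0 },
        {-1, 1,-1,-1, 0, 0 },
        // x = +1 side, normal (1, 0, 0)
        { 1,-1,-1, 1, 0, 0 },
        { 1, 1,-1, 1, 0, 0 },
        { 1, 1, 1, 1, 0, 0 },
        { 1,-1, 1, 1, 0, 0 },
        // y = -1 side, normal (0, -1, 0)
        {-1,-1,-1, 0,-1, 0 },
        { 1,-1,-1, 0,-1, 0 },
        { 1,-1, 1, 0,-1, 0 },
        {-1,-1, 1, 0,-1, 0 },
        // y = +1 side, normal (0, 1, 0)
        {-1, 1,-1, 0, 1, 0 },
        {-1, 1, 1, 0, 1, 0 },
        { 1, 1, 1, 0, 1, 0 },
        { 1, 1,-1, 0, 1, 0 },
        // z = -1 side, normal (0, 0, -1)
        {-1,-1,-1, 0, 0,-1 },
        {-1, 1,-1, 0, 0,-1 },
        { 1, 1,-1, 0, 0,-1 },
        { 1,-1,-1, 0, 0,-1 },
        // z = +1 side, normal (0, 0, 1)
        {-1,-1, 1, 0, 0, 1 },
        { 1,-1, 1, 0, 0, 1 },
        { 1, 1, 1, 0, 0, 1 },
        {-1, 1, 1, 0, 0, 1 }
    };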

COMPUTING AND TRANSFORMING NORMALS:

If you want to create the illusion of smooth interpolated normals when you're approximating rounded shapes (like spheres and cylinders), you can do so either by using special-purpose methods for that particular shape (like using the direction from the center of a sphere to each vertex), or else you can use the following more general-purpose method:

- For each face, approximate the gradient for that face by taking the cross product between v1-v0 and v2-v1, where v0, v1 and v2 are the first three vertices in that face.

- For each vertex, sum up the gradients for all faces that contain that vertex. Normalizing this sum gives a good approximation to the normal vector at this vertex.
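The two steps above might look like this in Java. This sketch assumes the `{x, y, z, nx, ny, nz}` vertex layout used in this assignment and overwrites the normal entries in place. `NormalUtil` and `computeVertexNormals` are hypothetical names.

```java
// Sketch: vertex normals as the normalized sum of face gradients.
class NormalUtil {
    static void computeVertexNormals(double[][] vertices, int[][] faces) {
        double[][] sum = new double[vertices.length][3];
        for (int[] face : faces) {
            if (face.length < 3) continue; // need three vertices for a gradient
            double[] v0 = vertices[face[0]], v1 = vertices[face[1]], v2 = vertices[face[2]];
            // a = v1 - v0, b = v2 - v1
            double ax = v1[0] - v0[0], ay = v1[1] - v0[1], az = v1[2] - v0[2];
            double bx = v2[0] - v1[0], by = v2[1] - v1[1], bz = v2[2] - v1[2];
            // face gradient = a x b
            double gx = ay * bz - az * by;
            double gy = az * bx - ax * bz;
            double gz = ax * by - ay * bx;
            // add this face's gradient to every vertex the face contains
            for (int vi : face) {
                sum[vi][0] += gx;
                sum[vi][1] += gy;
                sum[vi][2] += gz;
            }
        }
        // normalize each sum and store it as that vertex's normal
        for (int i = 0; i < vertices.length; i++) {
            double len = Math.sqrt(sum[i][0] * sum[i][0] + sum[i][1] * sum[i][1] + sum[i][2] * sum[i][2]);
            if (len > 0) {
                vertices[i][3] = sum[i][0] / len;
                vertices[i][4] = sum[i][1] / len;
                vertices[i][5] = sum[i][2] / len;
            }
        }
    }
}
```

On the cube above, no vertex is shared between faces, so this just reproduces each face's flat normal. On a mesh whose faces share vertex indices (a sphere, say), each shared vertex instead gets an averaged, smooth normal.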

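To transform a normal vector, you need to transform it by $(M^{-1})^T$, the transpose of the inverse of $M$, where $M$ is the matrix that you are using to transform the associated vertex location. (Transforming the normal by $M$ itself would not keep it perpendicular to the surface when $M$ contains non-uniform scaling.)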

As before, you can use the MatrixInverter class to compute a matrix inverse.
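The MatrixInverter class's interface isn't shown here, so the sketch below avoids it and uses a shortcut instead. For an affine $M$, only the upper-left 3x3 block $A$ affects direction vectors. The transpose of $A^{-1}$ equals $A$'s cofactor matrix divided by its determinant, and both are easy to compute with cross products. The row-major `double[][]` layout and the class name are assumptions, and $M$ must be invertible.

```java
// Transform a normal by the transpose of the inverse of M without a general
// matrix inverter. Assumption: M is affine, stored row-major as double[][]
// (3x3 or 4x4); only its upper-left 3x3 block A matters for directions.
class NormalTransform {
    static double[] cross(double[] a, double[] b) {
        return new double[] { a[1] * b[2] - a[2] * b[1],
                              a[2] * b[0] - a[0] * b[2],
                              a[0] * b[1] - a[1] * b[0] };
    }

    static double dot(double[] a, double[] b) {
        return a[0] * b[0] + a[1] * b[1] + a[2] * b[2];
    }

    static double[] transformNormal(double[][] m, double[] n) {
        double[] r0 = { m[0][0], m[0][1], m[0][2] };
        double[] r1 = { m[1][0], m[1][1], m[1][2] };
        double[] r2 = { m[2][0], m[2][1], m[2][2] };
        // Rows of the cofactor matrix of A; (A^-1)^T = cofactor(A) / det(A).
        double[] c0 = cross(r1, r2), c1 = cross(r2, r0), c2 = cross(r0, r1);
        double det = dot(r0, c0); // nonzero when M is invertible
        double[] out = { dot(c0, n) / det, dot(c1, n) / det, dot(c2, n) / det };
        // Renormalize, since M may have scaled the vector.
        double len = Math.sqrt(dot(out, out));
        if (len > 0) for (int k = 0; k < 3; k++) out[k] /= len;
        return out;
    }
}
```

Take an $M$ that scales x by 2. The normal (1, 1, 0) of the plane x + y = 0 becomes proportional to (0.5, 1, 0), which stays perpendicular to the transformed surface. Transforming by $M$ itself would give (2, 1, 0), which does not.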

As usual, try to make fun, cool and interesting content. See if you can make various shapes that are built up from things like tubes, cylinders or tori, as in the applet I showed in class. We haven't done the math yet to do spline surfaces like teapots.

Z-BUFFERING:

The Z-buffer algorithm is a way to get from triangles to shaded pixels. You use the Z-buffer algorithm to figure out which thing is in front at every pixel when you are creating fully shaded versions of your mesh objects, such as when you use the Phong surface shading algorithm.

You will want to have a list of Materials, where each Material object gives the data that you need to simulate a particular kind of surface using the Phong algorithm (e.g., DiffuseColor, SpecularColor, etc.). You will also want to have a list of Light source objects, just as you did when you were doing ray tracing.
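Here is one possible shape for the Material and Light lists, plus Phong shading at a single vertex. Everything here is an assumption for illustration: the field names, and lights that are directional, given by a unit vector pointing toward the light. The shading uses the classic form: ambient + the sum over lights of lightColor * (diffuse * (N·L) + specular * (R·E)^p), where R = 2(N·L)N - L and E points from the surface toward the eye. Lights behind the surface are skipped.

```java
// Hypothetical surface description for the Phong model.
class Material {
    final double[] ambientColor, diffuseColor, specularColor; // each {r, g, b}
    final double specularPower;                               // Phong exponent p

    Material(double[] ambient, double[] diffuse, double[] specular, double power) {
        ambientColor = ambient;
        diffuseColor = diffuse;
        specularColor = specular;
        specularPower = power;
    }
}

// Hypothetical directional light: unit direction pointing toward the light, plus a color.
class Light {
    final double[] direction, color;

    Light(double[] direction, double[] color) {
        this.direction = direction;
        this.color = color;
    }
}

class PhongShader {
    static double dot(double[] a, double[] b) {
        return a[0] * b[0] + a[1] * b[1] + a[2] * b[2];
    }

    // Shade one vertex; point, unit normal and eye are in the same (world) space.
    static double[] shade(double[] point, double[] normal, double[] eye, Material m, Light[] lights) {
        // unit vector from the surface point toward the eye
        double[] e = { eye[0] - point[0], eye[1] - point[1], eye[2] - point[2] };
        double len = Math.sqrt(dot(e, e));
        for (int k = 0; k < 3; k++) e[k] /= len;

        double[] rgb = m.ambientColor.clone();
        for (Light light : lights) {
            double[] l = light.direction;
            double nl = dot(normal, l);
            if (nl <= 0) continue; // surface faces away from this light
            // mirror the light direction about the normal: R = 2(N.L)N - L
            double[] r = { 2 * nl * normal[0] - l[0], 2 * nl * normal[1] - l[1], 2 * nl * normal[2] - l[2] };
            double spec = Math.pow(Math.max(0, dot(r, e)), m.specularPower);
            for (int k = 0; k < 3; k++)
                rgb[k] += light.color[k] * (m.diffuseColor[k] * nl + m.specularColor[k] * spec);
        }
        return rgb;
    }
}
```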

The algorithm starts with an empty zBuffer, indexed by pixels [X,Y] and initially set to zero for each pixel. You also need an image frameBuffer filled with the background color. This frameBuffer can be the pix[] array you currently use for ray tracing.
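Here is a sketch of the two buffers, following the text's own conventions. `zBuffer` starts at zero, and a pixel is overwritten only when `pz < zBuffer[X,Y]`.

That comparison presumably assumes visible points have negative `pz`. This would be the case if the camera looks down the negative z axis: `pz = f/z` is then negative, and more negative for nearer points. If your visible points have positive z, flip the comparison.

The packed `0xAARRGGBB` layout for `pix[]` and the class name are assumptions, not the PixApplet API.

```java
import java.util.Arrays;

// Sketch of the zBuffer and frameBuffer. ZBufferSketch is a hypothetical name;
// pix[] is assumed to hold packed 0xAARRGGBB ints, one per pixel, row by row.
class ZBufferSketch {
    final int width, height;
    final double[] zBuffer; // zBuffer[X + Y * width]
    final int[] pix;        // frameBuffer[X + Y * width]

    ZBufferSketch(int width, int height, int backgroundRGB) {
        this.width = width;
        this.height = height;
        zBuffer = new double[width * height]; // Java arrays start out all zero
        pix = new int[width * height];
        Arrays.fill(pix, backgroundRGB);      // fill with the background color
    }

    // Convert a 0..1 color channel to 0..255, clamping out-of-range values.
    static int channel(double c) {
        return (int) Math.max(0, Math.min(255, Math.round(c * 255)));
    }

    static int pack(double r, double g, double b) {
        return 0xFF000000 | (channel(r) << 16) | (channel(g) << 8) | channel(b);
    }

    // Depth test from the text: keep the new color only if pz < zBuffer[X,Y].
    void plot(int x, int y, double pz, double r, double g, double b) {
        if (x < 0 || x >= width || y < 0 || y >= height) return; // off-screen
        int i = x + y * width;
        if (pz < zBuffer[i]) {
            zBuffer[i] = pz;
            pix[i] = pack(r, g, b);
        }
    }
}
```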

The general flow of things is:

- Loop through all your polygons, where each polygon has a list of vertices and a Material object identifier.

- Split the polygon into triangles, where each triangle vertex has:
  - An (x,y,z) location
  - A (nx,ny,nz) surface normal

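- Transform the vertices of the triangle according to your animation (locations by your transformation matrix, normals by the transpose of its inverse, as described above).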

- Do shading/lighting on each vertex, using the (x,y,z) and (nx,ny,nz) and the Material properties. After you do this, each triangle vertex has:
  - An (x,y,z) location
  - An (r,g,b) color

- Do the view transformation on the x,y,z location of each vertex.

- Do the perspective transformation on the x,y,z location of each vertex to (px,py,pz) = (f*x/z, f*y/z, f/z), where f is the focal length.

- Do the viewport transformation on px and py, to convert them to integer pixel values (X,Y).

-

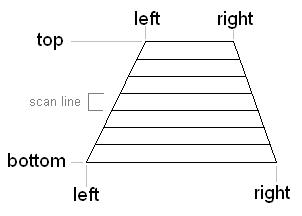

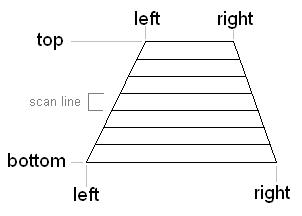

Split the triangle into a top and bottom scan-line-aligned trapezoid.

To do this you'll need to split one of the triangle's edges,

and you'll need to interpolate the location and color

at that edge.

Each of these trapezoids has four vertex locations on the image (T = top, B = bottom, L = left, R = right):

    (XTL, YTOP)      (XTR, YTOP)
    (XBL, YBOTTOM)   (XBR, YBOTTOM)

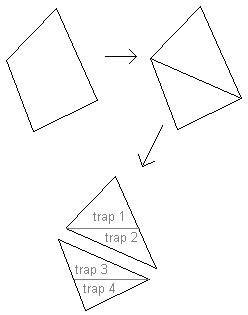

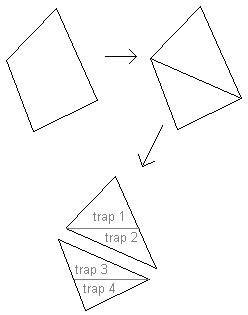

You can see the process represented here: first a polygon is split into triangles, and then each triangle is split into scan-line-aligned trapezoids. Each vertex of one of these scan-line-aligned trapezoids will have both color and perspective z, or (r,g,b,pz).

- For each scan-line Y between YTOP and YBOTTOM, scan convert the trapezoid by linear interpolation to get a single span with a leftmost pixel XLEFT and a rightmost pixel XRIGHT. You will have an (r,g,b,pz) at each of these ends.

- Scan convert the single span by linear interpolation to get an (r,g,b,pz) value at each pixel. If pz < zBuffer[X,Y], then replace the values at that pixel:
  - zBuffer[X,Y] ← pz
  - frameBuffer[X,Y] ← (r,g,b)

- Display the frameBuffer.
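The polygon-splitting, perspective and viewport steps in the list above can be sketched in Java as follows. Fan triangulation is one simple way to split a polygon into triangles, and it assumes the polygon is convex. The perspective formula is the one given in the text.

The viewport mapping is an assumed convention: pixel X grows to the right, pixel Y grows downward, and px is scaled by half the image width. Depending on which way your camera looks along z, you may need to negate px and py so the image isn't mirrored. All names are hypothetical.

```java
import java.util.ArrayList;
import java.util.List;

class ProjectionSketch {
    // Fan triangulation of a convex polygon given as vertex indices:
    // {v0, v1, v2, v3} becomes {v0, v1, v2} and {v0, v2, v3}.
    static List<int[]> fan(int[] polygon) {
        List<int[]> triangles = new ArrayList<>();
        for (int i = 1; i + 1 < polygon.length; i++)
            triangles.add(new int[] { polygon[0], polygon[i], polygon[i + 1] });
        return triangles;
    }

    // Perspective transformation from the text: (f*x/z, f*y/z, f/z).
    static double[] perspective(double x, double y, double z, double f) {
        return new double[] { f * x / z, f * y / z, f / z };
    }

    // Assumed viewport mapping from (px, py) to integer pixels (X, Y).
    static int[] viewport(double px, double py, int width, int height) {
        int X = (int) Math.round(width / 2.0 + px * width / 2.0);
        int Y = (int) Math.round(height / 2.0 - py * width / 2.0);
        return new int[] { X, Y };
    }
}
```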

A note about linear interpolation:

In order to interpolate values from the triangle to the trapezoid, then from the trapezoid to the horizontal span for each scan-line, then from the span down to individual pixels, you'll need to use linear interpolation.

Generally speaking, linear interpolation involves the following two steps:

- Compute some linear interpolant t, where t is between zero and one.

- Use t to do linear interpolation between two values a and b:

      value = a + t * (b - a)

In order to compute t, you just need your extreme values and the intermediate value where you want the result.

For example, to compute the value of t to interpolate from scan-lines YTOP and YBOTTOM to a single scan-line Y:

    t = (double)(Y - YTOP) / (YBOTTOM - YTOP)

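Similarly, to compute the value of t to interpolate from pixels XLEFT and XRIGHT to values at a single pixel X:

    t = (double)(X - XLEFT) / (XRIGHT - XLEFT)

If the two endpoints coincide (YBOTTOM == YTOP, or XRIGHT == XLEFT), the denominator is zero; in that case just use t = 0.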
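Putting the trapezoid and span steps together with these interpolation formulas, a triangle scan converter might look like the following. It is a sketch, not the course's reference code. Each vertex is assumed to be `{X, Y, r, g, b, pz}` with integer pixel coordinates. The `PixelSink` callback is a hypothetical hook where the z-buffer test would go. The upper trapezoid stops one scan-line short of the middle vertex so that scan-line isn't drawn twice.

```java
import java.util.Arrays;

// Sketch: triangle -> two scan-line-aligned trapezoids -> one span per
// scan-line -> pixels, using value = a + t * (b - a) at every stage.
class ScanConvert {
    // Receives each covered pixel; the z-buffer test would go here.
    interface PixelSink {
        void plot(int x, int y, double r, double g, double b, double pz);
    }

    // Componentwise linear interpolation: a + t * (b - a).
    static double[] lerp(double[] a, double[] b, double t) {
        double[] out = new double[a.length];
        for (int i = 0; i < a.length; i++) out[i] = a[i] + t * (b[i] - a[i]);
        return out;
    }

    // t = (value - lo) / (hi - lo), or 0 when lo == hi.
    static double interpolant(int value, int lo, int hi) {
        return hi == lo ? 0 : (double) (value - lo) / (hi - lo);
    }

    static void triangle(double[] a, double[] b, double[] c, PixelSink sink) {
        double[][] v = { a, b, c };
        Arrays.sort(v, (p, q) -> Double.compare(p[1], q[1])); // top (smallest Y) first
        double[] top = v[0], mid = v[1], bot = v[2];
        // Split the top-to-bottom edge at the middle vertex's scan-line,
        // interpolating location, color and pz at the split point.
        double t = bot[1] == top[1] ? 0 : (mid[1] - top[1]) / (bot[1] - top[1]);
        double[] split = lerp(top, bot, t);
        trapezoid(top, top, mid, split, false, sink); // upper part: rows [Ytop, Ymid)
        trapezoid(mid, split, bot, bot, true, sink);  // lower part: rows [Ymid, Ybottom]
    }

    // Edge A runs topA -> botA and edge B runs topB -> botB, both from YTOP to YBOTTOM.
    static void trapezoid(double[] topA, double[] topB, double[] botA, double[] botB,
                          boolean includeBottom, PixelSink sink) {
        int yTop = (int) Math.round(topA[1]), yBottom = (int) Math.round(botA[1]);
        int yLast = includeBottom ? yBottom : yBottom - 1;
        for (int y = yTop; y <= yLast; y++) {
            double t = interpolant(y, yTop, yBottom);
            double[] ea = lerp(topA, botA, t), eb = lerp(topB, botB, t);
            // whichever edge is further left supplies XLEFT
            double[] left = ea[0] <= eb[0] ? ea : eb;
            double[] right = ea[0] <= eb[0] ? eb : ea;
            span(y, left, right, sink);
        }
    }

    // Interpolate (r, g, b, pz) across one span from XLEFT to XRIGHT.
    static void span(int y, double[] left, double[] right, PixelSink sink) {
        int xLeft = (int) Math.round(left[0]), xRight = (int) Math.round(right[0]);
        for (int x = xLeft; x <= xRight; x++) {
            double[] p = lerp(left, right, interpolant(x, xLeft, xRight));
            sink.plot(x, y, p[2], p[3], p[4], p[5]);
        }
    }
}
```

For the right triangle with corners (0,0), (4,0) and (0,4), this visits 5 + 4 + 3 + 2 + 1 = 15 pixels.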