
For this assignment you're going to implement the first steps of the scan-conversion algorithm. For content, use a scene you've already created, or create an interesting new one, that consists of one of your parametric surface meshes (e.g., spheres or tori).

The first step for this week's work is to calculate surface normals at all the mesh vertices. As I described in class, given a mesh stored as a two-dimensional array of points $p_{i,j}$, you do this as follows:

- For each mesh location $i,j$:
  - compute the edge vectors $u = p_{i+1,j} - p_{i,j}$ and $v = p_{i,j+1} - p_{i,j}$;
  - store the cross product $u \times v$ in an array $c_{i,j}$.
- For each mesh location $i,j$:
  - $\text{normal}_{i,j} = \text{normalize}(c_{i-1,j-1} + c_{i,j-1} + c_{i-1,j} + c_{i,j})$

When I refer to $i-1$, $i+1$, $j-1$ and $j+1$ above, of course I don't mean that you should index out of bounds of the array that stores the mesh points. Rather, you should do one of two things:

- Wrap around, by doing something like `(i+1)%N`, in a case like the circles of latitude on a sphere, where the right edge of the mesh wraps around into the left edge. For $i-1$, use `(i-1+N)%N`, because in Java and C the `%` operator returns a negative result when its left operand is negative.
- In a case like the north and south poles of a latitude/longitude sphere mesh, just sum up all the computed cross products in the first row of the mesh and normalize the sum to get the normal at the north pole. Similarly, sum up all the computed cross products in the last row of the mesh and normalize the sum to get the normal at the south pole.
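The two passes above can be sketched in Java roughly as follows. This is a minimal sketch, not a required structure: the `double[N][M][3]` layout (`p[i][j]` holds the x, y, z of mesh point $i,j$), the class name `MeshNormals`, and the `torus` helper used for testing are all hypothetical. It assumes a mesh that wraps around in both directions, as a torus does, so every neighbor index is taken modulo the mesh size; a latitude/longitude sphere would additionally need the pole handling described above.

```java
// Sketch: per-vertex normals for a mesh that wraps in both i and j (e.g. a torus).
// Hypothetical layout: p[i][j] = {x, y, z}, with N columns (i) and M rows (j).
class MeshNormals {
    static double[] sub(double[] a, double[] b) {
        return new double[] { a[0] - b[0], a[1] - b[1], a[2] - b[2] };
    }

    static double[] cross(double[] u, double[] v) {
        return new double[] {
            u[1] * v[2] - u[2] * v[1],
            u[2] * v[0] - u[0] * v[2],
            u[0] * v[1] - u[1] * v[0]
        };
    }

    static double[] normalize(double[] a) {
        double len = Math.sqrt(a[0] * a[0] + a[1] * a[1] + a[2] * a[2]);
        return new double[] { a[0] / len, a[1] / len, a[2] / len };
    }

    static double[][][] computeNormals(double[][][] p) {
        int N = p.length, M = p[0].length;
        // Pass 1: one cross product per mesh location, wrapping i+1 and j+1.
        double[][][] c = new double[N][M][];
        for (int i = 0; i < N; i++)
            for (int j = 0; j < M; j++) {
                double[] u = sub(p[(i + 1) % N][j], p[i][j]);
                double[] v = sub(p[i][(j + 1) % M], p[i][j]);
                c[i][j] = cross(u, v);
            }
        // Pass 2: sum the four cross products around each vertex, then normalize.
        // (i - 1 + N) % N avoids the negative result % would give for i = 0.
        double[][][] normal = new double[N][M][];
        for (int i = 0; i < N; i++)
            for (int j = 0; j < M; j++) {
                int im = (i - 1 + N) % N, jm = (j - 1 + M) % M;
                double[] s = new double[3];
                for (double[] q : new double[][] { c[im][jm], c[i][jm], c[im][j], c[i][j] })
                    for (int k = 0; k < 3; k++) s[k] += q[k];
                normal[i][j] = normalize(s);
            }
        return normal;
    }

    // Hypothetical test helper: torus with major radius R and minor radius r.
    static double[][][] torus(int N, int M, double R, double r) {
        double[][][] p = new double[N][M][];
        for (int i = 0; i < N; i++)
            for (int j = 0; j < M; j++) {
                double theta = 2 * Math.PI * i / N, phi = 2 * Math.PI * j / M;
                double rad = R + r * Math.cos(phi);
                p[i][j] = new double[] { rad * Math.cos(theta), rad * Math.sin(theta), r * Math.sin(phi) };
            }
        return p;
    }
}
```

For example, `MeshNormals.computeNormals(MeshNormals.torus(64, 32, 2.0, 0.5))` returns unit normals that point outward for this particular parametrization; swapping the roles of `u` and `v` would flip them.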

The second step for this week's assignment is to render your mesh of points and normals into the image by scan conversion. You can do this as follows:

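- Compute and store the surface normal vector at all vertices.
- Initialize the z-buffer (for the `<` test below, every entry must start larger than any possible $p_z$, e.g. positive infinity).
- For each polygon:
  - For each vertex $v$:
    - Compute $(r,g,b)_v = \text{shade}(\text{surfacePoint}_v, \text{surfaceNormal}_v)$.
    - Compute the perspective coordinates $(p_x,p_y,p_z) = (fx/z,\ fy/z,\ 1/z)$, where $f$ is the focal length.
    - Apply the viewport transform to $(p_x,p_y)$ to get the pixel coordinates of the vertex.
  - Scan-convert the polygon into pixels, interpolating $(r,g,b,p_z)$. At each pixel $(i,j)$: if `pz < zbuffer[i][j]`, then set `framebuffer[i][j] = packPixel(r,g,b)` and `zbuffer[i][j] = pz`.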
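Here is a minimal Java sketch of the per-vertex projection and the per-pixel z-buffer update from the steps above. Several details are assumptions not given in the text: the class name `Renderer` and the method `plot` are hypothetical, the framebuffer stores packed `0xRRGGBB` ints, `packPixel` clamps each channel to [0, 1] before scaling to 0..255, and the viewport transform maps $p_x \in [-1,1]$ across the image width (with $p_y$ pointing up) using the same scale on both axes so pixels stay square. The comparison `pz < zbuffer[i][j]` is used exactly as given.

```java
// Sketch of perspective projection, viewport transform, and z-buffered writes.
// Hypothetical conventions: framebuffer holds packed 0xRRGGBB ints, and the
// viewport maps px in [-1, 1] across the width, with py pointing up.
class Renderer {
    final int W, H;
    final int[][] framebuffer;
    final double[][] zbuffer;

    Renderer(int W, int H) {
        this.W = W;
        this.H = H;
        framebuffer = new int[W][H];
        zbuffer = new double[W][H];
        for (int i = 0; i < W; i++)
            for (int j = 0; j < H; j++)
                zbuffer[i][j] = Double.POSITIVE_INFINITY; // any finite pz passes "<"
    }

    // Perspective coordinates (px, py, pz) = (f x / z, f y / z, 1 / z).
    static double[] project(double f, double x, double y, double z) {
        return new double[] { f * x / z, f * y / z, 1 / z };
    }

    // Assumed viewport transform: both axes scaled by W/2 so pixels stay square;
    // the row index grows downward, so py is subtracted.
    double[] viewport(double px, double py) {
        return new double[] { W / 2.0 + px * W / 2.0, H / 2.0 - py * W / 2.0 };
    }

    // Clamp a channel to [0, 1], then scale it to an 8-bit value.
    static int toByte(double c) {
        return (int) Math.round(255 * Math.max(0, Math.min(1, c)));
    }

    // Hypothetical packPixel: 8 bits per channel, red in the high byte.
    static int packPixel(double r, double g, double b) {
        return (toByte(r) << 16) | (toByte(g) << 8) | toByte(b);
    }

    // The per-pixel z-buffer test from the steps above.
    void plot(int i, int j, double r, double g, double b, double pz) {
        if (i < 0 || i >= W || j < 0 || j >= H) return; // ignore off-screen pixels
        if (pz < zbuffer[i][j]) {
            framebuffer[i][j] = packPixel(r, g, b);
            zbuffer[i][j] = pz;
        }
    }
}
```

Clipping off-screen pixels inside `plot` is a convenience choice so that a scan converter can call it without bounds checks of its own.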

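You can use either of two methods to shade each vertex:

- Use the normal itself as the color, mapping each component from $[-1,1]$ to $[0,1]$: $\text{red} = 0.5 + 0.5\,n_x$, $\text{green} = 0.5 + 0.5\,n_y$, $\text{blue} = 0.5 + 0.5\,n_z$.
- Use a simple lighting model with an ambient and a diffuse component, as described below.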

The model works as follows. The ambient component is modeled simply by a constant color, such as $(a_r,a_g,a_b) = (0.1,0.1,0.1)$. The diffuse component is modeled by taking the dot product of the surface normal direction and the direction to the light source, and never allowing the result to go negative. This component is given by $(d_r,d_g,d_b)\,\max(0, n \cdot L)$, where $(d_r,d_g,d_b)$ is the diffuse color, $n$ is the unit surface normal vector, and $L$ is the unit direction vector toward the light source. For example, if $L = \text{normalize}((1,1,1)) \approx (0.577,0.577,0.577)$, then the object will appear to be lit from the top front right. The complete Gouraud shading expression is:

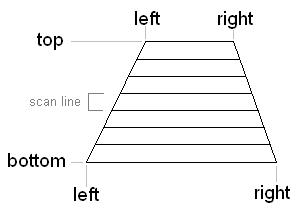

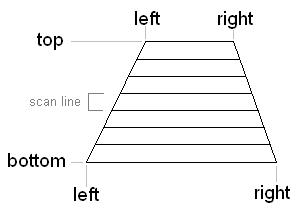
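Both shading methods can be sketched in Java as below. This assumes that the complete expression is the ambient term plus the diffuse term described in the text, that `n` and `L` are unit-length `double[3]` arrays, and that the class name `Shading` and its method names are hypothetical.

```java
// Sketch of the two per-vertex shading methods described above.
// Vectors are double[3]; n and L are assumed to be unit length.
class Shading {
    // Method 1: map each normal component from [-1, 1] to a color in [0, 1].
    static double[] normalColor(double[] n) {
        return new double[] { 0.5 + 0.5 * n[0], 0.5 + 0.5 * n[1], 0.5 + 0.5 * n[2] };
    }

    // Method 2 (assumed form): ambient + diffuse * max(0, n . L), per channel.
    static double[] ambientDiffuse(double[] n, double[] L, double[] ambient, double[] diffuse) {
        double nDotL = Math.max(0, n[0] * L[0] + n[1] * L[1] + n[2] * L[2]);
        double[] rgb = new double[3];
        for (int k = 0; k < 3; k++)
            rgb[k] = ambient[k] + diffuse[k] * nDotL;
        return rgb;
    }
}
```

For instance, with `L` set to $\text{normalize}((1,1,1))$, any normal facing away from the light gets exactly the ambient color, because $\max(0, n \cdot L)$ is then 0.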

As we discussed in class, to scan-convert a polygon you first split it into triangles, and then split each triangle into two scan-line-aligned trapezoids:

*Figure: left, polygon to trapezoids; right, one trapezoid in detail.*
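One way to do the split, sketched in Java under assumptions not in the original: each vertex is a `double[]` whose first two entries are pixel x and y (with y increasing downward), followed by the values to interpolate (here r, g, b, pz), and the names `TriangleSplit`, `pointAtY` and `split` are hypothetical. After sorting the three vertices by y, the scan-line through the middle vertex cuts the long edge from the top vertex to the bottom vertex at a new point d; the triangle then becomes an upper trapezoid whose top side has zero length and a lower trapezoid whose bottom side has zero length.

```java
// Sketch: split a triangle into two scan-line-aligned trapezoids.
// Hypothetical vertex layout: {x, y, r, g, b, pz} in pixel coordinates, y downward.
// A trapezoid is returned as {topLeft, topRight, bottomLeft, bottomRight}.
class TriangleSplit {
    static double lerp(double t, double a, double b) { return a + t * (b - a); }

    // Point on segment a->b at height y, with every component interpolated.
    static double[] pointAtY(double[] a, double[] b, double y) {
        double t = (y - a[1]) / (b[1] - a[1]);
        double[] p = new double[a.length];
        for (int k = 0; k < a.length; k++)
            p[k] = lerp(t, a[k], b[k]);
        p[1] = y; // set exactly to avoid rounding error
        return p;
    }

    static double[][][] split(double[] p0, double[] p1, double[] p2) {
        // Sort by y so that a is the top vertex, b the middle, c the bottom.
        double[] a = p0, b = p1, c = p2, tmp;
        if (a[1] > b[1]) { tmp = a; a = b; b = tmp; }
        if (b[1] > c[1]) { tmp = b; b = c; c = tmp; }
        if (a[1] > b[1]) { tmp = a; a = b; b = tmp; }

        // d: where the scan-line through b crosses the long edge a->c.
        // (If a and c share a y, the triangle has zero height; d is a copy of b.)
        double[] d = (c[1] == a[1]) ? b.clone() : pointAtY(a, c, b[1]);
        double[] left = (b[0] <= d[0]) ? b : d;
        double[] right = (b[0] <= d[0]) ? d : b;

        double[][] upper = { a, a, left, right }; // top side has zero length
        double[][] lower = { left, right, c, c }; // bottom side has zero length
        return new double[][][] { upper, lower };
    }
}
```

Because d carries interpolated values of r, g, b and pz as well as its position, both trapezoids can then be filled with exactly the same edge and scan-line interpolation.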

Within each trapezoid, you should obtain the values of $(r,g,b,p_z)$ at the left and right ends of each scan-line by linearly interpolating them down the trapezoid's left and right edges. Then, within each scan-line, you should obtain the value at each pixel by linearly interpolating $(r,g,b,p_z)$ between the left and right ends of that scan-line.
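A minimal Java sketch of this two-level interpolation for a single trapezoid follows. It assumes (hypothetically) that each corner is a `double[]` laid out as `{x, y, r, g, b, pz}` in pixel coordinates with y increasing downward, that the top corners share one y and the bottom corners share another, and that each finished pixel is handed to a caller-supplied `Fragment` callback, for example one that performs the z-buffer test. Scan-lines and pixels are sampled at integer coordinates over half-open ranges, a common convention (not one stated in the text) that keeps trapezoids sharing an edge from drawing it twice.

```java
// Sketch: fill one scan-line-aligned trapezoid by interpolating (r, g, b, pz).
// Hypothetical corner layout: {x, y, r, g, b, pz}; y grows downward, so the top
// corners (tl, tr) have the smaller y and the bottom corners (bl, br) the larger.
class TrapezoidFill {
    interface Fragment { void emit(int x, int y, double r, double g, double b, double pz); }

    static double lerp(double t, double a, double b) { return a + t * (b - a); }

    static double[] lerpAll(double t, double[] a, double[] b) {
        double[] p = new double[a.length];
        for (int k = 0; k < a.length; k++) p[k] = lerp(t, a[k], b[k]);
        return p;
    }

    static void fill(double[] tl, double[] tr, double[] bl, double[] br, Fragment out) {
        double y0 = tl[1], y1 = bl[1];
        // Half-open range [y0, y1); an empty range also avoids dividing by zero.
        for (int y = (int) Math.ceil(y0); y < y1; y++) {
            double t = (y - y0) / (y1 - y0);   // 0 at the top edge, 1 at the bottom
            double[] L = lerpAll(t, tl, bl);   // values at the left end of the scan-line
            double[] R = lerpAll(t, tr, br);   // values at the right end of the scan-line
            double x0 = L[0], x1 = R[0];
            for (int x = (int) Math.ceil(x0); x < x1; x++) {
                double s = (x - x0) / (x1 - x0); // 0 at the left end, 1 at the right
                out.emit(x, y, lerp(s, L[2], R[2]), lerp(s, L[3], R[3]),
                         lerp(s, L[4], R[4]), lerp(s, L[5], R[5]));
            }
        }
    }
}
```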

Remember that to do linear interpolation you first need to get a value $t$ between 0 and 1 to use as an interpolant. For example, if you're trying to interpolate between scan-lines $y_0$ and $y_1$, then at scan-line $y$ the interpolant is $t = (y - y_0) / (y_1 - y_0)$.

Once you have your interpolant $t$, you can interpolate any values $a$ and $b$ between the two scan-lines by using a linear interpolation, or "lerp", function:

`double lerp(double t, double a, double b) { return a + t * (b - a); }`
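As a worked example with made-up values: suppose that along one edge of a trapezoid $p_z = 0.2$ at scan-line $y_0 = 10$ and $p_z = 0.6$ at scan-line $y_1 = 20$. At scan-line $y = 13$:

$$
\begin{aligned}
t &= \frac{y - y_0}{y_1 - y_0} = \frac{13 - 10}{20 - 10} = 0.3 \\
p_z &= \operatorname{lerp}(t, a, b) = a + t\,(b - a) = 0.2 + 0.3\,(0.6 - 0.2) = 0.32
\end{aligned}
$$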

You can then use the same approach to interpolate down to individual pixels within each scan-line, between the values at the left pixel $x_0$ and the right pixel $x_1$, using $t = (x - x_0) / (x_1 - x_0)$.