Start Lecture #1

I start at 0 so that when we get to chapter 1, the numbering will agree with the text.

There is a web site for the course. You can find it from my home page, which is http://cs.nyu.edu/~gottlieb

Start Lecture #1marker above can be thought of as

after lecture #0.

The course text is Dale, Joyce, and Weems,

Object-Oriented Data Structures Using Java

.

It is available at the NYU Bookstore.

Replyto contribute to the current thread, but NOT to start another topic.

top post, that is, when replying, I ask that you either place your reply after the original text or interspersed with it.

musttop post.

Grades are based on the labs, the midterm, and the final exam, with each very important. The weighting will be approximately 30%*Labs, 30%*Midterm, and 40%*Final (but see homeworks below).

I use the upper left board for lab/homework assignments and announcements. I should never erase that board. Viewed as a file it is group readable (the group is those in the room), appendable by just me, and (re-)writable by no one. If you see me start to erase an announcement, let me know.

I try very hard to remember to write all announcements on the upper left board and I am normally successful. If, during class, you see that I have forgotten to record something, please let me know. HOWEVER, if I forgot and no one reminds me, the assignment has still been given.

I make a distinction between homeworks and labs.

Labs are

Homeworks are

Homeworks are numbered by the class in which they are assigned. So any homework given today is homework #1. Even if I do not give homework today, the homework assigned next class will be homework #2. Unless I explicitly state otherwise, all homeworks assignments can be found in the class notes. So the homework present in the notes for lecture #n is homework #n (even if I inadvertently forgot to write it to the upper left board).

I feel it is important for majors to be familiar with basic

client-server computing (nowadays sometimes called

cloud computing

in which one develops on a client machine (for

us, most likely your personal laptop), but run programs on a remote

server (for us, most likely i5.nyu.edu).

You submit the jobs from the server and your final product remains

on the server (so that we can check dates in case we lose you

lab).

I have supposedly given you each an account on i5.nyu.edu. To access i5 is different for different client (laptop) operating systems.

You may develop lab assignments on any system you wish, but ...

I sent it ... I never received itdebate. Thank you.

The following is supposed to work; let's see.

mailx -s "the subject goes here" gottlieb@nyu.edu words go here and go in the msg. The next line causes a file to be placed in the msg (not attached simply copied in) ~r filename .

Good methods for obtaining help include

You labs must be written in Java.

Incomplete

The rules for incompletes and grade changes are set by the school and not the department or individual faculty member.

The rules set by CAS can be found here. They state:

The grade of I (Incomplete) is a temporary grade that indicates that the student has, for good reason, not completed all of the course work but that there is the possibility that the student will eventually pass the course when all of the requirements have been completed. A student must ask the instructor for a grade of I, present documented evidence of illness or the equivalent, and clarify the remaining course requirements with the instructor.

The incomplete grade is not awarded automatically. It is not used when there is no possibility that the student will eventually pass the course. If the course work is not completed after the statutory time for making up incompletes has elapsed, the temporary grade of I shall become an F and will be computed in the student's grade point average.

All work missed in the fall term must be made up by the end of the following spring term. All work missed in the spring term or in a summer session must be made up by the end of the following fall term. Students who are out of attendance in the semester following the one in which the course was taken have one year to complete the work. Students should contact the College Advising Center for an Extension of Incomplete Form, which must be approved by the instructor. Extensions of these time limits are rarely granted.

Once a final (i.e., non-incomplete) grade has been submitted by the instructor and recorded on the transcript, the final grade cannot be changed by turning in additional course work.

This email from the assistant director, describes the departmental policy.

Dear faculty, The vast majority of our students comply with the department's academic integrity policies; see www.cs.nyu.edu/web/Academic/Undergrad/academic_integrity.html www.cs.nyu.edu/web/Academic/Graduate/academic_integrity.html Unfortunately, every semester we discover incidents in which students copy programming assignments from those of other students, making minor modifications so that the submitted programs are extremely similar but not identical. To help in identifying inappropriate similarities, we suggest that you and your TAs consider using Moss, a system that automatically determines similarities between programs in several languages, including C, C++, and Java. For more information about Moss, see: http://theory.stanford.edu/~aiken/moss/ Feel free to tell your students in advance that you will be using this software or any other system. And please emphasize, preferably in class, the importance of academic integrity. Rosemary Amico Assistant Director, Computer Science Courant Institute of Mathematical Sciences

The university-wide policy is described here

You should have taken 101 (or if not should have experience in Java programming).

A goal of this course is to improve your Java skills. We will not be learning many new aspects of Java. Instead, we will be gaining additional experience with those parts of Java taught in 101.

The primary goal of this course is to learn several important data structures and to analyze how well they perform. I do not assume any prior knowledge of this important topic.

A secondary goal is to learn and practice a little client-server computing. My section of 101 did this, but I do not assume you know anything about it.

In 101 our goal was to learn how to write correct programs in Java.

Step 1 in that goal, the main step in 101, was to learn how to write anything in Java.

We did consider a few problems (e.g., sorting) that required some thought to determine a solution, independent of the programming language, but mostly we worried about how to do it in Java.

In this course we will go beyond getting a correct program and seek programs that are also high performance, i.e., that have comparatively short running time even for large problems.

One question will be how to measure the performance.

Of course we can not sacrifice correctness for performance, but sometimes we will sacrifice simplicity for performance.

public class Max {

public int max(int n, int[]a) {

// assumes n > 0

int ans = a[0];

for (int i=0; i<n; i++)

if (a[i] > ans)

ans = a[i];

return ans;

}

}

Assume you have N numbers and want to find the kth largest. If k=1 this is simply the minimum; If k=N this is simply the maximum; If k=N/2 this is essentially the median.

To do maximum you would loop over N numbers as shown on the right. Minimum is the same simple idea.

Both of these are essentially optimal: To find the max, we surely need to examine each number so the work done will be proportional to N and the program above is proportional to N.

Homework: Write a Java method that computes the 2nd largest. Hint calculate both the largest and 2nd largest; return the latter.

The median is not so simple to do optimally. One method is fairly obvious and works for any k: Read the elements into an array; sort the array; select the desired element.

It might seem silly to sort all N elements since we don't care about the order of all those past the median. So we could do the following.

This second method also works for any k.

Both these methods are correct (the most important criterion) and simple. The trouble is that neither method has optimal performance. For large N, say 10,000,000 and k near N/2, both methods take a long time; whereas a more complicated algorithm is very fast.

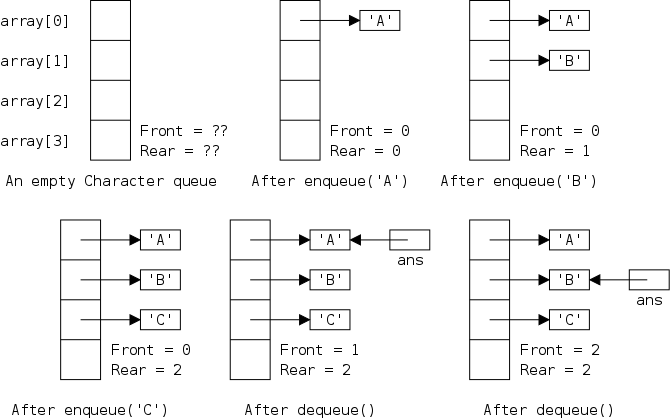

Another example is given in section 5.3 where we choose a circular array implementation for queues rather than a simpler array implementation because the former is faster.

In 101, we had objects of various kinds, but basically whenever we

had a great deal of data, it was organized as an array (of

something).

An array is an example of a data structure

.

As the course title suggests, we will learn a number of other data

structures this semester.

Sometimes these data structures will be more complicated than simpler ones you might think of. Their compensating advantage is that they have higher performance.

Also we will learn techniques useful for large programs. Although we will not write any large programs, such programs are crucially important in practice. For example, we will use Java generics and interfaces to help specify the behavior we need to implement.

Client-server systems (now often referred to as

cloud computing

are of increasing importance.

The idea is that you work on your client computer with assistance

from other server systems.

We will do some of this.

In particular, we will use the computer i5.nyu.edu as a server.

We need to confirm that everyone has an account on i5.nyu.edu.

Homework: As mentioned previously, those of you with Windows clients, need to get putty and winSCP. They are definitely available on the web at no cost. (The last time I checked, winSCP available through ITS and putty was at http://bit.ly/hy9IZj).

I will do a demo of this software next class. Please do install it by then so you can verify that it works for you.

macOS users should see how to have their terminal run in plain (ascii) text mode.

Read.

Read.

Read.

We have covered this in 101, but these authors tie the attributes of object orientation to the goals of quality software just mentioned.

Read

This section is mostly a quick review of concepts we covered in 101. In particular, we covered.

Most of you learned Java from Liang's book, but any Java text should be fine. Another possibility is my online class notes from 101.

The book does not make clear that the rules given are for data fields and methods. Visibility modifiers can also be used on classes, but the usage and rules are different.

I have had difficulty deciding how much to present about visibility. The full story is complicated and more than we need, but I don't want to simplify it to the extent of borderline inaccuracy. I will state all the possibilities, but describe only those that I think we will use.

A .java file consists of 1 or

more classes.

These classes are called top level

since they are not inside

any other classes.

Inside a class we can define methods, (nested) classes, and fields The later are often called data fields and sometimes called variables. I prefer to reserve the name variables for those declared inside methods.

class TopLevel1 {

public int pubField;

private int pvtField;

protected int proField;

int defField;

public class PubNestedClass {}

private class PriNestedClass {}

protected class ProNestedClass {}

class DefNestedClass {}

}

public class TopLevel2 {

public static void main (String[] arg) {}

private static void pvtMethod() {}

protected static void proMethod() {}

static void defMethod() {}

}

Top-level classes have two possible visibilities: public and package-private (the default when no visibility keyword is used). Each .java file must contain exactly one top-level public class, which corresponds to the name of the file

Methods, fields, and nested classes each have four possible visibilities: public, private, protected, and package-private (the default).

The two visibilities for top-level classes, plus the four visibilities for each of the three other possibilities give a total of 14 possible visibilities, all of which are illustrated on the right.

Question: What is the filename?

For now we will simplify the situation and write .java files as shown on the right.

// one package statement

// import statements

public class NameOfClass {

// private fields and

// public methods

}

An object is an instantiation of a class. Each object contains a copy of every instance (i.e., non-static) field in the class. Conceptually, each object also contains a copy of every instance method. (Some optimizations do occur to reduce the number of copies, but we will not be concerned with that.)

In contrast all the objects instantiated from the same class share class (i.e., static) fields and methods.

public class ReferenceVsValueSemantics {

public static void main (String[] args) {

int a1 = 1; C c1 = new C(1);

int a2 = 1; C c2 = new C(1);

a1 = a2; c1 = c2;

a1 = 5; c1.setX(5);

System.out.println(a2 + " " + c2.getX());

}

}

public class C {

private int x;

C (int x) { this.x = x; }

public int getX() { return x; }

public void setX(int x) { this.x = x; }

}

javac ReferenceVsValueSemantics.java C.java

java ReferenceVsValueSemantics

This is a huge deal in Java. It is needed to understand the behavior of objects and arrays. Unfortunately it can seem a little strange at first. Consider the program on the right, which is composed of two .java files.

Below the source files are the two commands I used to compile and run the program.

I formatted the main method to show that the a's and the c's are treated similarly.

Let's review this small example carefully as it illustrates a number of issues.

You should be absolutely sure you understand why the two values printed are not the same.

Start Lecture #2

Remark: If your last name begins with A-K your grader is Arunav Borthakur; L-Z have Prasad Kapde. This determines to whom you email your lab assignments

Remark: Chen has kindly created a wiki page on using WinSCP and Putty. See https://wikis.nyu.edu/display/~xc402/How+to+connect+to+NYU+i5+server.

Lab: Lab 1 parts 1 and 2 are assigned and are due 13 September 2012. Explain in class what is required.

In all Java systems, you run a program by invoking the

Java Virtual Machine

(aka JVM).

The primitive method to do this, which is the only one I use, is to

execute a program named java.

The argument to java is the name of the class file (without the .class extension) containing the main method. A class file is produced from a Java source file by the Java compiler javac. Each .java file is often called a compilation unit since each one is compiled separately.

If you are using an Integrated Development Environment

(IDE), you probably click on some buttons somewhere, but the result

is the same: The Java compiler javac is given each .java

file as a compilation unit and produces class files; the JVM

program java is run on the class file containing the main

method.

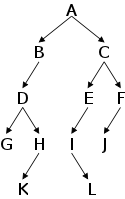

Inheritance was well covered in 101; here I add just a few comments.

public class D extends B {

...

}

biggerthan B objects.

biggerthan the set of C objects

Java (unlike C++) permits only what is called

single inheritance

.

That is, although a parent can have several children, a child can

have just one parent (a biologist would call this asexual

reproduction).

Single inheritance is simpler than multiple inheritance.

For one thing, single inheritance implies that the classes form a

tree, i.e., a strict hierarchy.

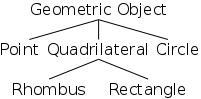

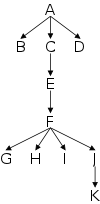

For example, on the right we see a hierarchy of geometric concepts.

Note that a point is a

geometric object and a rhombus

is a

quadrilateral.

Indeed, for every line in the diagram, the lower concept is a

upper concept.

This so-called ISA relation suggests that the lower concept should

be a class derived from the upper.

Indeed the handout shows classes with exactly these inheritances. The Java programs can be found here.

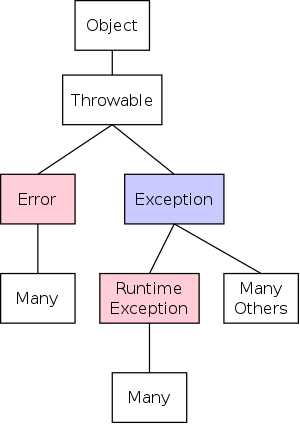

You might think that Java classes form a forest not a tree since you can write classes that are not derived from any other. One example would be ReferenceVsValueSemantics above. However, this belief is mistaken. Any class definition that does not contain the extends keyword, actually extends the built-in class object. Thus Java classes do indeed form a tree, with object as the root.

(I reordered the material in this section.)

Java permits grouping a number of classes together to for a package, which gives the following advantages.

package packageName;

The syntax of the package statement is trivial as shown on the right. The only detail is that this statement must be the first (nonblank, noncomment) line in the file.

import packageName.ClassName; import packageName.*

To access the contents of another package, you must either

import java.util.Scanner;

public class Test {

public static void main(String[] arg) {

int x;

Scanner getInput = new Scanner(System.in);

x = getInput.nextInt();

}

public class Test {

public static void main(String[] arg) {

int x;

java.util.Scanner getInput =

new java.util.Scanner(System.in);

x = getInput.nextInt();

}

}

For example, recall from 101 the important Scanner class used to read free-form input. This class is found in the package java.util.

To read an int, one creates a Scanner object (I normally call mine getInput) and then invokes the nextInt() method in this object.

The two examples on the right illustrate the first and third procedures mentioned above for accessing a package's contents. (For the second procedure, simply change java.util.Scanner to java.util.*.

Note that both the Scanner class and the nextInt() method have public visibility. How do I know this and where can you go to check up on me?

The key is to go to java.sun.com. This will send you to an Oracle site, but Java is a Sun product; Oracle simply bought Sun. You want Java SE-->documentation-->API

Let's check and see if I was right that Scanner and nextInt() are public.

Homework: How many methods are included in the Scanner class? How many of them are public?

The name of a package constrains where its files are placed in the filesystem. First, assume a package called packageName consisting of just one file ClassName.java (this file defines the public class ClassName). Although not strictly necessary, we will place this .java files in a directory called packageName.

javac packageName/ClassName.java

To compile this file we go to the parent directory of packageName and type the command on the right. (I use the Unix notation, / for directories; Windows uses \.)

Unlike the situation above, most packages have multiple .java files.

javac packageName/*.java

If the package packageName contains many .java files, we place them all in a directory also called packageName and compile them by going to the parent of packageName and executing the command on the right.

Just as the .java files for a package named packageName go in a directory named packageName, the .java files for a package named first.second go in the subdirectory second of a directory named first.

javac first/second/*.java

To compile these .java files, go to the parent of first and type the command on the right

javac first/second/third*.java

Similarly a package named first.second.third would be compiled by the command on the right.

The simplest situation is to leave the generated .class flies in the same directory as the corresponding .java files and execute the java command from the same directory that you executed the javac command, i.e., the parent directory.

I show several examples on the right.

java packageName/ClassName java testPackage/Test java bigPackage/M java first/second/third/M

The examples above assume that there is just one package, we keep the .class files in the source directory, and we only include classes from the Java library. All of these restrictions can be dropped by using the CLASSPATH environment variable.

public class Test {

public static void main (String[] args) {

C c = new C();

c.x = 1;

}

}

public class C {

public int x;

}

The basic question is

Where do the compiler and JVM look for .java

and .class files?

.

First consider the 2-file program on the right. You can actually compile this with a simple javac Test.java. How does the compiler find the definition of the class C?

The answer is that it looks in all .java files in the current directory. We use CLASSPATH if we want the compiler to look somewhere else.

Next consider the familiar line

include java.util.Scanner;

.

How does the compiler (and the JVM) find the definition of

the Scanner class?

Clearly java.util is a big clue, but where do they look

for java.util.

The answer is that they look in the system jar file

(whatever that is).

We use CLASSPATH if we want it to look somewhere else in

addition.

Many of the Java files used in the book are available on the web (see page 28 of the text for instructions on downloading). I copied the directory tree to /a/dale-datastructures/bookFiles on my laptop.

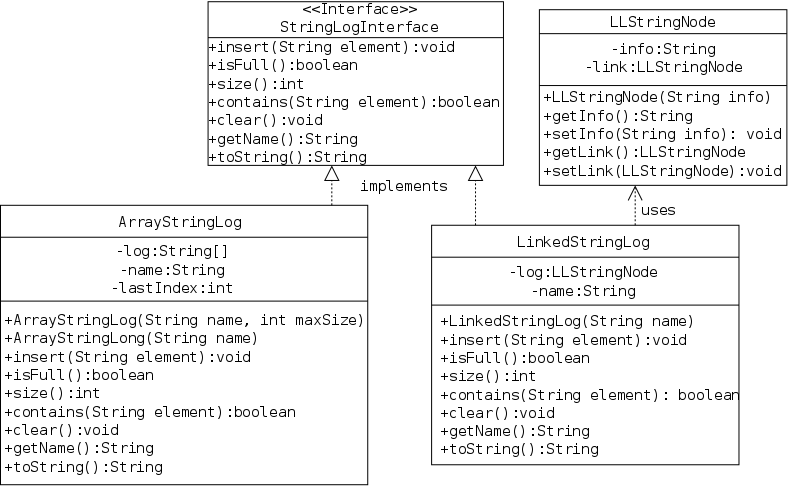

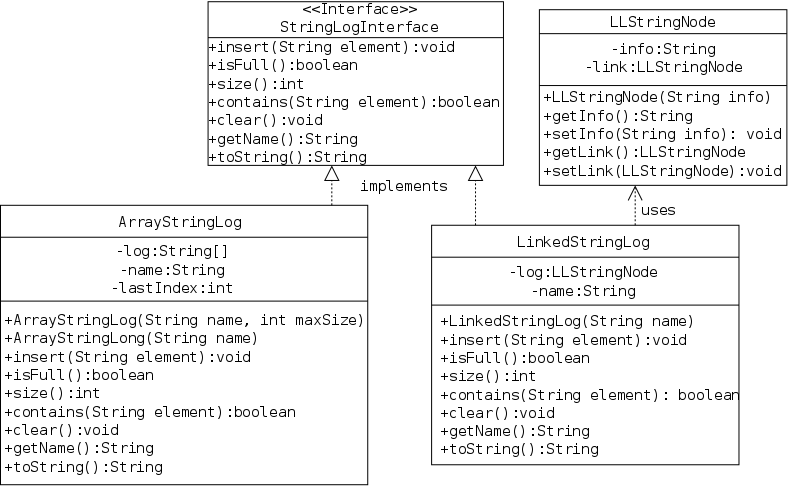

In the subdirectory ch02/stringLogs we find all the .java files for the package ch02.stringLogs.

One of these files is named LLStringNode.java and contains the definition of the corresponding class. Hopefully the name signifies that this class defines a node for a linked list of strings.

import ch02.stringLogs.LLStringNode;

public class DemoCLASSPATH {

public static void main (String[] args) {

LLStringNode lLSN =

new LLStringNode("Thank you CLASSPATH");

System.out.println(lLSN.getInfo());

}

}

I then went to directory java-progs not related to /a/dale-datastructures/bookFiles and wrote the simple program on the right.

A naive attempt to compile this with javac DemoCLASSPATH.java fails since it cannot find a the class LLStringNode in the subdirectory ch02/stringLogs (indeed java-progs has no such directory.

export CLASSPATH=/a/dale-datastructures/bookFiles:.

However once CLASSPATH was set correctly, the compilation succeeded. On my (gnu-linux) system the needed command is shown on the right.

Homework: 28. (When just a number is given it refers to the problems at the end of the chapter in the textbook.)

Data structures describe how data is organized.

Some structures give the physical organization, i.e., how the data is stored on the system. For example, is the next item on a list stored in the next higher address, or does the current item give the location explicitly, or is there some other way.

Other structures give the logical organization of the data. For example is the next item always larger than the current item? For another example, as you go through the items from the first, to the next, to the next, ..., to the last, are you accessing them in the order in which they were entered in the data structure?

Well studied in 101.

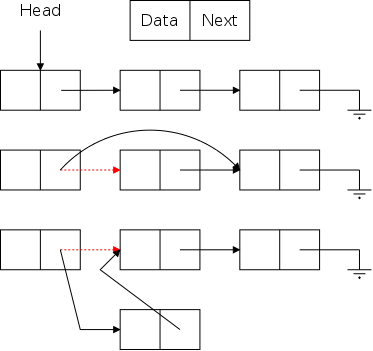

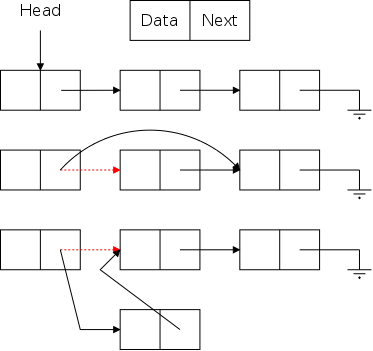

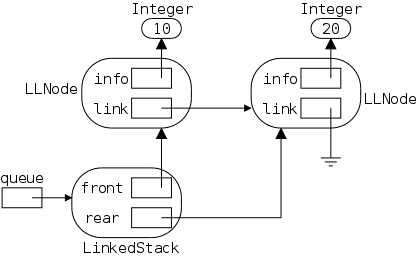

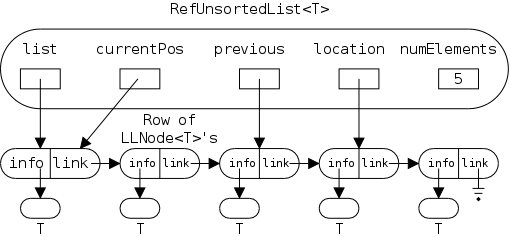

We will study linked implementations a great deal this semester. For now, we just comment on the simple diagram to the right.

groundsymbol from electrical engineering).

Start Lecture #3

Remark: Chen will have an office hour Wednesdays 5-6pm in CIWW 412.

For these structures, we don't describe how the items are stored, but instead which items can be directly accessed.

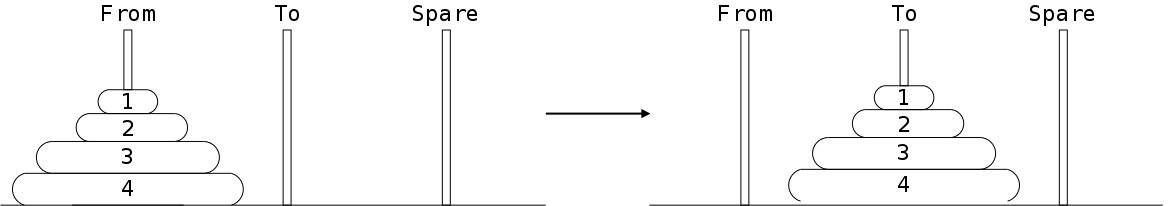

The defining characteristic of a stack is that you can access or remove only the remaining item that was most recently inserted. We say it has last-in, first-out (LIFO) semantics. A good real-world example is a stack of dishes, e.g., at the Hayden dining room.

In Java-speak

we say a stack object has three public

methods: top() (which returns the most recently inserted

item remaining on the list), pop() (which removes the

most recently inserted remaining item), and push() (which

inserts its argument on the top of the list).

Many authors define pop() to both return the top element and remove it from the stack. That is, many authors define pop() to be what we would call top();pop().

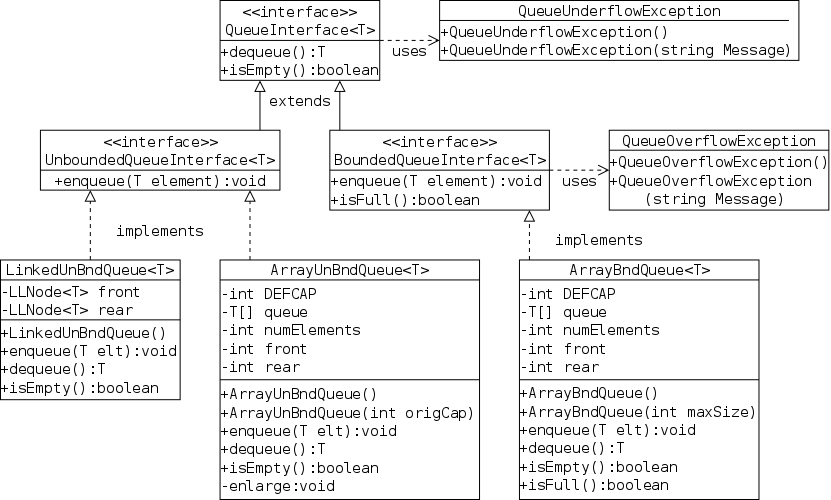

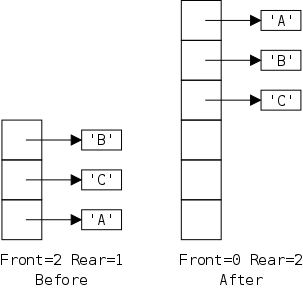

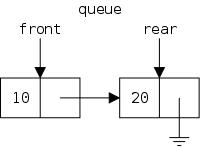

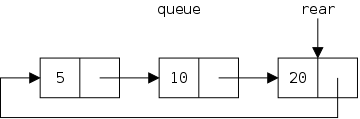

The defining characteristic of a queue is that you can remove only the remaining item that was least recently inserted. We say it has first-in, first-out (FIFO) semantics. A good example is an orderly line at the bank.

In java-speak, we have enqueue() (at the rear) and dequeue() (from the front). (We might also have accessors front(), and rear().

Homework: Customers at a bank with many tellers form a single queue. Customers at a supermarket with many cashiers form many queues. Which is better and why?

Here the first element is the smallest, each succeeding element is larger (or equal to) its predecessor, and hence the last element is the largest. It is also possible for the elements to be in the reverse order with the largest first and the smallest last.

One natural implementation of a sorted list is an array with the elements in order; another is a linked list, again with the elements in order. We shall learn other structures for sorted lists, some of which provide searching performance much higher than either an array or simple linked list.

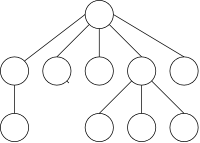

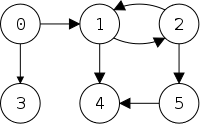

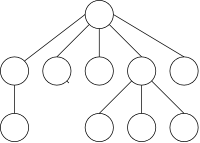

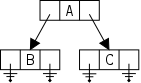

The structures we have discussed are one dimensional (except for higher-dimensional arrays). Each element except the first has a unique predecessor, each except the last has a unique successor, and every element can be reached by starting at one end and heading toward the other.

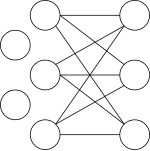

Trees, for example the one on the right, are different. There is no single successor; pictures of trees invariable are drawn in two dimensions, not just one.

Note that there is exactly one path between any two nodes.

Trees are a special case of graphs. In the latter, we drop the requirement of exactly one path between any two nodes. Some nodes may be disconnected from each other and others may have multiple paths between them.

A specific method for organization, either logically or physically.

A references is a pointer to (or the address of) an object; they are typically drawn as arrows.

public class ReferenceVsValueSemantics {

public static void main (String[] args) {

int a1 = 1; C c1 = new C(1);

int a2 = 1; C c2 = new C(1);

a1 = a2; c1 = c2;

a1 = 5; c1.setX(5);

System.out.println(a2 + " " + c2.getX());

}

}

public class C {

private int x;

C (int X) { this.x = x; }

public int getX() { return x; }

public void setX(int x) { this.x = x; }

}

javac ReferenceVsValueSemantics.java C.java

java ReferenceVsValueSemantics

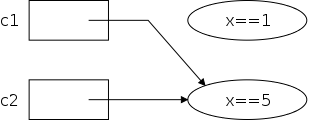

We covered this topic is section 1.3, but it is such a huge deal in Java that we will cover it again here (using the same example). Reference semantics determine the behavior of objects and arrays. Unfortunately these semantics can seem a little strange at first. Consider the program on the right, which is composed of two .java files.

Below the source files are the two commands I used to compile and run the program.

I formatted the main method to show that the a's and the c's are treated similarly.

Let's review this small example carefully as it illustrates a number of issues.

You should be absolutely sure you understand why the two values printed are not the same.

Continuing with the last example, we note that c1 and c2 refer to the same object. They are sometimes called aliases. As the example illustrated, aliases can be confusing; it is good to avoid them if possible.

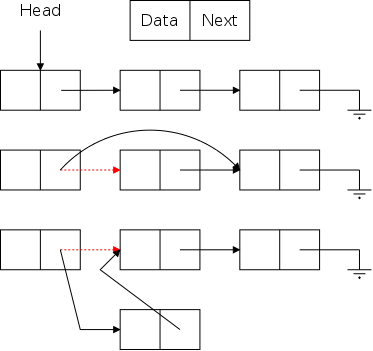

What about the top ellipse (i.e., object) in the previous diagram for which there is no longer a reference? Since it cannot be named, it is just wasted space that the programmer cannot reclaim. The technical term is that the object is garbage. However, there is no need for programmer action or worry, the JVM detects garbage and collects it automatically.

The technical terminology is that Java has a built-in garbage collector.

Thus Java, like most modern programming languages supports the run-time allocation and deallocation of memory space, the former is via new, the latter is performed automatically. We say that Java has dynamic memory management.

// Same class C as above

public class Example {

public static void main (String[] args) {

C c1 = new C(1);

C c2 = new C(2);

C c3 = c1;

for (int i=0; i<1000; i++) {

c3 = c2;

c3 = c1;

}

}

}

Execute the code on the right in class. Note that there are two objects (one with x==1 and one with x==2) and three references (the ones in c1, c2, and c3).

As execution proceeds the number of references to the object with x==1 changes from 1 to 2 to 1 to 2 ... . The same pattern occurs for the references to the other object.

Homework: Can you

write a program where the number of references to a fixed object

changes from 1 to 2 to 3 to 2 to 3 to 2 to 3 ... ?

Can you write a program where the number of references to a fixed

object changes from 1 to 0 to 1 to 0 to 1 ... ?

In Java, when you write r1==r2, where the r's are variables referring to objects, the references are compared, not the contents of the objects. Thus r1==r2 evaluates to true if and only if r1 and r2 are aliases.

We have seen equals() methods in 101 (and will see them again this semester). Some equals() methods, such as the one in the String class, do compare the contents of the referred to objects. Others, for example the one in the object class, act like == and compare the references.

First we need some, unfortunately not standardized, terminology. Assume in your algebra class the teacher defined a function f(x)=x+5 and then invoked it via f(12). What would she call x and what would she call 12. As mentioned above, usage differs.

I call x (the item in the callee's definition) a parameter and I call 12 (the item in the caller's invocation) an argument. I believe our text does as well.

Others refer to the item in the callee as the

formal parameter

and the item in the caller as the

actual parameter

.

Still others use argument

and parameter

interchangeably.

After settling on terminology, we need to understand Java's parameter passing semantics. Java always uses call-by-value semantics. This means.

public class DemoCBV {

public static void main (String[] args) {

int x = 1;

CBV cBV = new CBV(1);

System.out.printf("x=%d cBV.x=%d\n", x, cBV.x);

setXToTen(x, cBV);

System.out.printf("x=%d cBV.x=%d\n", x, cBV.x);

}

public static void setXToTen(int x, CBV cBV) {

x = 10; cBV.x = 10;

}

}

public class CBV {

public int x;

public CBV (int x) {

this.x = x;

}

}

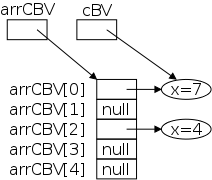

Be careful when the argument is a reference. Call-by-value still applies to the reference but don't mistakenly apply it to the object referred to. For example, be sure you understand the example on the right.

Well covered in 101.

There is nothing new to say: an array of objects is an array of

objects.

You must remember that arrays are references and objects are

references, so there can be two layers of arrows

.

Also remember that you will need a new to create the

array and another new to create each

object.

The diagram on the right shows the result of executing

cBV = new CBV(7); CBV[] arrCBV = new CBV[5]; arrCBV[0] = cBV; arrCBV[2] = new CBV(4);

Note that only two of the five array slots have references to objects; the other three are null.

Lab: Lab 1 part 3 is assigned and is due in 7 days.

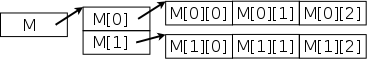

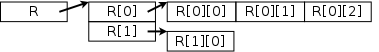

As discussed in 101, Java does not, strictly speaking, have 2-dimensional arrays (or 3D, etc). It has only 1D arrays. However each entry in an array can itself be an array

On the right we see a two dimensional array M with two rows and three columns that can be produced by executing

int[][] M; M = new int [2][3];

Note that M, as well as each M[i] is a reference. The latter are references to int's; whereas, the former is a reference to an array of int's.

2d-arrays such as M above in which all rows have the same length are often called matrices or rectangular arrays and are quite important.

Java however does support so-called ragged arrays

such as

R shown on the right.

That example can be produced by executing

int [][] R; R = new int [2][]; R[0] = new int [3]; R[1] = new int [1];

Homework: Write a Java program that creates a 400 by 500 matrix with entry i,j equal to i*j.

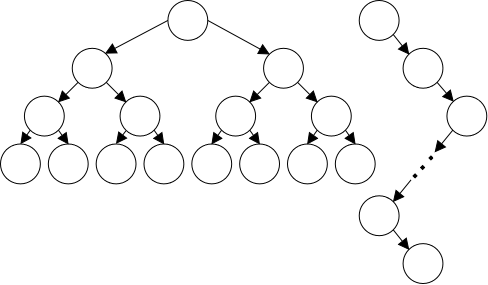

The idea is to approximate the time required to execute an algorithm (or a program implementing the algorithm) independent of the computer language (or the compiler / computer). Most importantly we want to understand how this time grows as the problem size grows.

For example, suppose you had an unordered list of N names and wanted to search for a name that happens not to be on the list.

You would have to test each of the names. Without knowing more, it is not possible to give the exact time required. It could be 3N+4 seconds or 15n+300 milliseconds, or many other possibilities.

But it cannot be 22 seconds or even 22log(N) seconds. You just can't do it that fast (for large N).

If we look at a specific algorithm, say the obvious check one entry at a time, we can see the time is not 5N2+2N+5 milliseconds. It isn't that slow (for large N).

Indeed that obvious algorithm takes AN+B seconds, we just don't know A and B.

We will crudely approximate the above analysis by saying the algorithm has (time) complexity (or takes time) O(N).

That last statement is really sloppy. We just did three analyses. First we found that the complexity was greater than 22log(N), and then we found that the complexity was less than 5N2+2N+5, and finally we asserted the complexity was AN+B. The big-Oh notation strictly speaking covers only the second (upper-bound) analysis; but is often used, e.g., by the authors, to cover all three.

The rest of this optional section is from a previous incarnation of 102, using a text by Weiss.

We want to capture the concept of comparing function growth where

we ignore additive and multiplicative constants.

For example we want to consider 4N2-500N+1 to

be equivalent

to 50N2+1000N and want to

consider either of them to be bigger than

1000N1.5log(N).

Definition: A function T() is said to be big-Oh of f() if there exists constants c and n0 such that T(N)≤cf(N) whenever N≥n0. We write T=O(f).

Definition: A function T() is said to be big-Omega of f() if f=O(T). We write T=Ω(f).

Definition: A function T() is said to be Theta of f() if T=O(f) and T=Ω(f). We write T=Θ(f).

Definition: A function T() is said to be little-Oh of f() if T=O(f) but T≠Θ(f). We write T=o(f).

Definition: A function T() is said to be little-Omega of f() if f=o(T). We write T=ω(f).

It is difficult to decide how much to emphasize algorithmic analysis, a rather mathematical subject. On the one hand

On the other hand

As a compromise, we will cover it, but only lightly (as does our text).

For the last few years we used a different text, with a more mathematical treatment. I have left that material at the end of these notes, but, naturally, it is not an official part of this semester's courses.

As mentioned above we will be using the big-O

(or big-Oh

) notation to indicate we are giving an

approximation valid for large N.

(I should mention that the O above is really the Greek Omicron.)

We will be claiming that, if N is large (enough), 9N30 is insignificant when compared to 2N.

Most calculators cannot handle really large numbers, which makes the above hard to demo.

import java.math.BigInteger;

public class DemoBigInteger {

public static void main (String[] args) {

System.out.println(

new BigInteger("5").multiply(

new BigInteger("2").pow(1000)));

}

}

Fortunately the Java library has a class BigInteger that allows arbitrarily large integers, but it is a little awkward to use. To print the exact result for 5*21000 you would write a program something like the one shown on the right.

To spare you the anxiety of wondering what is the actual answer, here it is (I added the line breaks manually).

53575430359313366047421252453000090528070240585276680372187519418517552556246806 12465991894078479290637973364587765734125935726428461570217992288787349287401967 28388741211549271053730253118557093897709107652323749179097063369938377958277197 30385314572855982388432710838302149158263121934186028340346880

Even more fortunately, my text editor has a built in infinite precision calculator so we can try out various examples. Note that ^ is used for exponentiation, B for logarithm (to any base), and the operator is placed after the operands, not in between.

Do the above example using emacs calc.

The following functions are ordered from smaller to larger

N-3 N-1 N-1/2 N0 N1/3 N1/2 N1 N3/2 N2 N3 ... N10 ... N100Three questions that remain (among many others) are

To answer the first question we will (somewhat informally) divide one function by the next. If the quotient tends to zero when N gets large, the second is significantly larger that the first. If the quotient tends to infinity when N gets large, the second is significantly smaller than the first.

Hence in the above list of powers of N, each entry is significantly larger than the preceding. Indeed, any change in exponent is significant.

The answer to the second question is that log(N) comes after N0 and before NP for every fixed positive P. We have not proved this.

The answer to the third question is that 2N comes after NP for every fixed P. (The 2 is not special. For every fixed Q>1, QN comes after every fixed NP.) Again, we have not proved this.

Do some examples on the board.

Read. The idea is to consider two methods to add 1+2+3+4+...+N

These have different big-Oh values.

So a better algorithm has better (i.e., smaller) complexity.

Imagine first that you want to calculate (0.3)100. The obvious solution requires 99 operations.

In general for a fixed b≠0, raising bN using the natural algorithm requires N-1=O(N) operations.

I describe the algorithm for the special case of .3100 but it works in general for bN. The basic idea is to write 100 in binary and only use those powers. On the right is an illustration.

1 2 4 8 16 32 64 128

0 0 1 0 0 1 1 0

.3 .32 .34 .38 .316 .332 .364

.32 × .332 × .364

Since there were just 4 large steps, each of which has complexity O(log(N)), so does the entire algorithm.

Homework: The example above used N=100. The natural algorithm would do 99 multiplications, the better algorithm does 8. Assume N≤1,000,000. What is the maximum number of multiplications that the natural algorithm would perform? What is the maximum number of multiplications that the better algorithm would perform?

Start Lecture #4

Assume the phone book has N entries.

If you are lucky, the first entry succeeds, this takes O(1) time.

If you are unlucky, you need the last entry or, even worse, no entry works. This takes O(N) time.

Assuming the entry is there, on average it will be in the middle. This also takes O(N) time.

From a big-Oh viewpoint, the best case is much better than the average, but the worst case is the same as the average.

Lab: Lab 1 part 4 is assigned and is due in 7 days.

The above is kinda-sorta OK. When we do binary search for real will get the details right. In particular we will worry about the case where the sought for item is not present.

This algorithm is a big improvement. The best case is still O(1), but the worst and average cases are O(log(N)).

BUT binary search requires that the list is sorted. So either we need to learn how to sort (and figure out its complexity) or learn how to add items to a sorted list while keeping it sorted (and figure out how much this costs.

This are not trivial questions; we will devote real effort on their solutions.

Read

public class Quadrilateral extends GeometricObject {

...

public Quadrilateral (Point p1, Point p2,

Point p3, Point P4)

public double area() {...}

}

Definition: An Abstract Data Type is a data type whose properties are specified but not their implementations. For example, we know from the abstract specification on the right that a Quadrilateral is a Geometric Object, that it is determined by 4 points, and that its area can be determined with no additional information.

The idea is that the implementor of a modules does not reveal all the details to the user. This has advantages for both sides.

In 201 you will learn how integers are stored on modern computers (twos compliment), how they were stored on the CDC 6600 (ones complement), and perhaps packed decimal (one of the ways they are stored on IBM mainframes). For many applications, however, the implementations are unimportant. All that is needed is that the values are mathematical integers.

For any of the implementations, 3*7=21, x+(y+z)=(x+y)+z (ignoring overflows), etc. That is, to write many integer-based programs we need just the logical properties of integers and not their implementation. Separating the properties from the implementation is called data abstraction.

Consider the BigDecimal class in the Java library that we mentioned previously (it includes unbounded integers). There are three perspectives or levels at which we can study this library.

Preconditions are requirements placed on the user of a method in order to ensure that the method behaves properly. A precondition for ordinary integer divide is that the divisor is not zero.

A postcondition is a requirement on the implementation. If the preconditions are satisfied, then upon method return the postcondition will be satisfied.

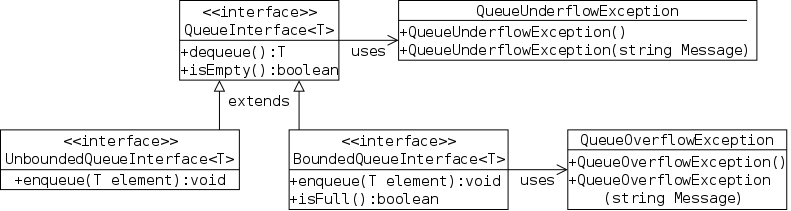

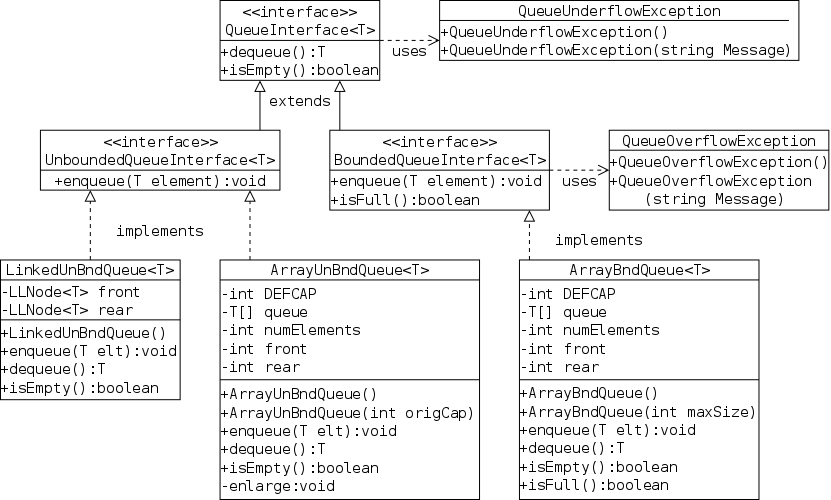

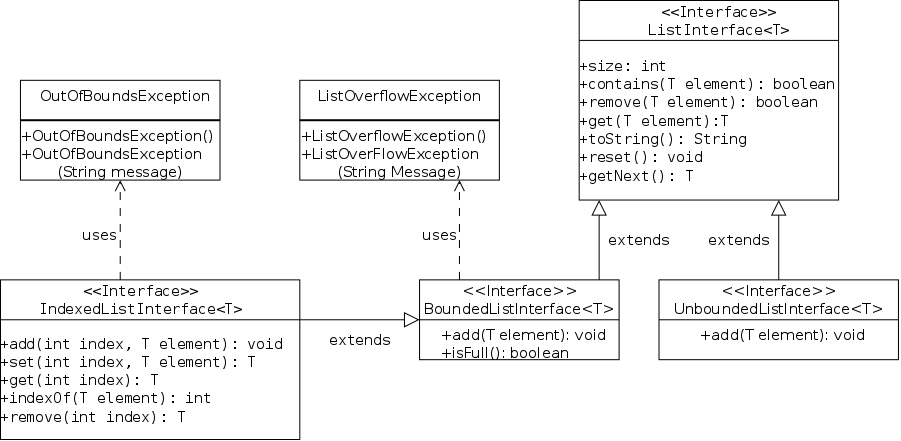

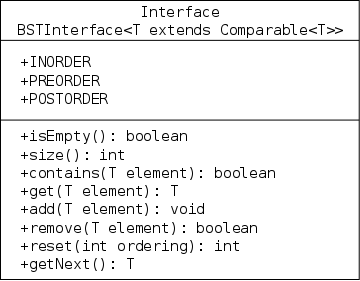

This is Java's way of specifying an ADT. An interface is a class with two important restrictions.

The implied keywords public static final and public abstract can be (and typically are) omitted.

A method without a body is specified at the user level, it tells you what arguments you must supply and the type of the value returned. It does not tell you how any properties of the return value or any side effects of the method (e.g., values printed).

Each interface used must be implemented by one or more real classes that have non-abstract versions of all the methods.

The next two sections give examples of interfaces and their implementing classes. You can also read the FigureGeometry interface in this section.

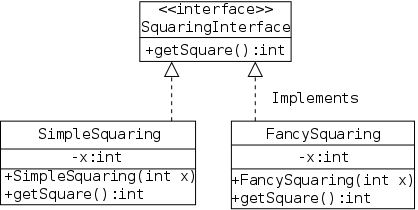

As a warm up for the book's Stringlog interface, I present an ADT and two implementations of the trivial operation of squaring an integer. I even threw in a package. All in all it is way overblown. The purpose of this section is to illustrates the Java concepts in an example where the actual substance is trivial and the concepts are essentially naked.

There are four .java files involved as shown on the right and listed below. The first three constitute the squaring package and are placed in a directory with the same name (standard for packages).

package squaring;

public interface SquaringInterface {

int getSquare();

}

package squaring;

public class SimpleSquaring implements SquaringInterface {

private int x;

public SimpleSquaring(int x) { this.x = x; }

public int getSquare() { return x*x; }

}

package squaring;

public class FancySquaring implements SquaringInterface {

private int x;

public FancySquaring(int x) { this.x = x; }

public int getSquare() {

int y = x + 55;

return (x+y)*(x-y)+(y*y);

}

}

import squaring.*;

public class DemoSquaring {

public static void main(String[] args) {

SquaringInterface x1 = new SimpleSquaring(5);

SquaringInterface x2 = new FancySquaring(6);

System.out.printf("(x1.x)*(x1.x)=%d (x2.x)(x2.x)=%d\n",

x1.getSquare(), x2.getSquare());

}

}

The first file is the ADT. The only operation supported is to compute and return the square of the integer field of the class. Note that the (non-constant) data field is not present in the ADT and the (abstract) operation has no body.

The second file is the natural implementation, the square is computed by multiplying. Included is a standard constructor to create the object and set the field.

The third file computes the square in a silly way (recall that (x+y)*(x-y)=x*x-y*y). The key point is that from a client's view, the two implementations are equivalent (ignoring performance).

The fourth file is probably the most interesting. It is located in the parent directory. Both variables have declared type SquaringInterface. However, they have actual types SimpleSquaring and FancySquaring respectively.

A Java interface is not a real class so no object can have actual type SquaringInterface (only a real class can follow new). Since the getSquare() in each real class overrides (not overloads) the one in the interface, the actual type is used to choose which one to invoke. Hence x1.getSquare() invokes the getSquare() in SimpleSquaring; whereas x2.getSquare() invokes the getSquare() is FancySquaring().

UML is a standardize way of summarizing

classes and

interfaces.

For example, the 3 classes/interfaces for in the squaring

package would be written as shown on the right.

The commands in this section are run from the parent directory of squaring. Note that the name of this parent is not important. What is important is that the name of the squaring must agree with the package name.

javac squaring/*.java javac DemoSquaring.java java DemoSquaring

It would be perfectly fine to execute the three lines on the right, first compiling the package and then compiling and running the demo.

However, the first line is not necessary. The import statement in DemoSquaring.java tells the compiler that all the classes in the squaring package are needed. As a result the compiler looks in subdirectory squaring and compiles all the classes.

Homework: 9, 10.

In contrast to the above, we now consider an example of a useful package.

The book's stringLogs package gives the specification and two implementations of an ADT for keeping logs of strings. A log has two main operations, you can insert a string into the string and ask if a given string is already present in the log.

Homework: If you haven't already done so, download the book's programs onto your computer. It can be found at http://samples.jbpub.com/9781449613549/bookFiles.zip.

Unzip the file and you will have a directory hierarchy starting at XXX/bookFiles, where XXX is the absolute name (i.e. starting with / or \) of the directory at which you executed the unpack. Then set CLASSPATH via

As mention previously much of the book's code can be downloaded onto your system. In my case I put it in the directory /a/dale-dataStructures/bookFiles. Once again the name of this directory is not important, but the names of all subdirectories and files within must be kept as is to agree with the usage in the programs

import ch02.stringLogs.*

public class DemoStringLogs {

}

To prepare for the serious work to follow I went to a scratch directory and compiled the trial stub for a stringLog demo shown on the right. The compilation failed complaining that there is no ch02 since DemoStringLogs.java is not in the parent directory of ch02.

Since I want to keep my code separate from the book's, I do not want my demo in /a/dale-dataStructures/BookFiles. Instead I need to set CLASSPATH.

export CLASSPATH=/a/dale-dataStructures/bookFiles:.

The command on the right is appropriate for my gnu/linux system and (I believe) would be the same on MacOS. Windows users should see the book. This will be demoed in the recitation.

Now javac DemoStringLogs.java compiles as expected.

StringLog(String name);

Since a user might have several logs in their program, it is useful for each log to have a name. So we will want a constructor something like that on the right.

For some implementations there is a maximum number of entries possible in the log. (We will give two implementations, one with and one without this limit.)

StringLog(String name, int maxEntries);

If we have a limit, we might want the user to specify its value with a constructor like the one on the right.

nicely formattedversion of the log.

Here is the code downloaded from the text web site. One goal of an interface is that a client who reads the interface can successfully use the package.

That is an ambitious goal; normally more documentation is needed.

How about the questions I raised in the previous section?

import ch02.stringLogs.*;

public class DemoStringLogs {

public static void main (String[] args) {

StringLogInterface demo1;

demo1 = new ArrayStringLog ("Demo #1");

demo1.insert("entry 1");

demo1.insert("entry 1"); // ??

System.out.println ("The contains() " +

"method is case " +

(demo1.contains("Entry 1") ? "in" : "")

+ "sensitive.");

System.out.println(demo1);

}

}

The contains() method is case insensitive.

Log: Demo #1

1. entry 1

2. entry 1

On the right we have added some actual code to the demo stub above. As is often the case we used test cases to find out what the implementation actually does. In particular, by compiling (javac) and running (java) DemoStringLogs we learn.

conditional expression(borrowed from the C language).

nicely formattedoutput of the toString() method.

Homework: 15.

Homework: Recall that a stringlog can contain multiple copies of the same string. Describe another method that would be useful if multiple strings do exist.

Start Lecture #5

Now it is time to get to work and implement StringLog.

package ch02.stringLogs;

public class ArrayStringLog

implements StringLogInterface {

...

}

In this section we use a simple array based technique in which a StringLog is basically an array of strings. We call this class ArrayStringLog to emphasize that it is array based and to distinguish it from the linked-list-based implementation later in the chapter.

private String[] log; private int lastIndex = -1; private String name;

Each StringLog object will contain the three instance fields on the right: log is the basic data structure, an array of strings with each array entry containing one log entry. name is the name of the log and lastIndex is is the last index (i.e., largest) into which an item has been placed.

The book uses protected (not private) visibility. For simplicity I will keep to the principal that fields are private and methods public until we need something else.

public ArrayStringLog(String name,

int maxSize) {

this.name = name;

log = new String[maxSize];

}

public ArrayStringLog(String name) {

super(name, 100); // 100 arbitrary

}

We must have a constructor

Why?

That is, why isn't the default constructor adequate?

Answer: Look at the fields, we need to set name and

create the actual array.

Clearly the user must supply the name, the size of the array can either be user-supplied or set to a default. This gives rise to the two constructors on the right.

Although the constructor executes only two Java statements, its complexity is O(N) (not simply O(1). This is because each string in the array is automatically initialized to the empty string. You can see this if you read the String constructor.

Both constructors have the same name, but their signatures (name plus parameter types) are different so Java can distinguish them.

public void insert (String s) {

log[++lastIndex] = s;

}

This mutator executes just one simple statement so is O(1). Inserting an element into the log is trivial because.

public void clear() {

for(int i=0; i<=lastIndex; i++)

log(i) = null;

lastIndex = -1;

}

To empty a log we just need to reset lastIndex.

Why?

If so, why the loop?

public boolean isFull() {

return lastIndex == log.length-1;

}

public String size() {

return lastIndex+1;

}

public String getName() {

return name;

}

The isFull() accessor is trivial (O(1)), but easy to get wrong by forgetting the -1. Just remember that in this implementation the index stored is the last one used not the next one to use and all arrays start at index 0.

For the same reason size() is lastIndex+1. It is also clearly O(1)

The accessor getName() illustrates why accessor methods

are sometimes called getters.

So that users cannot alter fields

behind the implementor's back

, the fields are normally

private and the implementor supplies a getter.

This practice essentially gives the user read-only access to the

fields.

public boolean contains (String str) {

for (int i=0; i<=lastIndex; i++)

if (str.equalsIgnoreCase(log(i))

return true;

return false;

}

The contains() method loops through the log looking for the given string. Fortunately, the String class includes an equalsIgnoreCase() so case insensitive searching is no extra effort.

Since the program loops through all N entries it must take time that grows with N. Also each execution of equalsIgnoreCase() can take time that grows with the length of the entry. So the complexity is more complicated and not just O(1) or O(N)

public String toString() {

String ans = "Log: " + name + "\n\n";

for (int i=0; i<=lastIndex; i++)

ans = ans + (i+1) + ". " + log[i] + "\n";

return ans;

}

The toString() accessor is easy but tedious.

It's specification is to return a nicely formatted

version of

the log.

We need to produce the name of the log and each of its entries.

Different programmers would often produce different programs since

what is nicely formatted

for one, might not be nicely

formatted for another.

The code on the right (essentially straight from the book) is

one reasonable implementation.

With such a simple implementation, the bugs should be few and shallow (i.e., easy to find). Also we see that the methods are all fast except for contains() and toString(). All in all it looks pretty good, but ...

Conclusion: We need to learn about linked lists.

Homework: 20, 21.

Read.

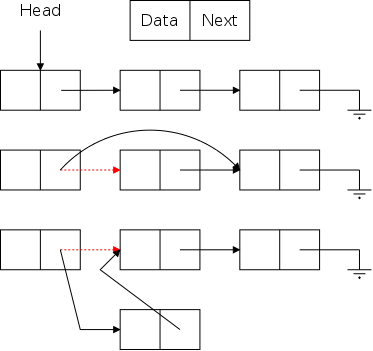

As noted in section 1.5, arrays are accessed via an index, a value that is not stored with the array. For a linked representation, each entry (except the last) contains a reference or pointer to the next entry. The last entry contains a special reference, null.

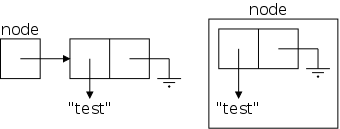

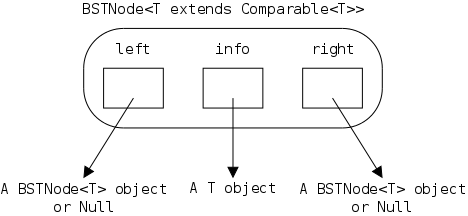

Before we can construct a linked version of a stringlog, we need to define a node, one of the horizontal boxes (composed of two squares) on the right.

The first square Data is application dependent.

For a stringlog, it would be a string, but in general it could be

arbitrarily complex.

However, it need not be arbitrarily complex.

Why?

The Next entry characterizes a linked list; it is a pointer to another node. Note that I am not saying one Node contains another Node, rather that one node contains a pointer to (or a reference to) another node.

As an analogy consider a treasure hunt

game where you are

given an initial clue, a location (e.g., on top of the basement TV).

At this location, you find another clue, ... , until the last clue

points you at the final target.

It is not true that the first clue contains the second, just that the first clue points to the second.

public class LLStringNode {

private String info;

private LLStringNode link;

}

Despite what was just said, the Java code on the right makes it

look as if a node does contain another node.

This confusion is caused by our friend reference semantics

.

Since LLStringNode is a class (and not a

primitive type), a variable of that type, such as link

shown on the right, is a reference, i.e., a pointer

(link corresponds to the Next box above;

info corresponds to Data).

The previous paragraph is important. It is a key to understanding linked data structures. Be sure you understand it.

public LLStringNode(String info) {

this.info = info;

link = null;

}

The LLStringNode constructor simply initializes the two fields. We set link=null to indicate that this node does not have a successor.

Forgive me for harping so often on the same point, but please be sure you understand why the constructor above, when invoked via the Java statement

node = new LLStringNode("test");

gives rises to the situation depicted on the near right and not the situation depicted on the far right.

Recall from 101 that all references are the same size. My house in the suburbs is much bigger than the apartment I had in Washington Square Village, but their addresses are (about) the same size.

Now let's consider a slightly larger example, 3 nodes. We execute the Java statements

node1 = LLStringNode("test1");

node2 = LLStringNode("test2");

node3 = LLStringNode("test3");

node3.setLink(node2);

node2.setLink(node1);

setLink() is the usual mutator that sets

the link field of a node.

Similarly we define setInfo(), getLink(),

and getInfo().

Homework: 41, 42.

Remark: In 2012-12 fall, this was assigned next lecture.

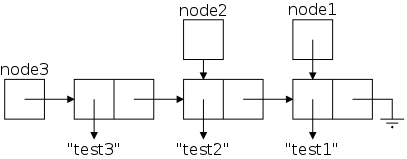

From the diagram on the right, which we have seen twice before, the Java code for simple linked list operations is quite easy.

The usual terminology is that you traverse

a

list, visiting

each node in order of its occurrence on the

list.

We need to be given a reference to the start of the list (Head in

the diagram) and need an algorithm to know when we have reached the

end of the list (the link component is null).

As the traversal proceeds each node is visited

.

The visit program is unaware of the list; it processes the Data

components only (called info in the code).

public static void traverse(LLStringNode node) {

while (node != null) {

visit(node.getInfo());

node = node.getLink();

}

}

Note that this works fine if the argument is null, signifying a list of length zero (i.e., an empty list).

Note also that you can traverse() a list starting at any node.

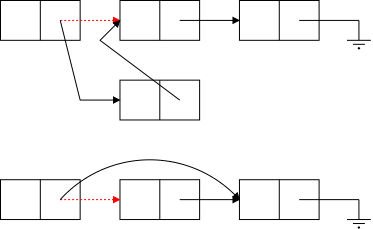

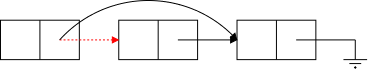

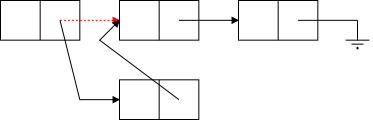

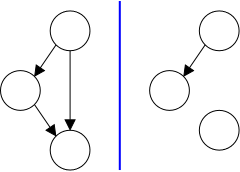

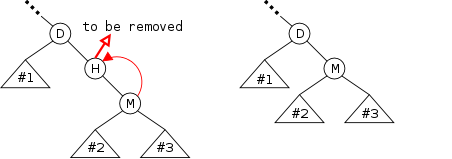

The bottom row of the diagram show the desired result of inserting an node after the first node. (The middle row shows deletion.) Two references are added and one (in red) is removed (actually replaced).

The key point is that to insert a node you need the red reference as well as a reference to the new node. In the picture the red reference is the Next field of a node. Another possibility is that it is an external reference to the first node (e.g. Head in the diagram). A third possibility is that the red reference is the null in the link field of the last node. Yet a fourth possibility is that the list is currently empty and the red reference is a null in Head.

However in all these cases the same two operations are needed (in this order).

We will see the Java code shortly.

Mosts list support deletion. However our stringlog example does not, perhaps because logs normally do not (or perhaps because it is awkward for an array implementation).

The middle row in the diagram illustrates deletion for (singly) linked lists. Again there are several possible cases (but the list cannot be empty) and again there is one procedure that works for all cases.

You are given the red reference, which points to the node to be deleted and you set it to the reference contained in the next field of the node to which it was originally pointing.

(For safety, you might want to set the original next field to null).

General lists support traversal as well as arbitrary insertions and deletions. For stacks, queues, and stringlogs only a subset of the operations are supported as shown in the following table. The question marks will be explaned in the next section.

| Insertion | Deletion | ||||||

|---|---|---|---|---|---|---|---|

| Structure | Traversal | Beginning | Middle | End | Beginning | Middle | End |

| Stringlog | XX | ?? | ?? | ||||

| Stack | XX | XX | |||||

| Queue | XX | XX | |||||

| General List | XX | XX | XX | XX | XX | XX | XX |

package ch02.stringLogs;

public class LinkedStringLog

implements StringLogInterface { ... }

This new class LinkedStringLog implements the same ADT as did ArrayStringLog and hence its header line looks almost the same.

private LLStringNode log; private String name;

We again have a name for each stringlog, but the first field looks

weird.

Why do we have a single node associated with each log?

It seems that all questions have the same answer:

reference semantics

.

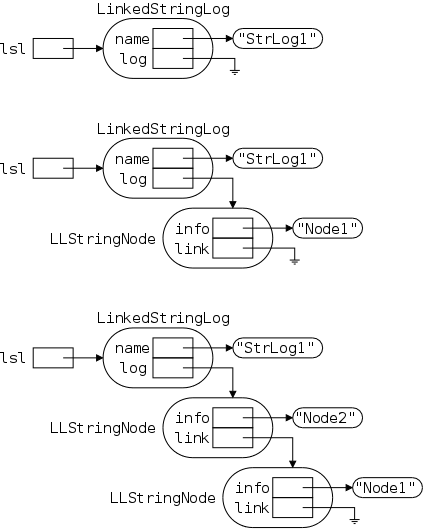

An LLStringNode is not a node but a reference or pointer to a node. Referring to the pictures in the previous section, the log field corresponds to head in the picture and not to one of the horizontal boxes containing data and next components. If the declaration said LLStringNode log new LLStringNode(...), then log would still not be a horizontal box but would point to one. As written the declaration yields an uninitialized data field.

public LinkedStringLog(String name) {

this.name = name;

log = null;

}

An advantage of linked over array based implementations is that there is no need to specify (or have a default for) the maximum size. The linked structure grows as needed. This observation explains why there is no size parameter in the constructor.

As far as I can tell the log=null; statement could have be omitted if the initialization was specified in the declaration.

There are many names involved so a detailed picture may well prove helpful. Note that the rounded boxes (let's hope apple doesn't have a patent on this as well) are actual objects; whereas references are drawn as rectangular boxes. There are no primitive types in the picture.

Note also that all the references are the same size; but not all the objects. The names next to (not inside) an object give the type (i.e., class) of the object. To prevent clutter I did not write String next to the string objects, believing that the "" makes the type clear.

The first frame shows the result of executing

LinkedStringLog lsl =

new LinkedStringLog("StrLog1");

Let's go over this first frame and see that we understand every detail in it.

The other two frames show the result after executing

lst.insert ("Node1");

lst.insert ("Node2");

We will discuss them in a few minutes. First, we will introduce insert() with our familiar, much less detailed, picture and present the Java commands that do the work.

Note that the size of a LinkedStringLog and of an LLStringNode look the same in the diagram because they both contain two fields, each of which is a reference (and all references are the same size). This is just a coincidence. Some objects contain more references than do others; some objects contain values of primitive types; and we have not shown the methods associated with types.

An interesting question arises here that often occurs.

A liberal interpretation of the word log

permits a more

efficient implementation than would be possible for a stricter

interpretation.

Specifically does the term log imply that new entries are inserted

at the end of the log?

I think of logs as structures that are append only, but that was never stated in the ADT. For an array-based implementation, insertion at the end is definitely easier, but for linked, insertion at the beginning is easier.

However, the idea of a beginning and an end really doesn't apply to the log. Instead, the beginning and end are defined by the linked implementation. As far as the log is concerned, we can call either side of the picture the beginning.

The bottom row of the picture on the right shows the result of inserting a new element after the first existing element. For stringlogs we want to insert at the beginning of the linked list, so the red reference is the value of Head in the picture or log in the Java code.

public void insert(String element) {

LLStringNode node = new LLStringNOde(element);

node.setLink(log);

log = node;

}

The code for insert() is very short, but does deserve

study.

The first point to make is that we are inserting at the beginning of

the linked list so we need a pointer to

the node before the first node

.

The stringlog itself serves as a surrogate for this pre-first

node.

Let's study the code using the detailed picture in the

An Example

section above.

public void clear() {

log = null;

}

The clear() method simply sets the log back to initial state, with the node pointer null.

Start Lecture #6

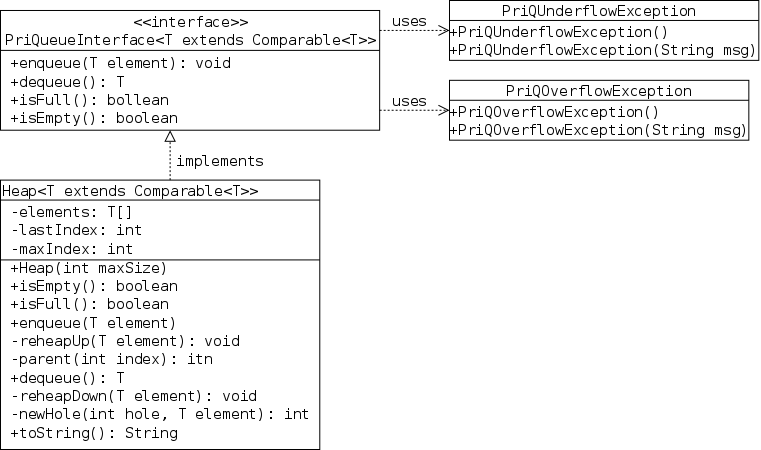

Remarks: I added a uses

arrow to the

diagram in section 2.B.

Redo insert using the new detailed diagram.

Homework: 41, 42.

Remark: This homework should have been assigned last lecture.

Lab: 2 assigned. It is due 2 October 2012 and includes an honors supplement.

public boolean isFull() {

return false;

}

With a linked implementation, no list can be full.

Recall that the user may not know the implementation chosen or may wish to try both linked- and array- based stringlogs. Hence we supply isFull() even though it may never be used and will always return false.

public String getName() {

return name;

}

getName() is identical for both the linked- and array-based implementations.

public int size() {

int count = 0;

LLStringNode node = log;

while (node != null) {

count ++;

node = node.getLink();

}

return count;

}

The size() method is harder for this linked representation than for out array-based implementation since we do not keep an internal record of the size and must traverse the log as shown on the right

An alternate implementation would be to maintain a count field and have insert() increment count by 1 each time.

One might think that the alternate implementation is better for the following reason. Currently insert() is O(1) and size() is O(N). For the alternative implementation both are O(1). However, it is not that simple, the number of items that size() must count is the number of inserts() that have been done, so there could be N insert()'s for one size().

A proper analysis is more subtle.

public String toString() {

String ans = "Log: " + name + "\n\n";

int count = 0;

for (LLStringNode node=log; node!=null; node=node.getLink())

ans = ans + (++count) + ". " + node.getInfo() + "\n";

return ans;

}

The essence of toString() is the same as for the array-based implementation. However, the loop control is different. The controlling variable is a reference, it is advanced by getLink() and termination is a test for null.

public boolean contains(String str) {

for (LLStringNode node=log; node!=null; node=node.getLink())

if (str.equalsIgnoreCase(node.getInfo()))

return true;

return false;

}

Again the essence is the same as for the array-based implementation, but the loop control is different. Note that contains() and toString() have the same loop control. Indeed, advancing by following the link reference until it is null is quite common for any kind of linked list.

The while version of this loop control was used in size().

Homework: 44, 47, 48.

Read

Read

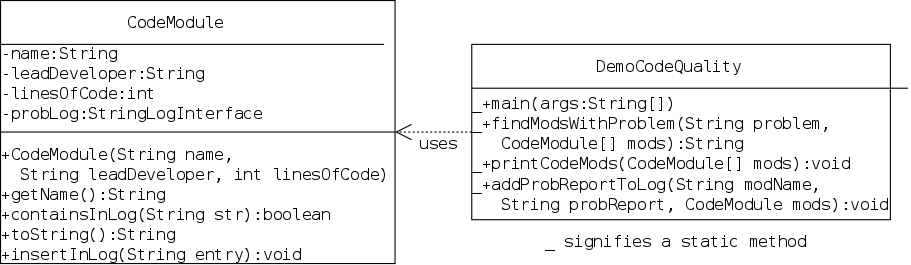

A group is developing a software system that is comprised of several modules, each with a lead developer. They collect the error messages that are generated during each of their daily builds. Then they wish to know, for specified error messages, which modules encountered these errors.

I wrote a CodeModule class that contains the data and methods for one code module, including a stringlog of its problem reports. This class contains

This is not a great example since stringlogs are not a perfect fit. I would think that you would want to give a key word such as documentation and find matches for documentation missing and inadequate documentation . However, stringlogs only check for (case-insensitive) complete matches.

The program reads three text files.

Let's look at the UML and sketch out how the code should be constructed. Hand out the UML for stringlogs. The demo class could be written many ways, but we should pretty much all agree on CodeModule.java.

The Java programs, as well as the data files, can be found here.

Note that program is a package named codeQuality. Hence it must live in a directory also named codeQuality. To compile and run the program, I would be in the parent directory and execute.

export CLASSPATH=/a/dale-dataStructures/bookFiles:. javac codeQuality/DemoCodeQuality.java java codeQuality/DemoCodeQuality

You would do the same but the replace /a/dale-dataStructures/BookFiles by wherever you downloaded the book's packages.

It is important to distinguish the user's and implementers view of data structures. The user just needs to understand how to use the structure; whereas, the implementer must write the actual code. Java has facilities (classes/interfaces) that make this explicit.

Start Lecture #7

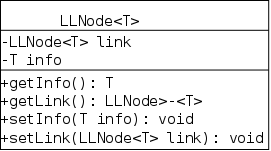

We will now learn our first general-purpose data structure, taking a side trip to review some more Java.

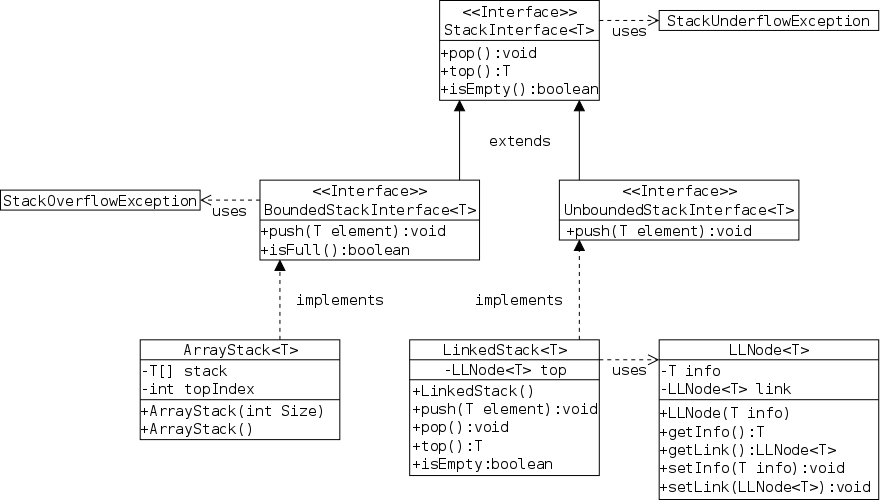

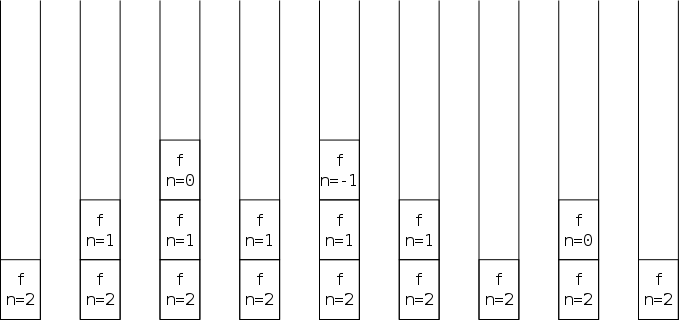

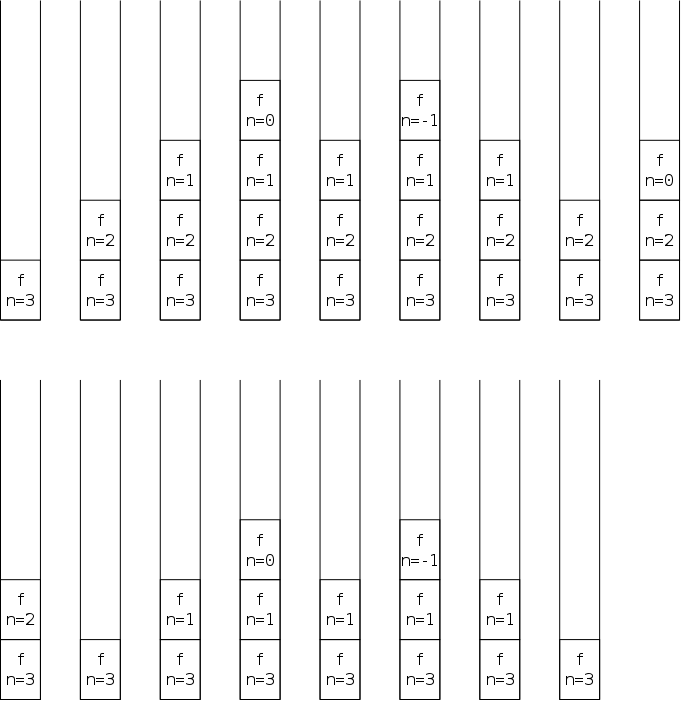

Definition: A stack is a data structure from which one can remove or retrieve only the element most recently inserted.

This requirement that removals are restricted to the most recent insertion implies that stacks have the well-known LIFO (last-in, first-out) property.

In accordance with the definition the only methods defined for stacks are push(), which inserts an element, pop(), which removes the most recently pushed element still on the stack, and top(), which retrieves (but does not remove) the most recently pushed element still on the stack.

An alternate approach is to have pop() retrieve as well as remove the element.

Stacks are a commonly used structure, especially when working with data that can be nested. For example consider evaluating the following postfix expression (or its infix equivalent immediately below).

22 3 + 8 * 2 3 4 * - - ((22 + 3) * 8) - (2 - 3 * 4))

A general solution for infix expressions would be challenging for us, but for postfix it is easy!

Homework: 1, 2

In Java a collection represents a group of objects, which are called the collections's elements. A collection is a very general concept that can be refined in several ways. We shall see several such refinements.

For example, some collections permit duplicates; whereas others do not. Some collections, for example, stacks and queues, restrict where insertions and deletions can occur. In contrast other collections are general lists, which support efficient insertion/deletion at arbitrary points.

Last semester I presented a sketch of these concepts as an optional topic. You might wish to read it here.

Our ArrayStringLog class supports a collection of Strings. It would be easy, but tedious, to produce a new class called ArrayIntegerLog that supports a collection of Integers. It would then again be easy, but now annoying, to produce a third class ArrayCircleLog, and then ArrayRectangleLog, and then ... .

If we needed logs of Strings, and logs of Circles, and logs of Integers, and logs of Rectangles, we could cut the ArrayStringlog class, paste it four times and do some global replacements to produce the four needed classes. But there must be a better way!

In addition this procedure would not work for a single heterogeneous log that is to contain Strings, Circles, Integers, and Rectangles.

public class T {

...

}

public class S extends T {

...

}

public class Main {

public static void main(String[] args) {

T t;

S s;

...

t = s;

}

}

Recall from 101 that a variable of type T can be assigned a value from any class S that is a subtype of T.

For example, consider three files T.java, S.java, and Main.java as shown on the right. We know that the final assignment statement t=s; is legal; whereas, the reverse assignment s=t; may be invalid;

When applied to our ArrayStringLog example, we see that, if we developed an ArrayObjectLog class (each instance being a log of Java objects) and constructed objLog as an ArrayObjectLog, then objLog could contain three Strings, or it could contain two Circles, or it could contain four Doubles, or it could contain all 9 of these objects at the same time.

For this heterogeneous example where a single log is to contain items of many different types, ArrayObjectLog is perfect.

As we just showed, an ArrayObjectLog can be used for either homogeneous logs (of any type) or for heterogeneous logs. I asserted it is perfect for the latter, but (suspiciously?) did not comment on its desirability for the former.

To use ArrayObjLog as the illustrative example, I must extend it a little. Recall that all these log classes have an insert() method that adds an item to the log.. Pretend that we have augmented the classes to include a retrieve() method that returns some item in the log (ignore the possibility that the log might be empty). So the ArrayStringLog method retrieve() returns a String, the ArrayIntegerLog method retrieve() returns an Integer, the ArrayObjectLog method retrieve() returns an Object, etc.

Recall the setting. We wish to obviate the need for all these log classes except for ObjectLog, which should be capable of substituting for any of them. The code on the right uses an ObjectLog to substitute for an IntegerLog.

ArrayObjectLog integerLog =

new ArrayObjectLog("Perfect?");

Integer i1=5, i2;

...

integerLog.insert(i1); // always works

i2 = integerLog.retrieve(); // compile error

i2 = (Integer)integerLog.retrieve(); // risky

Object o = integerLog.retrieve();

if (o instanceof Integer)

i2 = (Integer)o; // safe

else

// What goes here?

// Answer: A runtime error msg

// or a Java exception (see below).

Java does not know that this log will contain only Integers so a naked retrieve() will not compile.

The downcast will work providing we in fact insert() only Integers in the log. If we erroneously insert something else, retrieve()d will generate a runtime error that the user may well have trouble understanding, especially if they do not have the source code.

Using instanceof does permit us to generate a (hopefully) informative error message, and is probably the best we can do. However, when we previously used a real ArrayIntegerLog no such runtime error could occur, instead any erroneous insert() of a non-Integer would fail to compile. This compile-time error is a big improvement over the run-time error since the former would occur during program development; whereas, the latter occurs during program use.

Summary: A log of Objects can be used

for any log; it is perfect for heterogeneous logs, but

can degrade

compile-time error into run-time errors for

homogeneous logs.

We will next see a perfect

solution (using Java

generics) for homogeneous logs.

Homework: 4.

Recall that ArrayStringLog is great for logs of Strings, ArrayIntegerLog is great for logs of Integers, etc. The problem is that you have to write each class separately even though they differ only in the type of the logs (Strings, vs Integers, vs etc). This is exactly the problem generics are designed to solve.

The idea is to parameterize the type as I now describe.

y1 = tan(5.1) + 5.13 + cos(5.12) y2 = tan(5.3) + 5.33 + cos(5.32) y3 = tan(8.1) + 8.13 + cos(8.12) y4 = tan(9.2) + 9.23 + cos(9.22) f(x) = tan(x) + x3 + cos(x2) y1 = f(5.1) y2 = f(5.3) y3 = f(8.1) y4 = f(9.2)

It would be boring and repetitive to write code like that on the top right (using mathematical not Java notation).

Instead you would define a function that parameterizes the numeric value and then invoke the function for each value desired. This is illustrated inn the next frame.

Compare the first y1 with f(x) and note that we replaced each 5.1 (the numeric value) by x (the function parameter). By then invoking f(x) for different values of the argument x we obtain all the ys.

In our example of ArrayStringLog and friends we want to

write one parameterized

class (in Java it would be called

a generic class and named ArrayLog<T>,

with T the type parameter) and then instantiate

the parameter

to String, Integer, Circle, etc. to

obtain the equivalent of ArrayStringLog,

ArrayIntegerLog, ArrayCircleLog, etc.

public class ArrayLog<T> {

private T[] log;

private int lastIndex = -1;

private String name;

...

}