V22.0201 Computer Systems Organization

2018-19 Fall—Allan Gottlieb

Tuesdays and Thursdays 3:30-4:45 Room 102 Ciww

Start Lecture #1

Chapter 0 Administrivia

I start at 0 so that when we get to chapter 1, the numbering will

agree with the text.

0.1 Contact Information

- email: my-last-name AT nyu DOT edu (best method)

- web: cs.nyu.edu/~gottlieb

- office: 60 Fifth Ave, Room 316

- office phone: 212 998 3344

0.2 Course Web Page

There is a web site for the course.

You can find it from my home page, which is listed above, or from

the department's home page.

- You can find these lecture notes on the course home page.

Please let me know if you can't find them.

- The notes are updated as bugs are found or improvements made.

As a result, I do not recommend printing the notes now (if at

all).

- I will place markers at the end of each lecture after the

lecture is given.

For example, the "Start Lecture #1" marker above can be thought of as "End Lecture #0".

0.3 Textbook

The course has two texts.

- Bryant and O'Hallaron,

Computer Systems: A Programmer's Perspective

- We will also study the C programming language so you will need a

book on C.

I own, like, and will use Kernighan and Ritchie (Ritchie is the

creator of C).

However, if you already own another C book, that is probably good

enough.

0.4 Email, and the Class Mailing List

- You should have all been automatically added to the mailing list

for this course and should have received a test message from

me.

- Mail to the list should be sent to OS-201-FA18-fadd@nyu.edu

- Membership on the list is Required; I assume

that messages I send to the mailing list are read.

- If you want to send mail just to me, use my-last-name AT nyu DOT

edu not the mailing list.

- Questions on the labs should go to the mailing list.

You are encouraged to answer questions posed on the list as well.

Note that replies are sent to the list.

- I will respond to all questions.

If another student has answered the question before I get to it, I

will confirm if the answer given is correct.

- Please use proper mailing list etiquette.

- Send plain text messages rather than (or at

least in addition to) html.

- Use "Reply" to contribute to the current thread, but NOT to start another topic.

- If quoting a previous message, trim off irrelevant parts.

- Use a descriptive Subject: field when starting a new topic.

- Do not use one message to ask two unrelated questions.

- As you will see, when I respond to a message, I either place my

reply after the original text or interspersed with it (rather than

putting the reply at the top).

This preference is most relevant for detailed questions that lead

to serious conversations involving many messages.

I find it quite useful when reviewing a serious conversation to

have the entire conversation in chronological order.

I believe you would also find it useful when reviewing for an

exam.

0.5 Grades

Grades are based on the labs and exams; the weighting will be

approximately

30%*LabAverage + 30%*MidtermExam + 40%*FinalExam (but see homeworks

below).

0.6 The Upper Left Board

I use the upper left board for lab/homework assignments and

announcements.

I should never erase that board.

Viewed as a file it is group readable (the group is those in the

room), appendable by just me, and (re-)writable by no one.

If you see me start to erase an announcement, please let me know.

I try very hard to remember to write all announcements on the upper

left board and I am normally successful.

If, during class, you see that I have forgotten to record something,

please let me know.

HOWEVER, even if I forgot and no one reminds me,

the assignment has still been given.

0.7 Homeworks and Labs

I make a distinction between homeworks and labs.

Labs are

- Required.

- Due several lectures later (date given on assignment).

- Announced in the notes and during class.

Details in NYU Classes with supplemental material on separate web

pages.

Your solution is submitted via NYU Classes.

- Graded and form part of your final grade.

- Penalized for lateness.

- The penalty is 1 point per day up to 10 days; then 5 points

per day.

- This penalty is too mild—a one week late lab can be an

A; two weeks late might be a C.

Homeworks are

- Optional.

- Due the beginning of two lectures later.

- Not accepted late.

- The assignment is given in the notes and NYU Classes; your

solution is submitted via Classes.

- Checked for completeness and graded 0/1/2.

- Able to help, but not hurt, your final grade.

0.7.1 Homework Numbering

Homeworks are numbered by the class in which they are assigned.

So any homework given today is homework #1.

Even if I do not give homework today, any homework assigned next

class would be homework #2.

So the homework present in the notes for lecture #n is homework #n

(even if I inadvertently forgot to write it to the upper left

board).

0.7.2 Doing Labs on non-NYU Systems

You may develop (i.e., write and test) lab assignments on

any system you wish, e.g., your laptop.

However, ...

- You are responsible for any non-nyu machine.

I extend deadlines if the nyu machines are down, not if yours are.

So you should back up on an nyu server any work done on your

personal computers.

- You should test your assignments on the nyu systems, for this

class that means

courses2.cims.nyu.edu.

More on how to do this later.

- If some confusion arises, I can (and do) believe dates on

courses2 and friends.

I can not believe dates on your laptop since you

can change them backwards in time.

- In an ideal world, a program written in a high level language

such as Python, Java, C, or C++ that works on one system would

also work on any other system.

Sadly, this ideal is not always achieved, despite marketing claims

to the contrary.

So, although you may develop your lab on any system, you

must ensure that it runs on courses2, which the TAs

will use when grading your labs.

- You submit your labs using NYU Classes.

0.7.3 Obtaining Help with the Labs

Good methods for obtaining help include

- Asking me during office hours (see my home page for my hours).

- Asking the mailing list.

- Asking another student.

- But ...

Your lab must be your own.

That is, each student must submit a unique lab.

Naturally, simply changing comments, variable names, etc. does

not produce a unique lab.

0.7.4 Computer Language Used for Labs

This course uses the C computer language.

0.8 A Grade of Incomplete

The rules for incompletes and grade changes are set by the school

and not the department or individual faculty member.

The rules set by CAS can be found in

<http://cas.nyu.edu/object/bulletin0608.ug.academicpolicies.html>,

which states:

The grade of I (Incomplete) is a temporary grade that

indicates that the student has, for good reason, not

completed all of the course work but that there is the

possibility that the student will eventually pass the

course when all of the requirements have been completed.

A student must ask the instructor for a grade of I,

present documented evidence of illness or the equivalent,

and clarify the remaining course requirements with the

instructor.

The incomplete grade is not awarded automatically. It is

not used when there is no possibility that the student

will eventually pass the course. If the course work is

not completed after the statutory time for making up

incompletes has elapsed, the temporary grade of I shall

become an F and will be computed in the student's grade

point average.

All work missed in the fall term must be made up by the end of the

following spring term.

All work missed in the spring term or in a summer session must be

made up by the end of the following fall term.

Students who are out

of attendance in the semester following the one in which the course

was taken have one year to complete the work.

Students should contact the College Advising Center for an Extension

of Incomplete Form, which must be approved by the

instructor.

Extensions of these time limits are rarely granted.

Once a final (i.e., non-incomplete) grade has been submitted by the

instructor and recorded on the transcript, the final grade cannot be

changed by turning in additional course work.

0.9 Academic Integrity Policy

This email from the assistant director describes the policy.

Dear faculty,

The vast majority of our students comply with the

department's academic integrity policies; see

www.cs.nyu.edu/web/Academic/Undergrad/academic_integrity.html

www.cs.nyu.edu/web/Academic/Graduate/academic_integrity.html

Unfortunately, every semester we discover incidents in

which students copy programming assignments from those of

other students, making minor modifications so that the

submitted programs are extremely similar but not identical.

To help in identifying inappropriate similarities, we

suggest that you and your TAs consider using Moss, a

system that automatically determines similarities between

programs in several languages, including C, C++, and Java.

For more information about Moss, see:

http://theory.stanford.edu/~aiken/moss/

Feel free to tell your students in advance that you will be

using this software or any other system. And please emphasize,

preferably in class, the importance of academic integrity.

Rosemary Amico

Assistant Director, Computer Science

Courant Institute of Mathematical Sciences

Remark: The chapter/section numbers for the

material on C agree with Kernighan and Ritchie.

However, the material is quite standard so, as mentioned before, if

you already own a C book that you like, it should be fine.

Chapter 1 A Tutorial Introduction

Since Java includes much of C, my treatment can be very brief for

the parts in common (e.g., control structures).

1.1 Getting Started

C programs consist of functions, which contain

statements, and variables; the latter store

values.

The Hello World Function

#include <stdio.h>

main()

{

printf("Hello, world\n");

}

- All complete programs must have a main function.

The program begins execution there.

- # introduces preprocessor directives, #include

is the most common.

#include <stdio.h> tells the preprocessor to look

in the standard place (due to the <>) for a file named

stdio.h and include it right here.

That file contains the declaration of printf().

- printf() produces formatted output.

The easiest case is simply a character string as shown here (\n

signifies a newline).

Although this program works, the second line should be

int main(int argc, char *argv[])

Remember how long it took you to really understand

public static void main (String[] args)

1.2 Variables and Arithmetic Expressions

1.3 The For Statement

1.4 Symbolic Constants

Fahrenheit-Celsius

#include <stdio.h>

main() {

int F, C;

int lo=0, hi=300, incr=20;

for (F=lo; F<=hi; F+=incr) {

C = 5 * (F-32) / 9;

printf("%d\t%d\n", F, C);

}

}

- C has int, char, short, long, double, float

- %d means the next argument is (treated as) an int; convert it

from the internal form (two's complement binary) to printable form

(ascii, unicode, ...).

- %d uses the "right amount" of space, i.e., exactly as many columns as the value needs, with no padding blanks.

- \t is a tab.

- printf() accepts a variable number of arguments.

Note that the value of the first argument determines the number of

additional arguments.

- Would be better if numbers were right justified.

We must know how much space to use; in this case I know 3 digits

are enough.

- Should use floating point.

Floating Point Fahrenheit-Celsius

#include <stdio.h>

#define LO 0

#define HI 300

#define INCR 20

main() {

int F;

for (F=LO; F<=HI; F+=INCR)

printf("%3d\t%5.1f\n", F, (F-32)*(5.0/9.0));

}

- Note 5.0/9.0 to get floating point divide.

- Note %3d, which right justifies using 3 digits.

- Note %5.1f.

This means 5=3+1+1 columns total with

1 digit after the decimal point.

- Call to printf() now contains an expression instead of

just a simple variable.

- #define to introduce symbolic constants.

By convention these are all caps.

1.5 Character Input and Output

getchar() / putchar()

The simplest (i.e., most primitive) form of character I/O is

getchar() and putchar(), which read and print a

single character.

Both getchar() and putchar() are defined in

stdio.h.

1.5.1 File Copying

#include <stdio.h>

main() {

int c;

while ((c = getchar()) != EOF)

putchar(c);

}

File copy is conceptually trivial: getchar() a char and

then putchar() this char until eof.

The code above does require some comment despite its

brevity.

- The program is basically just the while statement,

which has just one semicolon.

- getchar() returns an int not

a char!

That is done so that we can return EOF, which is not a

char (and cannot be a char); it is

an int (in fact it is -1).

Question: Why can't EOF be a char?

Answer: Because all chars are legal

(non-EOF) values that getchar() can return.

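- C is an expression language; assignments and most other operations are

expressions that yield values.

In particular, an assignment yields the value that was assigned (its

RHS, converted to the type of the LHS).

This explains the condition part of the while

statement, once you notice the "extra" parens, which are

definitely not extra: != binds tighter than =, so without them c would

be assigned the result of the comparison.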

- getchar() reads from stdin and putchar()

writes to stdout.

- Illustrate in class how to use stdin/stdout and redirection.

Homework: Write a (C-language) program to print

the value of EOF.

(This is 1-7 in the book but I realize not everyone will have the

book so I will type them in.)

Homework: (1-9) Write a program to copy its input

to its output, replacing each string of one or more blanks by a

single blank.

1.5.2 Character Counting

This is essentially a one-liner (in two ways).

while (getchar() != EOF) ++numChars;

for (nc = 0; getchar() != EOF; ++nc);

1.5.3 Line Counting

Now we need two tests.

Perhaps the following is really a two-liner, but it does have only

one semicolon.

while ((c = getchar()) != EOF) if (c == '\n') ++numLines;

So if a file has no newlines, it has no lines.

Demo this with echo -n >noEOF "hello"

1.5.4 Word Counting

The Unix wc Program

The Unix wc program prints the number of characters, words, and

lines in the input.

It is clear what the number of characters means.

The number of lines is the number of newlines (so if the last line

doesn't end in a newline, it doesn't count).

The number of words is less clear.

In particular, what should be the word separators?

#include <stdio.h>

#define WITHIN 1

#define OUTSIDE 0

main() {

int c, num_lines, num_words, num_chars, within_or_outside;

within_or_outside = OUTSIDE; // C doesn't have Boolean type

num_lines = num_words = num_chars = 0;

while ((c = getchar()) != EOF) {

++num_chars;

if (c == '\n')

++num_lines;

if (c == ' ' || c == '\n' || c == '\t')

within_or_outside = OUTSIDE;

else if (within_or_outside == OUTSIDE) { // starting a word

++num_words;

within_or_outside = WITHIN;

}

}

printf("%d %d %d\n", num_lines, num_words, num_chars);

}

- This program assumes blank, newline, and tab are the only word

separators.

- C has traditionally had no Boolean type (C99 added _Bool and <stdbool.h>).

Instead int is used; 0 is false; everything else is true.

- The key idea in the program, which is independent of the

language used, is to keep track of when we are within a word and

to bump the word counter at the start of a

new word.

- The program begins outside a word.

- Whenever it encounters a separator, it becomes outside.

- Whenever it encounters a non-separator (i.e., a word constituent)

and was outside, then it has found the start

of a new word.

This puts the program within a word and is when it bumps the

word counter.

- Java if-then-else is the same as C.

Same for while and do / while.

Same for switch / case.

Same for continue, break, and return.

Homework: (1-12) Write a program that prints its

input one word per line.

1.6 Arrays

We are hindered in our examples because we don't yet know how to

input anything other than characters and don't want to write the

program to convert a string of characters into an integer or (worse)

a floating point number.

Mean and Standard Deviation

#include <stdio.h>

#define N 10 // imagine you read in N

main() {

int i;

float x, sum=0, mu;

for (i=0; i<N; i++) {

x = i; // imagine you read in X[i]

sum += x;

}

mu = sum / N;

printf("The mean is %f\n", mu);

}

#include <stdio.h>

#define N 10 // imagine you read in N

#define MAXN 1000

main() {

int i;

float x[MAXN], sum=0, mu;

for (i=0; i<N; i++) {

x[i] = i; // imagine you read in X[i]

}

for (i=0; i<N; i++) {

sum += x[i];

}

mu = sum / N;

printf("The mean is %f\n", mu);

}

#include <stdio.h>

#include <math.h>

#define N 5 // imagine you read in N

#define MAXN 1000

main() {

int i;

double x[MAXN], sum=0, mu, sigma;

for (i=0; i<N; i++) {

x[i] = i; // imagine you read in x[i]

sum += x[i];

}

mu = sum / N;

printf("The mean is %f\n", mu);

sum = 0;

for (i=0; i<N; i++) {

sum += pow(x[i]-mu,2);

}

sigma = sqrt(sum/N);

printf("The std dev is %f\n", sigma);

}

I am sure you know the formula for the mean (average) of N numbers:

Add the numbers and divide by N.

The mean is normally written μ.

The standard deviation is the RMS (root mean squared) of the

deviations-from-the-mean; it is normally written σ.

Symbolically, we write $\mu = \frac{1}{N}\sum_{i} X_i$ and

$\sigma = \sqrt{\frac{1}{N}\sum_{i} (X_i-\mu)^2}$.

(When computing σ we sometimes divide by N-1

not N.

Ignore the previous sentence.)

The First Version (Just the Mean; No Array Used)

The first program above would naturally

read N, then read N numbers, and then compute

the mean of the latter.

There is a problem; we don't know how to read numbers.

So I faked it by having N a symbolic constant and making

x=i.

The Second Version (Just the Mean: Array Used)

I do not like the second version with its gratuitous array.

It is (a little) longer, slower, and more complicated.

Much worse it takes space proportional to N, for no reason.

Hence it might not run at all for large N.

However, I have seen students write such programs.

Apparently, there is an instinct to use a three step procedure for

all assignments:

- Read everything in.

- Do all the computation.

- Print all the answers.

But that is silly if, as in this example, you no longer need each

value after you have read the next one.

The Third Version (Mean and Standard Deviation)

The last example is a good use of arrays for

computing the standard deviation using the RMS formula above.

We do need to keep the values around after computing the mean so

that we can compute all the deviations from the mean and, using

these deviations, compute the standard deviation.

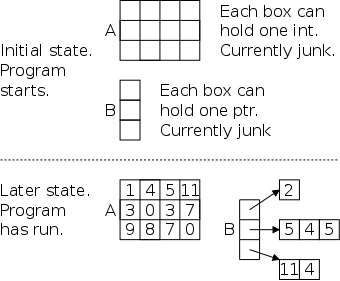

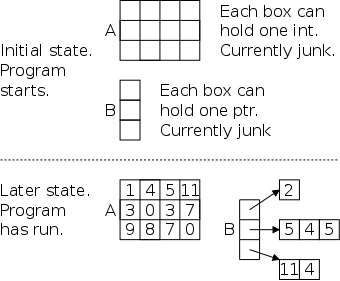

Note that, unlike Java, no use of new (or the C analogue

malloc()) appears.

Arrays declared as in this program have a lifetime of the routine in

which they are declared.

Specifically sum and x are both allocated when

main is called and are both freed when main is finished.

Arrays in Java are references.

So, when you write in a Java function f()

int[] A = new int[3];

The lifetime of the array is not tied to the lifetime

of f().

We will battle with lifetimes in C later in the course when we look

carefully at pointers and malloc().

Non-primitive Declarations

Note the declaration double x[MAXN] in the third version.

In C, to declare a complicated variable (i.e., one that is not a

primitive type like int or char), you write what

has to be done to the variable to get one of the primitive

types.

- For example the declaration int *(x[]) says

that x is something that, if you take an element of it

and then dereference that element, then you will get an integer.

So x is an array of pointers to integers.

- int (*y)[] says that if you first

dereference y and take an element of the result, you get

an integer.

So y is a pointer to an array of integers.

- Thus int *(x[]) is not the same

as int (*x)[].

- This declaration style is a controversial feature of C.

Some like it, some don't (I don't).

But that is the way it is.

In C if we have int X[10]; then writing X in most expressions in your

program is the same as writing &X[0].

& is the "address of" operator.

More on this later when we discuss pointers.

1.7 Functions

There is of course no limit to the useful functions one can write.

Indeed, the main() programs we have written above are all

functions.

A C program is a collection of functions (and global variables).

Exactly one of these functions must be called main and that

is the function at which execution begins.

#include <stdio.h>

// Determine letter grade from score

// Demonstration of functions

char letter_grade (int score) {

if (score >= 90) return 'A';

else if (score >= 80) return 'B';

else if (score >= 70) return 'C';

else if (score >= 60) return 'D';

else return 'F';

} // end function letter_grade

main() {

short quiz;

char grade;

quiz = 75; // should read in quiz

grade = letter_grade(quiz);

printf("For a score of %3d the grade is %c\n",

quiz, grade);

} // end main

cc -o grades grades.c; ./grades

For a score of 75 the grade is C

One important issue is type matching.

If a function f takes one int argument and

f is called with a short, then the

short must be converted to an int.

Since this conversion is widening, the compiler will automatically

coerce the short into an int, providing it knows

that an int is required.

It is fairly easy for the compiler to know all this

providing f() is defined before it is used, as in

the code above.

Computing Letter Grades

We see above a function letter_grade defined.

It has one int argument and returns a char.

Finally, we see the main program that calls the

function.

The main program uses a short to hold the

numerical grade and then calls the function with this short

as the argument.

The C compiler generates code to coerce this short value to

the int required by the function.

Averages and Sorting

// Average and sort array of random numbers

#include <stdio.h>

#include <stdlib.h> // declares rand()

#define NUMELEMENTS 50

void sort(int A[], int n) {

int temp;

for (int x=0; x<n-1; x++)

for (int y=x+1; y<n; y++)

if (A[x] < A[y]) { // larger value comes first: descending order

temp = A[x];

A[x] = A[y];

A[y] = temp;

}

}

double avg(int A[], int n) {

double sum = 0; // double avoids int overflow and integer division

for (int x=0; x<n; x++)

sum = sum + A[x];

return (sum / n);

}

main() {

int table[NUMELEMENTS];

double average;

for (int x=0; x<NUMELEMENTS; x++) {

table[x] = rand(); /* assume defined */

printf("The elt in pos %d is %d\n",

x, table[x]);

}

average = avg(table, NUMELEMENTS );

printf("The average is %5.1f\n", average);

sort(table, NUMELEMENTS );

for (int x=0; x<NUMELEMENTS; x++)

printf("The element in position %3d is %3d \n",

x, table[x]);

}

The next example illustrates a function that has an array

argument.

Remember that in a C declaration you decorate

the item being

declared with enough stuff (e.g., [], *) so that the result is a

primitive type such as int, double, or

char.

The function sort has two parameters, the second one

n is simply an int.

The parameter A, however, is more complicated.

It is the kind of thing that when you take an element of it, you get

an int.

That is, A is an array of ints.

Unlike the array example in section 1.6, A does not

have an explicit upper bound on its index.

This is because the function can be called with arrays of different

sizes.

Since the function needs to know the size of the array (look at the

for loops), a second parameter n is used for

this purpose.

This example has two function calls:

main calls both avg and sort.

Looking at the call from main to sort we see that

table is assigned to A and

NUMELEMENTS is assigned to n.

Looking at the code in main itself, we see that indeed

NUMELEMENTS is the size of the array table and

thus in sort, n is the size of A.

All seems well provided the called function appears

before the function that calls it.

Our examples have followed this convention.

So far so good; but if f calls g and (recursively)

g calls f, we are in trouble.

How can we have f before g, and g before

f?

This will be answered in our next example.

Start Lecture #2

1.8 Arguments—Call by Value

Arguments in C are passed by value (the same as Java does

for arguments that are not objects).

1.9 Character Arrays

Unlike Java, C does not have a string datatype.

A string in C is an array of chars.

String operations like concatenate and copy (assignment) become

functions in C.

Indeed there are a number of standard library routines for

strings.

The most common implementation of strings in C is "null terminated".

That is, a string of length 5 actually contains 6 characters, the 5

characters of the string itself and a sixth character = '\0' (called

null) indicating the end of the string.

Print Longest Line

This program reads lines from the terminal, converts them to C strings

by appending '\0', and prints the longest one found.

Pseudo code would be

while (more lines)

read line

if (longer than previous longest)

save line and its length

Thus we need the ability to read in a line and the ability to save

a line.

We write two functions getLine and copy for these

tasks (the book uses getline (all lower case), but that doesn't

compile for me since there is a library routine in stdio.h with the

same name and different signature).

#include <stdio.h>

#define MAXLINE 1000

int getLine(char line[], int maxline);

void copy(char to[], char from[]);

int main() {

int len, max;

char line[MAXLINE], longest[MAXLINE];

max = 0;

while ((len=getLine(line,MAXLINE))>0)

if (len > max) {

max = len;

copy(longest,line);

}

if (max>0)

printf("%s", longest);

return 0;

}

int getLine(char s[], int lim) {

int c, i;

for (i=0; i<lim-1 &&

(c=getchar())!=EOF &&

c!='\n';

++i)

s[i] = c;

if (c=='\n') {

s[i]= c;

++i;

}

s[i] = '\0';

return i;

}

void copy(char to[], char from[]) {

int i;

i=0;

while ((to[i] = from[i]) != '\0')

++i;

}

The main() Function

Given the two supporting routines, main is

fairly simple, needing only a few small comments.

- Note that getLine and copy are declared

before main.

They are defined later in the file but C requires "declare (or define) before use" so either main would have to

come last or the declarations are needed.

Since only main uses the routines, the declarations could

have been in main but it is common practice to put them

outside as shown.

- Note %s inside printf.

This is used for (null-terminated) strings.

- Note that main is declared to return an integer and

it does return 0.

In unix at least, this is the indication of a successful

run.

- Note the while loop structure.

Having a parenthesized assignment as part of the condition being

tested is a fairly common idiom.

The getLine() Helper

The for continuation condition

in getLine is rather complex.

(Note that the body of the for loop is just s[i] = c; the reading

and testing all occur in the for statement itself.)

The condition part of the for tests for 3 situations.

- We have filled up s[].

- We get to the end of the input.

- We find an end-of-line

Perhaps it would be clearer if the test was simply

i<lim-1 and the rest was done with if-break

statements inside the loop.

A Subtlety in The Three Tests

In C, if you write f(x)+g(y)+h(z) you have

no guarantee of the order the functions will be invoked.

However, the && and || operators do

guarantee left-to-right ordering to enforce short-circuit

condition evaluation.

The copy() Helper

The copy() function is declared and defined

to return void.

- This means that it does not return a value.

- Similarly, a function taking no arguments should be declared and

defined to have a void argument list.

Leaving the argument list blank (i.e., writing fun_name())

actually means that you are not specifying the argument signature.

This weird rule is for compatibility with older versions of C that

played a little fast and loose with such issues.

Homework: Simplify the for condition

in getLine() as just indicated.

1.10 External Variables and Scope

Solving Quadratic Equations

#include <stdio.h>

#include <math.h>

#define A +1.0 // should read

#define B -3.0 // A,B,C

#define C +2.0 // using scanf()

void solve (float a, float b, float c);

int main() {

solve(A,B,C);

return 0;

}

void solve (float a, float b, float c) {

float d;

d = b*b - 4*a*c;

if (d < 0)

printf("No real roots\n");

else if (d == 0)

printf("Double root is %f\n", -b/(2*a));

else

printf("Roots are %f and %f\n",

((-b)+sqrt(d))/(2*a),

((-b)-sqrt(d))/(2*a));

}

#include <stdio.h>

#include <math.h>

#define A +1.0 // main() should read

#define B -3.0 // A,B,C

#define C +2.0 // using scanf()

void solve(void);

float a, b, c; // definition

int main() {

extern float a, b, c; // declaration

a=A;

b=B;

c=C;

solve();

return 0;

}

void solve () {

extern float a, b, c; // declaration

float d;

d = b*b - 4*a*c;

if (d < 0)

printf("No real roots\n");

else if (d == 0)

printf("Double root is %f\n", -b/(2*a));

else

printf("Roots are %f and %f\n",

((-b)+sqrt(d))/(2*a),

((-b)-sqrt(d))/(2*a));

}

The two programs above find the real roots (no complex

numbers) of the quadratic equation

$ax^2+bx+c=0$

They proceed by using the standard technique of first calculating

the discriminant

$d = b^2-4ac$

These programs deal only with real roots, i.e., when

d≥0.

The programs themselves are not of much interest.

Indeed a Java version would be too easy

to be a midterm exam

question in 101.

Our interest is confined to the method in which the

coefficients a, b, and c are passed from

the main() function to the helper

routine solve().

Method 1 Arguments and Parameters

The first program calls a function solve()

passing it as arguments the three coefficients A,B,C.

There is little to say.

Method 1 is a simple program and uses nothing new.

Method 2 External (a.k.a. Global) Variables

The second program communicates with solve

using external variables rather than arguments/parameters.

- Note the single definition of

a, b, and c.

This definition is before and hence outside any

function.

The definition causes space to be set aside for these variables

and is visible inside main() and solve().

- Also note the multiple declarations, one inside each

function.

They include the keyword extern and indicate that a

definition is provided elsewhere (externally).

- As noted in the preceding section, both main()

and solve() are defined in this file

and solve is also declared.

- To repeat, in C you must "declare (or define) before use".

If you define before using, you don't need to also declare.

But if you have recursion (f() calls g() and g() calls f()), you

can't have both definitions before the corresponding uses so you

need a declaration.

- Within a single .c file, the definition of a, b, c is

enough since it is before any function that uses the variables.

Normally the definitions come before all functions, as done in the

example, and hence the declarations are not needed.

- For larger programs consisting of many functions, the

functions are normally spread across multiple .c files.

In this case each file must contain a declaration or definition

prior to any use of the variable.

Exactly one of the .c files must contain a definition.

- Typically, the definition is directly in a single .c file and

the declaration is placed in a .h (header) file that is included

in all the .c files (so one .c has a declaration and a

definition).

Chapter 2 Types, Operators, and Expressions

2.1 Variable Names

Similar to Java: A variable name must begin with a letter and then

can use letters and numbers.

An underscore is a letter, but you shouldn't begin a variable name

with one since that is conventionally reserved for library routines.

Keywords such as if, while, etc are reserved and

cannot be used as variable names.

2.2 Data Types and Sizes

C has very few primitive types.

- char: One byte in size; can hold a character.

C will coerce a char to an int if needed.

- int: The "natural" size of an integer on the host machine.

- float: Single precision floating point.

- double: Double precision floating point.

There are qualifiers that can be added.

One pair is long/short, which are used with int.

Typically short int is abbreviated short and

long int is abbreviated long.

long must be at least as big as int, which must

be at least as big as short.

There is no short float, short double,

or long float.

The type long double specifies extended precision.

The qualifiers signed or unsigned can be applied to

char or any integer type.

They determine whether the high-order bit is interpreted as a sign bit.

An unsigned char uses all 8 bits for the integer value and

thus has a range of 0–255; whereas, a signed char has

an integer range of -128–127.
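The size rules above can be checked on whatever machine you are using with sizeof and the constants in <limits.h>. The following is only a sketch: the function names are made up for this example, and the values 255 and -128 assume the usual 8-bit, two's complement char.

```c
#include <limits.h>

/* Returns 1 if short <= int <= long in size, as the language requires. */
int sizes_are_ordered(void) {
    return sizeof(short) <= sizeof(int) && sizeof(int) <= sizeof(long);
}

/* Largest value an unsigned char can hold: 255 when a char has 8 bits. */
int unsigned_char_max(void) {
    return UCHAR_MAX;
}

/* Smallest value a signed char can hold: -128 on two's complement machines. */
int signed_char_min(void) {
    return SCHAR_MIN;
}
```

Printing sizeof(int) and sizeof(long) on your own machine is a quick way to see what "natural size" means there.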

2.3 Constants

Integer Constants

A normal integer constant such as 123 is an int, unless it

is too big in which case it is a long.

But there are other possibilities.

- 123 is an int

- 1234567 is an int if int's are 32-bits; it

is a long if long's are 32-bits and

int's are only 16.

- 123u is an unsigned int.

- 1223ul is an unsigned long.

- A character constant is written inside single quotes, e.g. '0'.

These constants have an integer value.

For '0' the value happens to be 48.

Some single characters are

written as two characters.

For example '\0' is the ascii null character, which is used to

terminate C strings.

Its integer value is 0.

Also important are '\n' and '\t'.

There are others.

String Constants

Although there are no string variables, there are string constants,

written as zero or more characters surrounded by double quotes.

A null character '\0' is automatically appended.

- 'x' is a single character; 'x'+1 is a valid integer expression.

- "x" contains two characters, 'x' followed by '\0'.

It is a string constant, not an integer.

- "xy" "yz" is combined (at compile time) into "xyyz".

This is called concatenation.

- Note that we concatenated two strings, each with 3 characters (counting the terminating '\0'), and

got one string with 5 characters.

The null ending the first string is not in the concatenation

(otherwise it would end the concatenated string).

- "" is the empty string consisting just of '\0'.

- strlen() returns the length of a string, excluding the

terminating '\0'.
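The character counts in the list above can be confirmed with strlen() and sizeof. This is a small sketch; the array name joined and the three function names are invented for the illustration.

```c
#include <string.h>

/* Adjacent string constants are joined by the compiler: "xy" "yz" is "xyyz". */
static const char joined[] = "xy" "yz";

/* Number of characters before the terminating '\0'. */
size_t joined_length(void) {
    return strlen(joined);      /* 4 */
}

/* Number of bytes in the array, including the terminating '\0'. */
size_t joined_size(void) {
    return sizeof(joined);      /* 5 */
}

/* The empty string still occupies one byte, the '\0'. */
size_t empty_size(void) {
    return sizeof("");          /* 1 */
}
```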

Enum Constants

Alternative method of assigning integer values to symbolic names.

enum Boolean {false, true}; // false is zero, true is 1

enum Month {Jan=1, Feb, Mar, Apr, May, Jun, Jul, Aug, Sep, Oct, Nov, Dec};
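Here is a short sketch of how the Month enum might be used. The function days_in_month() is hypothetical (it is not from the book) and ignores leap years.

```c
enum Month {Jan=1, Feb, Mar, Apr, May, Jun, Jul, Aug, Sep, Oct, Nov, Dec};

/* Days in a month of a non-leap year; returns 0 for an invalid month. */
int days_in_month(enum Month m) {
    switch (m) {
    case Apr: case Jun: case Sep: case Nov:
        return 30;
    case Feb:
        return 28;
    case Jan: case Mar: case May: case Jul: case Aug: case Oct: case Dec:
        return 31;
    }
    return 0;
}
```

Since Jan is given the value 1 and each later name is one more than its predecessor, Feb is 2 and Dec is 12.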

2.4 Declarations

Perhaps they should be called definitions since space is allocated.

Similar to Java for scalars.

int x, y;

char c;

double q1, q2;

(Stack allocated) arrays are simple since the entire array is

allocated not just a reference (no new/malloc required).

int x[10];

Initializations may be given.

int x=5, y[2]={44,6}, z[]={1,2,3};

char str[]="hello, world\n";

The qualifier const makes the variable read only so it

must be initialized in the declaration.

2.5 Arithmetic Operators

Mostly the same as Java.

Please do not call % the mod operator, unless you

know that the operands are positive.
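To see why % is a remainder and not a mod, try a negative left operand. In C99 and later, division truncates toward zero, so the remainder takes the sign of the dividend. The helper mod() below is my own sketch of a true mod for a positive modulus; it is not a library routine.

```c
/* C's % gives a remainder with the sign of the dividend (C99 and later). */
int remainder_of(int a, int n) {
    return a % n;
}

/* A true mod for n > 0: the result is always in the range 0..n-1. */
int mod(int a, int n) {
    int r = a % n;
    return (r < 0) ? r + n : r;
}
```

For example, remainder_of(-7, 3) is -1, whereas mod(-7, 3) is 2.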

2.6 Relational and Logical Operators

Again very little difference from Java.

Please remember that && and || are required to be

short-circuit operators.

That is, they evaluate the right operand only if needed.
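Short-circuit evaluation is what makes a guarded test like the one below safe. This is only a sketch, and ratio_exceeds() is a made-up name.

```c
/* Returns 1 if num/den exceeds limit. A den of 0 is handled safely
   because && does not evaluate its right operand when the left is false,
   so the division is never attempted. */
int ratio_exceeds(int num, int den, int limit) {
    return den != 0 && num / den > limit;
}
```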

2.7 Type Conversion

There are two kinds of conversions: automatic conversion,

called coercion, and explicit conversions.

Automatic Conversions

C coerces narrow

arithmetic types to wide ones.

{char, short} → int → long

float → double → long double

long → float // precision can be lost

int atoi(char s[]) {

int i, n=0;

for (i=0; s[i]>='0' && s[i]<='9'; i++)

n = 10*n + (s[i]-'0'); // assumes ascii

return n;

}

The program above (ASCII to integer) converts a character

string representing an integer to the integral value.

- This works only for ASCII (or some other system where the

character codes of the digits are consecutive and in the correct

order).

- Stops at first non digit.

For example, it will stop at the terminating '\0'.

Unsigned coercions are more complicated; you can read about them in

the book.
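One example of why unsigned coercions deserve care: when an int meets an unsigned int in a comparison, the int is converted to unsigned first. The sketch below (function names are mine) shows the surprising result for -1.

```c
/* Mixed signed/unsigned comparison: a is converted to unsigned first,
   so -1 becomes a very large value and the test is false for b == 1. */
int minus_one_less_than(unsigned b) {
    int a = -1;
    return a < b;
}

/* Comparing as signed values gives the expected answer
   (this assumes b is small enough to fit in an int). */
int minus_one_less_than_signed(unsigned b) {
    int a = -1;
    return a < (int) b;
}
```

Many compilers will warn about the first comparison if you ask for extra warnings.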

Explicit Casts

The syntax

(type-name) expression

converts the value to the type specified.

Note that e.g., (double) x converts the value

of x; it does not change x

itself.
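A common use of a cast is to force floating point division of two integers. A minimal sketch, with function names chosen just for this example:

```c
/* Integer division: the fractional part is discarded. */
int int_quotient(int sum, int count) {
    return sum / count;
}

/* The cast converts the value of sum to double before dividing;
   count is then coerced to double as well. sum itself is unchanged. */
double average(int sum, int count) {
    return (double) sum / count;
}
```

So int_quotient(7, 2) is 3 but average(7, 2) is 3.5.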

Homework: 2-3.

Write the function htoi(s), which converts a string of

hexadecimal digits (including an optional 0x or 0X) into its

equivalent integer value.

The allowable digits are 0 through 9, a through f, and A through

F.

2.8 Increment and Decrement Operators

The same as Java.

Remember that x++ or ++x are not the same as

x=x+1 because, with the operators, x is evaluated

only once, which becomes important when x is itself an

expression with side effects.

x[i++]++ // increments some (which?) element of an array

x[i++] = x[i++]+1 // in Java, puts incremented value in ANOTHER slot; in C, modifying i twice like this is undefined behavior

Homework: 2-4.

Write an alternate version of squeeze(s1,s2) that deletes

each character in s1 that matches any character in the string

s2.

2.9 Bitwise Operators

The same as Java

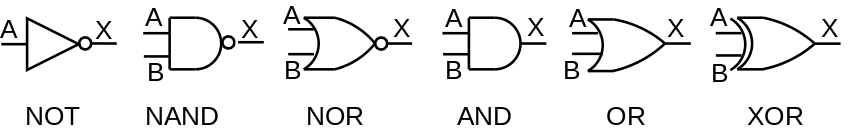

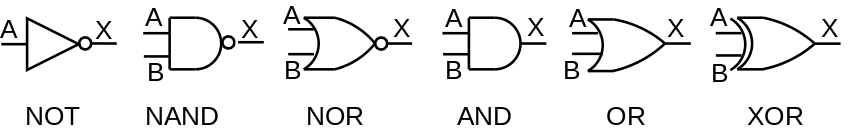

- & bitwise AND

- | bitwise OR

- ^ bitwise XOR (exclusive or)

- << left shift

- >> right shift

- ~ bitwise complement

2.10 Assignment Operators and Expressions

int bitcount (unsigned x) {

int b;

for (b=0; x!=0; x>>= 1)

if (x&01) // octal (not needed)

b++;

return b;

}

The same as Java:

+= -= *= /= %= <<= >>= &= ^= |=

The program above counts how many bits of its argument are 1.

Right shifting the unsigned x causes it to be

zero-filled.

ANDing with 1 gives the LOB (low order bit).

Writing 01 indicates an octal constant (any integer beginning with

0; similarly starting with 0x indicates hexadecimal).

Both are convenient for specifying specific bits (because both 8 and

16 are powers of 2).

Since the constant in this case has value 1, the 0 has no

effect.
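Here are a few more uses of octal and hexadecimal constants for picking out bits. This is a sketch and the function names are hypothetical.

```c
/* Low four bits of x; 0xF is the hexadecimal constant 15 (binary 1111). */
unsigned low_nibble(unsigned x) {
    return x & 0xF;
}

/* Returns 1 if bit k of x is set (bit 0 is the low order bit). */
int bit_is_set(unsigned x, int k) {
    return (x >> k) & 01;
}

/* A leading 0 means octal, so 010 is eight, not ten. */
int octal_ten(void) {
    return 010;
}
```

The last function is a reminder that a stray leading zero changes the value of a constant.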

Homework: 2-10.

Rewrite the function lower(), which converts upper case

letters to lower case with a conditional expression instead

of if-else.

2.11 Conditional Expressions

printf("You enrolled in %d course%s.\n", n, (n==1) ? "" : "s");

The same as Java.
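The conditional expression is an expression, so it can appear anywhere a value is needed, including a return statement. The two functions below are illustrative sketches with made-up names.

```c
/* Returns "" for exactly one item and "s" otherwise. */
const char *plural_suffix(int n) {
    return (n == 1) ? "" : "s";
}

/* Larger of two ints, written with a conditional expression. */
int larger(int a, int b) {
    return (a > b) ? a : b;
}
```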

Precedence and Associativity of C Operators

| Operators | Associativity |
|---|---|
| () [] -> . | left to right |
| ! ~ ++ -- + - * & (type) sizeof | right to left |
| * / % | left to right |
| + - | left to right |
| << >> | left to right |
| < <= > >= | left to right |
| == != | left to right |
| & | left to right |
| ^ | left to right |
| \| | left to right |
| && | left to right |
| \|\| | left to right |
| ?: | right to left |
| = += -= *= /= %= &= ^= \|= <<= >>= | right to left |
| , | left to right |

2.12 Precedence and Order of Evaluation

The table above is copied (hopefully correctly) from the

book.

It includes all operators, even those we haven't learned yet.

I certainly don't expect you to memorize the table.

Indeed one of the reasons I typed it in was to have an online

reference I could refer to since I do not know all the

precedences.

Homework: Check the table above for typos and

report any on the mailing list.

Not everything is specified.

For example if a function takes two arguments, the order in which

the arguments are evaluated is not specified.

Also the order in which operands of a binary operator like + are

evaluated is not specified.

So f() could be evaluated before or after g() in

the expression f()+g().

This becomes important if, for example, f() alters a global

variable that g() reads.
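If the order matters, the safe approach is to put the calls in separate statements. The sketch below uses a global named counter (my name, not the book's) that f() alters and g() reads.

```c
static int counter = 0;      /* global altered by f() and read by g() */

int f(void) { counter = 10; return 1; }
int g(void) { return counter; }

/* f()+g() could be 1+0 or 1+10 depending on which call the compiler
   makes first. Separate statements force f() to run before g(). */
int sum_f_then_g(void) {
    int a, b;

    counter = 0;
    a = f();
    b = g();
    return a + b;            /* always 11 */
}
```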

Start Lecture #3

#include <stdio.h>

void main (void) {

int x=3, y;

y = + + + + + x;

y = - + - + + - x;

y = - ++x;

y = ++ -x;

y = ++ x ++;

y = ++ ++ x;

}

Question: Which of the expressions above are

illegal?

Answer: The last three.

They apply ++ to values not variables (i.e., to r-values not

l-values).

I mention this because at the end of last time there was some

discussion about ++ ++ and ++++.

The distinction between l-values and r-values will become very

relevant when we discuss pointers.

Since pointers have presented difficulties for students in the

past, I use every opportunity to give ways of looking at the

problem.

Since ++ does an assignment (as well as an addition) it

needs a place to put the result, i.e., an l-value.

Chapter 3: Control Flow

3.1 Statements and Blocks

int t[]={1,2};

int main() {

22;

return 0;

}

C is an expression language; so 22 and x=33 have values.

One simple statement is an expression followed by a semicolon.

For example, the program above is legal.

As in Java, a group of statements can be enclosed in braces to form

a compound statement or block.

We will have more to say about blocks later in the course.

3.2 If-Else

Same as Java.

3.3 Else-If

Same as Java.

3.4 Switch

Same as Java.

3.5 Loops—While and For

#include <ctype.h>

int atoi(char s[]) {

int i, n, sign;

for (i=0; isspace(s[i]); i++) ;

sign = (s[i]=='-') ? -1 : 1;

if (s[i]=='+' || s[i]=='-')

i++;

for (n=0; isdigit(s[i]); i++)

n = 10*n + (s[i]-'0');

return sign * n;

}

Same as Java.

As we shall see, the loops in the book show the hand of a

master.

The program on the right (ascii to integer) illustrates several

points.

- The C library contains a number of string/character routines.

In this program two simple ones are used.

- The first for loop skips over leading spaces.

The loop has an empty body; this is not strange.

The "work" is done in the termination test.

- The conditional expression is used to determine the sign.

- The program depends on the ascii property that the digits are

consecutive and in numerical order.

The Comma Operator

for (i=0, j=0; i+j<n; i++,j+=3)

printf ("i=%d and j=%d\n", i, j);

If two expressions are separated by a comma, they are evaluated

left to right and the final value is the value of the one on the

right.

This operator often proves convenient in for statements

when two variables are to be incremented.
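A typical case is a loop with one index moving up and another moving down. The sketch below reverses a string in place; treat it as an illustration rather than the definitive version.

```c
#include <string.h>

/* Reverse the string s in place. */
void reverse(char s[]) {
    int c, i, j;

    /* i walks up from the front while j walks down from the back. */
    for (i = 0, j = (int) strlen(s) - 1; i < j; i++, j--) {
        c = s[i];
        s[i] = s[j];
        s[j] = c;
    }
}
```

Note that the commas separating function arguments or the variables in a declaration are not comma operators.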

3.6 Loops—Do-While

Same as Java.

3.7 Break and Continue

Same as Java.

3.8 Goto and Labels

The syntax is

goto label;

for (...) {

for (...) {

while (...) {

if (...) goto out;

}

}

}

out: printf("Left 3 loops\n");

The label has the form of a variable name.

A label followed by a colon can be attached to any statement in the

same function as the goto.

The goto transfers control to that statement.

Note that a break in C (or Java) only leaves one level of

looping so would not suffice for the example on the right.

The goto statement was deliberately omitted from Java.

Poor use of goto can result in code that is hard to

understand and hence goto is rarely used in modern

practice.

The goto statement was much more commonly used in the

past.
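Here is a small, complete sketch of the one use of goto that is still fairly common, namely leaving nested loops. The function name and its parameters are invented for this example.

```c
/* Returns 1 if arrays a (n elements) and b (m elements) share a value. */
int have_common_element(const int a[], int n, const int b[], int m) {
    int i, j;

    for (i = 0; i < n; i++)
        for (j = 0; j < m; j++)
            if (a[i] == b[j])
                goto found;     /* leaves both loops at once */
    return 0;                   /* loops finished: nothing in common */
found:
    return 1;
}
```

In this particular case a return inside the inner loop would do the same job without a goto, which is one reason goto is seldom needed.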

Homework: Write a C

function escape(char s[], char t[]) that converts the

characters newline and tab into two character sequences \n

and \t as it copies the string t to the

string s.

Use the C switch statement.

Also write the reverse function unescape(char s[], char

t[]).

Chapter 4 Functions and Program Structure

4.1 Basics of Functions

Very Simplified Unix grep

The Unix utility grep (Global Regular Expression Print) prints all

lines from standard input that contain a given string (or more generally

match a regular expression).

A very simplified version is given below.

The basic program is

while there is another line

if the line contains the string

print the line

Getting a line and seeing if there is more is done by getline();

a slightly revised version is given below.

Note that a length of 0 means EOF was reached; an "empty" line still

has a newline char '\n' and hence has length 1.

Printing the line is done by printf().

#include <stdio.h>

#define MAXLINE 100

int getline(char line[], int max);

int strindex(char source[], char searchfor[]);

char pattern[]="x y"; // "should" be input

int main(void) {

char line[MAXLINE];

int found=0;

while (getline(line,MAXLINE) > 0)

if (strindex(line, pattern) >= 0) {

printf("%s", line);

found++;

}

return found;

}

int getline(char s[], int lim) {

int c, i;

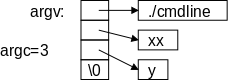

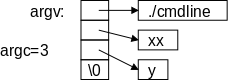

i = 0;

while (--lim>0 && (c=getchar())!=EOF && c!='\n')

s[i++] = c;

if (c == '\n')

s[i++] = c;

s[i] = '\0';

return i;

}

int strindex(char s[], char t[]) {

int i, j, k;

for(i=0; s[i]!='\0'; i++) {

for (j=i,k=0; t[k]!='\0' && s[j]==t[k]; j++,k++) ;

if (k>0 && t[k]=='\0')

return i;

}

return -1;

}

Checking to see if the string is present is the new code.

The choice made was to define a function strindex() that is

given two strings s and t and returns the position

(the index in the array) in s where t occurs.

strindex() returns -1 if t does not occur

in s.

The program is on the right; some comments follow.

- The string to look for is hardwired into the program in the

variable pattern.

We do this to avoid including a routine to read a string.

- If you think of found as a Boolean, you would expect

to see found=1; and not found++;

Actually, found counts the number of input lines that

contain pattern.

- Note the declarations of getline()

and strindex().

In particular see how their parameters are declared C-style, i.e., the code specifies what you do to each parameter

in order to get a char or int.

These are not definitions of getline()

and strindex().

They include only the header information and not the body.

The declarations describe only how to use the functions, not what

they do.

- The while inside getline() is quite nice and

replaces a for in the previous version that looked like

a while in disguise.

- Note that the assignment to c is guaranteed to be done

before c is used in the comparison.

- The outer for in strindex() loops over where you

start in s; the inner for loops over matching

successive characters.

- The inner for uses the comma operator to initialize and

increment two variables.

Cute, but I believe j is always i+k so this usage is not

needed.
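To check the claim that j is always i+k, here is a sketch of the same search written without j. The name strindex_k is mine, and the const qualifiers are an addition not in the version above.

```c
/* Index of the first occurrence of t in s, or -1 if there is none.
   Same idea as strindex(), but using s[i+k] instead of a separate j. */
int strindex_k(const char s[], const char t[]) {
    int i, k;

    for (i = 0; s[i] != '\0'; i++) {
        /* Advance k while the characters of t keep matching s. */
        for (k = 0; t[k] != '\0' && s[i+k] == t[k]; k++)
            ;
        if (k > 0 && t[k] == '\0')
            return i;
    }
    return -1;
}
```

The inner loop cannot run past the end of s: once s[i+k] is '\0' it no longer equals the non-null t[k], so the loop stops.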

Form of a Function Definition

Note that a function definition is of the form

return-type function-name(parameters)

{

declarations and statements

}

The default return type is int (a default that was removed from the language in C99), but I recommend not

utilizing this fact and instead always declaring the return

type.

The return statement is like Java.

4.2 Functions Returning Non-integers

The book correctly gives all the defaults and explains why they are

what they are (compatibility with previous versions of C).

I find it much simpler to always

- Use no defaults when defining a function.

- If it returns int say so even though that is the

default.

- If it has no parameters, write void as the

parameter list.

- Declare all functions that are used in a file.

Have these declarations early, before any function

definitions.

4.3 External Variables

A C program consists of external objects, which are either

variables or functions.

External vs. Internal

Variables and functions defined outside any function are called

external.

Variables defined inside a function are

called internal.

Functions defined inside another function would also be

called internal; however standard C does not have internal

functions.

That is, you cannot in C define a function inside another function.

In this sense C is not a fully block-structured language

(see block structure

below).

Defining External Variables

As stated, a variable defined outside functions is external.

All subsequent functions in that file will see the definition

(unless it is overridden by an internal definition).

These can be used, instead of parameters/arguments, to pass

information between functions.

It is sometimes convenient to not have to repeat a long list of

arguments common to several functions, but using external variables

has problems as well:

It makes the exact information flow harder to deduce when reading

the program.

When we solved quadratic equations in section 1.10 our second

method used external variables.

4.4 Scope Rules

The scope rules give the visibility of names in a program.

In C the scope rules are fairly simple.

Internal Names (Variables)

Since C does not have internal functions, all internal names are

variables.

Internal variables can be automatic or static.

We have seen only automatic internal variables, and this section

will discuss only them.

Static internal variables are discussed in section 4.6

below.

An automatic variable defined in a function is visible from the

definition until the end of the function (but see

block structure, below).

If the same variable name is defined internal to two functions, the

variables are unrelated.

Parameters of a function are the same as local variables in this

respect.

External Names

int main(...) {...}

int value;

float joe(...) {...}

float sam;

int bob(...) {...}

An external name (function or variable) is visible from the point

of its definition (or declaration as we shall see below) until the

end of that file.

In the example on the right main() cannot call

joe() or bob(), and cannot use either

value or sam.

bob() can call joe(), but not vice versa.

Definitions and Declarations

There can be only one definition of an external name in

the entire program (even if the program includes many files).

However, there can be multiple declarations of the same

name.

A declaration describes a variable (gives its type) but does not

allocate space for it.

A definition both describes the variable and allocates space for

it.

extern int X;

extern double z[];

extern float f(double y);

Thus we can put declarations of a variable X, an array

z[], and a function f() at the top of every file

and then X and z are visible in every function in

the entire program.

Declarations of z[] do not give its size since space is not

allocated; the size is specified in the definition.

If declarations of joe() and bob() were added

at the top of the previous example, then main() would be

able to call them.

If an external variable is to be initialized, the initialization

must be put with the definition, not with a declaration.
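The difference between a declaration and a definition can be seen even in a single file. In the sketch below (all names are made up), the functions use next_ticket before its definition appears, which is legal only because of the extern declaration at the top.

```c
extern int next_ticket;          /* declaration: no space allocated here */
int take_ticket(void);           /* declaration of a function defined below */

/* Uses next_ticket before its definition appears in the file. */
int peek_ticket(void) {
    return next_ticket;
}

/* Returns the current ticket number and advances to the next one. */
int take_ticket(void) {
    return next_ticket++;
}

int next_ticket = 100;           /* the one definition, with its initializer */
```

In a multi-file program the two declarations at the top would normally live in a header file.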

4.5 Header Files

#include <stdio.h>

double f(double x);

int main() {

float y;

int x = 10;

printf("x is %f\n", (double)x);

printf("f(x) is %f\n", f(x));

return 0;

}

double f(double x) {

return x;

}

x is 10.000000

f(x) is 10.000000

The code above shows how valuable having the types declared

can be.

The function f() is the identity function.

However, main() knows that f() takes a

double so the system automatically converts x to a

double.

No such conversion happens for printf(), whose declaration does not

give the types of the arguments after the format string.

Without the explicit cast (double) in the

first printf(), the compiler would typically give a warning about a

type mismatch and the behavior would be undefined; the program might, for example, print x is 0.000000.

I prefer to put in the casts and not have to worry about the

warnings.

It would be awkward to have to change every file in a big

programming project when a new function was added or had a change of

signature (types of arguments and return value).

What is done instead is that all the declarations are included in a

header file.

For now assume the entire program is in one directory.

Create a file with a name like functions.h containing the

declarations of all the functions.

Then early in every .c file write the line

#include "functions.h"

Note the quotes not angle brackets, which indicate that functions.h

is located in the current directory, rather than in the "standard place"

that is used for <>.

4.6 Static Variables

The adjective static has very different meanings when

applied to internal and external variables.

- For external variables, static

decreases the visibility.

- For internal variables, static

increases the lifetime.

int main(...){...}

static int b16;

void sam(...){...}

double beth(...){...}

If an external variable is defined with the static

attribute, its visibility is limited to the current file.

In the example on the right b16 is naturally visible in

sam() and beth(), but not main().

The addition of static means that if another file

has a definition or declaration of b16, with or without

static, the two b16 variables are not related.

If an internal variable is declared static, its

lifetime is the entire execution of the program.

This means that if the function containing the variable is called

twice, the value of the variable at the start of the second call is

the final value of that variable at the end of the first call.
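A call counter is the classic illustration of a static internal variable. This is a sketch with hypothetical function names; the second function is there only for contrast.

```c
/* n is initialized once, before the program starts, and keeps its value
   between calls; it is still visible only inside count_calls(). */
int count_calls(void) {
    static int n = 0;

    n++;
    return n;
}

/* For contrast: an automatic variable is re-created on every call. */
int count_calls_auto(void) {
    int n = 0;

    n++;
    return n;       /* always 1 */
}
```

Successive calls of count_calls() return 1, 2, 3, and so on.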

Static Functions

As we know there are no internal functions in standard C.

If an (external) function is defined to be static, its

visibility is limited to the current file (as for static external

variables).

4.7 Register Variables

Ignore this section.

Register variables were useful when compilers were primitive.

Today, compilers can generally decide, better than

programmers, which variables should be put in registers.

Start Lecture #4

4.8 Block Structure

Standard C does not have internal functions, that is you cannot in

C define a function inside another function.

In this sense C is not a fully block-structured language.

Of course C does have internal variables; we have used them in

almost every example.

That is, most functions we have written (and will write) have

variables defined inside them.

#include <stdio.h>

int main(void) {

int x = 5;

printf ("The value of outer x is %d\n", x);

{

int x = 10;

printf ("The value of inner x is %d\n", x);

}

printf ("The value of the outer x is %d\n", x);

return 0;

}

The value of outer x is 5

The value of inner x is 10

The value of the outer x is 5

Also C does have block structure with respect to variables.

This means that inside a block (remember that a block is a bunch of

statements surrounded by {}) you can define a new variable

with the same name as the old one.

These two variables are unrelated.

The lifetime of the new variable is just the lifetime of the

execution of the block in which it is defined.

For example, the program on the right produces the output shown.

Remark: The gcc compiler for C does permit one to

define a function inside another function.

These are called nested functions.

Some consider this gcc extension to be evil.

Homework: Write a C function

int odd (int x) that returns 1 if x is odd

and returns 0 if x is even.

Can you do it without an if statement?

4.9 Initialization

Default Initialization

Static and external variables are, by default, initialized to zero.

Automatic, internal variables (the only kind left) are

not initialized by default.

Initializing Scalar Variables

As in Java, you can write int X=5-2;.

For external or static scalars, the initializer must be a constant expression like this one.

int x=4;

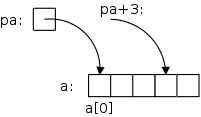

int y=x-1;

For automatic, internal scalars the initialization expression can

involve previously defined values as shown on the right (even

function calls are permitted).

Initializing Arrays

int BB[8] = {4,9,2};

int AA[] = {3,5,12,7};

char str[] = "hello";

char str[] = {'h','e','l','l','o','\0'}; // same as the previous line

You can initialize an array by giving a list of initializers as

shown on the right.

- The last 5 elements of BB are initialized to zero, since fewer initializers than elements were given.

- The size of AA is automatically 4.

- The last two are the same; the size of str is 6.
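These sizes and values are easy to check with sizeof. The sketch below uses helper names of my own choosing and makes BB an automatic array to show what a conforming compiler does with elements that have no explicit initializer: in standard C they are set to zero.

```c
int AA[] = {3, 5, 12, 7};    /* size taken from the initializer list */
char str[] = "hello";        /* five letters plus the terminating '\0' */

/* Number of elements in AA, computed from sizes in bytes. */
int aa_length(void) {
    return (int) (sizeof(AA) / sizeof(AA[0]));
}

/* Number of bytes in str, including the '\0'. */
int str_size(void) {
    return (int) sizeof(str);
}

/* Sum of the elements of BB that had no explicit initializer. */
int bb_tail_sum(void) {
    int BB[8] = {4, 9, 2};   /* automatic array: BB[3]..BB[7] are set to 0 */
    int i, sum = 0;

    for (i = 3; i < 8; i++)
        sum += BB[i];
    return sum;
}
```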

4.10 Recursion

The same as Java.

4.11 The C Preprocessor

Normally, before the compiler proper sees your program, a utility

called the C preprocessor is invoked to include files and perform

macro substitutions.

4.11.1 File Inclusion

#include <filename>

#include "filename"

We have already discussed both forms of file inclusion.

In both cases the file mentioned is textually inserted at the point

of inclusion.

The difference between the two is that the first form looks for

filename in a system-defined "standard place";

whereas the second form first looks in the current directory.

4.11.2 Macro Substitution

#define MAXLINE 20

#define MULT(A, B) ((A) * (B))

#define MAX(X, Y) (((X) > (Y)) ? (X) : (Y))

#undef getchar

We have already used examples of macro substitution similar to the

first line above.

The second line, which illustrates a macro with arguments, is more

interesting.

Without all the parentheses on the RHS, the macro would be legal,

but would (sometimes) give the wrong answers.

Question: Why?

Answer: Consider MULT(x+4, y+3)

Note that macro substitution is not the same as a

function call (with standard call-by-value or call-by-reference

semantics).

Even with all the parentheses in the third example you can get into

trouble since MAX(x++,5) can increment x twice.

If you know call-by-name from algol 60 fame, this will seem

familiar.
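Both pitfalls can be seen by expanding the macros by hand. In the sketch below BAD_MULT is a hypothetical version of MULT with the parentheses left out, and MAX is written with a full outer pair of parentheses; the function names are mine.

```c
#define MULT(A, B)     ((A) * (B))
#define BAD_MULT(A, B) A * B              /* hypothetical: parentheses omitted */
#define MAX(X, Y)      (((X) > (Y)) ? (X) : (Y))

/* MULT(x+4, y+3) expands to ((x+4) * (y+3)). */
int mult_good(int x, int y) {
    return MULT(x+4, y+3);
}

/* BAD_MULT(x+4, y+3) expands to x+4 * y+3, i.e., x + (4*y) + 3. */
int mult_bad(int x, int y) {
    return BAD_MULT(x+4, y+3);
}

/* MAX(x++, 5) expands to (((x++) > (5)) ? (x++) : (5)), so when x > 5
   the increment happens twice. Returns the final value of x. */
int x_after_max(int x) {
    int m = MAX(x++, 5);

    (void) m;                /* the result is not needed here */
    return x;
}
```

With x=2 and y=1 the good version gives 24 and the bad one gives 9; x_after_max(7) returns 9, not 8.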

We probably will not use the fourth form.

It is used to un-define a macro from a library so that you can write

another version.

There is some fancy stuff involving # in the RHS of the macro

definition.

See the book for details; I do not intend to use it.

4.11.3 Conditional Inclusion

The C-preprocessor has a very limited set of control flow items.

#if integer-expr

...

#elif integer-expr

...

#else

...

#endif

The directives above show how the preprocessor analogue of the

if (cond1)

...

else if (cond2)

...

else

...

end if

construct is written.

The individual conditions are simple integer expressions consisting

of integers, some basic operators and little else.

Perhaps the most useful additions are the preprocessor

function defined(name), which evaluates to 1 (true)

if name has been #define'd, and the ! operator,

which converts true to false and vice versa.

#if !defined(HEADER22)

#define HEADER22

// The contents of header22.h

// goes here

#endif

We can use defined(name) as shown on the right to ensure

that a header file, in this case header22.h, is included

only once.

Question: How could a header file be included

twice unless a programmer foolishly wrote the same #include

twice?

Answer: One possibility is that a user might

include two systems headers h1.h and h2.h each of

which includes h3.h.

Two other directives #ifdef and #ifndef test

whether a name has been defined.

Thus the first line of the previous example could have been written

#ifndef HEADER22.
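The effect of the guard can be seen in a single file by pasting the same guarded text twice, which is what the preprocessor effectively does when a header is included twice. This is a sketch: the enum constant HEADER22_VERSION is invented to stand in for the contents of the header.

```c
/* First copy of the (hypothetical) header text. */
#ifndef HEADER22
#define HEADER22
enum { HEADER22_VERSION = 3 };
#endif

/* Second copy, as if the header were included again.
   HEADER22 is now defined, so the preprocessor skips everything
   up to #endif and the enum constant is not defined twice. */
#ifndef HEADER22
#define HEADER22
enum { HEADER22_VERSION = 3 };
#endif

int header22_version(void) {
    return HEADER22_VERSION;
}
```

Without the guards the second enum would be a redefinition and the compiler would reject the file.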

#if SYSTEM == MACOS

#define HDR "macos.h"

#elif SYSTEM == WINDOWS

#define HDR "windows.h"

#elif SYSTEM == LINUX

#define HDR "linux.h"

#else

#define HDR "empty.h"

#define MSG "No header found for System"

#endif

#include HDR

On the right we see a slightly longer example of the use of

preprocessor directives.

Assume that MACOS, WINDOWS, and LINUX have been #define'd as distinct

integers and that SYSTEM has been set to the one naming the system

on which the current program is to be run (not

compiled).

Assume also that individual header files have been written for

macos, windows, and linux systems.

Then the code shown will include the appropriate header file.

In addition, if the SYSTEM is not one of the three on which the

program is designed to be run, the code to the right will define

MSG, a diagnostic that could be printed.

Note: The quotes used in the

various #defines for HDR are not required

by #define, but instead are needed by the final

#include.

Chapter 5 Pointers and Arrays

public class X {

int a;

public static void main(String args[]) {

int i1;

int i2;

i1 = 1;

i2 = i1;

i1 = 3;

System.out.println("i2 is " + i2);

X x1 = new X();

X x2 = new X();

x1.a = 1;

x2 = x1; // NOT x2.a = x1.a

x1.a = 3;

System.out.println("x2.a is " + x2.a);

}

}

Much of the material on pointers has no explicit analogue in Java;

it is there kept under the covers.

If in Java you have an Object obj, then obj is

actually what C would call a pointer.

The technical term is that Java has reference semantics for all

objects.

In C this will all be quite explicit.

To give a Java example, look at the snippet on the right.

The first part works with integers.

We define 2 integer variables; initialize the first; set the second

to the first; change the first; and print the second.

Naturally, the second has the initial value of the first, namely

1.

The second part deals with X, a trivial class, whose

objects have just one data component, an integer.

We mimic the above algorithm.

We define two X's and work with their integer field

(a).

We then proceed as above: initialize the first integer field; set

the second to the first; change the first; and print the second.

The result is different from the above!

In this case the second has the altered value of the first, namely

3.

The key difference between the two parts is that (in Java) simple

scalars like i1 have value semantics; whereas objects

like x1 have reference semantics.

But enough Java, we are interested in C.

5.1 Pointers and Addresses

You will learn in 202, that the OS finagles memory in ways that

would make Bernie Madoff smile.

But, in large part thanks to those shenanigans, user programs can

have a simple view of memory.

For us C programmers, memory is just a large array of consecutively

numbered addresses.

The machine model we will use in this course is that the

fundamental unit of addressing is a byte and a character

(a char) exactly fits in a byte.

Other types like short, int, double,

float, long normally take more than one byte, but

always a consecutive range of bytes.

l-values and r-values

One consequence of our memory model is that associated with

int z=5; are two numbers.

The first number is the address of the location in which z

is stored.

The second number is the value stored in that location; in this case

that value is 5.

The first number, the address, is often called the l-value; the

second number, the contents, is often called the r-value.

Why l and r?

Consider z = z + 1;

To evaluate the right hand side

(RHS) we need to add 5 to 1.

In particular, we need the value contained in the memory location

assigned to z, i.e., we need 5.

Since this value is what is needed to evaluate the RHS of an

assignment statement it is called an r-value.

Then we compute 6=5+1.

Where should we put the 6?

We look at the LHS and see that we put the 6 into z; that

is, into the memory location assigned to z.

Since it is the location that is needed when evaluating a LHS, the

address is called an l-value.

The Unary Operators & and *

As we have just seen, when a variable appears on the LHS, its

l-value or address is used.

What if we want the address of a variable that appears on the RHS;

how do we get it?

In a language like Java the answer is simple; we don't.

In C we use the unary operator & and

write p=&x; to assign the address of x

to p.

After executing this statement we say that p points to

x or p is a pointer to x.

That is, after execution, the r-value of p is the l-value

of x.

int x=3;

int *p = &x;

Look at the declarations on the right.

x is familiar; it is an integer variable initially

containing 3.

Specifically, the r-value of x is 3.

What about the l-value of x, i.e., the location in which

the 3 is stored?

It is not an int; it is an address into which

an int can be stored.

Alternately said, it is a pointer to an int.

The unary prefix operator & produces the address of a

variable, i.e., &x gives the l-value of x, i.e. it

gives a pointer to x.

The unary operator * does the reverse action.

When * is applied to a pointer, it gives the value of the

object (object is used in the English not OO sense) pointed to.

The * operator is called the dereferencing or

indirection operator.

Now look at the declaration of p, which says

that p is the kind of thing that when you apply *

to it you get an int, i.e., p is a pointer to an

int.

That is why we can initialize p to &x.
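Here is a minimal sketch of & and * at work, once with *p on the right hand side and once on the left. The function names are made up for the illustration.

```c
/* Reads x through a pointer: *p on the right hand side gives x's value. */
int read_through_pointer(void) {
    int x = 3;
    int *p = &x;     /* p holds the address (l-value) of x */

    return *p;       /* 3 */
}

/* Writes x through a pointer: *p on the left hand side names x's location. */
int write_through_pointer(void) {
    int x = 3;
    int *p = &x;

    *p = 7;          /* stores 7 into x */
    return x;        /* 7 */
}
```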

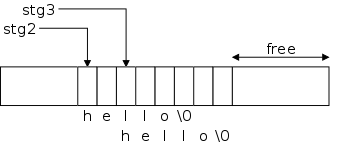

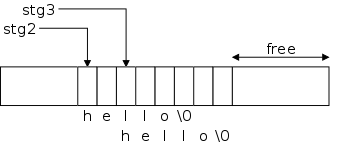

// part one of three

int x=1;

int y=2;

int z[10];

int *ip;

int *jp;

ip = &x;

Consider the code sequence on the right (part one).

The first 3 lines we have seen many times before; the next three are

new.

Recall that in a C declaration, all the doodads around a variable

name tell you what you must do to the variable to get the base type at

the beginning of the line.

Thus the fourth line says that if you dereference ip you

get an integer.

Common parlance is to call ip an integer pointer (which is

why we named it ip).

Similarly, jp is another integer pointer.

At this point both ip and jp are uninitialized.

The last line sets ip to the address of x.

Note that the types match: both ip and &x are

pointers to an int.

// part two of three

y = *ip; // L1

*ip = 0; // L2

ip = &z[0]; // L3

*ip = 0; // L4

jp = ip; // L5

*jp = 1; // L6

In part two, L1 sets y=1 as follows: ip now points

to x, * does the dereference so *ip is x.

Since we are evaluating the RHS, we take the contents not the

address of x and get 1.

L2 sets x=0;.

The RHS is clearly 0.

Where do we put this zero?

Look at the LHS:

ip currently points to x, * does a dereference

so *ip is x.

Since we are on the LHS, we take the address and not the contents of

x and hence we put 0 into x.

L3 changes ip; it now points to z[0].

So L4 sets z[0]=0;

Pointers can be used without the dereferencing operator.

L5 sets jp to ip.

Since ip currently points to z[0], jp

does as well.

Hence L6 sets z[0]=1;
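Parts one and two can be run together to confirm the walkthrough.
This is only a sketch: the wrapper function parts_one_and_two and the assert() calls are my additions, and each assert records the state that the text claims after that line.

```c
#include <assert.h>

/* Hypothetical wrapper around parts one and two; returns x + y + z[0]. */
int parts_one_and_two(void) {
    /* part one */
    int x = 1;
    int y = 2;
    int z[10];
    int *ip;
    int *jp;
    ip = &x;

    /* part two */
    y = *ip;       assert(y == 1);                  /* L1: y gets the contents of x  */
    *ip = 0;       assert(x == 0);                  /* L2: store 0 into x            */
    ip = &z[0];                                     /* L3: ip now points to z[0]     */
    *ip = 0;       assert(z[0] == 0);               /* L4: store 0 into z[0]         */
    jp = ip;       assert(jp == &z[0]);             /* L5: copy the pointer itself   */
    *jp = 1;       assert(z[0] == 1 && *ip == 1);   /* L6: both pointers see the 1   */

    return x + y + z[0];                            /* 0 + 1 + 1 */
}
```

Note that after L6 both *ip and *jp are 1, because the two pointers refer to the same int.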

// part three of three

ip = &x; // L1

*ip = *ip + 10; // L2

y = *ip + 1; // L3

*ip += 1; // L4

++*ip; // L5

(*ip)++; // L6

*ip++; // L7

Part three begins by re-establishing ip as a pointer

to x, so L2 increments x by 10 and L3

sets y=x+1;.

L4 increments x by 1 as does L5

(because the unary operators ++ and * are right

associative).

L6 also increments x, but L7 does not.

By right associativity *ip++ means *(ip++), so the increment

applies to the pointer ip rather than to x.

The full story awaits section 5.4

below.
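Here is a sketch that checks part three line by line.
It assumes x is 0 on entry (its value after part two), and it changes L7 to y = *ip++; so that the value read is visible; the wrapper function part_three and the asserts are my additions.

```c
#include <assert.h>

/* Hypothetical wrapper around part three; returns the final value of x. */
int part_three(void) {
    int x = 0;      /* assumed: the value x holds after part two */
    int y = 1;
    int *ip;

    ip = &x;                                /* L1 */
    *ip = *ip + 10;   assert(x == 10);      /* L2 */
    y = *ip + 1;      assert(y == 11);      /* L3 */
    *ip += 1;         assert(x == 11);      /* L4 */
    ++*ip;            assert(x == 12);      /* L5: ++(*ip) increments x  */
    (*ip)++;          assert(x == 13);      /* L6: also increments x     */

    y = *ip++;        /* L7: *(ip++) reads x, then advances the pointer */
    assert(x == 13 && y == 13);             /* x itself is unchanged */
    assert(ip == &x + 1);                   /* ip now points one past x */

    return x;
}
```

After L7 the pointer no longer points to x, so it must not be dereferenced again until it is reassigned.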

5.2 Pointers and Function Arguments

    void bad_swap(int x, int y) {
        int temp;
        temp = x;
        x = y;
        y = temp;
    }

The program above is what a novice programmer just learning C

(or Java) would write.

It is supposed to swap the two arguments it is called with, but

fails due to call by value semantics for function calls in C.

What happens is that when another function calls bad_swap(a,b),

the values of the arguments a

and b are transmitted to the parameters x

and y and then bad_swap() interchanges the values

in x and y.

But when bad_swap() returns, the final values

in x and y are NOT transmitted

back to the arguments: a and b are unchanged.

But programs that change their arguments are useful!

Actually, what is useful is to be able to change the value of

variables used in the caller (even if some other

variables

become the arguments) and that distinction is the key.

Just because we want to swap the values of a

and b, doesn't mean the arguments have to be literally

a and b.
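The failure is easy to observe.
In the sketch below, bad_swap() is repeated from the text, while the caller bad_swap_leaves_caller_unchanged and the values 5 and 8 are my own choices for illustration.

```c
#include <assert.h>

/* Repeated from the text: swaps only its own local copies. */
void bad_swap(int x, int y) {
    int temp;
    temp = x;
    x = y;
    y = temp;
}

/* Hypothetical caller: returns 1 if a and b are unchanged after the call. */
int bad_swap_leaves_caller_unchanged(void) {
    int a = 5, b = 8;
    bad_swap(a, b);     /* only the values 5 and 8 are transmitted */
    return a == 5 && b == 8;
}
```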

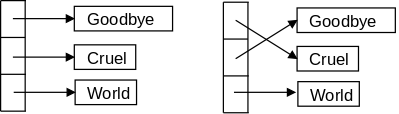

    void swap(int *px, int *py) {
        int temp;
        temp = *px;
        *px = *py;
        *py = temp;
    }

The program above has two parameters px and

py each of which is a pointer to an integer (*px

and *py are the integers).

Since C is a call-by-value language, changes to the parameters,

which are the pointers px and py

would not result in changes to the corresponding arguments.

But the program above doesn't change the pointers at all;

instead it changes the values they point to.

Since the parameters are pointers to integers, so must be the

arguments.

A typical call to this function would

be swap(&A,&B).

Understanding how this call results in A receiving the

value previously in B and B receiving the value

previously in A is crucial.
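A sketch of such a call follows.
swap() is repeated from the text; the caller swap_demo and the values 5 and 8 are assumptions made for illustration.

```c
#include <assert.h>
#include <stdio.h>

/* Repeated from the text: swaps the ints that px and py point to. */
void swap(int *px, int *py) {
    int temp;
    temp = *px;
    *px = *py;
    *py = temp;
}

/* Hypothetical caller: returns 1 if A and B really were exchanged. */
int swap_demo(void) {
    int A = 5, B = 8;
    swap(&A, &B);       /* pass the addresses of A and B, not their values */
    printf("A = %d, B = %d\n", A, B);
    return A == 8 && B == 5;
}
```

The arguments &A and &B are still passed by value; what differs from bad_swap() is that those values are addresses, so the callee can reach A and B themselves.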

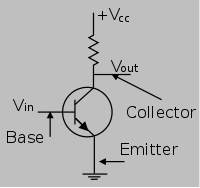

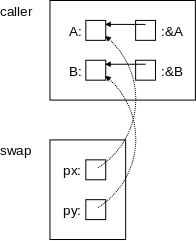

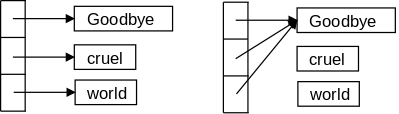

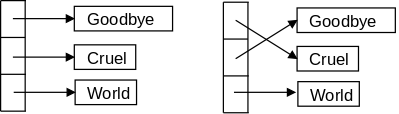

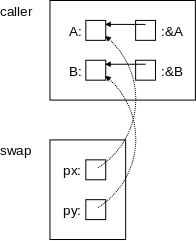

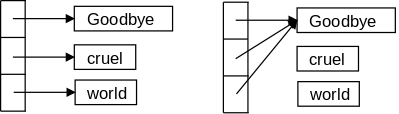

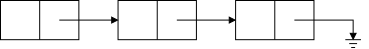

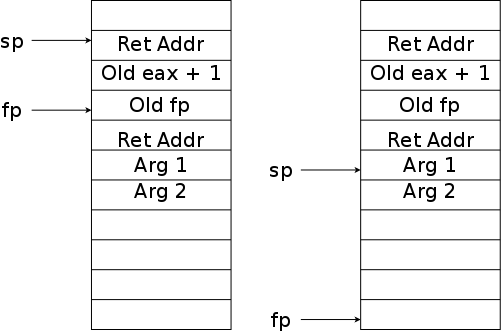

Above is a pictorial explanation.

A has a certain address.

&A equals

that address (more precisely the

r-value of &A = the l-value of A).

Similarly for B and &B.

These are shown by the solid arrows in the diagram.

The call swap(&A,&B) copies (the r-value

of) &A into (the r-value of) the first parameter, which

is px.

Similarly for &B and the second parameter, py.

These are shown by the dotted arrows.

Thus the value of px is the address of A, which is

indicated by the arrow.

Again, to be pedantic, the r-value of px equals the r-value

of &A, which equals the l-value of A.

Similarly for B and py.

Swapping px with py would change the dotted

arrows, but would not change anything in the caller.

However, we don't swap px with py; instead we

swap *px with *py.

That is, we dereference the pointers and swap the things pointed to!

This subtlety is the key to understanding the effect of many C

functions.

It is crucial.
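To see the distinction concretely, consider a hypothetical variant that swaps the pointers themselves and never dereferences them.
The name swap_pointers_only and the caller below are mine, not from the text.

```c
#include <assert.h>

/* Hypothetical: exchanges the parameters px and py (the "dotted arrows"),
   but never touches the ints they point to. */
void swap_pointers_only(int *px, int *py) {
    int *temp = px;
    px = py;
    py = temp;
}

/* Returns 1 if the caller's A and B are unchanged after the call. */
int pointers_only_demo(void) {
    int A = 5, B = 8;
    swap_pointers_only(&A, &B);
    return A == 5 && B == 8;
}
```

Because px and py are local copies of the addresses, exchanging them has no effect once the function returns, just as with bad_swap().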

Homework: Write rotate3(A,B,C) that sets

A to the old value of B, sets B to old

C, and C to old A.

Homework: Write plusminus(x,y) that sets

x to old x + old y and sets y

to old x - old y.

Start Lecture #5

A Larger Example—getch(), ungetch(), and getint()

The pair of functions getch() and ungetch() generalizes

getchar() by supporting the notion of unreading

characters, i.e., pushing back one or more characters that have already

been read.

Note that ungetch() is careful not to exceed the size of

the buffer used to store the pushed back characters.

Remember that C does not generate run-time checks that you are not

accessing an array beyond its bound.

Recall I mentioned that in the past a number of break-ins were

caused by the lack of such checks in library programs like this.

    #include <stdio.h>

    #define BUFSIZE 100

    char buf[BUFSIZE];
    int bufp = 0;

    int getch(void);
    void ungetch(int);
    int getint(int *pn);

    int getch(void) {
        return (bufp>0) ? buf[--bufp] : getchar();
    }

    void ungetch(int c) {
        if (bufp >= BUFSIZE)
            printf("ungetch: too many chars\n");
        else
            buf[bufp++] = c;
    }
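The following sketch exercises the pair without reading any real input.
getch() and ungetch() are repeated from the listing above so that the block stands alone; the two demo functions are my additions.
The first shows that pushed back characters come back in last-in, first-out order, and the second shows the bounds check refusing to overfill the buffer.

```c
#include <assert.h>
#include <stdio.h>

#define BUFSIZE 100

char buf[BUFSIZE];      /* pushed back characters */
int bufp = 0;           /* next free position in buf */

int getch(void) {
    return (bufp > 0) ? buf[--bufp] : getchar();
}

void ungetch(int c) {
    if (bufp >= BUFSIZE)
        printf("ungetch: too many chars\n");
    else
        buf[bufp++] = c;
}

/* Hypothetical demo: returns 1 if characters come back in LIFO order. */
int pushback_demo(void) {
    int first, second;
    ungetch('a');
    ungetch('b');
    first = getch();        /* the most recently pushed character, 'b' */
    second = getch();       /* then 'a' */
    return first == 'b' && second == 'a' && bufp == 0;
}

/* Hypothetical demo: returns 1 if ungetch() never writes past the buffer. */
int overflow_demo(void) {
    int i, stored;
    for (i = 0; i < BUFSIZE + 5; i++)
        ungetch('x');       /* the last 5 calls only print the warning */
    stored = bufp;
    bufp = 0;               /* discard the pushed back characters */
    return stored == BUFSIZE;
}
```

Since both demos leave the buffer empty, a later getch() falls through to getchar() as usual.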

#include <stdio.h>

#include <ctype.h>

int getint(int *pn) {

int c, sign;

while (isspace(c=getch())) ;

if (!isdigit(c) && c!=EOF && c!='+' && c!='-') {

ungetch(c);

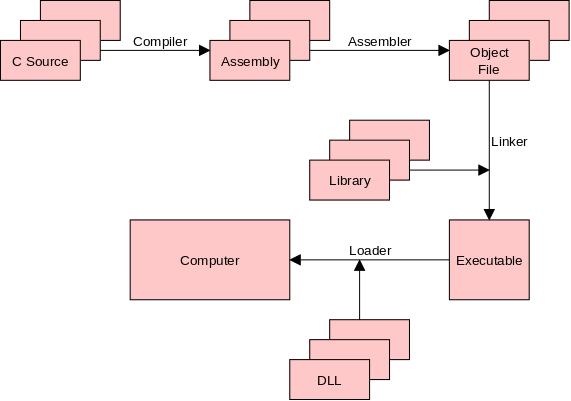

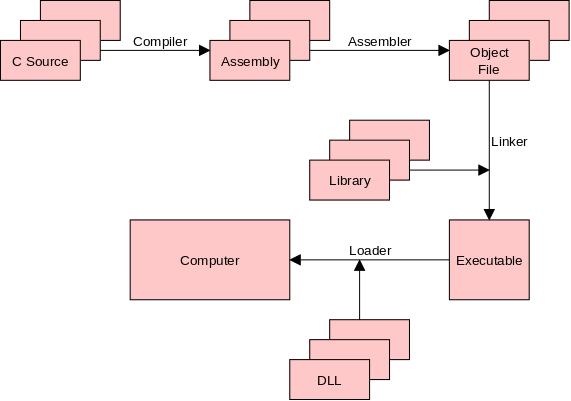

return 0;