Start Lecture #1

I start at 0 so that when we get to chapter 1, the numbering will agree with the text.

There is a web site for the course. You can find it from my home page, which is http://cs.nyu.edu/~gottlieb

The course text is Weiss,

Data Structures and Algorithm Analysis in Java

.

It is available in the NYU Bookstore.

(If you buy it elsewhere be careful with the title, Weiss has another

book that also covers data structures and java.)

Replyto contribute to the current thread, but NOT to start another topic.

top post, that is, when replying, I ask that you either place your reply after the original text or interspersed with it.

musttop post.

Grades are based on the labs, the midterm, and the final exam, with each very important. (but see homeworks below).

I use the upper left board for lab/homework assignments and announcements. I should never erase that board. Viewed as a file it is group readable (the group is those in the room), appendable by just me, and (re-)writable by no one. If you see me start to erase an announcement, let me know.

I try very hard to remember to write all announcements on the upper left board and I am normally successful. If, during class, you see that I have forgotten to record something, please let me know. HOWEVER, if I forgot and no one reminds me, the assignment has still been given.

I make a distinction between homeworks and labs.

Labs are

Homeworks are

Homeworks are numbered by the class in which they are assigned. So any homework given today is homework #1. Even if I do not give homework today, the homework assigned next class will be homework #2. Unless I explicitly state otherwise, all homeworks assignments can be found in the class notes. So the homework present in the notes for lecture #n is homework #n (even if I inadvertently forgot to write it to the upper left board).

I feel it is important for majors to be familiar with basic

client-server computing (nowadays sometimes called

cloud computing

in which you develop on a client machine

(likely your personal laptop), but run programs on a remote server

(for us, most likely i5.nyu.edu).

You submit the jobs from the server and your final product remains

on the server (so that we can check dates in case we lose you

lab).

You may solve lab assignments on any system you wish, but ...

request receiptfeature from home.nyu.edu or mail.nyu.edu and select the

when deliveredoption.

I sent it ... I never received itdebate. Thank you.

I believe you all have accounts on i5.nyu.edu. Your username and password should be the same as on home.nyu.edu (at least that works for me).

Good methods for obtaining help include

You labs must be written in Java.

Incomplete

The rules for incompletes and grade changes are set by the school and not the department or individual faculty member. The rules set by CAS can be found in http://cas.nyu.edu/object/bulletin0608.ug.academicpolicies.html. They state:

The grade of I (Incomplete) is a temporary grade that indicates that the student has, for good reason, not completed all of the course work but that there is the possibility that the student will eventually pass the course when all of the requirements have been completed. A student must ask the instructor for a grade of I, present documented evidence of illness or the equivalent, and clarify the remaining course requirements with the instructor.

The incomplete grade is not awarded automatically. It is not used when there is no possibility that the student will eventually pass the course. If the course work is not completed after the statutory time for making up incompletes has elapsed, the temporary grade of I shall become an F and will be computed in the student's grade point average.

All work missed in the fall term must be made up by the end of the following spring term. All work missed in the spring term or in a summer session must be made up by the end of the following fall term. Students who are out of attendance in the semester following the one in which the course was taken have one year to complete the work. Students should contact the College Advising Center for an Extension of Incomplete Form, which must be approved by the instructor. Extensions of these time limits are rarely granted.

Once a final (i.e., non-incomplete) grade has been submitted by the instructor and recorded on the transcript, the final grade cannot be changed by turning in additional course work.

This email from the assistant director, describes the policy.

Dear faculty,

The vast majority of our students comply with the

department's academic integrity policies; see

www.cs.nyu.edu/web/Academic/Undergrad/academic_integrity.html

www.cs.nyu.edu/web/Academic/Graduate/academic_integrity.html

Unfortunately, every semester we discover incidents in

which students copy programming assignments from those of

other students, making minor modifications so that the

submitted programs are extremely similar but not identical.

To help in identifying inappropriate similarities, we

suggest that you and your TAs consider using Moss, a

system that automatically determines similarities between

programs in several languages, including C, C++, and Java.

For more information about Moss, see:

http://theory.stanford.edu/~aiken/moss/

Feel free to tell your students in advance that you will be

using this software or any other system. And please emphasize,

preferably in class, the importance of academic integrity.

Rosemary Amico

Assistant Director, Computer Science

Courant Institute of Mathematical Sciences

You should have taken 101 (or if not should have experience in Java programming).

A goal of this course is to improve your Java skills. We will not be learning many new aspects of Java. Instead, we will be gaining additional experience with those parts of Java taught in 101.

The primary goal of this course is to learn several important data structures and to analyze how well they perform. I do not assume any prior knowledge of this important topic.

A secondary goal is to learn and practice a little client-server computing. My section of 101 did this, but I do not assume you know anything about it.

Confirm that everyone with putty/ssh installed has an account on i5.nyu.edu.

Homework: For those of you with Windows clients, get putty and winscp. They are definitely available on the web at no cost. The Putty software for Windows 7 is not available through ITS. However it is available free online via http://bit.ly/hy9IZj.

I will do a demo on thursday of this software so please do install it by then so you can verify that it works for you.

In 101 our goal was to learn how to write correct programs in Java.

Step 1, the main step in 101, was to learn how to write anything in Java.

We did consider a few problems (e.g., sorting) that required some thought to determine a solution, independent of the programming language, but mostly we worried about how to do it in Java.In this course we will go beyond getting a correct program and seek programs that are also high performance, i.e., that have comparatively short running time even for large problems.

Of course we can not sacrifice correctness for performance, but sometimes we will sacrifice simplicity for performance.

public class Max {

public int max(int n, int[]a) {

// assumes n > 0

int ans = a[0];

for (int i=0; i<n; i++)

if (a[i] > ans)

ans = a[i];

return ans;

}

}

Assume you have N numbers and want to find the kth largest. If k=1 this is simply the minimum; If k=N this is simply the maximum; If k=N/2 this is essentially the median.

To do maximum you would read the N numbers in to an array and call a program like the one on the right. Minimum is the same simple idea.

Both of these are essentially optimal: To find the max, we surely need to examine each number so the work done will be proportional to N and the program above is proportional to N.

The median is not so simple to do optimally. One method is fairly obvious and works for any k: Read the elements into an array; sort the array; select the desired element.

It might seem silly to sort all N elements since we don't care about the order of all those past the median. So we could do the following.

The trouble is that neither method is optimal. For large N, say 10,000,000 and k near N/2, both take a long time; whereas a more complicated algorithm is very fast.

In mathematics log(x) means loge(x), but in computer science, including this course, log(x) means log2(x).

We will not be considering complex numbers.

When we write logA(B), we assume B>0, A>0, and A≠1.

To me the first question is

what does ab actually mean?

.

One thing is certain: 6π does

NOT mean that you multiply 6 by itself π times.

Since this is not a math course the explanation I will give is unofficial (and is not in these notes).

XA+B = XAXB

XA-B = XA / XB

XA*B = (XA)B

XAB = X(AB)

(this is the definition)

20=1, 210=1024, 220=10242

logX(B)=A means XA=B

(definition??)

logA(B) = log(B) / log(A)

(definition??)

logA(B) = logC(B) / logC(A)

(a more general version of the previous line)

log(A*B) = log(A) + log(B)

log(A/B) = log(A) - log(B)

log(AB) = B*log(A)

log(X) < XX (clear for positive integers)

A>B ⇒ log(A)>log(B)

log(1) = 0, log(2) = 1, log(1024) = 10 log(10242)=20

A series is where you sum many (possibly infinitely many) numbers.

An important formula is the sum of a so-called geometric

series, i.e., a series where each term equals the previous one times

a fixed number (A in the formula below)

I don't know how to get summation formulas to print nicely in html.

If you can solve this problem, please let me know.

ΣNi=0 Ai = (AN+1-1) / (A-1)

It is easy to extend and specialize this formula.

Now each term differs equals the previous one plus a fixed number.

If that fixed number is 1 and we start at 1 the formula is

ΣNi=1 i = N*(N+1) / 2

I prefer the proof that says add the numbers in pairs, one from each end. Each pair sums to N+1 and there are N/2 pairs.

Again you can generalize to an arbitrary fixed number and starting point. So the sum

7 + 12 + 17 + 22 + 27 + 32 + 37 + 42 + 47 = 54 * (9/2)

The second approximation below fails for k=-1 so we use

the third, which might remind you of integrating 1/x.

ΣNi=1 i2 = N*(N+1)*(2N+1)/6

ΣNi=1 ik ≅

Nk+1/|k+1|

ΣNi=1 1/i ≅ loge(N)

Start Lecture #2

Lab 1, Part 1 is assigned and is due in 7 days. It consists of sending an email with the correct subject to the grader.

Homework: 1.1.

We say A is congruent to B modulo N, written A≡B(mod N), if N divides A-B evenly (i.e., with no remainder). This is the same as saying that A and B have the same remainder when divided by N.

From the definition it is easy to see that if A≡B(mod N) then

A three step procedure for showing that a statement S(N) is true for all positive integers N.

As an example we show that ΣNi=1 i2 = N*(N+1)*(2N+1)/6

The base case for N=1 is trivial 1=1*2*3/6.

Assume true for N≤k.

To prove for N=k+1 we apply the inductive hypothesis to

show that

Σk+1i=1 i2 =

Σki=1 i2 +

(k+1)2 =

k(k+1)(2k+1)/6 + (k+1)2

Algebra shows that this equals (k+1)((k+1)+1)(2(k+1)+1)/6 as desired.

You just need one case to show that a statement is false. To show that x2>x is false we just plug in x=1 and see that 12>1 is false.

To show something is true, we assume it is false and derive a contradiction. Perhaps the most famous example is Euclid's proof that there are infinitely many primes.

public class Recurse {

public static double recurse(int x) {

// assume x >= 0

return (x < 2) ? x+1.0 : recurse(x-1)*recurse(x-2);

}

public static void main(String[] argv) {

for(int i=0; i<20; i++)

System.out.printf("recurse(%d)=%f\n", i, recurse(i));

}

}

For many Java methods, executing the method does not result in calling the method. For example executing the max() method above does not result in calling the max() method.

Consider the code on the right. The recurse() method implements the mathematical function f(0)=1, f(1)=2, f(x)=f(x-1)*f(x-2) for x>1. Note that executing recurse(2) results in a call of recurse(1) and a call of recurse(0). This is called recursion.

At first blush both the mathematical definition and the Java program look nonsensical. How can we define something in terms of itself?

It is OK because

closerto a base case than x is. That is, eventually we reach a base case and then get a definite answer.

You might find the output of this class amusing.

Very often recursive programs are shown to be correct by

mathematical induction.

Indeed, we find the term base case

in both.

Just as we assume the inductive hypothesis when proving a theorem by induction, we assume the recursive call is correct when designing a recursive program.

closerto a base case than the original call.

Our recurse() method violates the fourth rule. This violation can be seen easily: f(3)=f(2)*f(1)=f(1)*f(0)*f(1). Indeed, the recurse() method can be easily rewritten to not be recursive.

(define f

(lambda (x)

(if (< x 2)

(+ x 1)

(* (f (- x 1)) (f (- x 2))))))

For fun, I rewrote recurse in the programming language Scheme since

that language has built-in support for bignums

.

The Scheme code for recurse is shown on the right.

Note that in Scheme, the operator precedes the operands.

When I asked the Scheme interpreter to evaluate (f 20), the response was click here.

Even more fun was triggered when I stumbled across the java

BigInteger class.

I found

this class as I was going through the great online

resource http://java.sun.com/javase/6/docs/api.

(Now that Sun Microsystems has been bought by Oracle, this site

redirects you to http://down.oracle/com/javase/6/docs/api.

But do not be fooled, Java was created

at Sun and, at least

for me will always be associated with Sun).

So I modified the Recurse class above to the add a bigRecurse() method analogous to the recurse() method above. I didn't find a way to use infix operators + and * so the code, shown below looks bigger, but it really is not. The one deviation I made was to use if-then-else and not ?-: since that would have led to one huge line.

The output is here.

import java.math.BigInteger;

public class Recurse {

public static BigInteger bigRecurse(BigInteger x) {

// assume x >=0

BigInteger TWO = new BigInteger("2");

if (x.compareTo(TWO) < 0)

return x.add(BigInteger.ONE);

else

return bigRecurse(x.subtract(BigInteger.ONE)).multiply(bigRecurse(x.subtract(TWO)));

}

public static double recurse(int x) {

// assume x >= 0

return (x < 2) ? x+1 : recurse(x-1)*recurse(x-2);

}

public static void main(String[] argv) {

for(Integer i=0; i<21; i++) {

System.out.printf(" recurse(%d)=%1.0f\n", i, recurse(i));

System.out.printf("bigRecurse(%d)=%s\n", i,

bigRecurse(new BigInteger(i.toString())).toString());

}

}

}

Homework: 1.5

A generic method is one that works for objects of different types.

Please recall from 101 what it means for a method name to be overloaded, and what it means for one method to override another.

In both overloading and overriding we have two (or more) methods with the same name and wish to choose the correct one for the situation. For genericity, we wish to have just one function that functions correctly in two (or more) situations, i.e., can be applied with two different signatures.

Naturally, we cannot write a squareRoot() method and have it apply to rectangle objects. An example of what we seek is to write one sort() method and have it apply to an array of integers and to an array of doubles.

If two classes C1 and C2 are both subclasses of a superclass S (i.e., C1 and C2 are derived from S), then genericity can be achieved by simply defining the method to have a parameter of type S and then the method can automatically accept an argument of type C1 or of type C2.

public class MemoryCell {

private Object storedValue;

public Object read() {

return storedValue;

}

public void write (Object x) {

storedValue = x;

}

}

This seems too easy! Since the class Object is a superclass of every class, if we define our method to have an Object parameter, then it can have any (not quite, see below) argument at all.

The trouble is the method is limited to just those operations that are defined on Object. So we can do only very simple generic things as illustrated on the right.

Also when another method M() invokes read() it obtains an Object. It the actual item stored is say a Rectangle, then M must downcast the value returned.

Homework: 1.13. Assume no errors occur (items to remove are there, the array doesn't overflow, etc).

While it is true that all Java objects are members of the class Object, there are values in Java that are not objects, namely values of the 8 primitive types (byte, char, short, int, long, boolean, float, double).

public class WrapperDemo {

public static void main(String[]arg) {

MemoryCell mC = new MemoryCell();

mC.write(new Integer(10));

Integer wrappedX = (Integer)mC.read();

int x = wrappedX.intValue();

System.out.println(x);

}

}

In order to accommodate these values, Java has a

wrapper type

for each of the primitives, namely

(Byte, Character, Short, Integer, Long, Boolean, Float, Double).

The code on the right shows how to use the wrapper class to enable MemoryCell to work with integer values. We will see shortly that this manual converting between int and Integer values can be done automatically by the compiler.

public class GenSortDemo {

public static void sort(Comparable[] a) {

for(int i=0; i<a.length-1; i++)

for (int j=i+1; j<a.length; j++)

if (a[i].compareTo(a[j]) > 0) {

Object temp = a[i];

a[i] = a[j];

a[j] = (Comparable)temp;

}

}

public static void main(String[]arg) {

String[] sArr ={"one","two","three","four"};

Integer[] iArr = { 5, 3, 8, 0 };

sort(sArr);

sort(iArr);

for (int i=0; i<4; i++)

System.out.println(iArr[i]+" "+ sArr[i]);

}

}

0 four

3 one

5 three

8 two

As mentioned, a method cannot do much with an Object. Our example just did load and store.

The next step us is to make use of Java interfaces. A class can be derived from only one full-blown class (Java, unlike C++, has single inheritance). However a class can inherit from many interfaces.

Recall from 101 that a Java interface is a class in which all methods are abstract (i.e., have no implementation) and all data fields are constant (static final in Java-speak). If a (non-abstract) class inherits from an interface, then the class must actually implement all the methods defined in the interface.

Consider an important interface called Comparable, which has no data fields and only one method, the instance method int compareTo(Object o). The intention is that compareTo() returns a negative/zero/positive value if the instance object is </=/> the argument.

Thus, if we write a method that accepts a bunch of Comparable objects, we can compare them, which enables sorting as shown on the right.

Remarks:

Start Lecture #3

Lab 1, Part 2 assigned. Due in 7 days.

Suppose class D is derived from C.

Then a D object IS-A C object.

Question: True or false, a D[] object IS-A

C[] object.

In Java the answer is true. The technical term is that Java has a covariant array type.

This can cause trouble when you have also E derived from C. It might be more logical to not have covariant array types, but, before generics, useful constructs would be illegal. See the book for details.

public class GenericMemoryCell<T> {

private T storedValue;

public T read() {

return storedValue;

}

public void write(T x) {

storedValue = x;

}

}

public class GenericMemoryCellDemo {

public static void main(String[]arg) {

String s1 = "str", s2;

Integer i;

GenericMemoryCell<String> gMC =

new GenericMemoryCell<String>();

gMC.write(s1);

s2 = gMC.read();

// i = gMC.read(); Compile(!!) error

System.out.println(s2);

}

}

Look back at our code for the MemoryCell class. All the data were Objects. The good news is that we only wrote the code once and could use it to store a value of any class. The bad news is that if we in fact stored a String, read it and downcast the value to a Rectangle the error would not be caught until run time.

With Java 1.5 generics, the equivalent error would be caught at compile time. For example consider the code at the right.

The first frame shows the generic class.

The T inside <> is a type parameter

.

That is this generic class is a template for an infinite number of

instantiations where T is replaced by any class.

In the second frame we see a use of this class with T

replaced by String.

The resulting object gMC can only be used to store and read

a String.

The commented line listed as an error would be erroneous in the

first implementation as well.

The difference is that the error would be caught only at run time

when the Object stored is downcast to a String.

The downsides to the java 1.5 approach are

public class WrapperDemo15 {

public static void main(String[]arg) {

MemoryCell mC = new MemoryCell();

mC.write(10);

int x = (Integer)mC.read();

System.out.println(x);

}

}

Another addition in Java 5 is automatic boxing and unboxing; that is, the compiler now converts between int and Integer automatically (also for the other 7 primitive types).

As an example, the WrapperDemo can actually be written in the simplified form shown on the right.

When we used <T> above the type T was arbitrary. Sometimes we want to limit the possible types for T. For example, imagine wanting to limit T to be a GeometricObject or one of its subclasses Point, Rectangle, Circle, etc. Java has syntax to limit these types to any type extending another type S (i.e., require T be a subtype of S). Java also has syntax to require T to be a supertype of S.

This material and the remainder of chapter, may well prove important later in the course. But it seems like too much new Java to introduce without any serious examples to use it. So we will revisit the topics later if needed.

We will return to this in section 4.3, later in the course.

Caused by the type erasure implementation.

We will go light on this material in 102. It is done more seriously in 310, Basic Algorithms. We write functions as though they are applied to real numbers, e.g., f(x)=xe, but are mostly interested in evaluating these functions for x a positive integer. For this reason we normally use N or n instead of x or X for the variable. We also are really only interested in functions whose values are positive.

Our primary interest is for N to be the size of a problem and f(N) to be the time it takes to run the problem. As a result we often use T for the function name to remind us that we are discussing the time needed to execute a program and not the value it computes.

We want to capture the concept of comparing function growth where

we ignore additive and multiplicative constants.

For example we want to consider 4N2-500N+1 to be

equivalent

as 50N2+1000N and want to

consider either of them to be bigger than

1000N1.5log(N).

Definition: A function T() is said to be big-Oh of f() if there exists constants c and n0 such that T(N)≤cf(N) whenever N≥n0. We write T=O(f).

Definition: A function T() is said to be big-Omega of f() if f=O(T). We write T=Ω(f).

Definition: A function T() is said to be Theta of f() if T=O(f) and T=Ω(f). We write T=Θ(f).

Definition: A function T() is said to be little-Oh of f() if T=O(f) but T≠Θ(f). We write T=o(f).

Definition: A function T() is said to be little-Omega of f() if f=o(T). We write T=ω(f).

We are trying to capture the growth rate of a function.

Note: We don't write O(4N2+5). Instead we would write O(N2), which is equivalent since 4N2+5=Θ(N2).

Note:

Here is an easy way to see that the order (big O or big Theta) of

any polynomial is just the highest term.

Take for example

31N4+1030N3+N2+876.

To show this is O(N4) it suffices to show that, for

large N, the above is less than

32N4.

Take 4 derivatives of both the original and

32N4.

The original gives 31(4!), 32N4 gives

32(4!).

So 32N4 always has a bigger 4th

derivative.

So for big N it has a bigger 3rd derivative.

So for bigger N, it has a bigger 2nd derivative.

So for yet bigger N, it has a bigger 1st derivative.

So for even yet bigger N, it is bigger.

Done.

End of Note

Homework: 2.1, 2.2, 2.5, 2.7a(1)-(5) (remind me to do this one in class).

Lab 1, Part 3: 2.7b and 2.7c (again (1)-(5)). Due 7 days after next lecture, when we go over 2.7a

We use a simple model, with infinite memory and where all simple operations take one time unit.

We study the running time, which by our model is the number of instructions executed. For this reason we often write T(N) instead of f(N). For a given algorithm, the running time normally depends on the size of the input. Indeed, it is the size of the input to the algorithm that is the N in our formulas and T(N) is the number of instructions used by the algorithm on an input of size N.

But wait, some algorithms take longer (sometimes much longer) on some inputs than on others. Normally, we study Tworst(N), the worst-case time, i.e., the max of the times the algorithm takes over all possible inputs of size N.

Often more useful, but normally harder to compute, is the average time over all inputs of size N.

Normally less useful is the best-case performance, i.e., the least time over all inputs of size N.

public static int sum(int n) {

int ans = 0;

for (int i=0; i<n; i++)

ans += i*i*i:

return ans;

}

On the right we see a simple program to compute ΣN-1i=0i3

The loop is executed N times (the code use n (lower case) since that is the Java convention). The body has 2 multiplications, one addition, and one assignment (this is really quite primitive; probably the sum does the assignment). Thus the body requires 4N instruction.

The loop control requires one instruction to initialize i, N+1 tests (N succeed the last one fails) and N increments. This gives 2N+2.

So the total, using our crude analysis is 6N+4, which is O(N). One advantage of the approximate nature of the big-Oh notation is that those constants that we ignore are very hard to calculate and differ for different compilers and CPUs.

Since we are interested only in growth rate (in this case big-Oh), it is silly to calculate (crudely) all the constants and then throw them out.

Since, we are primarily concerned with worst case analysis, we do not worry about what percentage of time an if statement executes the then path. We just assume the longest path is executed each time.

The next section gives some rules we can use to calculate the growth rate.

Start Lecture #4

Remark:

Remember the rules above are designed for a worst-case analysis.

The code on the right calculates Fibonacci numbers (1, 1, 2, 3, 5, 8, 13, ...). It is basically the standard recursive definition written in Java. It is wonderfully clear and obviously correct since it corresponds so closely to the definition. How long does it take to run?

Let T(N) be the number of steps to compute fib(n).

public class Fib {

public static long fib (int n) {

System.out.printf ("Calling fib(%d)\n", n);

if (n<=1)

return 1;

return fib(n-1) + fib(n-2);

}

public static void main(String[]arg) {

int n = Integer.valueOf(arg[0]);

System.out.printf("--> fib(%d)=%d\n", n, fib(n));

}

}

Since fib(n) is just two other fibs and one

addition, it is clear that

T(N) = T(N-1) + T(N-2) +1.

With some work we could show that

T(N) = Ω((3/2)N)

which is horrible!

It can also be shown that

T(N) = O((5/3)N)

which limits the horribleness.

One measure of the horror is that when I executed

java Fib 20 | grep "fib(0)" | wc

I found out that fib(0) is evaluated 4181 times (always

giving the answer of 1) for one evaluation of fib(20).

Summary: the code on the right is wonderfully clear but clearly not wonderful.

We do very little of this section.

Consider searching for an element in a sorted array of size N. By checking the middle of the array, we either find the answer or divide the size of the search space in half. So the worst that can happen is that we keep dividing N by 2 until we just have one element to check (the above is crude).

How many times can you divide N by 2 before getting 1?

(Really we divide by 2 and then take the ceiling).

Answer: Θ(log(N)).

You can run a program for many inputs and see if the running time grows as you expect.

Homework:

time java Fib 5

to see how long java Fib 5 takes to run.Sometimes the worst case occurs only very rarely and unfortunately the average case time is very hard to calculate.

Gives the specification of the type (the objects and the operations) but not their implementation. Indeed, can have multiple (very different) implementations for the same ADT.

But do note that just specifying the objects is not enough. For example, if the objects are sets of integers, we might or might not want to support the operations of union, intersection, search, etc.

A list is more than a set of elements; there is the concept of position. For example, every nonempty list has a first and last element.

The list ADT might include insert, remove, find, print, makeEmpty, previous, next, findKth.

The material will be presented in three parts.

Arrays are great for some aspects of Lists.

// code to double the size of an array // could triple/halve/etc as easily int[] newArr = new int[2*arr.length]; for (int i=0; i<arr.length; i++) newArr[i] = arr[i]; arr = newArr; // be sure to understand

The code on the right shows how to double the size of an array during execution. This was difficult with older languages without dynamic memory allocation and back then one had to declare the array to be the maximum size it would ever need to be.

Let's understand this code since it illustrates

Arrays, however, are far from perfect. If insertions/deletions are to be performed at arbitrary points in the array (instead of just at the end), the operations are (Θ(N)). For this reason, we need an alternate implementation, which we do next.

Start Lecture #5

The problem with arrays was the requirement that consecutive elements be stored contiguously, which requires that insertions and deletions move all the elements after the insertion/deletion site to keep the list contiguous.

On the board draw pictures showing insertion/deletion into/from an array.

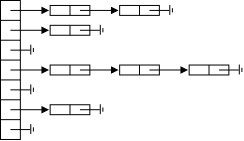

Linked lists in contrast have each list item explicitly point to the next, removing the necessity of contiguous allocation.

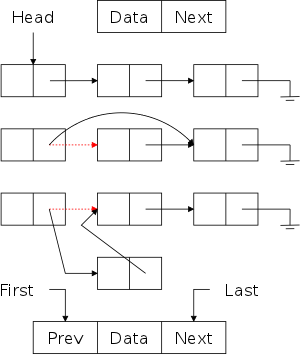

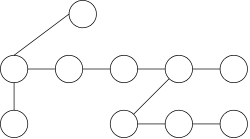

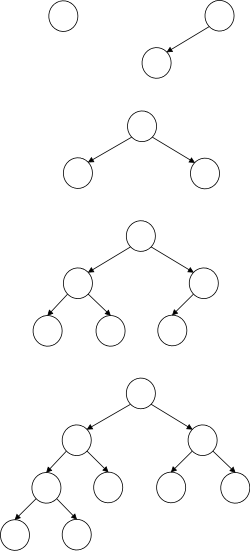

On the right are some pictures. The top row shows a single-linked list node. In addition to the data component (the only content of an array-based list), there is a next component that (somehow, how?) refers to the next node.

The second row shows 3-element long single-linked list. Note the electrical ground symbol that is used to indicate a null reference. To access the list, a reference to the head is stored and, from this reference, the entire list can be found by following next references.

The third row shows the actions needed to delete a node (in this case the center node) from the top list. Note that to delete the center node we need to alter the next component of the previous node and thus need a reference to this node. Thus, given just a reference to a node (and the list head), deletion is a Θ(N) operation, with the most effort needed to find the previous node.

The fourth row shows the actions needed to insert a node between the first and second nodes of the top list. This operation requires a reference to the first node, i.e., to the node after which the insertion is to take place.

Finally, the fifth row shows a single node double-linked list.

On the board do the equivalent of the rows 2-4 for a double-linked list.

Lists, sets, bags, queues, dequeues, stacks, etc. all represent collections of elements (with some additional properties). The Java library implementation of these ADTs all implement the Collection interface.

We need to begin by reviewing some Java.

Recall from 101 that an interface is a class having no real methods (they are all abstract) and no true variables (they are all constants, static final in Java-speak).

One interface can extend one or more other interfaces and one class can implement one or more interfaces; whereas a class can extend only one other class.

Typically, an interface specifies a property satisfied by various classes. For example, any class that implements the Comparable interface is guaranteed to provide a compareTo() instance method, which can be used to compare two objects of the class. The idea is that, if obj1.compareTo(obj2) returns a negative/zero/positive integer, then obj1 is less/equal/greater than obj2.

One advantage of having interfaces is that it ensures uniformity. For example, every class that implements Comparable supplies compareTo() rather than compareWith() or comparedTo() or ... .

The Collection interface abstracts the notion of the English word collection: all classes extending Collection contain objects that are collections of elements. What type are these elements?

The Java Collection interface is generic and so it normally written as Collection<E>, the E is a type variable giving the type of the elements (the letter E is used to remind us that the type parameter determines the elements used).

For example, if a class C implements Collection<String> then a C object contains a collection of Strings.

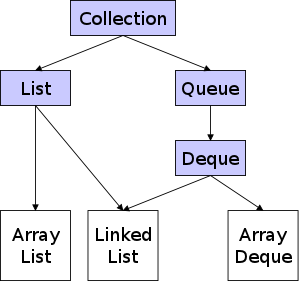

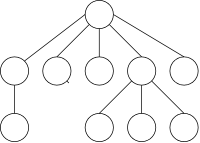

We shall see that there are many kinds of Java collections; indeed the online API shows 10 interfaces and 31 classes in the Collection hierarchy. A very small subset of the hierarchy is shown on the right. Those boxes shown with a light blue background are interfaces; those with a white background are real classes. The longer names are split just for formatting.

Since List is only an interface (not a full class like LinkedList), you can write.

List list1 = new LinkedList(...);

or

LinkedList list2 = new LinkedList(...);

but not

List list3 = new List(...).

Why would you ever want to use list1 instead of

list2?

Because then you would be sure that you are employing only the

List properties of list1.

Thus, if you wanted to switch from a LinkedList

implementation to an ArrayList implementation, you would

need change just the declaration of List1 to

List list1 = new ArrayList(...);

and you are assured that everything else will work unchanged.

Note that a LinkedList IS-A Deque as well as a

List, but by using list1, you can be sure that you

are not using any Deque methods.

Since Collection is the root of the hierarchy, all the components of the Collection interface are available in the 10 other interfaces in the hierarchy as well as the 31 classes.

public interface Collection <E> extends Iterable<E> {

int size();

boolean isEmpty();

boolean contains();

void clear(); // destructive

boolean add(Object o); // destructive

boolean remove(Object o); // destructive

java.util.Iterator<E> iterator();

// 9 others

}

The Collection interface includes a number of simple methods.. For example, size() returns the number of elements currently present, contains() determines if an element is present, and isEmpty() checks if the collection is currently empty.

The above methods only query the collection, they do not modify it.

Other methods in Collection actually change the collection

itself.

Apparently, these are called destructive

, but I don't believe

that is a good name since in English destroy

suggests

delete

or at least render useless

, which overstate

mere modifications.

Three of these destructive methods are clear(), which

empties the collection, add(), which inserts, and

remove(), which deletes.

Remark: I find the following material on the Iterable<E> and Iterator interfaces and the associated methods (one of which is iterator()) to be unmotivated at this point so will study the material in a slightly different order. We will skip to 3.3.3 and come back here after 3.4. At that point I believe it is much easier to motivate.

In fact Collection is itself a subinterface of Iterable (which has no superinterface). The later interface supports the for-each loops, touched upon briefly in 101 for arrays.

Recall from 101 that Java has a for-each construct that permits one to loop sequentially through an array examining (but not changing) each element in turn.

for(int i=0; i<a.length; i++) { | for(double x: a) {

use (NOT modify) a[i] | use (NOT modify) x

} | }

Assume that a has been defined as an array of doubles.

Then instead of the standard for loop on the near right,

we can use the shortened, so-called for-each

variant on the

far right.

public class Test {

public static <E> void f(Iterable<E> col) {

int count=0;

for (E i : col)

count++;

}

}

This interface guarantees the existence of a for-each loop for objects. Thus any class that implements Iterable provides the for-each loop for its objects, analogous to the for-each taught in 101 for arrays. This is great news for any user of the class; but is an obligation for the implementor of the class.

The trivial code on the right shows how to count the number of elements in any Interable object.

Since Collection extends Iterable, we can use a for-each loop to iterate over the elements in any collection.

But what if you wanted a more general loop, say a while loop or what if you want to remove some of the elements? Lets look a little deeper

The Iterable<T> interface also supplies an iterator() method that when invoked returns an Iterator<T> object. This object has three methods: next(), which returns the next element in T; hasNext(), which specifies if there is a next element; and remove(), which removes the element returned by the last next().

Given the first two methods it is not hard to see how the for-each is implement by Java.

Note that remove() can be called at most once per call to next() and that it is illegal (and gives unspecified results) if the Collection is modified during an iteration in any way than by remove().

The List interface extends Collection and so has more stuff (constants, methods) specified. If you are a user of a class that implements List (such as ArrayList or LinkedList) this is good news: there is more available for you to use.

If, on the other hand you are an implementor of a class that is required to extend List, this is bad news. There is more for you to implement.

At different times we will take on both roles. For now we are users so we are anxious to find out what goodies are available that weren't available for Collection.

Note that the real section heading should be

The List<E> Interface, and the

ArrayList<E>, and LinkedList<E>

(Classes)

.

Although we will write Java that does use the type parameter, for a

while will not emphasize it.

The fundamental concept that List adds to Collection is that the elements in a list are ordered. That is a list, unlike a collection, has a concept of first element, a 25th element, etc.. Note that this is not a size ordering as you can have a list of Integers such as {10, 20, 10, 20}, which is clearly not in size ordering (for any definition of size).

public interface List<E> extends Collection<E> {

E get(int index);

E set(int index, E element);

void add(int index, E element);

E remove(int index); // book has void not E

ListIterator<E> listIterator(int index);

}

The code on the right illustrates some of the consequences of this ordered nature. We notice that add() and remove() now include an index parameter, specifying the position at which the method performs its insertion or removal. Naturally the one-argument add() from Collection is also available. The List specification states that this add(), for a List, will insert at the end, where it is fast. (The specification for Collection just states that, after add() is executed, the element is there. So if it was there already, a second instance may or man not be added). We can also get() and set() the element at a given position.

Start Lecture #6

import java.util.*;

public class DemoMakeSumList {

private static final int LIST_SIZE = 200000;

public static void main (String[]args) {

// One or the other of the next 2 declarations

List<Long> list = new ArrayList<Long>();

List<Long> list = new LinkedList<Long>();

// One of the other of the next 2 method calls

makelist1(list, LIST_SIZE);

makelist2(list, LIST_SIZE);

System.out.println(sum(list));

}

public static void makeList1(List<Long> list, int N) {

list.clear();

for (long i=0; i<N; i++)

list.add(i); // insert at the end

}

public static void makeList2(List<Long> list, int N) {

list.clear();

for (long i=0; i<N; i++)

list.add(0,i); // insert at the beginning

}

public static int sum(List<Long>, list) {

int ans = 0;

for (int i=0; i<list.size(); i++);

ans += list.get(i);

return ans; // missing in book

}

}

We will see two implementations of the List interface: ArrayList and LinkedList. The code on the right illustrates how they can be used.

The important item to note is that only the declaration of list changes; the rest of the program stays exactly the same. However, the performance of the list operations does change.

As expected ArrayList uses a (dynamically resizeable) array to store the list. This makes retrieving an item from a known position very fast (O(1), i.e., constant time). Thus executing sum() is quite fast (O(N)). The bad news is that inserting and removing items are slow (O(N), i.e., linear time) unless the item is near the end of the list. Thus executing makeList1() is fast (O(N)), but executing makeList2 is slow (O(N2)).

The LinkedList implementation uses a double-linked list as we pictured above. This makes all insertions and deletions O(1) (provided we know the position at which to operate, or are operating at an index near an end). Thus both makeList1() and makeList2() are fast (O(N). However, getting and setting by index are O(N) (unless the index is near one of the ends). Thus sum() is slow (O(N2)).

Consider the problem of removing every even number from a List of Longs. Note that no (reasonable) implementation of remove() can make the code efficient if list is actually an ArrayList, but we can hope for a fast implementation if list is a LinkedList.

public static void removeEvens1(List<Long> list) {

int i = 0;

while (i < list.size())

if (list.get(i) % 2 == 0)

list.remove(i);

else

i++;

}

A straightforward solution using the index nature of a List is shown on the right. This does indeed work, but stepping through a list by index guarantees poor performance for a LinkedList, the one case we had hopes for good performance. The trouble is that we are using the same code to march through list and this code is good for one List implementation and poor for the other. The right solution uses iterators, which we are downplaying for now.

public static void removeEvens2(List<Long> list) {

for (Long x: list)

if (x % 2 == 0)

list.remove(x);

}

Given the task of removing all even numbers from a

List<Long>, a for-each

would appear

to be perfect, slick, and best of all easy; a trifecta.

Since List extends Iterable, a List

object such as list can have all its elements accessed

via a for-each

loop, with the benefit that the low level

code will be tailored for the actual class of list

(ArrayList or LinkedList).

The code is shown on the right.

Unfortunately, we actually hit a tetrafecta: perfect, slick,

easy, and wrong!

The rules involving for-each

loops, whether for arrays or

for Iterable classes, forbid modifying the array or

collection during loop execution.

public static void removeEvens3(List<Long> list) {

Iterator<Long> iter = list.iterator();

while (iter.hasNext())

if (iter.next() % 2 == 0)

iter.remove();

}

The solution is to write the loop ourselves using the hasNext() and next() methods of the Iterator. We then use the remove() method of the Iterator to delete an element if it is even. Note that this remove() is not the same method mistakenly used above. This one is from the Iterator class; the previous one was from the List class. Also note that remove() is called at most once after each call of next(). This is required! The correct code is on the right.

A ListIterator, unlike a mere Iterator found in a Collection can retrieve either the next or previous element.

Specifically, an instance of ListIterator has the additional methods hasPrevious() and previous(). Moreover, remove() may now be called once per call to either next() or previous() and it removes the element most recently returned by next() or previous().

Lab 1, Part 4 (the last part) assigned. Due 1 March 2011.

As mentioned previously we will look at lists from three viewpoints:

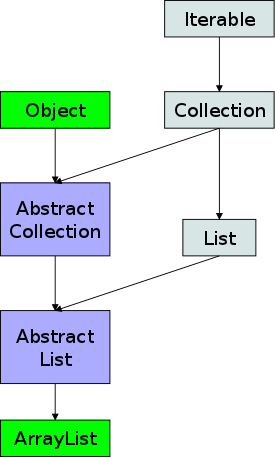

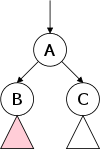

The Java library has excellent list implementations, which as we

have seen, form a fairly deep hierarchy.

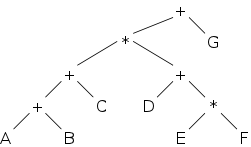

For example, the diagram on the right shows the classes (green),

abstract classes (dark blue), and interfaces (light blue) that

lie above

ArrayList in the hierarchy.

The abstract classes serve as starting points for user written classes. They implement some of the methods in the interface.

We cannot hope to match the quality of this high-class implementation and if one needed to use an ArrayList, I would strongly recommend the library class and not the one we will develop, whose purpose is to better understand how to implement a list as an array.

We will be content to just implement a version of ArrayList that we will call MyArrayList and will not reproduce any of the hierarchy.

void clear(); int size(); boolean isEmpty(); E get(int index); E set(int index, E element); boolean add(Object o); void add(int index, E element); E remove(int index); boolean remove(Object o); boolean contains(); Iterator<E> iterator();

To implement ArrayList we need to implement all the methods in List. Since List extends Collection, which extends Iterable, we need to implement all of those methods as well.

If we wrote our ArrayList as an extension of AbstractList this task would be fairly easy since the latter implements most of the needed methods. However, we are working from scratch.

Looking back to our discussions of Collection and List we see that our task is to implement the methods on the right. Both classes have other methods as well; we will be content to implement these.

One simplification we are making, at least initially, is to ignore Iterable. I have removed those portions from the code below, which is an adaptation of figures 3.15 and 3.16.

public class MyArrayList<E>

implements Iterable<E> {

private static final int DEFAULT_CAPACITY=10;

private int theSize;

private E[] theElements;

public MyArrayList() {

clear();

}

public void clear() {

theSize = 0;

ensureCapacity(DEFAULT_CAPACITY);

}

private void ensureCapacity(int newCapacity) {

if (newCapacity < theSize)

return;

E[] old = theElements;

theElements = (E[]) new Object[newCapacity];

for (int i=0; i<size(); i++)

theElements[i] = old[i];

}

public int size() {

return theSize;

}

public boolean isEmpty() {

return size() == 0;

}

public E get(int index) {

if (index < 0 || index >= size())

throw new ArrayIndexOutOfBoundsException();

return theElements[index];

}

public E set(int index, E newVal) {

if (index < 0 || index >= size())

throw new ArrayIndexOutOfBoundsException();

E old = theElements[index];

theElements[index] = newVal;

return old;

}

public boolean add(E x) {

add(size(), x);

return true;

}

public void add(int index, E x) {

if (theElements.length == size())

ensureCapacity(size() * 2 + 1);

for (int i=theSize; i>index; i--)

theElements[i] = theElements[i-1];

theElements[index] = x;

theSize++;

}

public E remove(int index) {

E removedElement = theElements[index];

for (int i=index; i<size()-1; i++)

theElements[i] = theElements[i+1];

theSize--;

return removedElement;

}

public boolean remove (Object o) {

// part of lab 2

}

public boolean contains(Object o) {

for (int i=0; i<size(); i++) {

E e = theElements[i];

if ( (o==null) ? e==null : o.equals(e) )

return true;

}

return false;

}

// ignore the rest for now

public java.util.Iterator<E> iterator() {

return new ArrayListIterator<E>(this);

}

private static class ArrayListIterator<E>

implements java.util.Iterator<E> {

}

}

The first thing to notice is that MyArrayList is generic and that the elements in the list are of type E. You can think of E as Integer (but NOT int), or String, or even Object.

As written MyArrayList does not implement List. To do so would require implementing a whole bunch of methods, which will be done when we revisit this class later.

The class contains two data fields theElements, the actual array used to store the elements and theSize, the current number of elements. Note that theSize is not theElements.lenght, we refer to the later as the capacity and the code makes use of the auxiliary method ensureCapacity(), discussed below, to keep the capacity of the array large enough to contain theSize elements. There is also a symbolic constant DEFAULT_CAPACITY.

All of these are declared private since we do not want clients (i.e., users) of our lists to access these items.

There is just one (no-arg) constructor. Please be sure you recall from 101 the situation when the constructor is executed: The data fields are already declared, but they are not initialized. For theSize this is clear, we have an uninitialized int. For the array theElements it means that the variable exists, but does not yet point to an array. The latter is the job of clear(), which uses the helper method ensureCapacity() to set theElements() to an initialized array capable of holding DEFAULT_CAPACITY elements (but currently holding none).

This method, using the technique in 3.2.1, changes the capacity of the array, but never to a value less than the current size of the list. As a helper functions, ensureCapacity() is naturally private. I don't know why the book has it public.

These methods are given an index at which to operate. Naturally, get() returns the value found and wisely set() returns the old value, which can prove useful. Both methods raise an exception if the index is out of bounds, which seems inconsistent since add() and remove() do not make the corresponding check.

Collection specifies an add() method with one parameter, an element, which is added somewhere to the collection. List refines this specification to require that the element is added at the end. List also specifies an additional add() with a 2nd parameter giving the position to add the element.

The second method is implemented by moving forward all the elements from that position onward and then inserting into the resulting hole. The first method is implemented as a special case of the second.

If the List is full prior to the insertion, its size is first doubled via ensureCapacity().

The remove() added to Collection by List is fairly simple. It removes and returns the element at the specified index, sliding down any succeeding elements. The size is adjusted.

The remove in Collection is given an element and returns a boolean indicating whether the element is present in the list. If it is present, one occurrence is removed. List strengthens this specification to require that, if the element is present, the first occurrence is removed.

This second method will be part of lab 2.

The textbook has the parameter as E, the Java specification states Object. The book has no implementation (actually it is an exercise). The at first strange looking code on the right is essentially straight from the specification. Let us look at the specification for contains() and see how it gives rise to the code on the right. This is a useful skill since it is the key to effective use of the Java library.

We are skipping this part now; it will be discussed later.

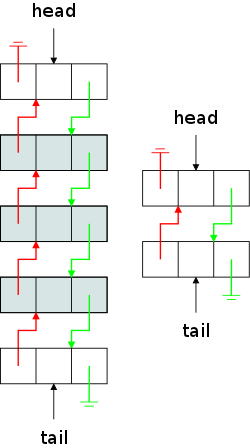

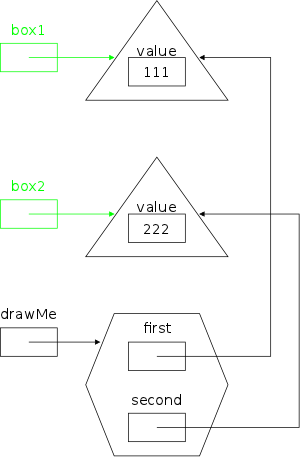

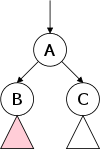

Early in the chapter we sketched a double linked list just to show

the forward and backward links.

It turns out to be easier to program such lists (fewer special

cases) if the two end nodes are not used for data but as special

head

and tail

nodes.

As a result the diagram on the near right represents a list with

only three elements, the shaded nodes.

As before, we use the electrical ground

symbol to represent a

null reference.

The green arrows are the next references and the red arrows

are the prev references.

The middle component is the data.

Since we are implementing List<E>, the type of the

data is E.

Assuming the list is not empty, the first element of the list is

(the central data component of) head.next and the last

element is tail.prev.

An empty double linked list, an example of which is drawn on the far right, would therefore have two nodes that point to each other as well as to ground. Those are the same two that were unshaded in the near right diagram. For an empty list tail.prev==head and head.next==tail.

Our task is basically the same as with ArrayList: We need to implement the List interface, whose methods are repeated on the right. Naturally, the implementation is different and the corresponding methods will have different performance in the two List implementations.

void clear(); int size(); boolean isEmpty(); E get(int index); E set(int index, E element); boolean add(Object o); void add(int index, E element); boolean remove(Object o); E remove(int index); boolean contains();

One significant implementation difference is that the basic data structure in the ArrayList implementation is an array, which is already in Java. For LinkedList we need, in addition to a few variables, an unbounded number of nodes (N+2 nodes for a list with N elements).

So we need two classes MyLinkedList and MyNode. The former class will have all the methods as well as the two nodes constituting an empty list, the latter class just contains the three data fields that comprise a node. Two of these fields (prev and next) should be knowable only to the two classes. In particular they should be hidden from the users of these classes.

The right

way to do this is to have the MyNode class

defined inside the MyLinkedList class and we will do this

eventually.

For now to avoid taking a time out to learn about nested (and inner)

classes we will make both classes top level, understanding that this

does not hide prev and next as well as we

should.

Start Lecture #7

public class Node<E> {

public E data;

public Node<E> prev;

public Node<E> next;

public Node(E d, Node<E> p, Node<E> n) {

data=d; prev=p; next=n;

}

}

The node class looks easy. It has three fields and a constructor that initializes them to the given arguments.

But wait it looks impossible!

How can a node contain two nodes (and something else).

Is this magic or a typo?

Neither, it's an old friend, reference semantics.

As written this class implements neither List nor Iterable. We shall revisit MyLinkedList and enhance the implementation so that List and Iterable are indeed implemented.

public class MyLinkedList<E> {

private int theSize;

private Node<E> beginMarker;

private Node<E> endMarker;

public MyLinkedList() {

clear();

}

public void clear() {

beginMarker = new Node<E>(null, null, null);

endMarker = new Node<E>(null, beginMarker, null);

beginMarker.next = endMarker;

theSize = 0;

}

public int size() {

return theSize;

}

public boolean isEmpty() {

return size() == 0;

}

public E get(int index){

return getNode(index).data;

}

private Node<E> getNode(int index) {

Node<E> p;

if (index<0 || index>size())

throw new IndexOutOfBoundsException();

if (index < size()/2) {

p = beginMarker.next;

for (int i=0; i<index; i++)

p = p.next;

} else {

p = endMarker;

for (int i=size(); i>index; i--)

p = p.prev;

}

return p;

}

public E set(int index, E newVal) {

Node<E> p = getNode(index);

E oldVal = p.data;

p.data = newVal;

return oldVal;

}

public void add(int index, E x) {

addBefore(getNode(index), x);

}

public boolean add(E x) {

add(size(), x);

return true;

}

private void addBefore(Node<E> p, E x) {

Node<E> newNode = new Node<E>(x, p.prev, p);

newNode.prev.next = newNode;

p.prev = newNode;

theSize++;

}

public E remove(int index) {

return remove(getNode(index));

}

private E remove(Node<E> p) {

p.next.prev = p.prev;

p.prev.next = p.next;

theSize--;

return p.data;

}

public boolean remove(Object o) {

// part of lab 2

}

}

There are just three fields, the two end nodes present in any list and the current size of the list.

The constructor just uses clear() to produce an empty list. Show, on the board show that clear() does produce our picture.

Note that if clear is employed by the user to clear a nonempty

list, the original nodes are just left hanging around.

A bug?

No.

Garbage collection will retrieve them.

The get() method, which was straightforward with the array-based implementation, is harder this time. The difficulty is that we are given an index as an argument, but a linked list, unlike an array-based list, does not have indices.

Pay attention to the getNode() helper (i.e., private) method, which starts at the nearest end and proceeds forward or backward (via next or prev) until it arrives at the appropriate node. This node can be the end marker, but not the begin marker.

The set() method uses the same helper to find the node in question. Note that set returns the old value present, which can prove very useful.. Pay particular attention to the declarations without a new operator and remember reference semantics.

Specifically, the declaration of p does not create a new node. It just creates a new node variable and initializes this variable to refer to an existing node. Similarly the declaration of oldVal does not create a new element (a new E), but just a new element variable.

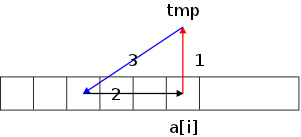

The add() specified in List is given an index as well as an element and is to add a node with this element so that it appears at the given index. This implies that it must be inserted before the node currently at that index. The task is accomplished using two helpers: getNode(), which we have already seen, and addBefore (see below), which does the actual insertion.

The add() specified in Collection just supplies the element, which may be inserted anywhere. The List specification refines this to require the element be added at the end of the list. Therefore, this method simply calls the other add() giving size() as the insertion site.

Thanks to getNode(), addBefore() is given an element and a node before which it is to add this element. Show the pointer manipulation on the board.

The List remove() is given an index and removes the corresponding node (found by getNode()). Draw pictures on the board to show that the code (in the helper remove() method) is correct.

Analogous to the situation with add(), the Collection remove(), specifies that an occurrence of the argument Object be removed and the List spec refines this to the first occurrence.

This remove() will be part of lab 2.

List<Long> list; // Maybe Array or maybe Linked ... Long sum = 0; for (int i=0; i<list.size(); i++) sum += list.get(i);

We have now seen the implementation for two different implementations of the List interface. For either one we can sum the elements as shown on the right. It is great that the exact same code can be used for either implementation.

What is not great is that the code used is very efficient for an ArrayList, but is quite inefficient for a LinkedList. See the timing comparison below.

The problem is that when we change list from

ArrayList to LinkedList, the methods change

automatically, but the looping behavior does not change ...

... until now.

A for loop is tailor made for an array, but is inappropriate for a linked structure. The solution is to use a more generic loop made from the three parts of a for: initialization, test, advance-to-next.

The initialization is done as a special case of advance-to-next. What is needed are methods to test for completion and to advance to the next (or first) element of the List (actually this works for any Collection, really for any Iterable<E>). These methods are called hasNext() and next() respectively.

public class DemoMakeSumList {

private static final int LIST_SIZE = 20;

public static void main (String[]args) {

//List<Long> list = new ArrayList<Long>();

List<Long> list = new LinkedList<Long>();

//makeList1(list, LIST_SIZE);

makeList2(list, LIST_SIZE);

System.out.println(sum(list));

}

public static void makeList1(List<Long>list,

int N) {

list.clear();

for (long i=0; i<N; i++)

list.add(i); // insert at the end

}

public static void makeList2(List<Long>list,

int N) {

list.clear();

for (long i=0; i<N; i++)

list.add(0,i); // insert at beginning

}

public static long sum(List<Long> list) {

long ans = 0;

for (int i=0; i<list.size(); i++)

ans += list.get(i);

return ans; // missing in book

}

}

On the right is code we saw previously that makes and sums a list of Longs. Note that the code for sum() uses list.get() for each index in the loop, a great plan for ArrayList, but a poor technique for LinkedList.

The code below shows sum() rewritten to use hasNext() and next().

public static long sum(List<Long> list) {

long ans = 0;

Iterator<Long> iter = list.iterator();

while (iter.hasNext())

ans += iter.next();

return ans;

}

Compare the above with using a Scanner. We declare and create (with new) a Scanner instance (I normally call mine getInput) and then to get the next (or first) String, I use getInput.next(). To find if there is another String, I use getInput.hasNext().

The Iterator (really Iterator<E>) interface abstracts this next/hasNext idea. Indeed, the Scanner class implements Iterator<String>

The List interface does not extend Iterator so the code above could not say list.next(). Instead, List extends (Collection, which extends) the Iterable interface. Thus, any class implementing List, must implement Iterable. Iterable specifies a parameterless method iterator(), which returns an Iterator. That is how the code above created iter. Like any Iterator, iter contains instance methods next(), hasNext(), and remove(), the first two of which are used above.

public static long sum(List<Long> list) {

long ans = 0;

for (long x: list)

ans += x;

return ans;

}

We have just seen that, if a class implements Iterable

(not Iterator), then each instance of the class can create

an Iterator, which contains next() and

hasNext() methods.

This permits the loop we just showed.

The extra goody is that the Java compiler permits a more pleasant

syntax to be used in this case, the for-each

loop that we saw

in 101 for arrays.

It is shown on the right.

(Offering an alternative nicer

syntax, is often called

syntatic sugaring

.

import java.util.*;

public class DemoMakeSumList {

private static Calendar cal;

private static final int LIST_SIZE = 200000;

public static void main (String[]args) {

long sum;

List<Long> list1 = new ArrayList<Long>();

List<Long> list2 = new LinkedList<Long>();

printTime("Start Time (ms): ");

makeList1(list1, LIST_SIZE);

printTime("Made ArrayList at end: ");

makeList2(list1, LIST_SIZE);

printTime("Made ArrayList at beg: ");

sum = sum1(list1);

printTime("Sum ArrayList std for: ");

System.out.println(" " + sum);

sum = sum2(list1);

printTime("Sum ArrayList foreach: ");

System.out.println(" " + sum);

}

public static void printTime(String msg) {

cal = Calendar.getInstance();

System.out.println(msg + cal.getTimeInMillis());

}

public static void makeList1(List<Long>list,int N) {

list.clear();

for (long i=0; i<N; i++)

list.add(i); // insert at the end

}

public static void makeList2(List<Long>list,int N) {

list.clear();

for (long i=0; i<N; i++)

list.add(0,i); // insert at the beginning

}

public static long sum1(List<Long> list) {

long ans = 0;

for (int i=0; i<list.size(); i++)

ans += list.get(i);

return ans; // missing in book

}

public static long sum2(List<Long> list) {

long ans = 0;

for (long x: list)

ans += x;

return ans;

}

}

On the right is the beginnings of an attempt to actually time the results of the various versions of list, makeList(), and sum()

The Calendar class seems to be the way to get timing information. See my printTime() on the right. After declaring a Calendar you can initialize it using Calendar.getInstance() and then find the number of milliseconds (a long) since the Epoch (Midnight GMT, 1 January 1970) by invoking getTimeInMillis().

The output of the code on the right is as follows.

Start Time (ms): 1297877874798 Made ArrayList at end: 1297877874816 Made ArrayList at beg: 1297877879840 Sum ArrayList std for: 1297877879849 19999900000 Sum ArrayList foreach: 1297877879860 19999900000

We see that making an ArrayList by inserting at the end

required 0.018 seconds, but inserting at the beginning raised that

to 5.024 seconds.

Summing this list with a for loop took 0.009 seconds and

with a for-each

loop 0.011 seconds.

Certainly this could be presented better, and what about the other

half, i.e., using the LinkedList list2?

Answer: That will be (an easy) part of lab 2.

For example your version will do the subtractions that we had to do mentally to figure out how long each step required. Also dividing by 1000 and using something like "%6.3f" as a format, would give seconds rather than milliseconds.

But this timing data should not obscure the important point that to change from an ArrayList to a LinkedList requires us to change the declaration and nothing else.

Homework:

Add main() methods to each of our two

List implementations, MyArrayList and

MyLinkedList.

The main() methods should be essentially identical.

Each should create one or more lists (that is the difference, one

will say Array

where the other says Linked

).

The add a few elements, do some gets and sets, maybe a remove.

In general exercise the methods of the classes.

Note that except for the list declarations and creations, the two

main programs should be character for character the same.

Start Lecture #8

Homework: 3.1, 3.2.

Remark: Now we can go back and explain much of the previously gray'ed out material. I have given a light green background to those parts of the notes that appeared previously.

In fact Collection is itself a subinterface of Iterable (which has no superinterface). The later interface supports the for-each loops, touched upon briefly in 101 for arrays.

Recall from 101 that Java has a for-each construct that permits one to loop sequentially through an array examining (but not changing) each element in turn.

for(int i=0; i<a.length; i++) { | for(double x: a) {

use (NOT modify) a[i] | use (NOT modify) x

} | }

Assume that a has been defined as an array of doubles.

Then instead of the standard for loop on the near right,

we can use the shortened, so-called for-each

variant on the

far right.

This interface guarantees the existence of a for-each loop for objects. Thus any class that implements Iterable provides the for-each loop for its objects, analogous to the for-each taught in 101 for arrays. This is great news for any user of the class; but is an obligation for the implementor of the class.

public class Test {

public static <E> void f(Iterable<E> col) {

int count=0;

for (E i : col)

count++;

}

}

The generic method on the right counts the number of elements in any Interable object. The first <E> tells Java that E is a type parameter. Were it omitted, the compiler would think that the second E is an actual type (and would print an error since there is no such type).

Since List extends Collection, which extends Iterable, we can use a for-each loop to iterate over the elements in any Collection (in particular any List).

But what if you wanted a more general loop, say a while loop or what if you want to remove some of the elements? Let's look a little deeper.

The Iterable<T> interface specifies an iterator() method that when invoked returns an Iterator<T> object. This object has three instance methods: next(), which returns the next element in T; hasNext(), which specifies if there is a next element; and remove(), which removes the element returned by the last next().

Given the first two methods it is not hard to see how the for-each is implement by Java.

Note that remove() can be called at most once per call to next() and that it is illegal (and gives unspecified results) if the Collection is modified during an iteration in any way other than by remove().

Consider the problem of removing every even number from a List of Longs. Note that no (reasonable) implementation of remove() can make the code efficient if list is actually an ArrayList, but we can hope for a fast implementation if list is a LinkedList.

public static void removeEvens1(List<Long> list) {

int i = 0;

while (i < list.size())

if (list.get(i) % 2 == 0)

list.remove(i);

else

i++;

}

A straightforward solution using the index nature of a List is shown on the right. This does indeed work, but stepping through a list by index guarantees poor performance for a LinkedList, the one case we had hopes for good performance. The trouble is that we are using the same code to march through any kind of list and this code is good for one List implementation and poor for the other.

public static void removeEvens2(List<Long> list) {

for (Long x: list)

if (x % 2 == 0)

list.remove(x);

}

Given the task of removing all even numbers from a

List<Long>, a for-each

solution would appear

to be perfect, slick, and best of all easy; a trifecta.

Since List extends Iterable, a List

object such as list can have all its elements accessed

via a for-each

loop, with the benefit that the low level

code will be tailored for the actual class of list

(ArrayList or LinkedList).

The code is shown on the right.

Unfortunately, we actually hit a tetrafecta: perfect, slick,

easy, and wrong!

The rules involving for-each

loops, whether for arrays or

for Iterable classes, forbid modifying the array or

collection during loop execution.

public static void removeEvens3(List<Long> list) {

Iterator<Long> iter = list.iterator();

while (iter.hasNext())

if (iter.next() % 2 == 0)

iter.remove();

}

The solution is to write the loop ourselves using the hasNext() and next() methods of the Iterator. We can then use the remove() method of the Iterator to delete an element if it is even. Note that this remove() is not the same method mistakenly used above. This one is from the Iterator class; the previous one was from the List class. Also note that remove() is called at most once after each call of next(). This is required! The correct code is on the right.

A ListIterator, unlike a mere Iterator found in a Collection can retrieve either the next or previous element.

Specifically, an instance of ListIterator has the additional methods hasPrevious() and previous(). Moreover, remove() may now be called once per call to either next() or previous() and it removes the element most recently returned by next() or previous().

public class MyArrayList<E>

implements Iterable<E> {

private static final int DEFAULT_CAPACITY=10;

private int theSize;

private E[] theElements;

public MyArrayList() {

clear();

}

public void clear() {

theSize = 0;

ensureCapacity(DEFAULT_CAPACITY);

}

private void ensureCapacity(int newCapacity) {

if (newCapacity < theSize)

return;

E[] old = theElements;

theElements = (E[]) new Object[newCapacity];

for (int i=0; i<size(); i++)

theElements[i] = old[i];

}

public int size() {

return theSize;

}

public boolean isEmpty() {

return size() == 0;

}

public E get(int index) {

if (index < 0 || index >= size())

throw new ArrayIndexOutOfBoundsException();

return theElements[index];

}

public E set(int index, E newVal) {

if (index < 0 || index >= size())

throw new ArrayIndexOutOfBoundsException();

E old = theElements[index];

theElements[index] = newVal;

return old;

}

public boolean add(E x) {

add(size(), x);

return true;

}

public void add(int index, E x) {

if (theElements.length == size())

ensureCapacity(size() * 2 + 1);

for (int i=theSize; i>index; i--)

theElements[i] = theElements[i-1];

theElements[index] = x;

theSize++;

}

public E remove(int index) {

E removedElement = theElements[index];

for (int i=index; i<size()-1; i++)

theElements[i] = theElements[i+1];

theSize--;

return removedElement;

}

public boolean remove (Object o) {

// part of lab 2

return true; // so that it compiles

}

public boolean contains(Object o) {

for (int i=0; i<size(); i++) {

E e = theElements[i];

if ( (o==null) ? e==null : o.equals(e) )

return true;

}

return false;

}

public java.util.ListIterator<E> iterator() {

return new ArrayListIterator<E>(this);

}

private static class ArrayListIterator<E>

implements java.util.ListIterator<E> {

private int current = 0;

private MyArrayList<E> theList;

public ArrayListIterator(MyArrayList<E> list) {

theList = list;

}

public boolean hasNext() {

return current < theList.size();

}

public E next() {

return theList.theElements[current++];

}

public void remove() {

theList.remove(--current);

}

public int nextIndex() {

return current;

}

public boolean hasPrevious() {

return current > 0;

}

public E previous() {

return theList.theElements[--current];

}

public int previousIndex() {

return current-1;

}

public void add(E e) {

throw new UnsupportedOperationException();

}

public void set(E e) {

throw new UnsupportedOperationException();

}

}

Recall that we haven't yet fulfilled two obligation with respect to the ArrayList class. Our previous version of MyArrayList has no iterator and so does not implement Iterable it is also missing a number of methods needed to implement List.

A new version implementing Iterable is shown on the right; the missing List methods will appear later.