Operating Systems

Start Lecture #9

Remark: Lab 3 assigned.

It is due in 2 weeks on 10 November 2010.

Chapter 3 Memory Management

Also called storage management or

space management.

The memory manager must deal with the

storage hierarchy present in modern machines.

- The hierarchy consists of registers (the highest level),

cache, central memory, disk, tape (backup).

- Various (hardware and software) memory managers move data

from level to level of the hierarchy.

- We are concerned with the central memory ↔ disk boundary.

- The same questions are asked about the cache ↔ central

memory boundary when one studies computer architecture.

Surprisingly, the terminology is almost completely different!

- When should we move data up to a higher level?

- Fetch on demand (e.g. demand paging, which is dominant now

and which we shall study in detail).

- Prefetch

- Read-ahead for file I/O.

- Large cache lines and pages.

- Extreme example.

Entire job present whenever running.

- Unless the top level has sufficient memory for the entire

system, we must also decide when to move data down to a lower

level.

This is normally called evicting the data (from the higher

level).

- In OS classes we concentrate on the central-memory/disk layers

and transitions.

- In architecture we concentrate on the cache/central-memory

layers and transitions (and use different terminology).

We will see in the next few weeks that there are three independent

decisions:

- Should we have segmentation.

- Should we have paging.

- Should we employ fetch on demand.

Memory management implements address translation.

- Convert virtual addresses to physical addresses

- Also called logical to real address translation.

- A virtual address is the address expressed in

the program.

- A physical address is the address understood

by the computer hardware.

- The translation from virtual to physical addresses is performed by

the Memory Management Unit (MMU).

- Another example of address translation is the conversion of

relative addresses to absolute addresses

by the linker.

- The translation might be trivial (e.g., the identity) but not

in a modern general purpose OS.

- The translation might be difficult (i.e., slow).

- Often includes addition/shifts/mask—not too bad.

- Often includes memory references.

- VERY serious.

- Solution is to cache translations in a

Translation Lookaside Buffer (TLB).

Sometimes called a translation buffer (TB).

Homework:

What is the difference between a physical address and a virtual address?

When is address translation performed?

- At compile time

- Compiler generates physical addresses.

- Requires knowledge of where the compilation unit will be loaded.

- No linker.

- Loader is trivial.

- Primitive.

- Rarely used (MSDOS .COM files).

- At link-edit time (the linker lab)

- Compiler

- Generates relative (a.k.a. relocatable) addresses for each

compilation unit.

- References external addresses.

- Linkage editor

- Converts relocatable addresses to absolute.

- Resolves external references.

- Must also convert virtual to physical addresses by

knowing where the linked program will be loaded.

The linker lab does this, but it is trivial since we

assume the linked program will be loaded at 0.

- Loader is still trivial.

- Hardware requirements are small.

- A program can be loaded only where specified and

cannot move once loaded.

- Not used much any more.

- At load time

- Similar to at link-edit time, but do not fix

the starting address.

- Program can be loaded anywhere.

- Program can move but cannot be split.

- Need modest hardware: base/limit registers.

- Loader sets the base/limit registers.

- No longer common.

- At execution time

- Addresses translated dynamically during execution.

- Hardware needed to perform the virtual to physical address

translation quickly.

- Currently dominates.

- Much more information later.

Extensions

- Dynamic Loading

- When executing a call, check if the module is loaded.

- If it is not loaded, have a linking loader load it and

update the tables to indicate that it now is loaded and where

it is.

- This procedure slows down all calls to the routine (not just

the first one that must load the module) unless you rewrite

code dynamically.

- Not used much.

- Dynamic Linking.

- This is covered later.

- Commonly used.

Note: I will place ** before each memory management

scheme.

3.1 No Memory Management

The entire process remains in memory from start to finish and does

not move.

The sum of the memory requirements of all jobs in the system cannot

exceed the size of physical memory.

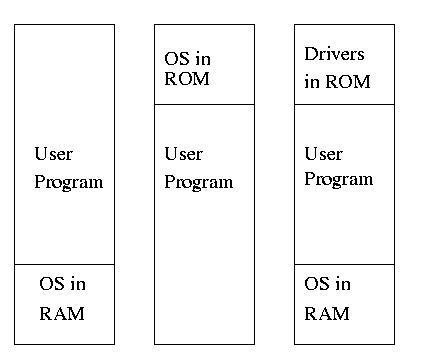

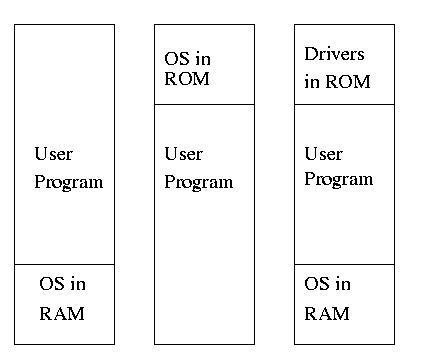

Monoprogramming

The good old days

when everything was easy.

- No address translation done by the OS (i.e., address translation is

not performed dynamically during execution).

- Either reload the OS for each job (or don't have an OS, which is almost

the same), or protect the OS from the job.

- One way to protect (part of) the OS is to have it in ROM.

- Of course, must have the OS (read-write) data in RAM.

- Can have a separate OS address space that is accessible

only in supervisor mode.

- Might put just some drivers in ROM (BIOS).

- The user employs overlays if the memory needed

by a job exceeds the size of physical memory.

- Programmer breaks program into pieces.

- A root piece is always memory resident.

- The root contains calls to load and unload various pieces.

- Programmer's responsibility to ensure that a piece is already

loaded when it is called.

- No longer used, but we couldn't have gotten to the moon in the

60s without it (I think).

- Overlays have been replaced by dynamic address translation and

other features (e.g., demand paging) that have the system support

logical address sizes greater than physical address sizes.

- Fred Brooks (leader of IBM's OS/360 project and author of

The Mythical Man-Month) remarked that the OS/360 linkage editor was

terrific, especially in its support for overlays, but by the time

it came out, overlays were no longer used.

Running Multiple Programs Without a Memory Abstraction

This can be done via swapping if you have only one program loaded

at a time.

A more general version of swapping is discussed below.

One can also support a limited form of multiprogramming, similar to

MFT (which is described next).

In this limited version, the loader relocates all relative

addresses, thus permitting multiple processes to coexist in physical

memory the way your linker permitted multiple modules in a single

process to coexist.

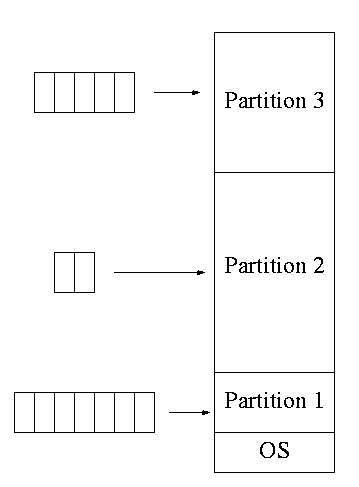

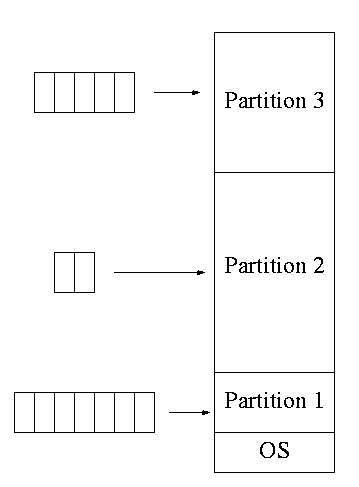

**Multiprogramming with Fixed Partitions

Two goals of multiprogramming are to improve CPU utilization, by

overlapping CPU and I/O, and to permit short jobs to finish quickly.

- This scheme was used by IBM for system 360 OS/MFT

(multiprogramming with a fixed number of tasks).

- An alternative would have a single input list instead

of one queue for each partition.

- With this alternative, if there are no big jobs, one can

use the big partition for little jobs.

- The single list is not a queue since one would want to remove

the first job for each partition.

- I don't think IBM did this.

- You can think of the input list(s) as the ready list(s)

with a scheduling policy of FCFS in each partition.

- Each partition was monoprogrammed; the

multiprogramming occurred across partitions.

- The partition boundaries are not movable

(must reboot to move a job).

- So the partitions are of fixed size.

- MFT can have large internal fragmentation,

i.e., wasted space inside a region of memory assigned

to a process.

- Each process has a single segment (i.e., the virtual

address space is contiguous).

We will discuss segments later.

- The physical address space is also contiguous (i.e., the program

is stored as one piece).

- No sharing of memory between processes.

- No dynamic address translation.

- OS/MFT is an example of address translation during load time.

- The system must establish addressability.

- That is, the system must set a register to the location at

which the process was loaded (the bottom of the partition).

Actually this is done with a user-mode instruction so could

be called execution time, but it is only done once at the

very beginning.

- This register (often called a base register by IBM) is

part of the programmer visible register set.

Soon we will meet base/limit registers, which, although

related to the IBM base register above, have the important

difference of being outside the programmer's control or

view.

- Also called relocation.

- In addition, since the linker/assembler

allow the use of addresses as data, the loader itself

relocates these at load time.

- Storage keys are adequate for protection (the IBM method).

- An alternative protection method is base/limit registers,

which are discussed below.

- An advantage of the base/limit scheme is that it is easier to

move a job.

- But MFT didn't move jobs so this disadvantage of storage keys

is moot.

3.2 A Memory Abstraction: Address Spaces

3.2.1 The Notion of an Address Space

Just as the process concept creates a kind of abstract CPU to run

programs, the address space creates a kind of abstract memory for

programs to live in.

This does for processes, what you so kindly did for modules in the

linker lab: permit each to believe it has its own memory starting at

address zero.

Base and Limit Registers

Base and limit registers are additional hardware, invisible to the

programmer, that support multiprogramming by automatically adding

the base address (i.e., the value in the base register) to every

relative address when that address is accessed at run time.

In addition the relative address is compared against the value in

the limit register and, if larger, the process is aborted since it has

exceeded its memory bound.

Compare this to your error checking in the linker lab.

The base and limit registers are set by the OS when the job starts.
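
The check-and-add performed by the hardware can be sketched in a few lines of Python. This is only an illustration: the function name translate_base_limit and the exception MemoryBoundError are made up for this sketch, and I assume the limit register holds the largest legal relative address.

```python
class MemoryBoundError(Exception):
    """Hypothetical exception: the process exceeded its memory bound."""


def translate_base_limit(relative_addr, base, limit):
    """Translate a relative address as base/limit hardware would.

    Assumption: `limit` is the largest legal relative address, so an
    address is rejected only if it is larger than the limit.
    """
    if relative_addr > limit:
        # Real hardware would trap to the OS, which aborts the process.
        raise MemoryBoundError(f"address {relative_addr} exceeds limit {limit}")
    # The base register is added to every relative address at run time.
    return base + relative_addr
```

For example, with base 5000 and limit 999, relative address 100 maps to physical address 5100, while relative address 1000 is rejected.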

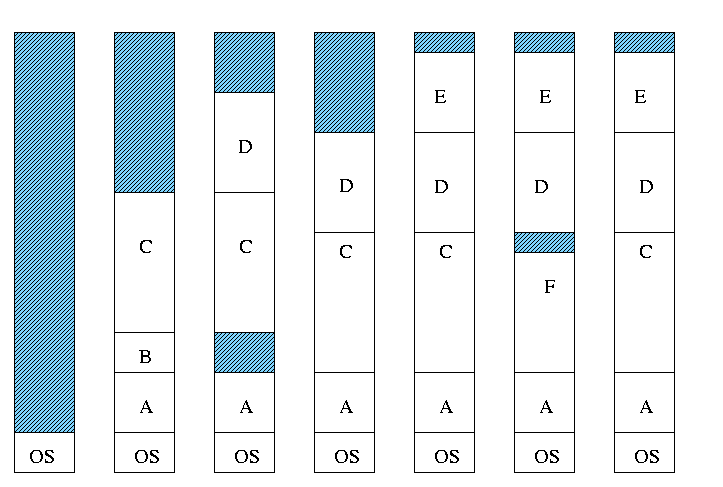

3.2.2 Swapping

Moving an entire process between disk and memory is called

swapping.

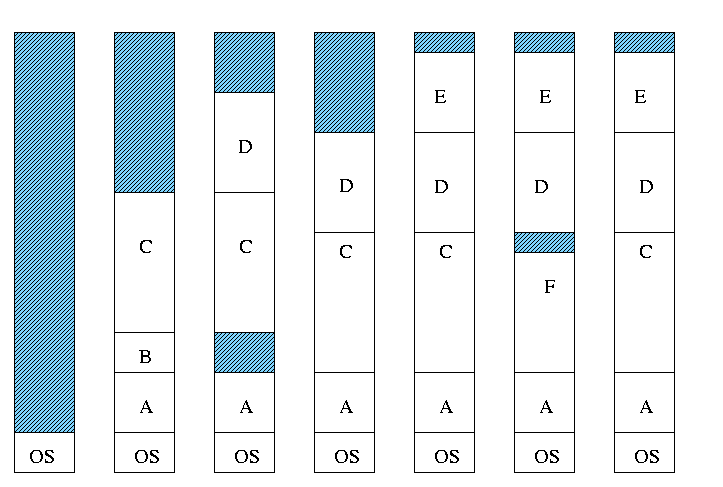

Multiprogramming with Variable Partitions

Both the number and size of the

partitions change with time.

Homework: A swapping system eliminates holes by

compaction.

Assume a random distribution of holes and data segments, assume the

data segments are much bigger than the holes, and assume a time to

read or write a 32-bit memory word of 10ns.

About how long does it take to compact 128 MB?

For simplicity, assume that word 0 is part of a hole and the highest

word in memory contains valid data.

3.2.3 Managing Free Memory

MVT Introduces the Placement Question

That is, which hole (partition) should one choose?

- There are various algorithms for choosing a hole including best

fit, worst fit, first fit, circular first fit, quick fit, and

Buddy.

- Best fit doesn't waste big holes, but does leave slivers and

is expensive to run.

- Worst fit avoids slivers, but eliminates all big holes so

a big job will require compaction.

Even more expensive than best fit (best fit stops if it

finds a perfect fit).

- Quick fit keeps lists of some common sizes (but has other

problems, see Tanenbaum).

- Buddy system

- Round request to next highest power of two (causes

internal fragmentation).

- Look in list of blocks this size (as with quick fit).

- If list empty, go higher and split into buddies.

- When returning coalesce with buddy.

- Do splitting and coalescing recursively, i.e. keep

coalescing until can't and keep splitting until successful.

- See Tanenbaum (look in the index) or an algorithms

book for more details.

- A current favorite is circular first fit, also known as next fit.

- Use the first hole that is big enough (first fit) but start

looking where you left off last time.

- Doesn't waste time constantly trying to use small holes that

have failed before, but does tend to use many of the big holes,

which can be a problem.

- Buddy comes with its own implementation.

How about the others?
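
As a partial answer, here is a minimal sketch of how the simpler policies choose a hole. Each function takes the hole sizes in memory order and returns the index of the chosen hole (or None if nothing fits). The function names and the list-of-sizes representation are my own simplifications; a real allocator would also shrink or remove the chosen hole.

```python
def first_fit(holes, request):
    """Index of the first hole that is big enough."""
    for i, size in enumerate(holes):
        if size >= request:
            return i
    return None


def best_fit(holes, request):
    """Index of the smallest hole that is big enough (earliest on ties)."""
    best = None
    for i, size in enumerate(holes):
        if size >= request and (best is None or size < holes[best]):
            best = i
    return best


def worst_fit(holes, request):
    """Index of the largest hole, provided it is big enough."""
    worst = None
    for i, size in enumerate(holes):
        if size >= request and (worst is None or size > holes[worst]):
            worst = i
    return worst


def next_fit(holes, request, start):
    """First fit, but scanning circularly from index `start`,
    i.e., from where the previous search left off."""
    n = len(holes)
    for k in range(n):
        i = (start + k) % n
        if holes[i] >= request:
            return i
    return None
```

Note that best fit and worst fit examine every hole, whereas first fit and next fit stop at the first success; this sketch of best fit does not bother with the early exit on a perfect fit.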

Homework:

Consider a swapping system in which memory consists of the following

hole sizes in memory order: 10K, 4K, 20K, 18K, 7K, 9K, 12K, and 15K.

Which hole is taken for successive segment requests of

- 12K

- 10K

- 9K

for first fit?

Now repeat the question for best fit, worst fit, and next fit.

Memory Management with Bitmaps

Divide memory into blocks and associate a bit with each block, used

to indicate if the corresponding block is free or allocated.

To find a chunk of size N blocks, one must find N consecutive

bits indicating a free block.

The only design question is how much memory one bit represents.

- Big: Serious internal fragmentation.

- Small: Many bits to store and process.
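
The search for N consecutive free blocks might look like the following sketch. I assume a bit value of 0 means free and 1 means allocated, and I represent the bitmap as a Python list of ints rather than packed bits; the name find_free_run is hypothetical.

```python
def find_free_run(bitmap, n):
    """Return the index of the first run of n consecutive free blocks.

    Assumptions: bitmap is a list of 0/1 values, 0 means free, n >= 1.
    Returns None if no such run exists.
    """
    run_start = 0
    run_len = 0
    for i, bit in enumerate(bitmap):
        if bit == 0:
            if run_len == 0:
                run_start = i      # a new run of free blocks begins here
            run_len += 1
            if run_len == n:
                return run_start
        else:
            run_len = 0            # an allocated block ends the current run
    return None
```

The linear scan is the reason a small block size (many bits) makes the bitmap expensive to process.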

Memory Management with Linked Lists

Instead of a bit map, use a linked list of nodes where each node

corresponds to a region of memory either allocated to a process or

still available (a hole).

- Each item on list gives the length and starting location of

the corresponding region of memory and says whether it is a hole

or a process.

- The items on the list are not taken from the memory to be

used by processes.

- The list is kept in order of starting address.

- Merge adjacent holes when freeing memory.

- Use either a singly or doubly linked list.
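
Merging adjacent holes when a region is freed can be sketched as follows. For brevity I use a Python list kept in address order as a stand-in for the linked list; each item is [start, length, is_hole]. The name free_region and this representation are assumptions made for the sketch.

```python
def free_region(regions, start):
    """Mark the region beginning at `start` as a hole and merge it
    with any adjacent holes.

    regions: list of [start, length, is_hole], sorted by start address
    (a stand-in for the linked list described above).
    """
    idx = next(i for i, r in enumerate(regions) if r[0] == start)
    regions[idx][2] = True

    # Merge with the following region if it is a hole.
    if idx + 1 < len(regions) and regions[idx + 1][2]:
        regions[idx][1] += regions[idx + 1][1]
        del regions[idx + 1]

    # Merge with the preceding region if it is a hole.
    if idx > 0 and regions[idx - 1][2]:
        regions[idx - 1][1] += regions[idx][1]
        del regions[idx]

    return regions
```

Because the list is ordered by starting address, the only candidates for merging are the immediate neighbors, so freeing needs no search beyond locating the region itself.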

Memory Management using Boundary Tags

See Knuth, The Art of Computer Programming vol 1.

- Use the same memory for list items as for processes.

- Don't need an entry in linked list for the blocks in use, just

the avail blocks are linked.

- The avail blocks themselves are linked, not a node that points to

an avail block.

- When a block is returned, we can look at the boundary tag of the

adjacent blocks and see if they are avail.

If so they must be merged with the returned block.

- For the blocks currently in use, just need a hole/process bit at

each end and the length.

Keep this in the block itself.

- We do not need to traverse the list when returning a block; we can use

boundary tags to find the predecessor.

MVT also introduces the Replacement Question

That is, which victim should we swap out?

This is an example of the suspend arc mentioned in process

scheduling.

We will study this question more when we discuss

demand paging in which case

we swap out only part of a process.

Considerations in choosing a victim

- Cannot replace a job that is pinned,

i.e. whose memory is tied down. For example, if Direct Memory

Access (DMA) I/O is scheduled for this process, the job is pinned

until the DMA is complete.

- Victim selection is a medium term scheduling decision

- A job that has been in a wait state for a long time is a good

candidate.

- Often choose as a victim a job that has been in memory for a long

time.

- Another question is how long should it stay swapped out.

- For demand paging, where swapping out a page is not as drastic

as swapping out a job, choosing the victim is an important

memory management decision and we shall study several policies.

NOTEs:

- The schemes presented so far have had two properties:

- Each job is stored contiguously in memory.

That is, the job is contiguous in physical addresses.

- Each job cannot use more memory than exists in the system.

That is, the virtual address space cannot exceed the

physical address space.

- Tanenbaum now attacks the second item.

I wish to do both and start with the first.

- Tanenbaum (and most of the world) uses the term paging

to mean what I call demand paging.

This is unfortunate as it mixes together two concepts.

- Paging (dicing the address space) to solve the placement

problem and essentially eliminate external fragmentation.

- Demand fetching, to permit the total memory requirements of

all loaded jobs to exceed the size of physical memory.

- Most of the world uses the term virtual memory as a synonym for

demand paging.

Again I consider this unfortunate.

- Demand paging is a fine term and is quite descriptive.

- Virtual memory should be used in contrast with

physical memory to describe any virtual to physical address

translation.

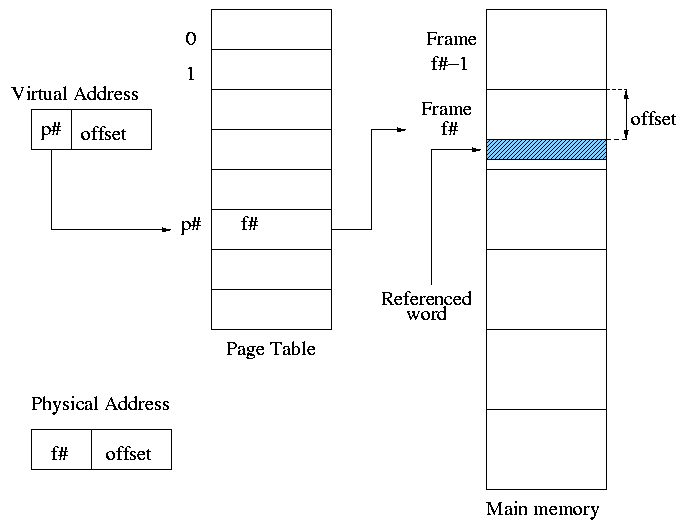

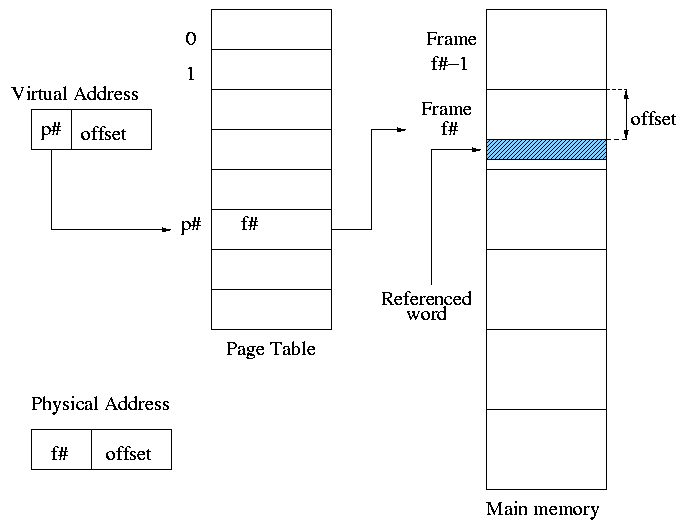

** (non-demand) Paging

Simplest scheme to remove the requirement of contiguous physical

memory.

- Chop the program into fixed size pieces called

pages, which are invisible to the user.

Tanenbaum sometimes calls pages

virtual pages.

- Chop the real memory into fixed size pieces called

page frames or

simply frames.

- The size of a page (the page size) = size of a frame (the frame

size).

- Sprinkle the pages into the frames.

- Keep a table (called the page table) having

an entry for each page.

The page table entry or PTE for page p contains

the number of the frame f that contains page p.

Example: Assume a decimal machine with

page size = frame size = 1000.

Assume PTE 3 contains 459.

Then virtual address 3372 corresponds to physical address 459372.
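
The decimal example can be checked with a short sketch. The function name paging_translate and the use of a dict for the page table are assumptions for illustration only.

```python
def paging_translate(vaddr, page_table, page_size=1000):
    """Translate a virtual address using a one-level page table.

    page_table maps page number -> frame number (a dict here, purely
    for illustration; a real table is an array indexed by page number).
    """
    page, offset = divmod(vaddr, page_size)   # split into (p#, offset)
    frame = page_table[page]                  # PTE for page p gives frame f
    return frame * page_size + offset         # (f#, offset)
```

With page_table = {3: 459}, paging_translate(3372, page_table) gives 459372, matching the example. On a binary machine with a power-of-two page size the divmod becomes a shift and a mask.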

Properties of (non-demand) paging (without segmentation).

- The entire process must be memory resident to run.

- No holes, i.e., no external fragmentation.

- If there are 50 frames available and the page size is 4KB then

any job requiring ≤ 200KB will fit, even if the available

frames are scattered over memory.

- Hence (non-demand) paging is useful.

- Indeed, it was used (but no longer).

- Introduces internal fragmentation approximately equal to 1/2 the

page size for every process (really every segment).

- Can have a job unable to run due to insufficient memory and

have some (but not enough) memory available.

This is not called external fragmentation since it is

not due to memory being fragmented.

- Eliminates the placement question.

All pages are equally good since we don't have external

fragmentation.

- The replacement question remains.

- Since page boundaries occur at random points and can

change from run to run (the page size can change with no effect

on the program—other than performance), pages are not

appropriate units of memory to use for protection and sharing.

But if all you have is a hammer, everything looks like a nail.

Segmentation, which is discussed later, is sometimes more

appropriate for protection and sharing.

- Virtual address space remains contiguous.

Address translation

- Each memory reference turns into 2 memory references

- Reference the page table

- Reference central memory

- This would be a disaster!

- Hence the MMU caches page#→frame# translations.

This cache is kept near the processor and can be accessed

rapidly.

- This cache is called a translation lookaside buffer

(TLB) or translation buffer (TB).

- For the above example, after referencing virtual address 3372,

there would be an entry in the TLB containing the mapping

3→459.

- Hence a subsequent access to virtual address 3881 would be

translated to physical address 459881 without an extra memory

reference.

Naturally, a memory reference for location 459881 itself would be

required.
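
The effect of the TLB on the number of page table references can be sketched as below. The class name TinyMMU is hypothetical, and I assume an unbounded TLB (a real TLB is small and must evict entries) with the same decimal page size as in the example.

```python
class TinyMMU:
    """Toy MMU that counts how often the page table must be consulted.

    Assumptions: the TLB is an unbounded dict (no eviction) and the
    page table is a dict mapping page number -> frame number.
    """

    def __init__(self, page_table, page_size=1000):
        self.page_table = page_table
        self.page_size = page_size
        self.tlb = {}
        self.page_table_refs = 0

    def translate(self, vaddr):
        page, offset = divmod(vaddr, self.page_size)
        if page not in self.tlb:
            # TLB miss: one extra memory reference to read the PTE.
            self.page_table_refs += 1
            self.tlb[page] = self.page_table[page]
        # TLB hit (or freshly loaded entry): no page table reference.
        return self.tlb[page] * self.page_size + offset
```

Translating 3372 and then 3881 with page table {3: 459} yields 459372 and 459881 while page_table_refs stays at 1, since the second access hits in the TLB.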

Choice of page size is discussed below.

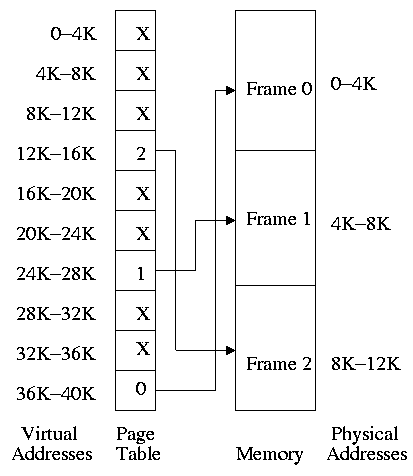

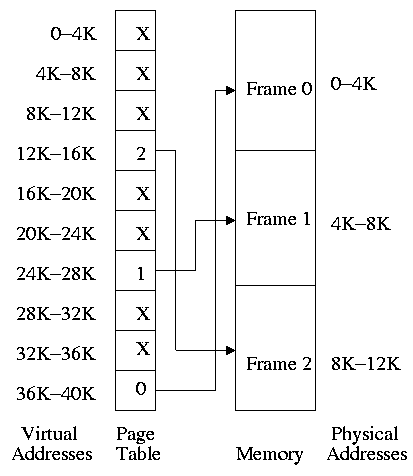

Homework:

Using the page table of Fig. 3.9, give the physical address

corresponding to each of the following virtual addresses.

- 20

- 4100

- 8300

3.3: Virtual Memory (meaning Fetch on Demand)

The idea is to enable a program to execute even if only the active

portion of its address space is memory resident.

That is, we are to swap in and swap out portions of

a program.

In a crude sense this could be called automatic overlays.

Advantages

- Can run a program larger than the total physical memory.

- Can increase the multiprogramming level since the total size of

the active, i.e. loaded, programs (running + ready + blocked) can

exceed the size of the physical memory.

- Since some portions of a program are rarely if ever used, it

is an inefficient use of memory to have them loaded all the

time.

Fetch on demand will not load them if not used and will

(hopefully) unload them during replacement if they are not used

for a long time.

- Simpler for the user than overlays or

aliasing variables (older techniques to run large programs using

limited memory).

Disadvantages

- More complicated for the OS.

- Execution time less predictable (depends on other jobs).

- Can over-commit memory.

The Memory Management Unit and Virtual to Physical Address Translation

The memory management unit is a piece of hardware in the processor

that translates virtual addresses (i.e., the addresses in the

program) into physical addresses (i.e., real hardware addresses in

the memory).

The memory management unit is abbreviated as and normally referred

to as the MMU.

(The idea of an MMU and virtual to physical address translation

applies equally well to non-demand paging and in olden days the

meaning of paging and virtual memory included that case as well.

Sadly, in my opinion, modern usage of the term paging and virtual

memory are limited to fetch-on-demand memory systems, typically some

form of demand paging.)

** 3.3.1 Paging (Meaning Demand Paging)

The idea is to fetch pages from disk to memory when they are

referenced, hoping to get the most actively used pages in memory.

The choice of page size is discussed below.

Demand paging is very common. More complicated variants,

multilevel paging and paging plus segmentation (both of which

we will discuss), have been used and the former dominates modern

operating systems.

Started by the Atlas system at Manchester University in the 60s

(Fotheringham).

Each PTE continues to contain the frame number if the page is

loaded.

But what if the page is not loaded (i.e., the page exists only on disk)?

The PTE has a flag indicating if the page is loaded (can think of

the X in the diagram on the right as indicating that this flag is

not set).

If the page is not loaded, the location on disk could be kept

in the PTE, but normally it is not

(discussed below).

When a reference is made to a non-loaded page (sometimes

called a non-existent page, but that is a bad name), the system

has a lot of work to do.

We give more details below.

- Choose a free frame, if one exists.

- What if there is no free frame?

Make one!

- Choose a victim frame.

This is the replacement question about

which we will have much more to say later.

- Write the victim back to disk if it is dirty.

- Update the victim PTE to show that it is not loaded.

- Now we have a free frame.

- Copy the referenced page from disk to the free frame.

- Update the PTE of the referenced page to show that it is

loaded and give the frame number.

- Do the standard paging address translation

(p#,off)→(f#,off).
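
The steps above can be sketched as a small function. Everything here is a simplification of my own: the PTE is a dict with frame, loaded, and dirty fields, disk traffic is merely recorded in a log list, and the victim policy is passed in as a function since the replacement question is studied later.

```python
def handle_page_fault(page, page_table, free_frames, choose_victim, disk_log):
    """Bring `page` into memory and return the frame it now occupies.

    page_table: dict page -> {"frame", "loaded", "dirty"} (hypothetical layout)
    free_frames: list of free frame numbers
    choose_victim: function(page_table) -> page number of a loaded page
    disk_log: list that records ("write", page) / ("read", page) actions
    """
    if free_frames:
        frame = free_frames.pop()             # a free frame exists
    else:
        victim = choose_victim(page_table)    # the replacement question
        entry = page_table[victim]
        if entry["dirty"]:
            disk_log.append(("write", victim))  # write victim back to disk
        entry["loaded"] = False               # victim PTE: not loaded
        entry["dirty"] = False
        frame = entry["frame"]                # now we have a free frame

    disk_log.append(("read", page))           # copy page from disk to frame
    page_table[page] = {"frame": frame, "loaded": True, "dirty": False}
    return frame
```

After this returns, the faulting reference is retried and the standard paging translation succeeds.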

Really not done quite this way

- There is always a free frame because ...

- ... there is a daemon active that checks the number of free

frames and if this number is too small, chooses victims and

pages them out

(writing them back to disk if dirty).

- The daemon is reactivated when the low water mark is passed and

is suspended when the high water mark is passed.

Homework: 9.

3.3.2: Page tables

A discussion of page tables is also appropriate for (non-demand)

paging, but the issues are more acute with demand paging and the

tables can be much larger.

Why?

- The total size of the active processes is no longer limited to

the size of physical memory.

Since the total size of the processes is greater, the total size

of the page tables is greater and hence concerns over the size of

the page table are more acute.

- With demand paging an important question is the choice of a

victim page to page out.

Data in the page table can be useful in this choice.

We must be able to access the page table very quickly since it is

needed for every memory access.

Unfortunate laws of hardware.

- Big and fast are essentially incompatible.

- Big and fast and low cost is hopeless.

So we can't just say, put the page table in fast processor registers,

and let it be huge, and sell the system for $1000.

The simplest solution is to put the page table in main memory.

However it seems to be both too slow and too big.

- This solution seems too slow since all memory references now

require two references.

- We will soon see how to largely eliminate the extra reference

by using a TLB.

- This solution seems too big.

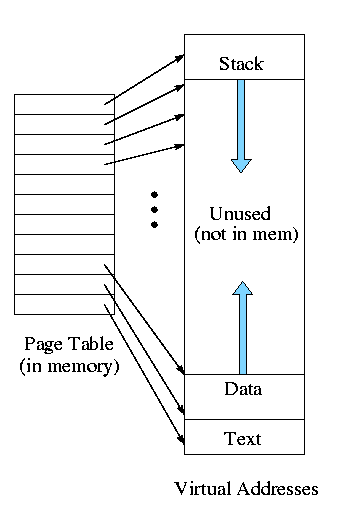

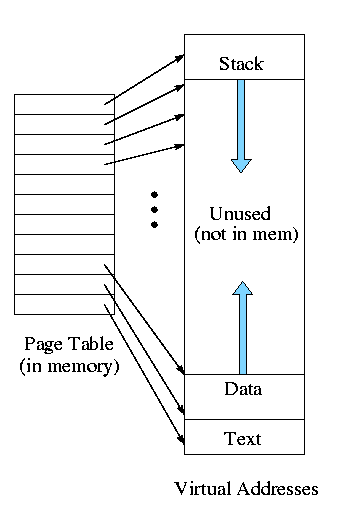

- Currently we are considering contiguous virtual

address ranges (i.e., the virtual addresses have no holes).

- One often puts the stack at one end of the virtual address

space and the global (or static) data at the other end and

let them grow towards each other.

- The virtual memory in between is unused.

- That does not sound so bad.

Why should we care about virtual memory?

- This unused virtual memory can be huge (in address range) and

hence the page table (which is stored in real memory)

will mostly contain unneeded PTEs.

- Works fine if the maximum virtual address size is small, which

was once true (e.g., the PDP-11 of the 1970s) but is no longer the

case.

- A fix is to use multiple levels of mapping.

We will see two examples below:

multilevel page tables and

segmentation plus paging.

Structure of a Page Table Entry

Each page has a corresponding page table entry (PTE).

The information in a PTE is used by the hardware and its format is

machine dependent; thus the OS routines that access PTEs are not

portable.

Information set by and used by the OS is normally kept in other OS

tables.

(Actually some systems, those with software

TLB reload, do not require hardware access to

the page table.)

The page table is indexed by the page number; thus the page number

is not stored in the table.

The following fields are often present in a PTE.

- The Frame Number.

This field is the main reason for the table.

It gives the virtual to physical address translation.

It is the only field in the page table for

(non-demand) paging.

- The Valid bit.

This tells if the page is currently loaded (i.e., is in a

frame).

If set, the frame number is valid.

It is also called the presence or

presence/absence bit.

If a page is accessed whose valid bit is unset, a

page fault is generated by the hardware.

- The Modified or Dirty bit.

Indicates that some part of the page has been written since it

was loaded.

This is needed when the page is evicted so that the OS can tell

if the page must be written back to disk.

- The Referenced or Used bit.

Indicates that some word in the page has been referenced.

Used to select a victim: unreferenced pages make good victims by

the locality property (discussed below).

- Protection bits.

For example one can mark text pages as execute only.

This requires that boundaries between regions with different

protection are on page boundaries.

Normally many consecutive (in logical address) pages have the

same protection so many page protection bits are redundant.

Protection is more naturally done with

segmentation, but in many current

systems, it is done with paging (since the systems don't utilize

segmentation, even though the hardware supports it).
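
To make the fields concrete, here is a sketch that packs them into a single integer. The bit layout is entirely hypothetical (real layouts are machine dependent, as noted above): bit 0 is valid, bit 1 is dirty, bit 2 is referenced, bits 3-5 hold protection, and the frame number starts at bit 12.

```python
# Hypothetical PTE layout, chosen only for this illustration.
VALID = 1 << 0
DIRTY = 1 << 1
REFERENCED = 1 << 2
PROT_SHIFT = 3
PROT_MASK = 0b111
FRAME_SHIFT = 12


def make_pte(frame, valid=False, dirty=False, referenced=False, prot=0):
    """Pack the fields into one integer PTE."""
    pte = frame << FRAME_SHIFT
    pte |= (prot & PROT_MASK) << PROT_SHIFT
    if valid:
        pte |= VALID
    if dirty:
        pte |= DIRTY
    if referenced:
        pte |= REFERENCED
    return pte


def frame_of(pte):
    """Return the frame number; the frame field is meaningful only if valid."""
    if not pte & VALID:
        # Stand-in for the page fault the hardware would generate.
        raise LookupError("page fault: valid bit is unset")
    return pte >> FRAME_SHIFT
```

Note that the page number appears nowhere in the PTE, since the table is indexed by it.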

Why are the disk addresses of non-resident pages

not in the PTE?

On most systems the PTEs are accessed by the hardware automatically

on a TLB miss (see immediately below).

Thus the format of the PTEs is determined by the hardware and

contains only information used on page hits.

Hence the disk address, which is only used on page faults, is not

present.

3.3.3 Speeding Up Paging

As mentioned above, the simple scheme of storing the page table in

its entirety in central memory alone appears to be both too slow and

too big.

We address both these issues here, but note that a second solution

to the size question (segmentation) is discussed later.

Translation Lookaside Buffers (and General Associative Memory)

Note: Tanenbaum suggests that associative memory

and translation lookaside buffer are synonyms.

This is wrong.

Associative memory is a general concept of which translation

lookaside buffer is a specific example.

An associative memory is a

content addressable memory.

That is you access the memory by giving the value of some

field (called the index) and the hardware searches all the records

and returns the record whose index field contains the requested value.

For example

Name  | Animal | Mood     | Color
======+========+==========+======
Moris | Cat    | Finicky  | Grey
Fido  | Dog    | Friendly | Black
Izzy  | Iguana | Quiet    | Brown
Bud   | Frog   | Smashed  | Green

If the index field is Animal and Iguana is given, the associative

memory returns

Izzy | Iguana | Quiet | Brown
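
In software the same lookup can be imitated as below. The loop is only a stand-in: the hardware compares the index field of all records simultaneously rather than one at a time. The function name associative_lookup is made up for this sketch.

```python
RECORDS = [
    {"Name": "Moris", "Animal": "Cat", "Mood": "Finicky", "Color": "Grey"},
    {"Name": "Fido", "Animal": "Dog", "Mood": "Friendly", "Color": "Black"},
    {"Name": "Izzy", "Animal": "Iguana", "Mood": "Quiet", "Color": "Brown"},
    {"Name": "Bud", "Animal": "Frog", "Mood": "Smashed", "Color": "Green"},
]


def associative_lookup(records, index_field, value):
    """Return the record whose index field holds `value`, or None.

    A sequential software imitation; real associative memory searches
    all records in parallel.
    """
    for record in records:
        if record[index_field] == value:
            return record
    return None
```

Calling associative_lookup(RECORDS, "Animal", "Iguana") returns the Izzy record.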

A Translation Lookaside Buffer

or TLB is an associative memory where the index field

is the page number.

The other fields include the frame number, dirty bit, valid bit,

etc.

Note that, unlike the situation with the page table, the page

number is stored in the TLB; indeed it is the index

field.

A TLB is small and expensive but at least it

is fast.

When the page number is in the TLB, the frame number is returned

very quickly.

On a miss, a TLB reload is performed.

The page number is looked up in the page table.

The record found is placed in the TLB and a victim is discarded (not

really discarded, dirty and referenced bits are copied back to the

PTE).

There is no placement question since all TLB entries are accessed at

the same time and hence are equally suitable.

But there is a replacement question.

Homework: 15.

As the size of the TLB has grown, some processors have switched

from single-level, fully-associative, unified TLBs to multi-level,

set-associative, separate instruction and data, TLBs.

We are actually discussing caching, but using different

terminology.

- Page frames are a cache for pages (one could say that

central memory is a cache of the disk).

- The TLB is a cache of the page table.

- Also the processor almost surely has a cache (most likely

several) of central memory.

- In all the cases, we have small-and-fast acting as a cache

of big-and-slow.

However what is big-and-slow in one level of caching, can be

small-and-fast in another level.

Software TLB Management

The words above assume that, on a TLB miss, the MMU (i.e., hardware

and not the OS) loads the TLB with the needed PTE and then performs

the virtual to physical address translation.

This implies that the OS need not be concerned with TLB misses.

Some newer systems do this in software, i.e., the

OS is involved.

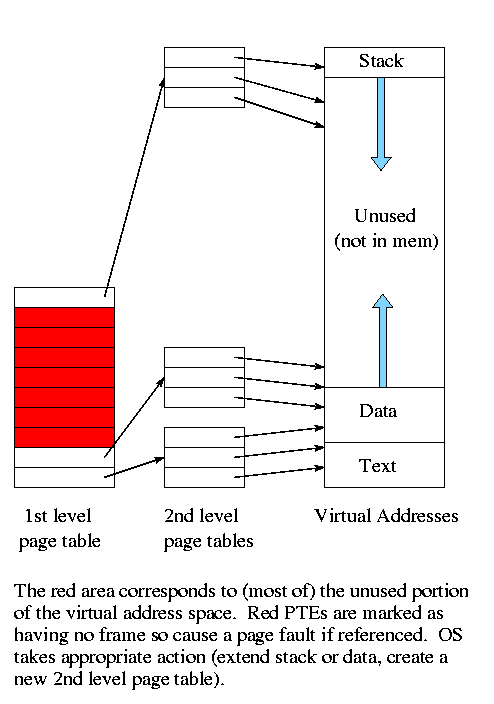

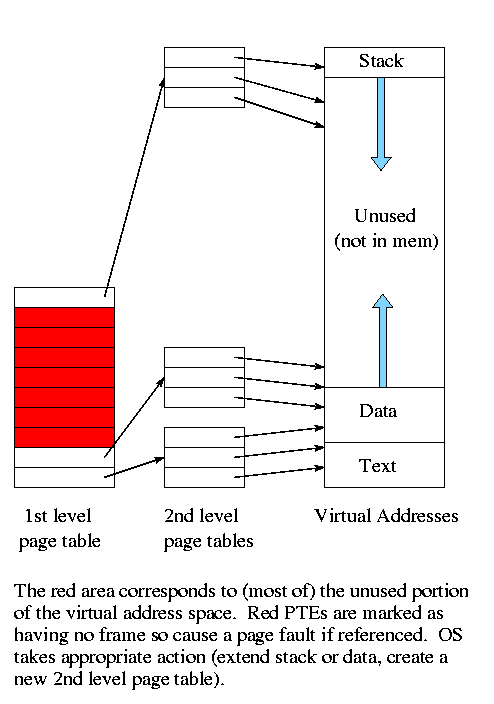

Multilevel Page Tables

Recall the diagram above showing

the data and stack growing towards each other.

Most of the virtual memory is the unused space between the data and

stack regions.

However, with demand paging this space does not waste real

memory.

But the single large page table does waste real

memory.

The idea of multi-level page tables (a similar idea is used in Unix

i-node-based file systems, which we study later when we do I/O) is

to add a level of indirection and have a page table containing

pointers to page tables.

- Imagine one big page table, which we will (eventually) call

the second level page table.

- We want to apply paging to this large table, viewing it as

simply memory not as a page table.

So we (logically) cut it into pieces each the size of a page.

Note that many (typically 1024 or 2048) PTEs fit in one page so

there are far fewer of these pages than PTEs.

- Now construct a first level page table containing PTEs

that point to the pages produced in the previous bullet.

- This first level PT is small enough to store in memory.

It contains one PTE for every page of PTEs in the 2nd level PT,

which reduces space by a factor of one or two thousand.

- But since we still have the 2nd level PT, we have made the

world bigger not smaller!

- Don't store in memory those 2nd level page tables all of whose

PTEs refer to unused memory.

That is

use demand paging on the (second level) page table!

This idea can be extended to three or more levels.

The largest I know of has four levels.

We will be content with two levels.

Address Translation With a 2-Level Page Table

For a two level page table the virtual address is divided into

three pieces

+-----+-----+-------+

| P#1 | P#2 | Offset|

+-----+-----+-------+

- P#1, page number sub 1, gives the index into the first level

page table.

- Follow the pointer in the corresponding PTE to reach the frame

containing the relevant 2nd level page table.

- P#2 gives the index into this 2nd level page table.

- Follow the pointer in the corresponding PTE to reach the frame

containing the (originally) requested page.

- Offset gives the offset in this frame where the originally

requested word is located.

Do an example on the board
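The translation steps above can be sketched in a few lines. This is only an illustration under assumed parameters: a 32-bit virtual address split 10/10/12 (the split, the dictionary representation, and the names `split` and `translate` are assumptions, not taken from any particular machine). A missing dictionary entry stands for a non-resident 2nd level table or page.

```python
# Assumed split of a 32-bit virtual address:
# 10 bits for P#1, 10 bits for P#2, 12 bits for the offset (4KB pages).
P1_BITS, P2_BITS, OFFSET_BITS = 10, 10, 12

def split(vaddr):
    """Return (P#1, P#2, offset) for a virtual address."""
    offset = vaddr & ((1 << OFFSET_BITS) - 1)
    p2 = (vaddr >> OFFSET_BITS) & ((1 << P2_BITS) - 1)
    p1 = (vaddr >> (OFFSET_BITS + P2_BITS)) & ((1 << P1_BITS) - 1)
    return p1, p2, offset

def translate(first_level, vaddr):
    """Walk the two levels; return the physical address, or None on a fault.

    first_level maps P#1 to a 2nd level table, which maps P#2 to a frame number.
    """
    p1, p2, offset = split(vaddr)
    second_level = first_level.get(p1)   # index the first level table with P#1
    if second_level is None:
        return None                      # that 2nd level table is not resident
    frame = second_level.get(p2)         # index the 2nd level table with P#2
    if frame is None:
        return None                      # the requested page is not resident
    return (frame << OFFSET_BITS) | offset

# Hypothetical tables: two low pages (data) and one high page (stack).
first_level = {0: {0: 7, 1: 3}, 1023: {1023: 9}}
low = translate(first_level, 0x000012A4)    # P#1=0, P#2=1, offset=0x2A4
high = translate(first_level, 0xFFFFF010)   # P#1=1023, P#2=1023, offset=0x10
hole = translate(first_level, 0x40000000)   # in the unused middle: fault
```

Only two 2nd level tables exist here even though the address space has 1024 slots for them, which is exactly the space saving the scheme is after.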

The VAX used a 2-level page table structure, but with some wrinkles

(see Tanenbaum for details).

Naturally, there is no need to stop at 2 levels.

In fact the SPARC has 3 levels and the Motorola 68030 has 4 (and the

number of bits of Virtual Address used for P#1, P#2, P#3, and P#4

can be varied).

More recently, x86-64 also has 4-levels.

Inverted Page Tables

For many systems the virtual address range is much bigger than the

size of physical memory.

In particular, with 64-bit addresses, the range is 2^64

bytes, which is 16 million terabytes.

If the page size is 4KB and a PTE is 4 bytes, a full page table

would be 16 thousand terabytes.

A two level table would still need 16 terabytes for the first level

table, which is stored in memory.

A three level table reduces this to 16 gigabytes, which is still

large and only a 4-level table gives a reasonable memory footprint

of 16 megabytes.
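The sizes quoted above can be checked with a short calculation. The sketch assumes, as the text does, 4KB pages and 4-byte PTEs, and that each extra level shrinks the table that must stay in memory by the number of PTEs per page; the function name is made up for illustration, and "terabyte" is taken to mean 2^40 bytes.

```python
VA_BITS = 64
PAGE_SIZE = 4 * 1024                     # 4KB pages
PTE_SIZE = 4                             # 4-byte PTEs
PTES_PER_PAGE = PAGE_SIZE // PTE_SIZE    # 1024 PTEs fit in one page

def top_level_table_bytes(levels):
    """Size in bytes of the table that must be memory resident."""
    num_pages = 2**VA_BITS // PAGE_SIZE
    size = num_pages * PTE_SIZE          # one flat table: one PTE per page
    for _ in range(levels - 1):
        size //= PTES_PER_PAGE           # one PTE per page of the level below
    return size

for levels in range(1, 5):
    print(levels, top_level_table_bytes(levels))
```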

An alternative is to instead keep a table indexed by frame number.

The content of entry f contains the number of the page currently

loaded in frame f.

This is often called a frame table as well as an

inverted page table.

Now there is one entry per frame.

Again using 4KB pages and 4 byte PTEs, we see that the table would

be a constant 0.1% of the size of real memory.

But on a TLB miss, the system must search the

inverted page table, which would be hopelessly slow except that some

tricks are employed.

Specifically, hashing is used.

Also it is often convenient to have an inverted table as we will

see when we study global page replacement algorithms.

Some systems keep both page and inverted page tables.
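A minimal sketch of the idea, assuming a single address space (a real system would also need to record which process owns each page). The class and method names are hypothetical, and a Python dictionary stands in for the hash table.

```python
class InvertedPageTable:
    """One entry per frame, plus a hash index to avoid a linear search."""

    def __init__(self, num_frames):
        self.frame_to_page = [None] * num_frames   # entry f holds the page in frame f
        self.page_to_frame = {}                    # hash index: page -> frame

    def load(self, frame, page):
        """Record that page now occupies frame (evicting any previous page)."""
        old = self.frame_to_page[frame]
        if old is not None:
            del self.page_to_frame[old]
        self.frame_to_page[frame] = page
        self.page_to_frame[page] = frame

    def lookup(self, page):
        """Hashed lookup used on a TLB miss; None means the page is not resident."""
        return self.page_to_frame.get(page)

    def slow_lookup(self, page):
        """The hopelessly slow alternative: scan every frame entry."""
        for frame, resident in enumerate(self.frame_to_page):
            if resident == page:
                return frame
        return None

ipt = InvertedPageTable(num_frames=4)
ipt.load(2, 1000)      # page 1000 goes into frame 2
ipt.load(2, 55)        # later, page 55 replaces it
```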

3.4 Page Replacement Algorithms (PRAs)

These are solutions to the replacement question.

Good solutions take advantage of locality when choosing the

victim page to replace.

- Temporal locality: If a word is referenced

now, it is likely to be referenced in the near

future.

This argues for caching referenced words, i.e. keeping

the referenced word near the processor for a while.

- Spatial locality: If a word is referenced

now, nearby words are likely to be referenced in the

near future.

This argues for prefetching words around the currently

referenced word.

- Temporal and spatial locality are lumped together into

locality: If any word in a page is referenced,

each word in the page is likely to be referenced.

So it is good to bring in the entire page on a miss and to keep

the page in memory for a while.

When programs begin there is no history so nothing to base locality

on.

At this point the paging system is said to be undergoing a

"cold start".

Programs exhibit phase changes in which the set of pages

referenced changes abruptly (similar to a cold start).

An example occurs in your linker lab when you finish pass 1

and start pass 2.

At the point of a phase change, many page faults occur because

locality is poor.

Pages belonging to processes that have terminated are of course

perfect choices for victims.

Pages belonging to processes that have been blocked for a long time

are good choices as well.

Random PRA

A lower bound on performance.

Any decent scheme should do better.

3.4.1 The Optimal Page Replacement Algorithm

Replace the page whose next reference will be

furthest in the future.

- Also called Belady's min algorithm.

- Provably optimal.

That is, no algorithm generates fewer page faults.

- Unimplementable: Requires predicting the future.

- Good upper bound on performance.
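Although the optimal algorithm cannot be used in a running system, it can be simulated after the fact when the entire reference string is known. The sketch below does that; the function names and the reference string are illustrative choices only.

```python
def next_use(refs, page, start):
    """Index of the next reference to page at or after start (infinity if none)."""
    try:
        return refs.index(page, start)
    except ValueError:
        return float("inf")

def optimal_faults(refs, num_frames):
    """Count page faults for Belady's min algorithm on a known reference string."""
    frames = []
    faults = 0
    for i, page in enumerate(refs):
        if page in frames:
            continue                      # hit
        faults += 1
        if len(frames) < num_frames:
            frames.append(page)           # a free frame is available
            continue
        # Evict the resident page whose next reference is furthest in the future.
        victim = max(frames, key=lambda p: next_use(refs, p, i + 1))
        frames[frames.index(victim)] = page
    return faults

refs = [1, 2, 3, 4, 1, 2, 5, 1, 2, 3, 4, 5]
print(optimal_faults(refs, 3), optimal_faults(refs, 4))   # prints 7 6
```

Numbers like these are what a real algorithm's fault count would be compared against.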

3.4.2 The Not Recently Used (NRU) PRA

Divide the frames into four classes and make a random selection from

the lowest nonempty class.

- Not referenced, not modified.

- Not referenced, modified.

- Referenced, not modified.

- Referenced, modified.

Assumes that in each PTE there are two extra flags R (for

referenced; sometimes called U, for used) and M (for modified, often

called D, for dirty).

NRU is based on the belief that a page in a lower priority class is

a better victim.

- If a page is not referenced, locality suggests that it

probably will not be referenced again soon and hence is a good

candidate for eviction.

- If a clean page (i.e., one that is not modified) is chosen to

evict, the OS does not have to write it back to disk and hence

the cost of the eviction is lower than for a dirty page.

Implementation

- When a page is brought in, the OS resets R and M (i.e. R=M=0).

- On a read, the hardware sets R.

- On a write, the hardware sets R and M.

We again have the prisoner problem: We do a good job of making

little ones out of big ones, but not as good a job on the reverse.

We need more resets.

Therefore, every k clock ticks, the OS resets all R bits.

Why not reset M as well?

Answer: If a dirty page has a clear M, we will not copy the page

back to disk when it is evicted, and thus the only accurate version

of the page will be lost!

What if the hardware doesn't set these bits?

Answer: The OS can use tricks.

When the bits are reset, the PTE is made to indicate that the page

is not resident (which is a lie).

On the ensuing page fault, the OS sets the appropriate bit(s).

We ignore the tricks and assume the hardware does set the bits.
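A small sketch of NRU victim selection, assuming the R and M bits are available as a pair per resident page. The dictionary layout and function names are assumptions for illustration; the class number 2R+M reproduces the four classes in the order listed above.

```python
import random

def nru_class(r, m):
    """0: not referenced, clean ... 3: referenced, modified."""
    return 2 * r + m

def nru_victim(pages, rng=random):
    """pages maps page -> (R, M). Pick at random from the lowest nonempty class."""
    lowest = min(nru_class(r, m) for r, m in pages.values())
    candidates = [p for p, (r, m) in pages.items() if nru_class(r, m) == lowest]
    return rng.choice(candidates)

def clear_r_bits(pages):
    """What the OS does every k clock ticks: reset R, but never M."""
    for page in list(pages):
        _, m = pages[page]
        pages[page] = (0, m)

pages = {"A": (1, 1), "B": (1, 0), "C": (0, 1)}
first = nru_victim(pages)     # C is alone in the lowest nonempty class (class 1)
clear_r_bits(pages)
second = nru_victim(pages)    # now B is not referenced and clean (class 0)
```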

3.4.3 FIFO PRA

Simple but poor since the usage of the page is ignored.

Belady's Anomaly: Can have more frames yet generate

more faults.

An example is given later.

The natural implementation is to have a queue of nodes each

referring to a resident page (i.e., pointing to a frame).

- When a page is loaded, a node referring to the page is appended to

the tail of the queue.

- When a page needs to be evicted, the head node is removed and the

page referenced is chosen as the victim.

This sounds good, but only at first.

The trouble is that a page referenced, say, every other memory

reference (and thus very likely to be referenced soon) will still be

evicted, because we look only at the first reference (i.e., when the page was loaded).
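The queue implementation is short enough to simulate directly. The sketch below (function name and reference string chosen for illustration) counts faults, and the particular string used is a well-known one on which FIFO exhibits Belady's anomaly.

```python
from collections import deque

def fifo_faults(refs, num_frames):
    """Count page faults under FIFO replacement."""
    queue = deque()        # head (left) is the page loaded longest ago
    resident = set()
    faults = 0
    for page in refs:
        if page in resident:
            continue       # a hit changes nothing: FIFO ignores usage
        faults += 1
        if len(queue) == num_frames:
            victim = queue.popleft()      # evict the head node's page
            resident.remove(victim)
        queue.append(page)                # new page goes to the tail
        resident.add(page)
    return faults

refs = [1, 2, 3, 4, 1, 2, 5, 1, 2, 3, 4, 5]
print(fifo_faults(refs, 3), fifo_faults(refs, 4))   # prints 9 10
```

With 4 frames FIFO faults 10 times on this string, but with only 3 frames it faults 9 times.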

3.4.4 Second Chance PRA

Similar to the FIFO PRA, but altered so that a page recently

referenced is given a second chance.

- When a page is loaded, a node referring to the page is

appended to the tail of the queue.

The R bit of the page is cleared.

- When a page needs to be evicted, the head node is removed and

the page referenced is the potential victim.

- If the R bit is unset (the page hasn't been referenced

recently), then the page is the victim.

- If the R bit is set, the page is given a second chance.

Specifically, the R bit is cleared, the node referring to this

page is appended to the rear of the queue (so it appears to have

just been loaded), and the current head node becomes the

potential victim.

This node is being given a "second chance".

- What if all the R bits are set?

- We will move each page from the front to the rear and will

arrive at the initial condition but with all the R bits now

clear.

Hence we will remove the same page as FIFO would have removed,

but will have spent more time doing so.

- We might want to periodically clear all the R bits so that a

long ago reference is forgotten (but so is a recent reference).
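The eviction step can be sketched as follows, assuming the queue holds page names and the R bits live in a separate dictionary (both representations, and the function name, are illustrative).

```python
from collections import deque

def second_chance_victim(queue, r_bit):
    """Remove and return the victim; queue head (left) is the oldest page."""
    while True:
        page = queue.popleft()
        if r_bit[page] == 0:
            return page            # not referenced recently: this is the victim
        r_bit[page] = 0            # second chance: clear R ...
        queue.append(page)         # ... and move to the rear, as if just loaded

# Mixed case: A was referenced recently, B was not.
q1 = deque(["A", "B", "C"])
r1 = {"A": 1, "B": 0, "C": 1}
v1 = second_chance_victim(q1, r1)

# All R bits set: we cycle once and evict the same page FIFO would have.
q2 = deque(["A", "B", "C"])
r2 = {"A": 1, "B": 1, "C": 1}
v2 = second_chance_victim(q2, r2)
```

The loop always terminates, since each pass clears one R bit and after at most one full cycle the head has R clear.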

3.4.5 Clock PRA

Same algorithm as 2nd chance, but a better implementation for the

nodes: Use a circular list with a single pointer serving as both

head and tail.

Let us begin by assuming that the number of pages loaded is

constant.

- So the size of the node list in 2nd chance is constant.

- Use a circular list for the nodes and have a pointer pointing

to the head entry.

Think of the list as the hours on a clock and the pointer as the

hour hand.

(Hence the name "clock" PRA.)

- Since the number of nodes is constant, the operation we need

to support is: replace the "oldest, unreferenced" page by a

new page.

- Examine the node pointed to by the (hour) hand.

If the R bit of the corresponding page is set, we give the

page a second chance: clear the R bit, move the hour hand (now the page

looks freshly loaded), and examine the next node.

- Eventually we will reach a node whose corresponding R bit is

clear.

The corresponding page is the victim.

- Replace the victim with the new page (may involve 2 I/Os as

always).

- Update the node to refer to this new page.

- Move the hand forward another hour so that the new page is at the

rear.

Thus, when the number of loaded pages (i.e., frames) is constant,

the algorithm is just like 2nd chance except that only the one

pointer (the "clock hand") is updated.
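For the constant-size case, the whole algorithm fits in a fixed array plus one index. The class below is a sketch with hypothetical names; it clears R for a newly loaded page, as in 2nd chance.

```python
class Clock:
    """Clock PRA over a fixed number of frames."""

    def __init__(self, pages):
        self.pages = list(pages)           # circular list of resident pages
        self.r = [0] * len(self.pages)     # R bit for each entry
        self.hand = 0                      # the hour hand: the oldest entry

    def reference(self, page):
        self.r[self.pages.index(page)] = 1     # what the hardware would do

    def _advance(self):
        self.hand = (self.hand + 1) % len(self.pages)

    def replace(self, new_page):
        """Replace the oldest unreferenced page by new_page; return the victim."""
        while self.r[self.hand]:
            self.r[self.hand] = 0          # second chance: clear R, move on
            self._advance()
        victim = self.pages[self.hand]
        self.pages[self.hand] = new_page   # update the node in place
        self.r[self.hand] = 0
        self._advance()                    # the new page is now at the rear
        return victim

clock = Clock(["A", "B", "C", "D"])
clock.reference("A")
clock.reference("B")
victim = clock.replace("E")    # A and B get second chances, C is evicted
```

No node is ever unlinked or relinked; only the hand moves.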

How can the number of frames change for a fixed machine?

Presumably we don't (un)plug DRAM chips while the system is running?

The number of frames can change when we use a so-called

"local algorithm" (discussed later), where the victim

must come from the frames assigned to the faulting process.

In this case we have a different frame list for each process.

At times we want to change the number of frames assigned to a given

process and hence the number of frames in a given frame list changes

with time.

How does this affect 2nd chance?

- We now have to support inserting a node right before the hour

hand (the rear of the queue) and removing the node pointed to by

the hour hand.

- The natural solution is to double link the circular list.

- In this case insertion and deletion are a little slower than

for the primitive 2nd chance (double linked lists have more

pointer updates for insert and delete).

- So the trade-off is: If there are mostly inserts and

deletes, and granting 2nd chances is not too common, use the

original 2nd chance implementation.

If there are mostly replacements, and you often give nodes a 2nd

chance, use clock.

LIFO PRA

This is terrible!

Why?

Ans: All but the last frame are frozen once loaded so you can

replace only one frame.

This is especially bad after a phase shift in the program as now the

program references mostly new pages but only one frame is

available to hold them.

3.4.6 Least Recently Used (LRU) PRA

When a page fault occurs, choose as victim that page that has been

unused for the longest time, i.e. the one that has been least

recently used.

LRU is definitely

- Implementable: The past is knowable.

- Good: Simulation studies have shown this.

- Difficult.

Essentially the system needs to either:

- Keep a time stamp in each PTE, updated

on each reference and scan all the PTEs

when choosing a victim to find the PTE with the oldest

timestamp.

- Keep the PTEs in a linked list in usage order, which means

on each reference moving the corresponding

PTE to the end of the list.
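The second option (a list kept in usage order) is easy to simulate in software, even though doing it on every memory reference is what makes real LRU impractical. In this sketch an `OrderedDict` plays the role of the linked list; the function name and the reference string are illustrative.

```python
from collections import OrderedDict

def lru_faults(refs, num_frames):
    """Count page faults under exact LRU replacement."""
    order = OrderedDict()      # first entry is the least recently used page
    faults = 0
    for page in refs:
        if page in order:
            order.move_to_end(page)        # every reference reorders the list
            continue
        faults += 1
        if len(order) == num_frames:
            order.popitem(last=False)      # evict the least recently used
        order[page] = True
    return faults

refs = [1, 2, 3, 4, 1, 2, 5, 1, 2, 3, 4, 5]
print(lru_faults(refs, 3), lru_faults(refs, 4))   # prints 10 8
```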

Homework: 28, 22.

A hardware cutsie in Tanenbaum

A clever hardware method to determine the LRU page.

- For n pages, keep an n×n bit matrix.

- On a reference to page i, set row i to all 1s and column i to

all 0s.

- At any time the 1 bits in the rows are ordered by inclusion.

I.e. one row's 1s are a subset of another row's 1s, which is a

subset of a third.

(Tanenbaum forgets to mention this.)

- So the row with the fewest 1s is a subset of all the others

and is hence least recently used.

- This row also has the smallest value, when treated as an

unsigned binary number.

So the hardware can do a comparison of the rows rather than

counting the number of 1 bits.

- Cute, but still impractical.
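A software model of the matrix makes the subset property easy to check by hand. The function names are made up for this sketch, and the reference sequence is just an example.

```python
def make_matrix(n):
    return [[0] * n for _ in range(n)]

def reference(matrix, i):
    """On a reference to page i: set row i to all 1s, then column i to all 0s."""
    n = len(matrix)
    matrix[i] = [1] * n
    for row in matrix:
        row[i] = 0

def row_value(row):
    """Treat the row as an unsigned binary number."""
    return int("".join(str(bit) for bit in row), 2)

def lru_page(matrix):
    """The row with the smallest value belongs to the least recently used page."""
    return min(range(len(matrix)), key=lambda i: row_value(matrix[i]))

m = make_matrix(4)
for page in [0, 1, 2, 3, 2, 1, 0, 3, 2, 3]:
    reference(m, page)
victim = lru_page(m)    # page 1 was last referenced earliest
```

After these references row j has a 1 in column k exactly when page j was referenced more recently than page k, which is why the rows are ordered by inclusion.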

3.4.7 Simulating (Approximating) LRU in Software

The Not Frequently Used (NFU) PRA

Keep a count of how frequently each page is used and evict the one

that has the lowest score.

Specifically:

- Include a counter (and reference bit R) in each PTE.

- Set the counter to zero when the page is brought into memory.

- Every k clock ticks, perform the following for each PTE.

- Add R to the counter.

- Clear R.

- Choose as victim the PTE with lowest count.
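The NFU bookkeeping can be sketched as below, with dictionaries standing in for the counter and R fields of the PTEs (the representation and names are assumptions for illustration).

```python
def nfu_tick(counters, r_bits):
    """Run every k clock ticks: add R to each counter, then clear R."""
    for page in counters:
        counters[page] += r_bits[page]
        r_bits[page] = 0

def nfu_victim(counters):
    """The page with the lowest count is evicted."""
    return min(counters, key=counters.get)

counters = {"A": 0, "B": 0}
r_bits = {"A": 0, "B": 0}

# A is used in each of the first three intervals and then never again.
for _ in range(3):
    r_bits["A"] = 1
    nfu_tick(counters, r_bits)

# B is used in the two most recent intervals.
for _ in range(2):
    r_bits["B"] = 1
    nfu_tick(counters, r_bits)

victim = nfu_victim(counters)
```

Here B is evicted even though it is the page in current use, because A's old references are never forgotten.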

| R | counter |
|---|---|
| 1 | 10000000 |
| 0 | 01000000 |
| 1 | 10100000 |
| 1 | 11010000 |
| 0 | 01101000 |
| 0 | 00110100 |
| 1 | 10011010 |
| 1 | 11001101 |
| 0 | 01100110 |

The Aging PRA

NFU doesn't distinguish between old references and recent ones.

The following modification does distinguish.

- Include a counter (and reference bit, R) in each PTE.

- Set the counter to zero when the page is brought into memory.

- Every k clock ticks, perform the following for each PTE.

- Shift the counter right one bit.

- Insert R as the new high order bit of the counter.

- Clear R.

- Choose as victim the PTE with lowest count.

Aging does indeed give more weight to later references, but an

n-bit counter maintains data for only n time intervals, whereas NFU

maintains data for at least 2^n intervals.
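The per-tick update is a shift and an OR. The sketch below assumes an 8-bit counter and feeds it the sequence of R values from the R/counter table in these notes; the function name is illustrative.

```python
COUNTER_BITS = 8    # assumed counter width

def aging_tick(counter, r):
    """Shift the counter right one bit and insert R as the new high order bit."""
    return (counter >> 1) | (r << (COUNTER_BITS - 1))

r_history = [1, 0, 1, 1, 0, 0, 1, 1, 0]    # R at each successive tick
counter = 0                                 # zero when the page is brought in
trace = []
for r in r_history:
    counter = aging_tick(counter, r)
    trace.append(format(counter, "08b"))

print(trace[-1])    # prints 01100110
```

The oldest R value falls off the low end after 8 ticks, which is the "only n time intervals" limitation.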

Homework: 24, 33.