Start Lecture #6

Remark: Tanenbaum presents both busy waiting (as above) and blocking (process switching) solutions. We present only busy waiting solutions, which are easier and are used inside the blocking solutions. Sleep and Wakeup are the simplest blocking primitives. Sleep voluntarily blocks the process and wakeup unblocks a sleeping process. However, it is far from clear how sleep and wakeup are implemented. Indeed, deep inside, they typically use TAS or some similar primitive. We will not cover these solutions.

Homework: Explain the difference between busy waiting and blocking process synchronization.

Remark: Tanenbaum uses the term semaphore only for blocking solutions. I will use the term for our busy waiting solutions (as well as for blocking solutions). Others call our solutions spin locks.

The entry code is often called P and the exit code V. Thus the critical section problem is to write P and V so that

    loop forever
        P
        critical-section
        V
        non-critical-section

satisfies

Note that I use indenting carefully and hence do not need (and sometimes omit) the braces {} used in languages like C or java.

A binary semaphore abstracts the TAS solution we gave for the critical section problem.

A binary semaphore S takes one of two values, open and closed.

P(S) is

    while (S=closed) {}
    S<--closed     -- This is NOT the body of the while

where finding S=open and setting S<--closed is atomic

The above code is not real, i.e., it is not an implementation of P. It requires a sequence of two instructions to be atomic and that is, after all, what we are trying to implement in the first place. The above code is, instead, a definition of the effect P is to have.

To repeat: for any number of processes, the critical section problem can be solved by

    loop forever
        P(S)
        CS
        V(S)
        NCS

The only solution we have seen for an arbitrary number of processes is the one just above with P(S) implemented via test and set.
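As a rough illustration of P(S) and V(S) built on test and set, here is a minimal Python sketch. Python has no hardware TAS instruction, so this sketch assumes that a non-blocking `threading.Lock.acquire(blocking=False)` may stand in for it: that call atomically checks the lock and takes it if it was free. The names `BinarySemaphore` and `run_demo` are hypothetical and chosen only for this example.

```python
import threading


class BinarySemaphore:
    """Busy-waiting binary semaphore (spin lock): open or closed."""

    def __init__(self):
        # Assumption: an unlocked Lock models "open", a locked one "closed".
        self._gate = threading.Lock()

    def _test_and_set(self):
        # Stand-in for hardware TAS: atomically close the gate and
        # return True if it was ALREADY closed before the call.
        return not self._gate.acquire(blocking=False)

    def P(self):
        while self._test_and_set():
            pass  # busy wait: the gate was closed, so try again

    def V(self):
        self._gate.release()  # S <-- open


def run_demo(num_threads=4, iterations=500):
    """Each thread increments a shared counter inside the critical section."""
    S = BinarySemaphore()
    counter = [0]

    def worker():
        for _ in range(iterations):
            S.P()
            counter[0] += 1  # critical section
            S.V()

    threads = [threading.Thread(target=worker) for _ in range(num_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return counter[0]
```

Note that no thread needs to know its process number or how many threads are competing, which is the property emphasized above.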

Remark: Peterson's solution requires each process to know its process number; the TAS solution does not. Moreover, the definition of P and V does not permit use of the process number. Thus, strictly speaking, Peterson did not provide an implementation of P and V. He did solve the critical section problem.

To solve other coordination problems we want to extend binary semaphores.

Both of the shortcomings can be overcome by not restricting ourselves to a binary variable and instead defining a generalized or counting semaphore.

    while (S=0) {}
    S--

where finding S>0 and decrementing S is atomic

Counting semaphores can solve what I call the semi-critical-section problem, where you permit up to k processes in the section. When k=1 we have the original critical-section problem.

    initially S=k

    loop forever
        P(S)
        SCS   -- semi-critical-section
        V(S)
        NCS
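The semi-critical-section pattern can be sketched in Python as follows. These notes give no implementation of a counting semaphore, so this is only one possible sketch: it assumes a small internal lock (`_guard`, a hypothetical name) is an acceptable way to make "find S>0 and decrement S" atomic, and it busy waits whenever S is 0. `CountingSemaphore` and `run_demo` are likewise names invented for the example.

```python
import threading


class CountingSemaphore:
    """Busy-waiting counting semaphore with initial value k."""

    def __init__(self, k):
        self._value = k
        # Assumption: this guard only makes test-and-decrement atomic.
        self._guard = threading.Lock()

    def P(self):
        while True:
            with self._guard:
                if self._value > 0:   # found S>0 ...
                    self._value -= 1  # ... and decremented it atomically
                    return
            # S was 0: the guard is released and we spin again

    def V(self):
        with self._guard:
            self._value += 1


def run_demo(k=2, num_threads=5, iterations=200):
    """Return the largest number of threads ever inside the SCS together."""
    S = CountingSemaphore(k)
    stats = threading.Lock()
    state = {"inside": 0, "max_inside": 0}

    def worker():
        for _ in range(iterations):
            S.P()
            with stats:  # bookkeeping only, not part of the pattern
                state["inside"] += 1
                state["max_inside"] = max(state["max_inside"], state["inside"])
            with stats:
                state["inside"] -= 1
            S.V()

    threads = [threading.Thread(target=worker) for _ in range(num_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return state["max_inside"]
```

With k=1 the same code reduces to the ordinary critical-section solution.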

Note that my definition of semaphore is different from Tanenbaum's so it is not surprising that my solution is also different from his.

Unlike the previous problems of mutual exclusion, the producer-consumer problem has two classes of processes

What happens if the producer encounters a full buffer?

Answer: It waits for the buffer to become non-full.

What if the consumer encounters an empty buffer?

Answer: It waits for the buffer to become non-empty.

The producer-consumer problem is also called the bounded buffer problem, which is another example of active entities being replaced by a data structure when viewed at a lower level (Finkel's level principle).

Initially e=k, f=0 (counting semaphores); b=open (binary semaphore)

    Producer                           Consumer

    loop forever                       loop forever
        produce-item                       P(f)
        P(e)                               P(b); take item from buf; V(b)
        P(b); add item to buf; V(b)        V(e)
        V(f)                               consume-item

bounded alternation. If k=1 it gives strict alternation.
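The producer-consumer solution with semaphores e, f, and b can be tried out with the Python sketch below. One caveat: it uses `threading.Semaphore` and `threading.Lock` from the standard library, which are blocking primitives rather than the busy-waiting ones used in this course; the structure of the P and V calls is the same. The function name `run_producer_consumer` and the integer items are assumptions made for the example.

```python
import threading
from collections import deque


def run_producer_consumer(k=3, num_items=20):
    """One producer and one consumer sharing a buffer of k slots."""
    e = threading.Semaphore(k)  # counts empty slots, initially k
    f = threading.Semaphore(0)  # counts full slots, initially 0
    b = threading.Lock()        # binary semaphore guarding the buffer
    buf = deque()
    consumed = []
    max_len = [0]               # largest buffer occupancy observed

    def producer():
        for item in range(num_items):  # produce-item
            e.acquire()                # P(e)
            with b:                    # P(b); add item to buf; V(b)
                buf.append(item)
                max_len[0] = max(max_len[0], len(buf))
            f.release()                # V(f)

    def consumer():
        for _ in range(num_items):
            f.acquire()                # P(f)
            with b:                    # P(b); take item from buf; V(b)
                item = buf.popleft()
            e.release()                # V(e)
            consumed.append(item)      # consume-item

    threads = [threading.Thread(target=producer),
               threading.Thread(target=consumer)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return consumed, max_len[0]
```

The producer can never get more than k items ahead of the consumer, and with k=1 the two threads are forced to take turns.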

Remark: Whereas we use the term semaphore to mean binary semaphore and explicitly say generalized or counting semaphore for the positive integer version, Tanenbaum uses semaphore for the positive integer solution and mutex for the binary version. Also, as indicated above, for Tanenbaum semaphore/mutex implies a blocking primitive; whereas I use binary/counting semaphore for both busy-waiting and blocking implementations. Finally, remember that in this course our only solutions are busy-waiting.

My Terminology

| | Busy wait | block/switch |
|---|---|---|
| critical | (binary) semaphore | (binary) semaphore |
| semi-critical | counting semaphore | counting semaphore |

Tanenbaum's Terminology

| | Busy wait | block/switch |
|---|---|---|
| critical | enter/leave region | mutex |
| semi-critical | no name | semaphore |

You can find some information on barriers in my lecture notes for a follow-on course (see in particular lecture number 16).

We did this previously.

A classical problem from Dijkstra

What algorithm do you use for access to the shared resource (the forks)?

The purpose of mentioning the Dining Philosophers problem without giving the solution is to give a feel for what coordination problems are like. The book gives others as well. The solutions would be covered in a sequel course. If you are interested, look, for example, here.

Homework: 45 and 46 (these have short answers but are not easy). Note that the second problem refers to fig. 2-20, which is incorrect. It should be fig. 2-46.

As in the producer-consumer problem we have two classes of processes.

The problem is to

Variants

Solutions to the readers-writers problem are quite useful in multiprocessor operating systems and database systems. The easy way out is to treat all processes as writers, in which case the problem reduces to mutual exclusion (P and V). The disadvantage of the easy way out is that you give up reader concurrency.

Again for more information see the web page referenced above.

Critical Sections have a form of atomicity, in some ways similar to transactions. But there is a key difference: With critical sections you have certain blocks of code, say A, B, and C, that are mutually exclusive (i.e., are atomic with respect to each other) and other blocks, say D and E, that are mutually exclusive; but blocks from different critical sections, say A and D, are not mutually exclusive.

The day after giving this lecture in 2006-07-spring, I found a modern reference to the same question. The quote below is from "Subtleties of Transactional Memory Atomicity Semantics" by Blundell, Lewis, and Martin in Computer Architecture Letters (volume 5, number 2, July-Dec. 2006, pp. 65-66).

As mentioned above, busy-waiting (binary) semaphores are often called locks (or spin locks).

... conversion (of a critical section to a transaction) broadens the scope of atomicity, thus changing the program's semantics: a critical section that was previously atomic only with respect to other critical sections guarded by the same lock is now atomic with respect to all other critical sections.
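The difference between "atomic with respect to sections guarded by the same lock" and "atomic with respect to everything" can be seen in a tiny Python sketch. It assumes two hypothetical locks, `lock_abc` guarding blocks A, B, and C, and `lock_de` guarding blocks D and E. While block A is executing, the sketch probes (without waiting) whether B or D could be entered.

```python
import threading

lock_abc = threading.Lock()  # hypothetical lock guarding blocks A, B, C
lock_de = threading.Lock()   # hypothetical lock guarding blocks D, E


def could_enter(lock):
    """Report whether a section guarded by `lock` could be entered right now."""
    entered = lock.acquire(blocking=False)
    if entered:
        lock.release()
    return entered


def probe_while_inside_a():
    """Enter block A, then check whether B and D would be admitted."""
    with lock_abc:                         # we are now inside block A
        b_allowed = could_enter(lock_abc)  # B shares A's lock
        d_allowed = could_enter(lock_de)   # D is guarded by the other lock
    return b_allowed, d_allowed
```

Block B is excluded while A runs, but block D is not; converting all five blocks to transactions would exclude D as well, which is the change in semantics the quote describes.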

We began with a subtle bug (wrong answer for x++ and x--) and used it to motivate the Critical Section Problem for which we provided a (software) solution.

We then defined (binary) Semaphores and showed that a Semaphore easily solves the critical section problem and doesn't require knowledge of how many processes are competing for the critical section. We gave an implementation using Test-and-Set.

We then gave an operational definition of Semaphore (which is not an implementation) and morphed this definition to obtain a Counting (or Generalized) Semaphore, for which we gave NO implementation. I asserted that a counting semaphore can be implemented using 2 binary semaphores and gave a reference.

We defined the Producer-Consumer (or Bounded Buffer) Problem and showed that it can be solved using counting semaphores (and binary semaphores, which are a special case).

Finally we briefly discussed some classical problems, but did not give (full) solutions.

Skipped.

Skipped, but you should read.

Remark: Deadlocks are closely related to process management so belong here, right after chapter 2. It was here in 2e. A goal of 3e is to make sure that the basic material gets covered in one semester. But I know we will do the first 6 chapters so there is no need for us to postpone the study of deadlock.

A deadlock occurs when every member of a set of processes is waiting for an event that can only be caused by a member of the set.

Often the event waited for is the release of a resource.

In the automotive world deadlocks are called gridlocks.

For a computer science example consider two processes A and B that each want to print a file currently on a CD-ROM Drive.

Bingo: deadlock!

A resource is an object granted to a process.

Resources come in two types

The interesting issues arise with non-preemptable resources so those are the ones we study.

The life history of a resource is a sequence of

Processes request the resource, use the resource, and release the resource. The allocate decisions are made by the system and we will study policies used to make these decisions.

A simple example of the trouble you can get into.

Recall from the semaphore/critical-section treatment in the last chapter that it is easy to cause trouble if a process dies or stays forever inside its critical section. We assume processes do not do this. Similarly, we assume that no process retains a resource forever. It may obtain the resource an unbounded number of times (i.e., it can have a loop forever with a resource request inside), but each time it gets the resource, it must release it eventually.

Definition: A deadlock occurs when every member of a set of processes is waiting for an event that can only be caused by a member of the set.

Often the event waited for is the release of a resource.

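The following four conditions (Coffman; Havender) are necessary but not sufficient for deadlock. Repeat: They are not sufficient.

1. Mutual exclusion: A resource can be assigned to at most one process at a time.
2. Hold and wait: A process holding a resource is permitted to request another.
3. No preemption: A resource cannot be forcibly taken away from the process holding it; the process must release it.
4. Circular wait: There is a chain of processes in which each member is waiting for a resource held by the next member of the chain.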

One can say "If you want a deadlock, you must have these four conditions." But of course you don't actually want a deadlock, so you would more likely say "If you want to prevent deadlock, you need only violate one or more of these four conditions."

The first three are static characteristics of the system and resources. That is, for a given system with a fixed set of resources, the first three conditions are either always true or always false: They don't change with time. The truth or falsehood of the last condition does indeed change with time as the resources are requested/allocated/released.

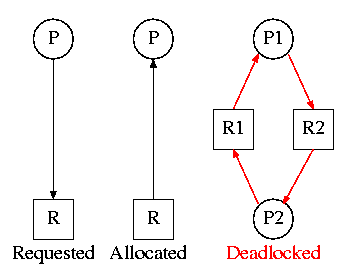

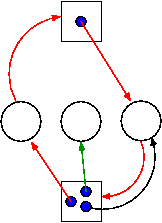

On the right are several examples of a Resource Allocation Graph, also called a Reusable Resource Graph.

Homework: 5.

Consider two concurrent processes P1 and P2 whose programs are:

    P1              P2

    request R1      request R2
    request R2      request R1
    release R2      release R1
    release R1      release R2

On the board draw the resource allocation graph for various possible executions of the processes, indicating when deadlock occurs and when deadlock is no longer avoidable.
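The possible executions of P1 and P2 can also be enumerated mechanically. The Python sketch below is a small state-space search written for these notes, not an algorithm from the book. A state is the pair (steps P1 has completed, steps P2 has completed); a request may proceed only if the other process does not hold that resource. A state is reported as deadlocked when some process is unfinished and no process can take a step. The helper names (`holdings`, `successors`, `explore`) are hypothetical.

```python
P1 = [("request", "R1"), ("request", "R2"), ("release", "R2"), ("release", "R1")]
P2 = [("request", "R2"), ("request", "R1"), ("release", "R1"), ("release", "R2")]


def holdings(program, pc):
    """Resources held after the first pc steps of program have run."""
    held = set()
    for op, res in program[:pc]:
        if op == "request":
            held.add(res)
        else:
            held.discard(res)
    return held


def successors(state, programs):
    """States reachable from state by letting one process take one step."""
    result = []
    for i, prog in enumerate(programs):
        pc = state[i]
        if pc == len(prog):
            continue  # this process has finished
        op, res = prog[pc]
        if op == "request":
            taken = any(res in holdings(other, state[j])
                        for j, other in enumerate(programs) if j != i)
            if taken:
                continue  # process i is blocked on this request
        nxt = list(state)
        nxt[i] += 1
        result.append(tuple(nxt))
    return result


def explore(programs):
    """Return (all reachable states, the deadlocked ones)."""
    start = tuple(0 for _ in programs)
    final = tuple(len(p) for p in programs)
    seen, stack, deadlocks = {start}, [start], set()
    while stack:
        state = stack.pop()
        nxt = successors(state, programs)
        if not nxt and state != final:
            deadlocks.add(state)  # nobody can move, yet not everyone is done
        for n in nxt:
            if n not in seen:
                seen.add(n)
                stack.append(n)
    return seen, deadlocks
```

For the two programs above, `explore([P1, P2])` finds the single deadlocked state (1, 1): P1 holds R1 and wants R2 while P2 holds R2 and wants R1. If both processes request the resources in the same order, as in `explore([P1, P1])`, no deadlocked state is reachable.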

There are four strategies used for dealing with deadlocks.

The "put your head in the sand" approach.

Consider the case in which there is only one instance of each resource.

"a printer", not a specific printer. Similarly, one can have many CD-ROM drives.

To find a directed cycle in a directed graph is not hard. The algorithm is in the book. The idea is simple.

The searches are finite since there are a finite number of nodes.
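A depth-first search for a directed cycle can be sketched in Python as follows. This is a generic DFS with node coloring, not necessarily the exact algorithm in the book. The sketch assumes the usual drawing convention: an edge from a process to a resource means the process is requesting it, and an edge from a resource to a process means the resource is held by that process. The function name `find_cycle` and the dictionary representation of the graph are assumptions.

```python
def find_cycle(graph):
    """Return a list of nodes forming a directed cycle, or None if there is none.

    graph maps each node to the list of nodes its outgoing edges point to.
    """
    WHITE, GRAY, BLACK = 0, 1, 2  # unvisited, on current path, fully explored
    color = {}
    path = []

    def visit(node):
        color[node] = GRAY
        path.append(node)
        for nxt in graph.get(node, []):
            c = color.get(nxt, WHITE)
            if c == GRAY:
                # nxt is already on the current path: we closed a cycle
                return path[path.index(nxt):] + [nxt]
            if c == WHITE:
                found = visit(nxt)
                if found:
                    return found
        path.pop()
        color[node] = BLACK
        return None

    for node in list(graph):
        if color.get(node, WHITE) == WHITE:
            found = visit(node)
            if found:
                return found
    return None


# P1 holds R1 and requests R2; P2 holds R2 and requests R1.
deadlocked = {"P1": ["R2"], "R2": ["P2"], "P2": ["R1"], "R1": ["P1"]}

# P1 holds R1; P2 holds R2 and requests R1. P1 is not waiting for anything.
not_deadlocked = {"R1": ["P1"], "R2": ["P2"], "P2": ["R1"]}
```

Each node is colored at most once, so the search visits every node and edge a bounded number of times, which is the finiteness argument made above.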

This is more difficult.

can). If, even with such demanding processes, the resource manager can ensure that all processes terminate, then we can ensure that deadlock is avoided.
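One way to carry out such a check is sketched below in Python. It is assumed here that the intended test is the standard safety check usually associated with the Banker's algorithm: pretend every process asks for everything it is still entitled to, and look for an order in which each can be satisfied, run to completion, and return what it holds. The function name, the argument layout (one list entry per resource type), and the numbers in the example are all hypothetical.

```python
def can_all_terminate(available, allocation, max_claim):
    """Return (True, order) if some order lets every process finish.

    available[r]      units of resource type r currently free
    allocation[i][r]  units of type r currently held by process i
    max_claim[i][r]   most units of type r process i may ever hold
    """
    n = len(allocation)
    work = list(available)
    # Worst case: each process still requests everything it is entitled to.
    need = [[m - a for m, a in zip(max_claim[i], allocation[i])]
            for i in range(n)]
    finished = [False] * n
    order = []
    progress = True
    while progress:
        progress = False
        for i in range(n):
            if not finished[i] and all(nd <= w for nd, w in zip(need[i], work)):
                # Process i can obtain its full claim, run, and release it all.
                work = [w + a for w, a in zip(work, allocation[i])]
                finished[i] = True
                order.append(i)
                progress = True
    return all(finished), order


# Hypothetical single resource type with 10 units in total.
safe = can_all_terminate([3], [[3], [2], [2]], [[9], [4], [7]])
unsafe = can_all_terminate([2], [[4], [2], [2]], [[9], [4], [7]])
```

In the first call process 1 can finish with the 3 free units, then process 2, then process 0, so every process terminates. In the second call only process 1 can finish, after which the 4 free units satisfy neither remaining process.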

Perhaps you can temporarily preempt a resource from a process. Not likely.

Database (and other) systems take periodic checkpoints. If the system does take checkpoints, one can roll back to a checkpoint whenever a deadlock is detected. You must somehow guarantee forward progress.

Can always be done but might be painful. For example some processes have had effects that can't be simply undone. Print, launch a missile, etc.