Start Lecture #5

Remark: The room for the final exam has been moved (by the department) from here 102 down the hall.

Done later.

The pioneers in this work were mathematicians who were interested in understanding what could be computed. They introduced models of computation including the Turing Machine (Alan Turing) and the lambda calculus (Alonzo Church). We will not consider the Turing machine, but will speak some about the lambda calculus from which functional languages draw much inspiration.

Definition: The term Functional Programming refers to a programming style in which every procedure is a (mathematical) function. That is, the procedure produces an output based on its inputs and has no side effects.

The lack of side effects implies that no procedure can, for example, read, print, modify its parameters, or modify external variables.

By defining the stream from which one reads to be another input, and the stream to which one writes to be another output, we can allow I/O.

Some languages, such as Haskell are purely functional. Scheme is not: it includes non functional constructs (such as updating the value of a variable), but supports a functional style where these constructs are used much less frequently than in a typical applicative language.

Having just described what is left out (or discouraged) in functional languages, I note that such languages, including Scheme, have powerful features not normally found in common imperative languages.

Homework: CYU 1, 4, 5.

Remark: We are moving the λ-calculus before Scheme unlike the 3e.

Our treatment is rather different from the book.

The λ-calculus was invented by Alonzo Church in 1932. It forms the underpinning of several functional languages such as Scheme, other forms of Lisp, ML, and Haskell.

Technical point: There are typed and untyped variants of the lambda

calculus.

We will be studying the pure, untyped version.

In this version everything is a function

as we shall see.

The syntax is very simple. Parentheses are NOT use to show a function applied to its arguments. Let f be a function of one argument and let x be the argument we want to apply f to. This is written fx, no blank, no comma, no parens, nothing.

Parentheses are used for grouping, as we show below. Ignoring parens for a minute the grammar can be written.

M → λx.M a function definition

| MN a function application

| identifier a variable, normally one character

The functions take only one variable, which appears before the dot; the function body appears after the dot. For example λx.x is the function that, given x, has value x. This function is often called the identity.

Another example would be λx.xx. This function takes the argument x and has value xx. But what is xx? It is an example of the second production in the above grammar. The form xx means apply (the function) x to (the argument) x. Since essentially everything is a function, it is clear that functions are first-class values and that functions are higher order.

Below are some examples shown both with and without parentheses used for grouping.

λx.x

xxx (xx)x

x(xx)

λx.xx λx.(xx)

(λx.x)x

(λx.xx)(λx.xx)

(λx.λy.yxx)((λx.x)(λy.z))

Examples on the same line are equivalent (i.e., the parens on the

right version are not needed).

The default without parens is that xyz means (xy)z, i.e., the

function x is applied to the argument y producing another function

that is applied to the argument z.

The variables need not be distinct, so xxx is possible.

The other default is that the body of a function definition extends

as far as possible.

Definitions:

Since nearly everything is a function, it is expected that function application (applying a function to its argument) will be an important operation.

Definition: In the λ-calculus, function application is called β-reduction. If you have the function definition λx.M and you apply it to N, (λx.M)N, the result is naturally M with the x's changed to N. More precisely, the result of β-reduction applied to (λx.M)N is M with all free occurrences of x replaced by N.

Technical point: Before applying the β-reduction above, we must ensure (using α-conversions if needed) that N has no free variables that are bound in M.

Do this example on the board: The β-reduction of λx.(λy.yx)z is (λy.yz)=λy.yz

To understand the technical point consider the following example λx.(λz.zx)z. First note that this is really the same example as all I did to the original is apply an α-transformation (y to z). But if I blindly apply the rule for β-reduction to this new example, I get (λz.zz)=λz.zz, which is clearly not equivalent to the original answer. The error is that in the new example M=(λz.zx), N=z, and hence N does have a free variable that is bound in M.

Consider the C-language expression f(g(3)). Of course we must invoke g before we can invoke f. The reason is that C is call-by-value and we can't call f until we know the value of the argument. But in a call-by-name language like Algol-60, we call f first and call g every time (perhaps no times) f evaluates its parameter

Let's write this in the λ-calculus. Instead of 3, we will use the argument λx.yx (remember arguments can be functions) and for f and g we use the identity function λx.x. This gives (λx.x)((λx.x)(λx.yx)).

At this point we can apply one of two β-reductions, corresponding to evaluating f or g.

Definition: The normal order evaluation rule is to perform the (leftmost) outermost β-reduction possible.

Definition: The applicative order evaluation rule is to perform the (leftmost) innermost β-reduction possible.

Doing one reduction using normal-order evaluation on our example gives an answer of ((λx.x)(λx.xy)). The outer (redundant) parentheses are removed and we get (λx.x)(λx.xy).

If, instead, we do one applicative-order reduction we get the same answer, but it seems for a completely different reason. Must the answers always be the same?

Do it again in class with the following more complicated example. (λx.λy.yxx)((λx.x)(λy.z)).

In this case doing one normal-order reduction gives a different answer from doing one applicative-order reduction. But we have the following celebrated.

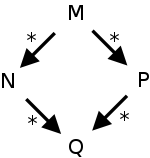

Church-Rosser Theorem: If a term M can be β-reduced (in 0 or more steps) to two terms N and P, then there is a term Q so that both N and P can be β-reduced to Q.

Corollary: If you start with a term M and keep β-reducing it until it can no longer be reduced, you will always get the same final term.

Definition: A term than cannot be β-reduced is said to be in normal form.

Continue on the board to find the normal form of (λx.λy.yxx)((λx.x)(λy.z)).

That is, if you keep β-reducing, with the process terminate?

No. Consider (λx.xx)(λx.xx).

Were this a theory course we would rigorously explain the notion of computability. In this class, we will be content to say that roughly speaking computability theory studies the following question: Given a model of computation, what functions can be computed? There are many models of computation. For example we could ask for all functions computable

Turing Machine.

A fundamental result is that for all the models listed the same functions are computable.

Definition: Any model for which the computable functions are the same as those for a Turing Machine is called Turing Complete.

Thus, the fundamental result is that all the models listed above are Turing Complete. This should be surprising! How can the silly λ-calculus compute all the functions computable in Ada; after all the λ-calculus doesn't even have numbers?? Or Boolean values? Or loops? Or Recursion?

I will just show a little about numbers.

First remember that the number three is a concept or an

abstraction.

Writing three as three

, or 3

, or III

,

or 0011

does not change the meaning of three.

What I will show is a representation of every non-negative number

and a function that corresponds to adding 1 (finding the successor).

Much more would be shown in a theory course.

It should not be surprising that each number will be represented as a function taking one argument—that is all we have to work with! The function with one parameter representing the number n takes as argument a function f and returns function g taking one argument. The function g will apply f n-times to its argument.

I think those words are correct, but I also think the following symbolism is clearer.

So how do we represent the successor function S(n)=n+1? It must take one argument n and produce a function that takes an argument f and yields a function that applies f one more time to its argument than n does.

Again the symbols may be clearer than the words

S is λn.λf.λx.f(nfx)

Show on the board that S1 is 2, i.e., show that

(λn.λf.λx.f(nfx))(λf.λx.fx)

is λf.λx.f(fx)

To make it clearer, first perform α-conversion to λf.λx.fx and get λg.λy.gy

First note that in the expression λx.λy.z, the left most function is higher order. That is, it is given an argument x and it produces a function λy.z.

Given (higher-order) functions of one variable, it is easy to

define functions of multiple variables by

λxy.z = λx.λy.z

This adds no power to the λ-calculus, but does make for

shorter notation.

For example, the successor function above is now written

S is λnfx.f(nfx).

This section shows how to model Boolean values, if-then-else, and recursion. Although I find it very pretty, I am skipping it.

This section shows how to model the Scheme constructs for list processing, from which one can build many other structures. Again, I am skipping it.

Lisp is one of the first high level languages, invented by John McCarthy in 1958 while at MIT (McCarthy is mainly associated with Stanford). Many dialects have appeared. Currently, two main dialects are prominent for standalone use: Common Lisp and Scheme. The Emacs editor is largely written in a Lisp dialect (elisp) and elisp is used as a scripting language to extend/customize the editor.

Whereas, Common Lisp is a large language, Scheme takes a more minimalist approach and is design to have clear and simple semantics.

Scheme was first described in 1975, so had the benefit of nearly 20 years of Lisp experience.

special formsthat have unique evaluation rules. We shall discuss some later.

Scheme interpreters execute a read-eval-print loop. That is, the interpreter reads an expression, evaluates the expression, and prints the result, after which it waits to read another expression.

It is common to use ⇒ to indicate the output produced.

Thus instead of writing

If the user types (+ 7 6), the interpreter replies 13

authors write

(+ 7 6) ⇒ 13

.

I follow this convention.

Note that the interpreter itself does not print ⇒; it simply

prints the answer 13

in the previous example.

Try ssh access.cims.nyu.edu; then ssh mauler; then mzscheme. Illustrate the above in mzscheme. There is a drscheme environment, you may wish to investigate.

Remark: Remember that you may implement labs on any platform, but they must run at on the class platform, which is mzscheme.

The syntax is trivial; much simpler than other languages you know. Every object in scheme is either an atom, a (dotted) pair, or a list. We will have little to say about pairs. Indeed, some of the words to follow should be modified to take pairs into consideration.

An atom is either a symbol (similar to an identifier in other languages) or a literal. Literals include numbers (we use mainly integers), Booleans (#t and #f), characters (#\a, #\b, etc.; we won't use these much), and strings ("hello", "12", "/usr/lib/scheme", etc.).

Symbols can contain some punctuation characters; we will manly use easy symbols starting with a letter and containing letters, digits, and dash (the minus sign). For example, x23 and hello-world are symbols.

A list can be null (the empty list); or a list of elements, each of which can be an atom or a list. Some example lists:

() (a b c)

(1 2 (3)) ( () )

(xy 2 (x y ((xy)) 4)) ( () "")

( () () ) ( (()) (""))

Note that nested lists can be viewed as trees (the null list is tricky).

Literals are self-evaluating, i.e., they evaluate to themselves.

453 ⇒ 453

"hello, world" ⇒ "hello, world"

#t ⇒ #t

#\8 ⇒ #\8

Symbols evaluate to their current binding, i.e., they are de-referenced as in languages like C. This concludes atoms.

A list is a computation. There are two types.

What if you want the list itself (or a symbol itself), e.g., what

if you want the data item ("hello" hello) and don't want to evaluate

"hello" on hello (indeed "hello" is not an operation so that would

be erroneous)?

Then you need a special form

, in this case the form

quote.

We have already seen a few scheme functions I remember define, lambda, + from lecture one. The third one + is not a special form. The symbol + evaluates to a function and the remaining elements of its list evaluate to the arguments. The function + is invoked with these arguments and the result is their sum.

Since values are typed, but symbols are not, programs need a way to determine the type of the value current bound to the symbol.

The book The Little Schemer

recommends

(define atom? (lambda (x) (and (not (pair? x)) (not (null? x)))))

I do this personally and sometime forget that atom? is not

part of standard Scheme.

Two other predicates are very useful as well

Compare

(+ x x) (lambda (x) (+ x x))

The second does not evaluate any of the x's.

Instead it does something special; in this case it creates an

unnamed function with one parameter x and establishes the body to be

(+ x x).

No addition is performed when the lambda is

executed (it is performed later when the created function is

invoked).

Problem: Every list is a computation (or a special form).

How do we enter a list that is to be treated as data?

Answer: Clearly we need some kind of quoting mechanism.

Note that "(this is a data list)" produces a string, which is not a list at all. Hence the special form (quote data).

Quoting is used to obtain a literal symbol (instead of a variable reference), a literal list (instead of a function call), or a literal vector (we won't use vectors). An apostrophe ' is a shorthand.

(quote hello) ⇒ hello

'hello ⇒ hello

(quote (this is a data list)) ⇒ (this is a data list)

'(this is a data list) ⇒ (this is a data list)

Scheme has four special forms for binding symbols to values: define is used to give global definitions and the trio let, let*, letrec are used for generating a nested scope and defining bindings for that scope.

(define x y) (define x "a string") (define f (lambda (x) (+ x x)))

On the right we see three uses of define.

The special

part about define is that it does

NOT evaluate its first parameter.

Instead, it binds that parameter to the value of the 2nd parameter,

which it does evaluate.

The form define cannot be used in a nested scope; One can

redefine an existing symbol so the first two functions on

the right can appear together as listed.

The third function is not any different from the first two: the

symbol f is bound in the global scope to the value computed

by the 2nd argument, which just happens to be a function.

All three let variations have the same general form

(let ; or let* or letrec

( (var1 init1) (var2 init2) ... (var initn) )

body ) ; this ) matches (let

For all three variations, a nested environment is created, the

inits are evaluated, and the vars are bound in

to the values of the inits.

The difference is in the details, in particular, in the question of

which environment is used when.

For let, all the inits are evaluated in the current environments, the new (nested) environment is formed by adding bindings of the vars to the values of the inits. Hence none of the inits can use any of the vars. More precisely, if an init mentions a var it refers to the binding than symbol had in the current (pre-let) environment.

For let*, the inits are evaluated and the corresponding vars are bound in left to right order. Each evaluation is performed in an environment in which the preceding vars have been bound. For example, init3 can use var1 and var2 to refer to the values init1 and init2.

For letrec a three step procedure is used

(letrec ((fact

(lambda (n)

(if (zero? n) 1

(* n (fact (- n 1)))))))

(fact 5))

Thus any init that refers to any var is referencing that var's binding in the new (nested) scope. This is what is needed to define a recursive procedure (hence the name letrec). The factorial example on the right prints 120 and then exits the nested scope so that typing (fact 5) immediately after produces an error.

There are three basic functions and one critical constant associated with lists.

(car '(this "is" a list 3 of atoms)) ⇒ this (cdr '(this (has) (sublists))) ⇒ ((has) (sublists)) (car '(x)) ⇒ x (cdr '(x)) ⇒ '() (car '()) ⇒ error (cdr '()) ⇒ error

Another pair of names for car and cdr is, head and rest. The car is the head of the list (the first element) and the cdr is rest. If we continue (for just a little while longer) to ignore pairs, then we can say thatcar and cdr are defined only for non-empty lists and that cdr returns a (possibly empty) list.

Note that (car (cdr l)) gives the second element of a list and hence is a commonly used idiom. It can be abbreviated (cadr l). In fact any combination of cxxxxr with each x (no more than 4 allowed) an a or d is defined.

For example, (cdadar l) is (cdr (car (cdr (car l))))

(cons 5 '(5)) ⇒ (5 5) (cons 5 '()) ⇒ (5)

(cons '() '()) ⇒ (()) (cons 'x '(()) ⇒ (x ())

(cons "these" '("are" "strings")) ⇒ ("these" "are" "strings")

(cons 'a1 (cons 'a2 (cons 'a3 '()))) ⇒ (a1 a2 a3)

The function (cons x l) prepends x onto the list l. It may seem that to get a list with 5 elements we need 5 cons applications, but there is a shortcut.

The function list takes any number of arguments (including

0) and returns a list of n elements.

It is equivalent to n cons applications the rightmost

having '() as the 2nd argument.

For example

(list 'a1 'a2 'a3) is equivalent to

(cons 'a1 (cons 'a2 (cons 'a3 '()))), the last example on

the right.

You could very easily ask where the silly names car and cdr came from. Head or first make more sense for car, and rest makes more sense for cdr. In this section I briefly cover the historical reason for the car/cdr terminology, hint at how lists are implemented, introduce (dotted) pairs, show how our (proper) lists as well as improper lists can be built from these pairs and present box diagrams, which are another (this time pictorial) representation of lists, pairs, and improper lists.

Lisp was first implemented on the IBM 704 computer that had 32,768 36-bit words. Since 32,768 is 215, 15-bits were needed to address the words. A common instruction format (all instructions were 36-bits) had 15-bit address and decrement fields. There also were address and decrement registers. Typically, the pointer to the head of a list was the Contents of the Address Register (CAR) and the pointer to the rest was the Contents of the Decrement Register. The car and cdr were stored in the address and decrement fields of memory words as well.

Thus we see that the fundamental unit is a pair of pointers in lisp. This is precisely what cons always returns.

Box diagrams are useful for seeing pictorially what a list looks like in terms of cons cells. The referenced page is from the manual for emacs lisp, which has some minor differences from scheme. I believe the diagram is completely clear if you remember than nil is often used for the empty list ('() in Scheme).

Each box-pair or domino depicts a cons cell and is written in Scheme as a dotted pair (each box is one component of the pair).

Note how every list (including sublists) ends with a reference to

nil in the right hand component of the rightmost domino.

This corresponds to the fact that if you keep taking cdr (cddr,

cdddr, etc) of any list you will get to '() and then cdr is invalid.

This is the defining characteristic of a proper

list (normally called simply a list).

In fact the second argument to cons need not be a list.

The single domino improper

list beginning

this writeup on dotted pairs

shows the result of executing

(cons 'rose 'violet).

In this example the second argument is an atom, not a list.

The resulting cons cell can again be written as a dotted pair, in

this case it is (rose . violet).

Likewise, cdr is generalized to take this domino as input.

As before it returns the right hand box of the domino, in this

case violet.

Thus we maintain the fundamental identities

(car (cons a b)) is a and

(cdr (cons a b)) is b

for any objects a and b.

Previously b had to be a list.

The summary is that cons in generalized to not require a list for

the second argument, the resulting object is represented as a dotted

pair in scheme, and linking together dotted pairs gives a

generalized

list.

If the last dotted pair in every chain has cdr equal to '(), then

the generalized list is an ordinary list; otherwise it is

improper

.

Homework: CYU: 9.

Homework: 1, 3.