Start Lecture #7

For a uniprocessor, which is all we are officially considering, there is little gain in splitting pure computation into pieces. If the CPU is to be active all the time for all the threads, it is simpler to just have one (unithreaded) process.

But this changes for multiprocessors/multicores. Now it is very useful to split computation into threads and have each executing on a separate processor/core. In this case, user-mode threads are wonderful: there are no system calls, and the extremely low overhead is beneficial.

However, there are serious issues involved in programming applications for this environment.

One can move the thread operations into the operating system itself. This naturally requires that the operating system itself be (significantly) modified and is thus not a trivial undertaking.

One can write a (user-level) thread library even if the kernel also has threads. This is sometimes called the N:M model since N user-mode threads run on M kernel threads. In this scheme, the kernel threads cooperate to execute the user-level threads.

An offshoot of the N:M terminology is that kernel-level threading (without user-level threading) is sometimes referred to as the 1:1 model, since one can think of each thread as being a user-level thread executed by a dedicated kernel-level thread.

Homework: 12, 14.

Skipped

The idea is to automatically issue a thread-create system call upon message arrival. (The alternative is to have a thread or process blocked on a receive system call.) If implemented well, the latency between message arrival and thread execution can be very small since the new thread does not have state to restore.

Definitely NOT for the faint of heart.

Remark: Since the subject matter of lab 2 will be processor scheduling, we will do 2.4 before 2.3.

Scheduling processes on the processor is often called processor scheduling, process scheduling, or simply scheduling. As we shall see later in the course, a more descriptive name would be short-term, processor scheduling.

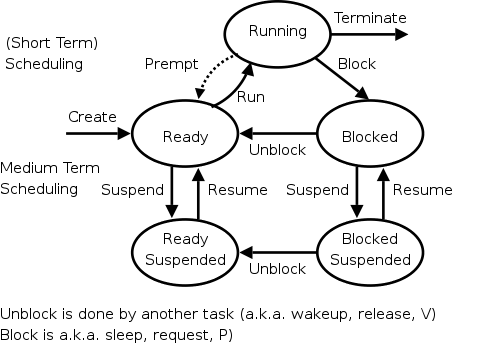

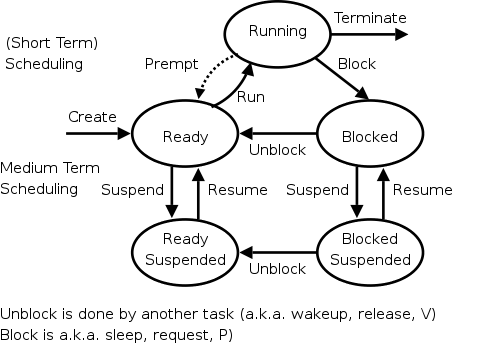

For now we are discussing the arcs connecting running↔ready in the diagram on the right showing the various states of a process. Medium term scheduling is discussed later as is disk-arm scheduling.

Naturally, the part of the OS responsible for (short-term, processor) scheduling is called the (short-term, processor) scheduler and the algorithm used is called the (short-term, processor) scheduling algorithm.

Early computer systems were monoprogrammed and, as a result, scheduling was a non-issue.

For many current personal computers, which are definitely multiprogrammed, there is in fact very rarely more than one runnable process. As a result, scheduling is not critical.

For servers (or old mainframes), scheduling is indeed important and these are the systems you should think of.

Processes alternate CPU bursts with I/O activity, as we shall see in lab 2. The key distinguishing factor between compute-bound (aka CPU-bound) and I/O-bound jobs is the length of the CPU bursts.

The trend over the past decade or two has been for more and more jobs to become I/O-bound since the CPU rates have increased faster than the I/O rates.

An obvious point, which is often forgotten (I don't think 3e mentions it), is that the scheduler cannot run when the OS is not running. In particular, for the uniprocessor systems we are considering, no scheduling can occur when a user process is running. (In the multiprocessor situation, no scheduling can occur when all processors are running user jobs.)

Again we refer to the state transition diagram above.

It is important to distinguish preemptive from non-preemptive scheduling algorithms.

Non-preemptive algorithms let a process run until completion, yield, or block.

The preempt arc in the diagram is present for preemptive scheduling algorithms.

We distinguish three categories of scheduling algorithms with regard to the importance of preemption.

For multiprogrammed batch systems (we don't consider uniprogrammed systems, which don't need schedulers) the primary concern is efficiency. Since no user is waiting at a terminal, preemption is not crucial and, if it is used, each process is given a long time period before being preempted.

For interactive systems (and multiuser servers), preemption is crucial for fairness and rapid response time to short requests.

We don't study real-time systems in this course, but can say that preemption is typically not important since all the processes are cooperating and are programmed to do their task in a prescribed time window.

There are numerous objectives, several of which conflict, that a scheduler tries to achieve. These include:

Give more important processes higher priority. For example, if my laptop is trying to fold proteins in the background, I don't want that activity to appreciably slow down my compiles and especially don't want it to make my system seem sluggish when I am modifying these class notes. In general, interactive jobs should have higher priority.

job to its termination. This is important for batch jobs.

shortest job first.

wasted cycles and limited logins for repeatability.

This is used for real-time systems. The objective of the scheduler is to find a schedule for all the tasks (there is a fixed set of tasks) so that each meets its deadline. The run time of each task is known in advance.

Actually it is more complicated.

There is an amazing inconsistency in naming the different (short-term) scheduling algorithms. Over the years I have used primarily four books. In chronological order they are Finkel, Deitel, Silberschatz, and Tanenbaum. The table just below illustrates the name game for these four books. After the table we discuss several scheduling policies in some detail.

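| Finkel | Deitel | Silberschatz | Tanenbaum | Note |
|--------|--------|--------------|-----------|------|
| FCFS | FIFO | FCFS | FCFS | |
| RR | RR | RR | RR | |
| PS | ** | PS | PS | |
| SRR | ** | SRR | ** | not in Tanenbaum |
| SPN | SJF | SJF | SJF | |
| PSPN | SRT | PSJF/SRTF | SRTF | |
| HPRN | HRN | ** | ** | not in Tanenbaum |
| ** | ** | MLQ | ** | only in Silberschatz |
| FB | MLFQ | MLFQ | MQ | |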

Remark: For an alternate organization of the scheduling algorithms (due to my former PhD student Eric Freudenthal and presented by him Fall 2002) click here.

If the OS doesn't schedule, it still needs to store the list of ready processes in some manner.

If it is a queue you get FCFS.

If it is a stack (strange), you get LCFS.

Perhaps you could get some sort of random policy as well.
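The queue-versus-stack observation can be sketched in a few lines of Python. This is only an illustration, assuming all processes are already on the ready list before the first one is picked; the function name `run_order` and the process names are made up for the example.

```python
from collections import deque

def run_order(arrivals, policy):
    """Return the order in which processes are picked from the ready list.

    arrivals: process names in the order they became ready (assumption: all
              are ready before the first pick, to keep the sketch simple).
    policy:   "FCFS" treats the ready list as a queue, "LCFS" as a stack.
    """
    ready = deque(arrivals)
    order = []
    while ready:
        if policy == "FCFS":
            order.append(ready.popleft())  # oldest entry runs first
        elif policy == "LCFS":
            order.append(ready.pop())      # newest entry runs first
        else:
            raise ValueError(f"unknown policy: {policy}")
    return order

print(run_order(["A", "B", "C"], "FCFS"))  # ['A', 'B', 'C']
print(run_order(["A", "B", "C"], "LCFS"))  # ['C', 'B', 'A']
```

The only difference between the two policies is which end of the list the next process is taken from.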

Sort jobs by execution time needed and run the shortest first.

This is a non-preemptive algorithm.

First consider a static situation where all jobs are available in the beginning and we know how long each one takes to run. For simplicity let's consider run-to-completion, also called uniprogrammed (i.e., we don't even switch to another process on I/O). In this situation, uniprogrammed SJF has the shortest average waiting time.
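To see why, note that if n jobs run to completion in the order t_1, ..., t_n, then the k-th job waits t_1 + ... + t_(k-1), so the total waiting time is (n-1)t_1 + (n-2)t_2 + ... + 0·t_n. The largest weights multiply the jobs run earliest, so the sum is smallest when the shortest jobs go first. The sketch below checks this by brute force for a small set of run times; the numbers are hypothetical and chosen only for illustration.

```python
from itertools import permutations

def average_waiting_time(run_times):
    """Average time a job waits before starting, when the jobs are run
    to completion, one after another, in the given order."""
    total_wait = 0
    clock = 0
    for t in run_times:
        total_wait += clock  # this job waited for everything before it
        clock += t
    return total_wait / len(run_times)

jobs = [8, 4, 2, 6]          # hypothetical run times, in arrival order
sjf_order = sorted(jobs)     # shortest job first

# Brute force: the best average over every possible ordering.
best = min(average_waiting_time(p) for p in permutations(jobs))

print(average_waiting_time(jobs))       # 8.5 (arrival order)
print(average_waiting_time(sjf_order))  # 5.0 (SJF order)
print(best)                             # 5.0, so no ordering beats SJF here
```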

The above argument illustrates an advantage of favoring short jobs (e.g., RR with small quantum): The average waiting time is reduced.

In the more realistic case of true SJF where the scheduler switches to a new process when the currently running process blocks (say for I/O), we could also consider the policy shortest next-CPU-burst first.

The difficulty is predicting the future (i.e., knowing in advance the time required for the job or the job's next-CPU-burst).
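One common textbook heuristic for this prediction, which these notes do not describe, is exponential averaging: estimate the next CPU burst as a weighted mix of the most recent burst and the previous estimate. The sketch below assumes that approach; the function name, the weight `alpha`, and the initial estimate are illustrative choices, not values taken from any particular system.

```python
def predict_next_burst(observed_bursts, alpha=0.5, initial_estimate=10.0):
    """Estimate the next CPU burst by exponential averaging.

    After each observed burst the estimate becomes
        alpha * burst + (1 - alpha) * previous_estimate
    so recent bursts count more than older ones.
    alpha and initial_estimate are assumptions made for this sketch.
    """
    estimate = initial_estimate
    for burst in observed_bursts:
        estimate = alpha * burst + (1 - alpha) * estimate
    return estimate

# Estimates after each burst: 10 -> 8 -> 6 -> 6 -> 5
print(predict_next_burst([6, 4, 6, 4]))  # 5.0
```

With `alpha` near 1 the estimate tracks the last burst closely; with `alpha` near 0 it changes slowly and mostly remembers older history.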

SJF can starve a process that requires a long burst.
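A small simulation makes the starvation risk concrete. This is a sketch of non-preemptive SJF under assumed conditions: one long job (burst 10) is ready at time 0, and a new short job (burst 2) arrives every 2 time units. All names and numbers are hypothetical.

```python
import heapq

def sjf_start_times(jobs):
    """Simulate non-preemptive SJF.

    jobs: list of (name, arrival_time, burst) tuples.
    Returns a dict mapping each job name to the time it starts running.
    """
    pending = sorted(jobs, key=lambda j: j[1])  # ordered by arrival time
    ready = []                                  # min-heap keyed on burst
    starts = {}
    clock = 0
    i = 0
    while i < len(pending) or ready:
        # Admit every job that has arrived by the current time.
        while i < len(pending) and pending[i][1] <= clock:
            name, arrival, burst = pending[i]
            heapq.heappush(ready, (burst, arrival, name))
            i += 1
        if not ready:
            clock = pending[i][1]  # CPU idles until the next arrival
            continue
        burst, arrival, name = heapq.heappop(ready)  # shortest burst first
        starts[name] = clock
        clock += burst
    return starts

def long_job_start(n_short):
    """Start time of the long job when n_short short jobs keep arriving."""
    jobs = [("long", 0, 10)]
    jobs += [(f"short{k}", 2 * k, 2) for k in range(n_short)]
    return sjf_start_times(jobs)["long"]

print(long_job_start(5))   # 10
print(long_job_start(50))  # 100
```

The long job's start time grows with the number of short arrivals; if short jobs never stop arriving, the long job never runs.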