Start Lecture #6

This should be compared with the addenda on transfer of control and trap.

In a well-defined location in memory (specified by the hardware) the OS stores an interrupt vector, which contains the address of the interrupt handler.

Assume a process P is running and a disk interrupt occurs for the completion of a disk read previously issued by process Q, which is currently blocked. Note that disk interrupts are unlikely to be for the currently running process (because the process that initiated the disk access is likely blocked).

this instruction caused the interrupt.

program, namely the OS, and hence might well be using the same variables. We will soon see how this can cause great problems even in what appear to be trivial cases.

Consider a job that is unable to compute (i.e., it is waiting for I/O) a fraction p of the time.

There are at least two causes of inaccuracy in the above modeling procedure.

Nonetheless, it is correct that increasing MPL does increase CPU utilization up to a point.

An important limitation is memory. That is, we assumed that we have many jobs loaded at once, which means we must have enough memory for them. There are other memory-related issues as well and we will discuss them later in the course.
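The multiprogramming argument above can be made concrete with a small calculation. This is a minimal sketch, assuming the usual simplified model in which each of n jobs waits for I/O independently a fraction p of the time, so all n wait at once with probability p^n and the CPU is busy the rest of the time. The function name `cpu_utilization` is an illustrative choice, not something defined in these notes.

```python
def cpu_utilization(p: float, n: int) -> float:
    """Estimated CPU utilization with n jobs loaded (MPL = n).

    Assumes each job waits for I/O a fraction p of the time and that
    the jobs wait independently of one another (a simplification).
    """
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must be between 0 and 1")
    if n < 0:
        raise ValueError("n must be non-negative")
    # Probability that all n jobs are waiting at once is p**n;
    # the CPU is idle only in that case.
    return 1.0 - p ** n


# Utilization rises with the multiprogramming level, with diminishing returns.
for n in (1, 2, 4, 8):
    print(n, round(cpu_utilization(0.8, n), 3))
```

The diminishing returns visible in the printed values are one way to read "up to a point": each additional job helps less than the previous one, and every job costs memory.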

Homework: 5.

| Per-process items | Per-thread items |
|---|---|
| Address space | Program counter |
| Global variables | Machine registers |
| Open files | Stack |
| Child processes | |
| Pending alarms | |
| Signals and signal handlers | |
| Accounting information | |

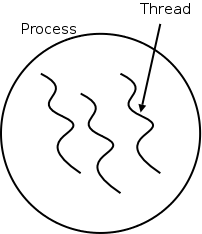

The idea behind threads is to have separate threads of control (hence the name) running in the address space of a single process, as shown in the diagram above. An address space is a memory management concept. For now think of an address space as the memory in which a process runs. (In reality it also includes the mapping from virtual addresses, i.e., addresses in the program, to physical addresses, i.e., addresses in the machine.) The table above shows which properties are common to all threads in a given process and which properties are thread specific.

Each thread is somewhat like a process (e.g., it shares the processor with other threads), but a thread contains less state than a process (e.g., the address space belongs to the process in which the thread runs).

Often, when a process P executing an application is blocked (say for I/O), there is still computation that can be done for the application. Another process can't do this computation since it doesn't have access to P's memory. But two threads in the same process do share memory so that problem doesn't occur.

An important modern example is a multithreaded web server.

Each thread is responding to a single WWW connection.

While one thread is blocked on I/O, another thread can be processing another WWW connection.

Question: Why not use separate processes, i.e., what is the shared memory?

Answer: The cache of frequently referenced pages.

A common organization for a multithreaded application is to have a dispatcher thread that fields requests and then passes each request on to an idle worker thread. Since the dispatcher and worker share memory, passing the request is very low overhead.
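The dispatcher/worker organization can be sketched with Python's standard `threading` and `queue` modules. This is only an illustration under stated assumptions: the pool size, the `results` dictionary, and the uppercase "handling" of a request are all hypothetical stand-ins, and a shared queue replaces the step of picking an idle worker explicitly.

```python
import queue
import threading

NUM_WORKERS = 3                    # hypothetical pool size
requests = queue.Queue()           # shared by the dispatcher and the workers
results = {}                       # shared memory: request id -> response
results_lock = threading.Lock()


def worker():
    while True:
        req = requests.get()       # blocks while no request is pending
        if req is None:            # sentinel: no more work for this worker
            break
        req_id, payload = req
        response = payload.upper() # stand-in for real request handling
        with results_lock:
            results[req_id] = response


def dispatcher(incoming):
    workers = [threading.Thread(target=worker) for _ in range(NUM_WORKERS)]
    for w in workers:
        w.start()
    for item in enumerate(incoming):
        requests.put(item)         # hands over a reference, not a copy
    for _ in workers:
        requests.put(None)         # one sentinel per worker
    for w in workers:
        w.join()


dispatcher(["index.html", "about.html", "faq.html", "news.html"])
print(results)
```

Because all the threads live in one address space, handing over a request only enqueues a reference to it. With separate processes the request would have to be copied or placed in explicitly shared memory.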

Another example is a producer-consumer problem (see below) in which we have 3 threads in a pipeline. One thread reads data from an I/O device into an input buffer, the second thread performs computation on the input buffer and places results in an output buffer, and the third thread outputs the data found in the output buffer. Again, while one thread is blocked the others can execute.

Really you want 2 (or more) input buffers and 2 (or more) output buffers. Otherwise the middle thread would be using all the buffers and would block both outer threads.
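The three-thread pipeline with two buffers per stage might look like the following sketch. The names `in_buf`, `out_buf`, `DONE`, and `written` are assumptions made for illustration, squaring a number stands in for the real computation, and a bounded `queue.Queue` plays the role of each set of buffers.

```python
import queue
import threading

BUFFER_SLOTS = 2                          # two buffers on each side of the middle thread
in_buf = queue.Queue(maxsize=BUFFER_SLOTS)
out_buf = queue.Queue(maxsize=BUFFER_SLOTS)
DONE = object()                           # hypothetical end-of-stream marker
written = []                              # stands in for the output device


def reader(source):
    for item in source:
        in_buf.put(item)                  # blocks while both input buffers are full
    in_buf.put(DONE)


def computer():
    while True:
        item = in_buf.get()               # blocks while the input buffers are empty
        if item is DONE:
            break
        out_buf.put(item * item)          # blocks while both output buffers are full
    out_buf.put(DONE)


def writer():
    while True:
        item = out_buf.get()              # blocks while the output buffers are empty
        if item is DONE:
            break
        written.append(item)


threads = [
    threading.Thread(target=reader, args=(range(5),)),
    threading.Thread(target=computer),
    threading.Thread(target=writer),
]
for t in threads:
    t.start()
for t in threads:
    t.join()
print(written)
```

With only one slot per queue, the middle thread and its neighbor would take turns on the same buffer. A second slot lets one thread fill a buffer while the other drains its predecessor.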

Question: When does each thread block?

Answer:

A final (related) example is that an application wishing to perform automatic backups can have a thread to do just this. In this way the thread that interfaces with the user is not blocked during the backup. However, some coordination between threads may be needed so that the backup is of a consistent state.

A process contains a number of resources such as address space, open files, accounting information, etc. In addition to these resources, a process has a thread of control, e.g., program counter, register contents, stack. The idea of threads is to permit multiple threads of control to execute within one process. This is often called multithreading and threads are sometimes called lightweight processes. Because threads in the same process share so much state, switching between them is much less expensive than switching between separate processes.

Individual threads within the same process are not completely independent. For example there is no memory protection between them. This is typically not a security problem as the threads are cooperating and all are from the same user (indeed the same process). However, the shared resources do make debugging harder. For example one thread can easily overwrite data needed by another thread in the process and when the second thread fails, the cause may be hard to determine because the tendency is to assume that the failed thread caused the failure.

A new thread in the same process is created by a routine named something like thread_create; similarly there is thread_exit. The analogue to waitpid is thread_join (the name comes presumably from the fork-join model of parallel execution).

The routine thread_yield, which relinquishes the processor, does not have a direct analogue for processes. The corresponding system call (if it existed) would move the process from running to ready.
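As a rough illustration of the create/exit/join routines, here is how they appear in Python's `threading` module. The mapping in the comments is an analogy, not an exact correspondence: Python splits creation into constructing a `Thread` and calling `start`, and a thread exits simply by returning from its function. The shared `counter` and the function `body` are hypothetical names.

```python
import threading

counter = {"value": 0}       # lives in the process's address space, visible to all threads
lock = threading.Lock()      # coordination, since threads are not protected from each other


def body(increments):
    for _ in range(increments):
        with lock:
            counter["value"] += 1
    # Returning from the function plays the role of thread_exit.


# Constructing and starting a Thread together play the role of thread_create.
t1 = threading.Thread(target=body, args=(1000,))
t2 = threading.Thread(target=body, args=(1000,))
t1.start()
t2.start()

# join plays the role of thread_join: wait for the named thread to finish.
t1.join()
t2.join()
print(counter["value"])
```

Both threads update the same dictionary without any copying, which is the shared state the table of per-process items describes.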

Homework: 11.

Assume a process has several threads. What should we do if one of these threads

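POSIX threads (pthreads) is an IEEE standard specification that is supported by many Unix and Unix-like systems. Pthreads follows the classical thread model above and specifies routines such as pthread_create, pthread_exit, and pthread_join. (Yielding the processor is done with sched_yield; pthread_yield appears in some textbooks and implementations but is not part of the standard.)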

An alternative to the classical model is the so-called Linux threads (see Section 10.3 in the 3e).

Write a (threads) library that acts as a mini-scheduler and implements thread_create, thread_exit, thread_wait, thread_yield, etc. This library acts as a run-time system for the threads in this process. The central data structure maintained and used by this library is a thread table, the analogue of the process table in the operating system itself.

There is a thread table and an instance of the threads library in each multithreaded process.
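A toy version of such a library can be sketched with Python generators standing in for threads. This is an illustration under stated assumptions, not how a real user-level package is built: a real library saves and restores registers and stacks, whereas here the generator object holds the saved state and a bare `yield` plays the role of thread_yield. The class name `ThreadLibrary` and the round-robin policy are choices made for this sketch.

```python
from collections import deque


class ThreadLibrary:
    """Toy user-level threads package; each 'thread' is a Python generator."""

    def __init__(self):
        self.thread_table = {}     # tid -> generator holding the thread's saved state
        self.ready = deque()       # tids of threads that are ready to run
        self.next_tid = 0

    def thread_create(self, func, *args):
        tid = self.next_tid
        self.next_tid += 1
        self.thread_table[tid] = func(*args)   # nothing runs until it is scheduled
        self.ready.append(tid)
        return tid

    def run(self):
        """Mini-scheduler: round-robin over ready threads until all have exited."""
        while self.ready:
            tid = self.ready.popleft()
            try:
                next(self.thread_table[tid])   # run the thread until it yields
                self.ready.append(tid)         # it yielded: running -> ready
            except StopIteration:
                del self.thread_table[tid]     # it returned: thread_exit


trace = []


def worker(name, steps):
    for i in range(steps):
        trace.append((name, i))
        yield                      # plays the role of thread_yield


lib = ThreadLibrary()
lib.thread_create(worker, "A", 2)
lib.thread_create(worker, "B", 3)
lib.run()
print(trace)
```

The operating system sees none of this: the scheduler loop, the thread table, and the switching all happen inside one ordinary process.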

Advantages of User-Mode Threads:

Disadvantages