Start Lecture #12

If there are boolean variables (or variables into which a boolean value can be placed), we can have boolean assignment statements. That is, we might evaluate boolean expressions outside of control flow statements.

Recall that the code we generated for boolean expressions (inside control flow statements) used inherited attributes to push down the tree the exit labels B.true and B.false. How are we to deal with Boolean assignment statements?

Up to now we have used the so-called jumping code method for Boolean quantities. We evaluated Boolean expressions (in the context of control flow statements) by using inherited attributes for the true and false exits (i.e., the target locations to jump to if the expression evaluates to true and false).

With this method if we have a Boolean assignment statement, we just let the true and false exits lead respectively to statements

LHS = true

LHS = false

In the second method we simply treat boolean expressions as expressions. That is, we just mimic the actions we did for integer/real evaluations.

Thus Boolean assignment statements like

a = b OR (c AND d AND (x < y))

just work.

For control flow statements such as

while boolean-expression do statement-list end ;

if boolean-expression then statement-list else statement-list end ;

we simply evaluate the boolean expression as if it were part of an assignment statement and then have two jumps to where we should go if the result is true or false.

However, as mentioned before, this is wrong.

In C and other languages if (a==0 || 1/a > f(a)) is guaranteed not to divide by zero and the above implementation fails to provide this guarantee. Thus, even if we use method 2 for implementing Boolean expressions in assignment statements, we must implement short-circuit Boolean evaluation for control flow. That is, we need to use jumping code for control flow. The easiest solution is to use method 1, i.e., employ jumping code for all Boolean expressions.

Our intermediate code uses symbolic labels. At some point these must be translated into addresses of instructions. If we use quads all instructions are the same length so the address is just the number of the instruction. Sometimes we generate the jump before we generate the target so we can't put in the instruction number on the fly. Indeed, that is why we used symbolic labels. The easiest method of fixing this up is to make an extra pass (or two) over the quads to determine the correct instruction number and use that to replace the symbolic label. This is extra work; a more efficient technique, which is independent of compilation, is called backpatching.

Evaluate an expression, compare it with a vector of constants that are viewed as labels of the arms of the switch, and execute the matching arm (or a default).

The C language is unusual in that the various cases are just labels for a giant computed goto at the beginning. The more traditional idea is that you execute just one of the arms, as in a series of

if

else if

else if

...

end if

One implementation is to transform the switch into the series of else if's above. This executes roughly k jumps (worst case) for k cases.

The class grammar does not have a switch statement so we won't do a detailed SDD.

An SDD for the second method above could be organized as follows.

Much of the work for procedures involves storage issues and the run time environment; this is discussed in the next chapter.

The intermediate language we use has commands for parameters, calls, and returns. Enhancing the SDD to produce this code would not be difficult, but we shall not do it.

The first requirement is to record the signature of the procedure/function definition in the symbol (or related) table. The signature of a procedure is a vector of types, one for each parameter. The signature of a function includes as well the type of the returned value.

An implementation would enhance the SDD so that the productions involving parameter(s) are treated in a manner similar to our treatment of declarations.

First consider procedures (or functions) P and Q both defined at the top level, which is the only case supported by the class grammar. Assume the definition of P precedes that of Q. If the body of Q (the part between BEGIN and END) contains a call to P, then the compiler can check the types because the signature of P has already been stored.

If, instead, P calls Q, then additional mechanisms are needed since the signature of Q is not available at the call site. (Requiring the called procedure to always precede the caller would preclude the case of mutual recursion, P calls Q and Q calls P.)

These same considerations apply if both P and Q are nested inside another procedure/function R. The difference is that the signatures of P and Q are placed in R's symbol table rather than in the top-level symbol table.

The class grammar does not support nested scopes at all. However, the grammatical changes are small.

As we have mentioned previously, procedure/function/block nesting has a significant effect on symbol table management.

We will see in the next chapter what the code generated by the compiler must do to access the non-local names that are a consequence of nested scopes.

Homework: Read Chapter 7.

We are discussing storage organization from the point of view of the compiler, which must allocate space for programs to be run. In particular, we are concerned with only virtual addresses and treat them uniformly.

This should be compared with an operating systems treatment, where we worry about how to effectively map these virtual addresses to real memory. For example, see the discussion concerning the diagrams in my OS class notes, which illustrate an OS difficulty with the allocation method used in this course, a method that uses a very large virtual address range. Perhaps the most straightforward solution uses multilevel page tables.

Some systems impose various alignment constraints. For example, 4-byte integers might need to begin at a byte address that is a multiple of four. Unaligned data might be illegal or might lower performance. To achieve proper alignment, padding is often used.

As mentioned above, there are various OS issues we are ignoring, for example the mapping from virtual to physical addresses, and consequences of demand paging. In this class we simply allocate memory segments in virtual memory and let the operating system worry about managing real memory. In particular, we consider the following four areas of virtual memory.

static area do not change during execution, these areas, unlike the next two so-called dynamic areas, have no need for an expansion region.

new, or via a library function call, such as malloc(). It is deallocated either by another executable statement, such as a call to free(), or automatically by the system via garbage collection. We will have little more to say about the heap.

Much (often most) data cannot be statically allocated. Either its size is not known at compile time or its lifetime is only a subset of the program's execution.

Early versions of Fortran used only statically allocated data. This required that each array had a constant size specified in the program. Another consequence of supporting only static allocation was that recursion was forbidden (otherwise the compiler could not tell how many versions of a variable would be needed).

Modern languages, including newer versions of Fortran, support both static and dynamic allocation of memory.

The advantage of supporting dynamic storage allocation is the increased flexibility and storage efficiency possible (instead of declaring an array to have a size adequate for the largest data set, just allocate what is needed). The advantage of static storage allocation is that it avoids the runtime costs for allocation/deallocation and may permit faster code sequences for referencing the data.

An (unfortunately, all too common) error is a so-called memory leak, where a long-running program repeatedly allocates memory that it fails to delete, even after it can no longer be referenced. To avoid memory leaks and ease programming, several programming language systems employ automatic garbage collection. That means the runtime system itself determines when data can no longer be referenced and automatically deallocates it.

The scheme to be presented achieves the following objectives.

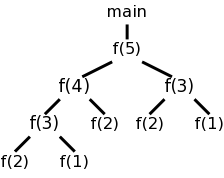

Recall the Fibonacci sequence 1,1,2,3,5,8, ... defined by f(1)=f(2)=1 and, for n>2, f(n)=f(n-1)+f(n-2). Consider the function calls that result from a main program calling f(5). Surrounding the more-general pseudocode that calculates (very inefficiently) the first 10 Fibonacci numbers, we show the calls and returns that result from main calling f(5). On the left they are shown in a linear fashion and, on the right, we show them in tree form. The latter is sometimes called the activation tree or call tree.

    int a[10];
    int f(int n);    /* prototype so that main can call f before its definition */
    int main(){
        int i;
        for (i=0; i<10; i++){
            a[i] = f(i);
        }
    }
    int f (int n) {
        if (n<3) return 1;
        return f(n-1)+f(n-2);
    }

    System starts main
        enter f(5)
            enter f(4)
                enter f(3)
                    enter f(2)
                    exit f(2)
                    enter f(1)
                    exit f(1)
                exit f(3)
                enter f(2)
                exit f(2)
            exit f(4)
            enter f(3)
                enter f(2)
                exit f(2)
                enter f(1)
                exit f(1)
            exit f(3)
        exit f(5)
    main ends

We can make the following observations about these procedure calls.

Homework: 1, 2.

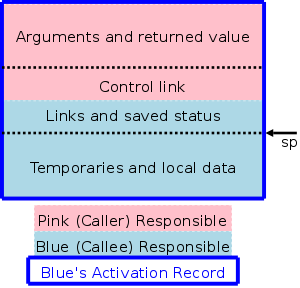

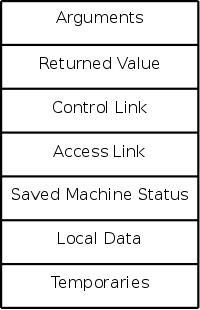

The information needed for each invocation of a procedure is kept in a runtime data structure called an activation record (AR) or frame. The frames are kept in a stack called the control stack.

Note that this is memory used by the compiled program, not by the compiler. The compiler's job is to generate code that obtains the needed memory and to reference the data stored in the ARs.

At any point in time the number of frames on the stack is the current depth of procedure calls. For example, in the fibonacci execution shown above when f(4) is active there are three activation records on the control stack.

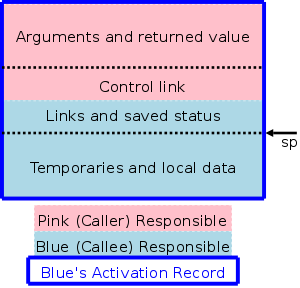

ARs vary with the language and compiler implementation. Typical components are described below and pictured to the right. In the diagrams the stack grows down the page.

actual parameters). The first few arguments are often instead placed in registers.

live temporaries are relevant.

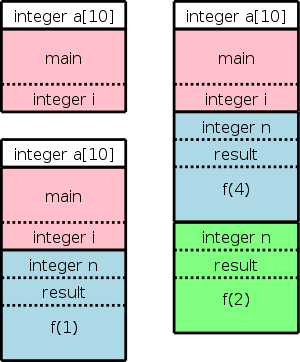

The diagram on the right shows (part of) the control stack for the fibonacci example at three points during the execution. The solid lines separate ARs; the dashed lines separate components within an AR.

In the upper left we have the initial state. We show the global variable a although it is not in an activation record. It is instead statically allocated before the program begins execution (recall that the stack and heap are each dynamically allocated). Also shown is the activation record for main, which contains storage for the local variable i. Recall that local variables are near the end of the AR.

Below the initial state we see the next state when main has called f(1) and there are two activation records, one for main and one for f. The activation record for f contains space for the argument n and also for the returned value. Recall that arguments and the return value are allocated near the beginning of the AR. There are no local variables in f.

At the far right is a later state in the execution when f(4) has been called by main and has in turn called f(2). There are three activation records, one for main and two for f. It is these multiple activations for f that permit the recursive execution. There are two locations for n and two for the returned value.

The calling sequence, executed when one procedure (the caller) calls another (the callee), allocates an activation record (AR) on the stack and fills in the fields. Part of this work is done by the caller; the remainder by the callee. Although the work is shared, the AR is called the callee's AR.

Since the procedure being called is defined in one place, but normally called from many places, we would expect to find more instances of the caller activation code than of the callee activation code. Thus it is wise, all else being equal, to assign as much of the work to the callee as possible.

Although details vary among implementations, the following principle is often followed: Values computed by the caller are placed before any items of size unknown by the caller. This way they can be referenced by the caller using fixed offsets.

One possible arrangement is the following.

The picture above illustrates the situation where a pink procedure (the caller) calls a blue procedure (the callee). Also shown is Blue's AR. It is called Blue's AR because its lifetime matches that of Blue even though responsibility for this single AR is shared by both procedures.

The picture is just an approximation: For example, the returned value is actually Blue's responsibility, although the space might well be allocated by Pink. Naturally, the returned value is only relevant for functions, not procedures. Also some of the saved status, e.g., the old sp, is saved by Pink.

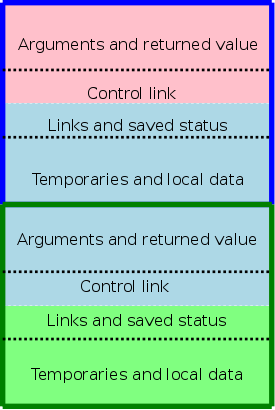

The picture to the right shows what happens when Blue, the callee, itself calls a green procedure and thus Blue is also a caller. You can see that Blue's responsibility includes part of its AR as well as part of Green's.

The following actions occur during a call.

When the procedure returns, the following actions are performed by the callee, essentially undoing the effects of the calling sequence.

Note that varargs are supported.

Also note that the values written during the calling sequence are not erased and the space is not explicitly reclaimed. Instead, the sp is restored and, if and when the caller makes another call, the space will be reused.

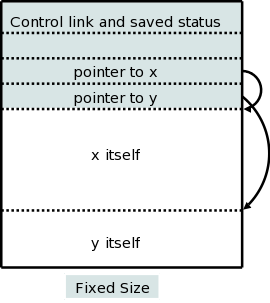

There are two flavors of variable-length data.

It is the second flavor that we wish to allocate on the stack. The goal is for the callee to be able to access these arrays using addresses determined at compile time even though the size of the arrays is not known until the program is called, and indeed often differs from one call to the next (even when the two calls correspond to the same source statement).

The solution is to leave room for pointers to the arrays in the AR. These pointers are fixed size and can thus be accessed using offsets known at compile time. When the procedure is invoked and the sizes are known, the pointers are filled in and the space allocated.

A difficulty caused by storing these variable size items on the stack is that it no longer is obvious where the real top of the stack is located relative to sp. Consequently another pointer (we might call it real-top-of-stack) is also kept. This is used when the callee itself calls another procedure to tell where the new activation record should begin.

Homework: 4.

As we shall see, the ability of procedure P to access data declared outside of P (either declared globally outside of all procedures or, especially, those declared inside another procedure Q with P nested inside Q) offers interesting challenges.

In languages like standard C without nested procedures, visible names are either local to the procedure in question or are declared globally.

With nested procedures a complication arises. Say g is nested inside f. So g can refer to names declared in f. These names refer to objects in the AR for f. The difficulty is finding that AR when g is executing. We can't tell at compile time where the (most recent) AR for f will be relative to the current AR for g since a dynamically-determined (i.e., unknown at compile time) number of routines could have been called in the middle.

There is an example in the next section in which g refers to x, which is declared in the immediately outer scope (main), but the AR is 2 away because f was invoked in between. (In that example you can tell at compile time what was called in what order, but with a more complicated program having data-dependent branches, it is not possible.)

The book asserts (correctly) that C doesn't have nested procedures so introduces ML, which does (and is quite slick). However, many of you don't know ML and I haven't used it. Fortunately, a common extension to C is to permit nested procedures. In particular, gcc supports nested procedures. To check my memory I compiled and ran the following program.

    #include <stdio.h>

    int main (int argc, char *argv[]) {
        int x = 10;
        int g(int y) {
            int z = x+y;
            return z;
        }
        int f (int y) {
            return g(2*y);
        }
        (void) printf("The answer is %d\n", f(x));
        return 0;
    }

The program compiles without errors and the correct answer of 30 is printed.

So we can use C (really the GCC extension of C).

Remark: Many consider this gcc extension (or its implementation) to be evil. http://www.mailinglistarchive.com/xcode-users@lists.apple.com/msg14135.html http://lkml.indiana.edu/hypermail/linux/kernel/0405.1/1008.html http://lkml.indiana.edu/hypermail/linux/kernel/0405.1/0978.html

Outermost procedures have nesting depth 1. Other procedures have nesting depth 1 more than the nesting depth of the immediately outer procedure. In the example above, main has nesting depth 1; both f and g have nesting depth 2.

The AR for a nested procedure contains an access link that points to the AR of the most recent activation of the immediately outer procedure.

So in the example above the access link for f and the access link for g would each point to the AR of the activation of main. Then when g references x, defined in main, the activation record for main can be found by following the access link in the AR for g. Since g is nested in main, they are compiled together so, once the AR is determined, the same techniques can be used as for variables local to g.

This example was too easy.

However, the technique is quite general. For a procedure P to access a name defined in the 3-outer scope, i.e., the unique outer scope whose nesting depth is 3 less than that of P, you follow the access links three times. (Make sure you understand why the n-th outer scope, but not the n-th inner scope, is unique.)

The remaining question is: How are access links maintained?

Let's assume there are no procedure parameters. We are also assuming that the entire program is compiled at once.

Without procedure parameters, the compiler knows the name of the called procedure and hence its nesting depth. The compiler always knows the nesting depth of the caller.

Let the caller be procedure F and let the called procedure be G, so we have F calling G. Let N(proc) be the nesting depth of the procedure proc.

We distinguish two cases.

    P() {
        G() {...}
        P1() {
            P2() {
                ...
                Pk() {
                    F(){... G(); ...}
                }
                ...
            }
        }
    }

Our goal while creating the AR for G at the call from F is to set the access link to point to the AR for P. Note that the entire structure in the skeleton code shown is visible to the compiler. The current (at the time of the call) AR is the one for F. Starting from the access link stored there, which points to the AR of the procedure immediately enclosing F, we follow access links k more times, i.e., N(F)-N(G)+1 links in all, and get a pointer to the AR for P, which we can then place in the access link for the being-created AR for G.

The above works fine when F is nested (possibly deeply) inside G. It is the picture above but P1 is G.

When k=0 we get the gcc code I showed before and also the case of direct recursion where G=F. I do not know why the book separates out the case k=0, especially since the previous edition didn't.

Almost all of this section is covered in the OS class.

Covered in OS.

Covered in Architecture.

Covered in OS.

Covered in OS.

Stack data is automatically deallocated when the defining procedure returns. What should we do with heap data explicitly allocated with new/malloc?

The manual method is to require that the programmer explicitly deallocate these data. Two problems arise.

    loop
        allocate X
        use X
        forget to deallocate X
    end loop

As this program continues to run it will require more and more storage even though its actual usage is not increasing significantly.

allocate X

use X

deallocate X

100,000 lines of code not using X

use X

Both can be disastrous and motivate the next topic, which is covered in programming languages courses.

The system detects data that cannot be accessed (no direct or indirect references exist) and deallocates the data automatically.

Covered in programming languages.