Start Lecture #10

| Production | Semantic Rules |

|---|---|

| pd → PROC np IS ds BEG s ss END ; | ds.offset = 0 |

| ds → d ds1 |

d.offset = ds.offset ds1.offset = d.newoffset ds.totalSize = ds1.totalSize |

| ds → ε | ds.totalSize = ds.offset |

| d → di : ty ; | addType(di.entry, ty.type) addSize(di.entry, ty.size)

addOffset(di.entry, d.offset)

d.newoffset = d.offset + ty.size |

| di → ID | di.entry = ID.entry |

| ty → ARRAY [ NUM ] OF ty1 |

ty.type = array(NUM.value, t1.type) ty.size = NUM.value * t1.size |

| ty → INT |

ty.type = integer ty.size = 4 |

| ty → REAL |

ty.type = real ty.size = 8 |

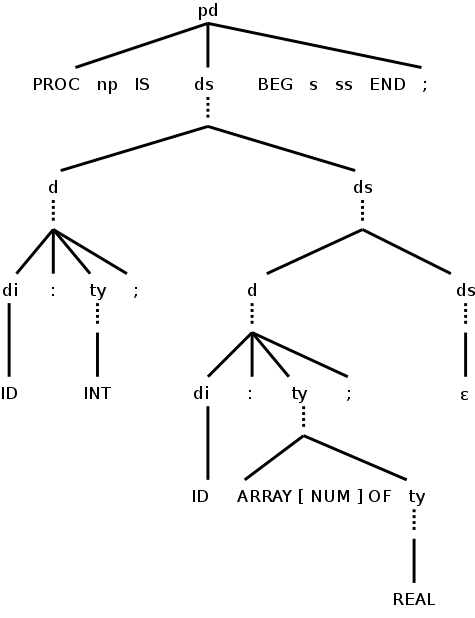

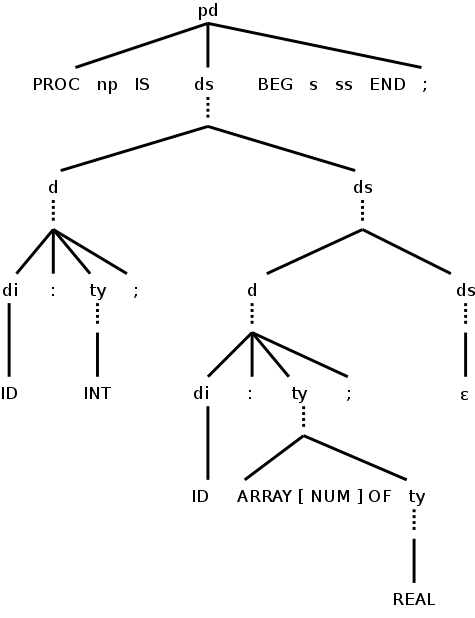

On the right we show the part of the SDD used to translate multiple declarations for the class grammar. We do not show the productions for name-and-parameters (np), statements (ss), or statement (s) since we are focusing just on the declaration of local variables.

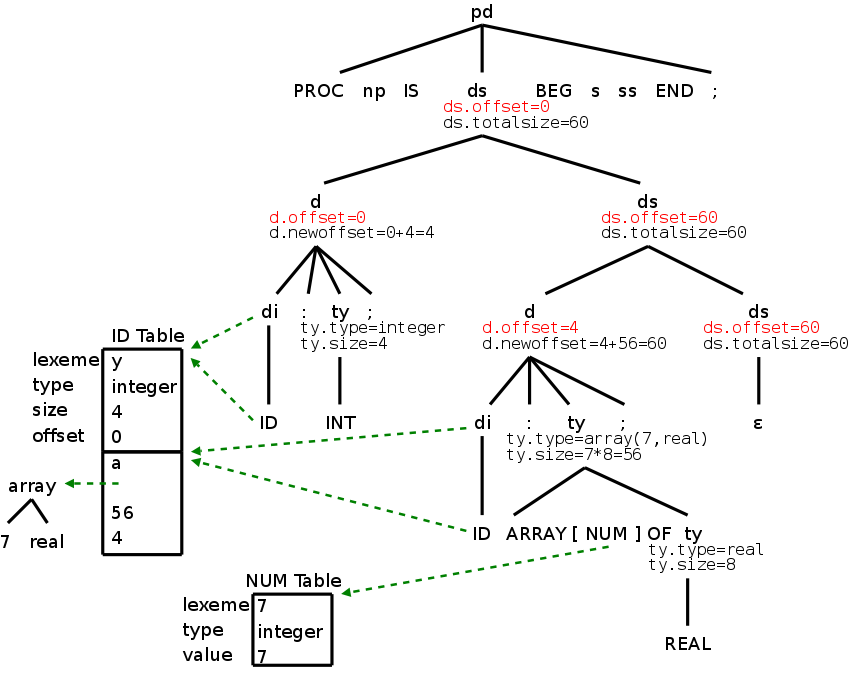

The new part is determining the offset for each individual declaration. The new items have blue backgrounds (this includes new, inherited attributes, which were red). The idea is that the first declaration has offset 0 and the next offset is the current offset plus the current size. Specifically, we proceed as follows.

In the procedure-def (pd) production, we give

the nonterminal declarations

(ds) the inherited attribute

offset (ds.offset), which we initialize to zero.

We inherit this offset down to individual declarations. At each declaration (d), we store the offset in the entry for the identifier being declared and increment the offset by the size of this object.

When we get the to the end of the declarations (the ε-production), the offset value is the total size needed. We turn it around and send it back up the tree in case the total is needed by some higher level production.

Example: What happens when the following program (an extension of P1 from the previous section) is parsed and the semantic rules above are applied.

procedure P2 is

y : integer;

a : array [7] of real;

begin

y := 5; // Statements not yet done

a[2] := y; // Type error?

end;

On the right is the parse tree, limited to the declarations.

The dotted lines would be solid for a parse tree and connect to the lines below. They are shown dotted so that the figure can be compared to the annotated parse tree immediately below, in which the attributes and their values have been filled in.

In the annotated tree, the attributes shown in red are inherited. Those in black are synthesized.

To start the annotation process, look at the top production in the parse tree. It has an inherited attributed ds.offset, which is set to zero. Since the attribute is inherited, the entry can be placed immediately into the annotated tree.

We now do the left child and fill in its inherited attribute.

When the Euler tour comes back up the tree, the synthesized attributes are evaluated and recorded.

Since records can essentially have a bunch of declarations inside, we only need add

T → RECORD { D }

to get the syntax right.

For the semantics we need to push the environment and offset onto

stacks since the namespace inside a record is distinct from that on

the outside.

The width of the record itself is the final value of (the inner)

offset, which in turn is the value of totalsize at the root when the

inner scope is concluded.

T → record { { Env.push(top);

top = new Env();

Stack.push(offset);

offset = 0; }

D } { T.type = record(top);

T.width = offset;

top = Env.pop();

offset = Stack.pop(); }

This does not apply directly to the class grammar, which does not have records.

This same technique would be used for other examples of nested scope, e.g., nested procedures/functions and nested blocks. To have nested procedures/functions, we need other alternatives for declaration: procedure/function definitions. Similarly if we wanted to have nested blocks we would add another alternative to statement.

s → ks | ids | block-stmt block-stmt → DECLARE ds BEGIN ss END ;

If we wanted to generate code for nested procedures or nested blocks, we would need to stack the symbol table as done above and in the text.

Homework: 1.

| Production | Semantic Rule |

|---|---|

| e → t |

e.addr = t.addr e.code = t.code |

| e → e1 ADDOP t |

e.addr = new Temp() e.code = e1.code || t.code || gen(e.addr = e1.addr ADDOP t.addr) |

| t → f |

t.addr = f.addr t.code = f.code |

| t → t1 MULOP f |

t.addr = new Temp() t.code = t1.code || f.code || gen(t.addr = t1.addr MULOP f.addr) |

| f → ( e ) |

f.addr = e.addr f.code = e.code |

| f → NUM |

f.addr = get(NUM.lexeme) f.code = "" |

| f → ID ins |

assume indices is ε f.addr = get(ID.lexeme) f.code = "" |

The goal is to generate 3-address code for scalar expressions, i.e., arrays are not treated in this section (they will be shortly). Specifically, indices is assumed to be ε. We use is to abbreviate indices.

We generate the 3-address code using the natural

notation of

6.2.

In fact we assume there is a function gen() that, given the pieces

needed, does the proper formatting so gen(x = y + z) will output the

corresponding 3-address code.

gen() is often called with addresses other than lexemes, e.g.,

temporaries and constants.

The constructor Temp() produces a new address in whatever format gen

needs.

Hopefully this will be clear in the table to the right and the

others that follow.

We will use two attributes code and address (addr). The key objective at each node of the parse tree for an expression is to produce values for the code and addr attributes so that the following crucial invariant is maintained.

IfIn particular, after TheRoot.code is evaluated, the address TheRoot.addr contains the value of the entire expression.codeis executed, then addressaddrcontains the value of the (sub-)expression routed at this node.

Said another way, the attribute addr at a node is the address that holds the value calculated by the code at the node. Recall that unlike real code for a real machine our 3-address code doesn't reuse temporary addresses.

As one would expect for expressions, all the attributes in the table to the right are synthesized. The table is for the expression part of the class grammar. To save space we use ID for IDENTIFIER, e for expression, t for term, and f for factor.

The SDDs for declarations and scalar expressions can be easily combined by essentially concatenating them as shown here.

We saw this in chapter 2.

The method in the previous section generates long strings as we walk the tree. By using SDTs instead of SDDs, you can output parts of the string as each node is processed.

| Production | Semantic Rules |

|---|---|

| pd → PROC np IS ds BEG s ss END ; | ds.offset = 0 |

| np → di ( ps ) | di | not used yet |

| ds → d ds1 |

d.offset = ds.offset ds1.offset = d.newoffset |

| ds.totalSize = ds1.totalSize | |

| ds → ε | ds.totalSize = ds.offset |

| d → di : ty ; |

addType(di.entry, ty.type) addBaseType(di.entry, ty.basetype)

addSize(di.entry, ty.size)addOffset(di.entry, d.offset) d.newoffset = d.offset + ty.size |

| di → ID | di.entry = ID.entry |

| ty → ARRAY [ NUM ] OF ty1 |

ty.type = array(NUM.value, ty1.type)

ty.basetype = ty1.basetype

ty.size = NUM.value * ty1.size

|

| ty → INT |

ty.type = integer

ty.basetype = integer

ty.size = 4

|

| ty → REAL |

ty.type = real

ty.basetype = real

ty.size = 8

|

The idea is to associate the base address with the array name. That is, the offset stored in the identifier table entry for the array is the address of the first element. When an element is referenced, the indices and the array bounds are used to compute the amount, often called the offset (unfortunately, we have already used that term), by which the address of the referenced element differs from the base address.

To implement this technique, we must first store the base type of each identifier in the identifier table. We use this basetype to determine the size of each element of the array. For example, consider

arr: array [10] of integer;

x : real ;

Our previous SDD for declarations calculates the size and type of

each identifier.

For arr these are 40 and array(10,integer),

respectively.

The enhanced SDD on the right calculates, in addition, the base

type.

For arr this is integer.

For a scalar, such as x, the base type is the same as the

type, which in the case of x is real.

The new material is shaded in blue.

This SDD is combined with the expression SDD here.

Calculating the address of an element of a one dimensional array is easy. The address increment is the width of each element times the index (assuming indices start at 0). So the address of A[i] is the base address of A, which is the offset component of A's entry in the identifier table, plus i times the width of each element of A.

The width of each element of an array is the width of what we have called the base type of the array. For a scalar, there is just one element and its width is the width of the type, which is the same as the base type. Hence, for any ID the element width is sizeof(getBaseType(ID.entry)).

For convenience, we define getBaseWidth by the formula

getBaseWidth(ID.entry) = sizeof(getBaseType(ID.entry)) = sizeof(ID.entry.baseType)

Let us assume row major ordering. That is, the first element stored is A[0,0], then A[0,1], ... A[0,k-1], then A[1,0], ... . Modern languages use row major ordering.

With the alternative column major ordering, after A[0,0] comes A[1,0], A[2,0], ... .

For two dimensional arrays the address of A[i,j] is the sum of three terms

Remarks

End of Remarks

The generalization to higher dimensional arrays is clear.

Consider the following expression containing a simple array reference, where a and c are integers and b is a real array.

a = b[3*c]

We want to generate code something like

T1 = 3 * c // i.e. mult T1,3,c

T2 = T1 * 8 // each b[i] is size 8

a = b[T2] // Uses the x[i] special form

If we considered it too easy to use the that special form we would

generate something like

T1 = 3 * c

T2 = T1 * 8

T3 = &b

T4 = T2 + T3

a = *T4

| Production | Semantic Rules |

|---|---|

| f → ID i |

f.t1 = new Temp() f.addr = new Temp f.code = i.code || gen(f.t1 = i.addr * getBaseWidth(ID.entry)) || gen(f.addr = get(ID.lexeme)[f.t1]) |

| f → ID i |

f.t1 = new Temp() f.t2 = new Temp() f.t3 = new Temp() f.addr = new Temp f.code = i.code || gen(f.t1 = in.addr * getBaseWidth(ID.entry)) || gen(f.t2 = &get(ID.lexeme)) || gen(f.t3 = f.t2 + f.t1) || gen(f.addr = *f.t3) |

| i → [ e ] |

i.addr = e.addr i.code = e.code |

To permit arrays in expressions, we need to specify the semantic actions for the production

factor → IDENTIFIER indices

As a warm-up, lets start with references to one-dimensional arrays. That is, instead of the above production, we consider the simpler

factor → IDENTIFIER index

The table on the right does this in two ways, both with and without using the special addressing form x[j]. In the table the nonterminal index is abbreviated i . I included the version without the x[j] special form for two reasons.

Note that by avoiding the special form b=x[j], I ended up

using two other special forms.

Is it possible to avoid the special forms?

Lisp is taught in our programming languages course, which is a prerequisite for compilers. If you no longer remember Lisp, don't worry.

special formsthat are evaluated differently.

We just (optionally) saw an exception to the basic lisp evaluation

rule.

A similar exception occurs with x[j] in our three-address

code.

It is a special form

in that, unlike the normal rules for

three-address code, we don't use the address

of j but instead its value.

Specifically the value of j is added to

the address of x.

The rules for addresses in 3-address code also include

a = &b

a = *b

*a = b

which are other special forms. They have the same meaning as in the C programming language.

Our current SDD includes the production

f → ID is

with the added assumption that indices is ε.

We now want to permit indices to be a single index as well as

ε.

That is we replace the above production with the pair

f → ID

f → ID i

The semantic rules for each case were given in previous tables. The ensemble to date is given here.

On the board evaluate e.code for the RHS of the simple example above: a=b[3*c].

This is an exciting moment. At long last we really seem to be compiling!

As mentioned above in the general case we must process the production

f → IDENTIFIER is

Following the method used in the 1D case we need to construct is.code. The basic idea is shown here

Now that we can evaluate expressions (including one-dimensional array reverences) we need to handle the left-hand side of an assignment statement (which also can be an array reference). Specifically we need semantic actions for the following productions from the class grammar.

id-statement → ID rest-of-assign

rest-of-assign → = expression ;

rest-of-assign → indices = expression

| Production | Semantic Rules |

|---|---|

| ss → s ss1 | ss.code = s.code || ss1.code |

| ss → ε | ss.code = "" |

| s → ids | s.code = ids.code |

| ids → ID ra |

ra.id = ID.entry

ids.code = ra.code

|

| ra → := e ; | ra.code = e.code || gen(ra.id.lexeme=e.addr) |

| ra → i := e ; | ra.t1 = newTemp() ra.code = i.code || e.code || gen(ra.t1 = i.addr * getBaseWidth(ra.id)|| gen(ra.id.lexeme[ra.t1]=e.addr) |

Once again we begin by restricting ourselves to one-dimensional arrays, which corresponds to replacing indices by index in the last production. The SDD for this restricted case is shown on the right.

The first three productions reduce statements (ss) and statement (s) to identifier-statement (ids), which is used for statements such as assignment and procedure invocation, that begin with an identifier. The corresponding semantic rules simply concatenate all the code produced into the top ss.code.

The identifier-statement production captures the ID and sends it down to the appropriate rest-of-assignment (ra) production where the necessary code is generated and passed back up.

The simple ra → := e; production generates ra.code by simply appending to the evaluation of the RHS, the natural assignment with ra as the LHS.

It is instructive to compare ra.code for the ra → i := e production with f.code for the f → ID i production in the expression SDD. Both compute the same offset (index*elementSize) and both use a special form for the address x[j]=y in this section and y=x[j] for expressions.

Incorporating these productions and semantics gives this SDD. Note that we have added a semantic rule to the procedure-def (pd) production. This rule simply concatenates the code in the s and ss symbols appearing between BEG and END

The idea is the same as when a multidimensional array appears in an expression. Specifically,

Recall the program we could partially handle.

procedure P2 is

y : integer;

a : array [7] of real;

begin

y := 5; // Statements not yet done

a[2] := y; // Type error?

end;

Now we can do the statements.

Homework: What code is generated for the program written above? Please remind me to go over this homework next class.

What should we do about the possible type error?

Let's take the last option.

Type Checking includes several aspects.

For any language, all type checking could be done at run time, i.e. there would be no compile-time checking. However, that does not mean the compiler is absolved from the type checking. Instead, the compiler generates code to do the checks at run time.

It is normally preferable to perform the checks at compile time, if possible. Some languages have very weak typing; for example, variables can change their type during execution. Often these languages need run-time checks. Examples include lisp, snobol, and apl.

A sound type system guarantees that all checks can be performed prior to execution. This does not mean that a given compiler will make all the necessary checks.

An implementation is strongly typed if compiled programs are guaranteed to run without type errors.

There are two forms of type checking.

We will implement type checking for expressions. Type checking statements is similar. The SDDs below (and for lab 4) contain type checking (and coercions) for assignment statements as well as expressions.

A very strict type system would do no automatic conversion. Instead it would offer functions for the programmer to explicitly convert between selected types. With such a system, either the program has compatible types or is in error. Such explicit conversions supplied by the programmer are called casts.

We, however, will consider a more liberal approach in which the

language permits certain implicit conversions that the

compiler is to supply.

This is called type coercion.

We continue to work primarily with the two basic types integer and real, and postulate a unary function denoted (real) that converts an integer into the real having the same value. Nonetheless, we do consider the more general case where there are multiple types some of which have coercions (often called widenings). For example in C/Java, int can be widened to long, which in turn can be widened to float as shown in the figure to the right.

Mathematically the hierarchy on the right is a partially order set (poset) in which each pair of elements has a least upper bound (LUB). For many binary operators (all the arithmetic ones we are considering, but not exponentiation) the two operands are converted to the LUB. So adding a short to a char, requires both to be converted to an int. Adding a byte to a float, requires the byte to be converted to a float (the float remains a float and is not converted).