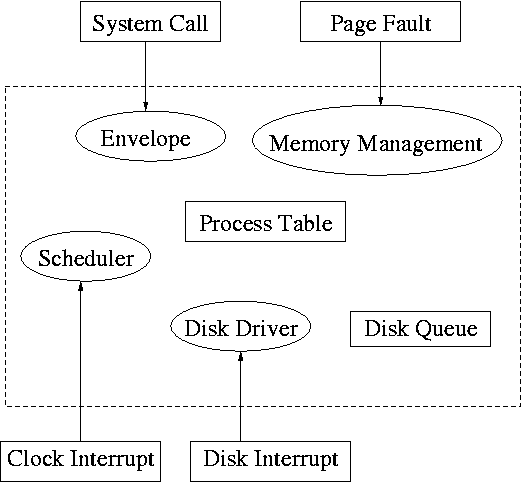

parts corresponding to its two access points. Recall the figure at the upper right, which we saw at the beginning of the course.

Start Lecture #13

The most noticeable characteristic of the current ensemble of I/O devices is their great diversity.

An "output only" device such as a printer supplies very little output to the computer (out of paper) but receives voluminous input from the computer. Again it is better thought of as a transducer, converting electronic data from the computer to paper data for humans.

Device controllers are the devices as far as the OS is concerned. That is, the OS code is written with the controller spec in hand, not with the device spec.

Consider a disk controller processing a read request. The goal is to copy data from the disk to some portion of the central memory. How is this to be accomplished?

The controller contains a microprocessor and memory, and is connected to the disk (by wires). When the controller requests a sector from the disk, the sector is transmitted to the controller via the wires and is stored by the controller in its memory.

Two questions arise.

Typically the interface the OS sees consists of several registers located on the controller.

go button.

So the first question above becomes, how does the OS read and write the device register?

Some architectures have a separate I/O space into which the registers are mapped. In this case special I/O space instructions are used to accomplish the loads and stores.

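Alternatively, the registers can be mapped into the normal memory address space (memory-mapped I/O). This is an elegant solution in that it uses an existing mechanism (ordinary loads and stores) to accomplish another objective.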

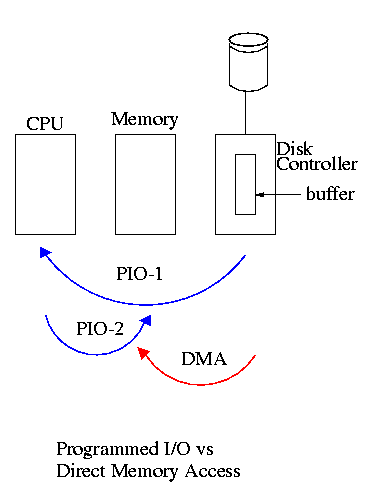

We now address the second question, moving data between the controller and the main memory. Recall that (independent of the issue with respect to DMA) the disk controller, when processing a read request pulls the desired data from the disk to its own buffer (and pushes data from the buffer to the disk when processing a write).

Without DMA, i.e., with programmed I/O (PIO), the CPU then does loads and stores (assuming the controller buffer is memory mapped, or uses I/O instructions if it is not) to copy the data from the buffer to the desired memory locations.

A DMA controller instead writes the main memory itself, without intervention of the CPU.

Clearly DMA saves CPU work. But this might not be important if the CPU is limited by the memory or by system buses.

An important point is that there is less data movement with DMA so the buses are used less and the entire operation takes less time. Compare the two blue arrows vs. the single red arrow.

Since PIO is pure software it is easier to change, which is an advantage.

DMA does need a number of bus transfers from the CPU to the controller to specify the DMA. So DMA is most effective for large transfers where the setup is amortized.
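The amortization argument can be made concrete with a toy cost model. The sketch below counts bus transfers under stated assumptions: PIO moves each word across the bus twice (controller to CPU, then CPU to memory), DMA moves it once, and programming the DMA controller costs a fixed number of register writes. The constant `DMA_SETUP_TRANSFERS` and the function names are illustrative assumptions, not measured values or a real API.

```python
# Toy model: count bus transfers needed to move n_words from the
# controller buffer to main memory. All constants are illustrative.

DMA_SETUP_TRANSFERS = 4  # assumed: address, count, direction, "go"


def pio_transfers(n_words):
    # Each word crosses the bus twice: controller -> CPU, CPU -> memory.
    return 2 * n_words


def dma_transfers(n_words):
    # Fixed setup cost, then each word crosses the bus once.
    return DMA_SETUP_TRANSFERS + n_words


def dma_wins(n_words):
    # True when DMA needs strictly fewer bus transfers than PIO.
    return dma_transfers(n_words) < pio_transfers(n_words)
```

Under these assumptions DMA loses for very small transfers and wins once the transfer exceeds the setup cost, which is the sense in which the setup is amortized.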

Why have the buffer? Why not just go from the disk straight to the memory?

Homework: 12

Skipped.

As with any large software system, good design and layering are important.

We want most of the OS to be unaware of the characteristics of the specific devices attached to the system. (This principle of device independence is not limited to I/O; we also want the OS to be largely unaware of the CPU type itself.)

This objective has been accomplished quite well for files stored on various devices. Most of the OS, including the file system code, and most applications can read or write a file without knowing if the file is stored on a floppy disk, a hard disk, a tape, or (for reading) a CD-ROM.

This principle also applies to user programs reading or writing streams. A program reading from "standard input", which is normally the user's keyboard, can be told to instead read from a disk file with no change to the application program. Similarly, "standard output" can be redirected to a disk file. However, the low-level OS code dealing with disks is rather different from that dealing with keyboards and (character-oriented) terminals.

One can say that device independence permits programs to be implemented as if they will read and write generic or abstract devices, with the actual devices specified at run time. Although writing to a disk has differences from writing to a terminal, Unix cp, DOS copy, and many programs we compose need not be aware of these differences.
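As a minimal sketch of this idea, the function below copies from any readable stream to any writable stream without knowing what kind of device is behind either one. The name `copy_stream` and the in-memory streams are assumptions made for illustration; the point is only that the copying code never mentions a device type.

```python
import io


def copy_stream(src, dst, chunk_size=4096):
    """Copy everything from src to dst; return the number of characters copied."""
    total = 0
    while True:
        chunk = src.read(chunk_size)
        if not chunk:          # an empty result means end of input
            break
        dst.write(chunk)
        total += len(chunk)
    return total


# The same function works for an in-memory "device" ...
source = io.StringIO("hello, device independence\n")
sink = io.StringIO()
copied = copy_stream(source, sink)
# ... and would work unchanged as copy_stream(sys.stdin, sys.stdout).
```

The actual source and destination are chosen by the caller at run time, which is what lets the shell redirect standard input or output to a disk file.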

However, there are devices that really are special. The graphics interface to a monitor (that is, the graphics interface presented by the video controller, often called a "video card") does not resemble the "stream of bytes" we see for disk files.

Homework: What is device independence?

We have already discussed the value of the name space implemented by file systems. There is no dependence between the name of the file and the device on which it is stored. So a file called IAmStoredOnAHardDisk might well be stored on a floppy disk.

More interestingly, once a device is mounted on a (Unix) directory, the device is named exactly the same as the directory was. So if a CD-ROM was mounted on the (existing) directory /x/y, a file named joe on the CD-ROM would now be accessible as /x/y/joe.

There are several aspects to error handling including: detection, correction (if possible) and reporting.

Detection should be done as close to where the error occurred as possible before more damage is done (fault containment). Moreover, the error may be obvious at the low level, but harder to discover and classify if the erroneous data is passed to higher level software.

Correction is sometimes easy, for example ECC memory does this automatically (but the OS wants to know about the error so that it can schedule replacement of the faulty chips before unrecoverable double errors occur).

Other easy cases include successful retries for failed Ethernet transmissions. In this example, while logging is appropriate, it is quite reasonable for no action to be taken.

Error reporting tends to be awful. The trouble is that the error occurs at a low level but by the time it is reported the context is lost. Unix/Linux in particular is horrible in this area.

I/O must be asynchronous for good performance. That is, the OS cannot simply wait for an I/O to complete. Instead, it proceeds with other activities and responds to the interrupt that is generated when the I/O has finished.

Users (mostly) want no part of this. The code sequence

Read X

Y <- X+1

Print Y

should print a value one greater than that read.

But if the assignment is performed before the read completes, the wrong value can easily be printed.

Performance junkies sometimes do want the asynchrony so that they can have another portion of their program executed while the I/O is underway. That is, they implement a mini-scheduler in their application code.
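The following sketch illustrates both views using a thread pool as a stand-in for asynchronous I/O. The function `slow_read` is a hypothetical placeholder for a device read, and the value 41 is arbitrary. The program starts the read, overlaps some unrelated work with it, and only then waits for the result before computing Y, so the Read X, Y <- X+1 ordering is preserved.

```python
from concurrent.futures import ThreadPoolExecutor
import time


def slow_read():
    """Stand-in for a device read that takes a while to complete."""
    time.sleep(0.05)
    return 41


def read_then_increment():
    with ThreadPoolExecutor(max_workers=1) as pool:
        pending = pool.submit(slow_read)   # Read X (started, not yet finished)
        other_work = sum(range(1000))      # overlap useful work with the I/O
        x = pending.result()               # block until the read completes
        y = x + 1                          # Y <- X+1 is now safe
    return y, other_work
```

Using `x` before calling `pending.result()` is exactly the error described above: the assignment would run before the read has delivered its value.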

See this message from Linux kernel developer Ingo Molnar for his take on asynchronous I/O and kernel/user threads/processes. You can find the entire discussion here.

Buffering is often needed to hold data for examination prior to sending it to its desired destination.

Since this involves copying the data, which can be expensive, modern systems try to avoid as much buffering as possible. This is especially noticeable in network transmissions, where the data could conceivably be copied many times.

I am not sure if any systems actually do all seven.

For devices like printers and CD-ROM drives, only one user at a time is permitted. These are called serially reusable devices, which we studied in the deadlocks chapter. Devices such as disks and Ethernet ports can, on the contrary, be shared by concurrent processes without any deadlock risk.

As mentioned just above, with programmed I/O the main processor (i.e., the one on which the OS runs) moves the data between memory and the device. This is the most straightforward method for performing I/O.

One question that arises is how the processor knows when the device is ready to accept or supply new data.

In the simplest implementation, the processor, when it seeks to use a device, loops continually querying the device status, until the device reports that it is free. This is called polling or busy waiting.

If we poll infrequently (and do useful work in between), there can be a significant delay from when the previous I/O is complete to when the OS detects the device availability.

loop

if device-available exit loop

do-useful-work

If we poll frequently (and thus are able to do little useful work in between) and the device is (sometimes) slow, polling is clearly wasteful.

The extreme case is where the process does nothing between polls. For a slow device this can take the CPU out of service for a significant period. This bad situation leads us to ... .
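The trade-off between polling frequency and detection delay can be seen in a small simulation. This is a sketch under simplifying assumptions: time is measured in abstract units, each status query costs `poll_cost` units, and the device becomes ready at a known `ready_time`. The function name and parameters are hypothetical.

```python
def poll_until_ready(ready_time, work_between_polls, poll_cost=1):
    """Simulate polling a device that becomes ready at ready_time.

    Returns (polls, useful_work, detection_delay) in abstract time units.
    """
    now = 0
    polls = 0
    useful_work = 0
    while True:
        now += poll_cost              # query the device status
        polls += 1
        if now >= ready_time:         # device-available: exit loop
            return polls, useful_work, now - ready_time
        now += work_between_polls     # do-useful-work
        useful_work += work_between_polls
```

With `work_between_polls=0` the loop is pure busy waiting: many polls, no useful work, and no detection delay. With a large value the CPU gets work done, but the device may sit ready for some time before the next poll notices.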

As we have just seen, a difficulty with polling is determining the frequency with which to poll. Another problem is that the OS must continually return to the polling loop, i.e., we must arrange that do-useful-work takes the desired amount of time. Really we want the device to tell the CPU when it is available, which is exactly what an interrupt does.

The device interrupts the processor when it is ready and an interrupt handler (a.k.a. an interrupt service routine) then initiates transfer of the next datum.

Normally interrupt schemes perform better than polling, but not always since interrupts are expensive on modern machines. To minimize interrupts, better controllers often employ ...

We discussed DMA above.

An additional advantage of DMA, not mentioned above, is that the processor is interrupted only at the end of a command, not after each datum is transferred. Many devices receive a character at a time, but with a DMA controller, an interrupt occurs only after a buffer has been transferred.
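A back-of-the-envelope count shows the size of this saving. The sketch assumes a character-at-a-time device that interrupts once per byte without DMA and once per filled buffer with DMA; the function names and the buffer size are illustrative.

```python
import math


def interrupts_per_datum(n_bytes):
    # Interrupt-driven I/O without DMA: one interrupt for each byte.
    return n_bytes


def interrupts_with_dma(n_bytes, buffer_size):
    # With DMA: one interrupt each time a whole buffer has been transferred
    # (the final, possibly partial, buffer also causes one interrupt).
    return math.ceil(n_bytes / buffer_size)
```

For a 4096-byte transfer with a 512-byte buffer, this model gives 4096 interrupts without DMA and 8 with it.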

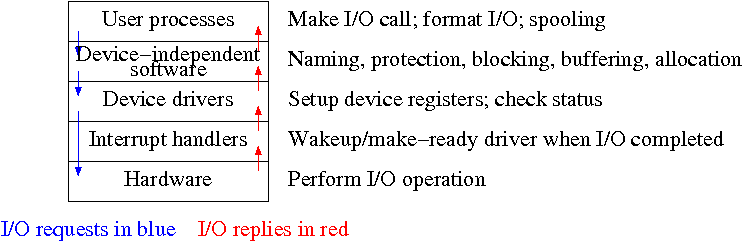

Layers of abstraction as usual prove to be effective. Most systems are believed to use the following layers (but for many systems, the OS code is not available for inspection).

We will give a bottom up explanation.

We discussed the behavior of an interrupt handler before when studying page faults. Then it was called "assembly language code". A difference is that page faults are caused by specific user instructions, whereas interrupts just "occur". However, the low-level processing is essentially the same.

In the present case, we have a process blocked on I/O and the I/O event has just completed. So the goal is to make the process ready and then call the scheduler. Possible methods are.

Once the process is ready, it is up to the scheduler to decide when it should run.

Device drivers form the portion of the OS that is tailored to the characteristics of individual controllers. They form the dominant portion of the source code of the OS since there are hundreds of drivers. Normally some mechanism is used so that the only drivers loaded on a given system are those corresponding to hardware actually present.

Indeed, modern systems often have loadable device drivers, which are loaded dynamically when needed. This way, if a user buys a new device, no changes to the operating system are needed. When the device is installed it will be detected during the boot process and the corresponding driver is loaded.

Sometimes an even fancier method is used and the device can be plugged in while the system is running (USB devices are like this). In this case it is the device insertion that is detected by the OS and that causes the driver to be loaded.

Some systems can dynamically unload a driver when the corresponding device is unplugged.

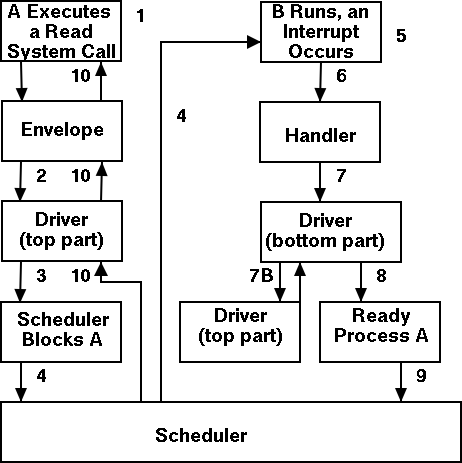

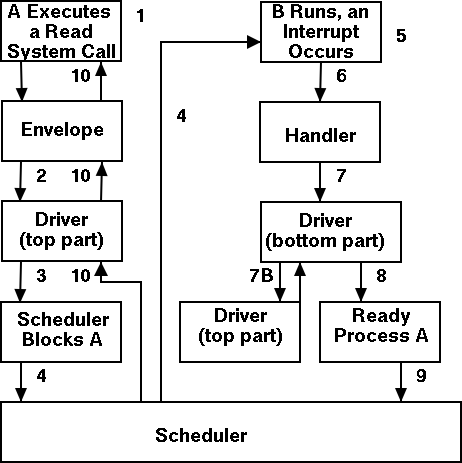

The driver has two parts corresponding to its two access points. Recall the figure at the upper right, which we saw at the beginning of the course.

top part.

bottom part.

In some systems the drivers are implemented as user-mode processes. Indeed, Tanenbaum's MINIX system works that way, and in previous editions of the text, he describes such a scheme. However, most systems have the drivers in the kernel itself and the 3e describes this scheme. I previously included both descriptions, but have eliminated the user-mode process description (actually I greyed it out).

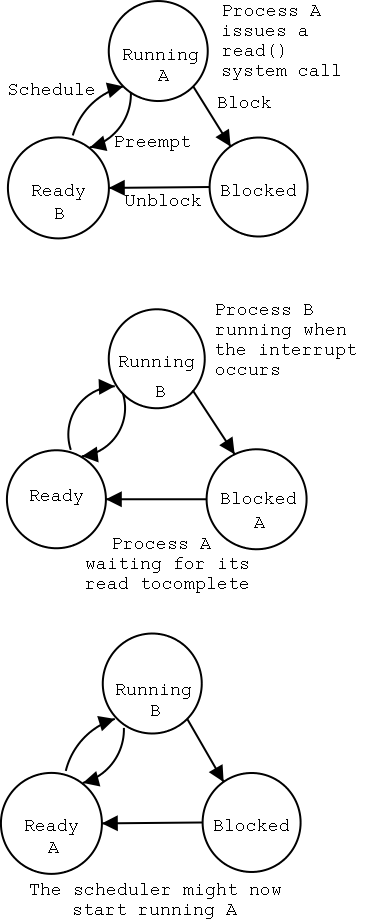

The numbers in the diagram to the right correspond to the numbered steps in the description that follows. The bottom diagram shows the state of processes A and B at steps 1, 6, and 9 in the execution sequence described.

What follows is the Unix-like view in which the driver is invoked by the OS acting on behalf of a user process (alternatively stated, the process shifts into kernel mode). Thus one says that the scheme follows a self-service paradigm in that the process itself (now in kernel mode) executes the driver.

Actions that occur when the user issues an I/O request.

Actions that occur when an interrupt arrives (i.e., when an I/O has been completed).

The device-independent code contains most of the I/O functionality, but not most of the code since there are very many drivers, all doing essentially the same thing in slightly different ways due to slightly different controllers.

As stated above the bulk of the OS code is made of device drivers and thus it is important that the task of driver writing not be made more difficult than needed. As a result each class of devices (e.g. the class of all disks) has a defined driver interface to which all drivers for that class of device conform. The device independent I/O portion processes user requests and calls the drivers.
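The idea of a per-class driver interface can be sketched as follows. Everything here is hypothetical: `BlockDriver` stands for the interface a disk-class driver would implement, `RamDiskDriver` is a toy driver backed by a dictionary rather than hardware, and `device_independent_read` plays the role of the device-independent layer that turns a user's byte range into block requests.

```python
from abc import ABC, abstractmethod


class BlockDriver(ABC):
    """Hypothetical interface that every disk-class driver must implement."""

    @abstractmethod
    def read_block(self, block_no): ...

    @abstractmethod
    def write_block(self, block_no, data): ...


class RamDiskDriver(BlockDriver):
    """Toy driver backed by a dictionary instead of real hardware."""

    def __init__(self, block_size=512):
        self.block_size = block_size
        self.blocks = {}

    def read_block(self, block_no):
        # Blocks never written read back as zeros.
        return self.blocks.get(block_no, bytes(self.block_size))

    def write_block(self, block_no, data):
        self.blocks[block_no] = bytes(data)


def device_independent_read(driver, offset, length):
    """Device-independent layer: turn a byte range into block requests."""
    size = driver.block_size
    first, last = offset // size, (offset + length - 1) // size
    data = b"".join(driver.read_block(b) for b in range(first, last + 1))
    start = offset - first * size
    return data[start:start + length]
```

Because the upper layer calls only `read_block` and `write_block`, a driver for a different controller can be substituted without touching `device_independent_read`.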

Naming is again an important OS functionality. In addition it offers a consistent interface to the drivers. The Unix method works as follows.

Each device is associated with a special file in the /dev directory.

The i-nodes for these files contain an indication that these are special files and also contain so-called major and minor device numbers.
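On a Unix-like system the major and minor device numbers of a special file can be inspected from user space. The sketch below uses Python's `os.stat`, `os.major`, and `os.minor`, which are available on Unix; the helper name `describe_device` is an assumption made for illustration.

```python
import os
import stat


def describe_device(path):
    """Return (kind, major, minor) for a special file, or None for other files."""
    info = os.stat(path)
    if stat.S_ISCHR(info.st_mode):
        kind = "character"
    elif stat.S_ISBLK(info.st_mode):
        kind = "block"
    else:
        return None
    return kind, os.major(info.st_rdev), os.minor(info.st_rdev)
```

For example, `describe_device("/dev/null")` reports a character device on typical Unix systems, while an ordinary file or directory yields `None`. The actual numbers vary between systems.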

Protection. A wide range of possibilities is found in real systems, including the two extremes: everything is permitted and nothing is (directly) permitted.

Buffering is necessary since requests come in a size specified by the user and data is delivered in a size specified by the device. It is also important so that a user process using getchar() is not blocked and unblocked for each character read.
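A minimal sketch of this kind of buffering: the reader below hands out one character at a time but asks the underlying source for data only when its buffer is empty. The class name, the `read_block` callable, and the `block_reads` counter are hypothetical; they stand in for a library routine sitting on top of a block-oriented read.

```python
class BufferedReader:
    """Sketch of getchar()-style buffering over a block-oriented source."""

    def __init__(self, read_block):
        self.read_block = read_block   # callable returning the next block (b"" at end)
        self.buffer = b""
        self.pos = 0
        self.block_reads = 0           # how many times we asked the "device"

    def getchar(self):
        if self.pos >= len(self.buffer):
            # Buffer exhausted: fetch a whole new block in one request.
            self.buffer = self.read_block()
            self.pos = 0
            self.block_reads += 1
            if not self.buffer:
                return None            # end of input
        ch = self.buffer[self.pos:self.pos + 1]
        self.pos += 1
        return ch
```

Reading six characters that arrive as blocks of four and two costs three requests to the source (including the one that discovers end of input), not one request per character.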

The text describes double buffering and circular buffers, which are important programming techniques, but are not specific to operating systems.
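As an illustration of the second technique, here is a small circular buffer. It is a single-threaded sketch with hypothetical names; a real kernel version would also need synchronization between the producer (for example, an interrupt handler) and the consumer.

```python
class CircularBuffer:
    """Fixed-capacity FIFO; producer calls put(), consumer calls get()."""

    def __init__(self, capacity):
        self.slots = [None] * capacity
        self.head = 0      # index of the oldest item
        self.count = 0     # number of items currently stored

    def put(self, item):
        if self.count == len(self.slots):
            return False   # full: the producer must wait or drop the item
        tail = (self.head + self.count) % len(self.slots)
        self.slots[tail] = item
        self.count += 1
        return True

    def get(self):
        if self.count == 0:
            return None    # empty: the consumer must wait
        item = self.slots[self.head]
        self.head = (self.head + 1) % len(self.slots)
        self.count -= 1
        return item
```

The indices wrap around with the modulo operation, so the same fixed storage is reused indefinitely without copying items.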

Skipped.

The system must enforce exclusive access for non-shared devices like CD-ROMs.

A good deal of I/O code is actually executed by unprivileged code running in user space. This code includes library routines linked into user programs, standard utilities, and daemon processes.

If one uses the strict definition that the operating system consists of the (supervisor-mode) kernel, then this I/O code is not part of the OS. However, very few use this strict definition.

Some library routines are trivial and just move their arguments into the correct place (e.g., a specific register) and then issue a trap to the correct system call to do the real work.

I think everyone considers these routines to be part of the operating system. Indeed, they constitute the code that implements the user interface to the OS. For example, when we specify the (Unix) read system call by

count = read (fd, buffer, nbytes)

as we did in chapter 1, we are really giving the parameters and return values of such a library routine.

Although users could write these routines, it would make their programs non-portable and would require them to write in assembly language since neither trap nor specifying individual registers is available in high-level languages.
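High-level languages expose the same thin wrappers. As a sketch, Python's `os.read(fd, n)` corresponds to the read call: it takes a file descriptor and a byte count, and returns the bytes read, whose length plays the role of `count`. A pipe is used here only so the example needs no real device; the variable names are illustrative.

```python
import os

# os.pipe() gives a pair of file descriptors we can use without a real device.
read_fd, write_fd = os.pipe()
os.write(write_fd, b"hello")
os.close(write_fd)

nbytes = 16
buffer = os.read(read_fd, nbytes)   # thin wrapper around the read system call
count = len(buffer)                 # C's read returns this count directly
at_eof = os.read(read_fd, nbytes)   # returns b"" once the writer has closed
os.close(read_fd)
```

Note that fewer bytes than requested are returned when fewer are available, which matches the behavior of the underlying system call.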

Other library routines, notably standard I/O (stdio) in Unix, are definitely not trivial. For example consider the formatting of floating point numbers done in printf and the reverse operation done in scanf.

Printing to a local printer is often performed in part by a regular program (lpr in Unix) that copies (or links) the file to a standard place, and in part by a daemon (lpd in Unix) that reads the copied files and sends them to the printer. The daemon might be started when the system boots.

Note that this implementation of printing uses spooling, i.e., the file to be printed is copied somewhere by lpr and then the daemon works with this copy. Mail uses a similar technique (but generally it is called queuing, not spooling).
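The division of labor between the submitting program and the daemon can be sketched as follows. This is not how lpr or lpd are actually implemented; `spool_file` and `daemon_pass` are hypothetical names, the spool directory is supplied by the caller, and the "printer" is any callable that accepts bytes.

```python
import os
import shutil


def spool_file(spool_dir, path):
    """Copy a file into the spool directory and return the spooled name."""
    job_no = len(os.listdir(spool_dir)) + 1
    spooled = os.path.join(spool_dir, f"job{job_no:04d}")
    shutil.copyfile(path, spooled)
    return spooled


def daemon_pass(spool_dir, printer):
    """Send every spooled job to the printer in order, removing each afterwards."""
    printed = 0
    for name in sorted(os.listdir(spool_dir)):
        job = os.path.join(spool_dir, name)
        with open(job, "rb") as f:
            printer(f.read())
        os.remove(job)
        printed += 1
    return printed
```

Because the daemon works from the copy, the user may modify or delete the original file as soon as the submitting program returns.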

The diagram on the right shows the various layers and some of the actions that are performed by each layer.

The arrows show the flow of control. The blue downward arrows show the execution path made by a request from user space eventually reaching the device itself. The red upward arrows show the response, beginning with the device supplying the result for an input request (or a completion acknowledgement for an output request) and ending with the initiating user process receiving its response.

Homework: 11, 13.