Start Lecture #8

Remark: midterm grades are based on lab1.

When a page fault occurs, choose as victim that page that has been unused for the longest time, i.e. the one that has been least recently used.

LRU is definitely

Homework: 28, 22.

A cleaver hardware method to determine the LRU page.

Keep a count of how frequently each page is used and evict the one that has been the lowest score. Specifically:

| R | counter |

|---|---|

| 1 | 10000000 |

| 0 | 01000000 |

| 1 | 10100000 |

| 1 | 11010000 |

| 0 | 01101000 |

| 0 | 00110100 |

| 1 | 10011010 |

| 1 | 11001101 |

| 0 | 01100110 |

NFU doesn't distinguish between old references and recent ones. The following modification does distinguish.

Aging does indeed give more weight to later references, but an n bit counter maintains data for only n time intervals; whereas NFU maintains data for at least 2n intervals.

Homework: 24, 33.

The goals are first to specify which pages a given process needs to have memory resident in order for the process to run without too many page faults and second to ensure that these pages are indeed resident.

But this is impossible since it requires predicting the future. So we again make the assumption that the near future is well approximated by the immediate past.

We measure time in units of memory references, so t=1045 means the time when the 1045th memory reference is issued. In fact we measure time separately for each process, so t=1045 really means the time when this process made its 1045th memory reference.

Definition: w(k,t), the working set at time t (with window k) is the set of pages referenced by the last k memory references ending at reference t.

The idea of the working set policy is to ensure that each process keeps its working set in memory.

Homework: Describe a process (i.e., a program) that runs for a long time (say hours) and always has a working set size less than 10. Assume k=100,000 and the page size is 4KB. The program need not be practical or useful.

Homework: Describe a process that runs for a long time and (except for the very beginning of execution) always has a working set size greater than 1000. Assume k=100,000 and the page size is 4KB. The program need not be practical or useful.

The definition of Working Set is local to a process. That is, each process has a working set; there is no system wide working set other than the union of all the working sets of each process.

However, the working set of a single process has effects on the demand paging behavior and victim selection of other processes. If a process's working set is growing in size, i.e., w(t,k) is increasing as t increases, then we need to obtain new frames from other processes. A process with a working set decreasing in size is a source of free frames. We will see below that this is an interesting amalgam of local and global replacement policies.

Interesting questions concerning the working set include:

... Various approximations to the working set, have been devised. We will study two: using virtual time instead of memory references (immediately below) and Page Fault Frequency (part of section 3.5.1). In 3.4.9 we will see the popular WSClock algorithm that includes an approximation of the working set as well as several other ideas.

Instead of counting memory referenced and declaring a page in the working set if it was used within k references, we keep track of time, which the system does anyway, and declare a page in the working set if it was used in the past τ seconds. Note that the time is measured only while this process is running, i.e., we are using virtual time.

time of last useto the PTE.

Remark: Lab 4 (the last lab) is assigned).

The WSClock algorithm combines aspects of the working set algorithm and the clock implementation of second chance.

Like clock we create a circular list of nodes with a hand

pointing to the next node to examine.

There is one such node for every resident page of this process; thus

the nodes can be thought of as a list of frames or a kind of

inverted page table.

Like working set we store in each node the referenced and modified bits R and M and the time of last use. R and M are initialized to zero when the page is read in. R is set by the hardware on a reference and cleared periodically by the OS (perhaps at the end of each page fault or perhaps every k clock ticks). M is set by the hardware on a write. We indicate below the setting of the time of last use and the clearing of M.

We use virtual time and declare a page old if its last reference is more than τ seconds in the past. Other pages are declared young (i.e., in the working set).

As with clock, on every page fault a victim is found by scanning the list starting with the node indicated by the clock hand.

It is possible to go all around the clock without finding a victim. In that case

An alternative treatment of WSClock, including more details of is interaction with the I/O subsystem, can be found here.

| Algorithm | Comment |

|---|---|

| Random | Poor, used for comparison |

| Optimal | Unimplementable, used for comparison |

| LIFO | Horrible, useless |

| NRU | Crude |

| FIFO | Not good ignores frequency of use |

| Second Chance | Improvement over FIFO |

| Clock | Better implementation of Second Chance |

| LRU | Great but impractical |

| NFU | Crude LRU approximation |

| Aging | Better LRU approximation |

| Working Set | Good, but expensive |

| WSClock | Good approximation to working set |

Consider a system that has no pages loaded and that uses the FIFO

PRU.

Consider the following reference string

(sequences of

pages referenced).

0 1 2 3 0 1 4 0 1 2 3 4

If we have 3 frames this generates 9 page faults (do it).

If we have 4 frames this generates 10 page faults (do it).

Theory has been developed and certain PRA (so called

stack algorithms

) cannot suffer this anomaly for any

reference string.

FIFO is clearly not a stack algorithm.

LRU is.

Repeat the above calculations for LRU.

A local PRA is one is which a victim page is chosen among the pages of the same process that requires a new frame. That is the number of frames for each process is fixed. So LRU for a local policy means the page least recently used by this process. A global policy is one in which the choice of victim is made among all pages of all processes.

In general a global policy seems to work better. For example, consider LRU. With a local policy, the local LRU page might have been more recently used than many resident pages of other processes. A global policy needs to be coupled with a good method to decide how many frames to give to each process. By the working set principle, each process should be given |w(k,t)| frames at time t, but this value is hard to calculate exactly.

If a process is given too few frames (i.e., well below |w(k,t)|), its faulting rate will rise dramatically. If this occurs for many or all the processes, the resulting situation in which the system is doing very little useful work due to the high I/O requirements for all the page faults is called thrashing.

An approximation to the working set policy that is useful for determining how many frames a process needs (but not which pages) is the Page Fault Frequency (PFF) algorithm.

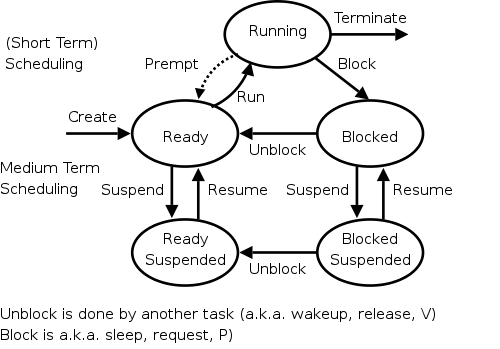

To reduce the overall memory pressure, we must reduce the multiprogramming level (or install more memory while the system is running, which is not possible with current technology). That is, we have a connection between memory management and process management. These are the suspend/resume arcs we saw way back when and repeated in the diagram on the right.

When the PFF (or another indicator) is too high, we choose a process and suspend it, thereby swapping it to disk and releasing all its frames. When the frequency gets low, we can resume one or more suspended processes. We also need a policy to decide when a suspended process should be resumed even at the cost of suspending another.

This is called medium-term scheduling. Since suspending or resuming a process can take seconds, we clearly do not perform this scheduling decision every few milliseconds as we do for short-term scheduling. A time scale of minutes would be more appropriate.

Page size must

be a multiple of the disk block size.

Why?

Answer: When copying out a page if you have a partial disk block, you

must do a read/modify/write (i.e., 2 I/Os).

Characteristics of a large page size.

regionsthan the number of (large) frames that the process has been allocated.

A small page size has the opposite characteristics.

Homework: Consider a 32-bit address machine using paging with 8KB pages and 4 byte PTEs. How many bits are used for the offset and what is the size of the largest page table? Repeat the question for 128KB pages.

This was used when machine have very small virtual address spaces. Specifically the PDP-11, with 16-bit addresses, could address only 216 bytes or 64KB, a severe limitation. With separate I and D spaces there could be 64KB of instructions and 64KB of data.

Separate I and D are no longer needed with modern architectures having large address spaces.

Permit several processes to each have a page loaded in the same frame. Of course this can only be done if the processes are using the same program and/or data.

copy on writetechniques.

Homework: Can a page shared between two processes be read-only for one process and read-write for the other?

In addition to sharing individual pages, process can share entire library routines. The technique used is called dynamic linking and the objects produced are calledshared libraries or dynamically-linked libraries (DLLs). (The traditional linking you did in lab1 is today often called static linking).

changeeven when they haven't changed.

copiesof the module). Instead position-independent code must be used. For example, jumps within the module would use PC-relative addresses.

The idea of memory-mapped files is to use the mechanisms in place for demand paging (and segmentation, if present) to implement I/O.

A system call is used to map a file into a portion of the address

space.

(No page can be part of a file and part of regular

memory;

the mapped file would be a complete segment if segmentation is

present).

The implementation of demand paging we have presented assumes that the entire process is stored on disk. This portion of secondary storage is called the backing store for the pages. Sometimes it is called a paging disk. For memory-mapped files, the file itself is the backing store.

Once the file is mapped into memory, reads and writes become loads and stores.