Operating Systems

Start Lecture #6

Example 1

Consider the example shown in the table below.

A safe state with 22 units of one resource

| process | initial claim | current alloc | max add'l |
|---|---|---|---|
| X | 3 | 1 | 2 |
| Y | 11 | 5 | 6 |
| Z | 19 | 10 | 9 |
| Total | | 16 | |
| Available | | 6 | |

- One resource type R with 22 units.

- Three processes X, Y, and Z with initial claims 3, 11, and 19

respectively.

- Currently the processes have 1, 5, and 10 units respectively.

- Hence the manager currently has 6 units left.

- Also note that the max additional needs for the processes are 2,

6, 9 respectively.

- So the manager cannot assure (with its current

remaining supply of 6 units) that Z can terminate.

But that is not the question.

- This state is safe.

- Use 2 units to satisfy X; now the manager has 7 units.

- Use 6 units to satisfy Y; now the manager has 12 units.

- Use 9 units to satisfy Z; done!

Example 2

This example is a continuation of example 1 in which Z requested 2

units and the manager (foolishly?) granted the request.

An unsafe state with 22 units of one resource

| process | initial claim | current alloc | max add'l |
|---|---|---|---|
| X | 3 | 1 | 2 |
| Y | 11 | 5 | 6 |
| Z | 19 | 12 | 7 |
| Total | | 18 | |
| Available | | 4 | |

- Currently the processes have 1, 5, and 12 units respectively.

- The manager has 4 units.

- The max additional needs are 2, 6, and 7.

- This state is unsafe.

- Use 2 units to satisfy X; now the manager has 5 units.

- Y needs 6 and Z needs 7, so we can't guarantee satisfying either.

- Note that we were able to find a process that can terminate

(X) but then we were stuck.

So it is not enough to find one process.

We must find a sequence of all the processes.
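The search for a terminating sequence in Examples 1 and 2 can be sketched in a few lines of Python. This is only an illustration: the function name `find_safe_sequence` and the dictionary layout are assumptions made here, not part of the notes. It repeatedly picks any process whose maximum additional need fits in the free units, lets it finish, and reclaims everything it holds.

```python
def find_safe_sequence(available, procs):
    """Return an order in which every process can finish, or None if unsafe.

    procs maps a process name to (initial_claim, current_alloc).
    """
    remaining = dict(procs)
    order = []
    while remaining:
        # Processes whose worst-case additional need fits in the free units.
        runnable = [name for name, (claim, alloc) in remaining.items()
                    if claim - alloc <= available]
        if not runnable:
            return None              # stuck: nobody is guaranteed to finish
        name = runnable[0]
        claim, alloc = remaining.pop(name)
        available += alloc           # it terminates and returns all it holds
        order.append(name)
    return order


example1 = {"X": (3, 1), "Y": (11, 5), "Z": (19, 10)}
example2 = {"X": (3, 1), "Y": (11, 5), "Z": (19, 12)}
print(find_safe_sequence(6, example1))   # ['X', 'Y', 'Z']
print(find_safe_sequence(4, example2))   # None
```

Picking the first runnable process is enough because finishing a process only increases the free units, so a greedy choice can never rule out a sequence that would otherwise exist.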

Remark: An unsafe state is not necessarily

a deadlocked state.

Indeed, for many unsafe states, if the manager gets lucky all

processes may terminate successfully.

Processes that are not currently blocked can terminate (instead of

requesting more resources up to their initial claim, which is the

worst case and is the case the manager prepares for).

A safe state means that the manager can

guarantee that no deadlock will occur (even in the

worst case in which processes request as much as permitted by their

initial claims.)

6.5.3 The Banker's Algorithm (Dijkstra) for a Single Resource

The algorithm is simple: Stay in safe states.

Initially, we assume all the processes are present before execution

begins and that all initial claims are given before execution

begins.

We will relax these assumptions very soon.

In a little more detail the banker's algorithm is as follows.

- Before execution begins, check that the system is safe. That is, check that no process claims more than the manager has. If one does, the offending process is trying to claim more of some resource than exists in the system and hence cannot be guaranteed to complete even if run by itself.

You might say that it can become deadlocked all by

itself.

- When the manager receives a request, it pretends to grant

it and checks if the resulting state is safe.

If it is safe the request is granted, if not the process is

blocked.

- When a resource is returned, the manager (politely thanks the process and then) checks to see if the first pending request can be granted (i.e., if the result would now be safe). If so the request is granted. Whether or not the request was granted, the manager checks to see if the next pending request can be granted, etc.
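The three steps above can be sketched as a small class. This is a minimal sketch under stated assumptions: the class name `Banker`, its methods, and the use of a simple list for pending requests are all hypothetical choices made for illustration, and all processes and claims are assumed known up front (as the notes assume initially).

```python
class Banker:
    """Single-resource banker: grant a request only if the result is safe."""

    def __init__(self, total, claims):
        for name, claim in claims.items():
            if claim > total:        # could never finish, even if run alone
                raise ValueError(f"{name} claims more than exists")
        self.total = total
        self.claims = dict(claims)
        self.alloc = {name: 0 for name in claims}
        self.pending = []            # blocked requests, oldest first

    def _safe(self, alloc):
        free = self.total - sum(alloc.values())
        left = dict(alloc)
        while left:
            ok = [p for p in left if self.claims[p] - left[p] <= free]
            if not ok:
                return False
            free += left.pop(ok[0])  # that process finishes, returns its units
        return True

    def _try_grant(self, name, units):
        trial = dict(self.alloc)     # pretend to grant the request
        trial[name] += units
        if trial[name] > self.claims[name]:
            raise ValueError(f"{name} exceeds its initial claim")
        if not self._safe(trial):
            return False
        self.alloc = trial
        return True

    def request(self, name, units):
        if self._try_grant(name, units):
            return True
        self.pending.append((name, units))   # the process is blocked
        return False

    def release(self, name, units):
        self.alloc[name] -= units
        # Re-examine every pending request in order, granted or not.
        self.pending = [req for req in self.pending
                        if not self._try_grant(*req)]


b = Banker(22, {"X": 3, "Y": 11, "Z": 19})
print(b.request("X", 1), b.request("Y", 5), b.request("Z", 10))  # True True True
print(b.request("Z", 2))     # False: this would be the unsafe state of Example 2
b.release("Y", 5)
print(b.alloc["Z"], b.pending)   # 12 []
```

In the usage lines, Z's request for 2 more units is held back until Y returns 5 units, at which point granting it leaves a safe state.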

Homework: 16.

6.5.4 The Banker's Algorithm for Multiple Resources

At a high level the algorithm is identical: Stay in safe states.

But what is a safe state in this new setting?

The same definition (if processes are run in a certain order they

will all terminate).

Checking for safety is the same idea as above.

The difference is that to tell if there are enough free resources for a process to terminate, the manager must check that, for all resources, the number of free units is at least equal to the max additional need of the process.
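A hedged sketch of the multiple-resource safety check follows. The function name `is_safe` and the two-resource numbers in the usage lines are invented for illustration; the only change from the single-resource case is that the comparison is made for every resource type.

```python
def is_safe(available, claims, allocs):
    """claims and allocs map a process name to a tuple, one entry per resource."""
    free = list(available)
    unfinished = set(claims)
    progress = True
    while unfinished and progress:
        progress = False
        for p in sorted(unfinished):
            need = [c - a for c, a in zip(claims[p], allocs[p])]
            # The process can finish only if EVERY resource has enough free units.
            if all(n <= f for n, f in zip(need, free)):
                free = [f + a for f, a in zip(free, allocs[p])]
                unfinished.remove(p)
                progress = True
                break
    return not unfinished


# Hypothetical system with two resource types.
claims = {"A": (3, 2), "B": (4, 2)}
allocs = {"A": (2, 1), "B": (1, 0)}
print(is_safe((1, 1), claims, allocs))   # True: A can finish, then B
print(is_safe((1, 0), claims, allocs))   # False: neither need fits
```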

Limitations of the Banker's Algorithm

- Often users don't know the maximum requests a process will make.

They can estimate conservatively (i.e., use big numbers for the claim)

but then the manager becomes very conservative.

- New processes arriving cause a problem (but not so bad as

Tanenbaum suggests).

- The process's claim must not exceed the total number of units of the resource in the system.

If not, the process is not accepted by the manager.

- Since the state without the new process is safe, so is the

state with the new process!

Just use the order you had originally and put the new process

at the end.

- Ensuring fairness (starvation freedom) needs a little more work, but isn't too hard either (once an hour stop taking new processes until all current processes finish).

- A resource can become unavailable (e.g., a CD-ROM drive might

break).

This can result in an unsafe state.

Homework: 22, 25, and 30.

There is an interesting typo in 22: A has claimed 3 units of resource 5, but there are only 2 units in the entire system.

Change the problem by having B both claim and be allocated 1 unit of

resource 5.

Remark: Lab 3 (banker) assigned.

It is due in 2 weeks.

6.7 Other Issues

6.7.1 Two-phase locking

This is covered (MUCH better) in a database text.

We will skip it.

6.7.2 Communication Deadlocks

We have mostly considered actual hardware resources such as printers, but have also considered more abstract resources such as semaphores.

There are other possibilities.

For example a server often waits for a client to make a request.

But if the request msg is lost the server is still waiting for the

client and the client is waiting for the server to respond to the

(lost) last request.

Each will wait for the other forever, a deadlock.

A solution

to this communication deadlock would be to use a

timeout so that the client eventually determines that the msg was

lost and sends another.

But it is not nearly that simple:

The msg might have been greatly delayed and now the server will get

two requests, which could be bad, and is likely to send two replies,

which also might be bad.

This gives rise to the serious subject of communication

protocols.

6.7.3 Livelock

Instead of blocking when a resource is not available, a process may

(wait and then) try again to obtain it.

Now assume process A has the printer, and B the CD-ROM, and each

process wants the other resource as well.

A will repeatedly request the CD-ROM and B will repeatedly request the

printer.

Neither can ever succeed since the other process holds the desired

resource.

Since no process is blocked, this is not technically deadlock, but a

related concept called livelock.
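The retry behavior can be illustrated with a small sketch. It assumes Python's `threading.Lock` stands in for each device and that a single loop plays both processes in turn, so the run is deterministic; the function name `retry` is made up for this example. The key point is that `acquire(blocking=False)` returns immediately instead of blocking.

```python
import threading

printer = threading.Lock()
cdrom = threading.Lock()
printer.acquire()    # process A holds the printer
cdrom.acquire()      # process B holds the CD-ROM


def retry(rounds):
    """Count how many non-blocking attempts succeed over the given rounds."""
    successes = 0
    for _ in range(rounds):
        # A polls for the CD-ROM and B polls for the printer; neither blocks.
        if cdrom.acquire(blocking=False):
            successes += 1
        if printer.acquire(blocking=False):
            successes += 1
    return successes


print(retry(1000))   # 0: both keep running, neither makes progress
```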

6.7.4 Starvation

As usual FCFS is a good cure.

Often this is done by priority aging and picking the highest

priority process to get the resource.

Also can periodically stop accepting new processes until all old

ones get their resources.

6.8 Research on Deadlocks

Skipped.

6.9 Summary

Read.

Chapter 3 Memory Management

Also called storage management or

space management.

The memory manager must deal with the

storage hierarchy present in modern machines.

- The hierarchy consists of registers, cache, central memory,

disk, tape (backup).

- The manager moves data from level to level of the hierarchy.

- When should we move data up to a higher level?

- Fetch on demand (e.g. demand paging, which is dominant now).

- Prefetch

- Read-ahead for file I/O.

- Large cache lines and pages.

- Extreme example.

Entire job present whenever running.

- Unless the top level has sufficient memory for the entire

system, we must also decide when to move data down to a lower

level.

This is normally called evicting the data (from the higher

level).

- In OS classes we concentrate on the central-memory/disk layers

and transitions.

- In architecture we concentrate on the cache/central-memory

layers and transitions (and use different terminology).

We will see in the next few weeks that there are three independent decisions:

- Should we have segmentation?

- Should we have paging?

- Should we employ fetch on demand?

Memory management implements address translation.

- Convert virtual addresses to physical addresses

- Also called logical to real address translation.

- A virtual address is the address expressed in

the program.

- A physical address is the address understood

by the computer hardware.

- The translation from virtual to physical addresses is performed by the Memory Management Unit (MMU).

- Another example of address translation is the conversion of

relative addresses to absolute addresses

by the linker.

- The translation might be trivial (e.g., the identity) but not

in a modern general purpose OS.

- The translation might be difficult (i.e., slow).

- Often includes addition/shifts/mask—not too bad.

- Often includes memory references.

- VERY serious.

- Solution is to cache translations in a

Translation Lookaside Buffer (TLB).

Sometimes called a translation buffer (TB).

Homework:

What is the difference between a physical address and a virtual address?

When is address translation performed?

- At compile time

- Compiler generates physical addresses.

- Requires knowledge of where the compilation unit will be loaded.

- No linker.

- Loader is trivial.

- Primitive.

- Rarely used (MSDOS .COM files).

- At link-edit time (the linker lab)

- Compiler

- Generates relative (a.k.a. relocatable) addresses for each

compilation unit.

- References external addresses.

- Linkage editor

- Converts the relocatable addr to absolute.

- Resolves external references.

- Misnamed ld by Unix.

- Must also convert virtual to physical addresses by knowing where the linked program will be loaded. The linker lab does this, but it is trivial since we assume the linked program will be loaded at 0.

- Loader is still trivial.

- Hardware requirements are small.

- A program can be loaded only where specified and

cannot move once loaded.

- Not used much any more.

- At load time

- Similar to at link-edit time, but do not fix

the starting address.

- Program can be loaded anywhere.

- Program can move but cannot be split.

- Need modest hardware: base/limit registers.

- Loader sets the base/limit registers.

- No longer common.

- At execution time

- Addresses translated dynamically during execution.

- Hardware needed to perform the virtual to physical address

translation quickly.

- Currently dominates.

- Much more information later.

Extensions

- Dynamic Loading

- When executing a call, check if module is loaded.

- If not loaded, call linking loader to load it and update

tables.

- Slows down calls (indirection) unless you rewrite code dynamically.

- Not used much.

- Dynamic Linking

- The traditional linking described above is today often called

static linking.

- With dynamic linking, frequently used routines are not linked

into the program. Instead, just a stub is linked.

- When the routine is called, the stub checks to see if the

real routine is loaded (it may have been loaded by

another program).

- If not loaded, load it.

- If already loaded, share it. This needs some OS

help so that different jobs sharing the library don't

overwrite each other's private memory.

- Advantages of dynamic linking.

- Saves space: Routine only in memory once even when used

many times.

- Bug fix to dynamically linked library fixes all applications

that use that library, without having to

relink the application.

- Disadvantages of dynamic linking.

- New bugs in dynamically linked library infect all

applications.

- Applications "change" even when they haven't changed.

Note: I will place ** before each memory management

scheme.

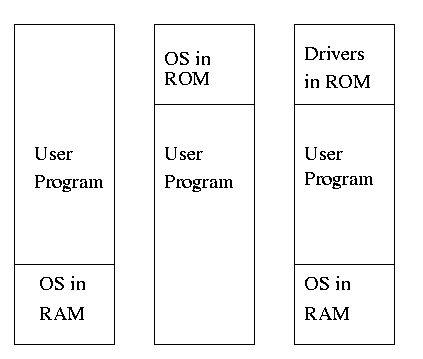

3.1 No Memory Management

The entire process remains in memory from start to finish and does

not move.

The sum of the memory requirements of all jobs in the system cannot

exceed the size of physical memory.

Monoprogramming

The good old days

when everything was easy.

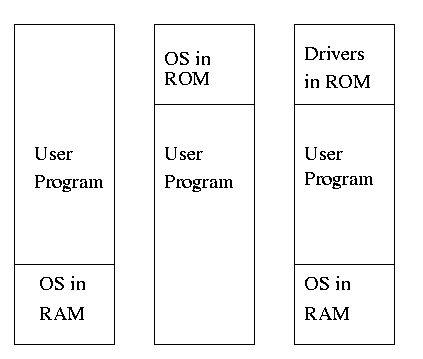

- No address translation done by the OS (i.e., address translation is

not performed dynamically during execution).

- Either reload the OS for each job (or don't have an OS, which is almost

the same), or protect the OS from the job.

- One way to protect (part of) the OS is to have it in ROM.

- Of course, must have the OS (read-write) data in RAM.

- Can have a separate OS address space only accessible in

supervisor mode.

- Might just put some drivers in ROM (BIOS).

- The user employs overlays if the memory needed

by a job exceeds the size of physical memory.

- Programmer breaks program into pieces.

- A "root" piece is always memory resident.

- The root contains calls to load and unload various pieces.

- Programmer's responsibility to ensure that a piece is already

loaded when it is called.

- No longer used, but we couldn't have gotten to the moon in the

60s without it (I think).

- Overlays have been replaced by dynamic address translation and

other features (e.g., demand paging) that have the system support

logical address sizes greater than physical address sizes.

- Fred Brooks (leader of IBM's OS/360 project and author of The Mythical Man-Month) remarked that the OS/360 linkage editor was terrific, especially in its support for overlays, but by the time it came out, overlays were no longer used.

Running Multiple Programs Without a Memory Abstraction

This can be done via swapping if you have only one program loaded

at a time.

A more general version of swapping is discussed below.

One can also support a limited form of multiprogramming, similar to

MFT (which is described next).

In this limited version, the loader relocates all relative

addresses, thus permitting multiple processes to coexist in physical

memory the way your linker permitted multiple modules in a single

process to coexist.

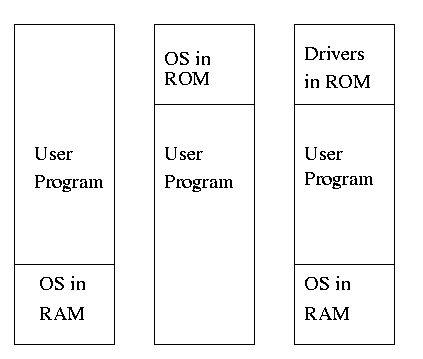

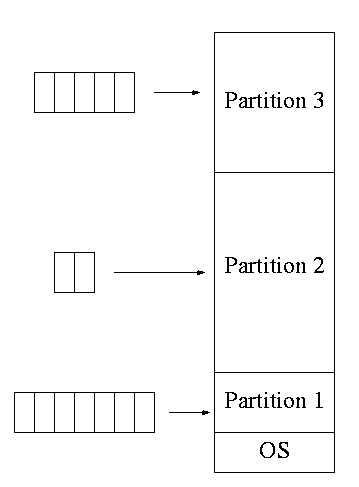

**Multiprogramming with Fixed Partitions

Two goals of multiprogramming are to improve CPU utilization, by

overlapping CPU and I/O, and to permit short jobs to finish quickly.

- This scheme was used by IBM for System/360 OS/MFT (multiprogramming with a fixed number of tasks).

- An alternative would have a single input queue instead of one

for each partition.

- With this alternative, if there are no big jobs, one can

use the big partition for little jobs.

- But I don't think IBM did this.

- You can think of the input queue(s) as the ready list(s)

with a scheduling policy of FCFS in each partition.

- Each partition was monoprogrammed, the

multiprogramming occurred across partitions.

- The partition boundaries are not movable (must reboot to

move a job).

- So the partitions are of fixed size.

- MFT can have large internal fragmentation,

i.e., wasted space inside a region of memory assigned

to a process.

- Each process has a single "segment" (i.e., the virtual address space is contiguous). We will discuss segments later.

- The physical address space is also contiguous (i.e., the program

is stored as one piece).

- No sharing of memory between processes.

- No dynamic address translation.

- OS/MFT is an example of address translation during load time.

- The system must "establish addressability".

- That is, the system must set a register to the location at

which the process was loaded (the bottom of the partition).

Actually this is done with a user-mode instruction so could

be called execution time, but it is only done once at the

very beginning.

- This register (often called a base register by IBM) is

part of the programmer visible register set.

Soon we will meet base/limit registers, which while related

to the IBM base register above, have the important

difference of being outside the programmer's control or view.

- Also called relocation.

- In addition, since the linker/assembler allow the use of

addresses as data, the loader itself relocates these at load

time.

- Storage keys are adequate for protection (the IBM method).

- An alternative protection method is base/limit registers,

which are discussed below.

- An advantage of the base/limit scheme is that it is easier to

move a job.

- But MFT didn't move jobs so this disadvantage of storage keys

is moot.

3.2 A Memory Abstraction: Address Spaces

The Notion of an Address Space

Just as the process concept creates a kind of abstract CPU to

run programs, the address space creates a kind of abstract memory

for programs to live in.

This does for processes, what you so kindly did for modules in the

linker lab: permit each to believe it has its own memory starting at

address zero.

Base and Limit Registers

Base and limit registers are additional hardware, invisible to the programmer, that support multiprogramming by automatically adding the base address (i.e., the value in the base register) to every relative address when that address is accessed at run time.

In addition, the relative address is compared against the value in the limit register and, if larger, the process is aborted since it has exceeded its memory bound.

Compare this to your error checking in the linker lab.

The base and limit registers are set by the OS when the job starts.
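As a rough illustration of what the hardware does on every reference, here is a minimal sketch. The names `translate` and `MemoryBoundError` are invented for this example, and it assumes, following the wording above, that the limit register holds the largest legal relative address (some machines store the size instead).

```python
class MemoryBoundError(Exception):
    """Raised when a relative address exceeds the limit register."""


def translate(relative, base, limit):
    # Compare first: a relative address larger than the limit aborts the process.
    if relative > limit:
        raise MemoryBoundError(f"address {relative} exceeds limit {limit}")
    # Otherwise the base register is added to form the physical address.
    return base + relative


print(translate(100, base=4000, limit=1023))   # 4100
```

Because the program only ever sees relative addresses, moving the job just means copying it and changing the base register.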

3.2.2 Swapping

Moving an entire process between disk and memory is called swapping.

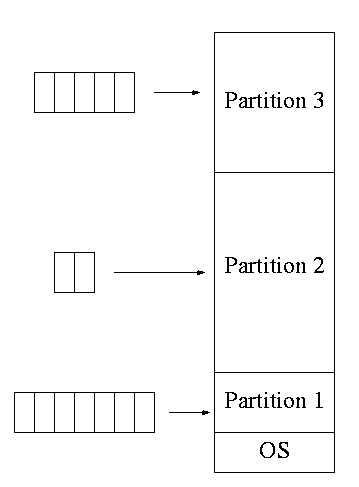

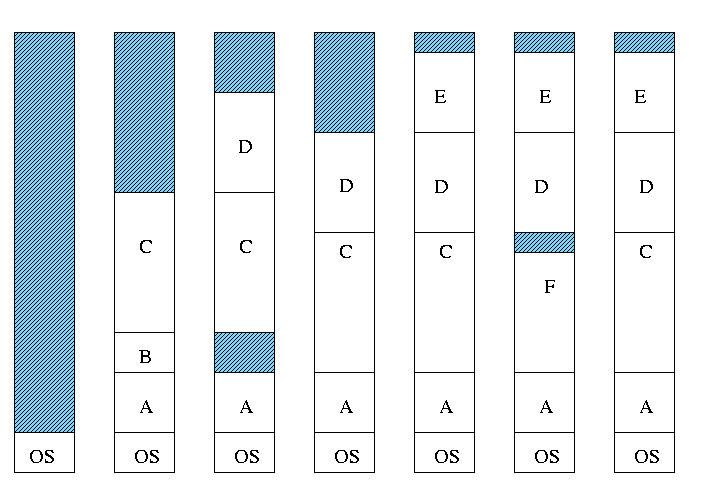

Multiprogramming with Variable Partitions

Both the number and size of the partitions change with

time.

- OS/MVT (multiprogramming with a varying number of

tasks).

- Also early PDP-10 OS.

- Job still has only one segment (as with MFT). That is, the

virtual address space is contiguous.

- The physical address space is also contiguous, that is, the process is stored as one piece in memory.

- The job can be of any

size up to the size of the machine and the job size can change

with time.

- A single ready list.

- A job can move (might be swapped back in a different

place).

- This is dynamic address translation (during run time).

- Must perform an addition on every memory reference (i.e. on

every address translation) to add the start address of the

partition (the base register).

- Called a DAT (dynamic address translation) box by IBM.

- Eliminates internal fragmentation (unusable space within a

process).

- Find a region the exact right size.

- Not quite true, can't get a piece with 108755 bytes.

Would get say 108760.

But internal fragmentation is much reduced compared

to MFT.

Indeed, we say that internal fragmentation has been

eliminated.

- Introduces external fragmentation, i.e., holes

outside any region of memory assigned to a

process.

- What do you do if no hole is big enough for the request?

- Can compactify

- Transition from bar 3 to bar 4 in diagram below.

- This is expensive.

- Not suitable for real time (MIT ping pong).

- Can swap out one process to bring in another, e.g., bars 5-6

and 6-7 in the diagram.

- There are more processes than holes.

Why?

- Because next to a process there might be a process or a hole

but next to a hole there must be a process.

- So can have "runs" of processes, but not of holes.

- If after a process one is equally likely to have a process or

a hole, you get about twice as many processes as holes.

- Base and limit registers are used.

- Storage keys would not be good since compactifying or moving would require changing many keys.

- Storage keys might need a fine granularity to permit the

boundaries to move by small amounts (to reduce internal

fragmentation).

Hence many keys would need to be changed.
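The "twice as many processes as holes" estimate in the list above can be written out as a short calculation. The symbols $p$ (number of processes) and $h$ (number of holes) are notation introduced here. Since adjacent holes are merged, every hole (except possibly one at the bottom of memory) sits immediately after a process, so counting holes is the same as counting processes that are followed by a hole:

```latex
\begin{aligned}
h &\approx \#\{\text{processes immediately followed by a hole}\} \\
  &\approx \tfrac{1}{2}\,p
  \quad\Longrightarrow\quad p \approx 2h .
\end{aligned}
```

The factor $\tfrac{1}{2}$ is exactly the assumption stated above that a process is equally likely to be followed by a process or by a hole.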

Homework:

A swapping system eliminates holes by compaction. Assuming a random distribution of many holes and many data segments and a time to read or write a 32-bit memory word of 10ns, about how long does it take to compact 128 MB? For simplicity, assume that word 0 is part of a hole and the highest word in memory contains valid data.

Managing Free Memory

MVT Introduces the Placement Question

That is, which hole (partition) should one choose?

- Some alternative algorithms for choosing a hole include best

fit, worst fit, first fit, circular first fit, quick fit, and

Buddy.

- Best fit doesn't waste big holes, but does leave slivers and

is expensive to run.

- Worst fit avoids slivers, but eliminates all big holes so

a big job will require compaction.

Even more expensive than best fit (best fit stops if it

finds a perfect fit).

- Quick fit keeps lists of some common sizes (but has other

problems, see Tanenbaum).

- Buddy system

- Round request to next highest power of two (causes

internal fragmentation).

- Look in list of blocks this size (as with quick fit).

- If list empty, go higher and split into buddies.

- When returning coalesce with buddy.

- Do splitting and coalescing recursively, i.e. keep

coalescing until can't and keep splitting until successful.

- See Tanenbaum (look in the index) or an algorithms

book for more details.

- A current favorite is circular first fit, also known as next fit.

- Use the first hole that is big enough (first fit) but start

looking where you left off last time.

- Doesn't waste time constantly trying to use small holes that

have failed before, but does tend to use many of the big holes,

which can be a problem.
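The buddy-system steps listed above (round up to a power of two, split a larger block into buddies, coalesce on return) can be sketched as follows. This is an illustrative sketch only: the class name `Buddy`, its methods, and the choice to track blocks by (address, order) are assumptions made here, with block sizes measured in arbitrary units.

```python
class Buddy:
    """Buddy allocator over 2**total_order units, starting at address 0."""

    def __init__(self, total_order):
        self.total_order = total_order
        # free[k] lists the start addresses of free blocks of size 2**k.
        self.free = {k: [] for k in range(total_order + 1)}
        self.free[total_order].append(0)

    @staticmethod
    def order_for(size):
        # Smallest k with 2**k >= size (this rounding causes internal fragmentation).
        return max(size - 1, 0).bit_length()

    def alloc(self, size):
        k = self.order_for(size)
        j = k
        while j <= self.total_order and not self.free[j]:
            j += 1                       # list empty: go higher
        if j > self.total_order:
            return None
        addr = self.free[j].pop()
        while j > k:                     # split until the block is the right size
            j -= 1
            self.free[j].append(addr + (1 << j))   # upper half becomes a free buddy
        return addr, k

    def release(self, addr, k):
        while k < self.total_order:
            buddy = addr ^ (1 << k)      # buddy address differs in exactly bit k
            if buddy not in self.free[k]:
                break
            self.free[k].remove(buddy)   # coalesce with the free buddy
            addr = min(addr, buddy)
            k += 1
        self.free[k].append(addr)


b = Buddy(4)                 # 16 units in total
first = b.alloc(3)           # rounded up to 4 units
second = b.alloc(4)
print(first, second)         # (0, 2) (4, 2)
b.release(*first)
b.release(*second)
print(b.free[4])             # [0]: everything coalesced back into one block
```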

- Buddy comes with its own implementation.

How about the others?

Homework:

Consider a swapping system in which memory consists of the following hole sizes in memory order: 10K, 4K, 20K, 18K, 7K, 9K, 12K, and 15K.

Which hole is taken for successive segment requests of

- 12K

- 10K

- 9K

for first fit?

Now repeat the question for best fit, worst fit, and next fit.

Solution: First fit takes 20K, 10K, 18K.

Best fit takes 12K, 10K, 9K.

Worst fit takes 20K, 18K, 15K.

Next fit takes 20K, 18K, 9K.
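The solution can be checked with a short sketch of the four policies. The helper names (`place`, `first_fit`, and so on) are invented for this example; sizes are in K, and a chosen hole simply shrinks in place by the amount requested.

```python
def first_fit(free, need, state):
    for i, size in enumerate(free):
        if size >= need:
            return i
    return None


def best_fit(free, need, state):
    fits = [i for i, size in enumerate(free) if size >= need]
    return min(fits, key=lambda i: free[i], default=None)


def worst_fit(free, need, state):
    fits = [i for i, size in enumerate(free) if size >= need]
    return max(fits, key=lambda i: free[i], default=None)


def next_fit(free, need, state):
    n = len(free)
    for step in range(n):
        i = (state["last"] + step) % n   # resume where the last search stopped
        if free[i] >= need:
            state["last"] = i
            return i
    return None


def place(holes, requests, choose):
    """Serve requests in order; report the size of each hole when it was taken."""
    free = list(holes)
    state = {"last": 0}
    taken = []
    for need in requests:
        i = choose(free, need, state)
        if i is None:
            taken.append(None)           # no hole is big enough
            continue
        taken.append(free[i])
        free[i] -= need                  # the remainder stays as a smaller hole
    return taken


HOLES = [10, 4, 20, 18, 7, 9, 12, 15]
REQUESTS = [12, 10, 9]
for policy in (first_fit, best_fit, worst_fit, next_fit):
    print(policy.__name__, place(HOLES, REQUESTS, policy))
# first_fit [20, 10, 18]
# best_fit [12, 10, 9]
# worst_fit [20, 18, 15]
# next_fit [20, 18, 9]
```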

Memory Management with Bitmaps

Divide memory into blocks and associate a bit with each block, used to indicate if the corresponding block is free or allocated. To find a chunk of size N blocks, one needs to find N consecutive bits, each indicating a free block.

The only design question is how much memory does one bit represent.

- Big: Serious internal fragmentation.

- Small: Many bits to store and process.
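Searching the bitmap for N consecutive free blocks might look like the sketch below. The function name `find_free_run` and the convention that 0 means free and 1 means allocated are assumptions for this example; N is assumed to be at least 1.

```python
def find_free_run(bitmap, n):
    """Return the index of the first of n consecutive free blocks, or None."""
    run_start, run_len = 0, 0
    for i, bit in enumerate(bitmap):
        if bit == 0:                 # free block extends the current run
            if run_len == 0:
                run_start = i
            run_len += 1
            if run_len == n:
                return run_start
        else:                        # allocated block breaks the run
            run_len = 0
    return None


bitmap = [1, 1, 0, 0, 1, 0, 0, 0, 1]
print(find_free_run(bitmap, 2))   # 2
print(find_free_run(bitmap, 3))   # 5
print(find_free_run(bitmap, 4))   # None
```

The scan is linear in the number of blocks, which is why a small block size (many bits) makes allocation slow.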

3.2.2 Memory Management with Linked Lists

Instead of a bit map, use a linked list of nodes where each node corresponds to a region of memory either allocated to a process or still available (a hole).

- Each item on list gives the length and starting location of the

corresponding region of memory and says whether it is a Hole or Process.

- The items on the list are not taken from the memory to be

used by processes.

- The list is kept in order of starting address.

- Merge adjacent holes when freeing memory.

- Use either a singly or doubly linked list.
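A minimal sketch of such a list, using a singly linked list kept in address order, is shown below. The class `Region` and the helpers `build`, `release`, and `dump` are hypothetical names; a node whose `owner` is `None` represents a hole.

```python
class Region:
    """One list node: a process's region, or a hole when owner is None."""

    def __init__(self, start, length, owner=None):
        self.start = start
        self.length = length
        self.owner = owner
        self.next = None


def build(regions):
    """Build the list from (start, length, owner) tuples given in address order."""
    head = None
    for start, length, owner in reversed(regions):
        node = Region(start, length, owner)
        node.next = head
        head = node
    return head


def release(head, owner):
    """Turn owner's region into a hole, then merge adjacent holes."""
    node = head
    while node is not None:
        if node.owner == owner:
            node.owner = None
        node = node.next
    node = head
    while node is not None and node.next is not None:
        if node.owner is None and node.next.owner is None:
            node.length += node.next.length   # absorb the following hole
            node.next = node.next.next
        else:
            node = node.next


def dump(head):
    out = []
    while head is not None:
        out.append((head.start, head.length, head.owner))
        head = head.next
    return out


memory = build([(0, 5, "A"), (5, 3, None), (8, 6, "B"), (14, 4, None)])
release(memory, "B")
print(dump(memory))   # [(0, 5, 'A'), (5, 13, None)]
```

With a doubly linked list, the merge could look only at the two neighbors of the freed node instead of rescanning the list.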

Memory Management using Boundary Tags

See Knuth, The Art of Computer Programming vol 1.

- Use the same memory for list items as for processes.

- Don't need an entry in linked list for blocks in use, just

the avail blocks are linked.

- The avail blocks themselves are linked, not a node that points to

an avail block.

- When a block is returned, we can look at the boundary tag of the

adjacent blocks and see if they are avail.

If so they must be merged with the returned block.

- For the blocks currently in use, just need a hole/process bit at

each end and the length. Keep this in the block itself.

- We do not need to traverse the list when returning a block; we can use boundary tags to find the predecessor.

MVT also introduces the Replacement Question

That is, which victim should we swap out?

Note that this is an example of the suspend arc mentioned in process

scheduling.

We will study this question more when we discuss

demand paging in which case

we swap out part of a process.

Considerations in choosing a victim

- Cannot replace a job that is pinned,

i.e. whose memory is tied down. For example, if Direct Memory

Access (DMA) I/O is scheduled for this process, the job is pinned

until the DMA is complete.

- Victim selection is a medium term scheduling decision

- A job that has been in a wait state for a long time is a good

candidate.

- Often choose as a victim a job that has been in memory for a long

time.

- Another question is how long should it stay swapped out.

- For demand paging, where swapping out a page is not as drastic as swapping out a job, choosing the victim is an important memory management decision and we shall study several policies.

Notes:

- The schemes presented so far have had two properties:

- Each job is stored contiguously in memory.

That is, the job is

contiguous in physical addresses.

- Each job cannot use more memory than exists in the system.

That is, the virtual address space cannot exceed the physical address space.

- Tanenbaum now attacks the second item.

I wish to do both and start with the first.

- Tanenbaum (and most of the world) uses the term "paging" to mean what I call demand paging.

This is unfortunate as it mixes together two concepts.

- Paging (dicing the address space) to solve the placement

problem and essentially eliminate external fragmentation.

- Demand fetching, to permit the total memory requirements of

all loaded jobs to exceed the size of physical memory.

- Most of the world uses the term virtual memory as a synonym for

demand paging.

Again I consider this unfortunate.

- Demand paging is a fine term and is quite descriptive.

- Virtual memory should be used in contrast with physical memory to describe any virtual to physical address translation.