I start at -1 so that when we get to chapter 1, the numbering will agree with the text.

There is a web site for the course. You can find it from my home page, which is http://cs.nyu.edu/~gottlieb

The course text is Tanenbaum, "Modern Operating Systems", 2nd Edition

Grades will be computed as

40%*LabAverage + 60%*FinalExam (but see homeworks below).

I use the upper left board for lab/homework assignments and announcements. I should never erase that board. Viewed as a file it is group readable (the group is those in the room), appendable by just me, and (re-)writable by no one. If you see me start to erase an announcement, let me know.

I try very hard to remember to write all announcements on the upper left board and I am normally successful. If, during class, you see that I have forgotten to record something, please let me know. HOWEVER, if I forgot and no one reminds me, the assignment has still been given.

I make a distinction between homeworks and labs.

Labs are

Homeworks are

Homeworks are numbered by the class in which they are assigned. So any homework given today is homework #1. Even if I do not give homework today, the homework assigned next class will be homework #2. Unless I explicitly state otherwise, all homework assignments can be found in the class notes. So the homework present in the notes for lecture #n is homework #n (even if I inadvertently forgot to write it to the upper left board).

You may solve lab assignments on any system you wish, but ...

Good methods for obtaining help include

You may write your lab in Java, C, or C++.

The rules for incompletes and grade changes are set by the school and not the department or individual faculty member. The rules set by GSAS state:

The assignment of the grade Incomplete Pass(IP) or Incomplete Fail(IF) is at the discretion of the instructor. If an incomplete grade is not changed to a permanent grade by the instructor within one year of the beginning of the course, Incomplete Pass(IP) lapses to No Credit(N), and Incomplete Fail(IF) lapses to Failure(F).

Permanent grades may not be changed unless the original grade resulted from a clerical error.

I do not assume you have had an OS course as an undergraduate, and I do not assume you have had extensive experience working with an operating system.

If you have already had an operating systems course, this course is probably not appropriate. For example, if you can explain the following concepts/terms, the course is probably too elementary for you.

I do assume you are an experienced programmer, at least to the extent that you are comfortable writing modest-size (a few hundred lines) programs. You may write your programs in C, C++, or Java.

Our policy on academic integrity, which applies to all graduate courses in the department, can be found here.

Originally called a linkage editor by IBM.

A linker is an example of a utility program included with an operating system distribution. Like a compiler, the linker is not part of the operating system per se, i.e. it does not run in supervisor mode. Unlike a compiler it is OS dependent (what object/load file format is used) and is not (normally) language dependent.

Link of course.

When the compiler and assembler have finished processing a module, they produce an object module that is almost runnable. There are two remaining tasks to be accomplished before object modules can be run. Both are involved with linking (that word, again) together multiple object modules. The tasks are relocating relative addresses and resolving external references.

The output of a linker is called a load module because it is now ready to be loaded and run.

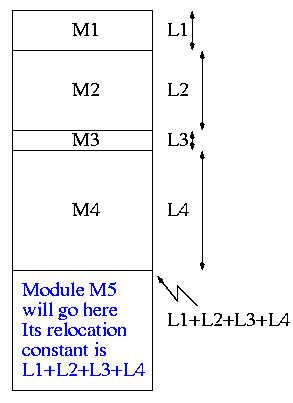

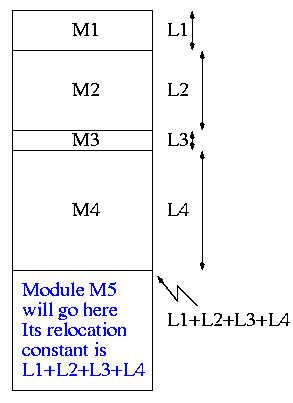

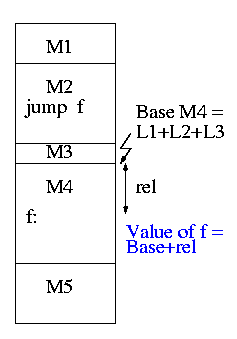

To see how a linker works, let's consider the following example, which is the first dataset from lab #1. The description in lab1 is more detailed.

The target machine is word addressable and has a memory of 250 words, each consisting of 4 decimal digits. The first (leftmost) digit is the opcode and the remaining three digits form an address.
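The word layout can be made concrete with a little arithmetic. The following Python sketch splits a 4-digit word into its opcode and address fields. The function name `split_word` is hypothetical, and Python is used only for brevity (the lab itself must be written in Java, C, or C++).

```python
def split_word(word):
    """Split a 4-digit decimal word into (opcode, address).

    The leftmost digit is the opcode; the remaining three digits are the address.
    """
    if not 0 <= word <= 9999:
        raise ValueError("a word must have at most 4 decimal digits")
    return divmod(word, 1000)


opcode, address = split_word(8002)   # opcode is 8, address is 2
```

Going the other way, `opcode * 1000 + address` rebuilds the word, which is what a linker does after it has adjusted the address field.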

Each object module contains three parts, a definition list, a use list, and the program text itself. Each definition is a pair (sym, loc). Each entry in the use list is a symbol and a list of uses of that symbol.

The program text consists of a count N followed by N pairs (type, word), where word is a 4-digit instruction described above and type is a single character indicating if the address in the word is Immediate, Absolute, Relative, or External.

Input set #1

1 xy 2
2 z 2 -1 xy 4 -1
5 R 1004 I 5678 E 2000 R 8002 E 7001

0
1 z 1 2 3 -1
6 R 8001 E 1000 E 1000 E 3000 R 1002 A 1010

0
1 z 1 -1
2 R 5001 E 4000

1 z 2
2 xy 2 -1 z 1 -1
3 A 8000 E 1001 E 2000

The first pass simply finds the base address of each module and produces the symbol table giving the values for xy and z (2 and 15 respectively). The second pass does the real work using the symbol table and base addresses produced in pass one.

Symbol Table

xy=2

z=15
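Pass one can be sketched in a few lines of Python. This is only an illustration, not the lab solution. The name `pass_one` is hypothetical, and the input layout assumed here (a count at the start of each definition list, use list, and program text, with each symbol's uses terminated by -1) is my reading of input set #1; the lab #1 description is authoritative.

```python
INPUT_SET_1 = """
1 xy 2
2 z 2 -1 xy 4 -1
5 R 1004 I 5678 E 2000 R 8002 E 7001
0
1 z 1 2 3 -1
6 R 8001 E 1000 E 1000 E 3000 R 1002 A 1010
0
1 z 1 -1
2 R 5001 E 4000
1 z 2
2 xy 2 -1 z 1 -1
3 A 8000 E 1001 E 2000
"""


def pass_one(text):
    """Return (base_addresses, symbol_table) for whitespace-separated input."""
    tokens = iter(text.split())
    bases, symbols = [], {}
    base = 0
    for tok in tokens:                       # tok is the definition count
        for _ in range(int(tok)):            # definition list: (sym, loc) pairs
            sym, loc = next(tokens), int(next(tokens))
            symbols[sym] = base + loc        # absolute address of the symbol
        for _ in range(int(next(tokens))):   # use list: not needed in pass one
            next(tokens)                     # skip the symbol name
            while next(tokens) != "-1":      # skip its uses, terminated by -1
                pass
        size = int(next(tokens))             # program text: N (type, word) pairs
        for _ in range(2 * size):
            next(tokens)                     # skip the text itself
        bases.append(base)
        base += size                         # the next module starts here
    return bases, symbols


bases, symbols = pass_one(INPUT_SET_1)
# bases is [0, 5, 11, 13]; symbols is {"xy": 2, "z": 15}
```

Note that pass one never looks at the program text beyond its length: the base of each module is simply the sum of the sizes of the modules before it.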

Memory Map

+0

0: R 1004 1004+0 = 1004

1: I 5678 5678

2: xy: E 2000 ->z 2015

3: R 8002 8002+0 = 8002

4: E 7001 ->xy 7002

+5

0 R 8001 8001+5 = 8006

1 E 1000 ->z 1015

2 E 1000 ->z 1015

3 E 3000 ->z 3015

4 R 1002 1002+5 = 1007

5 A 1010 1010

+11

0 R 5001 5001+11= 5012

1 E 4000 ->z 4015

+13

0 A 8000 8000

1 E 1001 ->z 1015

2 z: E 2000 ->xy 2002

The output above is more complex than I expect you to produce; it is there to help me explain what the linker is doing. All I would expect from you is the symbol table and the rightmost column of the memory map.

You must process each module separately, i.e., except for the symbol table and memory map, your space requirements should be proportional to the largest module, not to the sum of the modules. This does NOT make the lab harder.
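The rules applied in pass two can be sketched as follows. This is a hedged illustration in Python, not the lab solution: `pass_two`, `MODULES`, and `SYMBOLS` are hypothetical names, the modules are written out as already-parsed literals for brevity, and the symbol table and base addresses are taken from the pass-one results given above. The treatment of an E word (keep the opcode, replace the address with the symbol's value) is inferred from the memory map.

```python
SYMBOLS = {"xy": 2, "z": 15}   # symbol table produced by pass one

# Each module: (base address, {relative location: symbol used there}, text)
MODULES = [
    (0, {2: "z", 4: "xy"},
     [("R", 1004), ("I", 5678), ("E", 2000), ("R", 8002), ("E", 7001)]),
    (5, {1: "z", 2: "z", 3: "z"},
     [("R", 8001), ("E", 1000), ("E", 1000), ("E", 3000), ("R", 1002), ("A", 1010)]),
    (11, {1: "z"},
     [("R", 5001), ("E", 4000)]),
    (13, {2: "xy", 1: "z"},
     [("A", 8000), ("E", 1001), ("E", 2000)]),
]


def pass_two(modules, symbols):
    """Return the final memory contents, one word per address."""
    memory = []
    for base, uses, text in modules:          # one module at a time
        for loc, (kind, word) in enumerate(text):
            if kind == "R":                   # relative: relocate by the base
                word += base
            elif kind == "E":                 # external: resolve via the symbol table
                opcode = word // 1000
                word = opcode * 1000 + symbols[uses[loc]]
            # Immediate (I) and Absolute (A) words are left unchanged.
            memory.append(word)
    return memory


memory = pass_two(MODULES, SYMBOLS)
# memory[0:5] is [1004, 5678, 2015, 8002, 7002]
```

Because `pass_two` only iterates over `modules`, it would work equally well with a generator that parses one module at a time, which is what keeps the space proportional to the largest module.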

(Unofficial) Remark: It is faster (less I/O) to use a one-pass approach, but it is harder since you need “fix-up code” whenever a use occurs in a module that precedes the module with the definition.
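To show what such fix-up code might look like, here is a small Python sketch of a one-pass approach. It is an assumption-laden illustration, not something the lab asks for: `one_pass` and the `pending` table are hypothetical names, the modules are given as already-parsed literals, and the two-module input is made up for the example.

```python
def one_pass(modules):
    """modules: iterable of (defs, uses, text) where defs maps symbol to
    relative location, uses maps relative location to symbol, and text is
    a list of (type, word) pairs."""
    memory, symbols = [], {}
    pending = {}                              # symbol -> addresses awaiting fix-up
    for defs, uses, text in modules:
        base = len(memory)                    # this module is loaded here
        for sym, loc in defs.items():
            symbols[sym] = base + loc
            for addr in pending.pop(sym, []):  # fix up earlier uses
                memory[addr] = memory[addr] // 1000 * 1000 + symbols[sym]
        for loc, (kind, word) in enumerate(text):
            if kind == "R":
                word += base
            elif kind == "E":
                sym = uses[loc]
                if sym in symbols:            # definition already seen
                    word = word // 1000 * 1000 + symbols[sym]
                else:                         # definition comes later
                    pending.setdefault(sym, []).append(base + loc)
            memory.append(word)
    return memory, symbols


# Hypothetical input: module 0 uses z, which module 1 defines later.
example = [
    ({}, {0: "z"}, [("E", 1000), ("R", 2000)]),
    ({"z": 1}, {}, [("A", 3000), ("I", 4000)]),
]
memory, symbols = one_pass(example)
# memory is [1003, 2000, 3000, 4000]; symbols is {"z": 3}
```

The extra bookkeeping in `pending` is exactly the complication the remark refers to; the two-pass approach avoids it by building the whole symbol table first.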

The linker on unix was mistakenly called ld (for loader), which is unfortunate since it links but does not load.

Historical remark: Unix was originally developed at Bell Labs; the seventh edition of unix was made publicly available (perhaps earlier ones were somewhat available). The 7th ed man page for ld begins (see http://cm.bell-labs.com/7thEdMan).

.TH LD 1
.SH NAME
ld \- loader
.SH SYNOPSIS
.B ld
[ option ] file ...
.SH DESCRIPTION
.I Ld
combines several object programs into one, resolves external references, and searches libraries.

By the mid 80s the Berkeley version (4.3BSD) man page referred to ld as "link editor" and this more accurate name is now standard in unix/linux distributions.

During the 2004-05 fall semester a student wrote to me “BTW - I have meant to tell you that I know the lady who wrote ld. She told me that they called it loader, because they just really didn't have a good idea of what it was going to be at the time.”

Lab #1: Implement a two-pass linker. The specific assignment is detailed on the class home page.

Homework: Read Chapter 1 (Introduction)

The kernel itself raises the level of abstraction and hides details. For example a user (of the kernel) can write to a file (a concept not present in hardware) and ignore whether the file resides on a floppy, a CD-ROM, or a hard disk. The user can also ignore issues such as whether the file is stored contiguously or is broken into blocks.

The kernel is a resource manager (so users don't conflict).

How is an OS fundamentally different from a compiler (say)?

Answer: Concurrency! Per Brinch Hansen, in Operating Systems Principles (Prentice Hall, 1973), writes:

The main difficulty of multiprogramming is that concurrent activities can interact in a time-dependent manner, which makes it practically impossible to locate programming errors by systematic testing. Perhaps, more than anything else, this explains the difficulty of making operating systems reliable.

Homework: 1, 2. (Unless otherwise stated, problem numbers are from the end of the chapter in Tanenbaum.)

Homework: 3.

There is not as much difference between mainframe, server, multiprocessor, and PC OSes as Tanenbaum suggests. For example Windows NT/2000/XP, Unix and Linux are used on all.

Used in data centers, these systems offer tremendous I/O capabilities and extensive fault tolerance.

Perhaps the most important servers today are web servers. Again I/O (and network) performance are critical.

These existed almost from the beginning of the computer age, but now are not exotic.

Some OSes (e.g. Windows ME) are tailored for this application. One could also say they are restricted to this application.

Very limited in power (both meanings of the word).

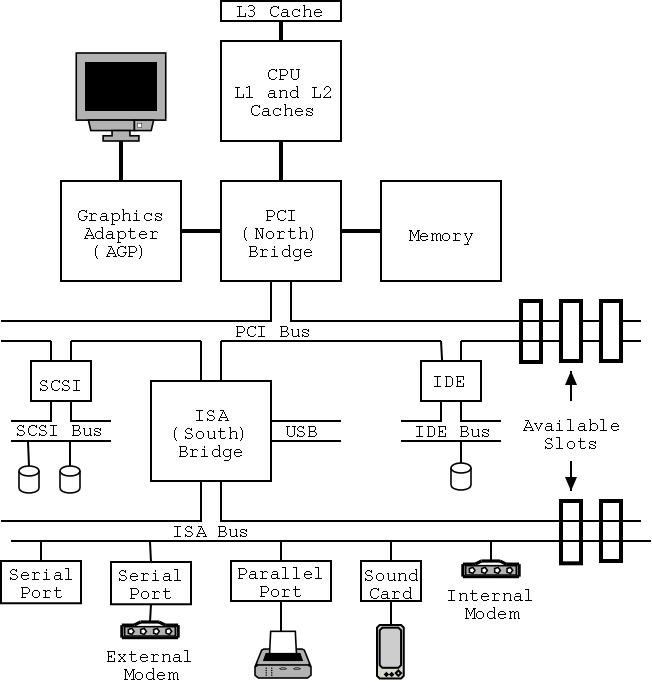

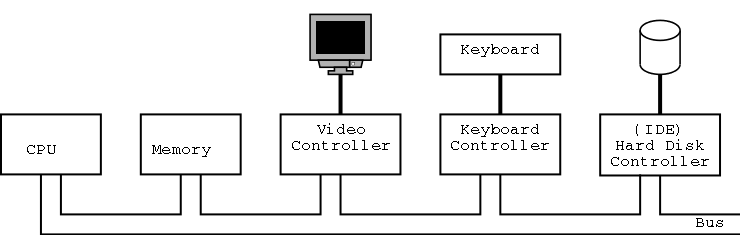

Tanenbaum's treatment is very brief and superficial. Mine is even more so. The picture above is very simplified. (For one thing, today separate buses are used to Memory and Video.)

A bus is a set of wires that connect two or more devices. Only one message can be on the bus at a time. All the devices “receive” the message: There are no switches in between to steer the message to the desired destination, but often some of the wires form an address that indicates which devices should actually process the message.

We will ignore processor concepts such as program counters and stack pointers. We will also ignore computer design issues such as pipelining and superscalar execution. We do, however, need the notion of a trap, that is, an instruction that atomically switches the processor into privileged mode and jumps to a pre-defined physical address.

We will ignore caches, but will (later) discuss demand paging, which is very similar (although demand paging and caches use completely disjoint terminology). In both cases, the goal is to combine large slow memory with small fast memory to achieve the effect of large fast memory.

The central memory in a system is called RAM (Random Access Memory). A key point is that it is volatile, i.e. the memory loses its data if power is turned off.

I don't understand why Tanenbaum discusses disks here instead of in the next section entitled I/O devices, but he does. I don't.

ROM (Read Only Memory) is used to hold data that will not change, e.g. the serial number of a computer or the program used in a microwave. ROM is non-volatile. A modern, familiar ROM is CD-ROM (or the denser DVD).

But often this unchangeable data needs to be changed (e.g., to fix bugs). This gives rise first to PROM (Programmable ROM), which, like a CD-R, can be written once (as opposed to being mass produced already written like a CD-ROM), and then to EPROM (Erasable PROM; not Erasable ROM as in Tanenbaum), which is like a CD-RW. An EPROM is especially convenient if it can be erased with a normal circuit (EEPROM, Electrically EPROM or Flash RAM).

As mentioned above when discussing OS/MFT and OS/MVT, multiprogramming requires that we protect one process from another. That is, we need to translate the virtual addresses of each program into distinct physical addresses. The hardware that performs this translation is called the MMU or Memory Management Unit.

When context switching from one process to another, the translation must change, which can be an expensive operation.
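The idea of translation can be illustrated with the simplest possible scheme, a base register and a limit register. This Python sketch is a toy model under that assumption, not a description of any real MMU (which would typically use page tables); the class name `BaseLimitMMU` and the numbers are hypothetical.

```python
class BaseLimitMMU:
    """Toy MMU: physical = base + virtual, valid only if 0 <= virtual < limit."""

    def __init__(self):
        self.base = 0
        self.limit = 0

    def context_switch(self, base, limit):
        """Load the translation for the process that is about to run."""
        self.base, self.limit = base, limit

    def translate(self, virtual_addr):
        if not 0 <= virtual_addr < self.limit:
            # A real MMU would trap to the OS here.
            raise MemoryError(f"virtual address {virtual_addr} is out of bounds")
        return self.base + virtual_addr


mmu = BaseLimitMMU()
mmu.context_switch(base=1000, limit=500)   # process A runs
addr_a = mmu.translate(42)                 # physical address 1042
mmu.context_switch(base=4000, limit=500)   # process B runs
addr_b = mmu.translate(42)                 # physical address 4042
```

Both processes use virtual address 42, yet they reach distinct physical addresses, and neither can name an address outside its own region. In this toy model a context switch only reloads two registers; the expense arises in more elaborate schemes, where cached translations must be discarded on a switch.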

When we do I/O for real, I will show a real disk opened up and illustrate the components.

Devices are often quite complicated to manage and a separate computer, called a controller, is used to translate simple commands (read sector 123456) into what the device requires (read cylinder 321, head 6, sector 765). Actually the controller does considerably more, e.g. calculates a checksum for error detection.
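The kind of translation a controller performs can be sketched with the classic conversion from a linear sector number to a (cylinder, head, sector) triple. This is a simplified illustration: the function name `lba_to_chs` and the geometry (16 heads, 63 sectors per track) are assumptions, the numbers in the text above are illustrative rather than derived from this geometry, and real drives use more complicated internal mappings.

```python
def lba_to_chs(lba, heads, sectors_per_track):
    """Convert a linear sector number to (cylinder, head, sector).

    Cylinders and heads are numbered from 0; sectors are numbered from 1,
    following the usual PC convention (an assumption of this sketch).
    """
    cylinder, rest = divmod(lba, heads * sectors_per_track)
    head, sector = divmod(rest, sectors_per_track)
    return cylinder, head, sector + 1


# Hypothetical geometry: 16 heads, 63 sectors per track.
chs = lba_to_chs(123456, heads=16, sectors_per_track=63)   # (122, 7, 40)
```

The OS issues the simple request ("read sector 123456") and the controller carries out the device-specific one.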

How does the OS know when the I/O is complete?

We discuss this more in chapter 5. In particular, we explain the last point about halving bus accesses.

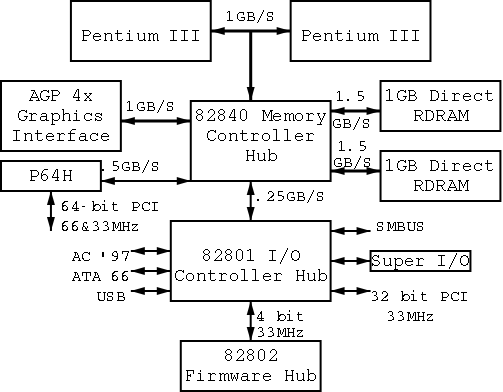

I don't care very much about the names of the buses, but the diagram given in the book doesn't show a modern design. The one below does. On the right is a figure showing the specifications for a modern chip set (introduced in 2000). The chip set has two different width PCI buses, which is not shown below. Instead of having the chip set supply USB, a PCI USB controller may be used. Finally, the use of ISA is decreasing. Indeed, my last desktop didn't have an ISA bus and I had to replace my ISA sound card with a PCI version.