I start at Chapter 0 so that when we get to chapter 1, the numbering will agree with the text.

There is a web site for the course. You can find it from my home page listed above.

The course text is Aho, Sethi, and Ullman: Compilers: Principles, Techniques, and Tools.

Your grade will be a function of your final exam and laboratory assignments (see below). I am not yet sure of the exact weightings for each lab and the final, but will let you know soon.

I use the upper left board for lab/homework assignments and announcements. I should never erase that board. If you see me start to erase an announcement, please let me know.

I try very hard to remember to write all announcements on the upper left board and I am normally successful. If, during class, you see that I have forgotten to record something, please let me know. HOWEVER, if I forgot and no one reminds me, the assignment has still been given.

I make a distinction between homeworks and labs.

Labs are

Homeworks are

Homeworks are numbered by the class in which they are assigned. So any homework given today is homework #1. Even if I do not give homework today, the homework assigned next class will be homework #2. Unless I explicitly state otherwise, all homework assignments can be found in the class notes. So the homework present in the notes for lecture #n is homework #n (even if I inadvertently forgot to write it on the upper left board).

You may solve lab assignments on any system you wish, but ...

Good methods for obtaining help include

You may write your lab in Java, C, or C++. Other languages may be possible, but please ask in advance. I need to ensure that the TA is comfortable with the language.

The rules for incompletes and grade changes are set by the school and not the department or individual faculty member. The rules set by GSAS state:

The assignment of the grade Incomplete Pass (IP) or Incomplete Fail (IF) is at the discretion of the instructor. If an incomplete grade is not changed to a permanent grade by the instructor within one year of the beginning of the course, Incomplete Pass (IP) lapses to No Credit (N), and Incomplete Fail (IF) lapses to Failure (F).

Permanent grades may not be changed unless the original grade resulted from a clerical error.

I do not assume you have had a compiler course as an undergraduate, and I do not assume you have had experience developing/maintaining a compiler.

If you have already had a compiler class, this course is probably not appropriate. For example, if you can explain the following concepts/terms, the course is probably too elementary for you.

I do assume you are an experienced programmer. There will be non-trivial programming assignments during this course. Indeed, you will write a compiler for a simple programming language.

I also assume that you have at least a passing familiarity with assembler language. In particular, your compiler will produce assembler language. We will not, however, write significant assembly-language programs.

Our policy on academic integrity, which applies to all graduate courses in the department, can be found here.

Homework: Read chapter 1.

A Compiler is a translator from one language, the input or source language, to another language, the output or target language.

Often, but not always, the target language is an assembler language or the machine language for a computer processor.

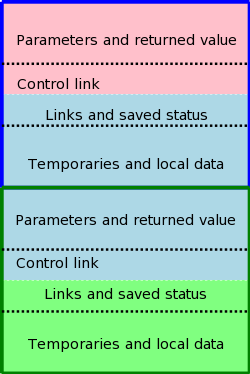

Modern compilers contain two (large) parts, each of which is often subdivided. These two parts are the front end and the back end.

The front end analyzes the source program, determines its constituent parts, and constructs an intermediate representation of the program. Typically the front end is independent of the target language.

The back end synthesizes the target program from the intermediate representation produced by the front end. Typically the back end is independent of the source language.

This front/back division very much reduces the work for a compiling system that can handle several (N) source languages and several (M) target languages. Instead of N×M compilers, we need N front ends and M back ends. For gcc (originally standing for GNU C Compiler, but now standing for GNU Compiler Collection), N=7 and M≈30, so the savings are considerable.
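As a rough count, taking N = 7 and M ≈ 30 at face value (both figures are approximate):

$$N \times M = 7 \times 30 = 210 \ \text{complete compilers} \qquad\text{versus}\qquad N + M = 7 + 30 = 37 \ \text{front and back ends}$$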

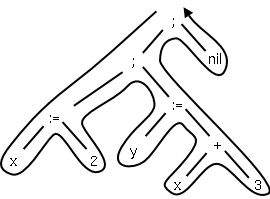

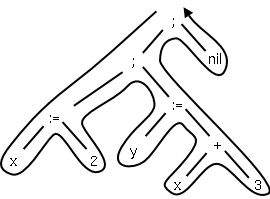

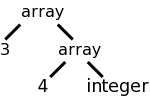

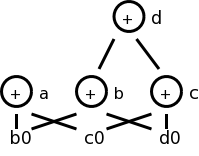

Often the intermediate form produced by the front end is a syntax tree. In simple cases, such as that shown to the right corresponding to the statement sequence

x := 2; y := x + 3;

(written x = 2; y = x + 3; in C), this tree has constants and variables (and nil) for leaves and operators for internal nodes.

The back end traverses the tree in “Euler-tour” order and generates code for each node. (This is quite oversimplified.)
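To make the idea concrete, here is a minimal C sketch under assumptions of our own. The node kinds, the SEQ node standing in for the semicolon, and the push/load/add/store instructions of an imaginary stack machine are all hypothetical, not taken from the book or from gcc. Each operator node's instruction is emitted after the code for its operands, which is the postorder part of the Euler tour.

```c
#include <stdio.h>
#include <stdlib.h>
#include <string.h>

/* Leaves are constants or variables; interior nodes are operators. */
typedef enum { CONST, VAR, PLUS, ASSIGN, SEQ } Kind;

typedef struct Node {
    Kind kind;
    int value;                /* CONST leaves */
    const char *name;         /* VAR leaves */
    struct Node *left, *right;
} Node;

static Node *mk(Kind k, int value, const char *name, Node *l, Node *r) {
    Node *n = malloc(sizeof *n);   /* never freed: fine for a one-shot example */
    n->kind = k; n->value = value; n->name = name; n->left = l; n->right = r;
    return n;
}

/* The tree for  x := 2; y := x + 3;  (SEQ plays the role of ';'). */
Node *example_tree(void) {
    Node *s1 = mk(ASSIGN, 0, NULL, mk(VAR, 0, "x", NULL, NULL),
                  mk(CONST, 2, NULL, NULL, NULL));
    Node *s2 = mk(ASSIGN, 0, NULL, mk(VAR, 0, "y", NULL, NULL),
                  mk(PLUS, 0, NULL, mk(VAR, 0, "x", NULL, NULL),
                     mk(CONST, 3, NULL, NULL, NULL)));
    return mk(SEQ, 0, NULL, s1, s2);
}

/* Walk the tree, emitting a node's instruction after its operands have
   been handled; instructions are appended to out. */
void gen(const Node *n, char *out) {
    char line[64];
    switch (n->kind) {
    case CONST:
        snprintf(line, sizeof line, "push %d\n", n->value);
        break;
    case VAR:
        snprintf(line, sizeof line, "load %s\n", n->name);
        break;
    case PLUS:
        gen(n->left, out);
        gen(n->right, out);
        snprintf(line, sizeof line, "add\n");
        break;
    case ASSIGN:                   /* left child is the target variable */
        gen(n->right, out);
        snprintf(line, sizeof line, "store %s\n", n->left->name);
        break;
    case SEQ:                      /* first statement, then second */
        gen(n->left, out);
        gen(n->right, out);
        return;
    default:
        return;
    }
    strcat(out, line);
}
```

For this tree the walk emits push 2, store x, load x, push 3, add, store y, one instruction per line.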

Other “compiler like” applications also use analysis and synthesis. Some examples include

[preprocessor] --> [compiler] --> [assembler] --> [linker] --> [loader]

We will be primarily focused on the second element of the chain, the compiler. Our target language will be assembly language.

Preprocessors are normally fairly simple, as in the C language, providing primarily the ability to include files and expand macros. There are exceptions, however. IBM's PL/I, another Algol-like language, had quite an extensive preprocessor, which made much of the PL/I language itself available at preprocessor time (e.g., loops and, I believe, procedure calls).

Some preprocessors essentially augment the base language to add additional capabilities. One could consider them as compilers in their own right, having as source this augmented language (say Fortran augmented with statements for multiprocessor execution in the guise of Fortran comments) and as target the original base language (in this case Fortran). Often the “preprocessor” inserts procedure calls to implement the extensions at runtime.

Assembly code is a mnemonic version of machine code in which names, rather than binary values, are used for machine instructions and memory addresses.

Some processors have fairly regular operations, and as a result assembly code for them can be fairly natural and not too hard to understand. Other processors, in particular Intel's x86 line, have, let us charitably say, more “interesting” instructions, with certain registers used for certain things.

My laptop has one of these latter processors (a Pentium 4), so my gcc compiler produces code that from a pedagogical viewpoint is less than ideal. If you have a Mac with a PowerPC processor (the newest Macs are x86), your assembly language is cleaner. NYU's ACF features Sun computers with SPARC processors, which also have regular instruction sets.

No matter what the assembly language is, an assembler needs to assign memory locations to symbols (called identifiers) and use the numeric location address in the target machine language produced. Of course the same address must be used for all occurrences of a given identifier and two different identifiers must (normally) be assigned two different locations.

The conceptually simplest way to accomplish this is to make two passes over the input (read it once, then read it again from the beginning). During the first pass, each time a new identifier is encountered, an address is assigned and the pair (identifier, address) is stored in a symbol table. During the second pass, whenever an identifier is encountered, its address is looked up in the symbol table and this value is used in the generated machine instruction.
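A minimal C sketch of the two passes, under simplifying assumptions of our own: the input has already been reduced to the sequence of identifiers used as operands, every identifier occupies one location, and addresses start at a caller-chosen value. The names SymTab, pass1, and pass2 are hypothetical; a real assembler also handles labels, opcodes, and instruction sizes.

```c
#include <string.h>

#define MAX_SYMS 64

typedef struct { const char *name; int addr; } Entry;

typedef struct {
    Entry entries[MAX_SYMS];
    int count;
    int next_addr;   /* next unassigned location */
} SymTab;

/* Return the address recorded for name, or -1 if name is not in the table. */
int lookup(const SymTab *t, const char *name) {
    for (int i = 0; i < t->count; i++)
        if (strcmp(t->entries[i].name, name) == 0) return t->entries[i].addr;
    return -1;
}

/* Pass 1: each time a new identifier is encountered, assign it an address. */
void pass1(SymTab *t, const char *ids[], int n) {
    for (int i = 0; i < n; i++)
        if (lookup(t, ids[i]) < 0 && t->count < MAX_SYMS) {
            t->entries[t->count].name = ids[i];
            t->entries[t->count].addr = t->next_addr++;
            t->count++;
        }
}

/* Pass 2: read the input again, replacing each identifier by its address. */
void pass2(const SymTab *t, const char *ids[], int n, int out[]) {
    for (int i = 0; i < n; i++)
        out[i] = lookup(t, ids[i]);
}
```

With the operands x y x z y and addresses starting at 100, pass 1 assigns x=100, y=101, z=102, and pass 2 produces 100 101 100 102 101: every occurrence of an identifier gets the same address, and distinct identifiers get distinct ones.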

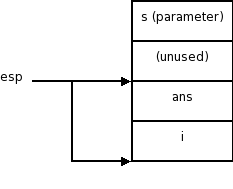

Consider the following trivial C program that computes and returns the xor of the characters in a string.

```c
int xor (char s[])    // native C speakers say char *s
{
    int ans = 0;
    int i = 0;
    while (s[i] != 0) {
        ans = ans ^ s[i];
        i = i + 1;
    }
    return ans;
}
```

The corresponding assembly language program (produced by gcc -S -fomit-frame-pointer) is

```asm
    .file   "xor.c"
    .text
.globl xor
    .type   xor, @function
xor:
    subl    $8, %esp
    movl    $0, 4(%esp)
    movl    $0, (%esp)
.L2:
    movl    (%esp), %eax
    addl    12(%esp), %eax
    cmpb    $0, (%eax)
    je      .L3
    movl    (%esp), %eax
    addl    12(%esp), %eax
    movsbl  (%eax),%edx
    leal    4(%esp), %eax
    xorl    %edx, (%eax)
    movl    %esp, %eax
    incl    (%eax)
    jmp     .L2
.L3:
    movl    4(%esp), %eax
    addl    $8, %esp
    ret
    .size   xor, .-xor
    .section    .note.GNU-stack,"",@progbits
    .ident  "GCC: (GNU) 3.4.6 (Gentoo 3.4.6-r1, ssp-3.4.5-1.0, pie-8.7.9)"
```

You should be able to follow everything from xor: to ret. Indeed, most of the rest can be omitted (.globl xor is needed). That is, the following assembly program gives the same results.

```asm
.globl xor
xor:
    subl    $8, %esp
    movl    $0, 4(%esp)
    movl    $0, (%esp)
.L2:
    movl    (%esp), %eax
    addl    12(%esp), %eax
    cmpb    $0, (%eax)
    je      .L3
    movl    (%esp), %eax
    addl    12(%esp), %eax
    movsbl  (%eax),%edx
    leal    4(%esp), %eax
    xorl    %edx, (%eax)
    movl    %esp, %eax
    incl    (%eax)
    jmp     .L2
.L3:
    movl    4(%esp), %eax
    addl    $8, %esp
    ret
```

What is happening in this program?

Lab assignment 1 is available on the class web site. The programming is trivial; you are just doing inclusive (i.e., normal) OR rather than the XOR I just did. The point of the lab is to give you a chance to become familiar with your compiler and assembler.

Linkers, a.k.a. linkage editors, combine the output of the assembler for several different compilations. That is, the horizontal line of the diagram above should really be a collection of lines converging on the linker. The linker has another input, namely libraries, but to the linker the libraries look like other programs compiled and assembled. The two primary tasks of the linker are

The assembler processes one file at a time. Thus the symbol table produced while processing file A is independent of the symbols defined in file B, and conversely. Thus, it is likely that the same address will be used for different symbols in each program. The technical term is that the (local) addresses in the symbol table for file A are relative to file A; they must be relocated by the linker. This is accomplished by adding the starting address of file A (which in turn is the sum of the lengths of all the files processed previously in this run) to the relative address.
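The relocation step can be sketched in C as follows. The ObjFile record is a hypothetical stand-in for a real object file format (it keeps only a length and the file-relative addresses of its symbols), and the run is assumed to start at address 0.

```c
/* Hypothetical, highly simplified object file: its length plus the
   file-relative addresses of its symbols. */
#define MAX_LOCAL 8

typedef struct {
    int length;
    int nsyms;
    int rel_addr[MAX_LOCAL];
} ObjFile;

/* Relocate every file's symbols. File f starts at the sum of the lengths
   of files 0..f-1, and that start is added to each relative address. */
void relocate(const ObjFile files[], int nfiles, int abs_addr[][MAX_LOCAL]) {
    int start = 0;
    for (int f = 0; f < nfiles; f++) {
        for (int s = 0; s < files[f].nsyms; s++)
            abs_addr[f][s] = start + files[f].rel_addr[s];
        start += files[f].length;
    }
}
```

For two files of lengths 10 and 6 that each place a symbol at relative address 4, the symbols end up at absolute addresses 4 and 14, so the clash disappears.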

Assume procedure f, in file A, and procedure g, in file B, are compiled (and assembled) separately. Assume also that f invokes g. Since the compiler and assembler do not see g when processing f, it appears impossible for procedure f to know where in memory to find g.

The solution is for the compiler to indicate in the output of the file A compilation that the address of g is needed. This is called a use of g. When processing file B, the compiler outputs the (relative) address of g. This is called the definition of g. The assembler passes this information to the linker.

The simplest linker technique is to again make two passes. During the first pass, the linker records in its “external symbol table” (a table of external symbols, not a symbol table that is stored externally) all the definitions encountered. During the second pass, every use can be resolved by access to the table.
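Here is a minimal C sketch of this two-pass resolution, folding in the relocation described above. The Module layout (a length, definitions with file-relative addresses, and uses) is a hypothetical simplification; a real object format also records where in the code each use occurs so the linker can patch that spot.

```c
#include <string.h>

#define MAX_EXT 32

typedef struct { const char *name; int rel_addr; } Def;  /* "g is at offset 4 here" */
typedef struct { const char *name; } Use;                 /* "this file needs g" */

typedef struct {
    int length;
    int ndefs, nuses;
    Def defs[4];
    Use uses[4];
    int resolved[4];   /* filled in by pass 2: absolute address for each use */
} Module;

typedef struct { const char *name; int addr; } ExtSym;

/* Returns 0 on success, -1 if some use has no definition. */
int link_modules(Module m[], int nmod) {
    ExtSym table[MAX_EXT];   /* the external symbol table */
    int n = 0, start = 0;

    /* Pass 1: record every definition, relocated by its file's start. */
    for (int i = 0; i < nmod; i++) {
        for (int d = 0; d < m[i].ndefs && n < MAX_EXT; d++) {
            table[n].name = m[i].defs[d].name;
            table[n].addr = start + m[i].defs[d].rel_addr;
            n++;
        }
        start += m[i].length;
    }

    /* Pass 2: every use can now be resolved from the table. */
    int status = 0;
    for (int i = 0; i < nmod; i++)
        for (int u = 0; u < m[i].nuses; u++) {
            m[i].resolved[u] = -1;
            for (int k = 0; k < n; k++)
                if (strcmp(table[k].name, m[i].uses[u].name) == 0)
                    m[i].resolved[u] = table[k].addr;
            if (m[i].resolved[u] < 0) status = -1;   /* undefined external */
        }
    return status;
}
```

If file A (length 20) uses g and file B defines g at relative address 4, pass 2 resolves A's use of g to 20 + 4 = 24.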

I will be covering the linker in more detail tomorrow at 5pm in 2250 (OS Design).

After the linker has done its work, the resulting “executable file” can be loaded by the operating system into central memory. The details are OS dependent. With early single-user operating systems all programs would be loaded into a fixed address (say 0) and the loader simply copies the file to memory. Today it is much more complicated since (parts of) many programs reside in memory at the same time. Hence the compiler/assembler/linker cannot know the real location for an identifier. Indeed, this real location can change.

More information is given in any OS course (e.g., 2250, given Wednesdays at 5pm).

Conceptually, there are three phases of analysis with the output of one phase the input of the next. The phases are called lexical analysis or scanning, syntax analysis or parsing, and semantic analysis.

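The character stream input is grouped into tokens. For example, any one of the following

x3 := y + 3;

x3 := y + 3 ;

x3 :=y+ 3 ;

but not

x 3 := y + 3;

would be grouped into the six tokens x3 (an identifier), := (the assignment symbol), y (an identifier), + (the plus sign), 3 (a number), and ; (the semicolon).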

Note that non-significant blanks are normally removed during scanning. In C, most blanks are non-significant. Blanks inside strings are an exception.

Note that identifiers, numbers, and the various symbols and punctuation can be defined without recursion (compare with parsing below).
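A hand-written scanner for this tiny token set might look like the C sketch below. The id(...) and num(...) spellings in the output are our own convention for displaying tokens, and only letters, digits, :=, +, and ; are recognized. Note how the blank in x 3 yields two tokens instead of one.

```c
#include <ctype.h>
#include <stdio.h>
#include <string.h>

/* Scan src and write its tokens to out, separated by single blanks.
   Token set: identifiers  letter (letter | digit)*,  numbers  digit+,
   and the symbols := + ;  Returns 0 on success, -1 on an unknown
   character or if out is too small. */
int scan(const char *src, char *out, size_t outsz) {
    const char *p = src;
    size_t len = 0;
    out[0] = '\0';
    while (*p) {
        char tok[64];
        if (isspace((unsigned char)*p)) {        /* non-significant blank */
            p++;
            continue;
        }
        if (isalpha((unsigned char)*p)) {
            const char *start = p;
            while (isalnum((unsigned char)*p)) p++;
            snprintf(tok, sizeof tok, "id(%.*s)", (int)(p - start), start);
        } else if (isdigit((unsigned char)*p)) {
            const char *start = p;
            while (isdigit((unsigned char)*p)) p++;
            snprintf(tok, sizeof tok, "num(%.*s)", (int)(p - start), start);
        } else if (p[0] == ':' && p[1] == '=') {
            strcpy(tok, ":=");
            p += 2;
        } else if (*p == '+' || *p == ';') {
            tok[0] = *p++;
            tok[1] = '\0';
        } else {
            return -1;                           /* not in this token set */
        }
        len += (size_t)snprintf(out + len, outsz - len, "%s%s", len ? " " : "", tok);
        if (len >= outsz) return -1;            /* output truncated */
    }
    return 0;
}
```

Both x3 := y + 3; and x3 :=y+ 3 ; scan to id(x3) := id(y) + num(3) ;, while x 3 := y + 3; begins id(x) num(3).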

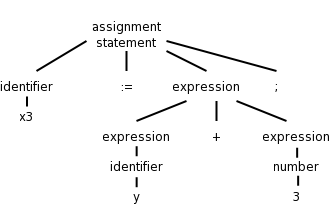

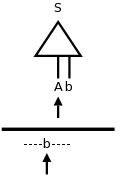

Parsing involves a further grouping in which tokens are grouped into grammatical phrases, which are normally represented in a parse tree. For example

x3 := y + 3; would be parsed into the tree on the right.

This parsing would result from a grammar containing rules such as

asst-stmt --> id := expr ;

expr --> number

| id

| expr + expr

Note the recursive definition of expression (expr). Note also the hierarchical decomposition in the figure on the right.

The division between scanning and parsing is somewhat arbitrary, but invariably if a recursive definition is involved, it is considered parsing, not scanning.

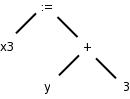

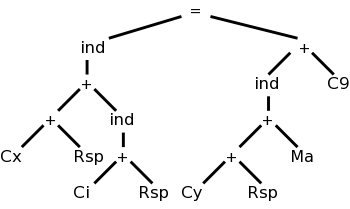

Often we utilize a simpler tree called the syntax tree with operators as interior nodes and operands as the children of the operator. The syntax tree on the right corresponds to the parse tree above it.

(Technical point.) The syntax tree represents an assignment expression, not an assignment statement. In C an assignment statement includes the trailing semicolon. That is, in C (unlike in Algol) the semicolon is a statement terminator, not a statement separator.

There is more to a front end than simply syntax. The compiler needs semantic information, e.g., the types (integer, real, pointer to array of integers, etc.) of the objects involved. This enables checking for semantic errors and inserting type conversions where necessary.

For example, if y was declared to be a real and x3 an integer, we need to insert (unary, i.e., one operand) conversion operators “inttoreal” and “realtoint” as shown on the right.
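A possible C sketch of how semantic analysis could insert these conversions, assuming only the two types integer and real and using a node layout of our own: types are computed bottom-up, an integer operand of a mixed + is wrapped in inttoreal, and the right side of an assignment is converted to the type of its target.

```c
#include <stdlib.h>
#include <string.h>

typedef enum { INT, REAL } Type;
typedef enum { ID, NUM, PLUS, ASSIGN, CONV } Kind;

typedef struct Node {
    Kind kind;
    Type type;              /* declared type for ID/NUM; computed for the rest */
    const char *text;       /* identifier, number, or conversion name */
    struct Node *left, *right;
} Node;

static Node *node(Kind k, Type t, const char *text, Node *l, Node *r) {
    Node *n = malloc(sizeof *n);   /* never freed in this small sketch */
    n->kind = k; n->type = t; n->text = text; n->left = l; n->right = r;
    return n;
}

/* Wrap n in a unary conversion node if its type is not the one wanted. */
static Node *coerce(Node *n, Type want) {
    if (n->type == want) return n;
    return node(CONV, want, want == REAL ? "inttoreal" : "realtoint", n, NULL);
}

/* Compute types bottom-up, inserting conversions where needed. */
Node *check(Node *n) {
    if (n->kind == PLUS) {
        n->left = check(n->left);
        n->right = check(n->right);
        n->type = (n->left->type == REAL || n->right->type == REAL) ? REAL : INT;
        n->left = coerce(n->left, n->type);
        n->right = coerce(n->right, n->type);
    } else if (n->kind == ASSIGN) {
        n->type = n->left->type;                      /* type of the target */
        n->right = coerce(check(n->right), n->type);
    }
    return n;
}

/* Append an infix rendering of the tree to out. */
void show(const Node *n, char *out) {
    switch (n->kind) {
    case ID:
    case NUM:
        strcat(out, n->text);
        break;
    case CONV:
        strcat(out, n->text);
        strcat(out, "(");
        show(n->left, out);
        strcat(out, ")");
        break;
    case PLUS:
        show(n->left, out);
        strcat(out, " + ");
        show(n->right, out);
        break;
    case ASSIGN:
        show(n->left, out);
        strcat(out, " := ");
        show(n->right, out);
        break;
    }
}
```

With x3 declared integer and y real, checking x3 := y + 3 yields x3 := realtoint(y + inttoreal(3)).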

The text also illustrates the use of hierarchical grouping for formatting languages (TeX and EQN are used as examples). For example, it shows how you can get subscripted superscripts (or superscripted subscripts).

[scanner]→[parser]→[sem anal]→[inter code gen]→[opt1]→[code gen]→[opt2]

We just examined the first three phases. Modern, high-performance compilers are dominated by their extensive optimization phases, which occur before, during, and after code generation. Note that optimization is most assuredly an inaccurate, albeit standard, terminology, as the resulting code is not optimal.

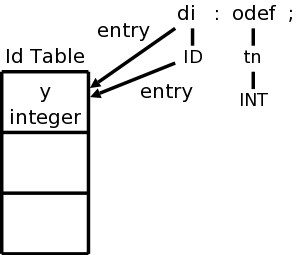

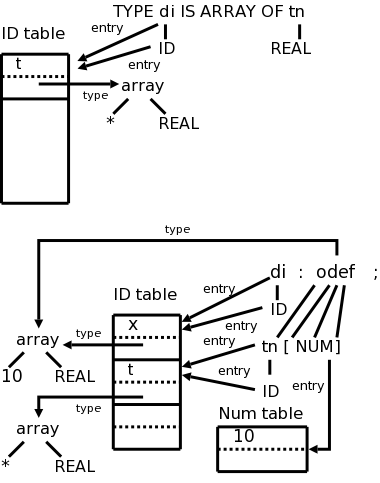

As we have seen when discussing assemblers and linkers, a symbol table is used to maintain information about symbols. The compiler uses a symbol table to maintain information across phases as well as within each phase. One key item stored with each symbol is the corresponding type, which is determined during semantic analysis and used (among other places) during code generation.

As you have doubtless noticed, not all programming efforts produce correct programs. If the input to the compiler is not a legal source language program, errors must be detected and reported. It is often much easier to detect that the program is not legal (e.g., the parser reaches a point where the next token cannot legally occur) than to deduce what is the actual error (which may have occurred earlier). It is even harder to reliably deduce what the intended correct program should be.

The scanner converts

x3 := y + 3;

into

id1 := id2 + 3 ;

where id is short for identifier.

This is processed by the parser and semantic analyzer to produce the two trees shown above (the syntax tree, and the same tree with type conversions inserted). On some systems, the tree would not contain the symbols themselves as shown in the figures. Instead the tree would contain leaves of the form id1, id2, and so on, which in turn would refer to the corresponding entries in the symbol table.

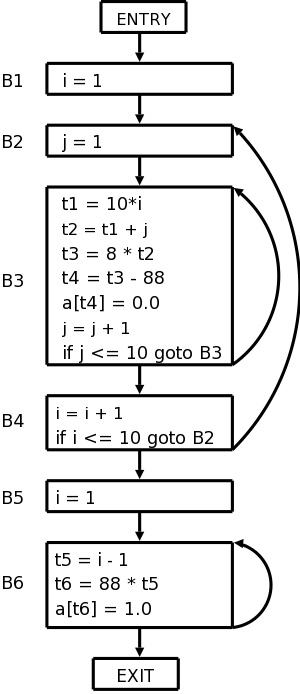

Many compilers first generate code for an “idealized machine”. For example, the intermediate code generated would assume that the target has an unlimited number of registers and that any register can be used for any operation. Another common assumption is that all machine operations take three operands, two source and one target.

With these assumptions one generates “three-address code” by walking the semantic tree. Our example statement would produce

```
temp1 := inttoreal(3)
temp2 := id2 + temp1
temp3 := realtoint(temp2)
id1 := temp3
```

We see that three-address code can include instructions with fewer than 3 operands.
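The tree walk that produces this code can be sketched in C. The node kinds and the Gen buffer are hypothetical; the walk allocates a fresh temporary for each interior node (leaves need no code) and emits that node's instruction after its operands' instructions. Run on the tree for id1 := realtoint(id2 + inttoreal(3)), it reproduces the four instructions above.

```c
#include <stdio.h>
#include <string.h>

typedef enum { LEAF, UNARY, BINOP, ASSIGN } Kind;

typedef struct Node {
    Kind kind;
    const char *text;          /* leaf name, conversion name, or operator */
    const struct Node *a, *b;  /* operands; b is unused for UNARY */
} Node;

typedef struct {
    char code[512];            /* the three-address code emitted so far */
    int ntemps;                /* temporaries allocated so far */
} Gen;

/* Emit code for n and store in place (16 bytes) the name holding its value. */
static void gen(Gen *g, const Node *n, char *place) {
    char x[16], y[16], line[64];
    switch (n->kind) {
    case LEAF:                               /* leaves need no code */
        snprintf(place, 16, "%s", n->text);
        return;
    case UNARY:
        gen(g, n->a, x);
        snprintf(place, 16, "temp%d", ++g->ntemps);
        snprintf(line, sizeof line, "%s := %s(%s)\n", place, n->text, x);
        break;
    case BINOP:
        gen(g, n->a, x);
        gen(g, n->b, y);
        snprintf(place, 16, "temp%d", ++g->ntemps);
        snprintf(line, sizeof line, "%s := %s %s %s\n", place, x, n->text, y);
        break;
    case ASSIGN:                             /* a is the target identifier */
        gen(g, n->b, y);
        snprintf(place, 16, "%s", n->a->text);
        snprintf(line, sizeof line, "%s := %s\n", place, y);
        break;
    default:
        return;
    }
    strncat(g->code, line, sizeof g->code - strlen(g->code) - 1);
}

/* Build the tree for id1 := realtoint(id2 + inttoreal(3)) and translate it. */
void three_address_example(char *out, size_t outsz) {
    Node three = {LEAF, "3", NULL, NULL};
    Node id2 = {LEAF, "id2", NULL, NULL};
    Node id1 = {LEAF, "id1", NULL, NULL};
    Node conv = {UNARY, "inttoreal", &three, NULL};
    Node sum = {BINOP, "+", &id2, &conv};
    Node toint = {UNARY, "realtoint", &sum, NULL};
    Node asst = {ASSIGN, ":=", &id1, &toint};
    Gen g = {"", 0};
    char place[16];
    gen(&g, &asst, place);
    snprintf(out, outsz, "%s", g.code);
}
```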

Sometimes three-address code is called quadruples because one can view the previous code sequence as

```
inttoreal  temp1  3      --
add        temp2  id2    temp1
realtoint  temp3  temp2  --
assign     id1    temp3  --
```

Each “quad” has the form

operation target source1 source2

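This is a very serious subject, one that we will not really do justice to in this introductory course. Some optimizations are fairly easy to see. For example, the conversion of the constant 3 can be done once at compile time (giving 3.0), and the temporaries temp1 and temp3 can be eliminated, reducing our example to the following two quads.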

add temp2 id2 3.0

realtoint id1 temp2

Modern processors have only a limited number of registers. Although some processors, such as the x86, can perform operations directly on memory locations, we will for now assume only register operations. Some processors (e.g., the MIPS architecture) use three-address instructions. However, some processors permit only two addresses; the result overwrites the second source. With these assumptions, code something like the following would be produced for our example, after first assigning memory locations to id1 and id2.

```
MOVE  id2, R1
ADD   #3.0, R1
RTOI  R1, R2
MOVE  R2, id1
```

I found it more logical to treat these topics (preprocessors, assemblers, linkers, and loaders) earlier.

Logically each phase is viewed as a separate program that reads input and produces output for the next phase, i.e., a pipeline. In practice some phases are combined.

We discussed this previously.

Aho, Sethi, Ullman assert only limited success in producing several compilers for a single machine using a common back end. That is a rather pessimistic view and I wonder if the 2nd edition will change in this area.

The term pass is used to indicate that the entire input is read during this activity. So two passes means that the input is read twice. We have discussed two-pass approaches for both assemblers and linkers. If we implement each phase separately and use multiple passes for some of them, the compiler will perform a large number of I/O operations, an expensive undertaking.

As a result techniques have been developed to reduce the number of passes. We will see in the next chapter how to combine the scanner, parser, and semantic analyzer into one phase. Consider the parser. When it needs another token, rather than reading the input file (presumably produced by the scanner), the parser calls the scanner instead. At selected points during the production of the syntax tree, the parser calls the “code generator”, which performs semantic analysis as well as generating a portion of the intermediate code.

One problem with combining phases, or with implementing a single phase in one pass, is that it appears that an internal form of the entire program will need to be stored in memory. This problem arises because the downstream phase may need early in its execution information that the upstream phase produces only late in its execution. This motivated the use of symbol tables and a two pass approach. However, a clever one-pass approach is often possible.

Consider the assembler (or linker). The good case is when the definition precedes all uses so that the symbol table contains the value of the symbol prior to that value being needed. Now consider the harder case of one or more uses preceding the definition. When a not yet defined symbol is first used, an entry is placed in the symbol table, pointing to this use and indicating that the definition has not yet appeared. Further uses of the same symbol attach their addresses to a linked list of “undefined uses” of this symbol. When the definition is finally seen, the value is placed in the symbol table, and the linked list is traversed inserting the value in all previously encountered uses. Subsequent uses of the symbol will find its definition in the table.
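A C sketch of backpatching, assuming each assembled instruction has a single operand field and labels occupy no space. A common trick, used here, threads the linked list of undefined uses through those operand fields themselves: each unresolved field holds the location of the previous unresolved use, and -1 ends the chain. The names Assembler, use_symbol, and define_symbol are hypothetical, each symbol is assumed to be defined once, and there are no overflow checks.

```c
#include <string.h>

#define MAX_SYMS 32
#define MAX_CODE 64
#define END_OF_CHAIN (-1)

typedef struct {
    const char *name;
    int defined;   /* 1 once the definition has been seen */
    int value;     /* the address if defined, else the most recent undefined use */
} Sym;

typedef struct {
    Sym syms[MAX_SYMS];
    int nsyms;
    int code[MAX_CODE];   /* operand field of each assembled instruction */
    int pc;               /* location of the next instruction */
} Assembler;

/* Find name, entering it as undefined (with an empty chain) on first sight. */
static Sym *find(Assembler *a, const char *name) {
    for (int i = 0; i < a->nsyms; i++)
        if (strcmp(a->syms[i].name, name) == 0) return &a->syms[i];
    Sym *s = &a->syms[a->nsyms++];
    s->name = name;
    s->defined = 0;
    s->value = END_OF_CHAIN;
    return s;
}

/* Assemble one instruction whose operand is the address of name. */
void use_symbol(Assembler *a, const char *name) {
    Sym *s = find(a, name);
    if (s->defined) {
        a->code[a->pc++] = s->value;   /* definition already seen */
    } else {
        a->code[a->pc] = s->value;     /* link to the previous undefined use */
        s->value = a->pc++;            /* this use becomes the head of the chain */
    }
}

/* name labels the current location: backpatch every earlier use. */
void define_symbol(Assembler *a, const char *name) {
    Sym *s = find(a, name);
    for (int u = s->value; u != END_OF_CHAIN; ) {
        int next = a->code[u];
        a->code[u] = a->pc;
        u = next;
    }
    s->defined = 1;
    s->value = a->pc;
}
```

For example, uses of L at locations 0 and 2 (with a use of M at 1 in between) are both patched to 3 when L is defined at location 3; a later use of L finds 3 directly in the table.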

This technique is called backpatching.

Originally, compilers were written “from scratch”, but now the situation is quite different. A number of tools are available to ease the burden.

We will study tools that generate scanners and parsers. This will involve us in some theory, regular expressions for scanners and various grammars for parsers. These techniques are fairly successful. One drawback can be that they do not execute as fast as “hand-crafted” scanners and parsers.

We will also see tools for syntax-directed translation and automatic code generation. The automation in these cases is not as complete.

Finally, there is the large area of optimization. This is not automated; however, a basic component of optimization is “data-flow analysis” (how are values transmitted between parts of a program) and there are tools to help with this task.

Homework: Read chapter 2.

Implement a very simple compiler.

The source language is infix expressions consisting of digits, +, and -; the target is postfix expressions with the same components. The compiler will convert 7+4-5 to 74+5-.

Actually, our simple compiler will handle a few other operators as well.

We will tokenize the input (i.e., write a scanner), model the syntax of the source, and let this syntax direct the translation.

This will be “done right” in the next two chapters.

A context-free grammar (CFG) consists of

Example:

Terminals: 0 1 2 3 4 5 6 7 8 9 + -

Nonterminals: list digit

Productions: list → list + digit

list → list - digit

list → digit

digit → 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9

Start symbol: list

Watch how we can generate the input 7+4-5 starting with the start symbol, applying productions, and stopping when no productions are possible (we have only terminals).

list → list - digit

→ list - 5

→ list + digit - 5

→ list + 4 - 5

→ digit + 4 - 5

→ 7 + 4 - 5

Homework: 2.1a, 2.1c, 2.2a-c (don't worry about “justifying” your answers).

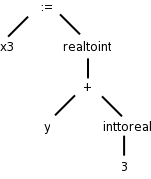

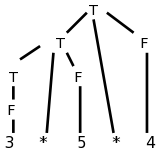

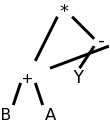

The compiler front end runs the above procedure in reverse! It starts with the string 7+4-5 and gets back to list (the “start” symbol). Reaching the start symbol means that the string is in the language generated by the grammar. While running the procedure in reverse, the front end builds up the parse tree on the right.

You can read off the productions from the tree. For any internal (i.e., non-leaf) tree node, its children give the right hand side (RHS) of a production having the node itself as the LHS.

The leaves of the tree, read from left to right, form the yield of the tree. We call the tree a derivation of its yield from its root. The tree on the right is a derivation of 7+4-5 from list.

Homework: 2.1b

An ambiguous grammar is one in which there are two or more parse trees yielding the same final string. We wish to avoid such grammars.

The grammar above is not ambiguous. For example 1+2+3 can be parsed only one way; the arithmetic must be done left to right. Note that I am not giving a rule of arithmetic, just of this grammar. If you reduced 2+3 to list you would be stuck since it is impossible to generate 1+list.

Homework: 2.3 (applied only to parts a, b, and c of 2.2)

Our grammar gives left associativity. That is, if you traverse the tree in postorder and perform the indicated arithmetic, you will evaluate the string left to right. Thus 8-8-8 would evaluate to -8. If you wished to generate right associativity (normally exponentiation is right associative, so `2**3**2` gives 512, not 64), you would change the first two productions to

list → digit + list and list → digit - list
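The difference between the two groupings can be checked with a small C sketch that combines a sequence of operands either left to right or right to left. It models only the grouping the two grammars produce, not parsing itself; fold_left, fold_right, sub, and pw are our own helper names.

```c
/* Two binary operators to experiment with. */
long sub(long a, long b) { return a - b; }
long pw(long a, long b) { long r = 1; while (b-- > 0) r *= a; return r; }

/* Left associative: ((v0 op v1) op v2) ..., the grouping of list -> list - digit. */
long fold_left(const long v[], int n, long (*op)(long, long)) {
    long acc = v[0];
    for (int i = 1; i < n; i++) acc = op(acc, v[i]);
    return acc;
}

/* Right associative: v0 op (v1 op (v2 ...)), the grouping of list -> digit - list. */
long fold_right(const long v[], int n, long (*op)(long, long)) {
    long acc = v[n - 1];
    for (int i = n - 2; i >= 0; i--) acc = op(v[i], acc);
    return acc;
}
```

Folding 8, 8, 8 with subtraction gives -8 grouped to the left and 8 grouped to the right; folding 2, 3, 2 with exponentiation gives 64 grouped to the left and 512 grouped to the right.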

We normally want * to have higher precedence than +. We do this by using an additional nonterminal to indicate the items that have been multiplied. The example below gives the four basic arithmetic operations their normal precedence unless overridden by parentheses. Redundant parentheses are permitted. Equal precedence operations are performed left to right.

expr → expr + term | expr - term | term

term → term * factor | term / factor | factor

factor → digit | ( expr )

digit → 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9

We use | to indicate that a nonterminal has multiple possible right hand sides. So

A → B | C

is simply shorthand for

A → B

A → C

Do the examples 1+2/3-4\*5 and (1+2)/3-4\*5 on the board.

Note how the precedence is enforced by the grammar; slick!
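One way to see the precedence at work is a small recursive-descent evaluator in C with one function per nonterminal. Because expr and term are left recursive, a literal transcription would recurse forever, so this sketch (an assumption ahead of the later chapters) replaces each left-recursive rule by a loop, which keeps the left-to-right grouping. It assumes well-formed input of single digits with no blanks and uses integer division.

```c
/* Input cursor; the input is assumed to be a well-formed expression over
   single digits, + - * / and parentheses, with no blanks. */
static const char *cur;

static int expr(void);

/* factor -> digit | ( expr ) */
static int factor(void) {
    if (*cur == '(') {
        cur++;                  /* skip '(' */
        int v = expr();
        cur++;                  /* skip ')' */
        return v;
    }
    return *cur++ - '0';
}

/* term -> term * factor | term / factor | factor, left recursion as a loop */
static int term(void) {
    int v = factor();
    while (*cur == '*' || *cur == '/') {
        char op = *cur++;
        int rhs = factor();
        v = (op == '*') ? v * rhs : v / rhs;
    }
    return v;
}

/* expr -> expr + term | expr - term | term, likewise */
static int expr(void) {
    int v = term();
    while (*cur == '+' || *cur == '-') {
        char op = *cur++;
        int rhs = term();
        v = (op == '+') ? v + rhs : v - rhs;
    }
    return v;
}

int evaluate(const char *s) {
    cur = s;
    return expr();
}
```

For instance, `evaluate("1+2*3")` gives 7 while `evaluate("(1+2)*3")` gives 9: the multiplication is grouped first because it sits lower in the grammar.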

Keywords are very helpful for distinguishing statements from one another.

stmt → id := expr

| if expr then stmt

| if expr then stmt else stmt

| while expr do stmt

| begin opt-stmts end

opt-stmts → stmt-list | ε

stmt-list → stmt-list ; stmt | stmt

Remark:

Homework: 2.16a, 2.16b

Syntax-directed translation means specifying the translation of a source language construct in terms of attributes of its syntactic components.

Postfix notation places each operator after its operands, so parentheses are not needed. The normal notation we used is called infix. If you start with an infix expression, the following algorithm will give you the equivalent postfix expression.

One question is, given, say, 1+2-3, what are E, F, and op? Does E=1+2, F=3, and op=-? Or does E=1, F=2-3, and op=+? This is the issue of associativity (and, more generally, precedence) discussed above. To simplify the present discussion we will start with fully parenthesized infix expressions.
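For fully parenthesized input the recursion is straightforward: a digit is its own postfix form, and (E op F) becomes the postfix form of E, then that of F, then op. A minimal C sketch, assuming single-digit operands, no blanks, and well-formed input (the function names are our own):

```c
#include <string.h>

/* Translate the fully parenthesized expression at *s to postfix, appending
   to out at *len and advancing *s past the characters consumed. */
static void to_postfix(const char **s, char *out, size_t *len) {
    if (**s == '(') {                  /* ( E op F ) */
        (*s)++;                        /* skip '(' */
        to_postfix(s, out, len);       /* postfix of E */
        char op = *(*s)++;             /* remember op */
        to_postfix(s, out, len);       /* postfix of F */
        (*s)++;                        /* skip ')' */
        out[(*len)++] = op;            /* op comes last */
    } else {
        out[(*len)++] = *(*s)++;       /* a digit is its own postfix form */
    }
    out[*len] = '\0';
}

/* out needs room for strlen(in) + 1 characters; postfix is never longer. */
void infix_to_postfix(const char *in, char *out) {
    size_t len = 0;
    to_postfix(&in, out, &len);
}
```

For example, `((1+(2/3))-(4*5))` becomes `123/+45*-` and `(((1+2)/3)-(4*5))` becomes `12+3/45*-`.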

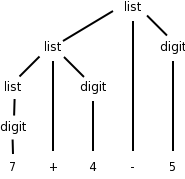

Example: 1+2/3-4*5

Example: Now do (1+2)/3-4*5

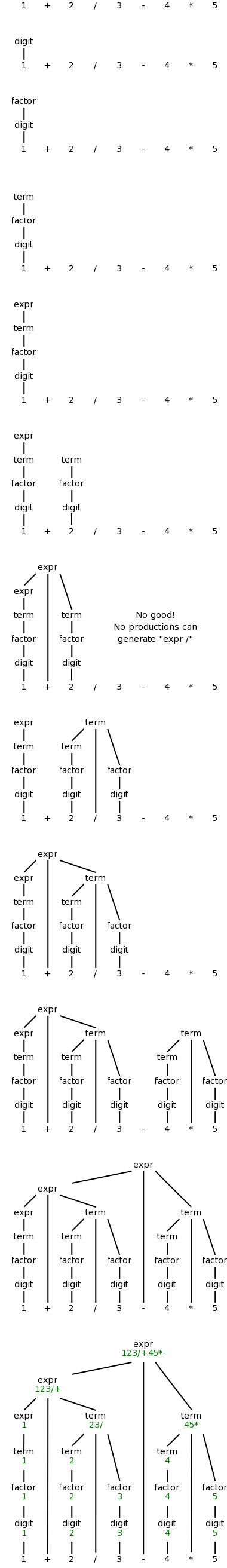

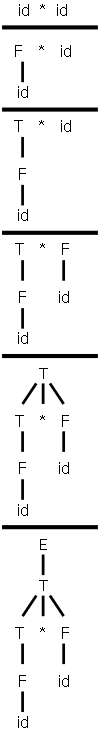

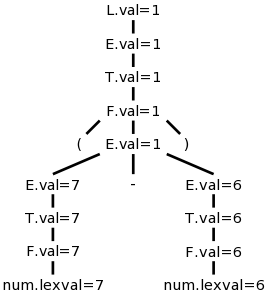

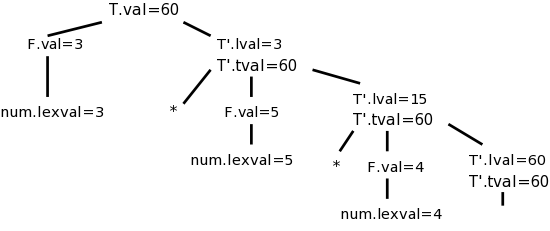

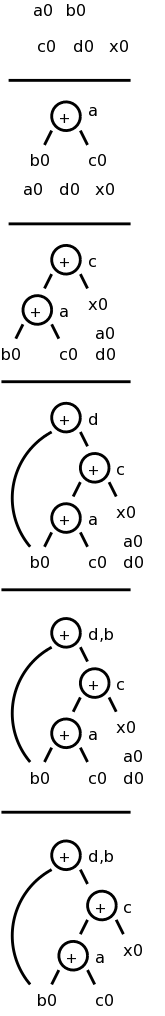

We want to “decorate” the parse trees we construct with “annotations” that give the value of certain attributes of the corresponding node of the tree. We will do the example of translating infix to postfix with 1+2/3-4*5. We use the following grammar, which follows the normal arithmetic terminology where one multiplies and divides factors to obtain terms, which in turn are added and subtracted to form expressions.

expr → expr + term | expr - term | term

term → term * factor | term / factor | factor

factor → digit | ( expr )

digit → 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9

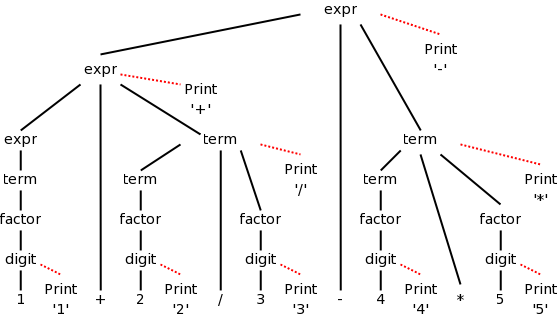

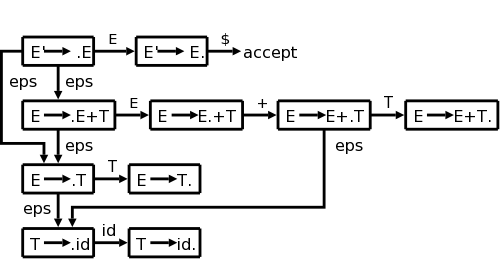

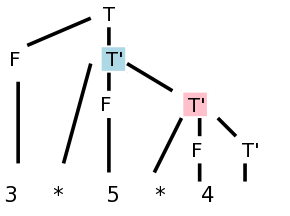

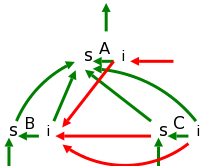

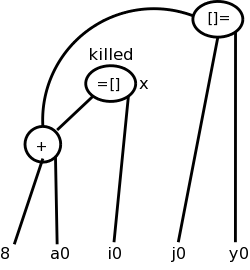

This grammar supports parentheses, although our example does not use them. On the right is a “movie” in which the parse tree is built from this example.

The attribute we will associate with the nodes is the text to be used to print the postfix form of the string in the leaves below the node. In particular the value of this attribute at the root is the postfix form of the entire source.

The book uses a simpler grammar (no *, /, or parentheses) for a simpler example. You might find that one easier. The book also gives a grammar describing commands that move a robot north, east, south, or west by one unit at a time. The attributes associated with the nodes are the current position (for some nodes, including the root) and the change in position caused by the current command (for other nodes).

Definition: A syntax-directed definition is a grammar together with a set of “semantic rules” for computing the attribute values. A parse tree augmented with the attribute values at each node is called an annotated parse tree.

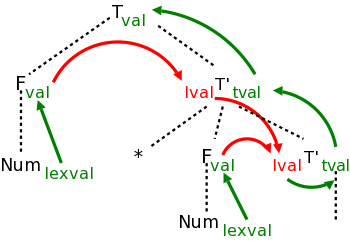

For the bottom-up approach I will illustrate now, we annotate a node after having annotated its children. Thus the attribute values at a node can depend on the children of the node but not the parent of the node. We call these synthesized attributes, since they are formed by synthesizing the attributes of the children.

In chapter 5, when we study top-down annotations as well, we will introduce “inherited” attributes that are passed down from parents to children.

We specify how to synthesize attributes by giving the semantic rules together with the grammar. That is, we give the syntax-directed definition.

| Production | Semantic Rule |
|---|---|
| expr → expr1 + term | expr.t := expr1.t \|\| term.t \|\| '+' |
| expr → expr1 - term | expr.t := expr1.t \|\| term.t \|\| '-' |
| expr → term | expr.t := term.t |
| term → term1 * factor | term.t := term1.t \|\| factor.t \|\| '*' |
| term → term1 / factor | term.t := term1.t \|\| factor.t \|\| '/' |
| term → factor | term.t := factor.t |
| factor → digit | factor.t := digit.t |
| factor → ( expr ) | factor.t := expr.t |
| digit → 0 | digit.t := '0' |
| digit → 1 | digit.t := '1' |
| digit → 2 | digit.t := '2' |
| digit → 3 | digit.t := '3' |
| digit → 4 | digit.t := '4' |
| digit → 5 | digit.t := '5' |
| digit → 6 | digit.t := '6' |
| digit → 7 | digit.t := '7' |
| digit → 8 | digit.t := '8' |
| digit → 9 | digit.t := '9' |

We apply these rules bottom-up (starting with the geographically lowest productions, i.e., the lowest lines on the page) and get the annotated graph shown on the right. The annotations are drawn in green.

Homework: Draw the annotated graph for (1+2)/3-4*5.

As mentioned, in this chapter we are annotating bottom-up. This corresponds to doing a depth-first traversal of the (unannotated) parse tree to produce the annotations. It is often called a postorder traversal because a parent is visited after (i.e., post) its children are visited.

The bottom-up annotation scheme generates the final result as the annotation of the root. In our infix → postfix example we get the result desired by printing the root annotation. Now we consider another technique that produces its results incrementally.

Instead of giving semantic rules for each production (and thereby generating annotations) we can embed program fragments called semantic actions within the productions themselves.

In diagrams the semantic action is connected to the node with a distinctive, often dotted, line. The placement of the actions determines the order in which they are performed. Specifically, one executes the actions in the order they are encountered in a postorder traversal of the tree.

Definition: A syntax-directed translation scheme is a context-free grammar with embedded semantic actions.

For our infix → postfix translator, the parent either just passes on the attribute of its (only) child or concatenates them left to right and adds something at the end. The equivalent semantic actions would be either to print the new item or print nothing.

Here are the semantic actions corresponding to a few of the rows of the table above. Note that the actions are enclosed in {}.

expr → expr + term { print('+') }

expr → expr - term { print('-') }

term → term / factor { print('/') }

term → factor { null }

digit → 3 { print('3') }

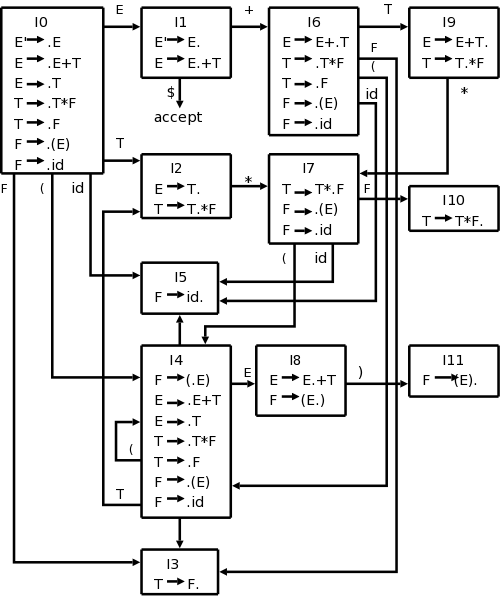

The diagram for 1+2/3-4*5 with attached semantic actions is shown on the right.

Given an input, e.g., our favorite 1+2/3-4*5, we just do a depth-first (postorder) traversal of the corresponding diagram and perform the semantic actions as they occur. When these actions are print statements as above, we are said to be emitting the translation.

Do a depth-first traversal of the diagram on the board, performing the semantic actions as they occur, and confirm that the translation emitted is in fact 123/+45\*-, the postfix version of 1+2/3-4\*5.
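The same translation can be emitted while parsing, without first building the diagram. In this C sketch the semantic actions become calls to emit, our stand-in for print that appends to a buffer so the result can be checked. As in any recursive-descent version of this left-recursive grammar, each left-recursive rule is turned into a loop, and the action runs after the right operand has been translated. Input is assumed to be well-formed single digits without blanks.

```c
#include <string.h>

static const char *src_pos;   /* input cursor */
static char *dst_pos;         /* end of the output emitted so far */

/* Our stand-in for print(): append one character to the output. */
static void emit(char c) {
    *dst_pos++ = c;
    *dst_pos = '\0';
}

static void expr(void);

/* factor -> digit { print(digit) } | ( expr ) */
static void factor(void) {
    if (*src_pos == '(') {
        src_pos++;            /* '(' : no action */
        expr();
        src_pos++;            /* ')' : no action */
    } else {
        emit(*src_pos++);
    }
}

/* term -> term * factor { print('*') } | term / factor { print('/') } | factor */
static void term(void) {
    factor();
    while (*src_pos == '*' || *src_pos == '/') {
        char op = *src_pos++;
        factor();
        emit(op);             /* action placed after the right operand */
    }
}

/* expr -> expr + term { print('+') } | expr - term { print('-') } | term */
static void expr(void) {
    term();
    while (*src_pos == '+' || *src_pos == '-') {
        char op = *src_pos++;
        term();
        emit(op);
    }
}

/* dst must have room for strlen(src) + 1 characters. */
void translate(const char *src, char *dst) {
    src_pos = src;
    dst_pos = dst;
    *dst_pos = '\0';
    expr();
}
```

Calling `translate("1+2/3-4*5", buf)` leaves `123/+45*-` in buf, and `(1+2)/3-4*5` translates to `12+3/45*-`.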

Homework: Produce the corresponding diagram for (1+2)/3-4*5.

When we produced postfix, all the prints came at the end (so that the children were already “printed”). The { actions } do not need to come at the end. We illustrate this by producing infix arithmetic (ordinary) notation from a prefix source.

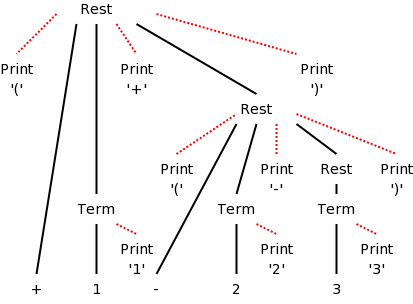

In prefix notation the operator comes first so +1-23 evaluates to zero. Consider the following grammar. It translates prefix to infix for the simple language consisting of addition and subtraction of digits between 1 and 3 without parentheses (prefix notation and postfix notation do not use parentheses). The resulting parse tree for +1-23 is shown on the right. Note that the output language (infix notation) has parentheses.

rest → + term rest | - term rest | term

term → 1 | 2 | 3

The table below shows the semantic actions or rules needed for our translator.

| Production with Semantic Action | Semantic Rule |
|---|---|
| rest → { print('(') } + term { print('+') } rest { print(')') } | rest.t := '(' \|\| term.t \|\| '+' \|\| rest.t \|\| ')' |
| rest → { print('(') } - term { print('-') } rest { print(')') } | rest.t := '(' \|\| term.t \|\| '-' \|\| rest.t \|\| ')' |
| rest → term | rest.t := term.t |
| term → 1 { print('1') } | term.t := '1' |
| term → 2 { print('2') } | term.t := '2' |
| term → 3 { print('3') } | term.t := '3' |
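A recursive-descent sketch of this scheme in C may help show that the actions really can sit before, between, and after the grammar symbols of a RHS. It assumes the input is a string of digits and operators with no blanks, and "print" appends to a buffer; `prefix_to_infix` and the helper names are hypothetical.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

static const char *in;   /* *in is the lookahead character */
static char *out;        /* output buffer ("print" appends here) */
static size_t outlen;
static int ok;           /* cleared on a syntax error */

static void print(char c) { out[outlen++] = c; out[outlen] = '\0'; }

/* term -> 1 { print('1') } | 2 { print('2') } | 3 { print('3') } */
static void term(void) {
    if (*in == '1' || *in == '2' || *in == '3') {
        print(*in);
        in++;
    } else {
        ok = 0;
    }
}

/* rest -> { print('(') } op term { print(op) } rest { print(')') } | term */
static void rest(void) {
    if (!ok)
        return;
    if (*in == '+' || *in == '-') {
        char op = *in;
        print('(');      /* action before the operator is matched */
        in++;            /* match + or - */
        term();
        print(op);       /* action between term and rest */
        rest();
        print(')');      /* action after rest */
    } else {
        term();          /* rest -> term: no action of its own */
    }
}

/* Returns 1 on success; buf needs room for 3 * strlen(src) + 1 characters. */
int prefix_to_infix(const char *src, char *buf) {
    assert(src != NULL && buf != NULL);
    in = src; out = buf; outlen = 0; ok = 1;
    buf[0] = '\0';
    rest();
    return ok && *in == '\0';
}
```

For example, prefix_to_infix("+1-23", buf) should produce (1+(2-3)) and return 1.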

Homework: 2.8.

If the semantic rules of a syntax-directed definition all have the property that the new annotation for the left hand side (LHS) of the production is just the concatenation of the annotations for the nonterminals on the RHS in the same order as the nonterminals appear in the production, we call the syntax-directed definition simple. It is still called simple if new strings are interleaved with the original annotations. So the example just done is a simple syntax-directed definition.

Remark: We shall see later that, in many cases, a simple syntax-directed definition permits one to execute the semantic actions while parsing and not construct the parse tree at all.

Remarks:

Objective: Given a string of tokens and a grammar, produce a parse tree yielding that string (or at least determine if such a tree exists).

We will learn both top-down (begin with the start symbol, i.e. the root of the tree) and bottom up (begin with the leaves) techniques.

In the remainder of this chapter we just do top down, which is easier to implement by hand, but is less general. Chapter 4 covers both approaches.

Tools (so called “parser generators”) often use bottom-up techniques.

In this section we assume that the lexical analyzer has already scanned the source input and converted it into a sequence of tokens.

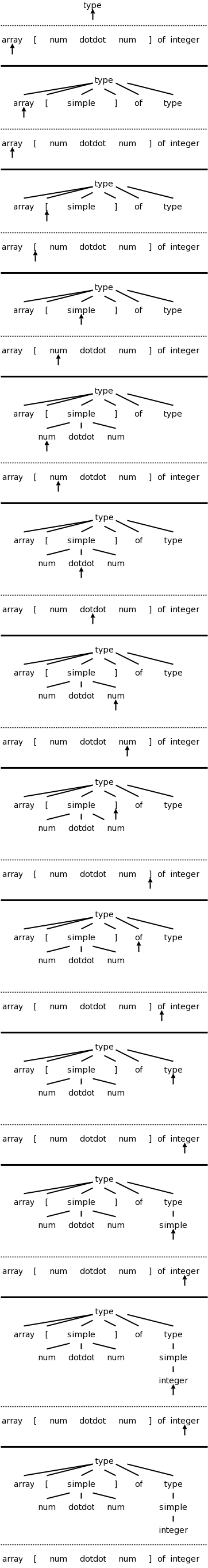

Consider the following simple language, which derives a subset of the types found in the (now somewhat dated) programming language Pascal. I am using the same example as the book so that the compiler code they give will be applicable.

We have two nonterminals, type, which is the start symbol, and simple, which represents the “simple” types.

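There are 10 terminals, which are tokens produced by the lexer and correspond closely with constructs in Pascal itself. I do not assume you know Pascal. (The authors appear to assume the reader knows Pascal, but do not assume knowledge of C.) Specifically, we have integer and char; id for an identifier; array, [, ], and of for array types; ↑ for pointer types; and num and dotdot for subranges such as 1..10.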

The productions are

type → simple

type → ↑ id

type → array [ simple ] of type

simple → integer

simple → char

simple → num dotdot num

Parsing is easy in principle and for certain grammars (e.g., the two above) it actually is easy. The two fundamental steps (we start at the root since this is top-down parsing) are

When programmed this becomes a procedure for each nonterminal that chooses a production for the node and calls procedures for each nonterminal in the RHS. Thus it is recursive in nature and descends the parse tree. We call these parsers “recursive descent”.

The big problem is what to do if the current node is the LHS of more than one production. The small problem is what do we mean by the “next” node needing a subtree.

The easiest solution to the big problem would be to assume that there is only one production having a given nonterminal as LHS. There are two possibilities:

expr → term + term - 9

term → factor / factor

factor → digit

digit → 7

But this is very boring. The only possible sentence is 7/7+7/7-9.

expr → term + term

term → factor / factor

factor → ( expr )

This is even worse; there are no (finite) sentences, only an infinite sentence beginning (((((((((.

So this won't work. We need to have multiple productions with the same LHS.

How about trying them all? We could do this! If we get stuck where the current tree cannot match the input we are trying to parse, we would backtrack.

Instead, we will look ahead one token in the input and only choose productions that can yield a result starting with this token. Furthermore, we will (in this section) restrict ourselves to predictive parsing, in which there is only one production that can yield a result starting with a given token. This solution to the big problem also solves the small problem. Since we are trying to match the next token in the input, we must choose the leftmost (nonterminal) node to give children to.

Let's return to the Pascal array type grammar and consider the three productions having type as LHS. Even when I write the short form

type → simple | ↑ id | array [ simple ] of type

I view it as three productions.

For each production P we wish to consider the set FIRST(P) consisting of those tokens that can appear as the first symbol of a string derived from the RHS of P. We actually define FIRST(RHS) rather than FIRST(P), but I often say “first set of the production” when I should really say “first set of the RHS of the production”.

Definition: Let r be the RHS of a production P. FIRST(r) is the set of tokens that can appear as the first symbol in a string derived from r.

To use predictive parsing, we make the following

Assumption: Let P and Q be two productions with the same LHS. Then FIRST(P) and FIRST(Q) are disjoint. Thus, if we know both the LHS and the token that must be first, there is (at most) one production we can apply. BINGO!

This table gives the FIRST sets for our Pascal array type example.

| Production | FIRST |
|---|---|
| type → simple | { integer, char, num } |
| type → ↑ id | { ↑ } |
| type → array [ simple ] of type | { array } |
| simple → integer | { integer } |
| simple → char | { char } |
| simple → num dotdot num | { num } |

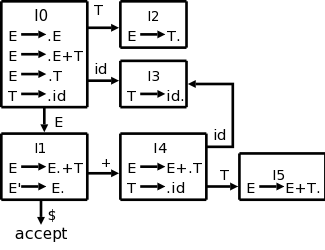

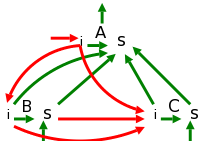

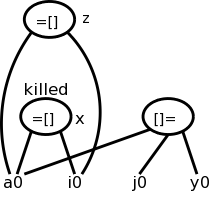

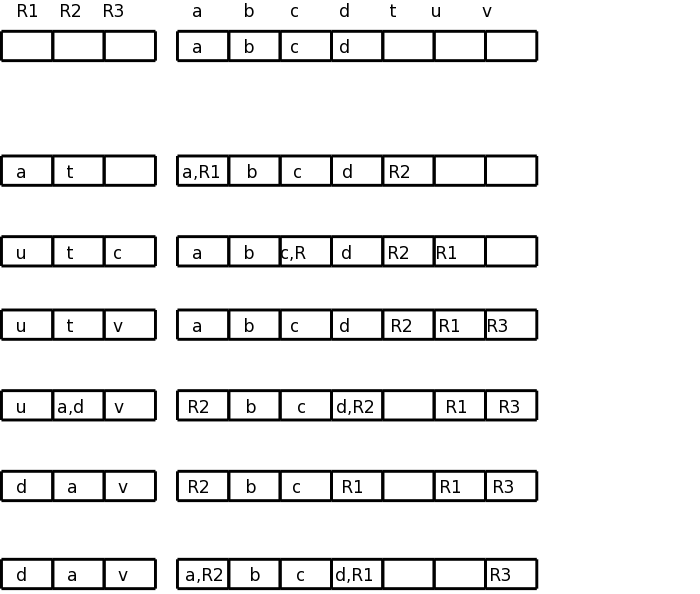

The three productions with type as LHS have disjoint FIRST sets. Similarly the three productions with simple as LHS have disjoint FIRST sets. Thus predictive parsing can be used. We process the input left to right and call the current token lookahead since it is how far we are looking ahead in the input to determine the production to use. The movie on the right shows the process in action.
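To make the lookahead idea concrete, here is a minimal C sketch of a predictive parser for this grammar, with one procedure per nonterminal that picks the production whose FIRST set contains lookahead. It works on an already-tokenized input, as assumed in this section; the token names (e.g., UPARROW for ↑, LBRACK for [) and `parse_type` are hypothetical, and errors are only reported through the return value.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical token codes for the ten terminals, plus END for end of input. */
enum token { INTEGER, CHAR, NUM, DOTDOT, ARRAY, LBRACK, RBRACK, OF, UPARROW, ID, END };

static const enum token *toks;  /* next unread position; *toks == lookahead */
static enum token lookahead;
static int ok;                  /* cleared on a syntax error */

/* Consume token t if it is the lookahead; otherwise record an error. */
static void match(enum token t) {
    if (ok && lookahead == t)
        lookahead = *++toks;
    else
        ok = 0;
}

static void simple(void) {
    if (!ok)
        return;
    if (lookahead == INTEGER)
        match(INTEGER);                         /* FIRST = { integer } */
    else if (lookahead == CHAR)
        match(CHAR);                            /* FIRST = { char } */
    else if (lookahead == NUM) {                /* FIRST = { num } */
        match(NUM); match(DOTDOT); match(NUM);
    } else
        ok = 0;                                 /* lookahead is in no FIRST set */
}

static void type(void) {
    if (!ok)
        return;
    if (lookahead == INTEGER || lookahead == CHAR || lookahead == NUM)
        simple();                               /* type -> simple */
    else if (lookahead == UPARROW) {            /* type -> ^ id */
        match(UPARROW); match(ID);
    } else if (lookahead == ARRAY) {            /* type -> array [ simple ] of type */
        match(ARRAY); match(LBRACK); simple(); match(RBRACK); match(OF); type();
    } else
        ok = 0;
}

/* Returns 1 if input (terminated by END) derives from type. */
int parse_type(const enum token *input) {
    assert(input != NULL);
    toks = input;
    lookahead = *toks;
    ok = 1;
    type();
    return ok && lookahead == END;
}
```

For instance, the token sequence for array [ num dotdot num ] of integer is accepted, while array [ integer of char is rejected.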

Homework:

A. Construct the corresponding table for

rest → + term rest | - term rest | term

term → 1 | 2 | 3

B. Can predictive parsing be used?

End of Homework.

Not all grammars are as friendly as the last example. The first complication is when ε occurs in a RHS. If this happens or if the RHS can generate ε, then ε is included in FIRST.

But ε would always match the current input position!

The rule is that if lookahead is not in FIRST of any production with the desired LHS, we use the (unique!) production (with that LHS) that has ε as RHS.

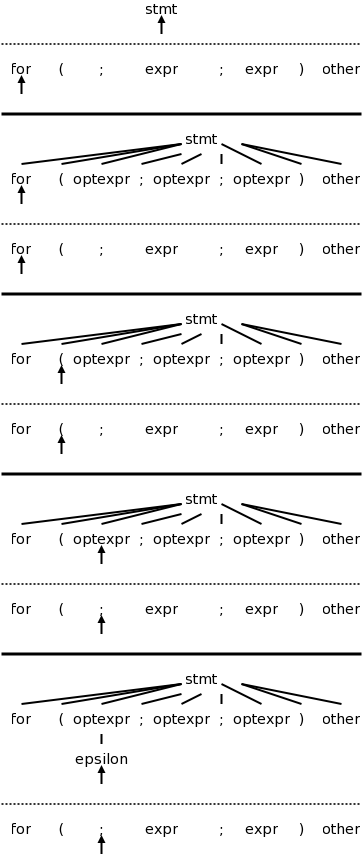

The second edition, which I just obtained, uses a C example instead of a Pascal one. The productions are

stmt → expr ;

| if ( expr ) stmt

| for ( optexpr ; optexpr ; optexpr ) stmt

| other

optexpr → expr | ε

For completeness, on the right is the beginning of a movie for the C example. Note the use of the ε-production at the end, since no other entry in FIRST will match the ; token.

Predictive parsers are fairly easy to construct as we will now see. Since they are recursive descent parsers we go top-down with one procedure for each nonterminal. Do remember that we must have disjoint FIRST sets for all the productions having a given nonterminal as LHS.

The book has code at this point. We will see code later in this chapter.

Another complication. Consider

expr → expr + term

expr → term

For the first production the RHS begins with the LHS. This is called left recursion. If a recursive descent parser were to pick this production, the next node to consider would again be expr and the lookahead would not have changed. An infinite loop occurs.

Consider instead

expr → term rest

rest → + term rest

rest → ε

Both pairs of productions generate the same possible token strings, namely

term + term + ... + term

The second pair is called right recursive since the RHS ends with (has on the right) the LHS.

If you draw the parse trees generated, you will see that, for left recursive productions, the tree grows to the left; whereas, for right recursive, it grows to the right.

Note also that, according to the trees generated by the first pair, the additions are performed left to right; whereas, for the second pair, they are performed right to left.

That is, for

term + term + term

the tree from the first pair has the right + at the top (why?); whereas, the tree from the second pair has the left + at the top.

In general, for any A, R, α, and β, we can replace the pair

A → A α | β

with the triple

A → β R

R → α R | ε

For the example above A is “expr”, R is “rest”, α is “+ term”, and β is “term”.

Objective: an infix to postfix translator for expressions. We start with just plus and minus, specifically the expressions generated by the following grammar. We include a set of semantic actions with the grammar. Note that finding a grammar for the desired language is one problem, constructing a translator for the language given a grammar is another problem. We are tackling the second problem.

expr → expr + term { print('+') }

expr → expr - term { print('-') }

expr → term

term → 0 { print('0') }

. . .

term → 9 { print('9') }

One problem that we must solve is that this grammar is left recursive.

We prefer not to have superfluous nonterminals as they make the parsing less efficient. That is why we don't say that a term produces a digit and a digit produces each of 0,...,9. Ideally the syntax tree would just have the operators + and - and the 10 digits 0,1,...,9. That would be called the abstract syntax tree. A parse tree coming from a grammar is technically called a concrete syntax tree.

We eliminate the left recursion as we did in 2.4. This time there are two operators, + and -, so we replace the triple

A → A α | A β | γ

with the quadruple

A → γ R

R → α R | β R | ε

This time we have actions so, for example

α is + term { print('+') }

However, the formulas still hold and we get

expr → term rest

rest → + term { print('+') } rest

| - term { print('-') } rest

| ε

term → 0 { print('0') }

. . .

| 9 { print('9') }

The C code is in the book. Note the else ; in rest(). This corresponds to the epsilon production. As mentioned previously, the epsilon production is only used when all others fail (that is why it is the else arm and not the then or the else if arms).

These are (useful) programming techniques.

In the first edition this is about 40 lines of C code, 12 of which are single { or }. The second edition has equivalent code in Java.
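The book's code is not reproduced here. As a rough stand-in, the following C sketch implements the translation scheme above for single digits, + and -, with no blanks. The tail-recursive call to rest is replaced by a loop, and falling out of the loop plays the role of the else ; epsilon arm. The names are hypothetical, and output goes to a buffer rather than stdout.

```c
#include <assert.h>
#include <ctype.h>
#include <stddef.h>
#include <string.h>

static const char *in;   /* *in is the lookahead character */
static char *out;        /* output buffer ("print" appends here) */
static size_t outlen;
static int ok;           /* cleared on a syntax error */

static void print(char c) { out[outlen++] = c; out[outlen] = '\0'; }

/* term -> 0 { print('0') } | ... | 9 { print('9') } */
static void term(void) {
    if (isdigit((unsigned char)*in)) {
        print(*in);
        in++;            /* match the digit */
    } else {
        ok = 0;
    }
}

/* rest -> + term { print('+') } rest | - term { print('-') } rest | epsilon */
static void rest(void) {
    while (ok && (*in == '+' || *in == '-')) {   /* tail call replaced by a loop */
        char op = *in++;                         /* match + or - */
        term();
        print(op);                               /* the action comes after term */
    }
    /* leaving the loop corresponds to the epsilon production */
}

/* expr -> term rest */
static void expr(void) { term(); rest(); }

/* Returns 1 if src is a valid expression; buf needs strlen(src) + 1 chars. */
int infix_to_postfix(const char *src, char *buf) {
    assert(src != NULL && buf != NULL);
    in = src; out = buf; outlen = 0; ok = 1;
    buf[0] = '\0';
    expr();
    return ok && *in == '\0';
}
```

With this sketch, 9-5+2 translates to 95-2+.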

Converts a sequence of characters (the source) into a sequence of tokens. A lexeme is the sequence of characters comprising a single token.

These do not become tokens so that the parser need not worry about them.

The 2nd edition moves the discussion about

x<y versus x<=y

into this new section. I have left it 2 sections ahead to more closely agree with our text (the first edition).

This chapter considers only numerical integer constants. They are computed one digit at a time by value=10*value+digit. The parser will therefore receive the token num rather than a sequence of digits. Recall that our previous parsers considered only one digit numbers.

The value of the constant is stored as the attribute of the token num. Indeed <token,attribute> pairs are passed from the scanner to the parser.

The C statement

sum = sum + x;

contains 6 tokens. The scanner will convert the input into

id = id + id ; (id standing for identifier).

Although there are three id tokens, the first and second represent the lexeme sum; the third represents x. These must be distinguished. Many language keywords, for example “then”, are syntactically the same as identifiers. These also must be distinguished. The symbol table will accomplish these tasks.

Care must be taken when one lexeme is a proper prefix of another. Consider

x<y versus x<=y

When the < is read, the scanner needs to read another character to see if it is an =. But if that second character is y, the current token is < and the y must be “pushed back” onto the input stream so that the configuration is the same after scanning < as it is after scanning <=.
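The pushback can be sketched in C with a string-backed input that supports putting back one character; with a real FILE*, standard C's ungetc(c, fp) plays the same role. The `struct input` type, `scan_less`, and the token codes LT and LE are assumptions for illustration.

```c
#include <assert.h>

/* Hypothetical token codes for the two lexemes. */
enum { LT = 1, LE = 2 };

/* A string-backed input with one character of pushback. */
struct input {
    const char *s;   /* the source text */
    int pos;         /* index of the next character to read */
};

static int nextchar(struct input *in) { return in->s[in->pos++]; }
static void pushback(struct input *in) { in->pos--; }   /* undo the last nextchar */

/* Scans a lexeme beginning with '<'; returns LT or LE and leaves pos just past it. */
int scan_less(struct input *in) {
    int c = nextchar(in);
    assert(c == '<');
    c = nextchar(in);      /* one character of lookahead is unavoidable */
    if (c == '=')
        return LE;         /* lexeme is <= */
    pushback(in);          /* c (e.g., the y) belongs to the next lexeme */
    return LT;             /* lexeme is < */
}
```

After scan_less returns, the position is just past the lexeme (1 for <, 2 for <=), so the y is read next in both cases.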

Also consider then versus thenewvalue, one is a keyword and the other an id.

As indicated the scanner reads characters and occasionally pushes one back to the input stream. The “downstream” interface is to the parser to which <token,attribute> pairs are passed.

A few comments on the program given in the text. One inelegance is that, in order to avoid passing a record (struct in C) from the scanner to the parser, the scanner returns the next token and places its attribute in a global variable.

Since the scanner converts digits into num's we can shorten the grammar. Here is the shortened version before the elimination of left recursion. Note that the value attribute of a num is its numerical value.

expr → expr + term { print('+') }

expr → expr - term { print('-') }

expr → term

term → num { print(num.value) }

In anticipation of other operators with higher precedence, we introduce factor and, for good measure, include parentheses for overriding the precedence. So our grammar becomes:

expr → expr + term { print('+') }

expr → expr - term { print('-') }

expr → term

term → factor

factor → ( expr ) | num { print(num.value) }

The factor() procedure follows the familiar recursive descent pattern: find a production with lookahead in FIRST and do what the RHS says.

The symbol table is an important data structure for the entire compiler. For the simple translator, it is primarily used to store and retrieve <lexeme,token> pairs.

insert(s,t) returns the index of a new entry storing the pair (lexeme s, token t).

lookup(s) returns the index of the entry for s, or 0 if s is not there.

Simply insert them into the symbol table prior to examining any input. Then they can be found when used correctly and, since their corresponding token will not be id, any use of them where an identifier is required can be flagged.

For example, insert("div",div).

Probably the simplest implementation would be

    struct symtableType {
        char lexeme[BIGNUMBER];
        int token;
    } symtable[ANOTHERBIGNUMBER];

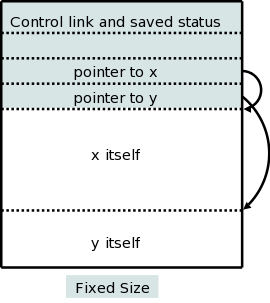

Having a fixed-size entry for every lexeme wastes space, so the authors use a (standard) technique of concatenating all the strings into one big string and storing pointers to the beginning of each of the substrings.
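A minimal C sketch of that layout, in the spirit of the book's symbol.c but not copied from it; the array sizes and names are illustrative assumptions. Entry 0 is left unused so that lookup can return 0 for "not found", as in the lookup(s) description above.

```c
#include <assert.h>
#include <string.h>

#define STRMAX 999                 /* size of the lexemes array (arbitrary) */
#define SYMMAX 100                 /* size of the symbol table (arbitrary) */

struct entry {
    char *lexptr;                  /* start of this lexeme inside lexemes[] */
    int token;
};

static char lexemes[STRMAX];       /* all lexemes, each followed by '\0' */
static int lastchar = -1;          /* last used position in lexemes[] */
static struct entry symtable[SYMMAX];
static int lastentry = 0;          /* last used entry; entry 0 is never used */

/* Returns the index of the entry for s, or 0 if s is not present. */
int lookup(const char *s) {
    for (int p = lastentry; p > 0; p--)
        if (strcmp(symtable[p].lexptr, s) == 0)
            return p;
    return 0;
}

/* Appends s to lexemes[], records the pair (s, tok), and returns its index. */
int insert(const char *s, int tok) {
    int len = (int)strlen(s);
    assert(lastentry + 1 < SYMMAX);           /* table not full */
    assert(lastchar + len + 1 < STRMAX);      /* room for s and its '\0' */
    lastentry++;
    symtable[lastentry].token = tok;
    symtable[lastentry].lexptr = &lexemes[lastchar + 1];
    lastchar += len + 1;
    strcpy(symtable[lastentry].lexptr, s);
    return lastentry;
}
```

Each lexeme costs only its length plus one byte for the terminating '\0', instead of a fixed BIGNUMBER bytes.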

One form of intermediate representation is to assume that the target machine is a simple stack machine (explained very soon). The front end of the compiler translates the source language into instructions for this stack machine and the back end translates stack machine instructions into instructions for the real target machine.

We use a very simple stack machine.

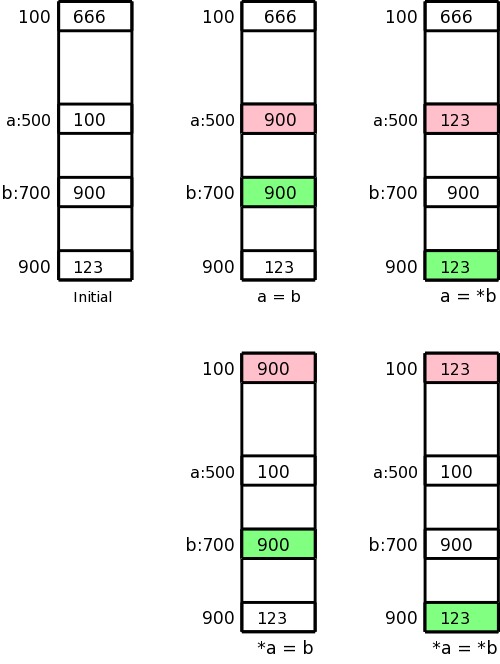

Consider Q := Z; or A[f(x)+B*D] := g(B+C*h(x,y));. (I follow the text and use := for the assignment op, which is written = in C/C++. I am using [] for array reference and () for function call).

From a macroscopic view, we have three tasks.

Note the differences between L-values and R-values

| Instruction | Effect |
|---|---|
| push v | push v (onto stack) |
| rvalue l | push contents of (location) l |
| lvalue l | push address of l |
| pop | pop |
| := | r-value on tos put into the location specified by l-value 2nd on the stack; both are popped |
| copy | duplicate the top of stack |

Machine instructions to evaluate an expression mimic the postfix form of the expression. That is, we generate code to evaluate the left operand, then code to evaluate the right operand, and finally the code to perform the operation itself.

For example y := 7 * xx + 6 * (z + w) becomes

lvalue y push 7 rvalue xx * push 6 rvalue z rvalue w + * + :=
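To check this translation, here is a minimal C interpreter for the stack-machine instructions used in the example. The encoding is an assumption for illustration: each variable is a slot in a small memory array, an l-value is simply the slot index, and only the instructions needed here (push, rvalue, lvalue, :=, +, *) are implemented.

```c
#include <assert.h>

enum op { PUSH, RVALUE, LVALUE, ASSIGN, ADD, MUL };

struct instr { enum op op; int arg; };   /* arg unused for ASSIGN, ADD, MUL */

#define MEMSIZE 16
#define STACKSIZE 32

void run(const struct instr *code, int n, int mem[MEMSIZE]) {
    int stack[STACKSIZE], top = 0;       /* top = number of items on the stack */
    for (int pc = 0; pc < n; pc++) {
        const struct instr *i = &code[pc];
        switch (i->op) {
        case PUSH:   assert(top < STACKSIZE); stack[top++] = i->arg; break;
        case RVALUE: assert(top < STACKSIZE); stack[top++] = mem[i->arg]; break; /* contents of l */
        case LVALUE: assert(top < STACKSIZE); stack[top++] = i->arg; break;      /* address of l */
        case ADD:    assert(top >= 2); top--; stack[top - 1] += stack[top]; break;
        case MUL:    assert(top >= 2); top--; stack[top - 1] *= stack[top]; break;
        case ASSIGN: /* r-value on top, l-value beneath it; both are popped */
            assert(top >= 2);
            assert(stack[top - 2] >= 0 && stack[top - 2] < MEMSIZE);
            mem[stack[top - 2]] = stack[top - 1];
            top -= 2;
            break;
        }
    }
}
```

With y, xx, z, and w in slots 0 to 3 and the arbitrary test values xx=2, z=3, w=4, running the 11 instructions above should store 7*2 + 6*(3+4) = 56 into y.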

To say this more formally we define two attributes. For any nonterminal, the attribute t gives its translation and for the terminal id, the attribute lexeme gives its string representation.

Assuming we have already given the semantic rules for expr (i.e., assuming that the annotation expr.t is known to contain the translation for expr) then the semantic rule for the assignment statement is

stmt → id := expr

{ stmt.t := 'lvalue' || id.lexeme || expr.t || ':=' }

There are several ways of specifying conditional and unconditional jumps. We choose the following 5 instructions. The simplifying assumption is that the abstract machine supports “symbolic” labels. The back end of the compiler would have to translate this into machine instructions for the actual computer, e.g. absolute or relative jumps (jump 3450 or jump +500).

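| Instruction | Effect |
|---|---|
| label l | target of jumps to l |
| goto l | next instruction is the one with label l |
| gofalse l | pop stack; jump to l if value is false |
| gotrue l | pop stack; jump to l if value is true |
| halt | stop execution |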

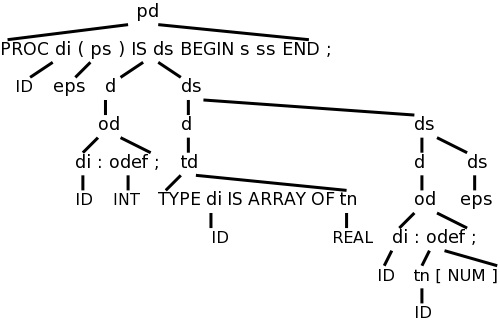

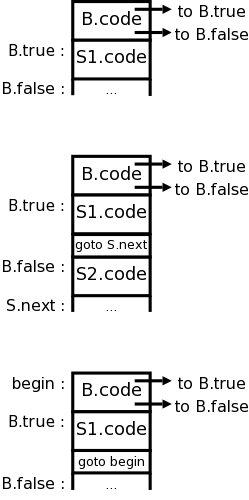

Fairly simple. Generate a new label using the assumed function newlabel(), which we sometimes write without the (), and use it. The semantic rule for an if statement is simply

stmt → if expr then stmt1 { out := newlabel();

stmt.t := expr.t || 'gofalse' out || stmt1.t || 'label' out }

Rewriting the above as a semantic action (rather than a rule) we get the following, where emit() is a function that prints its arguments in whatever form is required for the abstract machine (e.g., it deals with line length limits, required whitespace, etc).

stmt → if

expr { out := newlabel; emit('gofalse', out); }

then

stmt1 { emit('label', out) }

Don't forget that expr is itself a nonterminal. So by the time we reach out:=newlabel, we will have already parsed expr and thus will have done any associated actions, such as emit()'ing instructions. These instructions will have left a boolean on the tos. It is this boolean that is tested by the emitted gofalse.

More precisely, the action written to the right of expr will be the third child of stmt in the tree. Since a postorder traversal visits the children in order, the second child “expr” will have been visited (just) prior to visiting the action.

Look how simple it is! Don't forget that the FIRST sets for the productions having stmt as LHS are disjoint!

    procedure stmt
        integer test, out;
        if lookahead = id then               // first set is {id} for assignment
            emit('lvalue', tokenval);        // pushes lvalue of lhs
            match(id);                       // move past the lhs
            match(':=');                     // move past the :=
            expr;                            // pushes rvalue of rhs on tos
            emit(':=');                      // do the assignment (Omitted in book)
        else if lookahead = 'if' then
            match('if');                     // move past the if
            expr;                            // pushes boolean on tos
            out := newlabel();
            emit('gofalse', out);            // out is integer, emit makes a legal label
            match('then');                   // move past the then
            stmt;                            // recursive call
            emit('label', out);              // emit again makes out legal
        else if ...                          // while, repeat/do, etc
        else error();
    end stmt;

Full code for a simple infix to postfix translator. This uses the concepts developed in 2.5-2.7 (it does not use the abstract stack machine material from 2.8). Note that the intermediate language we produced in 2.5-2.7, i.e., the attribute .t or the result of the semantic actions, is essentially the final output desired. Hence we just need the front end.

The grammar with semantic actions is as follows. All the actions come at the end since we are generating postfix. This is not always the case.

start → list eof

list → expr ; list

list → ε // would normally use | as below

expr → expr + term { print('+') }

| expr - term { print('-'); }

| term

term → term * factor { print('*') }

| term / factor { print('/') }

| term div factor { print('DIV') }

| term mod factor { print('MOD') }

| factor

factor → ( expr )

| id { print(id.lexeme) }

| num { print(num.value) }

Eliminate left recursion to get

start → list eof

list → expr ; list

| ε

expr → term moreterms

moreterms → + term { print('+') } moreterms

| - term { print('-') } moreterms

| ε

term → factor morefactors

morefactors → * factor { print('*') } morefactors

| / factor { print('/') } morefactors

| div factor { print('DIV') } morefactors

| mod factor { print('MOD') } morefactors

| ε

factor → ( expr )

| id { print(id.lexeme) }

| num { print(num.value) }

Show “A+B;” on board starting with “start”.

Contains lexan(), the lexical analyzer, which is called by the parser to obtain the next token. The attribute value is assigned to tokenval and white space is stripped.

| lexeme | token | attribute value |
|---|---|---|
| white space | | |
| sequence of digits | NUM | numeric value |
| div | DIV | |
| mod | MOD | |
| other seq of a letter then letters and digits | ID | index into symbol table |
| eof char | DONE | |
| other char | that char | NONE |

Using a recursive descent technique, one writes routines for each nonterminal in the grammar. In fact the book combines term and morefactors into one routine.

    void term(void) {
        int t;
        factor();
        // now we should call morefactors(), but instead code it inline
        while (1)                   // morefactors nonterminal is right recursive
            switch (lookahead) {    // lookahead set by match()
            case '*': case '/': case DIV: case MOD:   // all the same
                t = lookahead;      // needed for emit() below
                match(lookahead);   // skip over the operator
                factor();           // see grammar for morefactors
                emit(t, NONE);
                continue;           // back to the while loop for the next operator
            default:                // the epsilon production
                return;
            }
    }

Other nonterminals similar.
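For reference, here is a compact C sketch of the whole parser and lexer, written directly from the left-recursion-free grammar above rather than copied from the book. Assumptions: the input comes from a string, ids and nums are emitted as their lexemes followed by a blank, div and mod are reserved, and a syntax error simply makes the hypothetical `translate` return 0 (there is no real error routine).

```c
#include <assert.h>
#include <ctype.h>
#include <string.h>

/* Hypothetical token codes, chosen above the character range. */
enum { NUM = 256, ID, DIV, MOD, DONE };

static const char *in;       /* remaining input */
static char lexeme[64];      /* text of the current NUM or ID token */
static int lookahead;        /* current token */
static int ok;               /* cleared on a syntax error */
static char *out;            /* output buffer */

/* Strips white space; returns NUM, ID, DIV, MOD, DONE, or the character itself. */
static int lexan(void) {
    while (*in == ' ' || *in == '\t' || *in == '\n')
        in++;
    if (*in == '\0')
        return DONE;
    if (isdigit((unsigned char)*in) || isalpha((unsigned char)*in)) {
        int isnum = isdigit((unsigned char)*in);
        size_t n = 0;
        while (isnum ? isdigit((unsigned char)*in) : isalnum((unsigned char)*in)) {
            if (n < sizeof lexeme - 1)
                lexeme[n++] = *in;   /* overly long lexemes are truncated */
            in++;
        }
        lexeme[n] = '\0';
        if (isnum)
            return NUM;
        if (strcmp(lexeme, "div") == 0)
            return DIV;              /* keywords are reserved */
        if (strcmp(lexeme, "mod") == 0)
            return MOD;
        return ID;
    }
    return (unsigned char)*in++;
}

static void emit(const char *s) { strcat(out, s); strcat(out, " "); }

static void match(int t) {
    if (ok && lookahead == t) lookahead = lexan();
    else ok = 0;
}

static void expr(void);

/* factor -> ( expr ) | id { print(id.lexeme) } | num { print(num.value) } */
static void factor(void) {
    if (!ok)
        return;
    if (lookahead == '(') {
        match('('); expr(); match(')');
    } else if (lookahead == NUM || lookahead == ID) {
        emit(lexeme);                /* before match() overwrites lexeme */
        match(lookahead);
    } else {
        ok = 0;
    }
}

/* term -> factor morefactors; morefactors is coded inline as a loop */
static void term(void) {
    factor();
    while (ok && (lookahead == '*' || lookahead == '/' || lookahead == DIV || lookahead == MOD)) {
        int t = lookahead;
        match(t);
        factor();
        emit(t == '*' ? "*" : t == '/' ? "/" : t == DIV ? "DIV" : "MOD");
    }                                /* leaving the loop = the epsilon production */
}

/* expr -> term moreterms; moreterms is coded inline as a loop */
static void expr(void) {
    term();
    while (ok && (lookahead == '+' || lookahead == '-')) {
        int t = lookahead;
        match(t);
        term();
        emit(t == '+' ? "+" : "-");
    }
}

/* list -> expr ; list | epsilon. Returns 1 on success; buf needs 2*strlen(src)+1 chars. */
int translate(const char *src, char *buf) {
    assert(src != NULL && buf != NULL);
    in = src; out = buf; ok = 1;
    buf[0] = '\0';
    lookahead = lexan();
    while (ok && lookahead != DONE) {
        expr();
        match(';');
    }
    return ok;
}
```

For example, a+b*c; should produce a b c * + and (12 - x) div 4 mod 3; should produce 12 x - 4 DIV 3 MOD (each item followed by a blank).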

The routine emit().

The insert(s,t) and lookup(s) routines described previously are in symbol.c. The routine init() preloads the symbol table with the defined keywords.

Does almost nothing. The only help is that the line number, calculated by lexan() is printed.

One reason is that much was deliberately simplified. Specifically note that

Also, I presented the material way too fast to expect full understanding.

Homework: Read chapter 3.

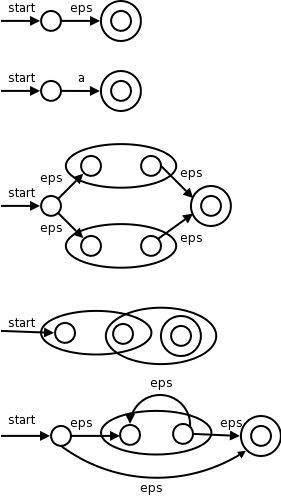

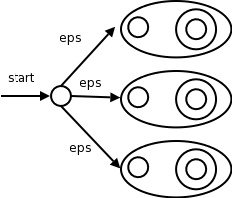

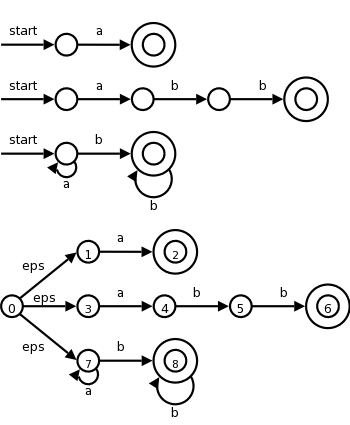

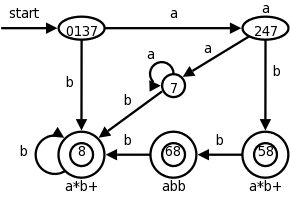

Two methods to construct a scanner (lexical analyzer).

Note that the speed (of the lexer, not of the code generated by the compiler) and error reporting/correction are typically much better for a handwritten lexer. As a result, most production-level compiler projects write their own lexers.

The lexer is called by the parser when the latter is ready to process another token.

The lexer also might do some housekeeping such as eliminating whitespace and comments. Some call these tasks scanning, but others call the entire task scanning.

After the lexer, individual characters are no longer examined by the compiler; instead tokens (the output of the lexer) are used.

Why separate lexical analysis from parsing? The reasons are basically software engineering concerns.

Note the circularity of the definitions for lexeme and pattern.

Common token classes.

Homework: 3.3.

We saw an example of attributes in the last chapter.

For tokens corresponding to keywords, attributes are not needed since the name of the token tells everything. But consider the token corresponding to integer constants. Just knowing that we have a constant is not enough; subsequent stages of the compiler need to know the value of the constant. Similarly, for the token identifier we need to distinguish one identifier from another. The normal method is for the attribute to specify the symbol table entry for this identifier.

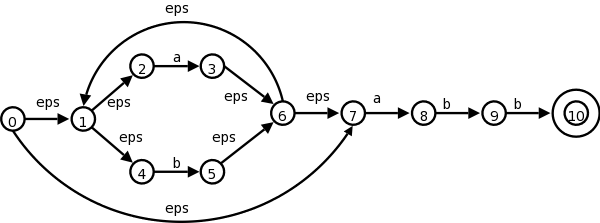

We saw in this movie an example where parsing got “stuck” because we reduced the wrong part of the input string. We also learned about FIRST sets that enabled us to determine which production to apply when we are operating left to right on the input. For predictive parsers the FIRST sets for a given nonterminal are disjoint and so we know which production to apply. In general the FIRST sets might not be disjoint so we have to try all the productions whose FIRST set contains the lookahead symbol.

All the above assumed that the input was error free, i.e. that the source was a sentence in the language. What should we do when the input is erroneous and we get to a point where no production can be applied?

The simplest solution is to abort the compilation stating that the program is wrong, perhaps giving the line number and location where the parser could not proceed.

We would like to do better and at least find other errors. We could perhaps skip input up to a point where we can begin anew (e.g. after a statement ending semicolon), or perhaps make a small change to the input around lookahead so that we can proceed.

Determining the next lexeme often requires reading the input beyond the end of that lexeme. For example, to determine the end of an identifier normally requires reading the first whitespace character after it. Also just reading > does not determine the lexeme as it could also be >=. When you determine the current lexeme, the characters you read beyond it may need to be read again to determine the next lexeme.

The book illustrates the standard programming technique of using two (sizable) buffers to solve this problem.

A useful programming improvement is to combine testing for the end of a buffer with determining the character read.

The chapter turns formal and, in some sense, the course begins.

The book is fairly careful about finite vs infinite sets and also uses (without a definition!) the notion of a countable set.

(A countable set is either a finite set or one whose elements can be put into one-to-one correspondence with the positive integers. That is, it is a set whose elements can be counted. The set of rational numbers, i.e., fractions in lowest terms, is countable; the set of real numbers is uncountable, because it is strictly bigger, i.e., it cannot be counted.)

We should be careful to distinguish the empty set φ from the empty string ε.

Formal language theory is a beautiful subject, but I shall suppress my urge to do it right and try to go easy on the formalism.

We will need a bunch of definitions.

Definition: An alphabet is a finite set of symbols.

Example: {0,1}, presumably φ (uninteresting), ascii, unicode, ebcdic, latin-1.

Definition: A string over an alphabet is a finite sequence of symbols from that alphabet. Strings are often called words or sentences.

Example: Strings over {0,1}: ε, 0, 1, 111010. Strings over ascii: ε, sysy, the string consisting of 3 blanks.

Definition: The length of a string is the number of symbols (counting duplicates) in the string.

Example: The length of allan, written |allan|, is 5.

Definition: A language over an alphabet is a countable set of strings over the alphabet.

Example: The set of all grammatical English sentences with five, eight, or twelve words is a language over ascii. It is also a language over unicode.

Definition: The concatenation of strings s and t is the string formed by appending the string t to s. It is written st.

Example: εs = sε = s for any string s.

We view concatenation as a product (see Monoid in wikipedia http://en.wikipedia.org/wiki/Monoid). It is thus natural to define $s^0 = \varepsilon$ and $s^{i+1} = s^i s$.

Example: $s^1 = s$, $s^4 = ssss$.

A prefix of a string is a portion starting from the beginning and a suffix is a portion ending at the end. More formally,

Definitions: A prefix of s is any string obtained from s by removing (possibly zero) characters from the end of s.

A suffix is defined analogously and a substring of s is obtained by deleting a prefix and a suffix.

Example: If s is 123abc, then

(1) s itself and ε are each a prefix, suffix, and a substring.

(2) 12 and 123a are prefixes.

(3) 3abc is a suffix.

(4) 23a is a substring.

Definitions: A proper prefix of s is a prefix of s other than ε and s itself. Similarly, proper suffixes and proper substrings of s do not include ε and s.

Definition: A subsequence of s is formed by deleting (possibly zero) positions from s. We say positions rather than characters since s may, for example, contain 5 occurrences of the character Q and we only want to delete a certain 3 of them.

Example: issssii is a subsequence of Mississippi.

Homework: 3.1b, 3.5 (c and e are optional).

Definition: The union of L1 and L2 is simply the set-theoretic union, i.e., it consists of all words (strings) in either L1 or L2.

Example: The union of {Grammatical English sentences with one, three, or five words} with {Grammatical English sentences with two or four words} is {Grammatical English sentences with five or fewer words}.

Definition: The concatenation of L1 and L2 is the set of all strings st, where s is a string of L1 and t is a string of L2.

We again view concatenation as a product and write LM for the concatenation of L and M.

Examples: The concatenation of {a,b,c} and {1,2} is {a1,a2,b1,b2,c1,c2}. The concatenation of {a,b,c} and {1,2,ε} is {a1,a2,b1,b2,c1,c2,a,b,c}.

Definition: As with strings, it is natural to define powers of a language L.

$L^0 = \{\varepsilon\}$, which is not φ.

$L^{i+1} = L^i L$.

Definition: The (Kleene) closure of L, denoted $L^*$, is $L^0 \cup L^1 \cup L^2 \cup \cdots$.

Definition: The positive closure of L, denoted $L^+$, is $L^1 \cup L^2 \cup \cdots$.

Example: $\{0,1,2,3,4,5,6,7,8,9\}^+$ gives all unsigned integers, but with some ugly versions; it has 3, 03, and 000003.

$\{0\} \cup \left(\{1,2,3,4,5,6,7,8,9\}\,\{0,1,2,3,4,5,6,7,8,9\}^*\right)$

seems better.

In these notes I may write * for a superscript * and + for a superscript +, but that is strictly speaking wrong and I will not do it on the board, on exams, or on lab assignments.

Example: {a,b}* is

{ε,a,b,aa,ab,ba,bb,aaa,aab,aba,abb,baa,bab,bba,bbb,...}.

{a,b}+ is {a,b,aa,ab,ba,bb,aaa,aab,aba,abb,baa,bab,bba,bbb,...}.

{ε,a,b}* is {ε,a,b,aa,ab,ba,bb,...}.

{ε,a,b}+ is the same as {ε,a,b}*.

The book gives other examples based on L={letters} and D={digits}, which you should read.

The idea is that the regular expressions over an alphabet consist of ε, the alphabet, and expressions using union, concatenation, and *, but it takes more words to say it right. For example, I didn't include ().

Note that (A ∪ B)* is definitely not A* ∪ B* (* does not distribute over ∪), so we need the parentheses.

The book's definition includes many () and is more complicated than I think is necessary. However, it has the crucial advantages of being correct and precise.

The wikipedia entry doesn't seem to be as precise.

I will try a slightly different approach, but note again that there is nothing wrong with the book's approach (which appears in both first and second editions, essentially unchanged).

Definition: The regular expressions and associated languages over an alphabet consist of

Parentheses, if present, control the order of operations. Without parentheses the following precedence rules apply.

The postfix unary operator * has the highest precedence. The book mentions that it is left associative. (I don't see how a postfix unary operator can be right associative or how a prefix unary operator such as unary - could be left associative.)

Concatenation has the second highest precedence and is left associative.

The union operator | has the lowest precedence and is left associative.

The book gives various algebraic laws (e.g., associativity) concerning these operators.

The reason we don't include the positive closure is that for any RE r, $r^+ = r r^*$.

Homework: 3.6 a and b.

These will look like the productions of a context free grammar we saw previously, but there are differences. Let Σ be an alphabet, then a regular definition is a sequence of definitions

d1 → r1

d2 → r2

...

dn → rn

where the d's are unique and not in Σ, and each ri is a regular expression over $\Sigma \cup \{d_1, \ldots, d_{i-1}\}$. Note that each di can depend on all the previous d's.

Example: C identifiers can be described by the following regular definition

letter_ → A | B | ... | Z | a | b | ... | z | _

digit → 0 | 1 | ... | 9

CId → letter_ ( letter_ | digit)*

Homework: 3.7 a,b (c is optional)

There are many extensions of the basic regular expressions given above. The following three will be frequently used in this course, as they are particularly useful for lexical analyzers, as opposed to text editors or string-oriented programming languages, which have more complicated regular expressions.

All three are simply shorthand. That is, the set of possible languages generated using the extensions is the same as the set of possible languages generated without using the extensions.

Examples:

C-language identifiers

letter_ → [A-Za-z_]

digit → [0-9]

CId → letter_ ( letter_ | digit )*

Unsigned integer or floating point numbers

digit → [0-9]

digits → digit+

number → digits (. digits)? (E[+-]? digits)?

Homework: 3.8 for the C language (you might need to read a C manual first to find out all the numerical constants in C), 3.10a.

Our goal is to perform the lexical analysis needed for the following grammar.

stmt → if expr then stmt

| if expr then stmt else stmt

| ε

expr → term relop term // relop is relational operator =, >, etc

| term

term → id

| number

Recall that the terminals are the tokens; the nonterminals produce terminals.

A regular definition for the terminals is

digit → [0-9]

digits → digit+

number → digits (. digits)? (E[+-]? digits)?

letter → [A-Za-z]

id → letter ( letter | digit )*

if → if

then → then

else → else

relop → < | > | <= | >= | = | <>

| Lexeme | Token | Attribute |
|---|---|---|
| Whitespace | ws | - |
| if | if | - |
| then | then | - |
| else | else | - |
| An identifier | id | Pointer to table entry |
| A number | number | Pointer to table entry |
| < | relop | LT |
| <= | relop | LE |
| = | relop | EQ |
| <> | relop | NE |
| > | relop | GT |
| >= | relop | GE |

We also want the lexer to remove whitespace, so we define a new token

ws → ( blank | tab | newline )+

where blank, tab, and newline are symbols used to represent the corresponding ASCII characters.

Recall that the lexer will be called by the parser when the latter needs a new token. If the lexer then recognizes the token ws, it does not return it to the parser but instead goes on to recognize the next token, which is then returned. Note that you can't have two consecutive ws tokens in the input because, for a given token, the lexer will match the longest lexeme starting at the current position that yields this token. The table above summarizes the situation.

For the parser, all the relational ops are to be treated the same, so they are all the same token, relop. Naturally, other parts of the compiler will need to distinguish between the various relational ops so that appropriate code is generated. Hence, they have distinct attribute values.

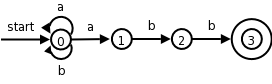

A transition diagram is similar to a flowchart for (a part of) the lexer. We draw one for each possible token. It shows the decisions that must be made based on the input seen. The two main components are circles representing states (think of them as decision points of the lexer) and arrows representing edges (think of them as the decisions made).

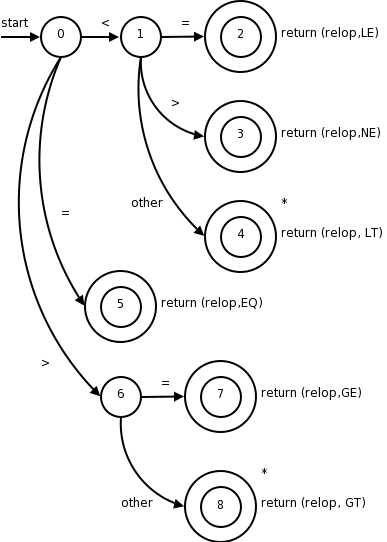

The transition diagram (3.12 in the 1st edition, 3.13 in the second) for relop is shown on the right.

If the lexer has to read one (or more) characters too far in finding the token, one (or more) stars are drawn at the accepting state.

It is fairly clear how to write code corresponding to this diagram. You look at the first character; if it is <, you look at the next character. If that character is =, you return (relop,LE) to the parser. If instead that character is >, you return (relop,NE). If it is another character, return (relop,LT) and adjust the input buffer so that you will read this character again, since you have not used it for the current lexeme. If the first character was =, you return (relop,EQ). If the first character was >, you look at the next character: if it is =, you return (relop,GE); otherwise you return (relop,GT), again retracting the extra character.
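A minimal C sketch of that logic follows. It is not the book's code: the names get_relop, Token, RelopAttr, TokenName, and NOT_RELOP are hypothetical, the input is assumed to be a NUL-terminated string with an index playing the role of the forward pointer, and each state is written as a branch rather than a switch case (the state numbers in the comments are intended to match the figure). Retracting is just decrementing the index.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical attribute codes for the relop token. */
typedef enum { LT, LE, EQ, NE, GT, GE } RelopAttr;

/* Hypothetical token: RELOP on success, NOT_RELOP if the diagram fails. */
typedef enum { RELOP, NOT_RELOP } TokenName;
typedef struct { TokenName name; RelopAttr attribute; } Token;

/* Try to recognize a relop starting at src[*pos]. On success, *pos is
   advanced past the lexeme; on failure, *pos is left where it started. */
Token get_relop(const char *src, size_t *pos) {
    assert(src != NULL && pos != NULL);
    size_t start = *pos, i = *pos;            /* i acts as the forward pointer */
    Token t = { RELOP, LT };
    char c = src[i++];                        /* state 0 */
    if (c == '<') {                           /* state 1 */
        c = src[i++];
        if (c == '=')      t.attribute = LE;  /* state 2 */
        else if (c == '>') t.attribute = NE;  /* state 3 */
        else { i--;        t.attribute = LT; }/* state 4*: retract one char */
    } else if (c == '=') {
        t.attribute = EQ;                     /* state 5 */
    } else if (c == '>') {                    /* state 6 */
        c = src[i++];
        if (c == '=')      t.attribute = GE;  /* state 7 */
        else { i--;        t.attribute = GT; }/* state 8*: retract one char */
    } else {
        t.name = NOT_RELOP;                   /* fail(): not a relop */
        i = start;
    }
    *pos = i;
    return t;
}
```

For input <=x the sketch returns (relop,LE) and leaves the index at x; for <x it returns (relop,LT) with the index back on x.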

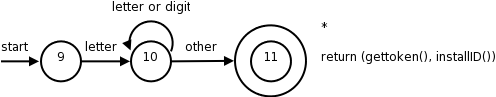

The transition diagram above corresponds to the regular definition for id given previously.

Note again the star affixed to the final state.

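Two questions remain.

1. How do we distinguish between identifiers and keywords such as if and then, which also match the pattern in the transition diagram?
2. What are gettoken() and installID(), which appear at the diagram's accepting state?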

We will continue to assume that the keywords are reserved, i.e., may not be used as identifiers. (What if this is not the case, as in PL/I, which had no reserved words? Then the lexer does not distinguish between keywords and identifiers and the parser must.)

We will use the method mentioned in the last chapter and have the keywords installed into the symbol table prior to any invocation of the lexer. The symbol table entry will indicate that the entry is a keyword.

installID() checks if the lexeme is already in the table. If it is not present, the lexeme is installed as an id token. In either case a pointer to the entry is returned.

gettoken() examines the lexeme and returns the token name, either id or a name corresponding to a reserved keyword.

Both installID() and gettoken() access the buffer to obtain the lexeme of interest.
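A minimal C sketch of this scheme, assuming a small fixed-size table searched linearly (a real compiler would more likely use hashing). The names init_keywords, install_id, get_token, lookup, insert, and the Entry layout are all hypothetical. install_id returns a table index that stands in for the pointer, and it receives the lexeme as a pointer into the input buffer plus a length.

```c
#include <assert.h>
#include <string.h>

/* Hypothetical token names. */
typedef enum { ID, IF, THEN, ELSE } TokenName;

/* One symbol-table entry: the lexeme and the token it yields. */
typedef struct { char lexeme[32]; TokenName token; } Entry;

#define MAX_ENTRIES 100
static Entry table[MAX_ENTRIES];
static int n_entries = 0;

/* Return the index of the entry whose lexeme equals lexeme[0..len-1], or -1. */
static int lookup(const char *lexeme, size_t len) {
    for (int i = 0; i < n_entries; i++)
        if (strlen(table[i].lexeme) == len && strncmp(table[i].lexeme, lexeme, len) == 0)
            return i;
    return -1;
}

/* Append a new entry and return its index. */
static int insert(const char *lexeme, size_t len, TokenName tok) {
    assert(n_entries < MAX_ENTRIES && len < sizeof table[0].lexeme);
    memcpy(table[n_entries].lexeme, lexeme, len);
    table[n_entries].lexeme[len] = '\0';
    table[n_entries].token = tok;
    return n_entries++;
}

/* Called once, before the lexer runs: the reserved words go in first. */
void init_keywords(void) {
    insert("if", 2, IF);
    insert("then", 4, THEN);
    insert("else", 4, ELSE);
}

/* installID(): find the lexeme's entry, adding it as an id if absent. */
int install_id(const char *lexeme, size_t len) {
    int i = lookup(lexeme, len);
    return i >= 0 ? i : insert(lexeme, len, ID);
}

/* gettoken(): the token name recorded in the entry (id or a keyword). */
TokenName get_token(int entry) {
    assert(entry >= 0 && entry < n_entries);
    return table[entry].token;
}
```

Because the keywords are already present, install_id finds then as a keyword entry rather than creating a new id.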

The text also gives another method to distinguish between identifiers and keywords.

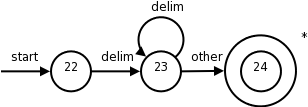

So far we have transition diagrams for identifiers (this diagram also handles keywords) and the relational operators. What remains are whitespace and numbers, which have respectively the simplest and the most complicated diagrams seen so far.

The diagram itself is quite simple, reflecting the simplicity of the corresponding regular expression.

delim in the diagram represents any of the whitespace characters, say space, tab, and newline.
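The whitespace diagram is small enough to code directly. This C sketch (the name skip_ws is hypothetical; delim is taken to be space, tab, and newline, and the input is assumed to be a NUL-terminated string indexed by *pos) consumes the longest run of delimiters. Instead of reading the "other" character and then retracting, it only peeks at that character, which has the same effect as the starred accepting state.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

/* delim: assumed here to be space, tab, and newline */
static bool is_delim(char c) { return c == ' ' || c == '\t' || c == '\n'; }

/* ws -> delim+ : if src[*pos] begins a ws lexeme, consume the longest one
   and return true; otherwise leave *pos unchanged and return false. */
bool skip_ws(const char *src, size_t *pos) {
    assert(src != NULL && pos != NULL);
    size_t i = *pos;
    if (!is_delim(src[i])) return false;   /* not whitespace: this diagram fails */
    while (is_delim(src[i])) i++;          /* loop on delim */
    /* src[i] is the "other" character; it was examined but not consumed,
       so no explicit retract is needed */
    *pos = i;
    return true;
}
```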

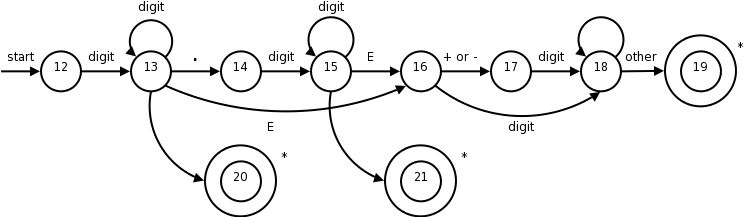

The number diagram shown above is from the second edition. It is essentially a combination of the three diagrams in the first edition.

This certainly looks formidable, but it is not that bad; it follows from the regular expression.

In class go over the regular expression and show the corresponding parts in the diagram.

When an accepting state is reached, action is required but is not shown on the diagram. Just as identifiers are stored in a symbol table and a pointer is returned, there is a corresponding number table in which numbers are stored. These numbers are needed when code is generated. Depending on the source language, we may wish to indicate in the table whether this is a real or an integer. A similar, but more complicated, transition diagram could be produced if the language permitted complex numbers as well.
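A minimal C sketch of such a number table, assuming (for illustration only) that a constant containing a . or an E is real and anything else is an integer. install_num, NumEntry, NumKind, and num_table are hypothetical names; an index into the table stands in for the pointer, and overflow checking is omitted. The lexeme is assumed to have already matched the number pattern.

```c
#include <assert.h>
#include <stdlib.h>
#include <string.h>

/* Hypothetical number-table entry: an integer or a real constant. */
typedef enum { INT_CONST, REAL_CONST } NumKind;
typedef struct { NumKind kind; long ival; double rval; } NumEntry;

#define MAX_NUMS 100
static NumEntry num_table[MAX_NUMS];
static int n_nums = 0;

/* Store the constant lexeme[0..len-1] and return its index in num_table. */
int install_num(const char *lexeme, size_t len) {
    char buf[64];
    assert(n_nums < MAX_NUMS && len < sizeof buf);
    memcpy(buf, lexeme, len);             /* copy so the text is NUL-terminated */
    buf[len] = '\0';
    NumEntry *e = &num_table[n_nums];
    if (strpbrk(buf, ".E") == NULL) {     /* no fraction or exponent: integer */
        e->kind = INT_CONST;
        e->ival = strtol(buf, NULL, 10);
    } else {                              /* otherwise treat it as a real */
        e->kind = REAL_CONST;
        e->rval = strtod(buf, NULL);
    }
    return n_nums++;
}
```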

Homework: Write transition diagrams for the regular expressions in problems 3.6 a and b, 3.7 a and b.

The idea is that we write a piece of code for each transition diagram. I will show the one for the relational operators below (from the 2nd edition). This piece of code contains a case for each state, which typically reads a character and then goes to the next case depending on the character read. The numbers in the circles are the names of the cases.

Accepting states often need to take some action and return to the parser. Many of these accepting states (the ones with stars) need to restore one character of input. This is called retract() in the code.

What should the code for a particular diagram do if at one state the character read is not one of those for which a next state has been defined? That is, what if the character read is not the label of any of the outgoing arcs? This means that we have failed to find the token corresponding to this diagram.

The code calls fail(). This is not an error case. It simply means that the current input does not match this particular token. So we need to go to the code section for another diagram after restoring the input pointer so that we start the next diagram at the point where this failing diagram started. If we have tried all the diagrams, then we have a real failure and need to print an error message and perhaps try to repair the input.

Note that the order the diagrams are tried is important. If the input matches more than one token, the first one tried will be chosen.
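The try-in-order strategy can be sketched in C. The three diagrams here (the keyword if, identifiers, and unsigned integers) are simplified, hypothetical stand-ins rather than the book's diagrams, and all names are made up. Each diagram reports the length it matched, or 0 for fail(). Because a diagram never advances the shared input position itself, restoring the input pointer after a failure amounts to handing the same start position to the next diagram.

```c
#include <assert.h>
#include <ctype.h>
#include <stddef.h>

typedef enum { TOK_IF, TOK_ID, TOK_NUMBER, TOK_ERROR } TokenName;

/* A diagram tries to match at src[start]; it returns the lexeme length, or 0 for fail(). */
typedef size_t (*Diagram)(const char *src, size_t start);

static size_t match_if(const char *s, size_t i) {
    /* keyword "if", provided no letter or digit follows it */
    if (s[i] == 'i' && s[i + 1] == 'f' && !isalnum((unsigned char)s[i + 2]))
        return 2;
    return 0;
}

static size_t match_id(const char *s, size_t i) {
    size_t j = i;
    if (!isalpha((unsigned char)s[j])) return 0;
    while (isalnum((unsigned char)s[j])) j++;
    return j - i;
}

static size_t match_number(const char *s, size_t i) {
    size_t j = i;
    while (isdigit((unsigned char)s[j])) j++;
    return j - i;                          /* 0 digits means fail() */
}

/* The diagrams in the order they are tried: the first success wins. */
static const struct { Diagram match; TokenName name; } diagrams[] = {
    { match_if, TOK_IF }, { match_id, TOK_ID }, { match_number, TOK_NUMBER },
};

/* Try each diagram from the same start position; advance *pos only on success. */
TokenName next_token(const char *src, size_t *pos, size_t *len) {
    assert(src != NULL && pos != NULL && len != NULL);
    for (size_t d = 0; d < sizeof diagrams / sizeof diagrams[0]; d++) {
        size_t n = diagrams[d].match(src, *pos);
        if (n > 0) {
            *len = n;
            *pos += n;
            return diagrams[d].name;
        }
    }
    *len = 0;
    return TOK_ERROR;                      /* every diagram failed: a real error */
}
```

With this order, if is returned as a keyword, while iffy is still an identifier because the keyword diagram checks that no letter or digit follows. Moving match_id ahead of match_if would make if an identifier, which is exactly why the order matters.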

```
TOKEN getRelop()                          // TOKEN has two components
{
    TOKEN retToken = new(RELOP);          // First component set here
    while (true)
        switch(state) {
        case 0: c = nextChar();
                if (c == '<') state = 1;
                else if (c == '=') state = 5;
                else if (c == '>') state = 6;
                else fail();              // lexeme is not a relop
                break;
        case 1: ...
        ...
        case 8: retract();                // an accepting state with a star
                retToken.attribute = GT;  // second component
                return(retToken);
        }
}
```

The description above corresponds to the one given in the first edition.

The newer edition gives two other methods for combining the multiple transition-diagrams (in addition to the one above).

The newer version of lex, which we will use, is called flex; the f stands for fast. I checked and both lex and flex are on the cs machines. I will use the name lex for both.

Lex is itself a compiler that is used in the construction of other compilers (its output is the lexer for the other compiler). The lex language, i.e., the input language of the lex compiler, is described in the next few sections. The compiler writer uses the lex language to specify the tokens of their language as well as the actions to take at each state.

Let us pretend I am writing a compiler for a language called pink. I produce a file, call it lex.l, that describes pink in a manner shown below. I then run the lex compiler (a normal program), giving it lex.l as input. The lex compiler output is always a file called lex.yy.c, a program written in C.

One of the procedures in lex.yy.c (call it pinkLex()) is the lexer itself, which reads a character input stream and produces a sequence of tokens. pinkLex() also sets a global value yylval that is shared with the parser. I then compile lex.yy.c together with the parser (typically the output of lex's cousin yacc, a parser generator) to produce, say, pinkfront, which is an executable program that is the front end for my pink compiler.

The general form of a lex program like lex.l is

```
declarations
%%
translation rules
%%
auxiliary functions
```

The lex program for the example we have been working with follows (it is typed in straight from the book).

```
%{
    /* definitions of manifest constants
       LT, LE, EQ, NE, GT, GE,
       IF, THEN, ELSE, ID, NUMBER, RELOP */
%}

/* regular definitions */
delim     [ \t\n]
ws        {delim}+
letter    [A-Za-z]
digit     [0-9]
id        {letter}({letter}|{digit})*
number    {digit}+(\.{digit}+)?(E[+-]?{digit}+)?

%%

{ws}      {/* no action and no return */}
if        {return(IF);}
then      {return(THEN);}
else      {return(ELSE);}
{id}      {yylval = (int) installID(); return(ID);}
{number}  {yylval = (int) installNum(); return(NUMBER);}
"<"       {yylval = LT; return(RELOP);}
"<="      {yylval = LE; return(RELOP);}
"="       {yylval = EQ; return(RELOP);}
"<>"      {yylval = NE; return(RELOP);}
">"       {yylval = GT; return(RELOP);}
">="      {yylval = GE; return(RELOP);}

%%

int installID() {/* function to install the lexeme, whose first character
                    is pointed to by yytext, and whose length is yyleng,
                    into the symbol table and return a pointer thereto */
}

int installNum() {/* similar to installID, but puts numerical constants
                     into a separate table */
}
```

The first, declaration, section includes variables and constants as well as the all-important regular definitions that define the building blocks of the target language, i.e., the language that the generated lexer will analyze.

The next, translation rules, section gives the patterns of the lexemes that the lexer will recognize and the actions to be performed upon recognition. Normally, these actions include returning a token name to the parser and often returning other information about the token via the shared variable yylval.

If a return is not specified, the lexer continues executing and finds the next lexeme present.

Anything between %{ and %} is not processed by lex, but instead is copied directly to lex.yy.c. So we could have had statements like

```
#define LT 12
#define LE 13
```

The regular definitions are mostly self-explanatory. When a definition is later used, it is surrounded by {}. A backslash \ is used when a special symbol like * or . is to stand for itself, e.g., if we wanted to match a literal star in the input for multiplication.

Each rule is fairly clear: when a lexeme is matched by the left (pattern) part of the rule, the right (action) part is executed. Note that the value returned is the name (an integer) of the corresponding token. For simple tokens like the one named IF, which correspond to only one lexeme, no further data need be sent to the parser. There are several relational operators, so a specification of which lexeme matched RELOP is saved in yylval. For ids and numbers, the lexeme is stored in a table by the install functions and a pointer to the entry is placed in yylval for future use.

Everything in the auxiliary function section is also copied directly to lex.yy.c; unlike the declarations, it needs no enclosing %{ %}. These auxiliary functions may be called from the actions.

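When more than one pattern matches the input, lex resolves the conflict with two rules:

1. Always prefer a longer match to a shorter one.
2. If two or more patterns match the same longest prefix, prefer the pattern listed first in the lex program.

The first rule makes <= one lexeme instead of two. The second rule makes if a keyword and not an id (the if rule is listed before the {id} rule).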
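The two rules can be illustrated with a brute-force C sketch. Real lex compiles all the patterns into a single automaton rather than trying them one at a time; the loop below, whose hypothetical pattern functions stand in for the rules if, {id}, "<", and "<=" (listed in that order), only demonstrates which rule wins.

```c
#include <assert.h>
#include <ctype.h>
#include <stddef.h>
#include <string.h>

/* A pattern returns the length of its match at the start of s, or 0 if none. */
typedef size_t (*Pattern)(const char *s);

static size_t pat_if(const char *s) { return strncmp(s, "if", 2) == 0 ? 2 : 0; }
static size_t pat_id(const char *s) {
    size_t n = 0;
    if (!isalpha((unsigned char)s[0])) return 0;
    while (isalnum((unsigned char)s[n])) n++;
    return n;
}
static size_t pat_lt(const char *s) { return s[0] == '<' ? 1 : 0; }
static size_t pat_le(const char *s) { return strncmp(s, "<=", 2) == 0 ? 2 : 0; }

/* In the order the rules would appear in the lex program. */
static const Pattern rules[] = { pat_if, pat_id, pat_lt, pat_le };
#define N_RULES (sizeof rules / sizeof rules[0])

/* Return the index of the winning rule (or -1 if none matches) and store
   the lexeme length in *len. */
int choose_rule(const char *s, size_t *len) {
    assert(s != NULL && len != NULL);
    int best = -1;
    size_t best_len = 0;
    for (size_t r = 0; r < N_RULES; r++) {
        size_t n = rules[r](s);
        if (n > best_len) {   /* strictly longer only, so earlier rules win ties */
            best = (int)r;
            best_len = n;
        }
    }
    *len = best_len;
    return best;
}
```

For if( the if and {id} rules both match two characters, so the first-listed if rule wins; for iffy the {id} rule wins because its match is longer; and for <= the "<=" rule beats "<" even though it is listed later.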


Sometimes a sequence of characters is only considered a certain lexeme if the sequence is followed by specified other sequences. Here is a classic example.

Fortran, PL/I, and some other languages do not have reserved words.

In Fortran

IF(X)=3

is a legal assignment statement and the IF is an identifier.

However,

IF(X.LT.Y)X=Y

is an if/then statement and IF is a keyword.