Operating Systems

================ Start Lecture #8 ================

4.2: Swapping

Moving entire processes between disk and memory is called swapping.

Multiprogramming with Variable Partitions

Both the number and size of the partitions change with time.


- IBM OS/MVT (multiprogramming with a varying number of tasks).
- Also early PDP-10 OS.
- Job still has only one segment (as with MFT). That is, the virtual address space is contiguous.
- The physical address space is also contiguous, that is, the process is stored as one piece in memory.
- The job can be of any size up to the size of the machine, and the job size can change with time.
- A single ready list.
- A job can move (might be swapped back in a different place).
  - This is dynamic address translation (during run time).
  - Must perform an addition on every memory reference (i.e. on every address translation) to add the start address of the partition.
  - Called a DAT (dynamic address translation) box by IBM.
- Eliminates internal fragmentation.
  - Find a region of exactly the right size (leave a hole for the remainder).
  - Not quite true: one can't get a piece with exactly 10A755 (hex) bytes, and would get, say, 10A760 instead. But internal fragmentation is much reduced compared to MFT. Indeed, we say that internal fragmentation has been eliminated.

-

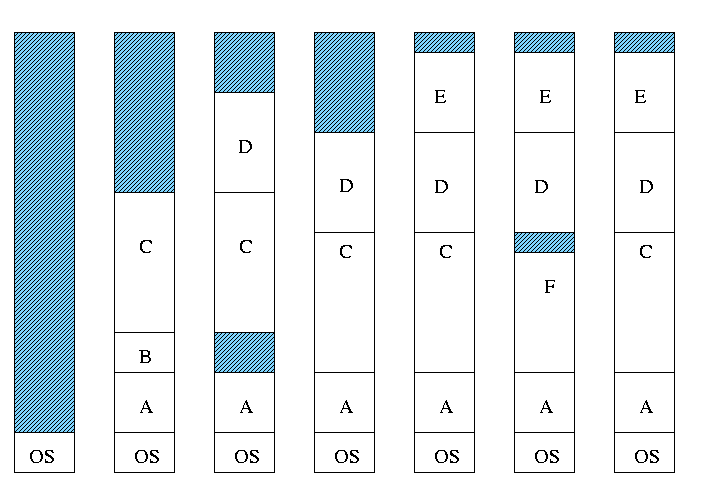

Introduces external fragmentation, i.e., holes

outside any region of memory assigned to a process.

-

What do you do if no hole is big enough for the request?

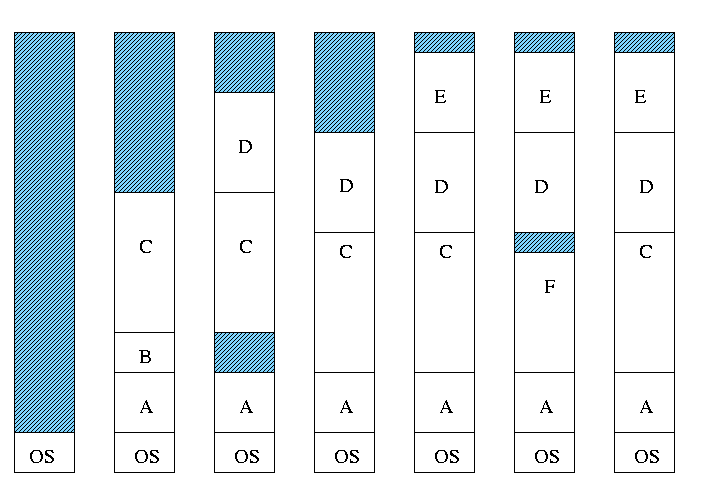

- Can compactify

-

Transition from bar 3 to bar 4 in diagram below.

-

This is expensive.

-

Not suitable for real time (MIT ping pong).

- Can swap out one process to bring in another, e.g., bars 5-6

and 6-7 in the diagram.

- There are more processes than holes. Why?

-

Because next to a process there might be a process or a hole

but next to a hole there must be a process

-

So can have “runs” of processes but not of holes

-

If after a process one is equally likely to have a process or

a hole, you get about twice as many processes as holes.

- Base and limit registers are used.
  - Storage keys are not good, since compactifying or moving would require changing many keys.
  - Storage keys might need a fine granularity to permit the boundaries to move by small amounts (to reduce internal fragmentation). Hence many keys would need to be changed.
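The “more processes than holes” argument in the list above can be checked with a small simulation. This is a sketch under the stated assumption only: after each process the next region is a process or a hole with probability 1/2, and after a hole there is always a process (adjacent holes having been merged). The function name `random_layout` is hypothetical.

```python
import random

def random_layout(n_processes: int, seed: int = 0) -> list[str]:
    """Build a memory layout of 'P' (process) and 'H' (hole) regions.

    Assumption taken from the text: after a process, the next region is a
    process or a hole with equal probability; after a hole comes a process,
    since adjacent holes would already have been merged into one.
    """
    rng = random.Random(seed)
    layout = []
    for _ in range(n_processes):
        layout.append("P")
        if rng.random() < 0.5:  # this process is followed by a hole
            layout.append("H")
    return layout

layout = random_layout(100_000)
processes, holes = layout.count("P"), layout.count("H")
ratio = processes / holes  # expected to be close to 2
```

Because a hole is only ever generated right after a process, the layout never contains two holes in a row, which is exactly the “runs of processes but not of holes” observation.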

Homework: 3

MVT Introduces the “Placement Question”

That is, which hole (partition) should one choose?

- Best fit, worst fit, first fit, circular first fit, quick fit, buddy.
  - Best fit doesn't waste big holes, but does leave slivers and is expensive to run.
  - Worst fit avoids slivers, but eliminates all big holes, so a big job will require compaction. Even more expensive than best fit (best fit stops if it finds a perfect fit).
  - Quick fit keeps lists of some common sizes (but has other problems, see Tanenbaum).
  - Buddy system
    - Round the request up to the next power of two (causes internal fragmentation).
    - Look in the list of free blocks of this size (as with quick fit).
    - If the list is empty, go to a larger size and split it into buddies.
    - When a block is returned, coalesce it with its buddy (if the buddy is free).
    - Do splitting and coalescing recursively, i.e. keep coalescing until you can't and keep splitting until successful.
    - See Tanenbaum for more details (or an algorithms book).
  - A current favorite is circular first fit, also known as next fit.
    - Use the first hole that is big enough (first fit) but start looking where you left off last time.
    - Doesn't waste time constantly trying to use small holes that have failed before, but does tend to use many of the big holes, which can be a problem.
  - Buddy comes with its own implementation. How about the others?
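The fit strategies above can be sketched over a list of holes kept in address order. This is a minimal illustration, not Tanenbaum's or any particular OS's code; the `(start, size)` representation and all function names are assumptions, and quick fit and buddy are not covered here.

```python
from typing import Optional

Hole = tuple[int, int]  # (start address, size), list kept in address order

def first_fit(holes: list[Hole], request: int) -> Optional[int]:
    """Index of the first hole big enough, scanning from the start."""
    for i, (_, size) in enumerate(holes):
        if size >= request:
            return i
    return None

def best_fit(holes: list[Hole], request: int) -> Optional[int]:
    """Index of the smallest hole big enough; stops early on an exact fit."""
    best = None
    for i, (_, size) in enumerate(holes):
        if size == request:
            return i  # perfect fit: no better hole can exist
        if size > request and (best is None or size < holes[best][1]):
            best = i
    return best

def worst_fit(holes: list[Hole], request: int) -> Optional[int]:
    """Index of the largest hole, if big enough (always scans every hole)."""
    if not holes:
        return None
    i = max(range(len(holes)), key=lambda j: holes[j][1])
    return i if holes[i][1] >= request else None

class NextFit:
    """Circular first fit: resume scanning where the previous search stopped."""
    def __init__(self) -> None:
        self.rover = 0

    def __call__(self, holes: list[Hole], request: int) -> Optional[int]:
        n = len(holes)
        for k in range(n):
            i = (self.rover + k) % n
            if holes[i][1] >= request:
                self.rover = i
                return i
        return None

def allocate(holes: list[Hole], request: int, choose) -> Optional[int]:
    """Carve `request` units from the chosen hole and return its start address."""
    i = choose(holes, request)
    if i is None:
        return None  # no hole is big enough: compactify or swap
    start, size = holes[i]
    if size == request:
        del holes[i]
    else:
        holes[i] = (start + request, size - request)  # remainder stays a hole
    return start
```

For example, with holes of sizes 100, 50 and 400 and a request of 40, first fit picks the 100, best fit the 50 and worst fit the 400. Next fit, after its rover has advanced past a small hole, can skip it even when first fit would have used it.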

Homework: 5.
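The buddy system described above can be sketched with one free list per power of two. This assumes memory of `2**max_order` units starting at address 0; the class and method names are hypothetical. Because a block of size `2**k` always starts at a multiple of `2**k`, its buddy's address differs only in bit `k`, so it can be found with an XOR.

```python
from typing import Optional

class BuddyAllocator:
    """Buddy system over a memory of 2**max_order units (a sketch).

    free[k] holds the start addresses of free blocks of size 2**k.
    """
    def __init__(self, max_order: int) -> None:
        self.max_order = max_order
        self.free: list[set[int]] = [set() for _ in range(max_order + 1)]
        self.free[max_order].add(0)  # initially one big free block

    @staticmethod
    def order_for(size: int) -> int:
        """Round a (positive) request up to a power of two; return the exponent."""
        return max(0, (size - 1).bit_length())

    def allocate(self, size: int) -> Optional[tuple[int, int]]:
        """Return (start, order) of an allocated block, or None."""
        want = self.order_for(size)
        # Look in the list for this size; if it is empty, go to larger sizes.
        k = want
        while k <= self.max_order and not self.free[k]:
            k += 1
        if k > self.max_order:
            return None
        start = min(self.free[k])  # lowest address, just to be deterministic
        self.free[k].remove(start)
        # Split repeatedly: keep the lower half, put the upper buddy on a free list.
        while k > want:
            k -= 1
            self.free[k].add(start + (1 << k))
        return start, want

    def release(self, start: int, order: int) -> None:
        """Return a block, coalescing with its buddy for as long as the buddy is free."""
        while order < self.max_order:
            buddy = start ^ (1 << order)  # buddies differ only in bit `order`
            if buddy not in self.free[order]:
                break
            self.free[order].remove(buddy)
            start = min(start, buddy)
            order += 1
        self.free[order].add(start)
```

With 16 units, a request for 3 units is rounded up to 4; the 16-unit block is split into two 8s and one 8 into two 4s. Freeing both 4-unit blocks coalesces all the way back to a single 16-unit block.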

4.2.1: Memory Management with Bitmaps

Divide memory into blocks and associate a bit with each block, used to indicate if the corresponding block is free or allocated. To find a chunk of N blocks, one must find N consecutive bits indicating free blocks.

The only design question is how much memory one bit represents.

- Big: Serious internal fragmentation.
- Small: Many bits to store and process.
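The search for N consecutive free bits can be sketched as a single scan that tracks the current run of free blocks. For readability this sketch stores one list element per block and assumes 0 means free and 1 means allocated; a real allocator would pack the bits into machine words. The function names are hypothetical.

```python
def find_free_run(bitmap: list[int], n: int) -> int:
    """Return the index of the first run of n (>= 1) free blocks, or -1."""
    run_start, run_len = 0, 0
    for i, bit in enumerate(bitmap):
        if bit == 0:          # free block: extend (or start) the current run
            if run_len == 0:
                run_start = i
            run_len += 1
            if run_len == n:
                return run_start
        else:                 # allocated block: the run is broken
            run_len = 0
    return -1

def mark(bitmap: list[int], start: int, n: int, value: int) -> None:
    """Set n bits starting at `start` (1 = allocate, 0 = free)."""
    for i in range(start, start + n):
        bitmap[i] = value
```

The scan is linear in the number of bits, which is why a small allocation unit (many bits) makes allocation slow.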

4.2.2: Memory Management with Linked Lists

- Each item on the list gives the length and starting location of the corresponding region of memory and says whether it is a hole or a process.
- The items on the list are not taken from the memory to be used by processes.
- Keep in order of starting address.
- Merge adjacent holes.
- Singly linked.
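Merging adjacent holes on a singly linked list means remembering the previous node while walking to the segment being freed. A minimal sketch, assuming the list is kept in address order as described; the `Segment` type and function names are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class Segment:
    kind: str                 # "H" for hole, "P" for process
    start: int
    length: int
    next: Optional["Segment"] = None

def free_process(head: Segment, start: int) -> None:
    """Turn the process segment at `start` into a hole, merging adjacent holes."""
    prev, cur = None, head
    while cur is not None and cur.start != start:   # remember the predecessor
        prev, cur = cur, cur.next
    if cur is None or cur.kind != "P":
        raise ValueError("no process segment starts at that address")
    cur.kind = "H"
    nxt = cur.next
    if nxt is not None and nxt.kind == "H":          # merge with following hole
        cur.length += nxt.length
        cur.next = nxt.next
    if prev is not None and prev.kind == "H":        # merge with preceding hole
        prev.length += cur.length
        prev.next = cur.next

def build(items: list[tuple[str, int, int]]) -> Optional[Segment]:
    """Build a list from (kind, start, length) tuples in address order."""
    head = None
    for kind, start, length in reversed(items):
        head = Segment(kind, start, length, head)
    return head

def to_list(head: Optional[Segment]) -> list[tuple[str, int, int]]:
    out = []
    while head is not None:
        out.append((head.kind, head.start, head.length))
        head = head.next
    return out
```

Freeing a process that sits between two holes collapses three list items into one.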

Memory Management using Boundary Tags

- Use the same memory for list items as for processes.
- Don't need an entry in the linked list for blocks in use; just the avail blocks are linked.
- The avail blocks themselves are linked, not a node that points to an avail block.
- When a block is returned, we can look at the boundary tags of the adjacent blocks and see if they are avail. If so, they must be merged with the returned block.
- For the blocks currently in use, we just need a hole/process bit at each end and the length. Keep this in the block itself.
- We do not need to traverse the list when returning a block; we can use the boundary tags to find the predecessor.
- See Knuth, The Art of Computer Programming vol 1.
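The point of boundary tags is that freeing a block needs no list traversal: the word just before a block is its predecessor's tag and the word just after it is its successor's tag. This sketch assumes memory is a Python list of words and stores each tag as a `(size, free)` tuple in place of a packed length plus hole/process bit; the set `avail` stands in for the linked list threaded through the free blocks. All names are hypothetical.

```python
class TaggedMemory:
    """Every block stores (size, free) in its first and last word."""

    def __init__(self, blocks: list[tuple[int, bool]]) -> None:
        self.mem: list = [None] * sum(size for size, _ in blocks)
        self.avail: set[int] = set()  # stand-in for the linked avail list
        addr = 0
        for size, free in blocks:
            self._tag(addr, size, free)
            addr += size

    def _tag(self, start: int, size: int, free: bool) -> None:
        """Write the tag at both ends of the block and update the avail set."""
        self.mem[start] = self.mem[start + size - 1] = (size, free)
        if free:
            self.avail.add(start)
        else:
            self.avail.discard(start)

    def release(self, start: int) -> int:
        """Free the block at `start`, merging with free neighbours; return new start."""
        size, free = self.mem[start]
        assert not free, "block is already free"
        after = start + size                  # successor's header tag
        if after < len(self.mem) and self.mem[after][1]:
            self.avail.discard(after)
            size += self.mem[after][0]
        if start > 0 and self.mem[start - 1][1]:  # predecessor's footer tag
            prev_size = self.mem[start - 1][0]
            start -= prev_size
            self.avail.discard(start)
            size += prev_size
        self._tag(start, size, True)          # only the new ends need fresh tags
        return start
```

Stale tags left inside a merged block are never read, since lookups only ever touch the words adjacent to a block boundary.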

MVT also introduces the “Replacement Question”

That is, which victim should we swap out?

Note that this is an example of the suspend arc mentioned in process scheduling.

We will study this question more when we discuss demand paging, in which case we swap out only part of a process.

Considerations in choosing a victim

- Cannot replace a job that is pinned, i.e. whose memory is tied down. For example, if Direct Memory Access (DMA) I/O is scheduled for this process, the job is pinned until the DMA is complete.
- Victim selection is a medium term scheduling decision.
  - A job that has been in a wait state for a long time is a good candidate.
  - Often choose as a victim a job that has been in memory for a long time.
  - Another question is how long it should stay swapped out.
- For demand paging, where swapping out a page is not as drastic as swapping out a job, choosing the victim is an important memory management decision and we shall study several policies.
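The considerations above are rules of thumb rather than a formula. One way to combine them, purely as an assumption for illustration, is to exclude pinned jobs and then rank the rest first by how long they have been waiting and then by how long they have been in memory. The `Job` record and `choose_victim` are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class Job:
    name: str
    pinned: bool                  # e.g. DMA I/O in progress: memory is tied down
    blocked_since: Optional[int]  # time the job started waiting, None if not waiting
    loaded_at: int                # time the job was (last) swapped in

def choose_victim(jobs: list[Job], now: int) -> Optional[Job]:
    """Pick a job to swap out, or None if every job is pinned."""
    candidates = [j for j in jobs if not j.pinned]  # never swap out a pinned job
    if not candidates:
        return None

    def priority(j: Job) -> tuple[int, int]:
        # Longest wait first (non-waiting jobs rank below all waiting ones),
        # then longest time resident in memory.
        waited = now - j.blocked_since if j.blocked_since is not None else -1
        return (waited, now - j.loaded_at)

    return max(candidates, key=priority)
```

A lexicographic key keeps the rules separate and easy to reorder; a real medium-term scheduler might weight them instead.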

NOTEs:

- So far the schemes presented have had two properties:
  - Each job is stored contiguously in memory. That is, the job is contiguous in physical addresses.
  - No job can use more memory than exists in the system. That is, the virtual address space cannot exceed the physical address space.
- Tanenbaum now attacks the second item. I wish to do both and start with the first.
- Tanenbaum (and most of the world) uses the term “paging” to mean what I call demand paging. This is unfortunate as it mixes together two concepts.
  - Paging (dicing the address space) to solve the placement problem and essentially eliminate external fragmentation.
  - Demand fetching, to permit the total memory requirements of all loaded jobs to exceed the size of physical memory.
- Tanenbaum (and most of the world) uses the term virtual memory as a synonym for demand paging. Again I consider this unfortunate.
  - Demand paging is a fine term and is quite descriptive.
  - Virtual memory “should” be used in contrast with physical memory to describe any virtual to physical address translation.

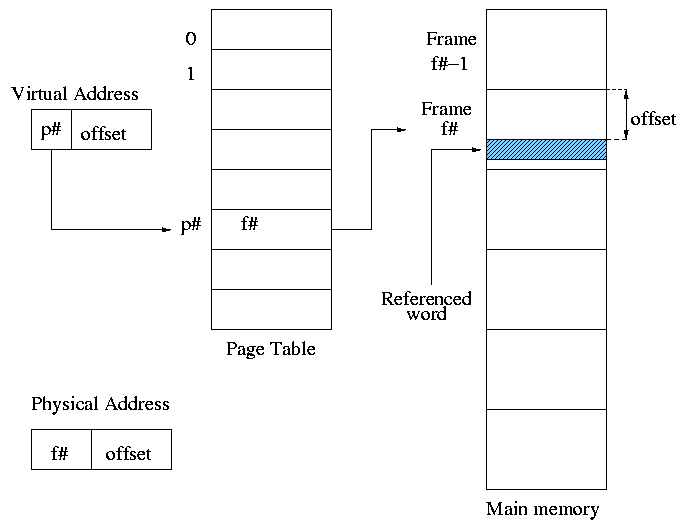

(Non-demand) Paging

Simplest scheme to remove the requirement of contiguous physical memory.


-

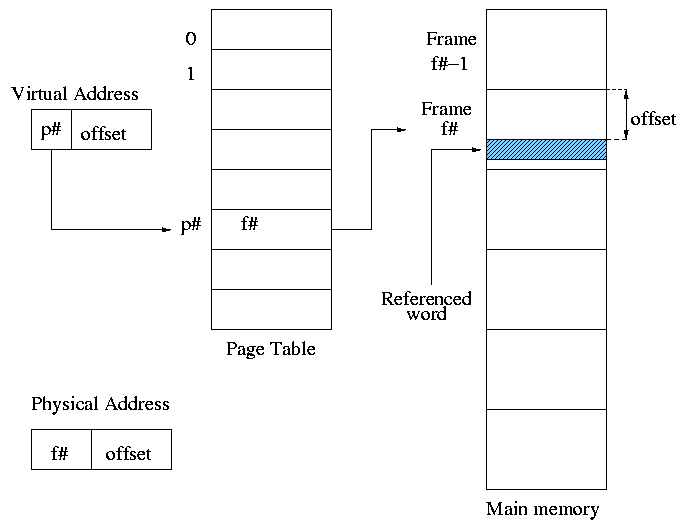

Chop the program into fixed size pieces called

pages (invisible to the programmer).

- Chop the real memory into fixed size pieces called page

frames or simply frames.

- Size of a page (the page size) = size of a frame (the frame size).

- Sprinkle the pages into the frames.

- Keep a table (called the page table) having an

entry for each page. The page table entry or PTE for

page p contains the number of the frame f that contains page p.

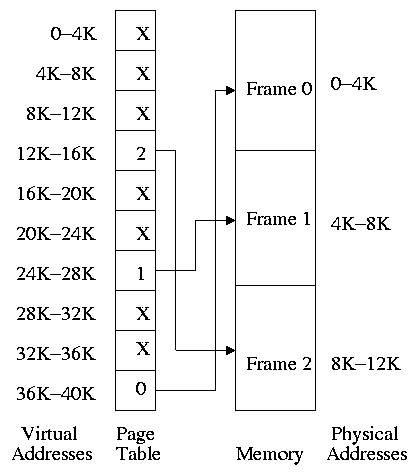

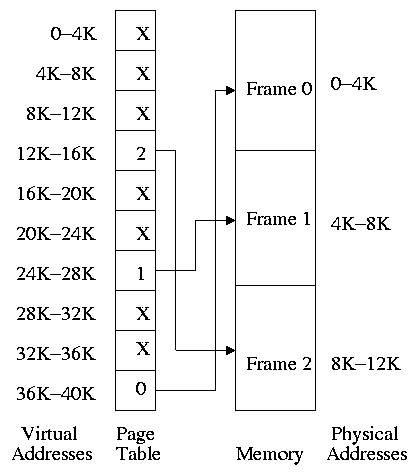

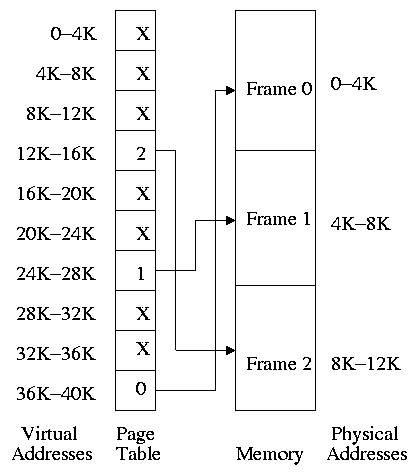

Example: Assume a decimal machine with page size = frame size = 1000. Assume PTE 3 contains 459. Then virtual address 3372 (page 3, offset 372) corresponds to physical address 459372 (frame 459, offset 372).
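The translation in the example is mechanical: split the virtual address into page number and offset, replace the page number by the frame number from the PTE, and keep the offset. The sketch below does this for the decimal machine and, as an assumed binary analogue, for 4 KB pages where the split is a shift and a mask. Page tables are modeled as plain dicts; the function names are hypothetical.

```python
PAGE_SIZE = 1000   # the decimal machine from the example
OFFSET_BITS = 12   # assumed binary machine with 4 KB pages

def translate_decimal(vaddr: int, page_table: dict[int, int]) -> int:
    page, offset = divmod(vaddr, PAGE_SIZE)
    frame = page_table[page]  # PTE for page p holds frame f; with non-demand
                              # paging every page of the job is resident
    return frame * PAGE_SIZE + offset

def translate_binary(vaddr: int, page_table: dict[int, int]) -> int:
    page = vaddr >> OFFSET_BITS                  # high bits: page number
    offset = vaddr & ((1 << OFFSET_BITS) - 1)    # low bits: offset within page
    return (page_table[page] << OFFSET_BITS) | offset
```

With a page size that is a power of two, no division is needed, which is one reason real page sizes are powers of two.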

Properties of (non-demand) paging.

- Entire job must be memory resident to run.
- No holes, i.e. no external fragmentation.
- If there are 50 frames available and the page size is 4KB, then a job requiring <= 200KB will fit, even if the available frames are scattered over memory.
  - Hence (non-demand) paging is useful.
- Introduces internal fragmentation approximately equal to 1/2 the page size for every process (really every segment).
- A job can be unable to run due to insufficient memory even though some (but not enough) memory is available. This is not called external fragmentation since it is not due to memory being fragmented.
- Eliminates the placement question. All free frames are equally good since there is no external fragmentation.
- The replacement question remains.
- Since page boundaries occur at “random” points and can change from run to run (the page size can change with no effect on the program other than on performance), pages are not appropriate units of memory to use for protection and sharing. This is discussed further when we introduce segmentation.
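The 50-frame example and the half-page estimate for internal fragmentation can be checked with a little arithmetic. This sketch assumes 1 KB = 1024 bytes and a job that is a single segment; the function names are hypothetical.

```python
KB = 1024  # assumption: 1 KB = 1024 bytes

def frames_needed(job_bytes: int, page_bytes: int) -> int:
    """Pages (and hence frames) a job occupies: its size rounded up."""
    return (job_bytes + page_bytes - 1) // page_bytes

def internal_fragmentation(job_bytes: int, page_bytes: int) -> int:
    """Bytes wasted at the end of the job's last, partially filled page."""
    return frames_needed(job_bytes, page_bytes) * page_bytes - job_bytes

def average_waste(page_bytes: int, n_pages: int) -> float:
    """Mean waste over all job sizes 1 .. n_pages * page_bytes."""
    sizes = range(1, n_pages * page_bytes + 1)
    return sum(internal_fragmentation(s, page_bytes) for s in sizes) / len(sizes)
```

A 200KB job needs exactly 50 frames of 4KB, while one more byte needs 51. When job sizes are spread evenly over whole multiples of the page size, the wastes 0, 1, ..., P-1 each occur equally often, so the average is (P-1)/2, i.e. about half a page.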

Homework: 16.

Address translation

- Each memory reference turns into 2 memory references:
  - Reference the page table.
  - Reference central memory.
- This would be a disaster!
- Hence the MMU caches page#-->frame# translations. This cache is kept near the processor and can be accessed rapidly.
- This cache is called a translation lookaside buffer (TLB) or translation buffer (TB).
- For the above example, after referencing virtual address 3372, there would be an entry in the TLB containing the mapping 3-->459.
- Hence a subsequent access to virtual address 3881 would be translated to physical address 459881 without an extra memory reference. Naturally, a memory reference for location 459881 itself would be required.
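The effect of the TLB in the example can be seen by counting memory references. In this sketch the TLB is an unbounded dict, which is a simplification: a real TLB is small and must evict entries. The `MMU` class is hypothetical and uses the decimal machine with page size 1000.

```python
class MMU:
    """Page-table lookup with a TLB in front of it, counting memory references."""
    PAGE_SIZE = 1000

    def __init__(self, page_table: dict[int, int]) -> None:
        self.page_table = page_table    # lives in main memory
        self.tlb: dict[int, int] = {}   # page# -> frame#, kept near the processor
        self.memory_refs = 0

    def access(self, vaddr: int) -> int:
        page, offset = divmod(vaddr, self.PAGE_SIZE)
        if page not in self.tlb:        # TLB miss: read the PTE from memory
            self.memory_refs += 1
            self.tlb[page] = self.page_table[page]
        self.memory_refs += 1           # the reference to the data itself
        return self.tlb[page] * self.PAGE_SIZE + offset
```

The first access to 3372 costs two memory references (PTE plus data); the following access to 3881 hits in the TLB and costs only one.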

Choice of page size is discussed below.

Homework: 8.

4.3: Virtual Memory (meaning fetch on demand)

The idea is that a program can execute even if only the active portion of its address space is memory resident. That is, we swap in and swap out portions of a program. In a crude sense this could be called “automatic overlays”.

Advantages

- Can run a program larger than the total physical memory.
- Can increase the multiprogramming level since the total size of the active, i.e. loaded, programs (running + ready + blocked) can exceed the size of the physical memory.
- Since some portions of a program are rarely if ever used, it is an inefficient use of memory to have them loaded all the time. Fetch on demand will not load them if not used and will (hopefully) unload them during replacement if they are not used for a long time.
- Simpler for the user than overlays or variable aliasing (older techniques to run large programs using limited memory).

Disadvantages

- More complicated for the OS.
- Execution time less predictable (depends on other jobs).
- Can over-commit memory.

4.3.1: Paging (meaning demand paging)

Fetch pages from disk to memory when they are referenced, with a hope of getting the most actively used pages in memory.

- Very common: dominates modern operating systems.
- Started by the Atlas system at Manchester University in the 60s (Fotheringham).
- Each PTE continues to have the frame number if the page is loaded.
- But what if the page is not loaded (exists only on disk)?
  - The PTE has a flag indicating if it is loaded (can think of the X in the diagram as indicating that this flag is not set).
  - If not loaded, the location on disk could be kept in the PTE, but normally it is not (discussed below).
  - When a reference is made to a non-loaded page (sometimes called a non-existent page, but that is a bad name), the system has a lot of work to do. We give more details below.
    - Choose a free frame, if one exists.
    - If not:
      - Choose a victim frame.
        - More later on how to choose a victim.
        - Called the replacement question.
      - Write the victim back to disk if dirty.
      - Update the victim PTE to show that it is not loaded.
    - Copy the referenced page from disk to the free frame.
    - Update the PTE of the referenced page to show that it is loaded and give the frame number.
    - Do the standard paging address translation (p#,off)-->(f#,off).
- Really not done quite this way.
  - There is “always” a free frame because ...
  - ... there is a daemon active that checks the number of free frames and, if this is too low, chooses victims and “pages them out” (writing them back to disk if dirty).
- Choice of page size is discussed below.
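The page-fault steps and the paging daemon above can be sketched for a single process. Assumptions: `disk` maps page numbers to page contents, frames hold those contents, the machine is the decimal one with page size 1000, and victims are chosen FIFO purely as a placeholder for the replacement policies studied later. All names are hypothetical.

```python
from collections import deque
from dataclasses import dataclass
from typing import Optional

@dataclass
class PTE:
    valid: bool = False
    frame: Optional[int] = None
    dirty: bool = False

class DemandPager:
    PAGE_SIZE = 1000

    def __init__(self, n_pages: int, n_frames: int, disk: dict[int, str]) -> None:
        self.ptes = [PTE() for _ in range(n_pages)]
        self.frames: list[Optional[str]] = [None] * n_frames
        self.free_frames = deque(range(n_frames))
        self.loaded: deque[int] = deque()  # load order, for the FIFO placeholder
        self.disk = disk
        self.faults = self.writebacks = 0

    def _evict(self) -> int:
        """Choose a victim, write it back if dirty, mark it not loaded."""
        victim = self.loaded.popleft()     # replacement question: FIFO placeholder
        pte = self.ptes[victim]
        frame = pte.frame
        if pte.dirty:
            self.disk[victim] = self.frames[frame]
            self.writebacks += 1
        pte.valid, pte.frame, pte.dirty = False, None, False
        return frame

    def access(self, vaddr: int, write: bool = False) -> int:
        page, offset = divmod(vaddr, self.PAGE_SIZE)
        pte = self.ptes[page]
        if not pte.valid:                  # page fault
            self.faults += 1
            frame = self.free_frames.popleft() if self.free_frames else self._evict()
            self.frames[frame] = self.disk[page]  # copy the page in from disk
            pte.valid, pte.frame, pte.dirty = True, frame, False
            self.loaded.append(page)
        if write:
            pte.dirty = True
        return pte.frame * self.PAGE_SIZE + offset  # (p#,off)-->(f#,off)

    def daemon(self, low_water: int) -> None:
        """Page out victims until at least low_water frames are free."""
        while len(self.free_frames) < low_water and self.loaded:
            self.free_frames.append(self._evict())
```

With two frames and three pages, the third distinct page forces an eviction; evicting a page that was written costs an extra write-back, which the dirty bit lets the OS skip for clean pages. Calling `daemon` between faults keeps a free frame ready so the fault path rarely has to evict.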

Homework: 12.

4.3.2: Page tables

A discussion of page tables is also appropriate for (non-demand) paging, but the issues are more acute with demand paging since the tables can be much larger. Why?

- The total size of the active processes is no longer limited to the size of physical memory. Since the total size of the processes is greater, the total size of the page tables is greater and hence concerns over the size of the page table are more acute.
- With demand paging an important question is the choice of a victim page to page out. Data in the page table can be useful in this choice.

We must be able to access the page table very quickly since it is needed for every memory access.

Unfortunate laws of hardware.

- Big and fast are essentially incompatible.
- Big and fast and low cost is hopeless.

So we can't just say, put the page table in fast processor registers, and let it be huge, and sell the system for $1000.

The simplest solution is to put the page table in main memory. However it seems to be both too slow and too big.

-

Seems too slow since all memory references require two reference.

-

This can be largely repaired by using a TLB, which is fast

and, although small, often captures almost all references to

the page table.

-

For this course, officially TLBs “do not exist”,

that is if you are asked to perform a translation, you should

assume there is no TLB.

-

Nonetheless we will discuss them below and in reality they

very much do exist.

-

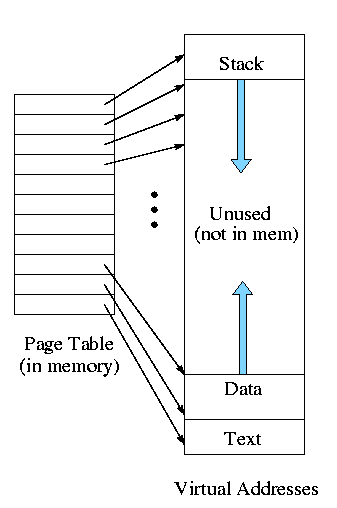

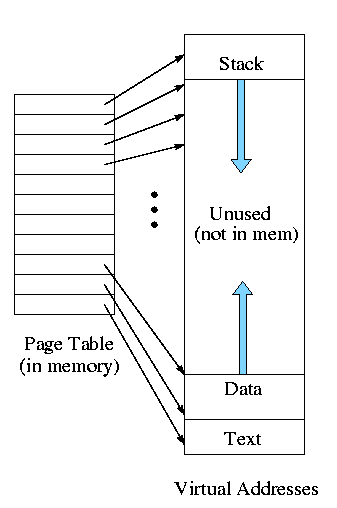

- The page table might be too big.
  - Currently we are considering contiguous virtual address ranges (i.e. the virtual addresses have no holes).
  - Typically put the stack at one end of the virtual address space and the global (or static) data at the other end and let them grow towards each other.
  - The memory in between is unused.
  - This unused virtual memory can be huge (in address range) and hence the page table will mostly contain unneeded PTEs.
  - Works fine if the maximum virtual address size is small, which was once true (e.g., the PDP-11 of the 1970s) but is no longer the case.
  - The “fix” is to use multiple levels of mapping. We will see two examples below: two-level paging and segmentation plus paging.
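The size problem is easy to quantify. The sketch assumes a 32-bit virtual address, 4 KB pages and 4-byte PTEs (typical values, not taken from these notes) and compares a flat table with a two-level table split 10/10/12, for a process whose data sits at the bottom of the address space and whose stack sits at the top. The function names are hypothetical.

```python
def single_level_bytes(va_bits: int, page_bits: int, pte_bytes: int) -> int:
    """Flat page table: one PTE for every virtual page, used or not."""
    return (1 << (va_bits - page_bits)) * pte_bytes

def two_level_bytes(used_pages: set[int], va_bits: int, page_bits: int,
                    l2_bits: int, pte_bytes: int) -> int:
    """A full top-level table plus only the second-level tables that
    cover at least one used page."""
    top_entries = 1 << (va_bits - page_bits - l2_bits)
    needed_l2 = {page >> l2_bits for page in used_pages}
    return (top_entries + len(needed_l2) * (1 << l2_bits)) * pte_bytes

# Data in the lowest 256 pages, stack in the highest 16 pages, nothing between.
n_pages = 1 << (32 - 12)
used = set(range(256)) | set(range(n_pages - 16, n_pages))
flat = single_level_bytes(32, 12, 4)              # 4 MB per process
two_level = two_level_bytes(used, 32, 12, 10, 4)  # 12 KB per process
```

With a small (say 16-bit) address space, even the flat table is tiny, which is why the problem only appeared as address spaces grew.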

Contents of a PTE

Each page has a corresponding page table entry (PTE). The information in a PTE is for use by the hardware. Information set by and used by the OS is normally kept in other OS tables. The page table format is determined by the hardware, so access routines are not portable. The following fields are often present.

- The valid bit. This tells if the page is currently loaded (i.e., is in a frame). If set, the frame number is valid. It is also called the presence or presence/absence bit. If a page is accessed with the valid bit unset, a page fault is generated by the hardware.
- The frame number. This is the main reason for the table. It gives the virtual to physical address translation.
- The modified bit (also called the dirty bit). Indicates that some part of the page has been written since it was loaded. This is needed if the page is evicted so that the OS can tell if the page must be written back to disk.
- The referenced bit. Indicates that some word in the page has been referenced. Used to select a victim: unreferenced pages make good victims by the locality property (discussed below).
- Protection bits. For example one can mark text pages as execute only. This requires that boundaries between regions with different protection are on page boundaries. Normally many consecutive (in logical address) pages have the same protection, so many page protection bits are redundant. Protection is more naturally done with segmentation.
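To make the fields concrete, here is a PTE packed into an integer with an invented layout: valid in bit 0, modified in bit 1, referenced in bit 2, read/write/execute protection in bits 3 to 5, frame number from bit 12 up. This layout is hypothetical and matches no particular hardware; real formats differ, which is exactly why PTE access routines are not portable. The `access` function mimics what the hardware would do on each reference.

```python
# Hypothetical PTE layout, for illustration only.
VALID, MODIFIED, REFERENCED = 1 << 0, 1 << 1, 1 << 2
PROT_R, PROT_W, PROT_X = 1 << 3, 1 << 4, 1 << 5
FRAME_SHIFT = 12

def make_pte(frame: int, flags: int) -> int:
    return (frame << FRAME_SHIFT) | flags

def frame_of(pte: int) -> int:
    return pte >> FRAME_SHIFT

def access(pte: int, kind: str) -> tuple[int, int]:
    """Check a 'r', 'w' or 'x' access; return (frame, PTE with bits updated)."""
    if not pte & VALID:
        raise LookupError("page fault")          # hardware traps to the OS
    needed = {"r": PROT_R, "w": PROT_W, "x": PROT_X}[kind]
    if not pte & needed:
        raise PermissionError("protection violation")
    pte |= REFERENCED                            # set on every access
    if kind == "w":
        pte |= MODIFIED                          # page must be written back if evicted
    return frame_of(pte), pte
```

An execute-only text page can be executed but not written, and a write to a data page sets its modified bit.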
