Operating Systems

================ Start Lecture #7 ================

Note:

The department would like to know how you feel about a recitation being added the same way as in Fundamental Algorithms and Programming Languages. It would be 1 hour per week, probably on a different evening (but surely on an evening).

3.5: Deadlock Avoidance

Let's see if we can tiptoe through the tulips and avoid deadlock states even though our system does permit all four of the necessary conditions for deadlock.

An optimistic resource manager is one that grants every request as soon as it can. To avoid deadlocks with all four conditions present, the manager must be smart, not optimistic.

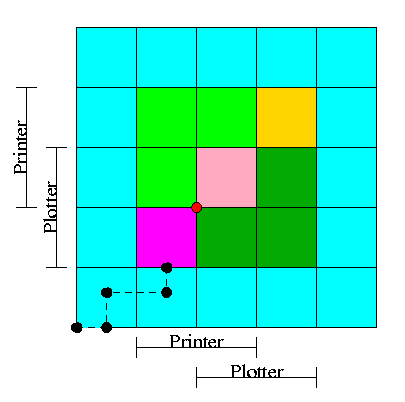

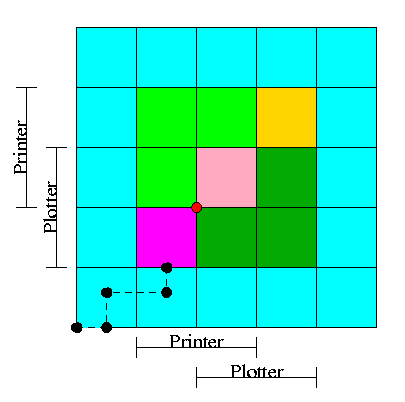

3.5.1: Resource Trajectories

We plot the progress of each process along an axis. In the example we show, there are two processes, hence two axes, so the picture is planar.

This procedure assumes that we know the entire request and release pattern of the processes in advance, so it is not a practical solution. I present it as motivation for the practical solution that follows, the Banker's Algorithm.

- We have two processes H (horizontal) and V.
- The origin represents them both starting.
- Their combined state is a point on the graph.
- The parts where the printer and plotter are needed by each process are indicated.
- The dark green is where both processes have the plotter and hence execution cannot reach this point.
- Light green represents both having the printer; also impossible.
- Pink is both having both printer and plotter; impossible.
- Gold is possible (H has plotter, V has printer), but the system can't get there.
- The upper right corner is the goal; both processes have finished.
- The red dot is ... (cymbals) deadlock. We don't want to go there.
- The cyan is safe. From anywhere in the cyan we have horizontal and vertical moves to the finish point (the upper right corner) without hitting any impossible area.
- The magenta interior is very interesting. It is
  - Possible: each process has a different resource.
  - Not deadlocked: each process can move within the magenta.
  - Deadly: deadlock is unavoidable. You will hit a magenta-green boundary and then will have no choice but to turn and go to the red dot.
- The cyan-magenta border is the danger zone.
- The dashed line represents a possible execution pattern.
- With a uniprocessor no diagonals are possible. We either move to the right, meaning H is executing, or move up, indicating V is executing.
- The trajectory shown represents:
  - H executing a little.
  - V executing a little.
  - H executes; requests the printer; gets it; executes some more.
  - V executes; requests the plotter.
- The crisis is at hand!
- If the resource manager gives V the plotter, the magenta has been entered and all is lost. “Abandon all hope ye who enter here” --Dante.
- The right thing to do is to deny the request and let H execute, moving horizontally under the magenta and dark green. At the end of the dark green, no danger remains; both processes will complete successfully. Victory!
- This procedure is not practical for a general purpose OS since it requires knowing the programs in advance. That is, the resource manager knows in advance what requests each process will make and in what order.

Homework: 10, 11, 12.

3.5.2: Safe States

Avoiding deadlocks given some extra knowledge.

- Not surprisingly, the resource manager knows how many units of each resource it had to begin with.
- Also it knows how many units of each resource it has given to each process.
- It would be great to see all the programs in advance and thus know all future requests, but that is asking for too much.
- Instead, when each process starts, it announces its maximum usage. That is, each process, before making any resource requests, tells the resource manager the maximum number of units of each resource the process could possibly need. This is called the claim of the process.
- If the claim is greater than the total number of units in the system, the resource manager kills the process when receiving the claim (or returns an error code so that the process can make a new claim).
- If during the run the process asks for more than its claim, the process is aborted (or an error code is returned and no resources are allocated).
- If a process claims more than it needs, the result is that the resource manager will be more conservative than need be and there will be more waiting.

Definition: A state is safe if there is an ordering of the processes such that, if the processes are run in this order, they will all terminate (assuming none exceeds its claim).

Recall the comparison made above between detecting deadlocks (with multi-unit resources) and the banker's algorithm:

- The deadlock detection algorithm given makes the most optimistic assumption about a running process: it will return all its resources and terminate normally. If we still find processes that remain blocked, they are deadlocked.
- The banker's algorithm makes the most pessimistic assumption about a running process: it immediately asks for all the resources it can (details later on “can”). If, even with such demanding processes, the resource manager can assure that all processes terminate, then we can assure that deadlock is avoided.

In the definition of a safe state no assumption is made about the running processes; that is, for a state to be safe, termination must occur no matter what the processes do (provided they all terminate and do not exceed their claims). Making no assumption is the same as making the most pessimistic assumption.

Give an example of each of the four possibilities. A state that is

- Safe and deadlocked--not possible.
- Safe and not deadlocked--trivial (e.g., no arcs).
- Not safe and deadlocked--easy (any deadlocked state).
- Not safe and not deadlocked--interesting.

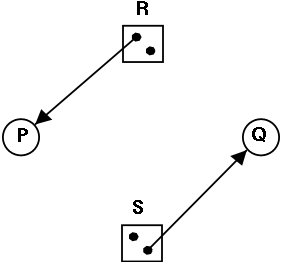

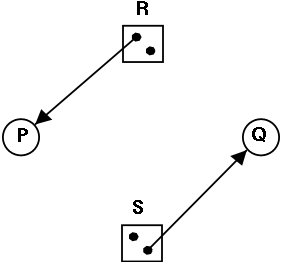

Is the figure on the right safe or not?


- You can NOT tell until I give you the initial claims of the processes.
- Please do not make the unfortunately common exam mistake of giving an example involving safe states without giving the claims.
- For the figure on the right, if the initial claims are
  - P: 1 unit of R and 2 units of S (written (1,2)),
  - Q: 2 units of R and 1 unit of S (written (2,1)),

  then the state is NOT safe.
- But if the initial claims are instead
  - P: 2 units of R and 1 unit of S (written (2,1)),
  - Q: 1 unit of R and 2 units of S (written (1,2)),

  then the state IS safe.
- Explain why this is so.

A manager can determine if a state is safe.

- Since the manager knows all the claims, it can determine the maximum amount of additional resources each process can request.
- The manager knows how many units of each resource it has left.

The manager then follows the following procedure, which is part of the Banker's Algorithm discovered by Dijkstra, to determine if the state is safe.

- If there are no processes remaining, the state is safe.
- Seek a process P whose max additional request is at most what remains (for each resource type).
  - If no such process can be found, then the state is not safe.
  - The banker (manager) knows that if it refuses all requests except those from P, then it will be able to satisfy all of P's requests. Why? Ans: Look at how P was chosen.
- The banker now pretends that P has terminated (since the banker knows that it can guarantee this will happen). Hence the banker pretends that all of P's currently held resources are returned. This makes the banker richer and hence perhaps a process that was not eligible to be chosen as P previously can now be chosen.
- Repeat these steps.
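The safety check just described can be sketched in Python for a single resource type. This is a minimal sketch under stated assumptions: the function name `safe_sequence` and its dictionary arguments are made up for illustration, and it is not meant as the Lab 3 solution. Since letting a process finish can only make the banker richer, it does not matter which eligible process is picked at each step; the sketch takes the alphabetically first one so the output is deterministic.

```python
from typing import Dict, List, Optional

def safe_sequence(available: int, claim: Dict[str, int],
                  alloc: Dict[str, int]) -> Optional[List[str]]:
    """Single-resource safety check (sketch).

    available  units the manager has left
    claim[p]   initial claim of process p
    alloc[p]   units p currently holds
    Returns an order in which every process can finish, or None if unsafe.
    """
    free = available
    remaining = set(claim)
    order: List[str] = []
    while remaining:
        # Seek a process whose max additional need does not exceed what remains.
        candidates = [p for p in sorted(remaining) if claim[p] - alloc[p] <= free]
        if not candidates:
            return None          # no such process: the state is not safe
        p = candidates[0]
        # Grant p its max additional need, then get its whole claim back:
        # the net effect is that the banker gains what p holds now.
        free += alloc[p]
        remaining.remove(p)
        order.append(p)
    return order

claim = {"X": 3, "Y": 11, "Z": 19}
print(safe_sequence(6, claim, {"X": 1, "Y": 5, "Z": 10}))   # ['X', 'Y', 'Z']
print(safe_sequence(4, claim, {"X": 1, "Y": 5, "Z": 12}))   # None
```

The two calls use the numbers of Example 1 and Example 2: the first state is safe with order X, Y, Z; in the second, X can finish but then neither Y nor Z fits, so the result is None.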

Example 1

A safe state with 22 units of one resource

| process | initial claim | current alloc | max add'l |
|---|---|---|---|
| X | 3 | 1 | 2 |
| Y | 11 | 5 | 6 |
| Z | 19 | 10 | 9 |
| Total | | 16 | |
| Available | | 6 | |

- One resource type R with 22 units.
- Three processes X, Y, and Z with initial claims 3, 11, and 19 respectively.
- Currently the processes have 1, 5, and 10 units respectively.
- Hence the manager currently has 6 units left.
- Also note that the max additional needs for the processes are 2, 6, and 9 respectively.
- So the manager cannot assure (with its current remaining supply of 6 units) that Z can terminate. But that is not the question.
- This state is safe:
  - Use 2 units to satisfy X; when X finishes it returns all 3 of its units, so now the manager has 7 units.
  - Use 6 units to satisfy Y; when Y finishes and returns its 11 units, the manager has 12 units.
  - Use 9 units to satisfy Z; done!

Example 2

An unsafe state with 22 units of one resource

| process | initial claim | current alloc | max add'l |
|---|---|---|---|
| X | 3 | 1 | 2 |
| Y | 11 | 5 | 6 |
| Z | 19 | 12 | 7 |
| Total | | 18 | |
| Available | | 4 | |

Start with Example 1 and assume that Z now requests 2 units and the manager grants them.

- Currently the processes have 1, 5, and 12 units respectively.
- The manager has 4 units.
- The max additional needs are 2, 6, and 7.
- This state is unsafe:
  - Use 2 units to satisfy X; when X finishes and returns its 3 units, the manager has 5 units.
  - Y needs 6 and Z needs 7, so we can't guarantee satisfying either.
- Note that we were able to find a process that can terminate (X) but then we were stuck. So it is not enough to find one process. We must find a sequence of all the processes.

Remark: An unsafe state is not necessarily a deadlocked state. Indeed, if one gets lucky, all processes in an unsafe state may terminate successfully.

A safe state means that the manager can guarantee that no deadlock will occur.

3.5.3: The Banker's Algorithm (Dijkstra) for a Single Resource

The algorithm is simple: Stay in safe states. Initially, we assume all the processes are present before execution begins and that all initial claims are given before execution begins. We will relax these assumptions very soon.

- Before execution begins, check that the system is safe, that is, check that no process claims more than the manager has. If some process does, then it is trying to claim more of some resource than exists in the system and hence cannot be guaranteed to complete even if run by itself. You might say that it can become deadlocked all by itself.
- When the manager receives a request, it pretends to grant it and checks if the resulting state is safe. If it is safe, the request is granted; if not, the process is blocked.
- When a resource is returned, the manager (politely thanks the process and then) checks to see if “the first” pending request can be granted (i.e., if the result would now be safe). If so, the request is granted. The manager then checks to see if the next pending request can be granted, etc.
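The three rules fit together into a small single-resource banker. This is a hedged sketch, not a reference implementation: the class `Banker` and its method names are hypothetical, and it assumes one reading of “the first” pending request, namely that on every release the manager rescans the pending requests oldest first and grants each one that is now safe, leaving the rest blocked.

```python
from typing import Dict, List, Tuple

class Banker:
    """Single-resource banker (sketch): grant a request only if the result is safe."""

    def __init__(self, total: int, claim: Dict[str, int]) -> None:
        # A claim larger than the whole system can never be guaranteed.
        if any(c > total for c in claim.values()):
            raise ValueError("a claim exceeds the units in the system")
        self.claim = dict(claim)
        self.alloc = {p: 0 for p in claim}
        self.free = total
        self.pending: List[Tuple[str, int]] = []   # blocked requests, oldest first

    def is_safe(self) -> bool:
        free, remaining = self.free, set(self.claim)
        while remaining:
            # Any process whose max additional need fits can be run to the end.
            p = next((q for q in remaining
                      if self.claim[q] - self.alloc[q] <= free), None)
            if p is None:
                return False
            free += self.alloc[p]        # pretend p finishes and returns all
            remaining.remove(p)
        return True

    def _try_grant(self, p: str, n: int) -> bool:
        if n > self.free:
            return False
        self.free -= n                   # pretend to grant ...
        self.alloc[p] += n
        if self.is_safe():
            return True
        self.free += n                   # ... and undo if the result is unsafe
        self.alloc[p] -= n
        return False

    def request(self, p: str, n: int) -> bool:
        """Return True if granted now, False if p is blocked."""
        if self.alloc[p] + n > self.claim[p]:
            raise ValueError(f"{p} would exceed its claim")
        if self._try_grant(p, n):
            return True
        self.pending.append((p, n))
        return False

    def release(self, p: str, n: int) -> List[Tuple[str, int]]:
        """Return n units held by p; report which pending requests got granted."""
        if n > self.alloc[p]:
            raise ValueError(f"{p} does not hold {n} units")
        self.alloc[p] -= n
        self.free += n
        granted: List[Tuple[str, int]] = []
        still_blocked: List[Tuple[str, int]] = []
        for q, m in self.pending:        # oldest request first
            if self._try_grant(q, m):
                granted.append((q, m))
            else:
                still_blocked.append((q, m))
        self.pending = still_blocked
        return granted
```

Replaying the examples above: after X, Y and Z request 1, 5 and 10 units (Example 1), Z's request for 2 more is refused because it would produce the unsafe state of Example 2. If Y later returns its 5 units, Z's pending request becomes safe and is granted by `release`.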

Homework: 13.

3.5.4: The Banker's Algorithm for Multiple Resources

At a high level the algorithm is identical: Stay in safe states.

- What is a safe state?
- The same definition (if processes are run in a certain order they will all terminate).
- Checking for safety is the same idea as above. The difference is that to tell if there are enough free resources for a process to terminate, the manager must check that, for all resources, the number of free units is at least equal to the max additional need of the process.
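For several resource types the only change is that “enough” is checked for every type at once. The sketch below assumes the state is given as vectors indexed by resource type; the name `safe_order`, its parameter names, and the numbers in the usage lines are made up for illustration.

```python
from typing import List, Optional, Sequence

def safe_order(available: Sequence[int],
               max_additional: Sequence[Sequence[int]],
               allocated: Sequence[Sequence[int]]) -> Optional[List[int]]:
    """Multi-resource safety check (sketch).

    available[j]          free units of resource type j
    max_additional[i][j]  most that process i may still request of type j
    allocated[i][j]       units of type j that process i holds now
    Returns a safe order of process indices, or None if the state is unsafe.
    """
    free = list(available)
    finished = [False] * len(allocated)
    order: List[int] = []
    progress = True
    while progress:
        progress = False
        for i in range(len(allocated)):
            # Enough only if EVERY resource type covers the max additional need.
            if not finished[i] and all(
                    need <= f for need, f in zip(max_additional[i], free)):
                free = [f + a for f, a in zip(free, allocated[i])]
                finished[i] = True
                order.append(i)
                progress = True
    return order if all(finished) else None

print(safe_order([1, 1], [[1, 1], [2, 0]], [[1, 0], [0, 1]]))   # [0, 1]
print(safe_order([1, 1], [[1, 2], [2, 0]], [[1, 0], [0, 1]]))   # None
```

In the second call each process is short of a different resource type (process 0 needs 2 of the second type, process 1 needs 2 of the first), so no process can be chosen and the state is unsafe.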

Limitations of the banker's algorithm

- Often users don't know the maximum requests a process will make. They can estimate conservatively (i.e., use big numbers for the claim) but then the manager becomes very conservative.
- New processes arriving cause a problem (but not so bad as Tanenbaum suggests).
  - The process's claim must not exceed the total number of units of the resource in the system. If it does, the process is not accepted by the manager.
  - Since the state without the new process is safe, so is the state with the new process! Just use the order you had originally and put the new process at the end.
  - Ensuring fairness (starvation freedom) needs a little more work, but isn't too hard either (once an hour stop taking new processes until all current processes finish).
- A resource can become unavailable (e.g., a tape drive might break). This can result in an unsafe state.

Homework: 21, 27, and 20. There is an interesting typo in 20: A has claimed 3 units of resource 5, but there are only 2 units in the entire system. Change the problem by having B both claim and be allocated 1 unit of resource 5.

3.7: Other Issues

3.7.1: Two-phase locking

This is covered (MUCH better) in a database text. We will skip it.

3.7.2: Non-resource deadlocks

You can get deadlock from semaphores as well as resources. This is trivial. Semaphores can be considered resources. P(S) is request S and V(S) is release S. The manager is the module implementing P and V. When the manager returns from P(S), it has granted the resource S.
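To see semaphores behaving exactly like resources, the following sketch uses Python's `threading.Semaphore`, with `acquire` playing the role of P(S) and `release` the role of V(S). Two threads each get one semaphore and then ask for the other, giving a circular wait. A real P would wait forever, so the sketch passes a timeout to `acquire` only so that the demo terminates; the function name `semaphore_deadlock_demo` is hypothetical.

```python
import threading
from typing import Dict

def semaphore_deadlock_demo(timeout: float = 0.2) -> Dict[str, bool]:
    """Return, for each thread, whether its second P() succeeded."""
    s1 = threading.Semaphore(1)
    s2 = threading.Semaphore(1)
    sync = threading.Barrier(2)      # reusable rendezvous for the two threads
    results: Dict[str, bool] = {}

    def worker(name: str, first: threading.Semaphore,
               second: threading.Semaphore) -> None:
        first.acquire()                          # P(first): granted
        sync.wait()                              # now each thread holds one
        got = second.acquire(timeout=timeout)    # P(second): circular wait
        results[name] = got
        sync.wait()                              # nobody releases until both tried
        if got:
            second.release()
        first.release()                          # V(first)

    threads = [threading.Thread(target=worker, args=("A", s1, s2)),
               threading.Thread(target=worker, args=("B", s2, s1))]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return results

print(semaphore_deadlock_demo())
```

Both second requests time out, so each thread reports False: neither could proceed without the semaphore the other holds, which is the deadlock a plain P would produce.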

3.7.3: Starvation

As usual, FCFS is a good cure. Often this is done by priority aging and picking the highest priority process to get the resource. The manager can also periodically stop accepting new processes until all old ones get their resources.

3.8: Research on Deadlocks

Skipped.

3.9: Summary

Read.

Chapter 4: Memory Management

Also called storage management or space management.

Memory management must deal with the storage hierarchy present in modern machines.

- Registers, cache, central memory, disk, tape (backup).
- Move data from level to level of the hierarchy.
- How should we decide when to move data up to a higher level?
  - Fetch on demand (e.g., demand paging, which is dominant now).
  - Prefetch
    - Read-ahead for file I/O.
    - Large cache lines and pages.
    - Extreme example. Entire job present whenever running.

We will see in the next few weeks that there are three independent decisions:

- Segmentation (or no segmentation)
- Paging (or no paging)
- Fetch on demand (or no fetching on demand)

Memory management implements address translation.

- Convert virtual addresses to physical addresses.
  - Also called logical to real address translation.
  - A virtual address is the address expressed in the program.
  - A physical address is the address understood by the computer hardware.
- The translation from virtual to physical addresses is performed by the Memory Management Unit (MMU).
- Another example of address translation is the conversion of relative addresses to absolute addresses by the linker.
- The translation might be trivial (e.g., the identity) but not in a modern general purpose OS.
- The translation might be difficult (i.e., slow).
  - Often includes addition/shifts/mask--not too bad.
  - Often includes memory references.
    - VERY serious.
    - Solution is to cache translations in a Translation Lookaside Buffer (TLB). Sometimes called a translation buffer (TB).

Homework: 6.

When is address translation performed?

- At compile time
  - Compiler generates physical addresses.
  - Requires knowledge of where the compilation unit will be loaded.
  - No linker.
  - Loader is trivial.
  - Primitive.
  - Rarely used (MS-DOS .COM files).
- At link-edit time (the “linker lab”)
  - Compiler
    - Generates relative (a.k.a. relocatable) addresses for each compilation unit.
    - References external addresses.
  - Linkage editor
    - Converts the relocatable addresses to absolute.
    - Resolves external references.
    - Misnamed ld by Unix.
    - Must also convert virtual to physical addresses by knowing where the linked program will be loaded. Linker lab “does” this, but it is trivial since we assume the linked program will be loaded at 0.
  - Loader is still trivial.
  - Hardware requirements are small.
  - A program can be loaded only where specified and cannot move once loaded.
  - Not used much any more.
- At load time
  - Similar to at link-edit time, but do not fix the starting address.
  - Program can be loaded anywhere.
  - Program can move but cannot be split.
  - Need modest hardware: base/limit registers.
  - Loader sets the base/limit registers.
  - No longer common.
- At execution time
  - Addresses translated dynamically during execution.
  - Hardware needed to perform the virtual to physical address translation quickly.
  - Currently dominates.
  - Much more information later.
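One way to picture relocation performed once the load address is known is a loader that patches every address in the program image. In this hypothetical sketch the linker has produced an image assuming a load address of 0 (as in linker lab) together with a list of which words hold addresses; the function name `relocate` and that relocation-list format are assumptions. The alternative mentioned in these notes, a base register, lets the hardware do the adding instead.

```python
from typing import List, Sequence

def relocate(image: Sequence[int], reloc_offsets: Sequence[int],
             load_address: int) -> List[int]:
    """Return a copy of image with every relocatable word adjusted.

    image: words produced by the linker assuming the program starts at 0.
    reloc_offsets: positions of the words that hold addresses (hypothetical
    relocation list); opcodes and constants are left untouched.
    """
    loaded = list(image)                 # do not modify the linker's output
    for i in reloc_offsets:
        loaded[i] += load_address        # relative address -> absolute address
    return loaded

# A 4-word toy program: words 1 and 3 are addresses, word 2 is the constant 42.
print(relocate([7, 3, 42, 1], [1, 3], 1000))   # [7, 1003, 42, 1001]
```

Only the listed words change, which is why the linker must record which words are addresses: the constant 42 must not be moved.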

Extensions

- Dynamic Loading
  - When executing a call, check if module is loaded.
  - If not loaded, call linking loader to load it and update tables.
  - Slows down calls (indirection) unless you rewrite code dynamically.
  - Not used much.
- Dynamic Linking
  - The traditional linking described above is today often called static linking.
  - With dynamic linking, frequently used routines are not linked into the program. Instead, just a stub is linked.
  - When the routine is called, the stub checks to see if the real routine is loaded (it may have been loaded by another program).
    - If not loaded, load it.
    - If already loaded, share it. This needs some OS help so that different jobs sharing the library don't overwrite each other's private memory.
  - Advantages of dynamic linking.
    - Saves space: Routine only in memory once even when used many times.
    - Bug fix to dynamically linked library fixes all applications that use that library, without having to relink the application.
  - Disadvantages of dynamic linking.
    - New bugs in dynamically linked library infect all applications.
    - Applications “change” even when they haven't changed.
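The stub idea can be imitated in Python. This is only an analogy under stated assumptions: `LazyStub` is a hypothetical class, and Python's module cache (`importlib.import_module` returns the module from `sys.modules` if it is already loaded) stands in for “already loaded, share it”. Real dynamic linking is done by the OS and loader across processes, which this sketch does not model.

```python
import importlib
from typing import Any, Callable, Optional

class LazyStub:
    """Hypothetical stand-in for a dynamic-linking stub."""

    def __init__(self, module_name: str, routine_name: str) -> None:
        self.module_name = module_name
        self.routine_name = routine_name
        self._target: Optional[Callable[..., Any]] = None   # not resolved yet

    def __call__(self, *args: Any, **kwargs: Any) -> Any:
        if self._target is None:
            # First call: find the real routine. import_module reuses the
            # module if it is already loaded, otherwise it loads it now.
            module = importlib.import_module(self.module_name)
            self._target = getattr(module, self.routine_name)
        # Later calls go straight to the real routine.
        return self._target(*args, **kwargs)

sqrt = LazyStub("math", "sqrt")
print(sqrt(16.0))
```

Nothing is looked up when the stub is created; the first call resolves the routine and caches it, so later calls pay only the extra indirection.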

Note: I will place ** before each memory management scheme.

NOTE: Lab 3 (banker's algorithm) will be assigned next week and due two NYU weeks (three calendar weeks) later.

4.1: Basic Memory Management (Without Swapping or Paging)

Entire process remains in memory from start to finish and does not move. The sum of the memory requirements of all jobs in the system cannot exceed the size of physical memory.

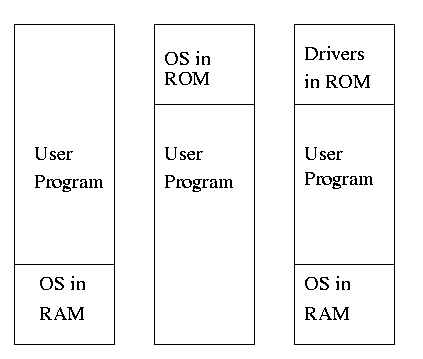

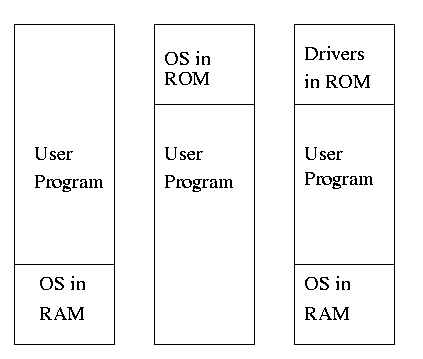

** 4.1.1: Monoprogramming without swapping or paging (Single User)

The “good old days” when everything was easy.

- No address translation done by the OS (i.e., address translation is not performed dynamically during execution).
- Either reload the OS for each job (or don't have an OS, which is almost the same), or protect the OS from the job.
  - One way to protect (part of) the OS is to have it in ROM.
  - Of course, must have the OS (read-write) data in RAM.
  - Can have a separate OS address space only accessible in supervisor mode.
  - Might just put some drivers in ROM (BIOS).
- The user employs overlays if the memory needed by a job exceeds the size of physical memory.
  - Programmer breaks program into pieces.
  - A “root” piece is always memory resident.
  - The root contains calls to load and unload various pieces.
  - Programmer's responsibility to ensure that a piece is already loaded when it is called.
  - No longer used, but we couldn't have gotten to the moon in the 60s without it (I think).
  - Overlays have been replaced by dynamic address translation and other features (e.g., demand paging) that have the system support logical address sizes greater than physical address sizes.
  - Fred Brooks (leader of IBM's OS/360 project and author of “The Mythical Man-Month”) remarked that the OS/360 linkage editor was terrific, especially in its support for overlays, but by the time it came out, overlays were no longer used.

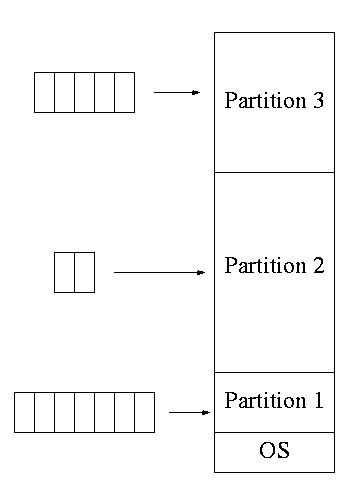

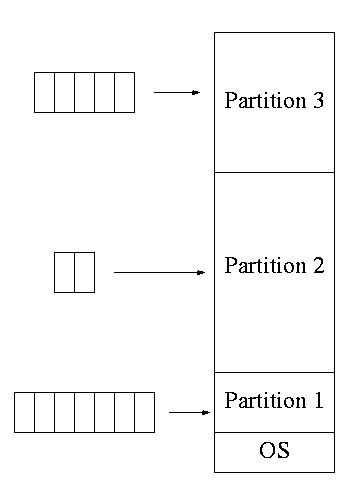

** 4.1.2: Multiprogramming with fixed partitions

Two goals of multiprogramming are to improve CPU utilization, by overlapping CPU and I/O, and to permit short jobs to finish quickly.

- This scheme was used by IBM for System/360 OS/MFT (multiprogramming with a fixed number of tasks).
- Can have a single input queue instead of one for each partition.
  - So that if there are no big jobs, one can use the big partition for little jobs.
  - But I don't think IBM did this.
- Can think of the input queue(s) as the ready list(s) with a scheduling policy of FCFS in each partition.
- Each partition was monoprogrammed; the multiprogramming occurred across partitions.
- The partition boundaries are not movable (must reboot to move a job).
  - So the partitions are of fixed size.
- MFT can have large internal fragmentation, i.e., wasted space inside a region of memory assigned to a process.
- Each process has a single “segment” (i.e., the virtual address space is contiguous). We will discuss segments later.
- The physical address space is also contiguous (i.e., the program is stored as one piece).
- No sharing of memory between processes.
- No dynamic address translation.
- At load time must “establish addressability”.
  - That is, must set a base register to the location at which the process was loaded (the bottom of the partition).
  - The base register is part of the programmer visible register set.
  - This is an example of address translation during load time.
  - Also called relocation.
- Storage keys are adequate for protection (IBM method).
- Alternative protection method is base/limit registers.
- An advantage of base/limit is that it is easier to move a job.
- But MFT didn't move jobs so this disadvantage of storage keys is moot.
- Tanenbaum says a job was “run until it terminates.” This must be wrong as that would mean monoprogramming.
- He probably means that jobs were not swapped out and each queue is FCFS without preemption.
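Internal fragmentation with a single input queue can be made concrete with a tiny simulation. This is a hypothetical sketch, not how OS/MFT was implemented: the function `place_fcfs`, the choice of the smallest free partition that fits, and the sizes in the usage are all assumptions made for illustration.

```python
from typing import Dict, List, Sequence, Tuple

def place_fcfs(partitions: Sequence[int],
               queue: Sequence[Tuple[str, int]]
               ) -> Tuple[Dict[str, int], Dict[str, int]]:
    """Place jobs from a single FCFS queue into fixed partitions (sketch).

    partitions[i] is the size of partition i; queue holds (job, size) pairs.
    Assumed policy: the head job goes into the smallest free partition that
    can hold it; if none can, placement stops (later jobs may not pass it).
    Returns (job -> partition index, job -> internal fragmentation).
    """
    free: List[int] = sorted(range(len(partitions)), key=lambda i: partitions[i])
    placement: Dict[str, int] = {}
    waste: Dict[str, int] = {}
    for job, size in queue:
        fit = next((i for i in free if partitions[i] >= size), None)
        if fit is None:
            break                             # head of queue blocks the rest
        free.remove(fit)
        placement[job] = fit
        waste[job] = partitions[fit] - size   # unused space inside the partition
    return placement, waste

placement, waste = place_fcfs([100, 200, 400],
                              [("A", 150), ("B", 50), ("C", 300), ("D", 20)])
print(placement, waste)
```

A lands in the 200-unit partition, B in the 100-unit one and C in the 400-unit one; D must wait even though it is tiny, while 200 units sit unused inside the occupied partitions.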

4.1.3: Modeling Multiprogramming (crudely)

- Consider a job that is unable to compute (i.e., it is waiting for I/O) a fraction $p$ of the time.
- Then, with monoprogramming, the CPU utilization is $1-p$.
- Note that $p$ is often $> 0.5$ so CPU utilization is poor.
- But, if the probability that a job is waiting for I/O is $p$ and $n$ jobs are in memory, then (treating the jobs' I/O waits as independent) the probability that all $n$ are waiting for I/O is approximately $p^n$.
- So, with a multiprogramming level (MPL) of $n$, the CPU utilization is approximately $1-p^n$.
- If $p=0.5$ and $n=4$, then $1-p^n = 15/16$, which is much better than $1/2$, which would occur for monoprogramming ($n=1$).
- This is a crude model, but it is correct that increasing MPL does increase CPU utilization up to a point.
- The limitation is memory, which is why we discuss it here instead of process management. That is, we must have many jobs loaded at once, which means we must have enough memory for them. There are other issues as well and we will discuss them.
- Some of the CPU utilization is time spent in the OS executing context switches, so the gains are not as great as the crude model predicts.
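The crude model is easy to tabulate. A minimal sketch: `cpu_utilization` is a hypothetical helper that simply evaluates $1-p^n$ under the model's independence assumption and ignores the context-switch overhead mentioned above.

```python
def cpu_utilization(p: float, n: int) -> float:
    """Crude model: 1 - p**n, treating each of the n jobs as independently
    waiting for I/O a fraction p of the time."""
    if not 0.0 <= p <= 1.0 or n < 1:
        raise ValueError("need 0 <= p <= 1 and n >= 1")
    return 1.0 - p ** n

for n in range(1, 6):
    print(n, cpu_utilization(0.5, n))
```

For $p = 0.5$ the loop prints 0.5, 0.75, 0.875, 0.9375 and 0.96875 for $n = 1$ through 5; the value for $n=4$ is the 15/16 computed above, and each extra job helps less than the previous one.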

Homework: 1, 2 (typo in book; figure 4.21 seems irrelevant).

4.1.4: Analysis of Multiprogramming System Performance

Skipped

4.1.5: Relocation and Protection

Relocation was discussed as part of linker lab and at the beginning of this chapter.

When done dynamically, a simple method is to have a base register whose value is added to every address by the hardware.

Similarly, a limit register is checked by the hardware to be sure that the address (before the base register is added) is less than the size of the program.

The base and limit registers are set by the OS when the job starts.
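What the hardware does on each memory reference can be sketched as a function. This is a minimal sketch under stated assumptions: `translate` and `AddressFault` are hypothetical names, addresses are assumed to run from 0 to limit-1, and the job size and load address in the usage are made up.

```python
class AddressFault(Exception):
    """Raised when a program address is outside [0, limit)."""

def translate(virtual: int, base: int, limit: int) -> int:
    """Dynamic relocation with base/limit registers (sketch).

    The limit check uses the address *before* the base is added;
    limit is the program size, so legal addresses are 0..limit-1.
    """
    if not 0 <= virtual < limit:
        raise AddressFault(f"address {virtual} not in [0, {limit})")
    return base + virtual

# Hypothetical job: 12000 bytes loaded at physical address 40000.
print(translate(100, base=40000, limit=12000))     # 40100
try:
    translate(12000, base=40000, limit=12000)
except AddressFault as e:
    print("trap:", e)
```

Because only the base register changes when the OS moves the job, the program itself never needs to be patched; this is the advantage of base/limit over storage keys noted for MFT.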
