Operating Systems

================ Start Lecture #24 ================

5.2.2: Programmed I/O

-

As mentioned just above, with programmed I/O

the processor moves the data between memory and the device.

-

How does the processor know when the device is ready to accept or

supply new data?

-

In the simplest implementation, the processor loops continually

asking the device. This is called polling or

busy waiting.

-

If we poll infrequently, there can be a significant delay between

when the I/O is complete and the OS uses the data or supplies new

data.

-

If we poll frequently and the device is (sometimes) slow, polling

is clearly wasteful, which leads us to ...
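Before moving on, the polling loop just described can be sketched as follows. This is only a toy model: `SimulatedDevice`, its `ready` and `read_datum` methods, and the fixed delay of three status checks are all invented for illustration and do not correspond to any real controller.

```python
class SimulatedDevice:
    """Hypothetical device: becomes ready after a fixed number of status checks."""

    def __init__(self, data, checks_until_ready=3):
        self.data = list(data)
        self.checks_until_ready = checks_until_ready
        self._countdown = checks_until_ready

    def ready(self):
        # Reading the "status register": ready only once the countdown expires.
        if self._countdown > 0:
            self._countdown -= 1
            return False
        return True

    def read_datum(self):
        # Reading the "data register" makes the device busy again.
        self._countdown = self.checks_until_ready
        return self.data.pop(0)


def polled_read(device, count):
    """Read `count` items by busy waiting; return (items, wasted_polls)."""
    items, wasted_polls = [], 0
    for _ in range(count):
        while not device.ready():   # busy wait: the processor does nothing useful
            wasted_polls += 1
        items.append(device.read_datum())
    return items, wasted_polls


items, wasted = polled_read(SimulatedDevice("abcd"), 4)
print(items, wasted)
```

The count of wasted polls grows with the slowness of the device, which is exactly the cost that motivates the next section.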

5.2.3: Interrupt-Driven (Programmed) I/O

-

The device interrupts the processor when it is ready.

-

An interrupt service routine then initiates transfer of the next

datum.

-

Normally better than polling, but not always. Interrupts are

expensive on modern machines.

-

To minimize interrupts, better controllers often employ ...

5.2.4: I/O Using DMA

-

We discussed DMA above.

-

An additional advantage of DMA, not mentioned above, is that the

processor is interrupted only at the end of a command, not after

each datum is transferred.

-

Many devices receive a character at a time, but with a DMA

controller, an interrupt occurs only after a buffer has been

transferred.
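To make the saving concrete, here is a small sketch that counts interrupts for the two schemes. The function names and the 512-character buffer size are assumptions chosen for illustration only.

```python
import math

def interrupts_per_character(num_chars):
    # Interrupt-driven I/O: one interrupt for each datum transferred.
    return num_chars

def interrupts_with_dma(num_chars, buffer_size):
    # DMA: one interrupt per completed buffer (the last buffer may be partial).
    return math.ceil(num_chars / buffer_size)

n = 4096
print(interrupts_per_character(n), interrupts_with_dma(n, 512))
```

With these assumed numbers the processor handles 8 interrupts instead of 4096.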

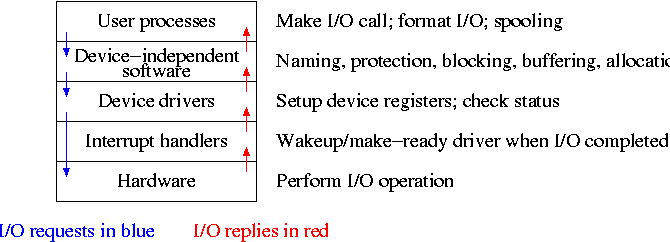

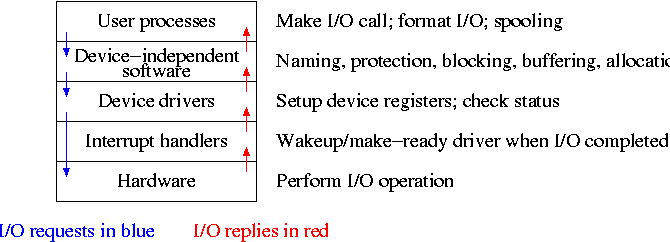

5.3: I/O Software Layers

Layers of abstraction as usual prove to be effective. Most systems

are believed to use the following layers (but for many systems, the OS

code is not available for inspection).

-

User-level I/O routines.

-

Device-independent (kernel-level) I/O software.

-

Device drivers.

-

Interrupt handlers.

We will give a bottom up explanation.

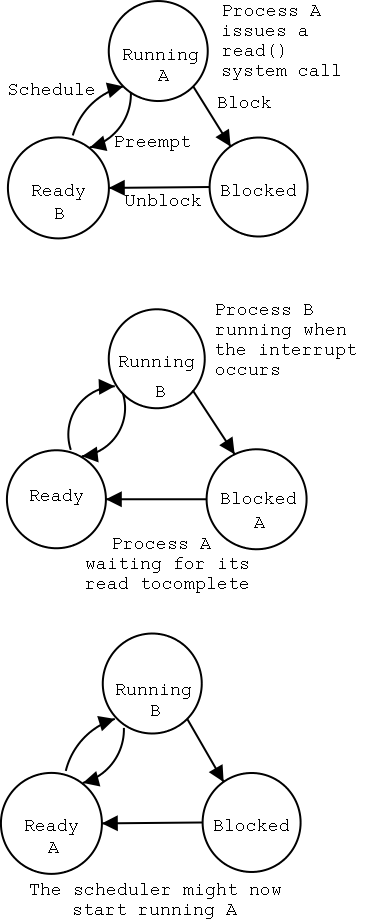

5.3.1: Interrupt Handlers

We discussed an interrupt handler before when studying page faults.

Then it was called “assembly language code”.

In the present case, we have a process blocked on I/O and the I/O

event has just completed. So the goal is to make the process ready.

Possible methods are:

-

Releasing a semaphore on which the process is waiting.

-

Sending a message to the process.

-

Inserting the process table entry onto the ready list.

Once the process is ready, it is up to the scheduler to decide when

it should run.
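The first method (releasing a semaphore) can be sketched with Python threads standing in for processes. This is an analogy rather than kernel code: `process_a`, `interrupt_handler`, and the `waiting` event are hypothetical names, and the event exists only to make the demonstration deterministic.

```python
import threading

io_done = threading.Semaphore(0)   # count 0: acquire() blocks until a release()
waiting = threading.Event()        # scaffolding: signals that A is about to block
log = []

def process_a():
    log.append("A: blocked on I/O")
    waiting.set()
    io_done.acquire()              # A sleeps here until the handler releases
    log.append("A: ready, resumes")

def interrupt_handler():
    log.append("handler: I/O complete")
    io_done.release()              # releasing the semaphore makes A ready

a = threading.Thread(target=process_a)
a.start()
waiting.wait()                     # let A log its first line before the "interrupt"
interrupt_handler()
a.join()
print(log)
```

Note that the handler only makes A runnable. When A actually continues is decided by the thread scheduler, just as the text says for the process scheduler.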

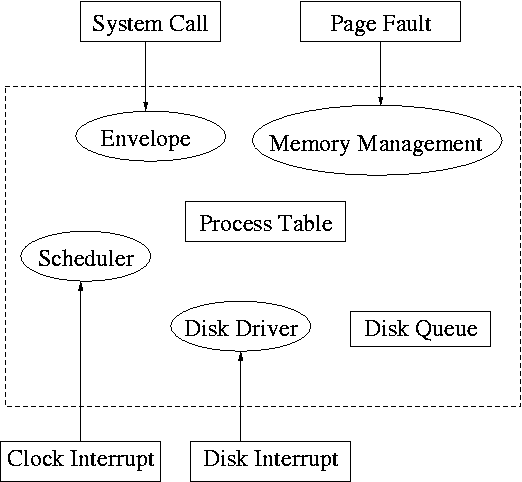

5.3.2: Device Drivers

The portion of the OS that is tailored to the characteristics of the

controller.

The driver has two “parts” corresponding to its two

access points.

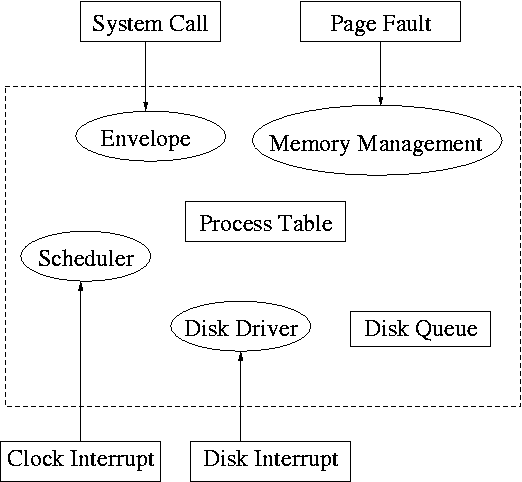

Recall the figure on the right, which we saw at the

beginning of the course.

-

Accessed by the main line OS via the envelope in response to an

I/O system call. The portion of the driver accessed in this way

is sometimes called the “top” part.

-

Accessed by the interrupt handler when the I/O completes (this

completion is signaled by an interrupt). The portion of the

driver accessed in this way is sometimes called the “bottom”

part.

Tanenbaum describes the actions of the driver assuming it is

implemented as a process (which he recommends).

I give both that viewpoint

and the self-service paradigm, in which the driver is invoked by

the OS acting on behalf of a user process (more precisely, the process

shifts into kernel mode).

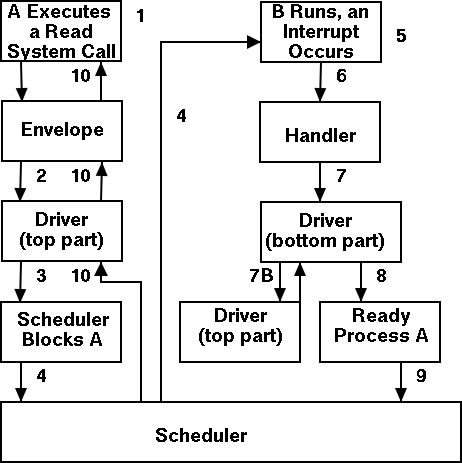

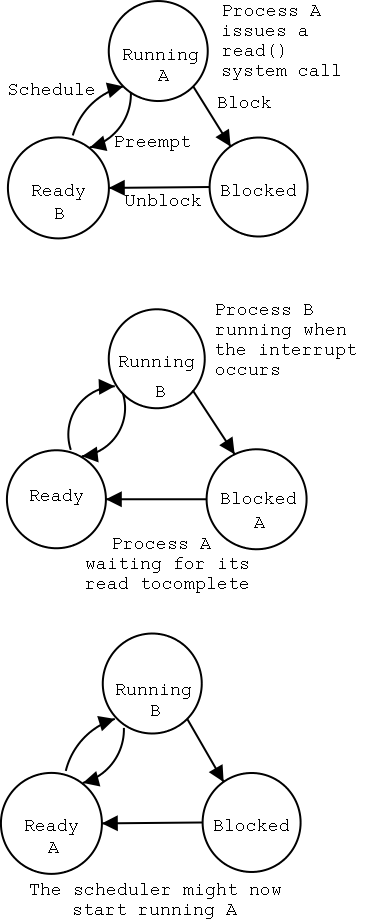

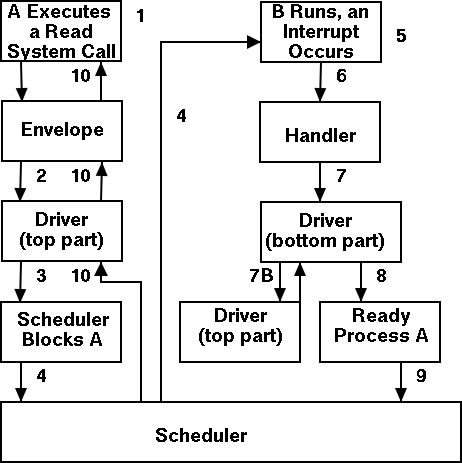

Driver in a self-service paradigm

-

The user (A) issues an I/O system call.

-

The main line, machine independent, OS prepares a

generic request for the driver and calls (the top part of)

the driver.

-

If the driver was idle (i.e., the controller was idle), the

driver writes device registers on the controller ending with a

command for the controller to begin the actual I/O.

-

If the controller was busy (doing work the driver gave it

previously), the driver simply queues the current request (the

driver dequeues this request below).

-

The driver jumps to the scheduler indicating that the current

process should be blocked.

-

The scheduler blocks A and runs (say) B.

-

B starts running.

-

An interrupt arrives (i.e., an I/O has been completed) and the

handler is invoked.

-

The interrupt handler invokes (the bottom part of) the driver.

-

The driver informs the main line perhaps passing data and

surely passing status (error, OK).

-

The top part is called to start another I/O if the queue is

nonempty. We know the controller is free. Why?

Answer: We just received an interrupt saying so.

-

The driver jumps to the scheduler indicating that process A should

be made ready.

-

The scheduler picks a ready process to run. Assume it picks A.

-

A resumes in the driver, which returns to the main line, which

returns to the user code.
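The steps above can be sketched as a small simulation. The `Driver` class, its `top` and `bottom` methods, and the `ready_list` are hypothetical names; the scheduler is not modeled, and "writing device registers" is reduced to recording the current request.

```python
from collections import deque

class Driver:
    """Hypothetical driver with a top part (system-call side) and a bottom part (interrupt side)."""

    def __init__(self):
        self.controller_busy = False
        self.pending = deque()      # requests waiting for the controller
        self.current = None         # request the controller is working on
        self.ready_list = []        # processes made ready by the bottom part

    def _start_io(self, request):
        # Stands in for writing the device registers and issuing the start command.
        self.controller_busy = True
        self.current = request

    def top(self, process, operation):
        """Called by the main line OS on behalf of `process`, which then blocks."""
        request = (process, operation)
        if self.controller_busy:
            self.pending.append(request)
        else:
            self._start_io(request)

    def bottom(self, status="OK"):
        """Called by the interrupt handler when the current I/O completes."""
        process, operation = self.current
        self.controller_busy = False        # the interrupt tells us the controller is free
        self.current = None
        if self.pending:
            self._start_io(self.pending.popleft())
        self.ready_list.append((process, operation, status))


driver = Driver()
driver.top("A", "read block 7")    # controller idle: the I/O starts at once
driver.top("C", "read block 9")    # controller busy: the request is queued
driver.bottom()                    # interrupt for A's request; C's request starts
driver.bottom()                    # interrupt for C's request
print(driver.ready_list)
```

The bottom part never checks whether the controller is free before starting the next request, for the reason given in the list: the interrupt itself is the evidence.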

Driver as a process (Tanenbaum) (less detailed than above)

-

The user issues an I/O request. The main line OS prepares a

generic request (e.g. read, not read using Buslogic BT-958 SCSI controller)

for the driver and the driver is awakened (perhaps a message is sent to

the driver to do both jobs).

- The driver wakes up.

- If the driver is idle (i.e., the controller is idle), the

driver writes device registers on the controller ending with a

command for the controller to begin the actual I/O.

- If the controller is busy (doing work the driver gave it), the

driver simply queues the current request (the driver dequeues this

below).

- The driver blocks waiting for an interrupt or for more

requests.

-

An interrupt arrives (i.e., an I/O has been completed).

- The driver wakes up.

- The driver informs the main line perhaps passing data and

surely passing status (error, OK).

- The driver finds the next work item or blocks.

- If the queue of requests is non-empty, dequeue one and

proceed as if the driver had just received a request from the main line.

- If queue is empty, the driver blocks waiting for an

interrupt or a request from the main line.
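The driver-as-a-process view amounts to a loop that wakes up for each message and then blocks again. Below is a minimal sketch in which the messages are supplied as a list instead of arriving asynchronously. The message format and the name `driver_process` are assumptions, and the sketch assumes interrupts arrive only while an I/O is in progress.

```python
from collections import deque

def driver_process(messages):
    """Hypothetical driver process: handles a sequence of messages one at a time.

    Each message is ("request", name) from the main line or ("interrupt", status)
    from the controller. Returns the completions reported to the main line.
    """
    queue = deque()      # requests waiting for the controller
    current = None       # request the controller is working on (None means idle)
    reported = []
    for kind, payload in messages:      # each iteration: the driver wakes up
        if kind == "request":
            if current is None:
                current = payload       # write device registers, start the I/O
            else:
                queue.append(payload)   # controller busy: queue the request
        elif kind == "interrupt":
            reported.append((current, payload))            # inform the main line
            current = queue.popleft() if queue else None   # next work item, if any
        # end of iteration: the driver blocks until the next message
    return reported

events = [("request", "read X"), ("request", "write Y"),
          ("interrupt", "OK"), ("interrupt", "error")]
print(driver_process(events))
```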

5.3.3: Device-Independent I/O Software

The device-independent code does most of the functionality, but not

necessarily most of the code since there can be many drivers,

all doing essentially the same thing in slightly different ways due to

slightly different controllers.

-

Naming. Again an important OS functionality.

Must offer a consistent interface to the device drivers.

-

In Unix this is done by associating each device with a

(special) file in the /dev directory.

-

The i-nodes for these files contain an indication that these

are special files and also contain so called major and minor

device numbers.

-

The major device number gives the number of the driver.

(These numbers are rather ad hoc; they correspond to the position

of the function pointer to the driver in a table of function

pointers.)

-

The minor number indicates for which device (e.g., which SCSI

CD-ROM drive) the request is intended.

-

Protection. Real systems implement a wide range of

possibilities, including the two extremes:

everything is permitted and nothing is permitted (directly).

-

In MS-DOS any process can write to any file. Presumably, our

offensive nuclear missile launchers do not run DOS.

-

In IBM 360/370/390 mainframe OS's, normal processors do not

access devices. Indeed the main CPU doesn't issue the I/O

requests. Instead an I/O channel is used and the mainline

constructs a channel program and tells the channel to invoke it.

-

Unix uses normal rwx bits on files in /dev (I don't believe x

is used).

-

Buffering is necessary since requests come in a

size specified by the user and data is delivered in a size specified

by the device.

-

Enforce exclusive access for non-shared devices

like tapes.
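Returning to the naming item above, the role of major and minor numbers can be sketched as a lookup in a table of function pointers. The two toy drivers and `DRIVER_TABLE` are hypothetical. `os.makedev`, `os.major`, and `os.minor` are Python standard-library calls available on Unix-like systems.

```python
import os

def disk_driver(minor, request):
    return f"disk unit {minor}: {request}"

def tty_driver(minor, request):
    return f"terminal {minor}: {request}"

# Hypothetical driver table: the major number is the index of the driver.
DRIVER_TABLE = [disk_driver, tty_driver]

def dispatch(device_number, request):
    major = os.major(device_number)   # selects the driver
    minor = os.minor(device_number)   # selects the device handled by that driver
    return DRIVER_TABLE[major](minor, request)

dev = os.makedev(1, 3)                # compose a device number: major 1, minor 3
print(dispatch(dev, "read"))
```

On a real Unix system the device number would come from the i-node of the special file, for example via `os.stat("/dev/null").st_rdev`, and not from a call to `os.makedev`.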

5.3.4: User-Space Software

A good deal of I/O code is actually executed by unprivileged code

running in user space.

Some of this code consists of library routines linked into user programs,

some is in standard utilities,

and some is in daemon processes.

-

Some library routines are trivial and just move their arguments

into the correct place (e.g., a specific register) and then issue a

trap to the correct system call to do the real work.

-

Some, notably standard I/O (stdio) in Unix, are definitely not

trivial. For example consider the formatting of floating point

numbers done in printf and the reverse operation done in scanf.

-

Printing to a local printer is often performed in part by a

regular program (lpr in Unix) and part by a

daemon (lpd in Unix).

The daemon might be started when the system boots.

-

Printing uses spooling, i.e., the file to be

printed is copied somewhere by lpr and then the daemon works with this

copy. Mail uses a similar technique (but generally it is called

queuing, not spooling).
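The point of spooling, that the daemon works with a copy, can be seen in a toy sketch. The functions `lpr` and `lpd_run_once` below merely borrow the names of the Unix programs and are not their real implementations. The "printer" is a list, and temporary directories stand in for the spool area.

```python
import os
import shutil
import tempfile

def lpr(path, spool_dir):
    """Copy the file into the spool directory and return the path of the copy."""
    spooled = os.path.join(spool_dir, os.path.basename(path))
    shutil.copy(path, spooled)
    return spooled

def lpd_run_once(spool_dir, printer):
    """Print and then remove every spooled file; `printer` is a list standing in for the device."""
    for name in sorted(os.listdir(spool_dir)):
        spooled = os.path.join(spool_dir, name)
        with open(spooled) as f:
            printer.append(f.read())
        os.remove(spooled)

with tempfile.TemporaryDirectory() as work, tempfile.TemporaryDirectory() as spool:
    original = os.path.join(work, "report.txt")
    with open(original, "w") as f:
        f.write("first draft")
    lpr(original, spool)
    with open(original, "w") as f:      # the user edits the file after lpr returns
        f.write("second draft")
    printer = []
    lpd_run_once(spool, printer)
print(printer)
```

Because the daemon reads the spooled copy, later changes to the original file do not affect what is printed.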

Homework: 10, 13.

5.4: Disks

The ideal storage device is

-

Fast

-

Big (in capacity)

-

Cheap

-

Impossible

When compared to central memory, disks are big and cheap, but slow.

5.4.1: Disk Hardware

Show a real disk opened up and illustrate the components.

-

Platter

-

Surface

-

Head

-

Track

-

Sector

-

Cylinder

-

Seek time

-

Rotational latency

-

Transfer rate

Consider the following characteristics of a disk.

-

RPM (revolutions per minute)

-

Seek time. This is actually quite complicated to calculate since

you have to worry about acceleration, travel time, deceleration,

and "settling time".

-

Rotational latency. The average value is the time for

(approximately) one half a revolution.

-

Transfer rate, determined by RPM and bit density.

-

Sectors per track, determined by bit density.

-

Tracks per surface (i.e., number of cylinders), determined by bit

density.

-

Tracks per cylinder (i.e., the number of surfaces).
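The rotational latency item above is simple arithmetic: one revolution takes 60,000/RPM milliseconds, and on average the desired sector is half a revolution away. A small sketch follows; the function names are made up for illustration.

```python
def revolution_time_ms(rpm):
    # 60,000 ms per minute divided by revolutions per minute.
    return 60_000 / rpm

def average_rotational_latency_ms(rpm):
    # On average the disk must turn half a revolution to reach a given sector.
    return revolution_time_ms(rpm) / 2

for rpm in (5400, 7200, 15000):
    print(rpm, round(average_rotational_latency_ms(rpm), 2))
```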

Overlapping I/O operations is important. Many controllers can do

overlapped seeks, i.e. issue a seek to one disk while another is

already seeking.

As technology improves, the space taken to store a bit decreases,

i.e., the bit density increases.

This changes the number of cylinders per inch of radius (the cylinders

are closer together) and the number of bits per inch along a given track.

(Unofficial) Modern disks cheat and have more sectors on outer

cylinders than on inner ones. For this course, however, we assume the

number of sectors/track is constant. Thus for us there are fewer bits

per inch on outer tracks and the transfer rate is the same for all

cylinders. Modern disks have electronics and software (firmware)

that hide the cheat and give the illusion of the same number of

sectors on all tracks.

(Unofficial) Despite what Tanenbaum says later, it is not true that

when one head is reading from cylinder C, all the heads can read from

cylinder C with no penalty. It is, however, true that the penalty is

very small.

Choice of block size

-

We discussed a similar question before when studying page size.

-

Current commodity disk characteristics (not for laptops) result in

about 15ms to transfer the first byte and 10K bytes per ms for

subsequent bytes (if contiguous).

-

Rotation rate often 5400 or 7200 RPM with 10k, 15k and (just

now) 20k available.

-

Recall that 6000 RPM is 100 rev/sec or one rev

per 10ms. So half a rev (the average time to rotate to a

given point) is 5ms.

-

Transfer rates around 10MB/sec = 10KB/ms.

-

Seek time around 10ms.

-

This analysis suggests large blocks, 100KB or more.

-

But the internal fragmentation would be severe since many files

are small.

-

Typical block sizes are 4KB-8KB.

-

Multiple block sizes have been tried (e.g., blocks are 8KB but a

file can also have “fragments” that are a fraction of

a block, say 1KB).

-

Some systems employ techniques to force consecutive blocks of a

given file near each other,

preferably contiguous. Also some

systems try to cluster “related” files (e.g., files in the

same directory).
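Returning to the timing argument in the list above, the case for large blocks can be made numerically. The sketch below uses the figures quoted there (about 15ms before the first byte arrives, then 10KB per ms). The function names and the sample block sizes are assumptions chosen for illustration.

```python
STARTUP_MS = 15.0        # seek plus rotational latency, from the figures above
RATE_KB_PER_MS = 10.0    # 10MB/sec = 10KB/ms

def read_time_ms(block_kb):
    # Fixed startup cost plus the time to transfer the block itself.
    return STARTUP_MS + block_kb / RATE_KB_PER_MS

def useful_fraction(block_kb):
    """Fraction of the read time spent actually transferring data."""
    return (block_kb / RATE_KB_PER_MS) / read_time_ms(block_kb)

for kb in (4, 8, 150, 1500):
    print(kb, round(useful_fraction(kb), 3))
```

Under these assumptions a block must be around 150KB before even half of the elapsed time is spent moving data, which is why the raw timing analysis favors blocks far larger than the 4KB-8KB used in practice.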

Homework:

Consider a disk with an average seek time of 10ms, an average

rotational latency of 5ms, and a transfer rate of 10MB/sec.

-

If the block size is 1KB, how long would it take to read a block?

-

If the block size is 100KB, how long would it take to read a

block?

-

If the goal is to read 1KB, a 1KB block size is better as the

remaining 99KB are wasted. If the goal is to read 100KB, the

100KB block size is better since the 1KB block size needs 100

seeks and 100 rotational latencies. What is the minimum size

request for which a disk with a 100KB block size would complete

faster than one with a 1KB block size?