Operating Systems

================ Start Lecture #23 ================

4.9: Research on Memory Management

Skipped

4.10: Summary

Read

Some Last Words on Memory Management

-

Segmentation / Paging / Demand Loading (fetch-on-demand)

-

Each is a yes or no alternative.

-

Gives 8 possibilities.

-

Placement and Replacement.

-

Internal and External Fragmentation.

-

Page Size and locality of reference.

-

Multiprogramming level and medium term scheduling.

Chapter 5: Input/Output

5.1: Principles of I/O Hardware

5.1.1: I/O Devices

-

Not much to say. Devices are varied.

-

Block versus character devices.

This used to be a big deal, but now is of lesser importance.

-

Devices, such as disks and CD-ROMs, with addressable chunks

(sectors in this case) are called block

devices.

These devices support seeking.

-

Devices, such as Ethernet and modem connections, that are a

stream of characters are called character

devices.

These devices do not support seeking.

-

Some cases, like tapes, are not so clear.

-

More natural is to distinguish between

-

Input only (keyboard, mouse), vs. output only (monitor), vs.

input-output (disk).

-

Local vs. remote (network).

-

Weird (clock).

-

Random vs sequential access.

5.1.2: Device Controllers

These are the “devices” as far as the OS is concerned. That

is, the OS code is written with the controller spec in hand, not with

the device spec.

-

Also called adaptors.

-

The controller abstracts away some of the low level features of

the device.

-

For disks, the controller does error checking and buffering.

-

(Unofficial) In the old days it handled interleaving of sectors.

(Sectors are interleaved if the

controller or CPU cannot handle the data rate and would otherwise have

to wait a full revolution. This is not a concern with modern systems

since the electronics have increased in speed faster than the

devices.)

-

For analog monitors (CRTs) the controller does

a great deal. Analog video is very far from a bunch of ones and

zeros.

5.1.3: Memory-Mapped I/O

Think of a disk controller and a read request. The goal is to copy

data from the disk to some portion of the central memory. How do we

do this?

-

The controller contains a microprocessor and memory and is

connected to the disk (by a cable).

-

When the controller asks the disk to read a sector, the contents

come to the controller via the cable and are stored by the controller

in its memory.

-

Two questions are: how does the OS, which is running on another

processor, let the controller know that a disk read is desired, and how

is the data eventually moved from the controller's memory to the

general system memory?

-

Typically the interface the OS sees consists of some device

registers located on the controller.

-

These are memory locations into which the OS writes

information such as the sector to access, read vs. write, length,

where in system memory to put the data (for a read) or from where

to take the data (for a write).

-

There is also typically a device register that acts as a

“go button”.

-

There are also device registers that the OS reads, such as

status of the controller, errors found, etc.

-

So the first question becomes, how does the OS read and write the device

registers?

-

With Memory-mapped I/O the device registers

appear as normal memory.

All that is needed is to know at which

address each device register appears.

Then the OS uses normal

load and store instructions to read and write the registers.

-

Some systems instead have a special “I/O space” into which

the registers are mapped and require the use of special I/O space

instructions to accomplish the load and store.

-

From a conceptual point of view there is no difference between

the two models.
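The register interface described above can be sketched as a toy simulation. This is not a real driver: the register names, their addresses, the status codes, and the FakeDiskController and Bus classes are all hypothetical, chosen only to show that the OS side uses nothing but ordinary loads and stores, with the address alone deciding whether RAM or the device responds.

```python
# Toy model of memory-mapped device registers.
# Every name, address, and status code here is a made-up assumption.

REG_SECTOR = 0x1000   # which sector to access
REG_COMMAND = 0x1004  # 1 = read
REG_GO = 0x1008       # the "go button": storing 1 starts the operation
REG_STATUS = 0x100C   # read by the OS

CMD_READ = 1
STATUS_IDLE, STATUS_DONE, STATUS_ERROR = 0, 1, 2


class FakeDiskController:
    """Stands in for the controller; its registers live at fixed addresses."""

    def __init__(self, sectors):
        self.sectors = sectors  # sector number -> bytes on the "disk"
        self.regs = {REG_SECTOR: 0, REG_COMMAND: 0,
                     REG_GO: 0, REG_STATUS: STATUS_IDLE}
        self.buffer = b""       # the controller's own memory

    def store(self, addr, value):
        self.regs[addr] = value
        if addr == REG_GO and value == 1:
            self._start()

    def load(self, addr):
        return self.regs[addr]

    def _start(self):
        sector = self.regs[REG_SECTOR]
        if self.regs[REG_COMMAND] == CMD_READ and sector in self.sectors:
            self.buffer = self.sectors[sector]  # disk -> controller buffer
            self.regs[REG_STATUS] = STATUS_DONE
        else:
            self.regs[REG_STATUS] = STATUS_ERROR


class Bus:
    """Routes ordinary loads and stores to RAM or to the device by address."""

    def __init__(self, controller):
        self.ram = {}
        self.controller = controller

    def store(self, addr, value):
        if addr in self.controller.regs:
            self.controller.store(addr, value)
        else:
            self.ram[addr] = value

    def load(self, addr):
        if addr in self.controller.regs:
            return self.controller.load(addr)
        return self.ram.get(addr, 0)


def os_read_sector(bus, sector):
    """The OS side: plain stores to set up the request, a plain load for status."""
    bus.store(REG_SECTOR, sector)
    bus.store(REG_COMMAND, CMD_READ)
    bus.store(REG_GO, 1)
    return bus.load(REG_STATUS)
```

On a system with a separate I/O space, only the Bus methods would change (special I/O instructions in place of ordinary loads and stores); os_read_sector would keep the same shape, which is the sense in which the two models do not differ conceptually.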

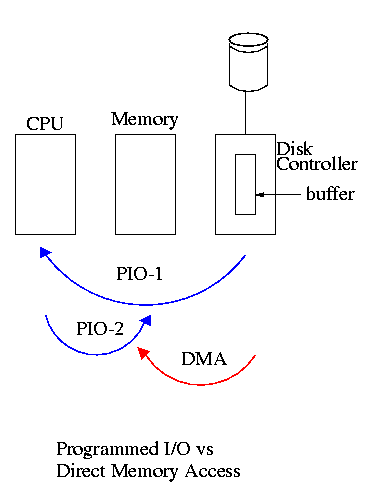

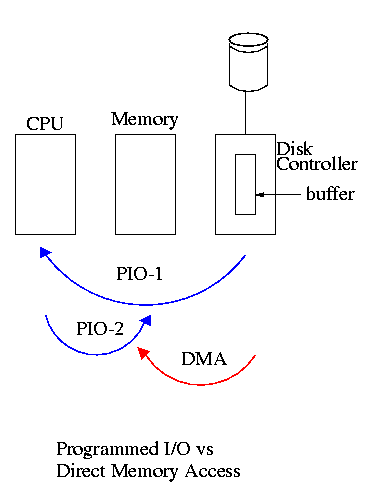

5.1.4: Direct Memory Access (DMA)

We now address the second question, moving data between the

controller and the main memory.

-

With or without DMA, the disk controller, when processing a read

request, pulls the desired data from the disk to its buffer (and

pushes data from the buffer to the disk when processing a write).

-

Without DMA, i.e., with programmed I/O (PIO), the

CPU then does loads and stores (assuming the controller buffer is

memory mapped, or uses I/O instructions if it is not) to copy the data

from the buffer to the desired memory location.

-

With a DMA controller, the controller writes the main

memory itself, without intervention of the CPU.

-

Clearly DMA saves CPU work. But this might not be important if

the CPU is limited by the memory or by system buses.

-

An important point is that there is less data movement with DMA so

the buses are used less and the entire operation takes less time.

-

Since PIO is pure software it is easier to change, which is an

advantage.

-

DMA does need a number of bus transfers from the CPU to the

controller to specify the DMA. So DMA is most effective for large

transfers where the setup is amortized.

-

Why have the buffer? Why not just go from the disk straight to

the memory?

Answer: Speed matching. The disk supplies data at a fixed rate,

which might exceed the rate at which the memory can accept it.

In particular the memory

might be busy servicing a request from the processor or from another

DMA controller.
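The trade-off between PIO and DMA can be illustrated with a rough cost model. The model and its numbers are assumptions, not measurements: it supposes that PIO moves each word over the bus twice (controller buffer to CPU, then CPU to memory), that DMA moves each word once, and that specifying a DMA costs a fixed number of setup transfers (4 here, an arbitrary choice).

```python
# Rough bus-transfer model for PIO versus DMA.
# The factor of 2 and the setup cost of 4 are illustrative assumptions.

def pio_bus_transfers(words):
    """Each word crosses the bus twice: buffer -> CPU, then CPU -> memory."""
    return 2 * words


def dma_bus_transfers(words, setup_transfers=4):
    """Fixed setup cost, then each word crosses the bus once."""
    return setup_transfers + words


def dma_wins(words, setup_transfers=4):
    """True when DMA needs strictly fewer bus transfers than PIO."""
    return dma_bus_transfers(words, setup_transfers) < pio_bus_transfers(words)


for words in (1, 4, 128):
    print(words, pio_bus_transfers(words), dma_bus_transfers(words))
```

Under these assumptions DMA loses for a one-word transfer, ties at 4 words, and needs roughly half the bus traffic for 128 words, which is the amortization effect described above.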

Homework: 12

5.1.5: Interrupts Revisited

Skipped.

5.2: Principles of I/O Software

As with any large software system, good design and layering are

important.

5.2.1: Goals of the I/O Software

Device independence

We want to have most of the OS unaware of the characteristics of

the specific devices attached to the system.

(This principle of device independence is not limited to I/O; we also

want the OS to be largely unaware of the CPU type itself.)

This works quite well for files stored on various devices.

Most of the OS, including the file system code, and most applications

can read or write a file without knowing if the file is stored on a

floppy disk, a hard disk, a tape, or (for reading) a CD-ROM.

This principle also applies for user programs reading or writing

streams.

A program reading from “standard input”, which is normally the

user's keyboard, can be told to instead read from a disk file with no

change to the application program.

Similarly, “standard output” can be redirected to a disk file.

However, the low-level OS code dealing with disks is rather different

from that dealing with keyboards and (character-oriented) terminals.

One can say that device independence permits programs to be

implemented as if they will read and write generic devices, with the

actual devices specified at run time.

Although writing to a disk has differences from writing to a terminal,

Unix cp, DOS copy, and many programs we write need not

be aware of these differences.
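A minimal sketch of a program written against a generic device follows. The function name copy_stream and its chunk_size parameter are hypothetical; the only assumption about the source and destination is that they offer read and write, so the same code works for an in-memory buffer, a disk file, or a terminal.

```python
import io


def copy_stream(src, dst, chunk_size=4096):
    """Copy everything from src to dst and return the amount copied.

    src needs only read(n); dst needs only write(data). Nothing here
    knows which kind of device sits behind either one.
    """
    total = 0
    while True:
        chunk = src.read(chunk_size)
        if not chunk:  # empty result means end of input
            break
        dst.write(chunk)
        total += len(chunk)
    return total


# In-memory streams stand in for "some device" in this demonstration.
source = io.StringIO("line one\nline two\n")
sink = io.StringIO()
copied = copy_stream(source, sink)
```

Calling copy_stream(sys.stdin, sys.stdout) instead (after importing sys) and running the program with shell redirection would switch the actual devices at run time with no change to the function.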

However, there are devices that really are special.

The graphics interface to a monitor (that is, the graphics interface

presented by the video controller--often called a “video card”)

does not resemble the “stream of bytes” we see for disk files.

Homework: 9

Uniform naming

Recall that we discussed the value

of the name space implemented by file systems. There is no dependence

between the name of the file and the device on which it is stored. So

a file called IAmStoredOnAHardDisk might well be stored on a floppy disk.

Error handling

There are several aspects to error handling including: detection,

correction (if possible) and reporting.

-

Detection should be done as close to where the error occurred as

possible before more damage is done (fault containment). This is not

trivial.

-

Correction is sometimes easy; for example, ECC memory does this

automatically (but the OS wants to know about the error so that it can

schedule replacement of the faulty chips before unrecoverable double

errors occur).

Other easy cases include successful retries for failed Ethernet

transmissions. In this example, while logging is appropriate, it is

quite reasonable for no action to be taken.

-

Error reporting tends to be awful. The trouble is that the error

occurs at a low level but by the time it is reported the

context is lost. Unix/Linux in particular is horrible in this area.

Creating the illusion of synchronous I/O

-

I/O must be asynchronous for good performance. That is,

the OS cannot simply wait for an I/O to complete. Instead, it

proceeds with other activities and responds to the notification when

the I/O has finished.

-

Users (mostly) want no part of this. The code sequence

Read X

Y <-- X+1

Print Y

should print a value one greater than that read. But if the

assignment is performed before the read completes, the wrong value is

assigned.

-

Performance junkies sometimes do want the asynchrony so that they

can have another portion of their program executed while the I/O is

underway. That is, they implement a mini-scheduler in their

application code.
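One way to picture the illusion is a blocking wrapper around an asynchronous operation. The sketch below is a user-level analogy, not how a kernel is implemented: async_read and blocking_read are hypothetical names, a timer thread plays the role of the device, and the callback plays the role of the completion notification.

```python
import threading


def async_read(on_done, value=41, delay=0.05):
    """Start a simulated I/O and return at once; on_done(value) runs later."""
    timer = threading.Timer(delay, on_done, args=(value,))
    timer.start()
    return timer


def blocking_read(value=41, delay=0.05):
    """Present the asynchronous operation to the caller as a synchronous one."""
    done = threading.Event()
    result = {}

    def on_done(v):  # the completion notification
        result["x"] = v
        done.set()

    async_read(on_done, value, delay)
    done.wait()      # only this caller waits; other threads could run meanwhile
    return result["x"]


# The Read X / Y <-- X+1 / Print Y sequence, now safe:
x = blocking_read()
y = x + 1
print(y)
```

Because blocking_read does not return until the notification has arrived, the assignment to y cannot run before the read completes. A program that wants the asynchrony would call async_read directly and do other work until its callback fires.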

Buffering

-

Often needed to hold data for examination prior to sending it to

its desired destination.

-

But this involves copying and takes time.

-

Modern systems try to avoid as much buffering as possible. This

is especially noticeable in network transmissions, where the data

could conceivably be copied many times.

-

From user space to kernel space as part of the write system call.

-

From kernel space to kernel I/O buffer.

-

I/O buffer to buffer on the network adapter/controller.

-

From adapter on the source to adapter on the destination.

-

From adapter to I/O buffer.

-

From I/O buffer to kernel space.

-

From kernel space to user space as part of the read system call.

-

I am not sure if any systems actually do all seven.
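The difference between copying a buffer and sharing it can be seen in a small user-level sketch. This is only an analogy for what a kernel might do to avoid one of the copies listed above; the variable names are hypothetical.

```python
# Copying a buffer versus sharing it (user-level analogy only).

data = bytearray(b"payload from user space")

copied = bytes(data)      # a real copy: costs time proportional to the size
view = memoryview(data)   # no copy: refers to the same underlying memory

data[0] = ord("P")        # change the original after the fact

copy_sees_change = copied[0] == ord("P")  # False: the copy is independent
view_sees_change = view[0] == ord("P")    # True: the view shares the memory
```

The shared view avoids the copying cost, but the price is that producer and consumer must now agree on when the buffer may be modified or reused.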

Sharable vs dedicated devices

For devices like printers and tape drives, only one user at a time

is permitted. These are called serially reusable

devices, and were studied in the deadlocks chapter.

Devices like disks and Ethernet ports can be shared by processes

running concurrently.
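A serially reusable device can be modeled as a resource guarded by a lock. In the sketch below the Printer class and its print_job method are hypothetical, and threads stand in for processes; the point is only that a whole job holds the device, so output from two jobs never interleaves.

```python
import threading


class Printer:
    """A dedicated (serially reusable) device: one job at a time."""

    def __init__(self):
        self._lock = threading.Lock()
        self.output = []  # (owner, line) pairs in the order printed

    def print_job(self, owner, lines):
        # Hold the device for the whole job, not just for one line.
        with self._lock:
            for line in lines:
                self.output.append((owner, line))


printer = Printer()
jobs = [
    threading.Thread(target=printer.print_job, args=(name, ["a", "b", "c"]))
    for name in ("A", "B")
]
for job in jobs:
    job.start()
for job in jobs:
    job.join()
```

Whichever job acquires the printer first prints all of its lines before the other starts. A sharable device such as a disk would not need the job-length lock, since requests from different processes can be interleaved.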