Operating Systems

================ Start Lecture #2 ================

End of Interlude on Linkers

Chapter 1: Introduction

Homework: Read Chapter 1 (Introduction)

Levels of abstraction (virtual machines)

- Software (and hardware, but that is not this course) is often implemented in layers.

- The higher layers use the facilities provided by lower layers.

- Alternatively said, the upper layers are written using a more powerful and more abstract virtual machine than the lower layers.

- Alternatively said, each layer is written as though it runs on the virtual machine supplied by the lower layer and in turn provides a more abstract (pleasant) virtual machine for the higher layer to run on.

- Using a broad brush, the layers are:

- Applications and utilities

- Compilers, Editors, Command Interpreter (shell, DOS prompt)

- Libraries

- The OS proper (the kernel, runs in privileged/kernel/supervisor mode)

- Hardware

- Compilers, editors, shell, linkers, etc. run in user mode.

- The kernel itself is normally layered, e.g.

- Filesystems

- Machine independent I/O

- Machine dependent device drivers

- The machine independent I/O part is written assuming “virtual (i.e. idealized) hardware”. For example, the machine independent I/O portion simply reads a block from a “disk”. But in reality one must deal with the specific disk controller.

- Often the machine independent part is more than one layer.

- The term OS is not well defined. Is it just the kernel? How about the libraries? The utilities? All these are certainly system software, but it is not clear how much is part of the OS.
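The split between machine independent I/O and machine dependent drivers can be sketched as follows. This is only a toy illustration, not how any particular kernel is written: the names `BlockDevice`, `ToyDiskDriver`, and `read_file_block`, and the 512-byte block size, are all assumptions made for the example.

```python
from abc import ABC, abstractmethod

BLOCK_SIZE = 512  # assumed block size for this sketch


class BlockDevice(ABC):
    """The idealized "disk" seen by the machine independent layer."""

    @abstractmethod
    def read_block(self, block_number: int) -> bytes:
        ...


class ToyDiskDriver(BlockDevice):
    """Hypothetical machine dependent driver.

    A real driver would talk to a specific disk controller; here the
    medium is simulated with an in-memory byte array.
    """

    def __init__(self, num_blocks: int) -> None:
        self._medium = bytearray(num_blocks * BLOCK_SIZE)

    def write_block(self, block_number: int, data: bytes) -> None:
        if len(data) != BLOCK_SIZE:
            raise ValueError("data must be exactly one block")
        start = block_number * BLOCK_SIZE
        self._medium[start:start + BLOCK_SIZE] = data

    def read_block(self, block_number: int) -> bytes:
        start = block_number * BLOCK_SIZE
        return bytes(self._medium[start:start + BLOCK_SIZE])


def read_file_block(device: BlockDevice, block_number: int) -> bytes:
    """Machine independent code: it knows only the BlockDevice interface."""
    return device.read_block(block_number)
```

The point of the design is that `read_file_block` never mentions the driver class, so a different driver can be substituted without touching the upper layer.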

1.1: What is an operating system?

The kernel itself raises the level of abstraction and hides details. For example, a user (of the kernel) can write to a file (a concept not present in hardware) and ignore whether the file resides on a floppy, a CD-ROM, or a hard disk.

The user can also ignore issues such as whether the file is stored contiguously or is broken into blocks.

The kernel is a resource manager (so users don't conflict).

How is an OS fundamentally different from a compiler (say)?

Answer: Concurrency! Per Brinch Hansen in Operating Systems Principles (Prentice Hall, 1973) writes:

“The main difficulty of multiprogramming is that concurrent activities can interact in a time-dependent manner, which makes it practically impossible to locate programming errors by systematic testing. Perhaps, more than anything else, this explains the difficulty of making operating systems reliable.”

Homework: 1, 2. (Unless otherwise stated, problem numbers are from the end of the chapter in Tanenbaum.)

1.2 History of Operating Systems

- Single user (no OS).

- Batch, uniprogrammed, run to completion.

- The OS now must be protected from the user program so that it is capable of starting (and assisting) the next program in the batch.

- Multiprogrammed

- The purpose was to overlap CPU and I/O

- Multiple batches

- IBM OS/MFT (Multiprogramming with a Fixed number of Tasks)

- OS for IBM system 360.

- The (real) memory is partitioned and a batch is assigned to a fixed partition.

- The memory assigned to a partition does not change.

- Jobs were spooled from cards into the memory by a separate processor (an IBM 1401). Similarly output was spooled from the memory to a printer (a 1403) by the 1401.

- IBM OS/MVT (Multiprogramming with a Variable number of Tasks) (then other names)

- Each job gets just the amount of memory it needs. That is, the partitioning of memory changes as jobs enter and leave.

- MVT is a more “efficient” user of resources, but is more difficult to implement.

- When we study memory management, we will see that, with varying size partitions, questions like compaction and “holes” arise.

- Time sharing

- This is multiprogramming with rapid switching between jobs (processes). Deciding when to switch and which process to switch to is called scheduling.

- We will study scheduling when we do processor management.

- Personal Computers

- Serious PC operating systems such as Linux, Windows NT/2000/XP and (the newest) MacOS are multiprogrammed OSes.

- GUIs have become important. There is debate as to whether they should be part of the kernel.

- Early PC operating systems were uniprogrammed and their direct descendants in some sense still are (e.g. Windows ME).
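Returning to the variable size partitions of OS/MVT in the list above, the “holes” problem can be seen in a toy allocator. This is a sketch under stated assumptions: it uses a first-fit placement policy (my choice for the example; these notes do not say which policy MVT used), memory is 100 abstract units, and the function names `first_fit` and `release` are hypothetical.

```python
def first_fit(holes, size):
    """Allocate `size` units from the first hole that is large enough.

    `holes` is a list of (start, length) free regions sorted by start.
    Returns the start address, or None if no single hole is big enough.
    """
    for i, (start, length) in enumerate(holes):
        if length >= size:
            if length == size:
                del holes[i]  # the hole is used up exactly
            else:
                holes[i] = (start + size, length - size)  # shrink the hole
            return start
    return None


def release(holes, start, size):
    """Return a region to the free list, merging adjacent holes."""
    holes.append((start, size))
    holes.sort()
    merged = [holes[0]]
    for s, length in holes[1:]:
        last_start, last_length = merged[-1]
        if last_start + last_length == s:
            merged[-1] = (last_start, last_length + length)
        else:
            merged.append((s, length))
    holes[:] = merged


# Three 30-unit jobs enter a 100-unit memory, then the first one leaves.
holes = [(0, 100)]
job_a = first_fit(holes, 30)
job_b = first_fit(holes, 30)
job_c = first_fit(holes, 30)
release(holes, job_a, 30)

total_free = sum(length for _, length in holes)
new_job = first_fit(holes, 40)  # fails although 40 units are free in total
```

After the first job leaves, 40 units are free but split into a hole of 30 and a hole of 10, so a 40-unit job cannot be placed. Compaction would slide the remaining jobs together to merge the holes.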

Homework: 3.

1.3: OS Zoo

There is not as much difference between mainframe, server, multiprocessor, and PC OSes as Tanenbaum suggests. For example Windows NT/2000/XP, Unix and Linux are used on all.

1.3.1: Mainframe Operating Systems

Used in data centers, these systems offer tremendous I/O capabilities and extensive fault tolerance.

1.3.2: Server Operating Systems

Perhaps the most important servers today are web servers. Again, I/O (and network) performance is critical.

1.3.3: Multiprocessor Operating Systems

These existed almost from the beginning of the computer age, but now are not exotic.

1.3.4: PC Operating Systems (client machines)

Some OSes (e.g. Windows ME) are tailored for this application. One could also say they are restricted to this application.

1.3.5: Real-time Operating Systems

- Often are Embedded Systems.

- Soft vs hard real time. In the latter missing a deadline is a fatal error--sometimes literally.

- Very important commercially, but not covered much in this course.

1.3.6: Embedded Operating Systems

- The OS is “part of” the device. For example, PDAs, microwave ovens, cardiac monitors.

- Often are real-time systems.

- Very important commercially, but not covered much in this course.

1.3.7: Smart Card Operating Systems

Very limited in power (both meanings of the word).

Multiple computers

- Network OS: Make use of the multiple PCs/workstations on a LAN.

- Distributed OS: A “seamless” version of above.

- Not part of this course (but often in G22.2251).

Homework: 5.

1.4: Computer Hardware Review

Tanenbaum's treatment is very brief and superficial. Mine is even more so.

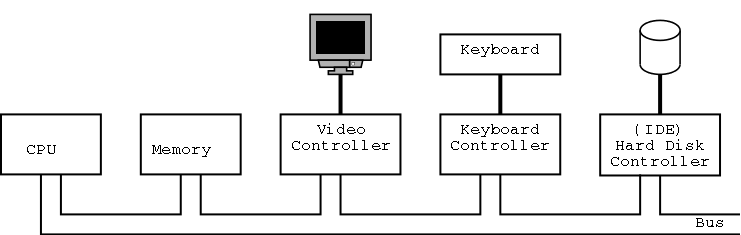

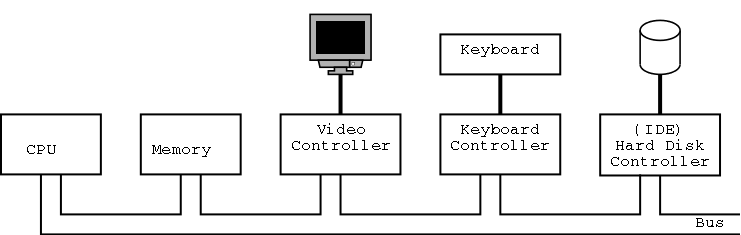

The picture above is very simplified.

For one thing, today separate buses are used to Memory and Video.

1.4.1: Processors

We will ignore processor concepts such as program counters and stack pointers. We will also ignore computer design issues such as pipelining and superscalar execution. We do, however, need the notion of a trap, that is, an instruction that atomically switches the processor into privileged mode and jumps to a pre-defined physical address.
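A toy model may help fix the idea of a trap. It is a simulation written for these notes, not real hardware behavior: `ToyCPU`, `sys_get_mode`, and the use of a dictionary in place of a table of pre-defined addresses are all assumptions. The essential property it tries to capture is that user code picks only a trap number, never the address that runs in privileged mode.

```python
class ToyCPU:
    """Toy model of a processor with user and kernel modes (hypothetical)."""

    def __init__(self, trap_vector):
        self.mode = "user"
        # Trap number -> handler. On real hardware this would be a table of
        # pre-defined addresses that only the kernel can set up.
        self._trap_vector = dict(trap_vector)

    def trap(self, number, *args):
        """Switch to kernel mode and jump to the pre-defined handler."""
        handler = self._trap_vector[number]  # user code cannot choose the target
        self.mode = "kernel"
        try:
            return handler(self, *args)
        finally:
            self.mode = "user"  # returning from the trap restores user mode


def sys_get_mode(cpu):
    """Hypothetical handler: report the mode in which the handler runs."""
    return cpu.mode


cpu = ToyCPU({0: sys_get_mode})
mode_seen_by_handler = cpu.trap(0)
```

Here the handler observes kernel mode, and the processor is back in user mode once the trap returns. The model is single threaded, so the mode switch and the jump cannot be separated by anything else, which stands in for the atomicity of a real trap.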

1.4.2: Memory

We will ignore caches, but will (later) discuss demand paging, which is very similar (although demand paging and caches use completely disjoint terminology). In both cases, the goal is to combine large slow memory with small fast memory to achieve the effect of large fast memory.
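Why the combination can behave like large fast memory is easiest to see with a little arithmetic. The sketch below uses a deliberately simplified model that is my assumption, not something from the text: a fraction `hit_ratio` of accesses is satisfied by the fast memory, and every other access costs the full slow-memory time. The function name and the numbers in the example are made up.

```python
def effective_access_time(hit_ratio, fast_ns, slow_ns):
    """Average access time in ns under the simplified hit/miss model."""
    if not 0.0 <= hit_ratio <= 1.0:
        raise ValueError("hit_ratio must be between 0 and 1")
    return hit_ratio * fast_ns + (1.0 - hit_ratio) * slow_ns


# Assumed figures: 1 ns fast memory, 100 ns slow memory, 99% hit ratio.
average_ns = effective_access_time(0.99, 1.0, 100.0)
```

With these assumed figures the average is about 1.99 ns, far closer to the fast memory than to the slow one. The scheme works only when the hit ratio is very high.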

The central memory in a system is called RAM (Random Access Memory). A key point is that it is volatile, i.e. the memory loses its data if power is turned off.

Disk Hardware

I don't understand why Tanenbaum discusses disks here instead of in the next section entitled I/O devices, but he does. I don't.

ROM / PROM / EPROM / EEPROM / Flash RAM

ROM (Read Only Memory) is used to hold data that will not change, e.g. the serial number of a computer or the program used in a microwave. ROM is non-volatile. A modern, familiar ROM is CD-ROM (or the denser DVD).

But often this unchangeable data needs to be changed (e.g., to fix bugs). This gives rise first to PROM (Programmable ROM), which, like a CD-R, can be written once (as opposed to being mass produced already written like a CD-ROM), and then to EPROM (Erasable PROM; not Erasable ROM as in Tanenbaum), which is like a CD-RW. An EPROM is especially convenient if it can be erased with a normal circuit (EEPROM, Electrically Erasable PROM, or Flash RAM).

Memory Protection and Context Switching

As mentioned above when discussing OS/MFT and OS/MVT, multiprogramming requires that we protect one process from another. That is, we need to translate the virtual addresses of each program into distinct physical addresses. The hardware that performs this translation is called the MMU or Memory Management Unit.

When context switching from one process to another, the translation must change, which can be an expensive operation.
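One very simple translation scheme, used here purely as an illustration, is a base register plus a limit register: every virtual address is checked against the limit and then offset by the base. The notes above do not commit to this scheme; `ToyMMU` and its methods are hypothetical names, and the addresses are made up.

```python
class ToyMMU:
    """Toy base-and-limit translation (one assumed scheme among several)."""

    def __init__(self):
        self.base = 0
        self.limit = 0

    def context_switch(self, base, limit):
        """Load the translation for the process that is about to run."""
        self.base = base
        self.limit = limit

    def translate(self, virtual_address):
        """Map a virtual address to a physical one, enforcing protection."""
        if not 0 <= virtual_address < self.limit:
            raise MemoryError("address outside the process's region")
        return self.base + virtual_address


mmu = ToyMMU()

mmu.context_switch(base=1000, limit=500)  # process A is running
physical_a = mmu.translate(10)

mmu.context_switch(base=4000, limit=500)  # switch to process B
physical_b = mmu.translate(10)
```

Both processes use virtual address 10, yet they reach different physical addresses (1010 and 4010 here), and neither can name an address in the other's region. In this toy the context switch is just two assignments; with realistic translation hardware there is much more state to change, which is where the expense comes from.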

1.4.3: I/O Devices

When we do I/O for real, I will show a real disk opened up and illustrate the components:

- Platter

- Surface

- Head

- Track

- Sector

- Cylinder

- Seek time

- Rotational latency

- Transfer time

Devices are often quite complicated to manage and a separate computer, called a controller, is used to translate simple commands (read sector 123456) into what the device requires (read cylinder 321, head 6, sector 765). Actually the controller does considerably more, e.g. calculates a checksum for error detection.
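The translation from a linear sector number to a (cylinder, head, sector) triple can be sketched with two divisions. The sketch assumes an idealized disk on which every track holds the same number of sectors and on which all three coordinates are counted from zero; real disks differ on both points. The geometry of 16 heads and 63 sectors per track is made up for the example and is not meant to reproduce the numbers in the text.

```python
def linear_to_chs(sector_number, heads, sectors_per_track):
    """Convert a linear sector number to (cylinder, head, sector).

    Assumes a uniform geometry and zero-based numbering throughout.
    """
    sectors_per_cylinder = heads * sectors_per_track
    cylinder, remainder = divmod(sector_number, sectors_per_cylinder)
    head, sector = divmod(remainder, sectors_per_track)
    return cylinder, head, sector


def chs_to_linear(cylinder, head, sector, heads, sectors_per_track):
    """Inverse of linear_to_chs under the same assumptions."""
    return (cylinder * heads + head) * sectors_per_track + sector


location = linear_to_chs(123456, heads=16, sectors_per_track=63)
```

Under the assumed geometry, linear sector 123456 lands at cylinder 122, head 7, sector 39, and `chs_to_linear` maps that triple back to 123456.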

How does the OS know when the I/O is complete?

- It can busy wait, constantly asking the controller if the I/O is complete. This is the easiest (by far) but has low performance. This is also called polling or PIO (Programmed I/O).

- It can tell the controller to start the I/O and then switch to other tasks. The controller must then interrupt the OS when the I/O is done. Less waiting, but harder (concurrency!). Also, on modern processors a single interrupt is rather costly: much more than a single memory reference, but much, much less than a disk I/O.

- Some controllers can do DMA (Direct Memory Access), in which case they deal directly with memory after being started by the CPU. This takes work from the CPU and halves the number of bus accesses.

We discuss this more in chapter 5. In particular, we explain the last point about halving bus accesses.
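The difference between the first two approaches, busy waiting and interrupts, can be made concrete with a small simulation. Everything here is an assumption made for illustration: `ToyController` stands in for a device that needs a fixed number of time steps, the "interrupt" is modeled as an ordinary callback, and time advances only when the simulated CPU calls `tick`.

```python
class ToyController:
    """Simulated device that finishes an I/O after a fixed number of ticks."""

    def __init__(self, ticks_needed):
        if ticks_needed < 1:
            raise ValueError("ticks_needed must be at least 1")
        self.ticks_left = ticks_needed
        self.on_complete = None  # interrupt handler, if one is registered

    def tick(self):
        """Advance simulated time by one step."""
        if self.ticks_left > 0:
            self.ticks_left -= 1
            if self.ticks_left == 0 and self.on_complete is not None:
                self.on_complete()  # "raise the interrupt"

    def done(self):
        return self.ticks_left == 0


def polled_io(controller):
    """Busy wait: the CPU does nothing but ask whether the I/O is done.

    Returns the number of status checks performed.
    """
    checks = 0
    while True:
        checks += 1
        if controller.done():
            return checks
        controller.tick()  # time passes while the CPU spins


def interrupt_driven_io(controller):
    """Start the I/O, run other work, and let the controller signal the end.

    Returns the units of other work completed while the I/O was in progress.
    """
    finished = []
    controller.on_complete = lambda: finished.append(True)
    useful_work = 0
    while not finished:
        useful_work += 1  # the CPU runs some other task
        controller.tick()
    return useful_work


status_checks = polled_io(ToyController(5))
other_work = interrupt_driven_io(ToyController(5))
```

For a 5-tick I/O the polling version spends 6 status checks learning that the device has finished, while the interrupt version gets 5 units of other work done in the same period. The simulation leaves out the cost of taking the interrupt itself, which the second bullet above notes is not negligible.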