Operating Systems

================ Start Lecture #9 ================

Address translation

- Each memory reference turns into 2 memory references

- Reference the page table

- Reference central memory

- This would be a disaster!

- Hence the MMU caches page#-->frame# translations. This cache is kept

near the processor and can be accessed rapidly.

- This cache is called a translation lookaside buffer (TLB) or

translation buffer (TB).

- For the above example, after referencing virtual address 3372,

there would be an entry in the TLB containing the mapping

3-->459.

- Hence a subsequent access to virtual address 3881 would be

translated to physical address 459881 without an extra memory

reference.

Naturally, a memory reference for location 459881 itself would be

required.
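A minimal sketch of the lookup just described, assuming (as the 3372 example implies) a decimal page size of 1000. The names `page_table`, `tlb`, and `translate` are made up for illustration; a real TLB is hardware, not a dictionary.

```python
PAGE_SIZE = 1000          # decimal page size implied by the example above

page_table = {3: 459}     # page# -> frame#; lives in (slow) main memory
tlb = {}                  # small, fast cache of page# -> frame# translations

def translate(vaddr):
    """Return (physical address, number of page-table references made)."""
    page, offset = divmod(vaddr, PAGE_SIZE)
    if page in tlb:                       # TLB hit: no page-table reference
        return tlb[page] * PAGE_SIZE + offset, 0
    frame = page_table[page]              # TLB miss: one extra memory reference
    tlb[page] = frame                     # remember the translation
    return frame * PAGE_SIZE + offset, 1

print(translate(3372))   # (459372, 1)
print(translate(3881))   # (459881, 0)
```

The second call finds the mapping 3-->459 already cached, so only the reference to location 459881 itself remains.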

Choice of page size is discussed below.

Homework: 8.

4.3: Virtual Memory (meaning fetch on demand)

Idea is that a program can execute even if only the active portion of its

address space is memory resident. That is, we are to swap in and swap out

portions of a program. In a crude sense this could be called

“automatic overlays”.

Advantages

- Can run a program larger than the total physical memory.

- Can increase the multiprogramming level since the total size of the active, i.e. loaded, programs (running + ready + blocked) can exceed the size of the physical memory.

- Since some portions of a program are rarely if ever used, it is an inefficient use of memory to have them loaded all the time. Fetch on demand will not load them if not used and will unload them during replacement if they are not used for a long time (hopefully).

- Simpler for the user than overlays or variable aliasing (older techniques to run large programs using limited memory).

Disadvantages

- More complicated for the OS.

- Execution time less predictable (depends on other jobs).

- Can over-commit memory.

** 4.3.1: Paging (meaning demand paging)

Fetch pages from disk to memory when they are referenced, with a hope

of getting the most actively used pages in memory.

- Very common: dominates modern operating systems.

- Started by the Atlas system at Manchester University in the 60s (Fotheringham).

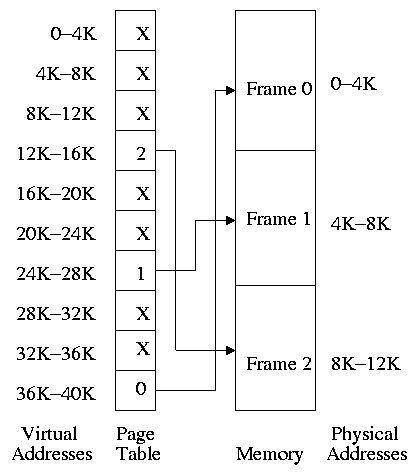

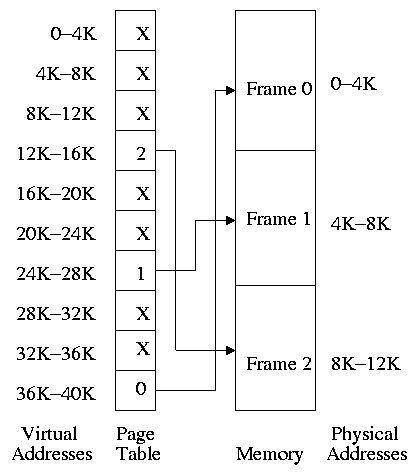

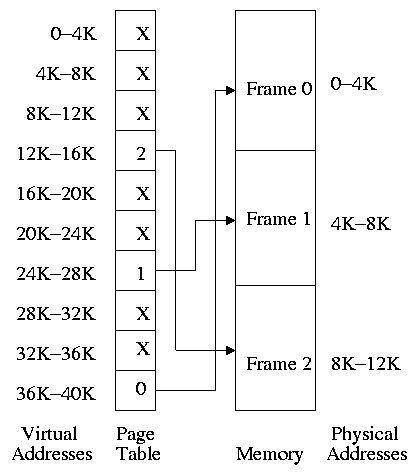

- Each PTE continues to have the frame number if the page is

loaded.

- But what if the page is not loaded (exists only on disk)?

- The PTE has a flag indicating if it is loaded (can think of

the X in the diagram on the right as indicating that this flag is

not set).

- If not loaded, the location on disk could be kept in the PTE,

but normally it is not

(discussed below).

- When a reference is made to a non-loaded page (sometimes

called a non-existent page, but that is a bad name), the system

has a lot of work to do. We give more details

below.

- Choose a free frame, if one exists.

- If not

- Choose a victim frame.

- More later on how to choose a victim.

- Called the replacement question

- Write victim back to disk if dirty.

- Update the victim PTE to show that it is not loaded.

- Copy the referenced page from disk to the free frame.

- Update the PTE of the referenced page to show that it is

loaded and give the frame number.

- Do the standard paging address translation (p#,off)-->(f#,off).

- Really not done quite this way

- There is “always” a free frame because ...

- ... there is a daemon active that checks the number of free frames and, if this is too low, chooses victims and “pages them out” (writing them back to disk if dirty).

-

Choice of page size is discussed below.
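The fault-handling steps listed above can be sketched as follows. This is an illustration only: the `PTE` class, `handle_fault`, and the `log` list standing in for disk I/O are all hypothetical, and evicting the oldest resident page is just a placeholder for a real answer to the replacement question.

```python
from collections import deque

class PTE:
    """Hypothetical PTE holding only the fields these steps need."""
    def __init__(self):
        self.loaded = False   # flag: is the page in a frame?
        self.frame = None
        self.dirty = False

def handle_fault(page, page_table, free_frames, resident, log):
    """Make `page` resident and return its frame.

    `resident` is a deque of loaded page numbers, oldest first.
    """
    if free_frames:                         # choose a free frame, if one exists
        frame = free_frames.pop()
    else:
        victim = resident.popleft()         # choose a victim (placeholder policy)
        vpte = page_table[victim]
        if vpte.dirty:                      # write victim back only if dirty
            log.append(f"write page {victim} to disk")
        frame = vpte.frame
        vpte.loaded, vpte.frame, vpte.dirty = False, None, False
    log.append(f"read page {page} into frame {frame}")
    pte = page_table[page]                  # mark the referenced page loaded
    pte.loaded, pte.frame = True, frame
    resident.append(page)
    return frame

page_table = {p: PTE() for p in range(3)}
free_frames, resident, log = [7], deque(), []
handle_fault(0, page_table, free_frames, resident, log)   # uses free frame 7
page_table[0].dirty = True                                # pretend page 0 was written
handle_fault(1, page_table, free_frames, resident, log)   # must evict page 0
print(log)
```

The second fault finds no free frame, so page 0 is written back (it is dirty) and its frame is reused for page 1.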

Homework: 12.

4.3.2: Page tables

A discussion of page tables is also appropriate for (non-demand)

paging, but the issues are more acute with demand paging since the

tables can be much larger. Why?

- The total size of the active processes is no longer limited to the size of physical memory. Since the total size of the processes is greater, the total size of the page tables is greater and hence concerns over the size of the page table are more acute.

- With demand paging an important question is the choice of a victim page to page out. Data in the page table can be useful in this choice.

We must be able to access the page table very quickly since it is

needed for every memory access.

Unfortunate laws of hardware.

- Big and fast are essentially incompatible.

- Big and fast and low cost is hopeless.

So we can't just say, put the page table in fast processor registers,

and let it be huge, and sell the system for $1000.

The simplest solution is to put the page table in main memory.

However it seems to be both too slow and too big.

- Seems too slow since all memory references require two references.

- This can be largely repaired by using a TLB, which is fast and, although small, often captures almost all references to the page table.

- For this course, officially TLBs “do not exist”, that is, if you are asked to perform a translation, you should assume there is no TLB.

- Nonetheless we will discuss them below and in reality they very much do exist.

-

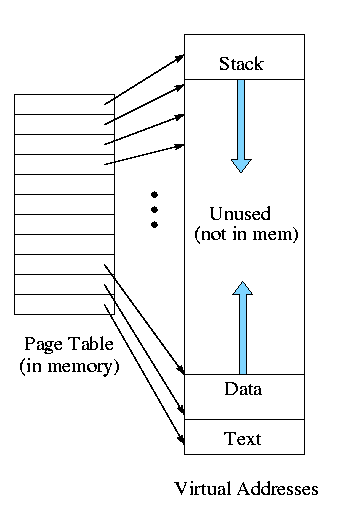

The page table might be too big.

-

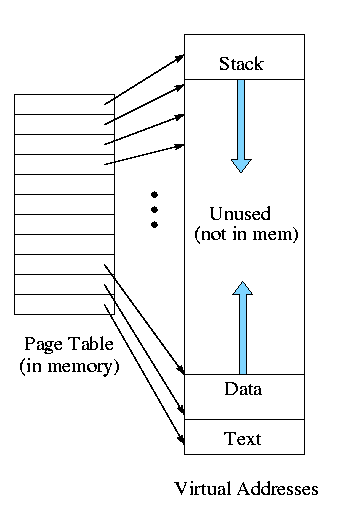

Currently we are considering contiguous virtual

addresses ranges (i.e. the virtual addresses have no holes).

-

Typically put the stack at one end of virtual address and the

global (or static) data at the other end and let them grow towards

each other.

-

The memory in between is unused.

-

This unused virtual memory can be huge (in address range) and

hence the page table will mostly contain unneeded PTEs.

-

Works fine if the maximum virtual address size is small, which

was once true (e.g., the PDP-11 of the 1970s) but is no longer the

case.

- The “fix” is to use multiple levels of mapping. We will see two examples below: two-level paging and

segmentation plus paging.
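To see how quickly a single-level table grows, here is a small worked calculation. The parameters (32-bit virtual addresses, 4 KB pages, 4-byte PTEs, and a 16-bit comparison case) are assumptions chosen for illustration, not figures from these notes.

```python
def flat_page_table_bytes(va_bits, page_bytes, pte_bytes):
    """Size of a single-level table with one PTE per virtual page."""
    num_pages = 2**va_bits // page_bytes
    return num_pages * pte_bytes

# Assumed small machine: 16-bit addresses, 4 KB pages, 4-byte PTEs.
print(flat_page_table_bytes(16, 4096, 4))   # 64 bytes

# Assumed 32-bit machine: 2**20 pages, so 4 MB of PTEs per process.
print(flat_page_table_bytes(32, 4096, 4))   # 4194304 bytes
```

Under these assumptions every process pays 4 MB for its table no matter how little of the address range it actually uses.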

Contents of a PTE

Each page has a corresponding page table entry (PTE).

The information in a PTE is for use by the hardware.

Information set by and used by the OS is normally kept in other OS tables.

The page table format is determined by the hardware so access routines

are not portable.

The following fields are often present.

- The valid bit. This tells if the page is currently loaded (i.e., is in a frame). If set, the frame number is valid. It is also called the presence or presence/absence bit. If a page is accessed with the valid bit unset, a page fault is generated by the hardware.

- The frame number. This is the main reason for the table. It gives the virtual to physical address translation.

- The modified bit. Indicates that some part of the page has been written since it was loaded. This is needed if the page is evicted so that the OS can tell if the page must be written back to disk.

- The referenced bit. Indicates that some word in the page has been referenced. Used to select a victim: unreferenced pages make good victims by the locality property (discussed below).

- Protection bits. For example one can mark text pages as execute only. This requires that boundaries between regions with different protection are on page boundaries. Normally many consecutive (in logical address) pages have the same protection so many page protection bits are redundant.

Protection is more

naturally done with segmentation.
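One way to picture these fields is as bits packed into a single integer. The layout below (bit positions, a 3-bit protection field, frame number starting at bit 12) is purely hypothetical; as noted above, the real format is dictated by the hardware. Protection checking is left out to keep the sketch short.

```python
# Hypothetical PTE layout, for illustration only.
VALID, MODIFIED, REFERENCED = 1 << 0, 1 << 1, 1 << 2
PROT_SHIFT = 3            # three assumed protection bits (e.g. r/w/x)
FRAME_SHIFT = 12          # frame number stored in the high bits

def make_pte(frame, prot, valid=True):
    """Build a PTE with R and M clear, as when a page is first loaded."""
    return (frame << FRAME_SHIFT) | (prot << PROT_SHIFT) | (VALID if valid else 0)

def access(pte, write):
    """Mimic the hardware: fault if not valid, else set R (and M on a write)."""
    if not pte & VALID:
        raise LookupError("page fault")
    pte |= REFERENCED
    if write:
        pte |= MODIFIED
    return pte

def frame_of(pte):
    return pte >> FRAME_SHIFT

pte = make_pte(frame=459, prot=0b101)
pte = access(pte, write=True)
print(frame_of(pte), bool(pte & MODIFIED), bool(pte & REFERENCED))  # 459 True True
```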

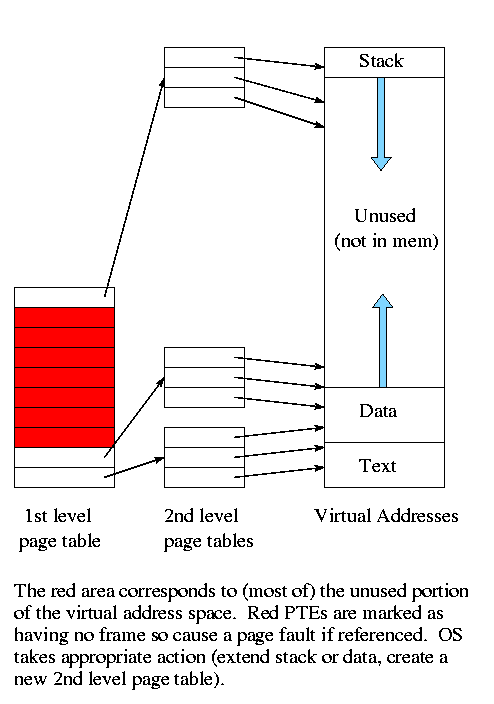

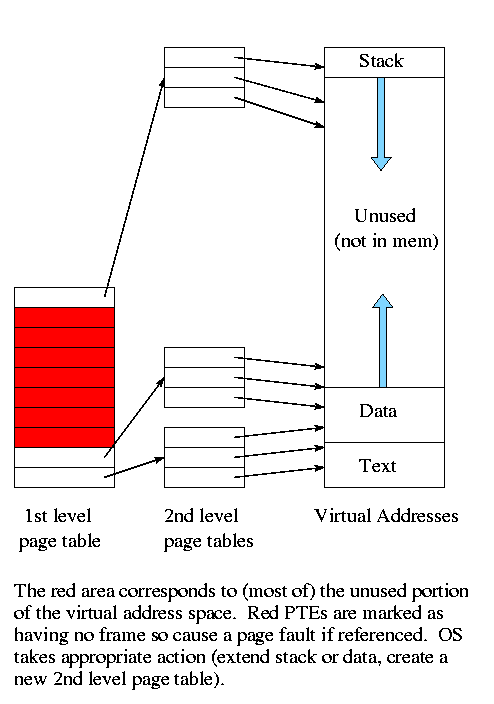

Multilevel page tables

Recall the previous diagram. Most of the virtual memory is the

unused space between the data and stack regions. However, with demand

paging this space does not waste real memory. But the single

large page table does waste real memory.

The idea of multi-level page tables (a similar idea is used in Unix

i-node-based file systems, which we study later) is to add a level of

indirection and have a page table containing pointers to page tables.

- Imagine one big page table.

- Call it the second level page table and cut it into pieces each the size of a page. Note that many (typically 1024 or 2048) PTEs fit in one page so there are far fewer of these pages than PTEs.

- Now construct a first level page table containing PTEs that point to these pages.

- This first level PT is small enough to store in memory. It contains one PTE for every page of PTEs in the 2nd level PT. A space reduction of one or two thousand.

- But since we still have the 2nd level PT, we have made the world bigger not smaller!

- Don't store in memory those 2nd level page tables all of whose PTEs refer to unused memory. That is, use demand paging on the (second level) page table.

Address translation with a 2-level page table

For a two level page table the virtual address is divided into

three pieces

+-----+-----+--------+
| P#1 | P#2 | Offset |
+-----+-----+--------+

- P#1 gives the index into the first level page table.

- Follow the pointer in the corresponding PTE to reach the frame containing the relevant 2nd level page table.

- P#2 gives the index into this 2nd level page table.

- Follow the pointer in the corresponding PTE to reach the frame containing the (originally) requested page.

- Offset gives the offset in this frame where the requested word is

located.
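The walk just described can be sketched in a few lines. The 10/10/12 bit split and the nested dictionaries standing in for the two levels are assumptions for illustration; a missing dictionary entry plays the role of a PTE whose valid bit is unset.

```python
P1_BITS, P2_BITS, OFFSET_BITS = 10, 10, 12   # assumed split of a 32-bit address

def split(vaddr):
    """Break a virtual address into (P#1, P#2, offset)."""
    offset = vaddr & ((1 << OFFSET_BITS) - 1)
    p2 = (vaddr >> OFFSET_BITS) & ((1 << P2_BITS) - 1)
    p1 = vaddr >> (OFFSET_BITS + P2_BITS)
    return p1, p2, offset

def translate_two_level(vaddr, first_level):
    p1, p2, offset = split(vaddr)
    second_level = first_level.get(p1)        # index the first level table with P#1
    if second_level is None or p2 not in second_level:
        raise LookupError("page fault")       # table or page not resident
    frame = second_level[p2]                  # index the second level table with P#2
    return (frame << OFFSET_BITS) | offset

# Only two second level tables exist; the unused middle of the
# address space costs nothing.
first_level = {0: {0: 5, 1: 9}, 1023: {1023: 2}}
vaddr = (0 << 22) | (1 << 12) | 0x2A
print(hex(translate_two_level(vaddr, first_level)))   # 0x902a
```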

Do an example on the board

The VAX used a 2-level page table structure, but with some wrinkles

(see Tanenbaum for details).

Naturally, there is no need to stop at 2 levels. In fact the SPARC

has 3 levels and the Motorola 68030 has 4 (and the number of bits of

Virtual Address used for P#1, P#2, P#3, and P#4 can be varied).

4.3.3: TLBs--Translation Lookaside Buffers (and General Associative Memory)

Note:

Tanenbaum suggests that “associative memory” and “translation

lookaside buffer” are synonyms. This is wrong. Associative memory

is a general concept and translation lookaside buffer is a special

case.

An associative memory is a content addressable memory. That is, you access the memory by giving the value

of some field and the hardware searches all the records and returns

the record whose field contains the requested value.

For example

Name  | Animal | Mood     | Color
======+========+==========+======
Moris | Cat    | Finicky  | Grey
Fido  | Dog    | Friendly | Black
Izzy  | Iguana | Quiet    | Brown
Bud   | Frog   | Smashed  | Green

If the index field is Animal and Iguana is given, the associative

memory returns

Izzy | Iguana | Quiet | Brown
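A software stand-in for the example above, as a rough sketch. The function name `associative_lookup` is made up, and the loop only imitates the result: the hardware compares all records at the same time rather than one after another.

```python
records = [
    {"Name": "Moris", "Animal": "Cat",    "Mood": "Finicky",  "Color": "Grey"},
    {"Name": "Fido",  "Animal": "Dog",    "Mood": "Friendly", "Color": "Black"},
    {"Name": "Izzy",  "Animal": "Iguana", "Mood": "Quiet",    "Color": "Brown"},
    {"Name": "Bud",   "Animal": "Frog",   "Mood": "Smashed",  "Color": "Green"},
]

def associative_lookup(records, field, value):
    """Return the record whose `field` holds `value`, or None if there is none."""
    for rec in records:
        if rec[field] == value:
            return rec
    return None

print(associative_lookup(records, "Animal", "Iguana")["Name"])   # Izzy
```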

A Translation Lookaside Buffer, or TLB, is an associative memory

where the index field is the page number. The other fields include

the frame number, dirty bit, valid bit, and others.

- A TLB is small and expensive but at least it is fast. When the page number is in the TLB, the frame number is returned very quickly.

- On a miss, the page number is looked up in the page table. The record found is placed in the TLB and a victim is discarded. There is no placement question since all entries are accessed at the same time.

But there is a replacement question.
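A small sketch of the miss path with a fixed-size TLB. The notes do not say how the victim is chosen, so discarding the oldest entry here is only an assumption, and the class and field names are made up.

```python
class TLB:
    """Toy TLB: a bounded page# -> frame# map with oldest-entry eviction."""
    def __init__(self, capacity):
        self.capacity = capacity
        self.entries = {}              # dicts keep insertion order
        self.hits = self.misses = 0

    def lookup(self, page, page_table):
        if page in self.entries:       # hit: frame number returned quickly
            self.hits += 1
            return self.entries[page]
        self.misses += 1
        frame = page_table[page]       # miss: look it up in the page table
        if len(self.entries) >= self.capacity:
            oldest = next(iter(self.entries))
            del self.entries[oldest]   # discard a victim (assumed policy)
        self.entries[page] = frame     # place the record in the TLB
        return frame

page_table = {p: 100 + p for p in range(8)}
tlb = TLB(capacity=2)
for page in [0, 1, 0, 2, 0]:
    tlb.lookup(page, page_table)
print(tlb.hits, tlb.misses)   # 1 4
```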

Homework: 17.

4.3.4: Inverted page tables

Keep a table indexed by frame number with the entry f containing the

number of the page currently loaded in frame f.

This is often called a frame table as well as an inverted page

table.

- Since modern machines have a smaller physical address space than virtual address space, the frame table is smaller than the corresponding page table.

- But on a TLB miss, the system must search the inverted page table.

- Would be hopelessly slow except that some tricks are employed.

- The book mentions some but not all of the tricks; we are not covering them.

For us, the frame table is searched on each TLB miss.
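The search on a TLB miss, without any of the tricks, might look like the sketch below. Storing a (process id, page number) pair in each entry is an assumption added so that pages of different processes can be told apart; the notes only say the entry holds the page number.

```python
# Index = frame number; entry = (pid, page#) or None for a free frame.
frame_table = [(7, 3), None, (7, 0), (4, 3)]

def find_frame(pid, page):
    """Linear search of the frame table; None means the page is not loaded."""
    for frame, entry in enumerate(frame_table):
        if entry == (pid, page):
            return frame
    return None

print(find_frame(7, 0))   # 2
print(find_frame(4, 0))   # None
```

The table has one entry per frame, so its size follows physical memory, but each miss costs a scan of the whole table.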

4.4: Page Replacement Algorithms (PRAs)

These are solutions to the replacement question.

Good solutions take advantage of locality.

- Temporal locality: If a word is referenced now, it is likely to be referenced in the near future.

- This argues for caching referenced words, i.e. keeping the referenced word near the processor for a while.

- Spatial locality: If a word is referenced now, nearby words are likely to be referenced in the near future.

- This argues for prefetching words around the currently referenced word.

- These are lumped together into locality: If any word in a page is referenced, each word in the page is “likely” to be referenced.

- So it is good to bring in the entire page on a miss and to keep the page in memory for a while.

- When programs begin there is no history so nothing to base locality on. At this point the paging system is said to be undergoing a “cold start”.

- Programs exhibit “phase changes”, when the set of pages referenced changes abruptly (similar to a cold start). At the point of a phase change, many page faults occur because locality is poor.

Pages belonging to processes that have terminated are of course

perfect choices for victims.

Pages belonging to processes that have been blocked for a long time

are good choices as well.

Random PRA

A lower bound on performance. Any decent scheme should do better.

4.4.1: The optimal page replacement algorithm (opt PRA) (aka Belady's min PRA)

Replace the page whose next

reference will be furthest in the future.

- Also called Belady's min algorithm.

- Provably optimal. That is, generates the fewest page faults.

- Unimplementable: Requires predicting the future.

-

Good upper bound on performance.
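Although it cannot be used in a running system, the algorithm is easy to simulate once the whole reference string is known. The sketch below assumes the reference string is a Python list; the function name and the sample string are illustrative.

```python
def opt_faults(refs, num_frames):
    """Count page faults under the optimal (Belady's min) policy."""
    frames, faults = set(), 0
    for i, page in enumerate(refs):
        if page in frames:
            continue
        faults += 1
        if len(frames) == num_frames:
            def next_use(p):
                # Position of the next reference to p, or infinity if none.
                try:
                    return refs.index(p, i + 1)
                except ValueError:
                    return float("inf")
            # Evict the page whose next reference is furthest in the future.
            frames.remove(max(frames, key=next_use))
        frames.add(page)
    return faults

refs = [1, 2, 3, 4, 1, 2, 5, 1, 2, 3, 4, 5]
print(opt_faults(refs, 3), opt_faults(refs, 4))   # 7 6
```

Running a real algorithm on the same string and comparing against these counts shows how far it is from the best possible.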

4.4.2: The not recently used (NRU) PRA

Divide the frames into four classes and make a random selection from

the lowest nonempty class.

- Not referenced, not modified

- Not referenced, modified

- Referenced, not modified

- Referenced, modified

Assumes that in each PTE there are two extra flags R (sometimes called

U, for used) and M (often called D, for dirty).

Also assumes that a page in a lower priority class is cheaper to evict.

- If not referenced, probably will not be referenced again soon and hence is a good candidate for eviction.

- If not modified, do not have to write it out so the cost of the eviction is lower.

- When a page is brought in, OS resets R and M (i.e. R=M=0).

- On a read, hardware sets R.

- On a write, hardware sets R and M.

We again have the prisoner problem: we do a good job of making little ones out of big ones, but not the reverse. That is, the bits get set but are never cleared. Need more resets.

Every k clock ticks, reset all R bits.

- Why not reset M?

Answer: Must have M accurate to know if victim needs to be written back.

- Could have two M bits, one accurate and one reset, but I don't know of any system (or proposal) that does so.

What if the hardware doesn't set these bits?

- OS can use tricks.

- When the bits are reset, make the PTE indicate the page is not resident (i.e. lie). On the page fault, set the appropriate bit(s).

-

We ignore the tricks and assume the hardware does set the bits.
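The class selection and the periodic reset can be sketched as below. Numbering the classes 0 to 3 via `2*R + M` is an assumption consistent with the order in which the classes are listed above; the function names are made up.

```python
import random

def nru_class(r, m):
    """0: R=0,M=0   1: R=0,M=1   2: R=1,M=0   3: R=1,M=1"""
    return 2 * r + m

def nru_victim(pages, rng=random):
    """pages maps page# -> (R, M); pick at random from the lowest nonempty class."""
    lowest = min(nru_class(r, m) for r, m in pages.values())
    candidates = [p for p, (r, m) in pages.items() if nru_class(r, m) == lowest]
    return rng.choice(candidates)

def clock_tick_reset(pages):
    """Every k ticks: clear all R bits but keep M accurate."""
    return {p: (0, m) for p, (r, m) in pages.items()}

pages = {10: (1, 1), 11: (1, 0), 12: (0, 1)}
print(nru_victim(pages))                     # 12, the only page in class 1
print(nru_victim(clock_tick_reset(pages)))   # 11, now alone in class 0
```

Note that page 12 is evicted before page 11 even though it is dirty: NRU ranks "not referenced" ahead of "not modified".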

4.4.3: FIFO PRA

Simple but poor since usage of the page is ignored.

Belady's Anomaly: Can have more frames yet generate

more faults.

Example given later.

The natural implementation is to have a queue of nodes each

pointing to a page.

- When a page is loaded, a node referring to the page is appended to the tail of the queue.

- When a page needs to be evicted, the head node is removed and the

page referenced is chosen as the victim.
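The queue implementation is short enough to simulate directly. The reference string below is a commonly used one for exhibiting Belady's anomaly and is not taken from these notes; the function name is illustrative.

```python
from collections import deque

def fifo_faults(refs, num_frames):
    """Count page faults under FIFO replacement."""
    queue, faults = deque(), 0        # head of the queue is the oldest page
    for page in refs:
        if page in queue:
            continue                  # usage is ignored: a hit changes nothing
        faults += 1
        if len(queue) == num_frames:
            queue.popleft()           # evict the page at the head
        queue.append(page)            # newly loaded page goes to the tail
    return faults

refs = [1, 2, 3, 4, 1, 2, 5, 1, 2, 3, 4, 5]
print(fifo_faults(refs, 3), fifo_faults(refs, 4))   # 9 10
```

With this string, going from 3 frames to 4 raises the fault count from 9 to 10.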

4.4.4: Second chance PRA

Similar to the FIFO PRA, but altered so that a page recently

referenced is given a second chance.

- When a page is loaded, a node referring to the page is appended to the tail of the queue. The R bit of the page is cleared.

- When a page needs to be evicted, the head node is removed and the page referenced is the potential victim.

- If the R bit on this page is unset (the page hasn't been referenced recently), then the page is the victim.

- If the R bit is set, the page is given a second chance. Specifically, the R bit is cleared, the node referring to this page is appended to the rear of the queue (so it appears to have just been loaded), and the current head node becomes the potential victim.

- What if all the R bits are set?

- We will move each page from the front to the rear and will arrive at the initial condition but with all the R bits now clear. Hence we will remove the same page as FIFO would have removed, but will have spent more time doing so.

- Might want to periodically clear all the R bits so that a long ago

reference is forgotten (but so is a recent reference).
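The victim search described above can be sketched as a loop over the queue. Here the queue is a `deque` of page numbers and the R bits live in a separate dictionary `r_bit`; both representations are assumptions for illustration.

```python
from collections import deque

def second_chance_victim(queue, r_bit):
    """queue: deque of page#s with the head at the left; r_bit: page# -> 0/1."""
    while True:
        page = queue.popleft()        # head node is the potential victim
        if r_bit[page]:
            r_bit[page] = 0           # second chance: clear R ...
            queue.append(page)        # ... and move the page to the rear
        else:
            del r_bit[page]           # R unset: this page is the victim
            return page

queue = deque([4, 7, 2])
r_bit = {4: 1, 7: 0, 2: 1}
print(second_chance_victim(queue, r_bit), list(queue))   # 7 [2, 4]

# If every R bit is set, the loop makes one full pass and evicts
# the same page FIFO would have chosen.
queue2 = deque([1, 2, 3])
r_bit2 = {1: 1, 2: 1, 3: 1}
print(second_chance_victim(queue2, r_bit2), list(queue2))   # 1 [2, 3]
```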