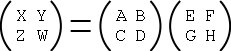

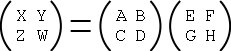

If you thought the integer multiplication algorithm involved pulling a rabbit out of a hat, get ready.

This algorithm was a sensation when Strassen discovered it. The standard algorithm for multiplying two $N \times N$ matrices takes $\Theta(N^3)$ time. We want to do better.

Do a matrix multiplication on the board and show that it is $\Theta(N^3)$. One way to see this is to note that each entry in the product is an inner product of a row of the first matrix with a column of the second. There are $N^2$ entries, and computing each inner product takes $\Theta(N)$ time.
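The inner-product view translates directly into three nested loops. The sketch below is a minimal illustration under assumed conventions (square matrices stored as Python lists of lists; the name `naive_matmul` is hypothetical). It also counts scalar multiplications, which come out to exactly $N \cdot N \cdot N = N^3$.

```python
def naive_matmul(P, Q):
    """Multiply two N x N matrices (lists of lists) with the standard algorithm.

    Returns the product and the number of scalar multiplications performed.
    """
    n = len(P)
    result = [[0] * n for _ in range(n)]
    mults = 0
    for i in range(n):
        for j in range(n):
            # Entry (i, j) is the inner product of row i of P and column j of Q:
            # n multiplications each, for n * n entries in total.
            total = 0
            for k in range(n):
                total += P[i][k] * Q[k][j]
                mults += 1
            result[i][j] = total
    return result, mults


if __name__ == "__main__":
    product, count = naive_matmul([[1, 2], [3, 4]], [[5, 6], [7, 8]])
    print(product)  # [[19, 22], [43, 50]]
    print(count)    # 8, i.e. 2**3
```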

First try. Assume $N$ is a power of 2 and break each matrix into four $N/2 \times N/2$ blocks, as shown on the right. Then $X = AE + BG$, and similarly for $Y$, $Z$, and $W$. This gives 8 multiplications of half-size matrices plus $\Theta(N^2)$ scalar additions, so

$$T(N) = 8T(N/2) + bN^2$$

Applying the master theorem (8 subproblems of size $N/2$, and $N^{\log_2 8} = N^3$ grows faster than the $bN^2$ term) gives $T(N) = \Theta(N^3)$, i.e., no improvement.
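To see concretely why the first try gains nothing, here is a minimal recursive sketch of it. The block layout is an assumption chosen to match $X = AE + BG$: the left factor is split as $\begin{pmatrix} A & B \\ C & D \end{pmatrix}$, the right as $\begin{pmatrix} E & F \\ G & H \end{pmatrix}$, and the product as $\begin{pmatrix} X & Y \\ Z & W \end{pmatrix}$. The helper names (`split`, `add`, `join`, `block_matmul`) are hypothetical. Counting scalar multiplications shows the total is still $N^3$.

```python
def split(M):
    """Split an N x N matrix (N even) into four N/2 x N/2 blocks."""
    h = len(M) // 2
    return ([row[:h] for row in M[:h]], [row[h:] for row in M[:h]],
            [row[:h] for row in M[h:]], [row[h:] for row in M[h:]])


def add(P, Q):
    """Entrywise sum of two equal-size matrices (Theta(N^2) scalar additions)."""
    return [[p + q for p, q in zip(rp, rq)] for rp, rq in zip(P, Q)]


def join(X, Y, Z, W):
    """Reassemble four N/2 x N/2 blocks into one N x N matrix."""
    return [rx + ry for rx, ry in zip(X, Y)] + [rz + rw for rz, rw in zip(Z, W)]


def block_matmul(P, Q):
    """First try: 8 half-size products per level (N must be a power of 2).

    Returns the product and the number of scalar multiplications used.
    """
    if len(P) == 1:
        return [[P[0][0] * Q[0][0]]], 1
    A, B, C, D = split(P)
    E, F, G, H = split(Q)
    count = 0

    def mul(L, R):
        # Recursive half-size product; accumulate its multiplication count.
        nonlocal count
        prod, c = block_matmul(L, R)
        count += c
        return prod

    X = add(mul(A, E), mul(B, G))
    Y = add(mul(A, F), mul(B, H))
    Z = add(mul(C, E), mul(D, G))
    W = add(mul(C, F), mul(D, H))
    return join(X, Y, Z, W), count


if __name__ == "__main__":
    print(block_matmul([[1, 2], [3, 4]], [[5, 6], [7, 8]]))
    # ([[19, 22], [43, 50]], 8)
```

The count satisfies $M(N) = 8M(N/2)$ with $M(1) = 1$, so $M(N) = 8^{\log_2 N} = N^3$, the same as the standard algorithm.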

But Strassen found a way! He (somehow, I don't know how) decided to consider the following 7 (not 8) multiplications of half-size matrices.

$$
\begin{aligned}
S_1 &= A(F - H) \\
S_2 &= (A + B)H \\
S_3 &= (C + D)E \\
S_4 &= D(G - E) \\
S_5 &= (A + D)(E + H) \\
S_6 &= (B - D)(G + H) \\
S_7 &= (A - C)(E + F)
\end{aligned}
$$

Now we can compute $X$, $Y$, $Z$, and $W$ from the $S$'s using only additions and subtractions:

$$
\begin{aligned}
X &= S_5 + S_6 + S_4 - S_2 \\
Y &= S_1 + S_2 \\
Z &= S_3 + S_4 \\
W &= S_1 - S_7 - S_3 + S_5
\end{aligned}
$$
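These identities are easy to mistype, so a quick numerical check is reassuring. The sketch below assumes the block layout in which the left factor is $\begin{pmatrix} A & B \\ C & D \end{pmatrix}$, the right is $\begin{pmatrix} E & F \\ G & H \end{pmatrix}$, and the product is $\begin{pmatrix} X & Y \\ Z & W \end{pmatrix}$, so the 8-product formulas are $X = AE + BG$, $Y = AF + BH$, $Z = CE + DG$, $W = CF + DH$. The blocks are random small integer matrices rather than scalars, so the check does not quietly rely on commutativity. The helper names are hypothetical.

```python
import random


def mat_mul(P, Q):
    """Standard product of two square matrices given as lists of lists."""
    n = len(P)
    return [[sum(P[i][k] * Q[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]


def add(P, Q):
    return [[p + q for p, q in zip(rp, rq)] for rp, rq in zip(P, Q)]


def sub(P, Q):
    return [[p - q for p, q in zip(rp, rq)] for rp, rq in zip(P, Q)]


def random_block(size):
    return [[random.randint(-9, 9) for _ in range(size)] for _ in range(size)]


def check_strassen_identities(block_size=2, trials=100):
    """Compare X, Y, Z, W built from S1..S7 with the 8-product formulas."""
    for _ in range(trials):
        A, B, C, D, E, F, G, H = (random_block(block_size) for _ in range(8))
        S1 = mat_mul(A, sub(F, H))
        S2 = mat_mul(add(A, B), H)
        S3 = mat_mul(add(C, D), E)
        S4 = mat_mul(D, sub(G, E))
        S5 = mat_mul(add(A, D), add(E, H))
        S6 = mat_mul(sub(B, D), add(G, H))
        S7 = mat_mul(sub(A, C), add(E, F))
        X = sub(add(add(S5, S6), S4), S2)   # S5 + S6 + S4 - S2
        Y = add(S1, S2)                     # S1 + S2
        Z = add(S3, S4)                     # S3 + S4
        W = add(sub(sub(S1, S7), S3), S5)   # S1 - S7 - S3 + S5
        expected = (
            add(mat_mul(A, E), mat_mul(B, G)),  # AE + BG
            add(mat_mul(A, F), mat_mul(B, H)),  # AF + BH
            add(mat_mul(C, E), mat_mul(D, G)),  # CE + DG
            add(mat_mul(C, F), mat_mul(D, H)),  # CF + DH
        )
        if (X, Y, Z, W) != expected:
            return False
    return True


if __name__ == "__main__":
    print(check_strassen_identities())  # True
```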

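Forming the $S$'s and combining them costs only $\Theta(N^2)$ scalar additions and subtractions, so this computation shows that

$$T(N) = 7T(N/2) + bN^2$$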
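As a cross-check on the master theorem (not a replacement for it), one can unroll the recurrence as a recursion tree. Level $i$ has $7^i$ subproblems of size $N/2^i$, each contributing $b(N/2^i)^2$ for its additions, and there are $7^{\log_2 N}$ leaves costing $T(1)$ each. The derivation uses the identity $a^{\log_2 N} = N^{\log_2 a}$ and the fact that a geometric sum with ratio greater than 1 is within a constant factor of its largest term.

$$
\begin{aligned}
T(N) &= \sum_{i=0}^{\log_2 N - 1} 7^i \, b\left(\frac{N}{2^i}\right)^2 + 7^{\log_2 N}\, T(1) \\
&= bN^2 \sum_{i=0}^{\log_2 N - 1} \left(\frac{7}{4}\right)^i + N^{\log_2 7}\, T(1) \\
&= \Theta\!\left(N^2 \left(\tfrac{7}{4}\right)^{\log_2 N}\right) + \Theta\!\left(N^{\log_2 7}\right) \\
&= \Theta\!\left(N^2 \cdot N^{\log_2 7 - 2}\right) + \Theta\!\left(N^{\log_2 7}\right) = \Theta\!\left(N^{\log_2 7}\right).
\end{aligned}
$$

The same computation with 8 in place of 7 gives ratio $8/4 = 2$ and $\Theta(N^{\log_2 8}) = \Theta(N^3)$, matching the first try.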

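Thus the master theorem now gives $T(N) = \Theta(N^{\log_2 7})$, and since $\log_2 7 \approx 2.807 < 3$, this beats the standard algorithm.

Theorem (Strassen): We can multiply two $N \times N$ matrices in time $O(N^{\log_2 7})$, which is $O(N^{2.81})$.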
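Putting the seven products and the four combinations together gives a complete recursive algorithm. The following is a minimal sketch, not a tuned implementation: it assumes $N$ is a power of 2, uses lists of lists, assumes the same block layout as $X = AE + BG$ (left factor $\begin{pmatrix} A & B \\ C & D \end{pmatrix}$, right factor $\begin{pmatrix} E & F \\ G & H \end{pmatrix}$), and its helper names are hypothetical. It recurses all the way down to $1 \times 1$ so that the multiplication count is exactly $7^{\log_2 N} = N^{\log_2 7}$.

```python
def split(M):
    """Split an N x N matrix (N even) into four N/2 x N/2 blocks."""
    h = len(M) // 2
    return ([row[:h] for row in M[:h]], [row[h:] for row in M[:h]],
            [row[:h] for row in M[h:]], [row[h:] for row in M[h:]])


def join(X, Y, Z, W):
    """Reassemble four N/2 x N/2 blocks into one N x N matrix."""
    return [rx + ry for rx, ry in zip(X, Y)] + [rz + rw for rz, rw in zip(Z, W)]


def add(P, Q):
    return [[p + q for p, q in zip(rp, rq)] for rp, rq in zip(P, Q)]


def sub(P, Q):
    return [[p - q for p, q in zip(rp, rq)] for rp, rq in zip(P, Q)]


def strassen(P, Q):
    """Multiply N x N matrices (N a power of 2) using 7 recursive products.

    Returns the product and the number of scalar multiplications used.
    """
    if len(P) == 1:
        return [[P[0][0] * Q[0][0]]], 1
    A, B, C, D = split(P)
    E, F, G, H = split(Q)
    S1, c1 = strassen(A, sub(F, H))
    S2, c2 = strassen(add(A, B), H)
    S3, c3 = strassen(add(C, D), E)
    S4, c4 = strassen(D, sub(G, E))
    S5, c5 = strassen(add(A, D), add(E, H))
    S6, c6 = strassen(sub(B, D), add(G, H))
    S7, c7 = strassen(sub(A, C), add(E, F))
    X = sub(add(add(S5, S6), S4), S2)   # S5 + S6 + S4 - S2
    Y = add(S1, S2)                     # S1 + S2
    Z = add(S3, S4)                     # S3 + S4
    W = add(sub(sub(S1, S7), S3), S5)   # S1 - S7 - S3 + S5
    return join(X, Y, Z, W), c1 + c2 + c3 + c4 + c5 + c6 + c7


if __name__ == "__main__":
    print(strassen([[1, 2], [3, 4]], [[5, 6], [7, 8]]))
    # ([[19, 22], [43, 50]], 7)
```

For $N = 8$ this performs $7^3 = 343$ scalar multiplications versus $8^3 = 512$ for the standard algorithm. In practice, implementations usually stop recursing below some cutoff size and switch to the standard algorithm, since the extra additions dominate for small blocks; the cutoff is omitted here only to keep the count exact.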

Remarks:

Homework: R-5.6