Homework: R-5.3

Problem Set 4, Problem 3.

Part A. C-5.3 (Do not argue why your algorithm is correct).

Part B. C-5.4.

Remark: Problem set 4 (the last problem set) is now complete and due in 3 lectures, thurs 2 Dec. 2003.

The idea of divide and conquer is that we solve a large problem by solving a number of smaller problems and then we apply this idea recursively.

From the description above we see that the complexity of a divide and conquer solution has three parts.

Let T(N) be the (worst case) time required to solve an instance of the problem having size N. The time required to split the problem and combine the subproblems is also typically a function of N, say f(N).

More interesting is the time required to solve the subproblems. If the problem has been split in half then the time required for each subproblem is T(N/2).

Since the total time required includes splitting, solving both subproblems, and combining, we get

T(N) = 2T(N/2)+f(N)

Very often the splitting and combining are fast, specifically linear in N. Then we get

T(N) = 2T(N/2)+rN

for some constant r. (We probably should say ≤ rather than =, but we will soon be using big-Oh and friends so we can afford to be a little sloppy.)

What if N is not divisible by 2? We should be using floor or ceiling or something, but we won't. Instead we will assume for recurrences like this one that N is a power of two, so that we can keep dividing by 2 and get an integer. (The general case is not more difficult, but it is more tedious.)

But that is crazy! There is no integer that can be divided by 2 forever and still give an integer! At some point we will get to 1, the so-called base case. But when N=1, the problem is almost always trivial and has an O(1) solution. So we write either

$$T(N) = \begin{cases} r & \text{if } N = 1 \\ 2T(N/2)+rN & \text{if } N > 1 \end{cases}$$

or

T(1) = r

T(N) = 2T(N/2)+rN if N > 1
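To get a feel for the numbers, the recurrence can be evaluated directly. The sketch below is only an illustration: the function name `t` and the default r = 1 are assumptions made here, and N is assumed to be a power of two as in the text.

```python
def t(n, r=1):
    """Evaluate T(1) = r, T(N) = 2T(N/2) + rN for N a power of two."""
    if n < 1 or n & (n - 1) != 0:
        raise ValueError("n must be a positive power of two")
    if n == 1:
        return r  # base case
    # two half-size subproblems plus linear split/combine work
    return 2 * t(n // 2, r) + r * n


for n in (1, 2, 4, 8, 16):
    print(n, t(n))
```

With r = 1 the values for N = 1, 2, 4, 8, 16 are 1, 4, 12, 32, 80.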

We will now see three techniques that, when cleverly applied, can solve a number of problems. We will also see a theorem that, when its conditions are met, gives the solution without our being clever.

This technique is also called "plug and chug" since we plug the equation into itself and chug along.

T(N) = 2T(N/2)+rN

= 2[ ]+rN

= 2[2T((N/2)/2)+r(N/2)]+rN

= 4T(N/4)+2rN now do it again

= 8T(N/8)+3rN

A flash of inspiration is now needed. When the smoke clears we get

T(N) = 2^i T(N/2^i)+irN

When i=log(N) (logarithm base 2), N/2^i=1 and we have the base case. This gives the final result

T(N) = 2^log(N) T(N/2^log(N))+log(N)rN

= N T(1) +log(N)rN

= N r +log(N)rN

= rN+rNlog(N)

Hence T(N) is O(Nlog(N))
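The closed form can be sanity-checked against the recurrence for small powers of two. This is a sketch under assumptions: the names `t_recursive` and `t_closed` are made up for the illustration, r is taken to be an integer, and log is base 2.

```python
def t_recursive(n, r=1):
    """T(1) = r, T(N) = 2T(N/2) + rN, for N a power of two."""
    if n == 1:
        return r
    return 2 * t_recursive(n // 2, r) + r * n


def t_closed(n, r=1):
    """Closed form rN + rN*log(N), with log base 2 and N a power of two."""
    log_n = n.bit_length() - 1  # exact log2 for a power of two
    return r * n + r * n * log_n


for k in range(11):
    n = 2 ** k
    assert t_recursive(n) == t_closed(n)
print("closed form matches the recurrence for N = 1, 2, ..., 1024")
```

A check like this does not prove the formula, but it catches algebra slips in the substitution steps.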

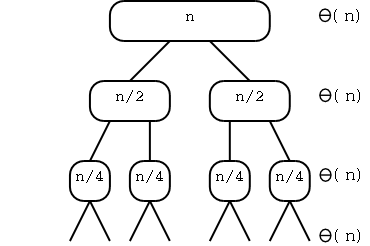

The idea is similar but we use a visual approach.

We already studied the recursion tree when we analyzed merge-sort. Let's look at it again.

The diagram shows the various subproblems that are executed, with their sizes. (It is not always true that we can divide the problem as evenly as shown.) The splitting and combining that occur at each node (plus calling the recursive routine) take only time linear in the number of elements at that node. For each level of the tree the total number of elements is N, so the time for all the nodes on that level is Θ(N), and we just need to find the height of the tree. When the tree is split evenly as illustrated, the sizes of the nodes go down by a factor of two at each level, so we reach nodes of size 1 after log(N) levels (assuming N is a power of 2). Thus T(N) is Θ(Nlog(N)).
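The level-by-level accounting can be sketched in a few lines. The function name `level_costs` and the cost model (each node of size s costs r·s, so a size-1 leaf costs r) are assumptions chosen to match the recurrence T(1) = r, T(N) = 2T(N/2)+rN, with N a power of two.

```python
def level_costs(n, r=1):
    """Return the total cost of each level of the recursion tree, root first."""
    costs = []
    nodes, size = 1, n
    while True:
        # every node on this level costs r * size, and there are `nodes` of them
        costs.append(nodes * r * size)
        if size == 1:
            break  # reached the leaves
        nodes, size = nodes * 2, size // 2
    return costs


costs = level_costs(8)
print(costs)       # one entry per level of the tree
print(sum(costs))  # total work over the whole tree
```

For N = 8 there are log(N)+1 = 4 levels, each costing 8r, for a total of 32r, which agrees with rN+rNlog(N).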

The guess and test method is really only useful after you have had practice with recurrences. The idea is that, when confronted with a new problem, you recognize that it is similar to a problem whose solution you have already seen, and you guess that the solution to the new problem is similar to the old one.

You then plug your guess into the recurrence and test that it works. For example if we guessed that the solution of

T(1) = r

T(N) = 2T(N/2)+rN if N > 1

was

T(N) = rN+rNlog(N)

we would plug it in and check that

T(1) = r

T(N) = 2T(N/2)+rN if N > 1
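The check itself is a short calculation. As a sketch, taking log to be base 2 so that log(N/2) = log(N) - 1, substituting the guess into both lines gives:

```latex
\begin{align*}
T(1) &= r\cdot 1 + r\cdot 1\cdot\log(1) = r \\
2T(N/2) + rN &= 2\left[\, r\frac{N}{2} + r\frac{N}{2}\log\frac{N}{2} \,\right] + rN \\
             &= rN + rN(\log N - 1) + rN \\
             &= rN + rN\log N = T(N)
\end{align*}
```

Both conditions hold, so the guess is correct.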

But we don't have enough experience for this to be very useful.

In this section, we apply the heavy artillery. The following theorem, which we will not prove, enables us to solve some problems by just plugging in. It essentially does the guess part of guess and test for us.

We will only be considering complexities of the form

T(1) = c

T(N) = aT(N/b)+f(N) if N > 1

The idea is that we have done some sort of divide and conquer where there are a subproblems of size at most N/b. As mentioned earlier f(N) accounts for the time to divide the problem into subproblems and to combine the subproblem solutions.
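Recurrences of this general form are easy to evaluate numerically, which is handy for checking a proposed solution. The sketch below is an illustration only: the name `solve` and its parameter order are assumptions, N is assumed to be a power of b, and f is passed as an ordinary Python function.

```python
def solve(a, b, f, n, c=1):
    """Evaluate T(1) = c, T(N) = a*T(N/b) + f(N), for N a power of b."""
    if n < 1 or (n > 1 and n % b != 0):
        raise ValueError("n must be a positive power of b")
    if n == 1:
        return c  # base case
    # a subproblems of size N/b, plus f(N) to divide and combine
    return a * solve(a, b, f, n // b, c) + f(n)


# The recurrence studied earlier: a = 2, b = 2, f(N) = N (that is, r = 1).
print(solve(2, 2, lambda n: n, 8))
```

With a = 2, b = 2, f(N) = N and c = 1 this reproduces the earlier recurrence with r = 1.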

Theorem [The Master Theorem]: Let f(N) and T(N) be as above.

Proof: Not given.

Remarks:

Now we can solve some problems easily and will do two serious problems after that.

Example: T(N) = 4T(N/2)+N.
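One way to check this example without the theorem is iterative substitution. A sketch, assuming T(1) = c, N a power of two, and log base 2:

```latex
\begin{align*}
T(N) &= 4T(N/2) + N \\
     &= 4\left[4T(N/4) + N/2\right] + N = 16T(N/4) + 3N \\
     &= 64T(N/8) + 7N \\
     &= 4^i\,T(N/2^i) + (2^i - 1)N \\
     &= N^2\,T(1) + (N-1)N \qquad (i = \log N) \\
     &= (c+1)N^2 - N
\end{align*}
```

So under these assumptions T(N) is Θ(N^2).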

Example: T(N) = 2T(N/2) + Nlog(N)
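Iterative substitution also handles this example. A sketch, assuming T(1) = c, N a power of two, and log base 2, so that log(N/2^j) = log(N) - j:

```latex
\begin{align*}
T(N) &= 2T(N/2) + N\log N \\
     &= 2\left[2T(N/4) + \frac{N}{2}\log\frac{N}{2}\right] + N\log N
      = 4T(N/4) + N(\log N - 1) + N\log N \\
     &= 2^i\,T(N/2^i) + N\sum_{j=0}^{i-1}(\log N - j) \\
     &= N\,T(1) + N\,\frac{\log N\,(\log N + 1)}{2} \qquad (i = \log N) \\
     &= cN + \tfrac{1}{2}N\log^2 N + \tfrac{1}{2}N\log N
\end{align*}
```

So under these assumptions T(N) is Θ(Nlog^2(N)).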