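Alternate proof that the amortized time is O(1). Note that saying the amortized time is O(1) means that the total time for N operations is O(N). The new proof is a two-step procedure: first show that the total number of array cells ever allocated is O(N), then show that the algorithm does only a bounded number of operations on each cell.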

For step one we note that when the N add operations are complete, the size of the array will be $FS<2N$, with $FS$ a power of 2. Let $FS=2^k$. So the total size used is $TS=1+2+4+8+\cdots+FS=\sum_{i=0}^{k}2^i$. We already proved that this is $(2^{k+1}-1)/(2-1)=2^{k+1}-1=2FS-1<4N$, as desired.

An easier way to see that the sum is $2^{k+1}-1$ is to write $1+2+4+8+\cdots+2^k$ in binary, in which case we get (for k=5)

          1
         10
        100
       1000
      10000
    +100000
    -------
     111111 = 1000000-1 = 2^(5+1)-1

The second part is clear: for each cell the algorithm does only a bounded number of operations. The cell is allocated, a value is copied into the cell, and a value is copied out of the cell (and into another cell).
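Both steps can be checked numerically. The following is a minimal sketch, assuming the array starts with one cell and doubles whenever it is full; the function name `simulate_adds` and its counters are illustrative, not from the book.

```python
def simulate_adds(n):
    """Simulate n add operations on a doubling array.

    Returns (final_size, total_cells_allocated, total_copies).
    Assumes the array starts with 1 cell and doubles when full.
    """
    capacity = 1        # current array size, always a power of 2 (FS at the end)
    size = 0            # number of elements stored
    allocated = 1       # total cells ever allocated (TS in the text)
    copies_in = 0       # values written into some cell
    copies_out = 0      # values read out of an old array during a resize
    for _ in range(n):
        if size == capacity:
            capacity *= 2
            allocated += capacity
            copies_out += size   # every old value leaves its old cell
            copies_in += size    # ... and enters a cell of the new array
        size += 1
        copies_in += 1           # the newly added value enters its cell
    return capacity, allocated, copies_in + copies_out


for n in (1, 5, 1000):
    fs, ts, copies = simulate_adds(n)
    print(n, fs, ts, copies)
```

For every N tried, FS stays below 2N, TS equals 2FS-1 (so it is below 4N), and the number of copies is at most two per allocated cell.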

The book is quite clear. I have little to add.

You might want to know

Assume you believe the running time t(n) of an algorithm is $\Theta(n^d)$ for some specific d and you want to both verify your assumption and find the multiplicative constant.

Make a plot of $(n, t(n)/n^d)$. If you are right the points should tend toward a horizontal line and the height of this line is the multiplicative constant.
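As a small illustration of the ratio test, the sketch below tabulates $t(n)/n^d$ instead of plotting it. The running-time function `hypothetical_t` is made up for the example (it stands in for measured timings) and the helper name `ratios` is likewise an assumption.

```python
def ratios(ns, t, d):
    """Return the points (n, t(n) / n**d) that one would plot."""
    return [(n, t(n) / n**d) for n in ns]


def hypothetical_t(n):
    # Made-up running time: Theta(n^2) with multiplicative constant 3.
    return 3 * n**2 + 50 * n + 7


for n, ratio in ratios([10, 100, 1000, 10000], hypothetical_t, 2):
    print(n, ratio)
```

The ratios decrease toward 3 as n grows, which is the horizontal line one would see in the plot. With a wrong guess for d the ratios would drift toward 0 or grow without bound instead of levelling off.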

Homework: R-1.29

What if you believe it is polynomial but don't have a guess for d?

Ans: Use ...

Plot (n, t(n)) on log-log paper. If t(n) is $\Theta(n^d)$, say t(n) approaches $b\,n^d$, then log(t(n)) approaches log(b)+d(log(n)).

So when you plot (log(n), log(t(n))) (i.e., when you use log-log paper), you will see the points approach (for large n) a straight line whose slope is the exponent d and whose y-intercept is log(b), from which the multiplicative constant b can be recovered.
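The same idea can be carried out numerically by fitting a straight line to the points (log(n), log(t(n))). The sketch below uses an ordinary least-squares fit written out by hand; the function name `fit_power_law` and the sample data are assumptions made for the illustration.

```python
import math


def fit_power_law(ns, ts):
    """Least-squares line through (log n, log t); returns (d, b).

    The slope of the line estimates the exponent d and the
    y-intercept estimates log(b), so b = exp(intercept).
    """
    xs = [math.log(n) for n in ns]
    ys = [math.log(t) for t in ts]
    m = len(xs)
    mean_x = sum(xs) / m
    mean_y = sum(ys) / m
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    d = sxy / sxx
    log_b = mean_y - d * mean_x
    return d, math.exp(log_b)


ns = [10, 100, 1000, 10000]
ts = [3 * n**2 for n in ns]      # made-up timings with d = 2, b = 3
d, b = fit_power_law(ns, ts)
print(round(d, 6), round(b, 6))
```

With real measurements the lower-order terms bend the points for small n, so it is usually better to fit only the larger values of n.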

Homework: R-1.30

Stacks implement a LIFO (last in first out) policy. All the action occurs at the top of the stack, primarily with the push(e) and pop() operations.

The stack ADT supports

There is a simple implementation using an array A and an integer s (the current size). A[s-1] contains the TOS.

Objection (your honor). The ADT says we can always push. A simple array implementation would need to signal an error if the stack is full.

Sustained! What do you propose instead?

An extendable array.

Good idea.
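A minimal sketch of the array-based stack with an extendable array follows. It keeps the array A and the size s from the description above; the class name `ArrayStack` and the method names `top`, `size`, and `is_empty` are assumptions chosen for the example.

```python
class ArrayStack:
    """Stack stored in an array A with current size s; A[s-1] is the TOS."""

    def __init__(self, capacity=1):
        self._a = [None] * capacity   # the array A
        self._s = 0                   # the current size s

    def size(self):
        return self._s

    def is_empty(self):
        return self._s == 0

    def push(self, e):
        if self._s == len(self._a):
            # Array is full: allocate one twice as large and copy over,
            # so push never has to signal a "stack full" error.
            bigger = [None] * (2 * len(self._a))
            bigger[:self._s] = self._a
            self._a = bigger
        self._a[self._s] = e
        self._s += 1

    def top(self):
        if self.is_empty():
            raise IndexError("stack is empty")
        return self._a[self._s - 1]

    def pop(self):
        e = self.top()
        self._s -= 1
        self._a[self._s] = None       # drop the reference to the popped element
        return e


stack = ArrayStack()
for value in (10, 20, 30):
    stack.push(value)
print(stack.pop(), stack.pop(), stack.size())   # 30 20 1
```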

Homework: Assume a software system has 100 stacks and 100,000 elements that can be on any stack. You do not know how the elements are to be distributed on the stacks. However, once an element is put on one stack, it never moves. If you used a normal array-based implementation for the stacks, how much memory would you need? What if you use an extendable-array-based implementation? Now answer the same question, but assume you have Θ(S) stacks and Θ(E) elements.

Stacks work great for implementing procedure calls since procedures have stack based semantics. That is, last called is first returned and local variables allocated with a procedure are deallocated when the procedure returns.

So have a stack of "activation records" in which you keep the return address and the local variables.

Support for recursive procedures comes for free. For languages with static memory allocation (e.g., Fortran) one can store the local variables with the method. Early versions of Fortran forbid recursion so that memory allocation can be static. Recursion adds considerable flexibility to a language at some cost in efficiency (not part of this course).
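To make the stack-based semantics concrete, here is a small sketch that evaluates the usual recursive factorial without recursion, by keeping its own stack of activation records. Each record here holds only the local variable n; a real activation record would also hold a return address. The function name is illustrative.

```python
def factorial_with_explicit_stack(n):
    """Compute n! by managing the activation records by hand."""
    stack = []                       # stack of activation records
    # "Call" phase: each pending call factorial(n) pushes a record.
    while n > 1:
        stack.append({"n": n})
        n -= 1
    result = 1                       # value returned by the base case
    # "Return" phase: records are popped in LIFO order, so the last
    # call made is the first one to return.
    while stack:
        record = stack.pop()
        result *= record["n"]
    return result


print(factorial_with_explicit_stack(5))   # 120
```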

I am reviewing modulo since I believe it is no longer taught in high school.

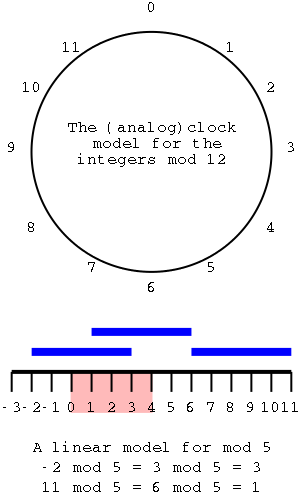

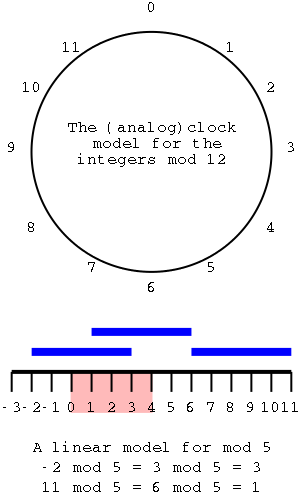

The top diagram shows an almost ordinary analog clock. The major difference is that instead of 12 we have 0. The hands would be useful if this were a video, but I omitted them for the static picture. Positive numbers go clockwise (cw) and negative counter-clockwise (ccw). The numbers shown are the values mod 12. This example is good for showing arithmetic. (2-5) mod 12 is obtained by starting at 2 and moving 5 hours ccw, which gives 9. (-7) mod 12 is (0-7) mod 12, which is obtained by starting at 0 and going 7 hours ccw, giving 5.

To get mod 8, divide the circle into 8 hours instead of 12.

The bottom picture shows mod 5 in a linear fashion. In pink are the 5 values one can get when doing mod 5, namely 0, 1, 2, 3, and 4. I only illustrate numbers from -3 to 11 but that is just due to space limitations. Each blue bar is 5 units long so the numbers at its endpoints are equal mod 5 (since they differ by 5). So you just lay off the blue bar until you wind up in the pink.
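The "lay off the blue bar" procedure can be written directly as a loop. This is a deliberately naive sketch (it takes time proportional to |x|/m) whose only purpose is to mirror the picture; the function name is an assumption.

```python
def mod_by_laying_off_bars(x, m=5):
    """Move x by steps of m until it lands in the range 0..m-1."""
    while x < 0:
        x += m      # lay a bar of length m to the right
    while x >= m:
        x -= m      # lay a bar of length m to the left
    return x


print(mod_by_laying_off_bars(-3))         # 2
print(mod_by_laying_off_bars(11))         # 1
print(mod_by_laying_off_bars(2 - 5, 12))  # 9, the clock example
```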

Homework: Using the real mod (let's call it RealMod) evaluate

Queues implement a FIFO (first in first out) policy. Elements are inserted at the rear and removed from the front using the enqueue and dequeue operations respectively.

The queue ADT supports

My favorite high-level language, Ada, gets it right in the obvious way: Ada defines both mod and remainder (Ada extends the math definition of mod to the case where the second argument is negative).

In the familiar case when x≥0 and y>0, mod and remainder are equal. Unfortunately the book uses mod sometimes when x<0 and consequently needs to occasionally add an extra y to get the true mod.
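The difference between the two operations, and the "add an extra y" repair, can be seen in a short sketch. Python's `math.fmod` returns a remainder with the sign of x (the behavior of `%` in C and Java), while Python's own `%` with a positive second argument already gives the true mod. The names `truncated_remainder` and `real_mod` are assumptions for the example.

```python
import math


def truncated_remainder(x, y):
    """Remainder that takes the sign of x, as % does in C and Java."""
    return int(math.fmod(x, y))


def real_mod(x, y):
    """True mod for y > 0: the result is always in 0..y-1."""
    r = truncated_remainder(x, y)
    return r + y if r < 0 else r    # add an extra y when the remainder is negative


print(truncated_remainder(-3, 10))  # -3
print(real_mod(-3, 10))             # 7
print(-3 % 10)                      # 7, Python's % agrees with the true mod here
```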

End of personal rant

Returning to relevant issues, we note that for queues we need front and rear "pointers" f and r. Since we are using arrays, f and r are actually indexes, not pointers. Calling the array Q, Q[f] is the front element of the queue, i.e., the element that would be returned by dequeue(). Similarly, Q[r] is the element into which enqueue(e) would place e. There is one exception: if f=r, the queue is empty so Q[f] is not the front element.

Without writing the code, we see that f will be increased by each dequeue and r will be increased by every enqueue.

Assume Q has N slots Q[0]…Q[N-1] and the queue is initially empty with f=r=0. Now consider enqueue(1); dequeue(); enqueue(2); dequeue(); enqueue(3); dequeue(); …. There is never more than one element in the queue, but f and r keep growing so after N enqueue(e);dequeue() pairs, we cannot issue another operation.

The solution to this problem is to treat the array as circular, i.e., right after Q[N-1] we find Q[0]. The way to implement this is to arrange that when either f or r is N-1, adding 1 gives 0 not N. So the increment statements become

f←(f+1) mod N

r←(r+1) mod N

Note: Recall that we had some grief due to our starting arrays and loops at 0. For example, the fifth slot of A is A[4] and the fifth iteration of "for i←0 to 30" occurs when i=4. The updates of f and r directly above show one of the advantages of starting at 0; they are less pretty if the array starts at 1.

The size() of the queue seems to be r-f, but this is not always correct since the array is circular.

For example let N=10 and consider an initially empty queue with f=r=0 that has

enqueue(10);enqueue(20);dequeue();enqueue(30);dequeue();enqueue(40);dequeue()

applied. The queue has one element, f=3, and r=4.

Now apply 6 more enqueue(e) operations

enqueue(50);enqueue(60);enqueue(70);enqueue(80);enqueue(90);enqueue(100)

At this point the queue has 7 elements, f=3, and r=0. Clearly the size() of the queue is not r-f=-3. It is instead 7, the number of elements in the queue.

The problem is that r in some sense is 10 not 0 since there were 10 enqueue(e) operations. In fact if we kept 2 values for f and 2 for r, namely the value before the mod and after, then size() would be rBeforeMod-fBeforeMod. Instead, we use the following inelegant formula.

size() = (r-f+N) mod N

Remark: If Java's definition of -3 mod 10 gave 7 (as it should) instead of -3, we could use the more attractive formula

size() = (r-f) mod N.
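The size formula can be checked by replaying the N=10 example while tracking the indexes both before and after the mod. This is only a bookkeeping sketch (no elements are stored); the function name `replay` and the `*_before_mod` counters are assumptions for the illustration.

```python
def replay(ops, n):
    """Track f and r for a sequence of "enqueue"/"dequeue" operations.

    Returns (f, r, size_from_unwrapped_counters, size_from_formula).
    """
    f = r = 0
    f_before_mod = r_before_mod = 0
    for op in ops:
        if op == "enqueue":
            r_before_mod += 1
            r = (r + 1) % n
        else:
            f_before_mod += 1
            f = (f + 1) % n
    return f, r, r_before_mod - f_before_mod, (r - f + n) % n


ops = ["enqueue", "enqueue", "dequeue", "enqueue", "dequeue",
       "enqueue", "dequeue"] + ["enqueue"] * 6
print(replay(ops, 10))   # (3, 0, 7, 7)
```

After the ten enqueues and three dequeues, r has wrapped around to 0 while f is 3, so r-f is -3, yet both the unwrapped counters and (r-f+N) mod N give the correct size 7.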

Since isEmpty() is simply an abbreviation for the test size()=0, it is just testing if r=f.

    Algorithm front():
        if isEmpty() then
            signal an error    // throw QueueEmptyException
        return Q[f]

    Algorithm dequeue():
        if isEmpty() then
            signal an error    // throw QueueEmptyException
        temp←Q[f]
        Q[f]←NULL              // for security or debugging
        f←(f+1) mod N
        return temp

    Algorithm enqueue(e):
        if size() = N-1 then
            signal an error    // throw QueueFullException
        Q[r]←e
        r←(r+1) mod N
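The three algorithms, together with size() and isEmpty(), translate almost line for line into a runnable sketch. The class name `ArrayQueue` is an assumption; the exception names follow the comments in the pseudocode. As in the pseudocode, one slot is always left unused so that f=r unambiguously means the queue is empty.

```python
class QueueEmptyException(Exception):
    pass


class QueueFullException(Exception):
    pass


class ArrayQueue:
    """Queue stored in a circular array Q of N slots, holding at most N-1 elements."""

    def __init__(self, n):
        self._q = [None] * n
        self._n = n
        self._f = 0        # index of the front element
        self._r = 0        # index where the next enqueue will store

    def size(self):
        return (self._r - self._f + self._n) % self._n

    def is_empty(self):
        return self._f == self._r

    def front(self):
        if self.is_empty():
            raise QueueEmptyException()
        return self._q[self._f]

    def dequeue(self):
        if self.is_empty():
            raise QueueEmptyException()
        temp = self._q[self._f]
        self._q[self._f] = None            # for security or debugging
        self._f = (self._f + 1) % self._n
        return temp

    def enqueue(self, e):
        if self.size() == self._n - 1:
            raise QueueFullException()
        self._q[self._r] = e
        self._r = (self._r + 1) % self._n


q = ArrayQueue(10)
q.enqueue(10); q.enqueue(20); q.dequeue()
q.enqueue(30); q.dequeue()
q.enqueue(40); q.dequeue()
for e in (50, 60, 70, 80, 90, 100):
    q.enqueue(e)
print(q.size(), q.front())   # 7 40
```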