================ Start Lecture #1 ================

Basic Algorithms

2002-03 Fall

Monday and Wednesday 11-12:15

Ciww 102

Chapter 0 Administrivia

I start at 0 so that when we get to chapter 1, the numbering will

agree with the text.

0.1 Contact Information

- gottlieb@nyu.edu (best method)

- http://allan.ultra.nyu.edu/~gottlieb two el's in allan

- 715 Broadway, Room 712

0.2 Course Web Page

There is a web site for the course. You can find it from my home

page.

- You can find these lecture notes on the course home page.

Please let me know if you can't find it.

- I mirror my home page on the CS web site.

- I also mirror the course pages on the CS web site.

- But, the official site is allan.ultra.nyu.edu.

It is the one I personally manage.

- The notes will be updated as bugs are found.

- I will also produce a separate page for each lecture after the

lecture is given. These individual pages

might not get updated as quickly as the large page

0.3 Textbook

The course text is Goodrich and Tamassia: ``Algorithm Design:

Foundations, Analysis, and Internet Examples.

- Available in bookstore.

- I expect to cover most of part I and some of part II

0.4 Computer Accounts and Mailman Mailing List

- You are entitled to a computer account, please get it asap.

- Sign up for the Mailman mailing list for the course.

http://www.cs.nyu.edu/mailman/listinfo/v22_0310_002_fa03

- If you want to send mail just to me, use gottlieb@nyu.edu not

the mailing list.

- Questions about the lectures or homeworks should go to the mailing list.

You may answer questions posed on the list as well.

- I will respond to all questions; if another student has answered the

question before I get to it, I will confirm if the answer given is

correct.

0.5 Grades

The major components of the grade will be the midterm, the final,

and problem sets. I will post (soon) the weights for each.

0.6 Midterm

We will have a midterm. As the time approaches we will vote in

class for the exact date. Please do not schedule any trips during

days when the class meets until the midterm date is scheduled.

0.7 Homeworks, Problem Sets, and Labs

If you had me for 202, you know that in systems courses I also

assign labs. Basic algorithms is not a systems

course; there are no labs. There are homeworks and problem sets,

very few if any of these will require the computer. There is a

distinction between homeworks and problem sets.

Problem sets are

- Required.

- Due several lectures later (date given on assignment).

- Graded and form part of your final grade.

- Penalized for lateness, up to one week.

Homeworks are

- Optional.

- Due the beginning of Next lecture.

- Not accepted late.

- Mostly from the book.

- Collected and returned.

- Able to help, but not hurt, your grade.

0.8 Recitation

I run a recitation session on tuesdays from 2-3:15.

I believe there is another recitation section.

You need attend only one.

0.9 Obtaining Help

Good methods for obtaining help include

- Asking me during office hours (see web page for my hours).

- Asking the mailing list.

- Asking another student, but ...

Your homeworks and problem sets must be your own.

0.10 The Upper Left Board

I use the upper left board for homework assignments and

announcements. I should never erase that board.

Viewed as a file it is group readable (the group is those in the

room), appendable by just me, and (re-)writable by no one.

If you see me start to erase an announcement, let me know.

0.11 A Grade of ``Incomplete''

It is university policy that a student's request for an incomplete

be granted only in exceptional circumstances and only if applied for

in advance. Naturally, the application must be before the final exam.

Part I: Fundamental Tools

Chapter 1 Algorithm Analysis

We are interested in designing good

algorithms (a step-by-step procedure for performing

some task in a finite amount of time) and good

data structures (a systematic way of organizing and

accessing data).

Unlike v22.102, however, we wish to determine rigorously just

how good our algorithms and data structures really

are and whether significantly better algorithms are

possible.

1.1 Methodologies for Analyzing Algorithms

We will be primarily concerned with the speed (time

complexity) of algorithms.

- Sometimes the space complexity is studied.

- The time depends on the input, often on just the amount

input. For example, the time required to sum N numbers depends on

N but not on the numbers themselves (we assume all values fit in

one ``word''.

- One could run experiments in order to determine the space complexity.

- Must choose sufficiently many, representative inputs.

- Must use identical hardware to compare algorithms.

- Must implement the algorithm.

We will emphasize instead an analytic framework that is

independent of input and hardware, and does not require an

implementation. The disadvantage is that we can only estimate the

time required.

- Often we ignore multiplicative constants and small input values.

- So we consider f(x)=x3-20x2

equivalent to g(x)=10x3+10x2

- Huh??

- Easy to see that for say x > 100, f(x) < 10 g(x) and

g(x) < 10 f(x).

Homework: R-1.1 and R-1.2

(Unless otherwise stated, homework

problems are from the last section in the current book chapter.)

1.1.1 Pseudo-Code

Designed for human understanding. Suppress unimportant details and

describe some parts in natural language (English in this course).

1.1.2 The Random Access Machine (RAM) Model

The key difference between the RAM model and a real computer is the

assumption of a very simple memory model: Accessing any memory element

takes a constant amount of time. This ignores caching and paging for

example. (It also assumes

the word-size of a computer is large enough to hold any address, which

is generally valid for modern-day computers, but was not always the case.)

The time required is simply a count of the primitive

operations executed. There are several different possible

sets of primitive operations. For this course we will use

- Assigning a value to a variable (independent of the size of the

value; but the variable must be a scalar).

- Method invocation, i.e., calling a function or subroutine.

- Performing a (simple) arithmetic operation (divide is OK,

logarithm is not).

- Indexing into an array (for now just one dimensional; scalar

access is free).

- Following an object reference.

- Returning from a method.

1.1.3 Counting Primitive Operations

Let's start with a simple algorithm (the book does a different

simple algorithm, maximum).

Algorithm innerProduct

Input: Non-negative integer n and two integer arrays A and B of size n.

Output: The inner product of the two arrays.

prod ← 0

for i ← 0 to n-1 do

prod ← prod + A[i]*B[i]

return prod

- Line 1 is one op (assigning a value).

- Loop initializing is one op (assigning a value).

- Line 3 is five ops per iteration (mult, add, 2 array refs, assign).

- Line 3 is executed n times; total is 5n.

- Loop incrementation is two ops (an addition and an assignment)

- Loop incrementation is done n times; total is 2n.

- Loop termination test is one op (a comparison i<n).

- Loop termination is done n+1 times (n successes, one failure);

total is n+1.

- Return is one op.

The total is thus 1+1+5n+2n+(n+1)+1 = 8n+4.

Homework: Perform a similar analysis for

the following algorithm

Algorithm tripleProduct

Input: Non-negative integer n and three integer arrays A. B, and C

each of size n.

Output: The A[0]*B[0]*C[0] + ... + A[n-1]*B[n-1]*C[n-1]

prod ← 0

for i ← 0 to n-1 do

prod ← prod + A[i]*B[i]*C[i]

return prod

End of homework

Let's speed up innerProduct (a very little bit).

Algorithm innerProductBetter

Input: Non-negative integer n and two integer arrays A and B of size n.

Output: The inner product of the two arrays

prod ← A[0]*B[0]

for i ← 1 to n-1 do

prod ← prod + A[i]*B[i]

return prod

The cost is 4+1+5(n-1)+2(n-1)+n+1 = 8n-1

THIS ALGORITHM IS WRONG!!

If n=0, we access A[0] and B[0], which do not exist. The original

version returns zero as the inner product of empty arrays, which is

arguably correct. The best fix is perhaps to change Non-negative

to Positive

in the Input specification.

Let's call this algorithm innerProductBetterFixed.

What about if statements?

Algorithm countPositives

Input: Non-negative integer n and an integer array A of size n.

Output: The number of positive elements in A

pos ← 0

for i ← 0 to n-1 do

if A[i] > 0 then

pos ← pos + 1

return pos

- Line 1 is one op.

- Loop initialization is one op

- Loop termination test is n+1 ops

- The if test is performed n times; each is 2 ops

- Return is one op

- The update of pos is 2 ops but is done ??? times.

- What do we do?

Let U be the number of updates done.

- The total number of steps is 1+1+(n+1)+2n+1+2U = 4+3n+2U.

- The best case (i.e., lowest complexity) occurs

when U=0 (i.e., no numbers are positive) and gives a

complexity of 4+3n.

- The worst case occurs when U=n (i.e., all

numbers are positive) and gives a complexity of 4+5n.

- To determine the average case result is much

harder as it requires knowing the input distribution (i.e., are

positive numbers likely) and requires probability theory.

-

We will primarily study worst case complexity.

1.1.4 Analyzing Recursive Algorithms

Consider a recursive version of innerProduct. If the arrays are of

size 1, the answer is clearly A[0]B[0]. If n>1, we recursively get

the inner product of the first n-1 terms and then add in the last term.

Algorithm innerProductRecursive

Input: Positive integer n and two integer arrays A and B of size n.

Output: The inner product of the two arrays

if n=1 then

return A[0]B[0]

return innerProductRecursive(n-1,A,B) + A[n-1]B[n-1]

How many steps does the algorithm require? Let T(n) be

the number of steps required.

- If n=1 we do a comparison, two (array) fetches, a product, and a return.

- So T(1)=5.

- If n>1, we do a comparison, a subtraction, a method call, the

recursive computation, two fetches, a product, a sum and a return.

- So T(n) = 1 + 1 + 1 + T(n-1) + 2 + 1 + 1 + 1 = T(n-1)+8.

- This is called a recurrence equation. In general

these are quite difficult to solve in closed

form, i.e. without T on the right hand side.

- For this simple recurrence, one can see that T(n)=8n-3 is the

solution.

- We will learn more about recurrences later.

Problem Set #1, Problem 1.

The problem set will be officially assigned a little later, but the first

problem in the set is R-1.27.

================ Start Lecture #2 ================

1.2 Asymptotic Notation

One could easily complain about the specific primitive operations

we chose and about the amount we charge for each one. For example,

perhaps we should charge one unit for accessing a scalar variable.

Perhaps we should charge more for division than for addition. Some

computers can multiply two numbers and add it to a third in one

operation. What about the cost of loading the program?

Now we are going to be less precise and worry only about approximate

answers for large inputs.

Thus the rather arbitrary decisions made about how many units to

charge for each primitive operation will not matter since our

sloppiness will cover.

Please note that the sloppiness will be very

precise.

1.2.1 The Big-Oh

Notation

Definition: Let f(n) and g(n) be real-valued

functions of a single non-negative integer argument.

We write f(n) is O(g(n)) if there is a positive real

number c and a positive integer n0

such that f(n)≤cg(n) for all n≥n0.

What does this mean?

For large inputs (n≥n0), f is not much

bigger than g (specifically, f(n)≤cg(n)).

Examples to do on the board

- 3n-6 is O(n). Some less common ways of

saying the same thing follow.

- 3x-6 is O(x).

- If f(y)=3y-6 and id(y)=y,

then f(y) is O(id(y)).

- 3n-6 is O(2n)

- 9n4+12n2+1234 is

O(n4).

- innerProduct is O(n)

- innerProductBetter is O(n)

- innerProductFixed is O(n)

- countPositives is O(n)

- n+log(n) is O(n).

- log(n)+5log(log(n)) is O(log(n)).

- 1234554321 is O(1).

- 3/n is O(1). True but not the best.

- 3/n is O(1/n). Much better.

- innerProduct is

O(3n4+100n+log(n)+34.5).

True, but awful.

A few theorems give us rules that make calculating big-Oh easier.

Theorem (arithmetic): Let d(n),

e(n), f(n), and g(n) be nonnegative

real-valued functions of a nonnegative integer argument and assume

d(n) is O(f(n)) and e(n) is

O(g(n)). Then

- ad(n) is O(f(n)) for any nonnegative a

- d(n)+e(n) is O(f(n)+g(n))

- d(n)e(n) is O(f(n)g(n))

Theorem (transitivity): Let d(n),

f(n), and g(n) be nonnegative real-valued functions

of a nonnegative integer argument and assume d(n) is

O(f(n)) and f(n) is O(g(n)). Then

d(n) is O(g(n)).

Theorem (special functions): (Only n varies)

- If f(n) is a polynomial of degree d, then

f(n) is O(nd).

- nk is O(an) for any

k>0 and a>1.

- log(nk) is O(log(n)) for any k>0

- (log(n))k is O(nj) for any

k>0 and j>0.

Example: (log n)1000 is

O(n0.001).

This says raising log n to the 1000 is not (significantly) bigger than

the thousandth root of n. Indeed raising log to the 1000 is actually

significantly smaller than taking the thousandth root

since n0.001) is not

O((log n)1000).

So log is a VERY small (i.e., slow growing) function.

Homework: R-1.19 R-1.20

Example: Let's do problem R-1.10. Consider the

following simple loop that computes the sum of the first n positive

integers and calculate the running time using the big-Oh notation.

Algorithm Loop1(n)

s ← 0

for i←1 to n do

s ← s+i

With big-Oh we don't have to worry about multiplicative or additive

constants so we see right away that the running time is just the

number of iterates of the loop so the answer is O(n)

Homework: R-1.11 and R-1.12

Definitions: (Common names)

-

If a function is O(log(n)), we call it

logarithmic.

-

If a function is O(n),

we call it linear.

- If a function is O(n2),

we call it quadratic.

- If a function is O(nk) with k≥1,

we call it polynomial.

- If a function is O(an) with a>1,

we call it exponential.

Remark: The last definitions would be better with a

relative of big-Oh, namely big-Theta, since, for example 3log(n) is

O(n2), but we do not call

3log(n) quadratic.

Homework: R-1.10 and R-1.12.

Example: R-1.13. What is running time of the

following loop using big-Oh notation?

Algorithm Loop4(n)

s ← 0

for i←1 to 2n do

for j←1 to i do

s ← s+1

Clearly the time is determined by the number of executions of the last

statement. But this looks hard since the inner loop is executed a

different number of times for each iteration of the outer loop. But

it is not so bad. For iteration i of the outer loop, the inner loop

has i iterations. So the total number of iterations

of the last statement is 1+2+...+2n, which is 2n(2n+1)/2. So the

answer is O(n2).

Homework: R-1.14 (This was assigned during the

third lecture in Fall 03, but would have made more sense here).

================ Start Lecture #3 ================

1.2.2 Relatives

of the Big-Oh

Big-Omega and Big-Theta

Recall that f(n) is O(g(n)) if, for large n, f is not much bigger

than g. That is g is some sort of upper bound on f.

How about a definition for the case when g is (in the same sense) a

lower bound for f?

Definition: Let f(n) and g(n) be real valued

functions of an integer value. Then f(n) is

Ω(g(n)) if g(n) is O(f(n)).

Remarks:

- We pronounce f(n) is Ω(g(n)) as "f(n) is big-Omega of g(n)".

- What the last definition says is that we say f(n) is not much smaller

than g(n) if g(n) is not much bigger than f(n), which sounds

reasonable to me.

- What if f(n) and g(n) are about equal, i.e., neither is much

bigger than the other?

Definition: We write

f(n) is Θ(g(n)) if both

f(n) is O(g(n)) and f(n) is Ω(g(n)).

Remarks

We pronounce f(n) is Θ(g(n)) as "f(n) is big-Theta of g(n)"

Examples to do on the board.

- 2x2+3x is Θ(x2).

- 2x3+3x is not θ(x2).

- 2x3+3x is Ω(x2).

- innerProductRecursive is Θ(n).

- binarySearch is Θ(log(n)). Unofficial for now.

- If f(n) is Θ(g(n)), the f(n) is &Omega(g(n)).

- If f(n) is Θ(g(n)), then f(n) is O(g(n)).

Homework: R-1.6

Little-Oh and Little-Omega

Recall that big-Oh captures the idea that for large n, f(n) is not

much bigger than g(n). Now we want to capture the idea that, for

large n, f(n) is tiny compared to g(n).

If you remember limits from calculus, what we want is that

f(n)/g(n)→0 as n→∞.

However, the definition we give does not use the word limit (it

essentially has the definition of a limit built in).

Definition: Let f(n) and g(n) be real valued

functions of an integer variable. We say

f(n) is o(g(n))

if for any c>0,

there is an n0 such that

f(n)≤cg(n) for all n>n0.

This is pronounced as "f(n) is little-oh of g(n)".

Definition: Let f(n) and g(n) be real valued

functions of an integer variable. We say

f(n) is ω(g(n) if

g(n) is o(f(n)). This is pronounced as "f(n) is little-omega of g(n)".

Examples: log(n) is o(n) and x2 is

ω(nlog(n)).

Homework: R-1.4. R-1.22

What is "fast" or "efficient"?

If the asymptotic time complexity is bad, say Ω(n8), or

horrendous, say Ω(2n), then for large n, the algorithm will

definitely be slow. Indeed for exponential algorithms even modest n's

(say n=50) are hopeless.

Algorithms that are o(n) (i.e., faster than linear, a.k.a. sub-linear),

e.g. logarithmic algorithms, are very fast and quite rare.

Note that such algorithms do not even inspect most of the input data

once. Binary search has this property. When you look up a name in the

phone book you do not even glance at a majority of the names present.

Linear algorithms (i.e., Θ(n)) are also fast. Indeed, if the

time complexity is O(nlog(n)), we are normally quite happy.

Low degree polynomial (e.g., Θ(n2),

Θ(n3), Θ(n4)) are interesting. They

are certainly not fast but speeding up a computer system by a factor

of 1000 (feasible today with parallelism) means that a

Θ(n3) algorithm can solve a problem 10 times larger.

Many science/engineering problems are in this range.

1.2.3 The Importance of Asymptotics

It really is true that if algorithm A is o(algorithm B) then for

large problems A will take much less time than B.

Definition: If (the number of operations in)

algorithm A is o(algorithm B), we call A

asymptotically faster than B.

Example:: The following sequence of functions are

ordered by growth rate, i.e., each function is

little-oh of the subsequent function.

log(log(n)), log(n), (log(n))2, n1/3,

n1/2, n, nlog(n), n2/(log(n)), n2,

n3, 2n.

What about those constants that we have swept under the rug?

Modest multiplicative constants (as well as immodest additive

constants) don't cause too much trouble. But there are algorithms

(e.g. the AKS logarithmic sorting algorithm) in which the

multiplicative constants are astronomical and hence, despite its

wonderful asymptotic complexity, the algorithm is not used in

practice.

A Great Table

See table 1.10 on page 20.

Homework: R-1.7

1.3 A Quick Mathematical Review

This is hard to type in using html. The book is fine and I will

write the formulas on the board.

1.3.1 Summations

Definition:

The sigma notation: ∑f(i) with i going from a to b.

Theorem:

Assume 0<a≠1. Then ∑ai i from 0 to n =

(an+1-1)/(a-1).

Proof:

Cute trick. Multiply by a and subtract.

Theorem:

∑i from 1 to n = n(n+1)/2.

Proof:

Pair the 1 with the n, the 2 with the (n-1), etc. This gives a bunch

of (n+1)s. For n even it is clearly n/2 of them. For odd it is the

same (look at it).

Homework: R-1.14. (It would have been more

logical to assign this last time right after I did R-1.13. In the

future I will do so).

1.3.2 Logarithms and Exponents

Recall that logba = c means that bc=a.

b is called the base and c is called the

exponent.

What is meant by log(n) when we don't specify the base?

- Some people use base 10 by default.

- Mathematicians use base e.

- We will use base 2 (common in computer science)

I assume you know what ab is. (Actually this is not so

obvious. Whatever 2 raised to the square root of 3 means it is

not writing 2 down the square root of 3 times and

multiplying.) So you also know that

ax+y=axay.

Theorem:

Let a, b, and c be positive real numbers. To ease writing, I will use

base 2 often. This is not needed. Any base would do.

- log(ac) = log(a)+log(c)

- log(a/c) = log(a) - log(c)

- log(ac) = c log(a)

- logc(a) = (log(a))/log(c):

consider a = clogca and take log of both sides.

- clog(a) = a log(c): take log of both sides.

- (ba)c = bac

- babc = ba+c

- ba/bc = ba-c

Examples

- log(2nlog(n)) = 1 + log(n) + log(log(n)) is Θ(log(n))

- log(log(sqrt(n))) = log(.5log(n)) = log(.5)+log(log(n))

= -1 + log(log(n)) = Θ(log(log(n))

- log(2n) = nlog(2) = n = 2log(n)

Homework: C-1.12

Floor and Ceiling

⌊x⌋ is the greatest integer not greater than x.

⌈x⌉ is the least integer not less than x.

⌊5⌋ = ⌈5⌉ = 5

⌊5.2⌋ = 5 and ⌈5.2⌉ = 6

⌊-5.2⌋ = -6 and ⌈-5.2⌉ = -5

1.3.3 Simple Justification Techniques

By example

To prove the claim that there is a positive n

satisfying nn>n+n, we merely have to note that

33>3+3.

By counterexample

To refute the claim that all positive n

satisfy nn>n+n, we merely have to note that

11<1+1.

By contrapositive

"P implies Q" is the same as "not Q implies not P". So to show

that in the world of positive integers "a2≥b2

implies that a≥b" we can show instead that

"NOT(a≥b) implies NOT(a2≥b2)", i.e., that

"a<b implies a2<b2",

which is clear.

By contradiction

Assume what you want to prove is false and derive

a contradiction.

Theorem:

There are an infinite number of primes.

Proof:

Assume not. Let the primes be p1 up to pk and

consider the number

A=p1p2…pk+1.

A has remainder 1 when divided by any pi so cannot have any

pi as a factor. Factor A into primes. None can be

pi (A may or may not be prime). But we assumed that all

the primes were pi. Contradiction. Hence our assumption

that we could list all the primes was false.

By (complete) induction

The goal is to show the truth of some statement for all integers

n≥1. It is enough to show two things.

- The statement is true for n=1

- IF the statement is true for all k<n,

then it is true for n.

Theorem:

A complete binary tree of height h has 2h-1 nodes.

Proof:

We write NN(h) to mean the number of nodes in a complete binary tree

of height h.

A complete binary tree of height 1 is just a root so NN(1)=1 and

21-1 = 1.

Now we assume NN(k)=2k-1 nodes for all k<h

and consider a complete

binary tree of height h.

It is just two complete binary trees of height

h-1 with new root to connect them.

So NN(h) = 2NN(h-1)+1 = 2(2h-1-1)+1 = 2h-1,

as desired

Homework: R-1.9

================ Start Lecture #4 ================

Loop Invariants

Very similar to induction. Assume we have a loop with controlling

variable i. For example a "for i←0 to n-1". We then

associate with the loop a statement S(j) depending on j such that

- S(0) is true (just) before the loop begins

- IF S(j-1) holds before iteration j begins,

then S(j) will hold when iteration j ends.

By induction we see that S(n) will be true when the nth iteration

ends, i.e., when the loop ends.

I favor having array and loop indexes

starting at zero. However, here it causes us some grief. We must

remember that iteration j occurs when i=j-1.

Example::

Recall the countPositives algorithm

Algorithm countPositives

Input: Non-negative integer n and an integer array A of size n.

Output: The number of positive elements in A

pos ← 0

for i ← 0 to n-1 do

if A[i] > 0 then

pos ← pos + 1

return pos

Let S(j) be "pos equals the number of positive values in the first

j elements of A".

Just before the loop starts S(0) is true

vacuously. Indeed that is the purpose of the first

statement in the algorithm.

Assume S(j-1) is true before iteration j, then iteration j (i.e.,

i=j-1) checks A[j-1] which is the jth element and updates pos

accordingly. Hence S(j) is true after iteration j finishes.

Hence we conclude that S(n) is true when iteration n concludes,

i.e. when the loop terminates. Thus pos is the correct value to return.

1.3.4 Basic Probability

Skipped for now.

1.4 Case Studies in Algorithm Analysis

1.4.1 A Quadratic-Time Prefix Averages Algorithm

We trivially improved innerProduct (same asymptotic complexity

before and after). Now we will see a real improvement.

For simplicity I do a slightly simpler algorithm, prefix sums.

Algorithm partialSumsSlow

Input: Positive integer n and a real array A of size n

Output: A real array B of size n with B[i]=A[0]+…+A[i]

for i ← 0 to n-1 do

s ← 0

for j ← 0 to i do

s ← s + A[j]

B[i] ← s

return B

The update of s is performed 1+2+…+n times. Hence the

running time is Ω(1+2+…+n)=&Omega(n2).

In fact it is easy to see that the time is &Theta(n2).

1.4.2 A Linear-Time Prefix Averages Algorithm

Algorithm partialSumsFast

Input: Positive integer n and a real array A of size n

Output: A real array B of size n with B[i]=A[0]+…+A[i]

s ← 0

for i ← 0 to n-1 do

s ← s + A[i]

B[i] ← s

return B

We just have a single loop and each statement inside is O(1), so

the algorithm is O(n) (in fact Θ(n)).

Homework: Write partialSumsFastNoTemps, which is also

Θ(n) time but avoids the use of s (it still uses i so my name is not

great).

1.5 Amortization

Often we have a data structure supporting a number of different

operations that will each be applied many times. Sometimes the worst

case time complexity (i.e., the longest amount of time) of a sequence

of n operations is significantly less than n times the worst case

complexity of one operations. We give an example very soon.

If we divide the running time of the sequence by the number of

operations performed we get the average time for each operation in the

sequence, which is called the amortized running

time.

Why amortized?

Because the cost of the occasional expensive application is

amortized over the numerous cheap application (I think).

Example:: (From the book.) The clearable table.

This is essentially an array. The table is initially empty (i.e., has

size zero). We want to support three operations.

- Add(e): Add a new entry to the table at the end (extending its size).

- Get(i): Return the ith entry in the table.

- Clear(): Remove all the entries by setting each entry to zero (for

security) and setting the size to zero.

The obvious implementation is to use a large array A and an integer

s indicating the current size of A. More precisely A is (always)

of size N (large) and s indicates the extent of A that is currently in

use.

We are ignoring a number of error cases. For example, it is an

error to issue Get(5) if only two entries have been put into the

clearable table.

We start with a size zero table and assume we perform n (legal)

operations. Question: What is the worst-case running time for all n

operations? Once we know the answer, the amortized time is this

answer divided by n.

One possibility is that the sequence consists of n-1 add(e)

operations followed by one Clear(). The Clear() takes Θ(n),

which is the worst-case time for any operation (assuming n operations

in total). Since there are n operations and the worst-case is

Θ(n) for one of them, we might think that the worst-case

sequence would take Θ(n2).

But this is wrong.

It is easy to see that Add(e) and Get(i) are Θ(1).

The total time for all the Clear() operations in

any sequence of n operation is

O(n) since in total O(n) entries were cleared (since at most n entries

were added).

Hence, the amortized time for each operation in the clearable ADT

(abstract data type) is O(1), in fact Θ(1).

Why?

-

All the clears have complexity O(n) in total (shown above).

-

All the Adds have complexity O(n) in total: There are O(n) Adds in

the sequence and each one has Θ(1) complexity.

-

Similarly all the Gets have complexity O(n) in total.

-

Hence the total complexity is O(n)+O(n)+O(n)=O(n).

-

But there are a total of N operations each with complexity

Ω(1), so the total has complexity Ω(N).

-

So we have show the total complexity of any sequence of N

operations is O(N) and is Ω(N). Hence the total complexity

of the sequence is Θ(N) and the amortized

complexity is Θ(N)/N, which is &Theta(1);.

Note that we first found an upper bound on the complexity (i.e.,

big-Oh) and then a lower bound (Ω). Together this gave Θ.

1.5.1 Amortization Techniques

The Accounting Method

Overcharge for cheap operations and undercharge expensive so that

the excess charged for the cheap (the profit) covers the undercharge

(the loss). This is called in accounting an amortization schedule.

Assume the get(i) and add(e) really cost one ``cyber-dollar'',

i.e., there is a constant K so that they each take fewer than K

primitive operations and we let a ``cyber-dollar'' be K. Similarly,

assume that clear() costs P cyber-dollars when the table has P

elements in it.

We charge 2 cyber-dollars for every operation. So we have a profit

of 1 on each add(e) and we see that the profit is enough to cover next

clear() since if we clear P entries, we had P add(e)s.

All operations cost 2 cyber-dollars so n operations cost 2n. Since

we have just seen that the real cost is no more than the cyber-dollars

spent, the total cost is O(n) and the amortized cost is

O(1). Since every operation has cost Ω(1), the amortized cost

is Θ(1).

Potential Functions

Very similar to the accounting method. Instead of banking money,

you increase the potential energy. I don't believe we will use this

method so we are skipping it. If you like physics more than

accounting, you might prefer it.

1.5.2 Analyzing an Extendable Array Implementation

We want to let the size of an array grow dynamically (i.e., during

execution).

The implementation is quite simple. Copy the old array into a new one

twice the size. Specifically, on an array overflow instead of

signaling an error perform the following steps (assume the array is A

and the current size is N)

- Allocate a new array B of size 2N

- For i←0 to N-1 do B[i]←A[i]

- Make A refer to B (this is A=B in C and java).

- Deallocate the old A (automatic in java; error prone in C)

The cost of this growing operation is Θ(N).

Theorem:

Given an extendable array A that is initially empty and of size N, the

amortized time to perform n add(e) operations is Θ(1).

Proof:

Assume one cyber dollar is enough for an add w/o the grow and that N

cyber-dollars are enough to grow from N to 2N.

Charge 2 cyber dollars for each add; so a profit of 1 for each add w/o

growing.

When you must do a grow, you had N adds so have N dollars banked.

Hence the amortized cost is O(1). Since Omega;(1) is obvious, we get

Θ(1).

================ Start Lecture #5 ================

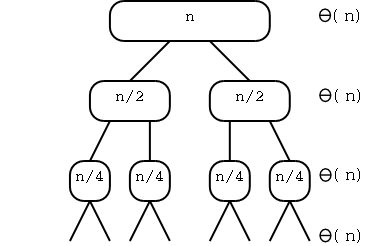

Alternate Proof that the amortized time is

O(1). Note that amortized time is O(1) means that the total time is O(N).

The new proof is a two step procedure

-

The total memory used is bounded by a constant times N.

-

The time per memory cell is bounded by a constant.

Hence the total time is bounded by a constant times the number of

memory cells and hence is bounded by a constant times N.

For step one we note that when the N add operations are complete

the size of the array will be FS<.2N, with FS a power of 2. Let

FS=2k So the total size used is

TS=1+2+4+8+...+FS=∑2i (i from 0 to k). We

already proved that this is

(2k+1-1)/(2-1)=2k+1-1=2FS-1<4N as desired.

An easier way to see that the sum is 2k+1-1 is to write

1+2+4+8+...2k in binary, in which case we get (for k=5)

1

10

100

1000

10000

+100000

------

111111 = 1000000-1 = 25+1-1

The second part is clear. For each cell the algorithm only does a

bounded number of operations. The cell is allocated, a value is

copied in to the cell, and a value is copied out of the cell (and into

another cell).

1.6 Experimentation

1.6.1 Experimental Setup

The book is quite clear. I have little to add.

Choosing the question

You might want to know

- Average running time.

- Compare two algorithms for speed.

- Determine the running time dependence of parameters of the

algorithm.

- For algorithms that generate approximations, test how close they

come to the correct value.

Deciding what to measure

- Memory references (increasingly important--unofficial hardware

comment).

- Comparisons (for sorting, searching, etc).

- Arithmetic ops (for numerical problems).

1.6.2 Data Analysis and Visualization

Ratio test

Assume you believe the running time t(n) of an algorithm is

Θ(nd) for some specific d and you want to both

verify your assumption and find the multiplicative constant.

Make a plot of (n, t(n)/nd). If you are right the

points should tend toward a horizontal line and the height of this

line is the multiplicative constant.

Homework: R-1.29

What if you believe it is polynomial but don't have a guess for d?

Ans: Use ...

The power test

Plot (n, t(n)) on log log paper. If t(n) is

Θ(nd), say t(n) approaches bnd, then

log(t(n)) approaches log(b)+d(log(n)).

So when you plot (log(n), log(t(n)) (i.e., when you use log log

paper), you will see the points approach (for large n) a straight line

whose slope is the exponent d and whose y intercept is the

multiplicative constant b.

Homework: R-1.30

Chapter 2 Basic Data Structures

2.1 Stacks and Queues

2.1.1 Stacks

Stacks implement a LIFO (last in first out)

policy. All the action occurs at the top of the

stack, primarily with the push(e) and

pop operation.

The stack ADT supports

- push(e): Insert e at TOS (top of stack).

- pop(): Remove and return TOS. Signal error if the stack empty.

- top(): Return TOS. Signal an error if empty.

- size(): Return the current numbers of elements in the stack.

- isEmpty(): Shortcut for the boolean expression size()=0.

There is a simple implementation using an array A and an integer s

(the current size). A[s-1] contains the TOS.

Objection (your honor).

The ADT says we can always push.

A simple array implementation would need to signal an error if the

stack is full.

Sustained! What do you propose instead?

An extendable array.

Good idea.

Homework: Assume a software system has 100 stacks

and 100,000 elements that can be on any stack. You do not know how

the elements are to be distributed on the stacks. However, once an

element is put on one stack, it never moves. If you used a normal

array based implementation for the stacks, how much memory will you

need. What if you use an extendable array based implementation?

Now answer the same question, but assume you have Θ(S) stacks and

Θ(E) elements.

Applications for procedure calls

Stacks work great for implementing procedure calls since procedures

have stack based semantics. That is, last called is first returned and

local variables allocated with a procedure are deallocated when the

procedure returns.

So have a stack of "activation records" in which you keep the

return address and the local variables.

Support for recursive procedures comes for free. For languages

with static memory allocations (e.g., fortran) one can store the local

variables with the method. Fortran forbids recursion so that memory

allocation can be static. Recursion adds considerably flexibility to

a language as some cost in efficiency (not part of this

course).

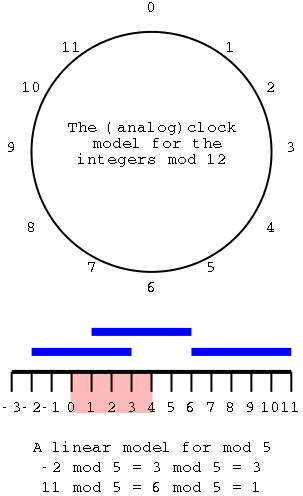

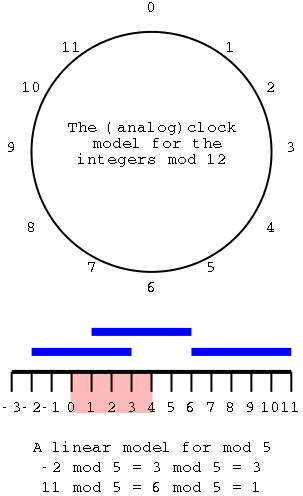

A Review of (the Real) Mod

I am reviewing modulo since I believe it is no longer taught in

high school.

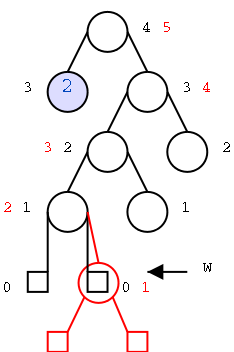

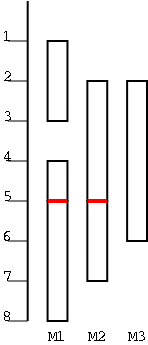

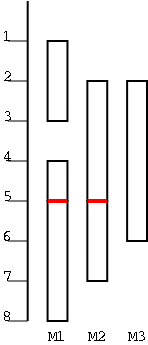

The top diagram shows an almost ordinary analog clock. The major

difference is that instead of 12 we have 0. The hands would be useful

if this was a video, but I omitted them for the static picture.

Positive numbers go clockwise (cw) and negative counter-clockwise

(ccw). The numbers shown are the values mod 12. This example is good

to show arithmetic. (2-5) mod 12 is obtained by starting at 2 and

moving 5 hours ccw, which gives 9. (-7) mod 12 is (0-7) mod 12 is

obtained by starting at 0 and going 7 hours ccw, which gives 5.

To get mod 8, divide the circle into 8 hours

instead of 12.

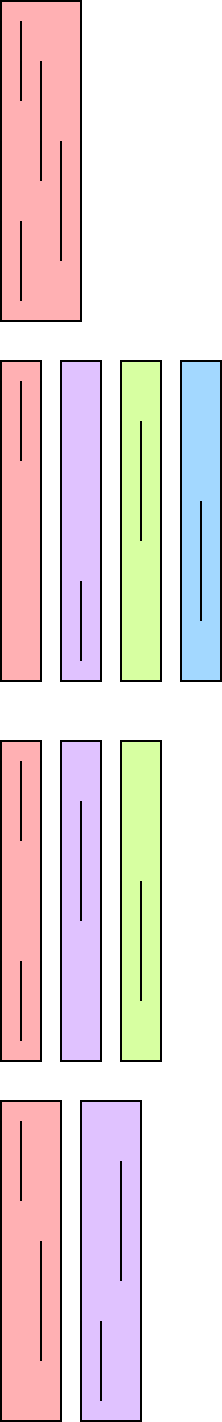

The bottom picture shows mod 5 in a linear fashion. In pink are

the 5 values one can get when doing mod 5, namely 0, 1, 2, 3, and 4.

I only illustrate numbers from -3 to 11 but that is just due to space

limitations. Each blue bar is 5 units long so the numbers at its

endpoints are equal mod 5 (since they differ by 5). So you just lay

off the blue bar until you wind up in the pink.

End of Mod Review

Homework: Using the real mod (let's call it

RealMod) evaluate

-

RealMod(8,3)

-

RealMod(-8,3)

-

RealMod(10000007,10)

-

RealMod(-10000007,10)

2.1.2 Queues

Queues implement a FIFO (first in first out) policy. Elements are

inserted at the rear and removed from the

front using the enqueue and

dequeue operations respectively.

The queue ADT supports

- enqueue(e): Insert e at the rear.

- dequeue(): Remove and return the front element. Signal an error if

the queue is empty.

- front(): Return the front element. Signal an error if empty.

- size(): Return the number of elements currently present.

- isEmpty(): Abbreviation for size()=0

Simple circular-array implementation

Personal rant:

I object to programming languages using

the well known and widely used function name mod and changing its

meaning. My math training prevents me from accepting that mod(-3,10)

is -3. The correct value is 7.

The book, following java, defines mod(x,y) as

x-⌊x/y⌋y.

This is not mod but remainder.

Think of x as the dividend, y as the divisor and then

⌊x/y⌋ is the quotient. We remember from elementary school

dividend = quotient * divisor + remainder

remainder = dividend - quotient * divisor

The last line is exactly the book's and java's definition of mod.

My favorite high level language, ada, gets it right in the

obvious way: Ada defines both mod and remainder

(ada extends the math definition of mod to the case where the second

argument is negative).

In the familiar case when x≥0 and y>0 mod and remainder are

equal. Unfortunately the book uses mod sometimes when x<0 and

consequently needs to occasionally add an extra y to get the true mod.

End of personal rant

Returning to relevant issues we note that for queues we need a

front and rear "pointers" f and r. Since we are using arrays f and r

are actually indexes not pointers. Calling the array Q, Q[f] is the

front element of the queue, i.e., the element that would be returned

by dequeue(). Similarly, Q[r] is the element into which enqueue(e)

would place e. There is one exception: if f=r, the queue is empty so

Q[f] is not the front element.

Without writing the code, we see that f will be increased by each

dequeue and r will be increased by every enqueue.

Assume Q has n slots Q[0]…Q[N-1] and the queue is initially

empty with f=r=0. Now consider enqueue(1); dequeue(); enqueue(2);

dequeue(); enqueue(3); dequeue(); …. There is never more than

one element in the queue, but f and r keep growing so after N

enqueue(e);dequeue() pairs, we cannot issue another operation.

The solution to this problem is to treat the array as circular,

i.e., right after Q[N-1] we find Q[0]. The way to implement this is

to arrange that when either f or r is N-1, adding 1 gives 0 not N.

Similarly for r. So the increment statements become

f←(f+1) mod N

r←(r+1) mod N

Note: Recall that we had some grief due to our

starting arrays and loops at 0. For example, the fifth slot of A is

A[4] and the fifth iteration of "for i←0 to 30" occurs when i=4.

The updates of f and r directly above show one of the advantages of

starting at 0; they are less pretty if the array starts at 1.

The size() of the queue seems to be r-f, but this is not always

correct since the array is circular.

For example let N=10 and consider an initially empty queue with f=r=0 that has

enqueue(10)enqueue(20);dequeue();enqueue(30);dequeue();enqueue(40);dequeue()

applied. The queue has one element, f=4, and r=3.

Now apply 6 more enqueue(e) operations

enqueue(50);enqueue(60);enqueue(70);enqueue(80);enqueue(90);enqueue(100)

At this point the array has 7 elements, f=0, and r=3.

Clearly the size() of the queue is not f-r=-3.

It is instead 7, the number of elements in the queue.

The problem is that f in some sense is 10 not 0 since there were 10

enqueue(e) operations. In fact if we kept 2 values for f and 2 for r,

namely the value before the mod and after, then size() would be

fBeforeMod-rBeforeMod. Instead we, use the following inelegant formula.

size() = (r-f+N) mod N

Remark: If java's definition of -3 mod 10 gave 7 (as it

should) instead of -3, we could use the more attractive formula

size() = (r-f) mod N.

Since isEmpty() is simply an abbreviation for the test size()=0, it

is just testing if r=f.

Algorithm front():

if isEmpty() then

signal an error // throw QueueEmptyException

return Q[f]

Algorithm dequeue():

if isEmpty() then

signal an error // throw QueueEmptyException

temp←Q[f]

Q[f]←NULL // for security or debugging

f←(f+1) mod N

return temp

Algorithm enqueue(e):

if size() = N-1 then

signal an error // throw QueueFullException

Q[r]←e

r←(r+1) mod N

================ Start Lecture #6 ================

Examples in OS

Round Robin processor scheduling is queue based as is fifo disk

arm scheduling.

More general processor or disk arm scheduling policies often use

priority queues (with various definitions of priority). We will learn

how to implement priority queues later this chapter (section 2.4).

Homework: (You may refer to your 202 notes if you

wish; mine are on-line based on my home page).

How can you interpret Round Robin processor scheduling and fifo disk

scheduling as priority queues. That is what is the priority?

Same question for SJF (shortest job first) and SSTF (shortest seek

time first). If you have not taken an OS course (202 or equivalent at

some other school), you are exempt from this question. Just write on

you homework paper that you have not taken an OS course.

Problem Set #1, Problem 2: C-2.2

2.2 Vectors, Lists, and Sequences

Unlike stacks and queues, the structures in this section support

operations in the middle, not just at one or both ends.

2.2.1 Vectors

The rank of an element in a sequence is the number

of elements before it. So if the sequence contains n elements,

0≤rank<n.

A vector storing n elements supports:

- elemAtRank(r): Return the element with rank r. Report an error

r<0 or r>n-1.

- replaceAtRank(r,e): Replace the element at rank r with e and

return it. Report an error r<0 or r>n-1.

- insertAtRank(r,e): Insert e at rank r moving up existing

elements with rank r and above. Report an error r<0 or

r>n. If r=n, then we are inserting at the end (i.e., after all

existing elements).

- removeAtRank(r): Remove the element at rank r moving down existing

elements with rank exceeding r. Report an error r<0 or

r>n-1.

- size().

- isEmpty().

A Simple Array-Based Implementation

Use an array A and store the element with rank r in A[r].

- Must shift elements when doing an insert or delete, which is

expensive.

- The code is below but does not include the error checks in the ADT

- We limit the number of elements to N, the declared size of the

array.

- How can we remove the above limitation?

Ans: Use an extendable array.

Algorithm insertAtRank(r,e)

for i = n-1, n-2, ..., r do

A[i+1]←A[i]

A[r]←e

n←n+1

Algorithm removeAtRank(r)

e←A[r]

for i = r, r+1, ..., n-2 do

A[i]←A[i+1]

n←n-1

return e

The worst-case time complexity of these two algorithms is

Θ(n); the remaining algorithms are all Θ(1).

Homework: When does the worst case occur for

insertAtRank(r,e) and removeAtRank(r)?

By using a circular array we can achieve Θ(1) time for

insertAtRank(0,e) and removeAtRank(0). Indeed, that is the third

problem of the first problem set.

Problem Set #1, Problem 3:

Part 1: C-2.5 from the book

Part 2: This implementation still has worst case complexity

Θ(n). When does the worst case occur?

2.2.2 Lists

So far we have been considering what Knuth refers to as sequential

allocation, when the next element is stored in the next location.

Now we will be considering linked allocation, where each element

refers explicitly to the next and/or preceding element(s).

Positions and Nodes

We think of each element as contained in a node,

which is a placeholder that also contains references to the preceding

and/or following node.

- In C: struct node { int element; struct node *next; struct node *prev;}

- In java: class Node { int element; Node next; Node prev;}

But in fact we don't want to expose Nodes to user's algorithms

since this would freeze the possible implementation.

Instead we define the idea (i.e., ADT) of a

position in a list.

The only method available to users is

- element(): Return the element stored in this position.

The List ADT

Given the position ADT, we can now define the methods for the list

ADT. The first methods only query a list; the last ones actually

modify it.

- -------------------------- read-only ------------------------

- first(): Return the position of the first element; error if L is

empty.

- last(): Return the position of the last element; error if empty.

- isFirst(p): Abbreviates p=first().

- isLast(p): Abbreviates p=last().

- before(p): Return the position preceding p; error if L is empty.

- after(p): Return the position following p; error if empty.

- size():

- isEmpty():

- -------------------------- updates ------------------------

- replaceElement(p,e): Store e at p, return replaced element.

- swapElements(p,q): Swap the elements at p and q.

- insertBefore(p,e): Insert e in the position before p. The book

says it is an error if p is the first position; that seems wrong,

especially considering the next method. I explain what they

likely meant below

- insertFirst(e): Abbreviates insertBefore(first(),e).

- insertAfter(p,e): Insert e in the position after p (analogous

error comment).

- insertLast(e): Abbreviates insertAfter(last(),e).

- remove(p): Remove the element at position p.

A Linked List Implementation

Now when we are implementing a list we can certainly use

the concept of nodes.

In a singly linked list each node contains a next

link that references the next node.

A doubly linked list contains, in addition prev link

that references the previous node.

Singly linked lists work well for stacks and queues, but do not

perform well for general lists. Hence we use doubly linked lists

Homework: What is the worst case time complexity

of insertBefore for a singly linked list implementation and when does

it occur?

================ Start Lecture #7 ================

Remarks:

-

Harper has offered to have an office hour thurs from 2-3. He is

also available by email appointment.

-

I think I wrote something wrong on the upper left board. I put

part 2 of problem 3 of problem set 1 as a homework. You can leave

it in the homework if you like, but it must be part of the problem

set (which is still not assigned, but coming soon).

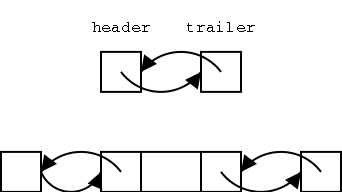

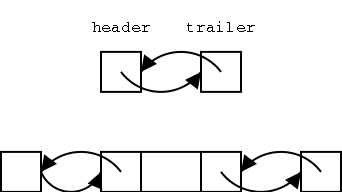

It is convenient to add two special nodes, a

header and trailer. The header has

just a next component, which links to the first node and the trailer

has just a prev component, which links to the last node. For an empty

list, the header and trailer link to each other and for a list of size

1, they both link to the only normal node.

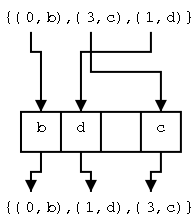

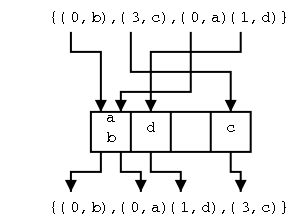

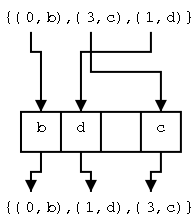

In order to proceed from the top (empty) list to the bottom list

(with one element), one would need to execute one of the insert

methods. Ignoring the abbreviations, this means either

insertBefore(p,e) or inserAfter(p,e). But this means that header

and/or trailer must be an example of a position, one for which there

is no element.

This observation explains the authors' comment above

that insertBefore(p,e) cannot be applied if p is the first position.

What they mean is that when we permit header and trailer to be

positions, then we cannot insertBefore the first position, since that

position is the header and the header has no prev. Similarly we

cannot insertAfter the final position since that position is the

trailer and the trailer has no next.

Clearly not the authors' finest hour.

A list object contains three components, the header, the trailer,

and the size of the list. Note that the book forgets to update the

size for inserts and deletes.

Implementation Comment I have not done the

implementation. It is probably easiest to have header and trailer

have the same three components as a normal node, but have the prev of

header and the next of trailer be some special value (say NULL) that

can be tested for.

The insertAfter(p,e) Algorithm

The position p can be header, but cannot be trailer.

Algorithm insertAfter(p,e):

If p is trailer then signal an error

size←size+1 // missing in book

Create a new node v

v.element←e

v.prev←p

v.next←p.next

(p.next).prev←v

p.next← v

return v

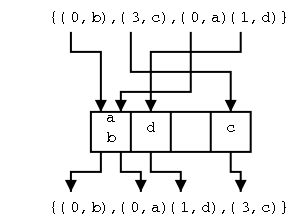

Do on the board the pointer updates for two cases: Adding a node

after an ordinary node and after header. Note that they are the

same. Indeed, that is what makes having the header and trailer so

convenient.

Homework: Write pseudo code for insertBefore(p,e).

Note that insertAfter(header,e) and insertBefore(trailer,e) appear

to be the only way to insert an element into an empty list. In

particular, insertFirst(e) fails for an empty list since it performs

insertBefore(first()) and first() generates an error for an empty list.

The remove(p) Algorithm

We cannot remove the header or trailer. Notice that removing the

only element of a one-element list correctly produces an empty list.

Algorithm remove(p)

if p is either header or trailer signal an error

size←size-1 // missing in book

t←p.element

(p.prev).next←p.next

(p.next).prev←p.prev

p.prev←NULL // for security or debugging

p.next←NULL

return t

2.2.3 Sequences

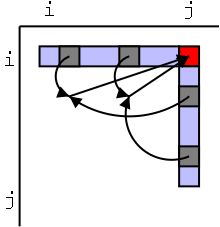

| Operation | Array | List

|

|---|

| size, isEmpty | O(1) | O(1)

|

| atRank, rankOf, elemAtRank | O(1) | O(n)

|

| first, last, before, after | O(1) | O(1)

|

| replaceElement, swapElements | O(1) | O(1)

|

| replaceAtRank | O(1) | O(n)

|

| insertAtRank, removeAtRank | O(n) | O(n)

|

| insertFirst, insertLast | O(1) | O(1)

|

| insertAfter, insertBefore | O(n) | O(1)

|

| remove | O(n) | O(1)

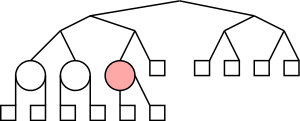

Asymptotic complexity of the methods for both the array and

list based implementations

|

Define a sequence ADT that includes all the methods of both vector

and list ADTs as well as

- atRank(r): Return the position of the element at rank r.

- rankOf(p): Return the rank of the element at position p.

Sequences can be implemented as either circular arrays, as we did

for vectors, or doubly linked lists, as we did for lists. Neither

clearly dominates the other. Instead it depends on the relative

frequency of the various operations. Circular arrays are faster for

some and doubly liked lists are faster for others as the table to the

right illustrates.

Iterators

An ADT for looping through a sequence one element at a time. It

has two methods.

- hasNext: Test whether there are elements left in the iterator

- nextObject: Return and remove the next object in the iterator

When you create the iterator it has all the elements of the

sequence. So a typical usage pattern would be

create iterator I for sequence S

while I.hasNext

process.nextObject

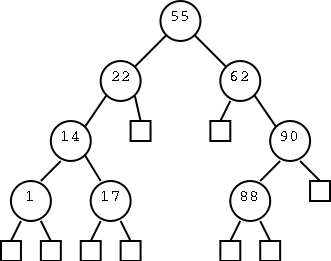

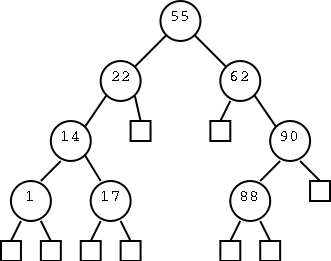

2.3 Trees

The tree ADT stores elements hierarchically.

There is a distinguished root node. All other nodes

have a parent of which they are a

child. We use nodes and positions interchangeably

for trees.

The definition above precludes an empty tree. This is a matter of

taste some authors permit empty trees, others do not.

Some more definitions.

- Nodes with the same parent are called siblings.

- A node without children is called external by the

authors. Also common is to call such nodes

leaves.

- A node with children is called internal.

- An ancestor of a node is either the node itself

or an ancestor of the parent of the node. This says that the

ancestors include the node, the node's parent, the parent's

parent, ... up to the root.

- A node v is a descendent of a node u if u is an

ancestor of v.

- The subtree rooted at v is the tree consisting of

all the descendents of v.

- A tree is ordered if there is a linear ordering

of the children of each node. That is, there is a first child, a

second child, etc.

- A binary tree is one in which all nodes have

at most two children.

- A proper binary tree is one

in which no node has exactly one child. This means that all

internal nodes have two children.

- In a binary tree we label each child as either a left

child or as a right child.

- The subtree routed at the left child of v is called the

left subtree of v.

- Similarly we define the right subtree of v.

- The depth of a node is the number of ancestors

not including the node itself.

- The height of a node is the length of a longest

path to a leaf.

- The height of a tree is the height of the root.

We order the children of a binary tree so that the left child comes

before the right child.

There are many examples of trees. You learned or will learn

tree-structured file systems in 202. However, despite what the book

says, for Unix/Linux at least, the file system does not form a

tree (due to hard and symbolic links).

These notes can be thought of as a tree with nodes corresponding to

the chapters, sections, subsections, etc.

Games like chess are analyzed in terms of trees. The root is the

current position. For each node its children are the positions

resulting from the possible moves. Chess playing programs often limit

the depth so that the number of examined moves is not too large.

An arithmetic expression tree

The leaves are constants or variables and the internal nodes are binary

arithmetic operations (+,-,*,/).

The tree is a proper ordered binary tree (since we are considering

binary operators).

The value of a leaf is the value of the constant or variable.

The value of an internal node is obtained by applying the operator to

the values of the children (in order).

Evaluate an arithmetic expression tree on the board.

Homework: R-2.2, but made easier by replacing 21

by 10. If you wish you can do the problem in the book instead (I

think it is harder).

2.3.1 The Tree Abstract Data Type

We have three accessor methods (i.e., methods that

permit us to access the nodes of the tree.

- root(): Return the root of the tree.

- parent(v): Return the parent of the node v. Error if v is

the root.

- children(v): Return an iterator of the children of v (in order if

the tree is ordered).

We have four query methods that test status.

- isInternal(v): Tests if v is internal.

- isLeaf(v): Tests if v is a leaf.

- isExternal(v): Same as isLeaf(v).

- isRoot(v): Tests if v is the root.

Finally generic methods that are useful but not

related to the tree structure.

- size(): Return the number of nodes

- elements(): Return an iterator of all the elements stored

in nodes (in no particular order).

- positions: Return an iterator of all the nodes of the tree.

- swapElements(v,w): Swap the elements in nodes v and w.

- replaceElement(v,e): Replace with e and return the element stored

at v.

2.3.2 Tree Traversal

Traversing a tree is a systematic method for

accessing or "visiting" each node. We will see and analyze three tree

traversal algorithms, inorder, preorder, and postorder. They differ in

when we visit an internal node relative to its children. In

preorder we visit the node first, in postorder we visit it last, and

in inorder, which is only defined for binary trees, we visit the node

between visiting the left and right children.

Recursion will be a very big deal in traversing

trees!!

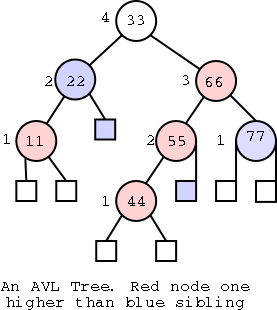

Motivating the Recursive Routines

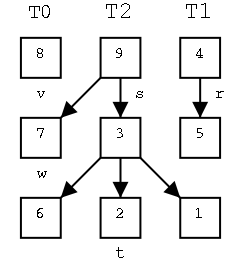

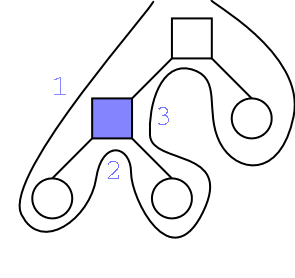

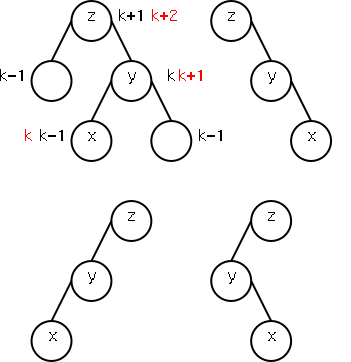

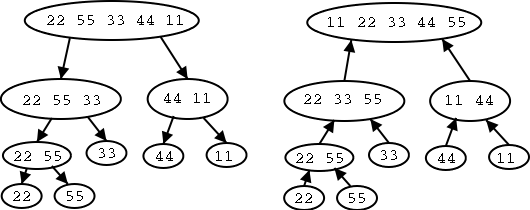

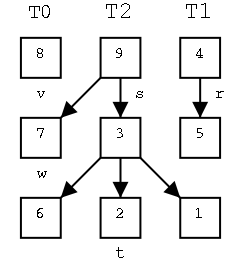

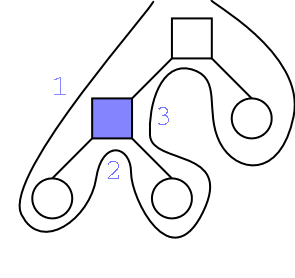

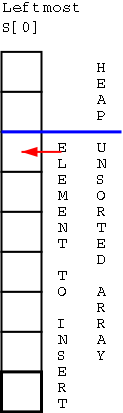

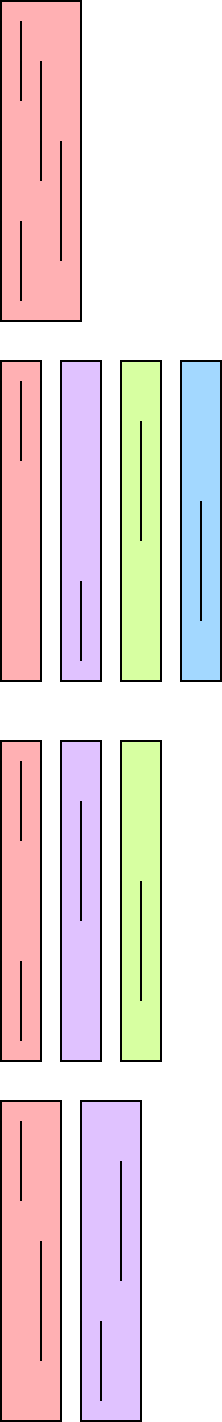

On the right are three trees. The left one just has a root, the

right has a root with one leaf as a child, and the middle one has

six nodes. For each node, the element in that node is shown inside

the box. All three roots are labeled and 2 other nodes are

also labeled. That is, we give a name to the position, e.g. the left

most root is position v. We write the name of the position under the

box.

We call the left tree T0 to remind us it has height zero. Similarly

the other two are labeled T2 and T1 respectively.

Our goal in this motivation is to calculate the sum the elements in

all the nodes of each tree. The answers are, from left to right, 8,

28, and 9.

For a start, lets write an algorithm called treeSum0

that calculates the sum for

trees of height zero. In fact the algorithm, will contain two

parameters, the tree and a node (position) in that tree, and our

algorithm will calculate the sum in the subtree rooted at the given

position assuming the position is at height 0. Note this is trivial:

since the node has height zero, it has no children and the sum desired

is simply the element in this node. So legal invocations would

include treeSum0(T0,s) and treeSum0(T2,t). Illegal invocations would

include treeSum0(T0,t) and treeSum0(T1,r).

Algorithm treeSum0(T,v)

Inputs: T a tree; v a height 0 node of T

Output: The sum of the elements of the subtree routed at v

Sum←v.element()

return Sum

Now lets write treeSum1(T,v), which calculates the sum for a node

at height 1. It will use treeSum0 to calculate the sum for each

child.

Algorithm treeSum1(T,v)

Inputs: T a tree; v a height 1 node of T

Output: the sum of the elements of the subtree routed at v

Sum←v.element()

for each child c of v

Sum←Sum+treeSum0(T,c)

return Sum

OK. How about height 2?

Algorithm treeSum2(T,v)

Inputs: T a tree; v a height 2 node of T

Output: the sum of the elements of the subtree routed at v

Sum←v.element()

for each child c of v

Sum←Sum+treeSum1(T,c)

return Sum

So all we have to do is to write treeSum3, treSum4, ... , where

treSum3 invokes treeSum2, treeSum4 invokes treeSum3, ... .

That would be, literally, an infinite amount of work.

Do a diff of treeSum1 and treeSum2.

What do you find are the differences.

In the Algorithm line and in the first comment a 1 becomes a 2.

In the subroutine call a 0 becomes a 1.

Why can't we write treeSumI and let I vary?

Because it is illegal to have a varying name for an algorithm.

The solution is to make the I a parameter and write

Algorithm treeSum(i,T,v)

Inputs: i≥0; T a tree; v a height i node of T

Output: the sum of the elements of the subtree routed at v

Sum←v.element()

for each child c of v

Sum←Sum+treeSum(i-1,T,c)

return Sum

This is wrong, why?

Because treeSum(0,T,v) invokes treeSum(-1,c,v), which doesn't

exist because i<0

But treeSum(0,T,v) doesn't have to call anything since v can't have

any children (the height of v is 0). So we get

Algorithm treeSum(i,T,v)

Inputs: i≥0; T a tree; v a height i node of T

Output: the sum of the elements of the subtree routed at v

Sum←v.element()

if i>0 then

for each child c of v

Sum←Sum+treeSum(i-1,T,c)

return Sum

The last two algorithms are recursive; they call themselves.

Note that when treeSum(3,T,v) calls treeSum(2,T,c), the new treeSum

has new variables Sum and c.

We are pretty happy with our treeSum routine, but ...

The algorithm is wrong! Why?

The children of a height i node need not all be of height i-1.

For example s is hight 2, but its left child w is height 0.

(A corresponding error also existed in treeSum2(T,v)

But the only real use we are making of i is to prevent us from

recursing when we are at a leaf (the i>0 test).

But we can use isInternal instead, giving our final algorithm

Algorithm treeSum(T,v)

Inputs: T a tree; v a node of T

Output: the sum of the elements of the subtree routed at v

Sum←v.element()

if T.isInternal(v) then

for each child c of v

Sum←Sum+treeSum(T,c)

return Sum

================ Start Lecture #8 ================

Our medium term goal is to learn about tree traversals (how to

"visit" each node of a tree once) and to analyze their complexity.

Complexity of Primitive Operations

Our complexity analysis will proceed in a somewhat unusual order.

Instead of starting with the bottom or lowest level routines (the tree

methods in 2.3.1, e.g., is Internal(v)) or the top level routines (the

traversals themselves),

we will begin by analyzing some middle level procedures assuming the

complexities of the low level are as we assert them to be. Then we

will analyze the traversals using the middle level routines and

finally we will give data structures for trees that achieve our

assumed complexity for the low level.

Let's begin!

Complexity Assumptions for the Tree ADT

These assumptions will be verified later.

- root(), parent(v), isInternal(v), isLeaf(v), isRoot(v),

swapElements(v,w), replaceElement(v,e) each take Θ(1) time.

- The methods returning iterators, namely children(v), elements(),

and positions(), each take time Θ(k), where k is the number of

items being iterated over. k=#children for the first method and

#nodes for the other two.

- For each iterator, the methods hasNext() and nextObject() take

Θ(1) time. nextObject() sometimes has other names like

nextPosition() or nextNode() or nextChild().

Middle level routines depth and height

Definitions of depth and

height.

- The depth of the root is 0.

- The height of a leaf is 0.

- The depth of a non-root v is 1 plus the depth of parent(v).

- The height of an internal node v is 1 plus the maximum

height of the children of v.

- The height of a tree is the height of its root.

Remark: Even our definitions are recursive!

From the recursive definition of depth, the recursive algorithm for

its computation essentially writes itself.

Algorithm depth(T,v)

if T.isRoot(v) then

return 0

else

return 1 + depth(T,T.parent(v))

The complexity is Θ(the answer), i.e. Θ(dv),

where dv is the depth of v in the tree T.

Problem Set #1, Problem 4:

Rewrite depth(T,v) without using recursion.

This is quite easy. I include it in the problem set to ensure

that you get practice understanding recursive definitions.

The problem set is now assigned. It is due in 3 lectures from now

(i.e., about 1.5 weeks).

The following algorithm computes the height of a position in a tree.

Algorithm height(T,v):

if T.isLeaf(v) then

return 0

else

h←0

for each w in T.children(v) do

h←max(h,height(T,w))

return h+1

Remarks on the above algorithm

-

The loop could (perhaps should) be written in pure iterator style.

Note that T.children(v) is an iterator.

-

This algorithm is not so easy to convert to non-recursive form

Why?

It is not tail-recursive, i.e. the recursive invocation is not

just at the end.

-

To get the height of the tree, execute height(T,T.root())

Algorithm height(T)

height(T,T.root())

Let's use the "official" iterator style.

Algorithm height(T,v):

if T.isLeaf then

return 0

else

h←0

childrenOfV←T.children(v) // "official" iterator style

while childrenOfV.hasNext()

h&lar;max(h,height(T,childrenOfV.nextObject())

return h+1

But the children iterator is defined to return the empty set for a

leaf so we don't need the special case

Algorithm height(T,v):

h←0

childrenOfV←T.children(v) // "official" iterator style

while childrenOfV.hasNext()

h&lar;max(h,height(T,childrenOfV.nextObject())

return h+1

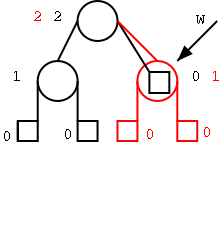

Theorem: Let T be a tree with n nodes and let

cv be the number of children of node v. The sum of

cv over all nodes of the tree is n-1.

Proof:

This is trivial! ... once you figure out what it is saying.

The sum gives the total number of children in a tree. But this almost

all nodes. Indeed, there is just one exception.

What is the exception?

The root.

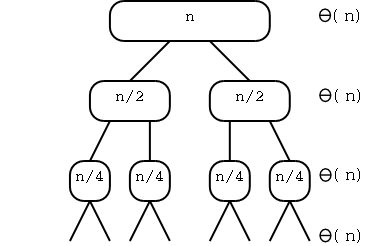

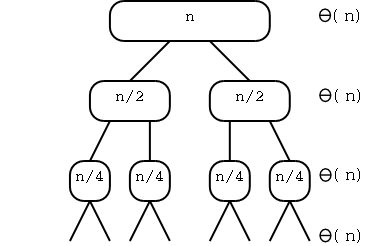

Corollary: Computing the height of an n-node tree

has time complexity Θ(n).

Proof:

Look at the code of the first version.

-

Since each node calls height on each of its children, height is

called recursively for every node that is a child, i.e. all but

the root. The root is called directly from the top level.

-

Hence height(T,v) is called once for each node v.

-

"Most" of the code in height is clearly Θ(1) per call, or

Θ(n) in total. The exception is the loop.

-

Each iteration of the loop is Θ(1).

-

The number of iterations in the invocation height(T,v) is the

number of children in v.

-

Hence the total number of iterations is the total number of

children, which by the theorem is n-1.

-

Hence the total cost of all the iterations is Θ(n).

-

Hence the total cost of height(T) is the cost of "most" of the

code plus the cost of the loops, which is Θ(N)+Θ(N),

i.e. Θ(N).

To be more formal, we should look at the "official" iterator

version. The only real difference is that in the official version, we

are charged for creating the iterator. But the charge is the number

of elements in the iterator, i.e., the number of children this node

has. So the sum of all the charges for creating iterators will be the

sum of the number of children each node has, which is the total number

of children, which is n-1, which is (another) $Theta;(n) and hence

doesn't change the final answer.

Do a few on the board. As mentioned above, becoming facile with

recursion is vital for tree analyses.

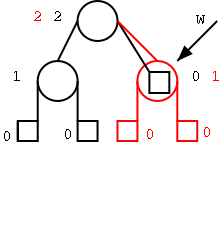

Definition: A traversal is a

systematic way of "visiting" every node in a tree.

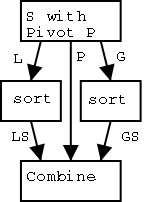

Preorder Traversal

Visit the root and then recursively traverse each child. More

formally we first give the procedure for a preorder traversal starting

at any node and then define a preorder traversal of the entire tree as

a preorder traversal of the root.

Algorithm preorder(T,v):

visit node v

for each child c of v

preorder(T,c)

Algorithm preorder(T):

preorder(T,T.root())

Remarks:

- In a preorder traversal, parents come

before children (which is as it should be :-)).

- If you describe a book as an ordered tree, with nodes for each

chapter, section, etc., then the pre-order traversal visits the

nodes in the order they would appear in a table of contents.

Do a few on the board. As mentioned above, becoming facile with

recursion is vital for tree analyses.

Theorem: Preorder traversal of a tree with n nodes

has complexity Θ(n).

Proof:

Just like height.

The nonrecursive part of each invocation takes O(1+cv)

There are n invocations and the sum of the c's is n-1.

Homework: R-2.3

================ Start Lecture #9 ================

Postorder Traversal

First recursively traverse each child then visit the root.

More formerly

Algorithm postorder(T,v):

for each child c of v

postorder(T,c)

visit node v

Algorithm postorder(T):

postorder(T,T.root())

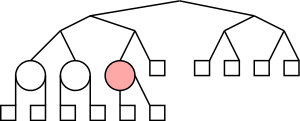

Theorem: Preorder traversal of a tree with n nodes

has complexity Θ(n).

Proof:

The same as for preorder.

Remarks:

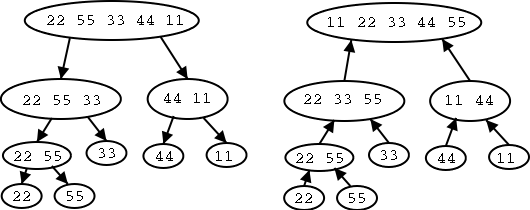

- Postorder is how you evaluate an arithmetic expression tree.

- Evaluate some arithmetic expression trees.

- When you write out the nodes in the order visited your get what is

called either "reverse polish notation" or "polish notation"; I

don't remember which.

- If you do preorder traversal you get the other one.

Problem Set 2, Problem 1.

Note that the height of a tree is the depth of a deepest node.

Extend the height algorithm so that it returns in addition to the

height the v.element() for some v that is of maximal depth. Note that

the height algorithm is for an arbitrary (not necessarily binary)

tree; your extension should also work for arbitrary trees (this is

*not* harder).

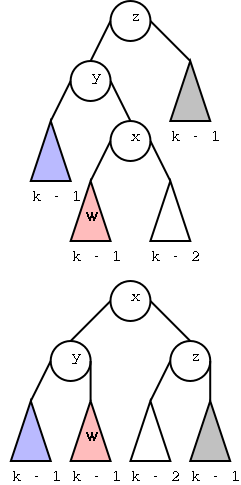

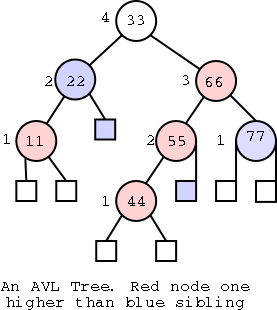

2.3.3 Binary Trees

Recall that a binary tree is an ordered tree in which no node has

more than two children. The left child is ordered before the right

child.

The book adopts the convention that, unless otherwise mentioned,

the term "binary tree" will mean "proper binary tree", i.e., all

internal nodes have two children.

This is a little convenient, but not a big deal.

If you instead permitted non-proper binary trees, you would test if a

left child existed before traversing it (similarly for right child.)

Will do binary preorder (first visit the node, then the left

subtree, then the right subtree, binary postorder (left subtree, right

subtree, node) and then inorder (left subtree, node, right subtree).

The Binary Tree ADT

We have three (accessor) methods in addition to the general tree

methods.

- leftChild(v): Return the (position of the) left child; signal an

error if v is a leaf.

- rightChild(v): Similar

- sibling(v): Return the (unique) sibling of v; signal an error if v

is the root (and hence has no sibling).

Remark: I will not hold you

responsible for the

proofs of the theorems.

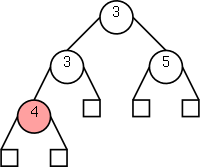

Theorem: Let T be a binary tree having height h

and n nodes. Then

- The number of leaves in T is at least h+1 and at most 2h.

- The number of internal nodes in T is at least h and at most

2h-1.

- The number of nodes in T is at least 2h+1 and at most

2h+1-1.

- log(n+1)-1≤h≤(n-1)/2

Proof:

- Induction on n the number of nodes in the tree.

Base case n=1: Clearly true for all trees having only one

node.

Induction hypothesis: Assume true for all trees having at most

k nodes.

Main inductive step: prove the assertion for all trees having k+1

nodes. Let T be a tree with k nodes and let h be the height of T.

Remove the root of T. The two subtrees produced each have no

more than k nodes so satisfy the assertion. Since each has height

at most h-1, each has at most 2h-1 leaves. At least

one of the subtrees has height exactly h-1 and hence has at least

h leaves. Put the original tree back together.

One subtree

has at least h leaves, the other has at least 1, so the original

tree has at least h+1. Each subtree has at most 2h-1

leaves and the original root is not a leaf, so the original has at

most 2h leaves.

- Same idea. Induction on the number of nodes. Clearly true if T

has one node. Remove the root. At least one of the subtrees is

height h-1 and hence has at least h-1 internal nodes. Each of the

subtrees has height at most h-1 so has at most 2h-1-1

internal nodes. Put the original tree back together. One subtree

has at least h-1 internal nodes, the other has at least 1, so the

original tree has at least h. Each subtree has at most

2h-1-1 internal nodes and the original root is an

internal node, so the original has at most 2(2h-1)+1

= 2h-1-1 internal nodes.

- Add parts 1 and 2.

- Apply algebra (including a log) to part 3.

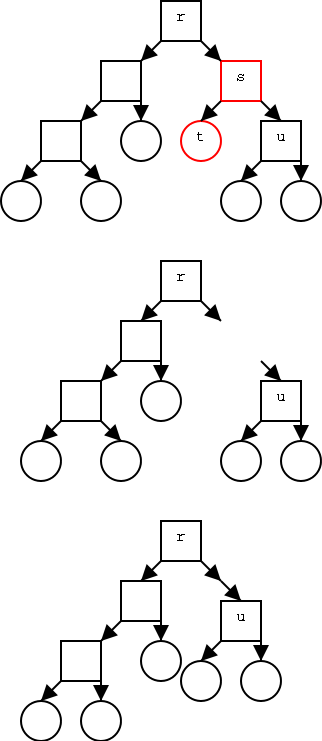

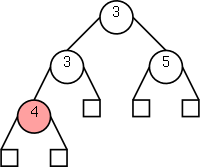

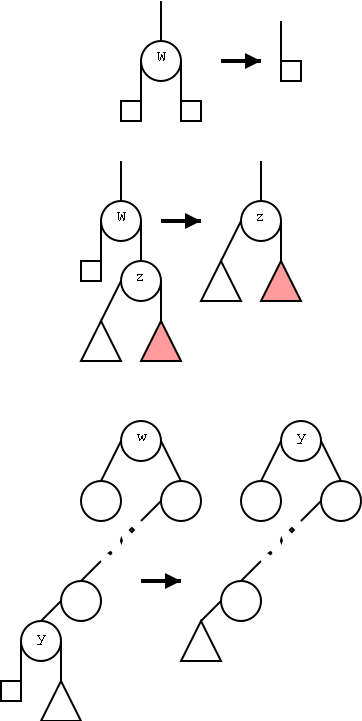

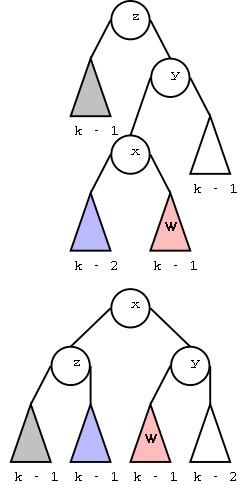

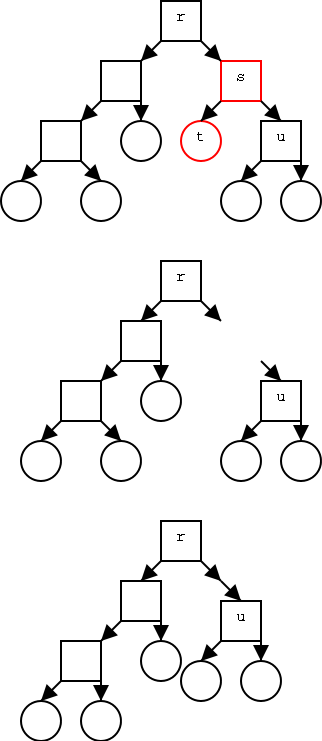

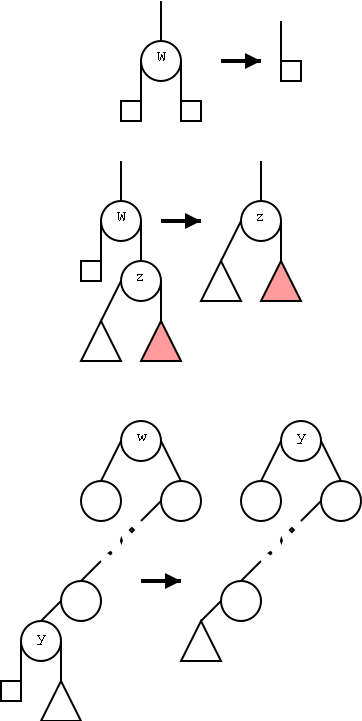

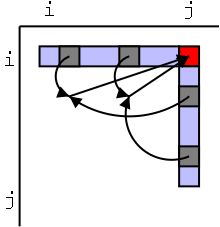

Theorem:In a binary tree T, the number of leaves

is 1 more than the number of internal nodes.

Proof:

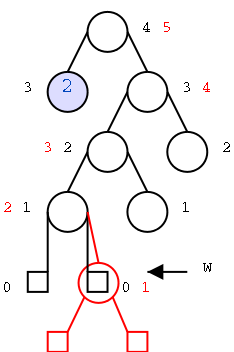

Again induction on the number of nodes. Clearly true for one node.

Assume true for trees with up to n nodes and let T be a tree with n+1

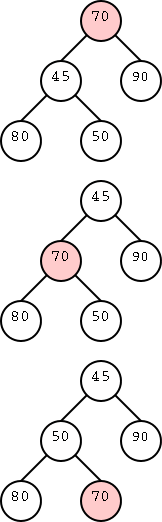

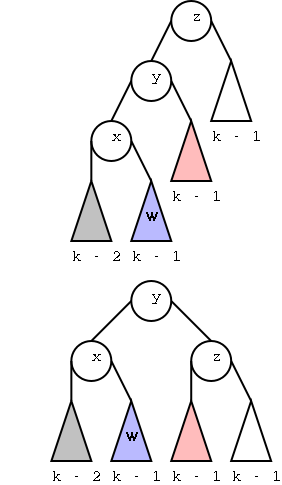

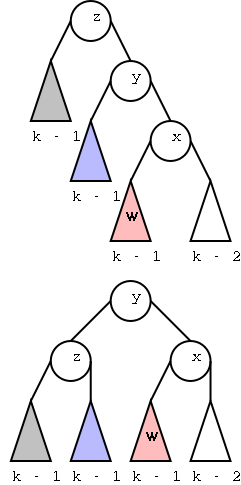

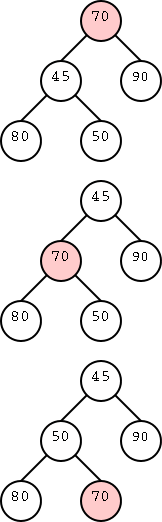

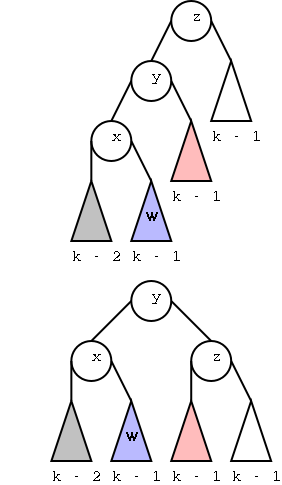

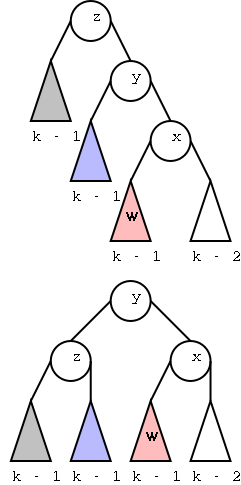

nodes. For example T is the top tree on the right.

- Choose a leaf and its parent (which of course is internal).

For example, the leaf t and parent s in red.

- Remove the leaf and its parent (middle diagram)

- Splice the tree back without the two nodes (bottom diagram).

- Since S has n-1 nodes, S satisfies the assertion.

- Note that T is just S + one leaf + one internal so also satisfies

the assertion.

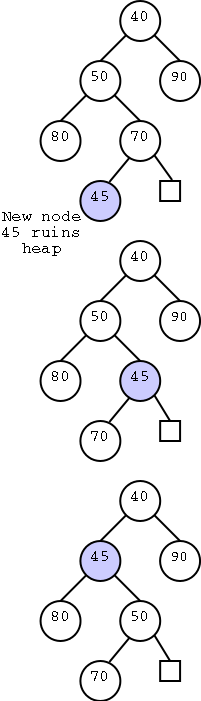

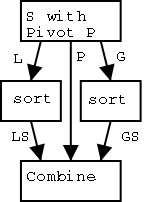

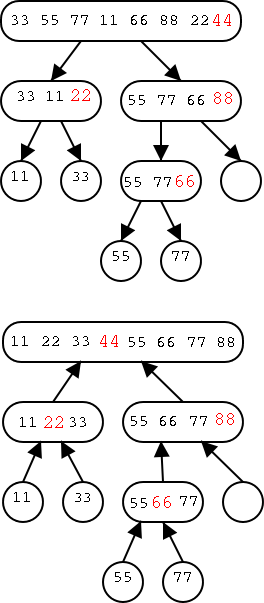

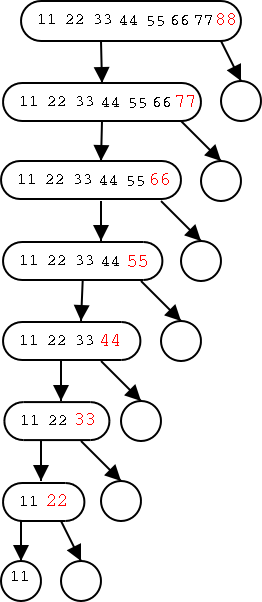

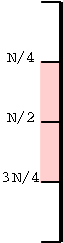

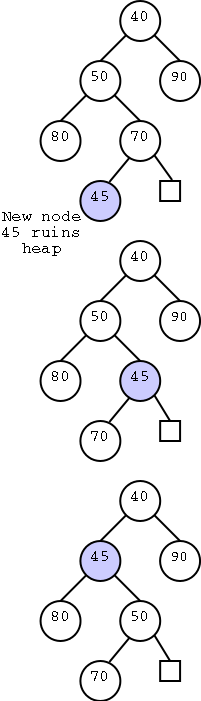

Alternate Proof (does not use the pictures):