Homework: 3.

There is not as much difference between mainframe, server, multiprocessor, and PC OSes as Tanenbaum suggests. For example, Windows NT/2000/XP is used on all of them (except mainframes), and Unix and Linux are used on all.

Mainframes, used in data centers, offer tremendous I/O capabilities and extensive fault tolerance.

Perhaps the most important servers today are web servers. Again, I/O (and network) performance is critical.

Multiprocessors existed almost from the beginning of the computer age, but they are no longer exotic.

Some OSes (e.g., Windows ME) are tailored for PCs. One could also say they are restricted to this application.

Very limited in power (both meanings of the word).

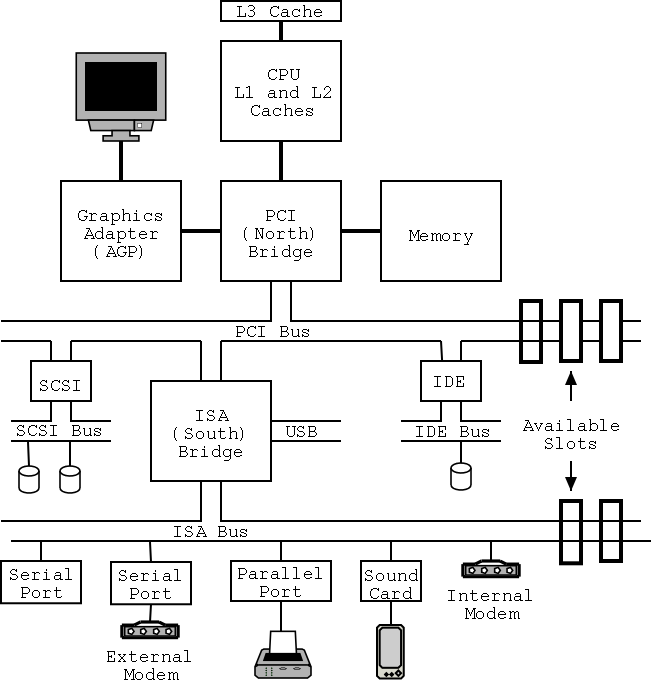

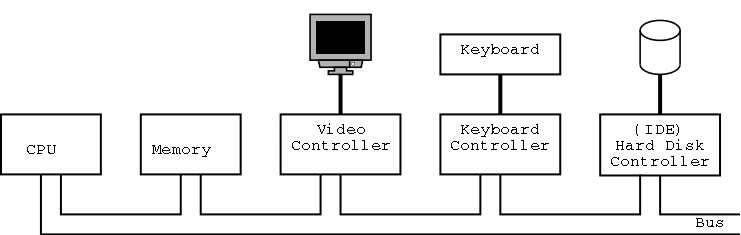

Tanenbaum's treatment is very brief and superficial. Mine is even more so. The picture on the right is very simplified. For one thing, today separate buses are used for memory and video.

We will ignore processor concepts such as program counters and stack pointers. We will also ignore computer design issues such as pipelining and superscalar execution. We do, however, need the notion of a trap, that is, an instruction that atomically switches the processor into privileged mode and jumps to a predefined physical address.
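A trap can be pictured with a toy machine model. The sketch below is hypothetical: `ToyCPU`, `KERNEL_ENTRY`, and the saved-PC register are assumptions made for illustration, not any real architecture. The point it shows is that `trap()` takes no target address, so the user program cannot choose where privileged execution begins.

```python
# Hypothetical toy CPU model; names and the handler address are assumptions.
KERNEL_ENTRY = 0x1000  # assumed pre-defined physical address of the trap handler


class ToyCPU:
    def __init__(self):
        self.privileged = False  # start in user mode
        self.pc = 0              # program counter
        self.saved_pc = None     # where to resume after the trap is handled

    def trap(self):
        # Real hardware does all of this as one indivisible step: save the
        # return point, enter privileged mode, and jump to the fixed handler.
        # Note that the caller cannot supply the target address.
        self.saved_pc = self.pc + 1
        self.privileged = True
        self.pc = KERNEL_ENTRY

    def return_from_trap(self):
        # Leave privileged mode and resume the user program.
        self.privileged = False
        self.pc = self.saved_pc


cpu = ToyCPU()
cpu.pc = 42
cpu.trap()
print(cpu.privileged, hex(cpu.pc))  # True 0x1000
cpu.return_from_trap()
print(cpu.privileged, cpu.pc)       # False 43
```

If a user program could pass its own jump target, it could enter privileged mode at an arbitrary instruction. Fixing the address is what makes the trap a safe doorway into the OS.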

We will ignore caches, but will (later) discuss demand paging, which is very similar although it uses completely disjoint terminology. In both cases, the goal is to combine a large slow memory with a small fast memory and achieve the effect of a large fast memory.
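The common idea behind caches and demand paging can be sketched as a small fast store in front of a large slow one. This is a minimal read-only sketch, assuming least-recently-used (LRU) replacement; `TwoLevelMemory` is a hypothetical name, and real caches and pagers differ in many details (write handling, block sizes, replacement policy).

```python
from collections import OrderedDict


class TwoLevelMemory:
    """Small fast memory in front of a large slow one (read-only sketch)."""

    def __init__(self, slow, fast_capacity):
        self.slow = slow                # large, slow memory: address -> value
        self.fast = OrderedDict()       # small, fast memory, oldest use first
        self.capacity = fast_capacity
        self.hits = 0
        self.misses = 0

    def read(self, addr):
        if addr in self.fast:
            self.hits += 1
            self.fast.move_to_end(addr)  # mark as most recently used
            return self.fast[addr]
        self.misses += 1
        value = self.slow[addr]          # the expensive access
        self.fast[addr] = value
        if len(self.fast) > self.capacity:
            self.fast.popitem(last=False)  # evict the least recently used
        return value


slow = {a: a * a for a in range(1000)}
mem = TwoLevelMemory(slow, fast_capacity=4)
for _ in range(100):
    for a in range(4):      # a small working set that fits in fast memory
        mem.read(a)
print(mem.hits, mem.misses)  # 396 4
```

The illusion of a large fast memory depends on locality: if a program cycles through three addresses with room for only two, LRU misses on every access.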

The central memory in a system is called RAM (Random Access Memory). A key point is that it is volatile, i.e. the memory loses its value if power is turned off.

I don't understand why Tanenbaum discusses disks here instead of in the next section entitled I/O devices, but he does. I don't.

ROM (Read Only Memory) is used to hold data that will not change, e.g., the serial number of a computer or the program used in a microwave. ROM is non-volatile.

But often this unchangeable data needs to be changed (e.g., to fix bugs). This gives rise first to PROM (Programmable ROM), which, like a CD-R, can be written once (as opposed to being mass-produced already written, like a CD-ROM), and then to EPROM (Erasable PROM; not Erasable ROM as in Tanenbaum), which is like a CD-RW. An EPROM is especially convenient if it can be erased with a normal circuit (EEPROM, Electrically Erasable PROM, or flash memory).

As mentioned above when discussing OS/MFT and OS/MVT, multiprogramming requires that we protect one process from another. That is, we need to translate the virtual addresses of each program into distinct physical addresses. The hardware that performs this translation is called the MMU, or Memory Management Unit.

When context switching from one process to another, the translation must change, which can be an expensive operation.
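To make the MMU concrete, the sketch below assumes the simplest translation scheme, base-and-limit relocation, rather than paging. The class names and the memory layout in `regions` are hypothetical. Each process sees addresses starting at 0, the MMU adds that process's base, and anything at or beyond the limit faults.

```python
class ProtectionFault(Exception):
    """Raised when a process touches an address outside its region."""


class MMU:
    """Base-and-limit translation: virtual address v maps to base + v."""

    def __init__(self):
        self.base = 0
        self.limit = 0

    def load(self, base, limit):
        # Done by the OS (in privileged mode) on each context switch.
        self.base = base
        self.limit = limit

    def translate(self, vaddr):
        if not 0 <= vaddr < self.limit:
            raise ProtectionFault(f"virtual address {vaddr} out of range")
        return self.base + vaddr


# Hypothetical memory layout: each process gets (base, limit).
regions = {"A": (10000, 4000), "B": (20000, 4000)}

mmu = MMU()
mmu.load(*regions["A"])       # running process A
print(mmu.translate(100))     # 10100
mmu.load(*regions["B"])       # context switch to process B
print(mmu.translate(100))     # 20100
```

The same virtual address 100 lands in different physical locations for A and B, which is the protection the text asks for. In this scheme the context switch is only two register loads; with page tables the translation state is much larger, and cached translations may have to be discarded, which is one reason the switch can be expensive.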

When we do I/O for real, I will show a real disk opened up and illustrate the components.

Devices are often quite complicated to manage, so a separate computer, called a controller, is used to translate simple commands (read sector 123456) into what the device requires (read cylinder 321, head 6, sector 765). Actually, the controller does considerably more, e.g., it calculates a checksum for error detection.
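Part of the controller's job in the example above is mapping a linear sector number onto the drive's geometry. The sketch below assumes the classic fixed geometry (the same number of sectors on every track) and the hypothetical values of 16 heads and 63 sectors per track, so its output differs from the illustrative numbers in the text. `lba_to_chs` is an illustrative name; real modern drives use more complicated internal layouts.

```python
def lba_to_chs(lba, heads_per_cylinder, sectors_per_track):
    """Convert a linear sector number to (cylinder, head, sector).

    Uses the conventional CHS numbering in which sectors on a track
    are numbered from 1, while cylinders and heads start at 0.
    """
    cylinder = lba // (heads_per_cylinder * sectors_per_track)
    head = (lba // sectors_per_track) % heads_per_cylinder
    sector = lba % sectors_per_track + 1
    return cylinder, head, sector


# Hypothetical geometry: 16 heads, 63 sectors per track.
print(lba_to_chs(123456, heads_per_cylinder=16, sectors_per_track=63))  # (122, 7, 40)
```

As a check, 122 × (16 × 63) + 7 × 63 + (40 − 1) = 122976 + 441 + 39 = 123456.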

How does the OS know when the I/O is complete?

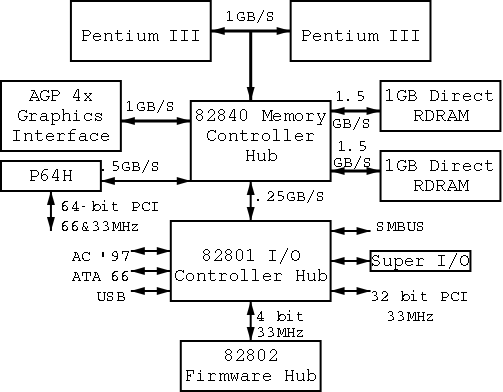

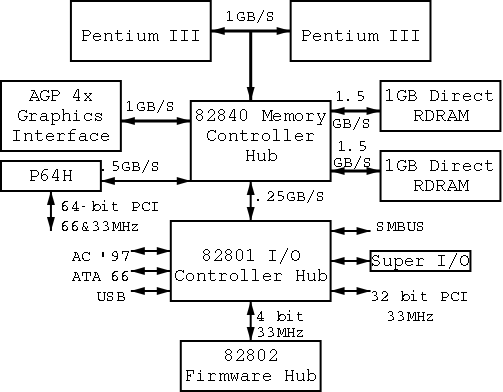

I don't care very much about the names of the buses, but the diagram given in the book doesn't show a modern design. The one below does. On the right is a figure showing the specifications for a modern chip set (introduced in 2000). The chip set has two PCI buses of different widths, which is not shown below. Instead of having the chip set supply USB, a PCI USB controller may be used. Finally, the use of ISA is decreasing. Indeed, my newest machine does not have an ISA bus, and I had to replace my ISA sound card with a PCI version.