The word atomically means that the two actions performed by TAS(x) (testing, i.e., returning the old value of x, and setting, i.e., assigning true to x) are inseparable. Specifically, it is not possible for two concurrent TAS(x) operations to both return false (unless there is also another concurrent statement that sets x to false).

With TAS available, implementing a critical section for any number of processes is trivial.

loop forever {
    while (TAS(s)) {}   ENTRY
    CS
    s <-- false         EXIT
    NCS
}
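The loop above can be sketched in Python. This is only an illustration under stated assumptions: Python exposes no hardware test-and-set, so the hypothetical `TASCell` class emulates the atomicity with a private lock, and the names `TASCell`, `run_workers`, and the shared counter are invented for this example. The loop runs a fixed number of rounds rather than forever so that it terminates.

```python
import threading
import time


class TASCell:
    """Boolean cell with an emulated atomic test-and-set (hypothetical helper)."""

    def __init__(self):
        self._value = False
        # Assumption: this private lock stands in for the hardware guarantee
        # that the test and the set cannot be separated.
        self._guard = threading.Lock()

    def tas(self):
        """Atomically return the old value and set the cell to True."""
        with self._guard:
            old = self._value
            self._value = True
            return old

    def clear(self):
        """s <-- false"""
        self._value = False


def run_workers(num_workers=4, rounds=200):
    """Each worker enters the critical section `rounds` times."""
    s = TASCell()
    counter = [0]

    def worker():
        for _ in range(rounds):
            while s.tas():        # ENTRY: spin until TAS returns false
                time.sleep(0)     # yield so the spin does not hog the interpreter
            tmp = counter[0]      # CS: a read-modify-write that needs protection
            counter[0] = tmp + 1
            s.clear()             # EXIT
            # NCS would go here

    threads = [threading.Thread(target=worker) for _ in range(num_workers)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return counter[0]


if __name__ == "__main__":
    print(run_workers())
```

If mutual exclusion holds, no increment is lost, so the returned count equals `num_workers * rounds`.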

Remark: Tanenbaum presents both busy-waiting (as above) and blocking (process-switching) solutions. We will only do busy waiting, which is easier. Sleep and Wakeup are the simplest blocking primitives. Sleep voluntarily blocks the calling process and Wakeup unblocks a sleeping process. We will not cover these.

Homework: Explain the difference between busy waiting and blocking.

Remark: Tanenbaum uses the term semaphore only for blocking solutions. I will use the term for our busy-waiting solutions. Others call our solutions spin locks.

The entry code is often called P and the exit code V. Thus the critical section problem is to write P and V so that

loop forever
    P
    critical-section
    V
    non-critical-section

satisfies

Note that I use indenting carefully and hence do not need (and sometimes omit) the braces {} used in languages like C or Java.

A binary semaphore abstracts the TAS solution we gave for the critical section problem.

    while (S=closed) {}
    S <-- closed        <== This is NOT the body of the while

where finding S=open and setting S<--closed is atomic

The above code is not real, i.e., it is not an implementation of P. It is, instead, a definition of the effect P is to have.

To repeat: for any number of processes, the critical section problem can be solved by

loop forever
    P(S)
    CS
    V(S)
    NCS

The only specific solution we have seen for an arbitrary number of processes is the one just above with P(S) implemented via test and set.

Remark: Peterson's solution requires each process to know its processor number. The TAS solution does not. Moreover, the definition of P and V does not permit use of the processor number. Thus, strictly speaking, Peterson did not provide an implementation of P and V. He did solve the critical section problem.

To solve other coordination problems we want to extend binary semaphores.

Both of the shortcomings can be overcome by not restricting ourselves to a binary variable and instead defining a generalized or counting semaphore.

    while (S=0) {}
    S--

where finding S>0 and decrementing S is atomic

These counting semaphores can solve what I call the semi-critical-section problem, where you permit up to k processes in the section. When k=1 we have the original critical-section problem.

initially S=k

loop forever
    P(S)
    SCS     <== semi-critical-section
    V(S)
    NCS
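A minimal Python sketch of the semi-critical-section loop follows. It rests on assumptions: the class `CountingSemaphore` and the function `max_occupancy` are hypothetical names, a private lock emulates the atomic "find S>0 and decrement" step, and the loop runs a fixed number of rounds so the example terminates. The function records the largest number of workers ever inside the section at once, which should never exceed k.

```python
import threading
import time


class CountingSemaphore:
    """Busy-waiting counting semaphore (hypothetical helper)."""

    def __init__(self, initial):
        self._value = initial
        # Assumption: this lock makes "find S>0 and decrement S" atomic.
        self._guard = threading.Lock()

    def P(self):
        while True:
            with self._guard:
                if self._value > 0:
                    self._value -= 1
                    return
            time.sleep(0)  # spin, yielding between attempts

    def V(self):
        with self._guard:
            self._value += 1


def max_occupancy(k=2, num_workers=6, rounds=50):
    """Return the peak number of workers simultaneously in the section."""
    s = CountingSemaphore(k)     # initially S=k
    stats_guard = threading.Lock()
    inside = [0]
    peak = [0]

    def worker():
        for _ in range(rounds):
            s.P()
            with stats_guard:    # bookkeeping only, not part of the protocol
                inside[0] += 1
                peak[0] = max(peak[0], inside[0])
            time.sleep(0)        # SCS: linger briefly
            with stats_guard:
                inside[0] -= 1
            s.V()
            # NCS would go here

    threads = [threading.Thread(target=worker) for _ in range(num_workers)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return peak[0]


if __name__ == "__main__":
    print(max_occupancy())
```

Calling `max_occupancy(k=1)` exercises the ordinary critical-section case, where the peak is exactly 1.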

Initially e=k, f=0 (counting semaphores); b=open (binary semaphore)

| Producer | Consumer |
|---|---|
| loop forever | loop forever |
| produce-item | P(f) |
| P(e) | P(b); take item from buf; V(b) |
| P(b); add item to buf; V(b) | V(e) |
| V(f) | consume-item |
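The producer and consumer code can be tried out with a short Python sketch. Note the assumptions: it uses the standard library's `threading.Semaphore`, which blocks rather than busy waits, with `acquire` playing the role of P and `release` the role of V. The function name `run_bounded_buffer`, the use of integers as items, and the finite item count (instead of loop forever) are choices made only for this illustration.

```python
import threading
from collections import deque


def run_bounded_buffer(k=3, num_items=20):
    """Run one producer and one consumer over a buffer with k slots."""
    e = threading.Semaphore(k)   # counts empty slots, initially k
    f = threading.Semaphore(0)   # counts full slots, initially 0
    b = threading.Semaphore(1)   # binary semaphore guarding buf, initially open
    buf = deque()
    consumed = []
    max_len = [0]                # largest buffer size observed

    def producer():
        for item in range(num_items):   # produce-item
            e.acquire()                 # P(e): wait for an empty slot
            b.acquire()                 # P(b)
            buf.append(item)            # add item to buf
            max_len[0] = max(max_len[0], len(buf))
            b.release()                 # V(b)
            f.release()                 # V(f): one more full slot

    def consumer():
        for _ in range(num_items):
            f.acquire()                 # P(f): wait for a full slot
            b.acquire()                 # P(b)
            item = buf.popleft()        # take item from buf
            b.release()                 # V(b)
            e.release()                 # V(e): one more empty slot
            consumed.append(item)       # consume-item

    threads = [threading.Thread(target=producer),
               threading.Thread(target=consumer)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return consumed, max_len[0]


if __name__ == "__main__":
    items, peak = run_bounded_buffer()
    print(items, peak)
```

With a single producer and a single consumer the items come out in the order they went in, and the buffer never holds more than k items because the producer must pass P(e) before each insertion.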

| | Busy wait | block/switch |
|---|---|---|
| critical | (binary) semaphore | (binary) semaphore |
| semi-critical | counting semaphore | counting semaphore |

| | Busy wait | block/switch |
|---|---|---|
| critical | enter/leave region | mutex |
| semi-critical | no name | semaphore |

A classical problem from Dijkstra

The purpose of mentioning the Dining Philosophers problem without giving the solution is to give a feel of what coordination problems are like. The book gives others as well. We are skipping these (again this material would be covered in a sequel course). If you are interested look, for example, here.

Homework: 31 and 32 (these have short answers but are not easy). Note that the problem refers to fig. 2-20, which is incorrect. It should be fig. 2-33, as noticed by Liang Chen.

The readers and writers problem is quite useful in multiprocessor operating systems and database systems. The "easy way out" is to treat all processes as writers, in which case the problem reduces to mutual exclusion (P and V). The disadvantage of the easy way out is that you give up reader concurrency. Again, for more information see the web page referenced above.

Scheduling processes on the processor is often called "process scheduling" or simply "scheduling".

The objectives of a good scheduling policy include

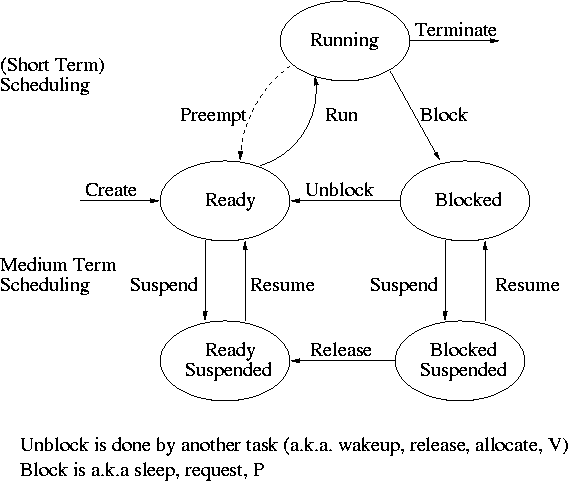

Recall the basic diagram describing process states

For now we are discussing short-term scheduling, i.e., the arcs connecting running <--> ready.

Medium term scheduling is discussed later.

It is important to distinguish preemptive from non-preemptive scheduling algorithms.