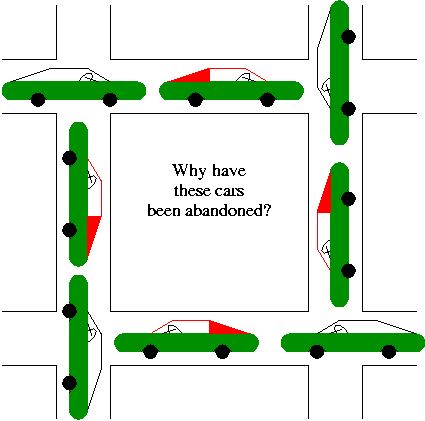

A deadlock is a set of processes, each of which is blocked by a process in the set. The automotive equivalent, shown at right, is gridlock.

Each process requires memory. The loader produces a load module that assumes the process is loaded at location 0. The operating system ensures that the processes are actually given disjoint memory. Current operating systems permit each process to be given more (virtual) memory than the total amount of (real) memory on the machine.
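
The disjoint-memory guarantee can be observed from user code. The following is a minimal sketch, assuming a Unix system where Python's `os.fork` is available; the function name `child_write_is_private` is made up for this illustration. The child changes a variable, and the parent's copy is unaffected because the two processes no longer share memory after the fork.

```python
import os

def child_write_is_private():
    """Fork, let the child modify a variable, and return the parent's value."""
    counter = [0]
    pid = os.fork()
    if pid == 0:
        # Child: this write lands in the child's own copy of memory.
        counter[0] = 99
        os._exit(0)
    # Parent: wait for the child, then look at our own copy.
    os.waitpid(pid, 0)
    return counter[0]

print(child_write_is_private())  # the parent still sees 0
```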

There is a wide variety of I/O devices that the OS must manage. For example, if two processes are printing at the same time, the OS must not interleave the output. The OS contains device-specific code (drivers) for each device as well as device-independent I/O code.

Modern systems have a hierarchy of files, i.e., a file system tree.

You can name a file via an absolute path starting at the root directory or via a relative path starting at the current working directory.
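
As a small illustration of the two naming schemes, here is a sketch that resolves a name the way a Unix system would. The helper `resolve` and the sample paths are hypothetical; only `os.path` functions from the Python standard library are used, and Unix-style paths are assumed.

```python
import os.path

def resolve(path, cwd):
    """Return the absolute path that `path` names when the working directory is `cwd`."""
    if os.path.isabs(path):
        # Absolute path: starts at the root, so cwd plays no role.
        return os.path.normpath(path)
    # Relative path: interpreted starting at the current working directory.
    return os.path.normpath(os.path.join(cwd, path))

print(resolve("/usr/bin/wc", "/home/alice"))
print(resolve("notes/os.txt", "/home/alice"))
print(resolve("../bob/x", "/home/alice"))
```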

In addition to regular files and directories, Unix also uses the file system namespace for devices (called special files), which are typically found in the /dev directory. Often utilities that are normally applied to (ordinary) files can be applied as well to some special files. For example, when you are accessing a Unix system using a mouse and do not have anything serious going on (e.g., right after you log in), type the following command

cat /dev/mouse

and then move the mouse. You kill the cat by typing Ctrl-C. I tried this on my Linux box and no damage occurred. Your mileage may vary.

Before a file can be accessed, it must be opened and a file descriptor obtained. Many systems have standard files that are automatically made available to a process upon startup. These (initial) file descriptors are fixed: on Unix, 0 is standard input, 1 is standard output, and 2 is standard error.

A convenience offered by some command interpreters is a pipe or pipeline. The pipeline

dir | wc

which pipes the output of dir into a character/word/line counter, will give the number of files in the directory (plus other info).
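
What the command interpreter does for such a pipeline can be sketched as follows. This is an illustration only, assuming a Unix system with the standard `ls` and `wc` utilities (standing in for dir and wc above); the function name `count_entries` is made up. The output of the first process is connected to the input of the second through a pipe.

```python
import subprocess

def count_entries(directory):
    """Run the equivalent of `ls directory | wc -l` and return the line count."""
    # First process writes its output into a pipe.
    ls = subprocess.Popen(["ls", directory], stdout=subprocess.PIPE)
    # Second process reads its input from that same pipe.
    wc = subprocess.Popen(["wc", "-l"], stdin=ls.stdout, stdout=subprocess.PIPE)
    ls.stdout.close()  # the parent does not read from the pipe itself
    out, _ = wc.communicate()
    ls.wait()
    return int(out.split()[0])
```

Calling `count_entries(".")` reports the number of entries in the current directory, one per line of `ls` output.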

Files and directories normally have permissions

The command line interface to the operating system. The shell permits the user to

Homework: 8

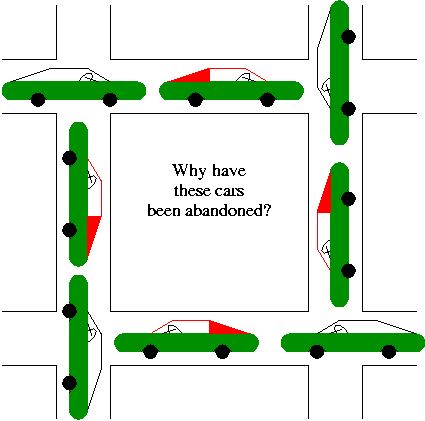

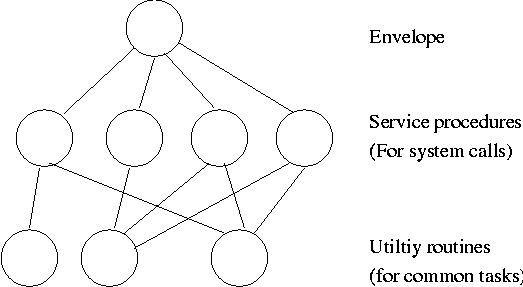

System calls are the way a user (i.e., a program) directly interfaces with the OS. Some textbooks use the term envelope for the component of the OS responsible for fielding system calls and dispatching them. On the right is a picture showing some of the OS components and the external events for which they are the interface.

Note that the OS serves two masters. The hardware (below) asynchronously sends interrupts and the user makes system calls and generates page faults.

Homework: 14

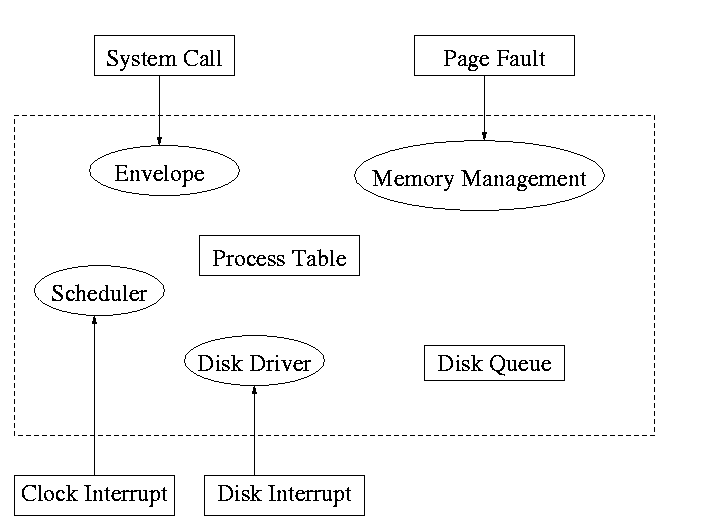

What happens when a user executes a system call such as read()? We show a more detailed picture below, but at a high level what happens is

The following actions occur when the user executes the (Unix) system call


count = read(fd,buffer,nbytes)

which reads up to nbytes from the file described by fd into buffer. The actual number of bytes read is returned (it might be less than nbytes if, for example, an eof was encountered).

A major complication is that the system call handler may block. Indeed for read it is likely. In that case a switch occurs to another process. This is far from trivial and is discussed later in the course.
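
The behavior of read at end of file can be seen from user code. The sketch below assumes a Unix-like system and uses Python's `os.read`, a thin wrapper over the system call that returns the bytes read rather than a count; the helper name `read_chunks` and the chunk size are chosen only for this example.

```python
import os
import tempfile

def read_chunks(path, nbytes):
    """Read a file with repeated read(fd, nbytes) calls and return the chunks."""
    fd = os.open(path, os.O_RDONLY)      # open the file and obtain a descriptor
    chunks = []
    try:
        while True:
            chunk = os.read(fd, nbytes)  # returns at most nbytes bytes
            if not chunk:                # an empty result means end of file
                break
            chunks.append(chunk)
    finally:
        os.close(fd)
    return chunks

# A 10-byte file read 4 bytes at a time: the last read comes up short.
with tempfile.NamedTemporaryFile(delete=False) as f:
    f.write(b"0123456789")
print(read_chunks(f.name, 4))
os.unlink(f.name)
```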

| Posix | Win32 | Description |
|---|---|---|
| **Process Management** | | |
| fork | CreateProcess | Clone current process |
| exec(ve) | (none) | Replace current process |
| wait(pid) | WaitForSingleObject | Wait for a child to terminate |
| exit | ExitProcess | Terminate current process & return status |
| **File Management** | | |
| open | CreateFile | Open a file & return descriptor |
| close | CloseHandle | Close an open file |
| read | ReadFile | Read from file to buffer |
| write | WriteFile | Write from buffer to file |
| lseek | SetFilePointer | Move file pointer |
| stat | GetFileAttributesEx | Get status info |
| **Directory and File System Management** | | |
| mkdir | CreateDirectory | Create new directory |
| rmdir | RemoveDirectory | Remove empty directory |
| link | (none) | Create a directory entry |
| unlink | DeleteFile | Remove a directory entry |
| mount | (none) | Mount a file system |
| umount | (none) | Unmount a file system |
| **Miscellaneous** | | |
| chdir | SetCurrentDirectory | Change the current working directory |
| chmod | (none) | Change permissions on a file |
| kill | (none) | Send a signal to a process |
| time | GetLocalTime | Elapsed time since 1 Jan 1970 |

The table above shows some system calls; the descriptions are accurate for Unix and close for Win32. To show how the four process management calls enable much of process management, consider the following highly simplified shell.

    while (true)
        display_prompt()
        read_command(command)
        if (fork() != 0)      // true in parent, false in child
            waitpid(...)
        else
            execve(command)   // the command itself executes exit()
        endif
    endwhile
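
The pseudocode above can be turned into a runnable sketch. The version below assumes a Unix system and uses Python's `os.fork`, `os.execvp`, and `os.waitpid`; the names `run_command` and `shell`, the `$ ` prompt, and the exit status 127 for a command that cannot be executed are choices made for this illustration, not part of the original.

```python
import os
import shlex

def run_command(line):
    """Fork a child, exec the command in it, and return the child's exit status."""
    argv = shlex.split(line)
    pid = os.fork()
    if pid != 0:
        # Parent: fork returned the child's pid, so wait for that child.
        _, status = os.waitpid(pid, 0)
        if os.WIFEXITED(status):
            return os.WEXITSTATUS(status)
        return -os.WTERMSIG(status)   # child was terminated by a signal
    # Child: fork returned 0, so replace this process with the command.
    try:
        os.execvp(argv[0], argv)      # returns only if the exec fails
    finally:
        os._exit(127)                 # reached only when exec raised an error

def shell():
    """Prompt, read a command, run it, and repeat until end of input."""
    while True:
        try:
            line = input("$ ")
        except EOFError:
            break
        if line.strip():
            run_command(line)
```

Calling `shell()` starts the loop; each command runs in its own child process while the parent waits, exactly as in the pseudocode.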

Homework: 18.

I must note that Tanenbaum is a big advocate of the so-called microkernel approach in which as much as possible is moved out of the (supervisor mode) kernel into separate processes. The (hopefully small) portion left in supervisor mode is called a microkernel.

In the early 90s this was popular. Digital Unix (now called Tru64) and Windows NT are examples. Digital Unix is based on Mach, a research OS from Carnegie Mellon University. Lately, the growing popularity of Linux has called into question the belief that "all new operating systems will be microkernel based".

The previous picture: one big program

The system switches from user mode to kernel mode during the poof and then back when the OS does a "return".

But of course we can structure the system better, which brings us to.

Some systems have more layers and are more strictly structured.

An early layered system was the "THE" operating system by Dijkstra. The layers were.

The layering was done by convention, i.e., there was no enforcement by hardware and the entire OS was linked together as one program. This is true of many modern operating systems as well (e.g., Linux).

The Multics system was layered in a more formal manner. The hardware provided several protection layers and the OS used them. That is, arbitrary code could not jump to or access data in a more protected layer.

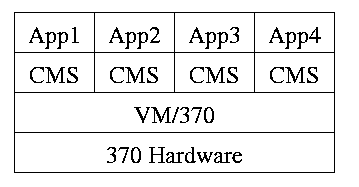

Use a "hypervisor" (beyond supervisor, i.e., beyond a normal OS) to switch between multiple operating systems. Made popular by IBM's VM/CMS.

Similar to VM/CMS but the virtual machines have disjoint resources (e.g., distinct disk blocks) so less remapping is needed.

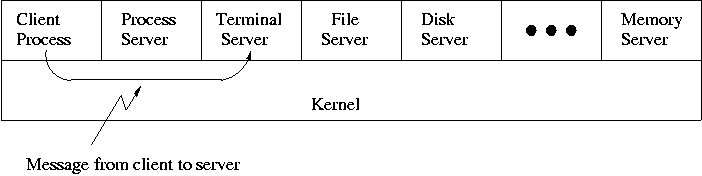

When implemented on one computer, a client-server OS uses the microkernel approach, in which the microkernel just supplies interprocess communication and the main OS functions are provided by a number of separate processes.

This does have advantages. For example an error in the file server cannot corrupt memory in the process server. This makes errors easier to track down.

But it does mean that when a (real) user process makes a system call there are more process switches. These are not free.

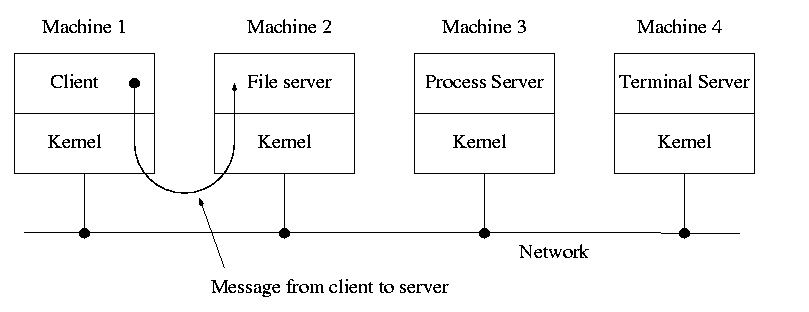

A distributed system can be thought of as an extension of the client-server concept where the servers are remote.

Homework: 23

Tanenbaum's chapter title is ``Processes and Threads''. I prefer to add the word management. The subject matter is processes, threads, scheduling, interrupt handling, and IPC (InterProcess Communication--and Coordination).

Definition: A process is a program in execution.

Even though in actuality there are many processes running at once, the OS gives each process the illusion that it is running alone.

Think of the individual modules that are input to the linker. Each numbers its addresses from zero; the linker eventually translates these relative addresses into absolute addresses. That is, the linker provides to the assembler a virtual memory in which addresses start at zero.
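
A toy version of this translation is sketched below. It assumes the modules are simply laid out back to back starting at address 0, which real linkers refine considerably; the function names and the module sizes are invented for the example.

```python
def assign_bases(module_sizes):
    """Give each module a base address, placing modules back to back from 0."""
    bases = []
    next_free = 0
    for size in module_sizes:
        bases.append(next_free)   # this module starts where the last one ended
        next_free += size
    return bases

def relocate(module_index, relative_address, bases):
    """Translate an address relative to a module's start into an absolute one."""
    return bases[module_index] + relative_address

bases = assign_bases([100, 50, 200])
print(bases)                    # [0, 100, 150]
print(relocate(2, 10, bases))   # 160
```

Each module can keep numbering its own addresses from zero; only the base added at link time differs.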

Virtual time and virtual memory are examples of abstractions provided by the operating system to the user processes so that the latter "sees" a more pleasant virtual machine than actually exists.

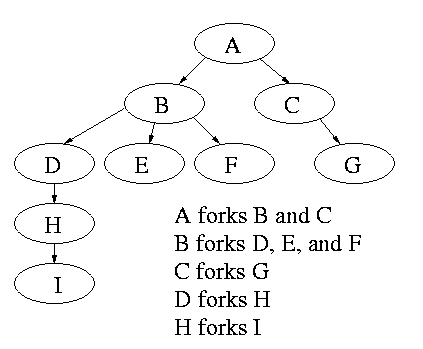

From the user's or external viewpoint there are several mechanisms for creating a process.

But looked at internally, from the system's viewpoint, the second method dominates. Indeed, in Unix only one process is created at system initialization (the process is called init); all the others are children of this first process.

Why have init? That is, why not have all processes created via method 2?

Ans: Because without init there would be no running process to create any others.

Again from the outside there appear to be several termination mechanisms.

And again, internally the situation is simpler. In Unix terminology, there are two system calls, kill and exit, that are used. Kill (poorly named in my view) sends a signal to another process. If this signal is not caught (via the signal system call) the process is terminated. There is also an "uncatchable" signal. Exit is used for self termination and can indicate success or failure.
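
The difference between an uncaught, a caught, and an uncatchable signal can be sketched as follows. This assumes a Unix system and uses Python's `os.kill` and `signal.signal`; the function name `send_and_wait`, the choice of SIGTERM as the catchable signal, and the pipe used so the parent knows the child is ready are all details of this illustration.

```python
import os
import signal
import time

def send_and_wait(sig, catch):
    """Fork a child, send it `sig`, and report how the child ended."""
    r, w = os.pipe()
    pid = os.fork()
    if pid == 0:
        try:
            os.close(r)
            handler = (lambda s, f: os._exit(0)) if catch else signal.SIG_DFL
            try:
                signal.signal(sig, handler)   # catch: exit cleanly on the signal
            except OSError:
                pass                          # SIGKILL cannot be caught
            os.write(w, b"x")                 # tell the parent we are ready
            while True:
                time.sleep(1)
        finally:
            os._exit(1)                       # the child never returns to the caller
    os.close(w)
    os.read(r, 1)                             # wait until the child is ready
    os.close(r)
    os.kill(pid, sig)                         # "kill" just sends the signal
    _, status = os.waitpid(pid, 0)
    if os.WIFSIGNALED(status):
        return ("signaled", os.WTERMSIG(status))
    return ("exited", os.WEXITSTATUS(status))
```

With `signal.SIGTERM`, the child is reported as signaled when `catch` is false and as exited with status 0 when `catch` is true; with `signal.SIGKILL` it is reported as signaled either way.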

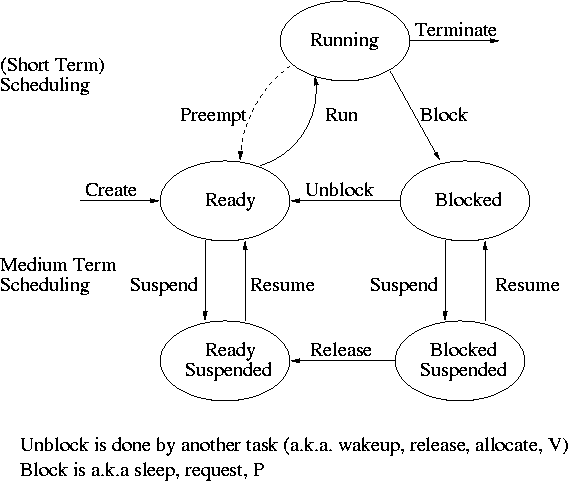

Modern general purpose operating systems permit a user to create and destroy processes.

Old or primitive operating systems like MS-DOS are not multiprogrammed, so when one process starts another, the first process is automatically blocked and waits until the second is finished.

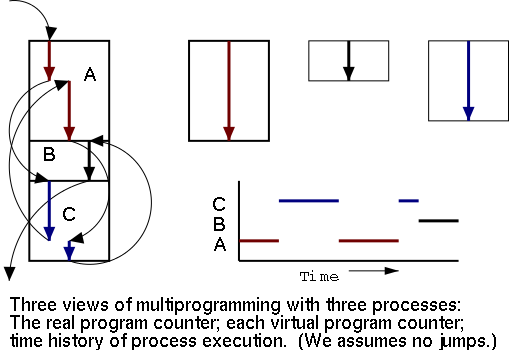

The diagram on the right contains much information.