The greedy method is applied to maximization and minimization problems. The idea is that, at each decision point, we choose the configuration that maximizes (or minimizes) the objective function so far. This clearly does not reach the global maximum (or minimum) for every problem, but it does for a number of problems.

This chapter does not make a good case for the greedy method. It applies the method only to simple variants of standard problems, and the more usual versions of those problems are not solved greedily. There are better examples, such as the minimum spanning tree and shortest path problems on graphs. The two problems in this section, fractional knapsack and task scheduling, were (presumably) selected because they are simple and natural to solve with the greedy method.

In the knapsack problem we have a knapsack of fixed capacity (say $W$ pounds) and several items $i$. Each item has a given weight $w_i$ and a given benefit $b_i$. We want to put items into the knapsack so as to maximize the total benefit, subject to the constraint that the sum of the weights is at most $W$.

The knapsack problem is actually rather difficult (it is NP-hard) in the normal case, where one must either put an item in the knapsack or leave it out. However, to illustrate greedy algorithms, this section considers a much simpler variation in which we can take part of an item and get a proportional part of its benefit. This is called the "fractional knapsack problem" since we can take a fraction of an item. (The more common knapsack problem is called the "0-1 knapsack problem" since we take either all (1) or none (0) of an item.)

In symbols, for each item $i$ we choose an amount $x_i$ ($0 \le x_i \le w_i$) that we will place in the knapsack. We are subject to the constraint $\sum_i x_i \le W$, since that is all the knapsack can hold.

We again wish to maximize the total benefit. Since we put only $x_i$ of item $i$ in the knapsack, we do not get its full benefit. Specifically, we get benefit $(x_i/w_i)\,b_i$, so the goal is to maximize $\sum_i (x_i/w_i)\,b_i$ subject to $\sum_i x_i \le W$.

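But now this is easy! First compute the value per pound $v_i = b_i/w_i$ of each item. Then repeatedly take as much as possible of the remaining item with the largest $v_i$, until the knapsack is full or the items run out.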

Why doesn't this work for the normal knapsack problem when we must take all of an item or none of it?
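A small Python sketch makes the failure concrete. The three items and the capacity of 50 are a hypothetical instance chosen for illustration; they are not from the text. The function names `greedy_01` and `best_01` are likewise made up. `greedy_01` applies the greedy rule under the 0-1 restriction: it goes through the items in decreasing benefit per pound and takes each one whole if it fits. `best_01` tries every subset.

```python
from itertools import combinations

# Hypothetical instance (not from the text): (weight, benefit) pairs, capacity 50.
ITEMS = [(10, 60), (20, 100), (30, 120)]
CAPACITY = 50


def greedy_01(items, capacity):
    """Take whole items in decreasing benefit/weight order while they fit."""
    total = 0
    remaining = capacity
    for w, b in sorted(items, key=lambda it: it[1] / it[0], reverse=True):
        if w <= remaining:  # 0-1 rule: the whole item or nothing
            remaining -= w
            total += b
    return total


def best_01(items, capacity):
    """Exhaustive search over all subsets (exponential; fine for tiny inputs)."""
    best = 0
    for r in range(len(items) + 1):
        for subset in combinations(items, r):
            if sum(w for w, _ in subset) <= capacity:
                best = max(best, sum(b for _, b in subset))
    return best


print(greedy_01(ITEMS, CAPACITY))  # 160
print(best_01(ITEMS, CAPACITY))    # 220
```

Greedy uses 30 of the 50 pounds on the two best-ratio items and then cannot fit the third. The optimum instead skips the best-ratio item. In the fractional version of this instance, greedy would also take 20 pounds of the third item, for a benefit of 240.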

    Algorithm FractionalKnapsack(S, W):
        Input: Set S of items i with weight w_i and benefit b_i, all positive;
               knapsack capacity W > 0.
        Output: Amount x_i of each item i that maximizes the total benefit
                without exceeding the capacity.

        for each i in S do
            x_i ← 0             { for items not chosen in the next phase }
            v_i ← b_i / w_i     { the value of item i "per pound" }
        w ← W                   { remaining capacity in knapsack }
        while w > 0 and S is not empty do
            remove from S an item i of maximal value v_i   { greedy choice }
            x_i ← min(w_i, w)   { can't carry more than w more }
            w ← w - x_i

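FractionalKnapsack has time complexity $O(N \log N)$, where $N$ is the number of items in $S$. The first loop takes $O(N)$ time. If the items are sorted once by $v_i$ (or kept in a heap), the at most $N$ greedy choices take $O(N \log N)$ time in total.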
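Here is a minimal Python sketch of FractionalKnapsack. It assumes the items are given as a dict mapping a (hypothetical) item name to a `(weight, benefit)` pair. The pseudocode does not say how to find the item of maximal value. This sketch uses a binary heap from the standard `heapq` module, which gives the $O(N \log N)$ bound; sorting once by $v_i$ would work equally well. The loop also stops when the items run out, which covers the case where the total weight is less than $W$.

```python
import heapq


def fractional_knapsack(items, capacity):
    """Greedy fractional knapsack.

    items: dict mapping item name -> (weight, benefit), all positive.
    Returns (amounts, total_benefit), where amounts[name] is x_i, 0 <= x_i <= w_i.
    """
    amounts = {name: 0.0 for name in items}  # x_i <- 0 for items never chosen
    # Max-heap on value per pound v_i = b_i / w_i (heapq is a min-heap, so negate).
    heap = [(-b / w, name) for name, (w, b) in items.items()]
    heapq.heapify(heap)  # O(N)
    remaining = capacity  # w <- W
    total = 0.0
    while remaining > 0 and heap:
        _, name = heapq.heappop(heap)  # greedy choice, O(log N)
        w, b = items[name]
        x = min(w, remaining)  # can't carry more than what is left
        amounts[name] = x
        total += b * x / w  # proportional part of the benefit
        remaining -= x
    return amounts, total


items = {"a": (10, 60), "b": (20, 100), "c": (30, 120)}
amounts, total = fractional_knapsack(items, 50)
print(amounts)  # {'a': 10, 'b': 20, 'c': 20}
print(total)    # 240.0
```

When two items have equal value per pound, the heap breaks the tie by comparing their names, so the names need to be mutually comparable (strings, for example).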

Homework: R-5.1

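We again consider an easy case of a well-known optimization problem. In task scheduling we are given a set of tasks, each with a start time and a finishing time. We must schedule all of them on as few machines as possible so that no two tasks on the same machine conflict (overlap in time).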

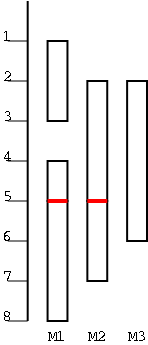

The figure shows 6 tasks, with start and finishing times (1,3), (2,5), (2,6), (4,5), (5,8), and (5,7). They are scheduled on three machines $M_1$, $M_2$, $M_3$. Clearly 3 machines are needed, as can be seen by looking at time 4: tasks (2,5), (2,6), and (4,5) are all running then.
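The lower bound can be checked mechanically. The sketch below sweeps over start and finish events and tracks how many tasks are running at each point. The helper name `max_overlap` is hypothetical. The sketch assumes that a task occupies the half-open interval from its start up to its finishing time, so (4,5) and (5,8) do not conflict. With closed intervals, five tasks would overlap at time 5 and three machines could not suffice.

```python
# Tasks from the figure as (start, finish) pairs.
TASKS = [(1, 3), (2, 5), (2, 6), (4, 5), (5, 8), (5, 7)]


def max_overlap(tasks):
    """Largest number of tasks running at the same instant.

    Assumes half-open intervals [s, f): a task finishing at time t does not
    conflict with one starting at t, so finish events are processed first.
    """
    events = []
    for s, f in tasks:
        events.append((s, 1))   # a task starts
        events.append((f, -1))  # a task finishes
    # Sorting (time, delta) pairs puts -1 before +1 at equal times.
    events.sort()
    running = best = 0
    for _, delta in events:
        running += delta
        best = max(best, running)
    return best


print(max_overlap(TASKS))  # 3
```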

In our greedy algorithm we only go to a new machine when we find a task that cannot be scheduled on the current machines.

    Algorithm TaskSchedule(T):
        Input: A set T of tasks, each with start time s_i and finishing time f_i (s_i ≤ f_i).
        Output: A schedule of the tasks of T on the minimum number of machines.

        m ← 0           { current number of machines }
        while T is not empty do
            remove from T a task i with smallest start time
            if there is an M_j having all tasks non-conflicting with i then
                schedule i on M_j
            else
                m ← m+1
                schedule i on M_m
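Tasks are taken in order of start time. So for positive-length, half-open intervals, all of a machine's tasks are non-conflicting with task $i$ exactly when the last task placed on that machine has finished by $s_i$. One comparison per machine is therefore enough. Here is a Python sketch of TaskSchedule built on that observation; the function name is hypothetical. For each task it scans the machines in order, so its running time is quadratic in the worst case.

```python
TASKS = [(1, 3), (2, 5), (2, 6), (4, 5), (5, 8), (5, 7)]


def task_schedule(tasks):
    """Greedy TaskSchedule: returns a list of machines, each a list of tasks.

    tasks: iterable of (start, finish) pairs with start <= finish.
    Assumes half-open intervals, so a task may start exactly when another ends.
    """
    machines = []  # machines[j] holds the tasks scheduled on machine M_{j+1}
    for s, f in sorted(tasks):  # consider tasks by smallest start time
        for machine in machines:
            # The machine is free for (s, f) when its last task has finished by s.
            if machine[-1][1] <= s:
                machine.append((s, f))
                break
        else:
            machines.append([(s, f)])  # m <- m + 1; schedule on the new machine
    return machines


print(task_schedule(TASKS))
# [[(1, 3), (4, 5), (5, 7)], [(2, 5), (5, 8)], [(2, 6)]]
```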

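Assume the algorithm runs and declares $m$ to be the minimum number of machines needed. We must show that $m$ machines are really needed. Consider the task $i$ that caused machine $M_m$ to be opened. At that moment, each of $M_1, \ldots, M_{m-1}$ held a task that conflicts with $i$. Each such task was removed from $T$ before $i$, so it starts no later than $s_i$. Since it conflicts with $i$, it also finishes after $s_i$. Hence $i$ and these $m-1$ tasks are all running at time $s_i$, so no schedule can use fewer than $m$ machines.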

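The book asserts that it is easy to see that the algorithm runs in time $O(N \log N)$, but I don't think this is so easy. It is easy to see $O(N^2)$: after sorting, each of the $N$ tasks is compared with at most the $N-1$ tasks already scheduled.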
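One way to reach $O(N \log N)$ is to keep the machines in a min-heap (using the standard `heapq` module), keyed by the finishing time of each machine's last task. A task $i$ fits on some machine exactly when the earliest-freeing machine is free by $s_i$. Each task then costs one heap push and at most one pop, at $O(\log N)$ each, on top of the $O(N \log N)$ sort. The sketch always picks the earliest-free machine, a choice the pseudocode leaves open. It still opens a new machine only when every existing machine is busy at $s_i$, so the machine count is unaffected. The name `task_schedule_fast` is hypothetical, and this is not necessarily the argument the book has in mind.

```python
import heapq

TASKS = [(1, 3), (2, 5), (2, 6), (4, 5), (5, 8), (5, 7)]


def task_schedule_fast(tasks):
    """O(N log N) variant of TaskSchedule using a min-heap of machine finish times.

    tasks: iterable of (start, finish) pairs; half-open intervals assumed.
    Returns (m, assignment), where assignment lists (task, machine index) pairs
    in start-time order and machine indices run from 0 to m - 1.
    """
    free_at = []     # heap of (finish time of machine's last task, machine index)
    assignment = []  # (task, machine index) pairs
    m = 0            # current number of machines
    for s, f in sorted(tasks):  # O(N log N) sort by start time
        if free_at and free_at[0][0] <= s:
            # The machine that frees up earliest is available: reuse it.
            _, j = heapq.heappop(free_at)
        else:
            # Even the earliest-free machine is busy at time s: open a new one.
            j = m
            m += 1
        assignment.append(((s, f), j))
        heapq.heappush(free_at, (f, j))  # O(log N)
    return m, assignment


m, assignment = task_schedule_fast(TASKS)
print(m)  # 3
```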

Homework: R-5.3

Problem Set 4, Problem 3.

Part A. C-5.3 (Do not argue why your algorithm is correct).

Part B. C-5.4.

Remark: Problem set 4 is now assigned and due 4 Dec 02.