Up to now we have not considered elements that must be retrieved in a fixed order. But often in practice we assign a priority to each item and want the most important (highest priority) item first. (For some reason that I don't know, low numbers are often used to represent high priority.)

For example, consider processor scheduling from Operating Systems (202). The simplest scheduling policy is FCFS, for which a queue of ready processes is appropriate. But if we want SJF (shortest job first), then we want to extract the ready process that has the smallest remaining time. Hence a FIFO queue is not appropriate.

For a non-computer example, consider managing your todo list. When you get another item to add, you decide on its importance (priority) and then insert the item into the todo list. When it comes time to perform an item, you want to remove the highest priority item. Again the behavior is not FIFO.

To return items in order, we must know when one item is less than another. For real numbers this is of course obvious.

We assume that each item has a key on which the priority is to be based. For the SJF example given above, the key is the time remaining. For the todo example, the key is the importance.

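We assume the existence of an order relation (often called a total order) written ≤ under which any two keys are comparable and which satisfies, for all keys s, t, and u: reflexivity (s≤s), antisymmetry (if s≤t and t≤s, then s=t), and transitivity (if s≤t and t≤u, then s≤u).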

Remark: For the complex numbers no such ordering exists that extends the natural ordering on the reals and imaginaries. This is unofficial (not part of 310).

Is it OK to define s≤t for all s and t?

No. That would not be antisymmetric.

Definition: A priority queue is a container of elements each of which has an associated key supporting the following methods.

Users may choose different comparison functions for the same data. For example, if the keys are (longitude, latitude) pairs, one user may be interested in comparing longitudes and another latitudes. So we consider a general comparator containing methods.
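As a rough illustration of the longitude/latitude example, here is a minimal Python sketch. The function names `compare_longitude` and `compare_latitude` and the sample points are made up for this example; `functools.cmp_to_key` is the standard-library adapter that lets a three-way comparison function drive `sorted`.

```python
from functools import cmp_to_key

# Each key is a hypothetical (longitude, latitude) pair.

def compare_longitude(a, b):
    """Return a negative, zero, or positive number as a's longitude is <, ==, > b's."""
    return (a[0] > b[0]) - (a[0] < b[0])

def compare_latitude(a, b):
    """Same idea, but the comparison looks only at the latitude."""
    return (a[1] > b[1]) - (a[1] < b[1])

points = [(2.35, 48.9), (139.7, 35.7), (-74.0, 40.7)]

# The same data ordered under two different comparators.
west_to_east = sorted(points, key=cmp_to_key(compare_longitude))
south_to_north = sorted(points, key=cmp_to_key(compare_latitude))
```

The data never changes; only the comparator handed to the sort (or to a priority queue) decides which item counts as smallest.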

Given a priority queue it is trivial to sort a collection of elements. Just insert them and then do removeMin to get them in order. Written formally this is

Algorithm PQ-Sort(C,P)

Input: an n element sequence C and an empty priority queue P

Output: C with the elements sorted

while not C.isEmpty() do

e←C.removeFirst()

P.insertItem(e,e) // We are sorting on the element itself.

while not P.isEmpty() do

C.insertLast(P.removeMin())
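The pseudocode above can be sketched in Python as follows. This is only an illustration: the class `HeapqPQ` and its method names are hypothetical, the sequence C is modeled by a `collections.deque`, and the standard `heapq` module serves as a stand-in for whatever priority queue implementation is plugged in.

```python
import heapq
from collections import deque

class HeapqPQ:
    """Stand-in priority queue (hypothetical name) built on the standard heapq module."""

    def __init__(self):
        self._items = []
        self._count = 0  # tie-breaker, so elements with equal keys are never compared

    def is_empty(self):
        return not self._items

    def insert_item(self, key, element):
        heapq.heappush(self._items, (key, self._count, element))
        self._count += 1

    def remove_min(self):
        _key, _order, element = heapq.heappop(self._items)
        return element

def pq_sort(c, p):
    """Sort the deque c in place, using the initially empty priority queue p."""
    while c:                      # while not C.isEmpty() do
        e = c.popleft()           #   e <- C.removeFirst()
        p.insert_item(e, e)       #   we are sorting on the element itself
    while not p.is_empty():       # while not P.isEmpty() do
        c.append(p.remove_min())  #   C.insertLast(P.removeMin())
    return c

c = deque([5, 2, 9, 1, 5])
pq_sort(c, HeapqPQ())
```

Any object offering `is_empty`, `insert_item`, and `remove_min` could be passed as `p`; the sort itself does not care how the priority queue is implemented.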

So whenever we give an implementation of a priority queue, we are also giving a sorting algorithm. Two obvious implementations of a priority queue give well-known (but slow) sorts. A non-obvious implementation gives a fast sort. We begin with the obvious.

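The first obvious implementation stores the items in an unsorted list, with insertItem() simply adding the new item at the end. So insertItem() takes Θ(1) time, and hence it takes Θ(n) to insert all n items of C. But removeMin() requires that we go through the entire list, which takes Θ(k) time when there are k items in the list. Hence removing all the items requires Θ(n+(n-1)+...+1) = Θ(n²) time.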

This sorting algorithm is normally called selection sort since the dominant step is selecting the minimum each time.
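A minimal Python sketch of this idea, assuming the priority queue is backed by a plain Python list; the names `UnsortedListPQ` and `selection_sort` are illustrative only.

```python
class UnsortedListPQ:
    """Priority queue kept as an unsorted list of (key, element) pairs."""

    def __init__(self):
        self._items = []

    def is_empty(self):
        return len(self._items) == 0

    def insert_item(self, key, element):
        # Constant (amortized) time: no ordering is maintained.
        self._items.append((key, element))

    def remove_min(self):
        # Linear time: scan all k stored items to select the smallest key.
        best = 0
        for i in range(1, len(self._items)):
            if self._items[i][0] < self._items[best][0]:
                best = i
        return self._items.pop(best)[1]

def selection_sort(values):
    """PQ-Sort specialized to the unsorted-list priority queue."""
    pq = UnsortedListPQ()
    for v in values:
        pq.insert_item(v, v)
    result = []
    while not pq.is_empty():
        result.append(pq.remove_min())
    return result
```

All the work happens in `remove_min`, which is the "select the minimum" step that gives the sort its name.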

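The second obvious implementation keeps the list sorted by key. Now removeMin() is trivial since it is just removeFirst(). But insertItem() is Θ(k) when there are k items already in the priority queue, since you must step through to find the correct location to insert and then slide the remaining elements over. Hence inserting all n items requires Θ(0+1+...+(n-1)) = Θ(n²) time.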

This sorting algorithm is normally called insertion sort since the dominant effort is inserting each element.
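A matching Python sketch for the sorted-list version, again with illustrative names (`SortedListPQ`, `insertion_sort`). One detail is an assumption of this sketch rather than part of the notes: the Python list is kept in decreasing key order, so the "first" item of the priority queue sits at the end of the list, where removal is cheap.

```python
class SortedListPQ:
    """Priority queue kept as a list of (key, element) pairs in decreasing key order.

    The minimum is therefore the last entry of the Python list.
    """

    def __init__(self):
        self._items = []

    def is_empty(self):
        return len(self._items) == 0

    def insert_item(self, key, element):
        # Linear time: step through to find the slot, then list.insert slides
        # the remaining entries over.
        i = 0
        while i < len(self._items) and self._items[i][0] > key:
            i += 1
        self._items.insert(i, (key, element))

    def remove_min(self):
        # Constant time: the smallest key is already in position.
        return self._items.pop()[1]

def insertion_sort(values):
    """PQ-Sort specialized to the sorted-list priority queue."""
    pq = SortedListPQ()
    for v in values:
        pq.insert_item(v, v)
    result = []
    while not pq.is_empty():
        result.append(pq.remove_min())
    return result
```

Here the costs are reversed compared with the unsorted list: `insert_item` does the linear work and `remove_min` is trivial.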

We now consider the non-obvious implementation of a priority queue that gives a fast sort (and a fast priority queue). Since the priority queue algorithm will perform steps with complexity Θ(height of tree), we want to keep the height small. The way to do this is to fully use each level.

Definition: A binary tree of height h is complete if the levels 0,...,h-1 contain the maximum number of elements and on level h-1 all the internal nodes are to the left of all the leaves.

Remarks:

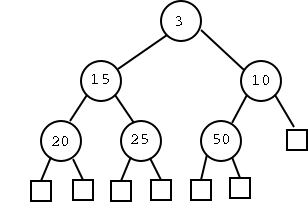

Definition: A tree storing a key at each node satisfies the heap-order property if, for every node v other than the root, the key at v is no smaller than the key at v's parent.

Definition: A heap is a complete binary tree satisfying the heap-order property.

Definition: The last node of a heap is the rightmost internal node in level h-1.

Remark: As written the "last node" is really the last internal node. However, we actually don't use the leaves to store keys, so in some sense "last node" is the last (significant) node.

With a heap it is clear where the minimum is located, namely at the root. We will also keep a reference, last, to the last node since insertions will occur at the first node after last.

Theorem: A heap storing n keys has height ⌈log(n+1)⌉ (logarithm base 2).

Proof:
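One standard counting argument, sketched under the conventions used in the definitions above (keys are stored only at internal nodes and the leaves on the bottom levels are placeholders): in a complete tree of height h, levels 0,...,h-2 consist entirely of internal nodes, level h-1 contains at least one and at most 2^(h-1) internal nodes, and level h contains only leaves. Counting the keys level by level gives

```latex
\begin{align*}
\bigl(2^{h-1}-1\bigr) + 1 \;&\le\; n \;\le\; \bigl(2^{h-1}-1\bigr) + 2^{h-1} \\
2^{h-1} \;&\le\; n \;\le\; 2^{h}-1 \\
2^{h-1} \;&<\; n+1 \;\le\; 2^{h} \\
h-1 \;&<\; \log_2(n+1) \;\le\; h \\
h \;&=\; \lceil \log_2(n+1) \rceil
\end{align*}
```

The last step uses the fact that h is an integer. As a quick check, n=1 gives h=1 and n=4 gives h=3.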

Corollary: If we can implement insert and removeMin in time Θ(height), we will have implemented the priority queue operations in logarithmic time (our goal).

Since we know that a heap is complete, it is efficient to use the vector representation of a binary tree. We can actually not bother with the leaves since we don't ever use them. We call the last node w (remember that is the last internal node). Its index in the vector representation is n, the number of keys in the heap. We call the first leaf z; its index is n+1. Node z is where we will insert a new element and is called the insertion position.
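To make the index arithmetic concrete, here is a small Python sketch of the vector representation. It assumes the usual convention that the root is at index 1 and the children of index i are at 2i and 2i+1; slot 0 of the Python list is left unused, and the helper names and sample keys are made up for illustration.

```python
def parent(i):
    return i // 2

def left_child(i):
    return 2 * i

def right_child(i):
    return 2 * i + 1

def satisfies_heap_order(keys):
    """Check the heap-order property for keys[1..n] (keys[0] is unused)."""
    n = len(keys) - 1
    # Every node other than the root must be no smaller than its parent.
    return all(keys[parent(i)] <= keys[i] for i in range(2, n + 1))

heap = [None, 4, 5, 6, 15, 9, 7, 20]   # sample keys, stored level by level

n = len(heap) - 1            # number of keys; the last node w has index n
insertion_position = n + 1   # index of z, the first leaf
```

Because the tree is complete, the keys occupy indices 1 through n with no gaps, which is why w and z can be located from n alone.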

Insertion looks trivial. Since we know n, we can find n+1 and hence the reference to node z in O(1) time. But there is a problem: the result might not be a heap since the new key inserted at z might be less than the key stored at u, the parent of z. Reminiscent of bubble sort, we need to bubble the value in z up to the correct location.
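The bubbling step might look like the following Python sketch. It assumes the vector representation with the root at index 1 (slot 0 unused) and stores bare keys only; the function name `heap_insert` is illustrative.

```python
def heap_insert(keys, key):
    """Insert key into the heap stored in keys[1..n], restoring heap order."""
    keys.append(key)           # place the new key at the insertion position z = n+1
    z = len(keys) - 1
    # Bubble up: swap with the parent u while the new key is smaller than u's key.
    while z > 1 and keys[z] < keys[z // 2]:
        u = z // 2
        keys[z], keys[u] = keys[u], keys[z]
        z = u

heap = [None, 4, 5, 6, 15, 9, 7, 20]
heap_insert(heap, 2)
# heap is now [None, 2, 4, 6, 5, 9, 7, 20, 15]
```

Each swap moves the new key up one level, so the loop runs at most as many times as the height of the tree.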