Remark: I should have mentioned last time the corollary that the number of nodes in a (proper) binary tree is odd.

Definition: A binary tree is fully complete if all the leaves are at the same (maximum) depth. This is the same as saying that the sibling of a leaf is a leaf.

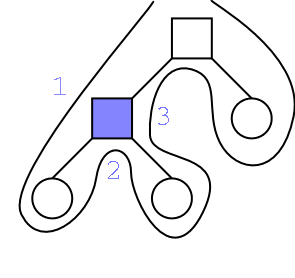

The Euler tour traversal generalizes the above. Visit each node three times: first when ``going left'', then when ``going right'', then when ``going up''. Perhaps the words should be ``going to go left'', ``going to go right'' and ``going to go up''. These words work for internal nodes. For a leaf you just visit it three times in a row (or you could put in code to visit a leaf only once; I don't do this). It is called an Euler Tour traversal because an Euler tour of a graph is a way of drawing each edge exactly once without taking your pen off the paper. The Euler tour traversal would draw each edge twice, but if you add in the parent pointers, each edge is drawn once.

The book uses ``on the left'', ``from below'', ``on the right''. I prefer my names, but you may use either.

    Algorithm eulerTour(T,v):
        visit v going left
        if T.isInternal(v) then
            eulerTour(T,T.leftChild(v))
        visit v going right
        if T.isInternal(v) then
            eulerTour(T,T.rightChild(v))
        visit v going up

    Algorithm eulerTour(T):
        eulerTour(T,T.root())

Pre- post- and in-order traversals are special cases where two of the three visits are dropped.
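To make the "special cases" remark concrete, here is a minimal Python sketch of the Euler tour, assuming a proper binary tree. The names `Node`, `euler_tour` and `traversal_orders` are my own for illustration and are not the book's interface. Each of the three classical orders is obtained by keeping one visit and making the other two do nothing.

```python
class Node:
    """A node of a proper binary tree: a leaf, or an internal node with two children."""

    def __init__(self, element, left=None, right=None):
        self.element = element
        self.left = left
        self.right = right

    def is_internal(self):
        # In a proper tree a node with a left child also has a right child.
        return self.left is not None


def euler_tour(v, going_left, going_right, going_up):
    """Visit v three times, recursing into its children between the visits."""
    going_left(v)
    if v.is_internal():
        euler_tour(v.left, going_left, going_right, going_up)
    going_right(v)
    if v.is_internal():
        euler_tour(v.right, going_left, going_right, going_up)
    going_up(v)


def traversal_orders(root):
    """Return (preorder, inorder, postorder) lists, each from a tour with two visits dropped."""
    def skip(v):
        pass

    pre, ino, post = [], [], []
    euler_tour(root, lambda v: pre.append(v.element), skip, skip)
    euler_tour(root, skip, lambda v: ino.append(v.element), skip)
    euler_tour(root, skip, skip, lambda v: post.append(v.element))
    return pre, ino, post


# Example tree (hypothetical):   a
#                               / \
#                              b   c
#                             / \
#                            d   e
example = Node("a", Node("b", Node("d"), Node("e")), Node("c"))
```

Calling `traversal_orders(example)` returns the three orderings of the same five elements; only the choice of which visit is kept differs.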

It is quite useful to have these three visits. For example, here is a nifty algorithm to print an expression tree with parentheses to indicate the order of the operations. We just give the three visits.

    Algorithm visitGoingLeft(v):
        if T.isInternal(v) then
            print "("

    Algorithm visitGoingRight(v):
        print v.element()

    Algorithm visitGoingUp(v):
        if T.isInternal(v) then
            print ")"
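The three visits can be tried out with a small Python sketch. It is only an illustration under my own assumptions: the `Node` class and the function `expression_string` are hypothetical names, the tree is assumed proper, and the output is collected into a string rather than printed.

```python
class Node:
    """Expression-tree node: leaves hold values, internal nodes hold operators."""

    def __init__(self, element, left=None, right=None):
        self.element = element
        self.left = left
        self.right = right

    def is_internal(self):
        return self.left is not None


def expression_string(root):
    """Run an Euler tour over the tree using the three visits given above."""
    out = []

    def visit_going_left(v):
        if v.is_internal():
            out.append("(")

    def visit_going_right(v):
        out.append(str(v.element))

    def visit_going_up(v):
        if v.is_internal():
            out.append(")")

    def tour(v):
        visit_going_left(v)
        if v.is_internal():
            tour(v.left)
        visit_going_right(v)
        if v.is_internal():
            tour(v.right)
        visit_going_up(v)

    tour(root)
    return "".join(out)


# The expression (3+4)*5 as a tree; the root holds the operator "*".
tree = Node("*", Node("+", Node(3), Node(4)), Node(5))
print(expression_string(tree))  # prints ((3+4)*5)
```

Note that a leaf is still visited three times, but only the middle visit produces output, so leaves appear without parentheses.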

Homework: Plug these into the Euler Tour and show that what you get is the same as

    Algorithm printExpression(T,v):
        Input: T an expression tree, v a node in T.
        if T.isLeaf(v) then
            print v.element()    // for a leaf the element is a value
        else
            print "("
            printExpression(T,T.leftChild(v))
            print v.element()    // for an internal node the element is an operator
            printExpression(T,T.rightChild(v))
            print ")"

    Algorithm printExpression(T):
        printExpression(T,T.root())

Problem Set 2 problem 2. We have seen that traversals have complexity Θ(N), where N is the number of nodes in the tree. But we didn't count the costs of the visit()s themselves since the user writes that code. We know that visit() will be called N times, once per node, for post-, pre-, and in-order traversals and will be called 3N times for Euler tour traversal. So if each visit costs Θ(1), the total visit cost will be Θ(N) and thus does not increase the complexity of a traversal. If each visit costs Θ(N), the total visit cost will be Θ(N²) and hence the total traversal cost will be Θ(N²). The same analysis works for any visit cost provided all the visits cost the same. For this problem we will consider variable-cost visits. In particular, assume that the cost of visiting a node v is the height of v (so the root can be expensive to visit, but leaves are free).

Part A. How many nodes N are in a fully complete binary tree of height h?

Part B. How many nodes are at height i in a fully complete binary tree of height h? What is the total cost of visiting all the nodes at height i?

Part C. Write a formula using Σ (sum) for the total cost of visiting all the nodes. This is very easy given B.

One point extra credit. Show that the sum you wrote in part C is Θ(N).

Part D. Continue to assume the cost of visiting a node equals its height. Describe a class of binary trees for which the total cost of visiting the nodes is Θ(N²). Naturally these will not be fully complete binary trees. Hint: do problem 3.
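If you want to check your answers numerically, the following Python sketch computes the node count and the total visit cost (cost of a node = its height) for any proper binary tree. It is an experimental aid, not a solution: the names `Node`, `full_tree`, `height_and_cost` and `count_nodes` are hypothetical, and the closed forms are still yours to derive.

```python
class Node:
    """A bare node of a proper binary tree (no element needed for counting)."""

    def __init__(self, left=None, right=None):
        self.left = left
        self.right = right


def full_tree(h):
    """Build a fully complete binary tree of height h."""
    if h == 0:
        return Node()
    return Node(full_tree(h - 1), full_tree(h - 1))


def height_and_cost(v):
    """Return (height of v, total cost of visiting every node in v's subtree)."""
    if v.left is None:           # a leaf has height 0 and is free to visit
        return 0, 0
    left_height, left_cost = height_and_cost(v.left)
    right_height, right_cost = height_and_cost(v.right)
    height = 1 + max(left_height, right_height)
    return height, height + left_cost + right_cost


def count_nodes(v):
    """Return the number of nodes in the subtree rooted at v."""
    if v is None:
        return 0
    return 1 + count_nodes(v.left) + count_nodes(v.right)


# Tabulate N and the total visit cost for a few small heights.
for h in range(6):
    tree = full_tree(h)
    print(h, count_nodes(tree), height_and_cost(tree)[1])
```

Since `height_and_cost` works on any proper binary tree, you can also build the lopsided trees of Part D by hand and compare how the cost grows with N.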

We store each node as an element of a vector. The root is stored in element 1 of the vector, and the key idea is that the two children of the node at rank r are stored in the elements at rank 2r and 2r+1.

Draw a fully complete binary tree of height 3 and show where each element is stored.

Draw an incomplete binary tree of height 3 and show where each element is stored and that there are gaps.

There must be a way to tell leaves from internal nodes. The book doesn't make this explicit. Here is an explicit example. Let the vector S be given. With a vector we have the current size. S[0] is not used. S[1] has a pointer to the root node (or contains the root node if you prefer). For each i, S[i] is null (a special value) if the corresponding node doesn't exist. Then to see if the node v at rank i is a leaf, look at 2i. If 2i exceeds S.size() then v is a leaf since it has no children. Similarly if S[2i] is null, v is a leaf. Otherwise v is internal.

How do you know that if S[2i] is null, then S[2i+1] will be null?

Ans: Our binary trees are proper, so every node has either two children or none.
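The rank arithmetic and the leaf test can be sketched in Python as follows. This is an illustration under my own assumptions, not the book's code: `VectorBinaryTree` and its methods are hypothetical names, a Python list stands in for the vector, `None` plays the role of null (so elements themselves must not be `None`), and the size test is written as `2*i >= len(S)` because `len(S)` counts the unused slot 0.

```python
class VectorBinaryTree:
    """Proper binary tree stored by rank: the children of rank r sit at 2r and 2r+1."""

    def __init__(self, root_element):
        # S[0] is never used; S[1] holds the root.
        self.S = [None, root_element]

    def is_leaf(self, i):
        # No slot for a left child, or an empty slot, means the node has no children.
        return 2 * i >= len(self.S) or self.S[2 * i] is None

    def expand_leaf(self, i, left_element, right_element):
        """Give the leaf at rank i two children, growing the vector if needed."""
        if i >= len(self.S) or self.S[i] is None or not self.is_leaf(i):
            raise ValueError("rank %d is not an existing leaf" % i)
        while len(self.S) <= 2 * i + 1:
            self.S.append(None)          # these slots may remain as gaps
        self.S[2 * i] = left_element
        self.S[2 * i + 1] = right_element

    @staticmethod
    def left_child(i):
        return 2 * i

    @staticmethod
    def right_child(i):
        return 2 * i + 1

    @staticmethod
    def parent(i):
        return i // 2


# A small incomplete tree: the root's right child is expanded, the left is not.
t = VectorBinaryTree("a")
t.expand_leaf(1, "b", "c")
t.expand_leaf(3, "f", "g")
print(t.S)  # prints [None, 'a', 'b', 'c', None, None, 'f', 'g']
```

Ranks 4 and 5 are gaps: they would hold the children of "b", which is a leaf. Every navigation step is a constant-time index computation.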

This implementation is very fast. Indeed all tree operations are O(1) except for positions() and elements(), which produce n results and take time Θ(n).

Homework: R-2.7

However, this implementation can waste a lot of space since many of the entries in S might be unused. That is, there may be many i for which S[i] is null.

Problem Set 2 problem 3. Give a tree with fewer than 20 nodes for which S.size() exceeds 100. Give a tree with fewer than 25 nodes for which S.size() exceeds 1000. Give a tree with fewer than 100 nodes for which S.size() exceeds a million.

Represent each node by a quadruple: the element together with references to the parent, the left child and the right child.

Once again the algorithms are all O(1) except for positions() and elements(), which are Θ(n).

The space is Θ(n), which is much better than for the vector implementation. The constant is larger, however, since three pointers are stored for each position rather than one index.
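A minimal Python sketch of the linked structure follows. It assumes the quadruple consists of the element plus parent, left-child and right-child references (consistent with the three pointers mentioned above); the class and function names are hypothetical and chosen only for illustration.

```python
class Node:
    """One tree position stored as a quadruple (element, parent, left, right)."""

    def __init__(self, element, parent=None):
        self.element = element
        self.parent = parent
        self.left = None
        self.right = None


def expand(v, left_element, right_element):
    """Turn the leaf v into an internal node with two new leaf children."""
    v.left = Node(left_element, v)
    v.right = Node(right_element, v)


def is_root(v):
    return v.parent is None


def is_internal(v):
    return v.left is not None


def sibling(v):
    """Return the other child of v's parent; the root has no sibling."""
    if is_root(v):
        raise ValueError("the root has no sibling")
    p = v.parent
    return p.right if p.left is v else p.left


# Build the expression tree for 1+2.
root = Node("+")
expand(root, 1, 2)
```

Each operation follows a fixed number of references, which is why these are all O(1), and each node costs a constant amount of space regardless of the shape of the tree.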

The only difference is that we don't know how many children each node has. We could store k child pointers and say that we cannot process a tree having more than k children with the same parent.

Clearly we don't like this limit. Moreover, if we choose a moderate k, say k=10, we are limited to 10-ary trees, and for 3-ary trees most of the space is wasted.

So instead of storing the child references in the node, we store just one reference to a container. The container has references to the children. Imagine implementing the container as an extendable array.

Since a node v contains an arbitrary number of children, say C_v, the complexity of the children(v) iterator is Θ(C_v).
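Here is a rough Python sketch of a general-tree node that holds one reference to a container of children. The names `TreeNode`, `add_child` and `children` are my own, and a Python list is used as the extendable array; treat it as one possible layout rather than the book's implementation.

```python
class TreeNode:
    """General-tree node: element, parent, and ONE reference to a child container."""

    def __init__(self, element, parent=None):
        self.element = element
        self.parent = parent
        self.child_container = []   # a Python list acts as the extendable array

    def add_child(self, element):
        """Append a new child; there is no fixed limit k on the number of children."""
        child = TreeNode(element, self)
        self.child_container.append(child)
        return child

    def children(self):
        """Iterate over the children; a full pass takes time proportional to their number."""
        return iter(self.child_container)


# A root with three children, one of which has a single child of its own.
root = TreeNode("root")
a = root.add_child("a")
b = root.add_child("b")
c = root.add_child("c")
b.add_child("b1")

print([child.element for child in root.children()])  # prints ['a', 'b', 'c']
```

A node with few children wastes little space, and a node with many children is not limited by a preset k.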