================ Start Lecture #10

================

Theoretical Issues

Considerable theory has been developed.

- NP-completeness results abound.

- Much work in queuing theory to predict performance.

- Not covered in this course.

Medium-Term Scheduling

In addition to the short-term scheduling we have discussed, we add medium-term scheduling, in which decisions are made at a coarser time scale.

- Called memory scheduling by Tanenbaum (part of three-level scheduling).

- Suspend (swap out) some process if memory is over-committed.

- Criteria for choosing a victim:

  - How long since previously suspended.

  - How much CPU time used recently.

  - How much memory does it use.

  - External priority (pay more, get swapped out less).

- We will discuss medium-term scheduling again when we study memory management.

Long-Term Scheduling

- "Job scheduling". Decide when to start jobs, i.e., do not necessarily start them when submitted.

- Force user to log out and/or block logins if over-committed.

- CTSS (an early time sharing system at MIT) did this to ensure decent interactive response time.

- Unix does this if out of processes (i.e., out of process table entries, PTEs).

- "LEM jobs during the day" (Grumman).

- Called admission scheduling by Tanenbaum (part of three-level scheduling).

- Many supercomputer sites.

2.5.4: Scheduling in Real Time Systems

Skipped.

2.5.5: Policy versus Mechanism

Skipped.

2.5.6: Thread Scheduling

Skipped.

Research on Processes and Threads

Skipped.

Note on Date of Midterm (vote)

The midterm will cover chapters 0-3 of the notes, i.e., linkers and Tanenbaum chapters 1-3. We will finish chapter 3 a week from today, and the exam must be given before the break so that it can be graded and returned by the deadline.

Thus the only two possibilities are Tuesday 5 March and Thursday 7 March. The exam would be the same on either day. I will be in on Wednesday afternoon, 6 March.

The exam is closed book and closed notes. I will hand out some sample questions or a review sheet on Tuesday.

The vote was held and the winner was Thursday 7 March.

End of Note

Notes on lab (scheduling)

- If several processes are waiting on I/O, you may assume noninterference. For example, assume that on cycle 100 process A flips a coin and decides its wait is 6 units (i.e., during cycles 101-106 A will be blocked). Assume B begins running at cycle 101 for a burst of 1 cycle, so during cycle 101 process B flips a coin and decides its wait is 3 units. You do NOT alter process A. That is, process A becomes ready after cycle 106 (100+6), so it enters the ready list at cycle 107, and process B becomes ready after cycle 104 (101+3) and enters the ready list at cycle 105.

- For processor sharing (PS), which is part of the extra credit: every cycle you see how many jobs are ready (or running). Say there are 7. Then during this cycle (an exception will be described below) each process gets 1/7 of a cycle.

EXCEPTION: Assume there are exactly 2 ready jobs, one needs 1/3 cycle and one needs 1/2 cycle. The process needing only 1/3 gets only 1/3, i.e., it is finished after 2/3 cycle (it has been receiving the CPU at half speed). So the other process gets 1/3 cycle during the first 2/3 cycle and then starts to get all the CPU. It still needs 1/2 - 1/3 = 1/6 cycle, hence it finishes after 2/3 + 1/6 = 5/6 cycle. The last 1/6 cycle is not used by any process.
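The PS rule and its exception can be checked with a small simulation of a single cycle. This is only a sketch under assumptions: the function name `share_one_cycle` and the dictionary-based interface are made up for illustration and are not part of the lab specification. Exact fractions are used so that 1/3 and 1/6 do not pick up rounding error.

```python
from fractions import Fraction

def share_one_cycle(needs):
    """Simulate one cycle of processor sharing (hypothetical helper).

    needs maps a process name to the CPU time it still needs, in cycles.
    Returns (finish_times, remaining, idle): when each finished process
    completed within the cycle, what the unfinished ones still need,
    and how much of the cycle went unused.
    """
    remaining = {p: Fraction(n) for p, n in needs.items() if n > 0}
    finish_times = {}
    now = Fraction(0)
    while remaining and now < 1:
        k = len(remaining)                   # each process runs at rate 1/k
        smallest = min(remaining.values())
        # Wall-clock time until the smallest job ends, capped at the cycle end.
        step = min(smallest * k, 1 - now)
        given = step / k                     # CPU time each process receives
        now += step
        for p in list(remaining):
            remaining[p] -= given
            if remaining[p] == 0:
                finish_times[p] = now
                del remaining[p]
    idle = 1 - now if not remaining else Fraction(0)
    return finish_times, remaining, idle

finish, left, idle = share_one_cycle({"A": Fraction(1, 3), "B": Fraction(1, 2)})
print(finish, left, idle)
```

With the two jobs from the exception, A finishes at 2/3, B at 5/6, and 1/6 of the cycle is idle. With 7 jobs that each need a full cycle or more, every job simply receives 1/7.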

End of Notes

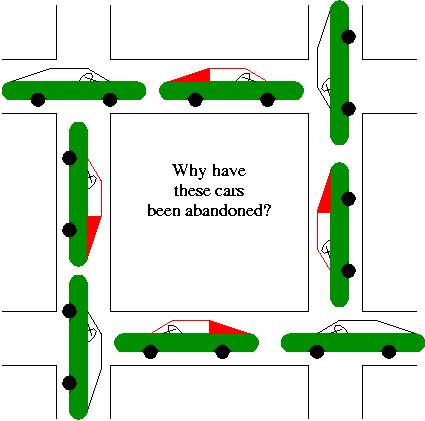

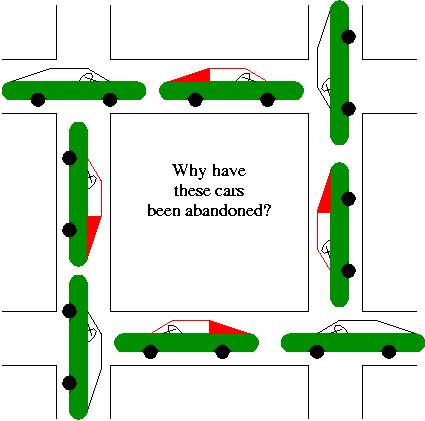

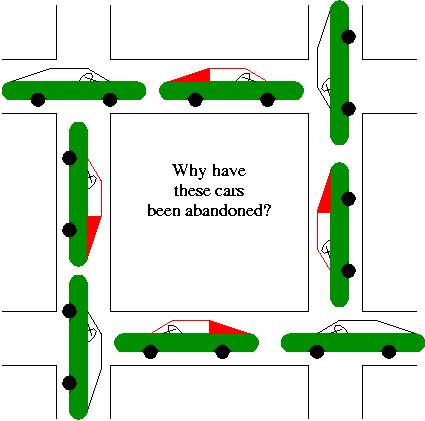

Chapter 3: Deadlocks

A deadlock occurs when every member of a set of processes is waiting for an event that can only be caused by a member of the set.

Often the event waited for is the release of a resource.

In the automotive world deadlocks are called gridlocks.

- The processes are the cars.

- The resources are the spaces occupied by the cars.

Reward: One point extra credit on the final exam

for anyone who brings a real (e.g., newspaper) picture of an

automotive deadlock. You must bring the clipping to the final and it

must be in good condition. Hand it in with your exam paper.

Note that it must really be a gridlock, i.e., motion is not possible

without breaking the traffic rules. A huge traffic jam is not

sufficient.

For a computer science example consider two processes A and B that

each want to print a file currently on tape.

- A has obtained ownership of the printer and will release it after

printing one file.

- B has obtained ownership of the tape drive and will release it after

reading one file.

- A tries to get ownership of the tape drive, but is told to wait

for B to release it.

- B tries to get ownership of the printer, but is told to wait for

A to release the printer.

Bingo: deadlock!

3.1: Resources

The resource is the object granted to a process.

3.1.1: Preemptable and Nonpreemptable Resources

- Resources come in two types:

  - Preemptable, meaning that the resource can be taken away from its current owner (and given back later). An example is memory.

  - Non-preemptable, meaning that the resource cannot be taken away. An example is a printer.

- The interesting issues arise with non-preemptable resources so

those are the ones we study.

- The life history of a resource is a sequence of

  - Request

  - Allocate

  - Use

  - Release

- Processes make requests, use the resource, and release the resource. The allocate decisions are made by the system and we will study policies used to make these decisions.

3.1.2: Resource Acquisition

Simple example of the trouble you can get into.

- Two resources and two processes.

- Each process wants both resources.

- Use a semaphore for each. Call them S and T.

- If both processes execute P(S); P(T); ... V(T); V(S), all is well.

- But if one executes instead P(T); P(S); ... V(S); V(T), disaster! This was the printer/tape example just above.
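The safe ordering can be tried out with Python's `threading.Semaphore`, where `acquire` plays the role of P and `release` the role of V. This is a minimal sketch, not the course's code: `worker` and `run_same_order` are hypothetical names, and only the consistent ordering is actually run, since the mixed ordering may or may not hang depending on how the threads happen to be scheduled.

```python
import threading

S = threading.Semaphore(1)   # guards the first resource
T = threading.Semaphore(1)   # guards the second resource

def worker(first, second, rounds, counter):
    """Repeatedly acquire both semaphores in the given order, then release."""
    for _ in range(rounds):
        first.acquire()       # P(first)
        second.acquire()      # P(second)
        counter[0] += 1       # "use" both resources
        second.release()      # V(second)
        first.release()       # V(first)

def run_same_order(rounds=1000):
    """Both threads use the order S then T, so neither can hold T while waiting for S."""
    counter = [0]
    threads = [
        threading.Thread(target=worker, args=(S, T, rounds, counter), daemon=True)
        for _ in range(2)
    ]
    for t in threads:
        t.start()
    for t in threads:
        t.join(timeout=10)
    finished = all(not t.is_alive() for t in threads)
    return finished, counter[0]

print(run_same_order())
```

Passing `(T, S, ...)` to one of the two workers reproduces the bad ordering: if each thread gets its first semaphore before the other gets its second, both block forever.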

Recall from the semaphore/critical-section treatment in the last chapter that it is easy to cause trouble if a process dies or stays forever inside its critical section.

Similarly, we assume that no process holds a resource forever. It may obtain the resource an unbounded number of times (i.e., it can have a loop forever with a resource request inside), but each time it gets the resource, it must release it eventually.

3.2: Introduction to Deadlocks

To repeat: A deadlock occurs when every member of a set of processes is waiting for an event that can only be caused by a member of the set.

Often the event waited for is the release of

a resource.

3.2.1: (Necessary) Conditions for Deadlock

The following four conditions (Coffman; Havender) are

necessary but not sufficient for deadlock. Repeat:

They are not sufficient.

- Mutual exclusion: A resource can be assigned to at most one

process at a time (no sharing).

- Hold and wait: A process holding a resource is permitted to request another.

- No preemption: A process must release its resources; they cannot

be taken away.

- Circular wait: There must be a circular chain of processes such that each member of the chain is waiting for a resource held by the next member of the chain (and the last member is waiting for a resource held by the first).

The first three are characteristics of the system and resources. For a given system with a fixed set of resources they are either true or false, i.e., they don't change with time. The truth or falsehood of the last condition does indeed change with time as the resources are requested/allocated/released.

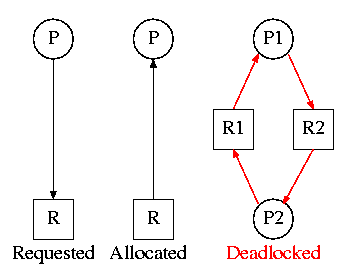

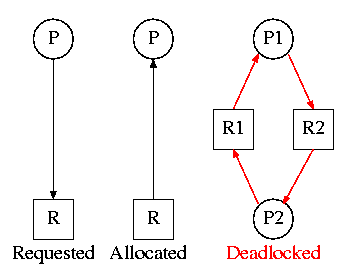

3.2.2: Deadlock Modeling

On the right is the Resource Allocation Graph,

also called the Reusable Resource Graph.

- The processes are circles.

- The resources are squares.

- An arc (directed line) from a process P to a resource R signifies

that process P has requested (but not yet been allocated) resource R.

- An arc from a resource R to a process P indicates that process P

has been allocated resource R.

Homework: 5.

Consider two concurrent processes P1 and P2 whose programs are:

    P1: request R1        P2: request R2
        request R2            request R1
        release R2            release R1
        release R1            release R2

On the board draw the resource allocation graph for various possible

executions of the processes, indicating when deadlock occurs and when

deadlock is no longer avoidable.
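The board exercise can also be explored mechanically by enumerating every interleaving of P1 and P2. The sketch below rests on assumptions of its own: each request is split into a "req" step (the request arc appears) and a "get" step (the system converts it to an allocation arc), the system grants a request whenever the resource is free, and a state is just the pair of program counters. All names are hypothetical.

```python
P1 = [("req", "R1"), ("get", "R1"), ("req", "R2"), ("get", "R2"),
      ("rel", "R2"), ("rel", "R1")]
P2 = [("req", "R2"), ("get", "R2"), ("req", "R1"), ("get", "R1"),
      ("rel", "R1"), ("rel", "R2")]
PROGRAMS = (P1, P2)

def held(program, pc):
    """Resources owned by a process that has completed its first pc steps."""
    owned = set()
    for op, res in program[:pc]:
        if op == "get":
            owned.add(res)
        elif op == "rel":
            owned.discard(res)
    return owned

def successors(state):
    """States reachable in one step; a 'get' is blocked while the other process owns the resource."""
    out = []
    for i in (0, 1):
        prog, pc = PROGRAMS[i], state[i]
        if pc == len(prog):
            continue                      # this process has finished
        op, res = prog[pc]
        if op == "get" and res in held(PROGRAMS[1 - i], state[1 - i]):
            continue                      # must wait
        nxt = list(state)
        nxt[i] += 1
        out.append(tuple(nxt))
    return out

def explore():
    start, done = (0, 0), (len(P1), len(P2))
    reachable, stack = set(), [start]
    while stack:                          # depth-first search of the state space
        s = stack.pop()
        if s not in reachable:
            reachable.add(s)
            stack.extend(successors(s))
    can_finish = {done}                   # grow backwards from the final state
    changed = True
    while changed:
        changed = False
        for s in reachable - can_finish:
            if any(n in can_finish for n in successors(s)):
                can_finish.add(s)
                changed = True
    deadlocked = {s for s in reachable if s != done and not successors(s)}
    doomed = reachable - can_finish - deadlocked
    return deadlocked, doomed

deadlocked, doomed = explore()
print("deadlocked:", sorted(deadlocked))
print("deadlock unavoidable but not yet reached:", sorted(doomed))
```

Under these assumptions the only deadlocked state is (3, 3), where each process owns its first resource and has an outstanding request for the other. Deadlock is already unavoidable at (2, 2), (2, 3), and (3, 2), i.e., as soon as P1 owns R1 and P2 owns R2.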

There are four strategies used for dealing with deadlocks.

- Ignore the problem.

- Detect deadlocks and recover from them.

- Avoid deadlocks by carefully deciding when to allocate resources.

- Prevent deadlocks by violating one of the 4 necessary conditions.

3.3: Ignoring the problem--The Ostrich Algorithm

The "put your head in the sand" approach.

- If the likelihood of a deadlock is sufficiently small and the cost of avoiding a deadlock is sufficiently high, it might be better to ignore the problem. For example, if each PC deadlocks once per 100 years, the one reboot may be less painful than the restrictions needed to prevent it.

- Clearly not a good philosophy for nuclear missile launchers.

- For embedded systems (e.g., missile launchers) the programs run

are fixed in advance so many of the questions Tanenbaum raises (such

as many processes wanting to fork at the same time) don't occur.

3.4: Detecting Deadlocks and Recovering From Them

3.4.1: Detecting Deadlocks with Single Unit Resources

Consider the case in which there is only one

instance of each resource.

- So a request can be satisfied by only one specific resource.

- In this case the 4 necessary conditions for

deadlock are also sufficient.

- Remember we are making an assumption (single unit resources) that is often invalid. For example, many systems have several printers and a request is given for "a printer", not a specific printer. Similarly, one can have many tape drives.

- So the problem comes down to finding a directed cycle in the resource allocation graph. Why?

Answer: Because the other three conditions are either satisfied by the system we are studying or they are not, in which case deadlock is not a question. That is, conditions 1, 2, and 3 are conditions on the system in general, not on what is happening right now.

To find a directed cycle in a directed graph is not hard. The

algorithm is in the book. The idea is simple.

- For each node in the graph do a depth first traversal (hoping the graph is a DAG, i.e., a directed acyclic graph), building a list as you go down the DAG.

- If you ever find the same node twice on your list, you have found

a directed cycle and the graph is not a DAG and deadlock exists among

the processes in your current list.

- If you never find the same node twice, the graph is a DAG and no

deadlock occurs.

- The searches are finite since the list size is bounded by the

number of nodes.
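The idea above can be sketched in a few lines. This is not the book's algorithm verbatim: the adjacency-dictionary representation and the name `find_cycle` are assumptions, and the `finished` set is an added shortcut so that nodes already shown to be cycle-free are not searched again.

```python
def find_cycle(graph):
    """Return the nodes of one directed cycle, or None if the graph is a DAG.

    graph maps each node to the list of nodes its outgoing arcs point to.
    """
    finished = set()   # nodes fully explored without finding a cycle

    def visit(node, path):
        if node in path:
            # Same node twice on the list: the cycle is the tail of the list.
            return path[path.index(node):]
        if node in finished:
            return None
        path.append(node)
        for nxt in graph.get(node, ()):
            cycle = visit(nxt, path)
            if cycle is not None:
                return cycle
        path.pop()
        finished.add(node)
        return None

    for start in graph:
        cycle = visit(start, [])
        if cycle is not None:
            return cycle
    return None

# The printer/tape example: A owns the printer and wants the tape drive,
# B owns the tape drive and wants the printer.
rag = {"A": ["tape"], "tape": ["B"], "B": ["printer"], "printer": ["A"]}
print(find_cycle(rag))
```

For the printer/tape graph the function returns the cycle through A, the tape drive, B, and the printer, so A and B are deadlocked. Removing B's request for the printer leaves a DAG and the function returns None.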

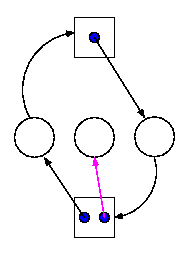

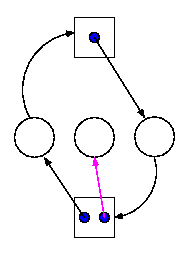

3.4.2: Detecting Deadlocks with Multiple Unit Resources

This is more difficult.

- The figure on the right shows a resource allocation graph with

multiple unit resources.

- Each unit is represented by a dot in the box.

- Request edges are drawn to the box since they represent a request

for any dot in the box.

- Allocation edges are drawn from the dot to represent that this

unit of the resource has been assigned (but all units of a resource

are equivalent and the choice of which one to assign is arbitrary).

- Note that there is a directed cycle in black, but there is no

deadlock. Indeed the middle process might finish, erasing the magenta

arc and permitting the blue dot to satisfy the rightmost process.

- The book gives an algorithm for detecting deadlocks in this more general setting. The idea is as follows.

  1. Look for a process that might be able to terminate (i.e., all its request arcs can be satisfied).

  2. If one is found, pretend that it does terminate (erase all its arcs), and repeat step 1.

  3. When no such process can be found, any processes that remain are deadlocked.

- We will soon do in detail an algorithm (the Banker's algorithm) that

has some of this flavor.
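The detection idea for multiple unit resources can be sketched as follows. The data layout (dictionaries of free units, held units, and outstanding requests) and the name `deadlocked_processes` are assumptions for illustration, and the example system is hypothetical rather than a transcription of the figure.

```python
def deadlocked_processes(available, allocation, request):
    """Return the set of deadlocked processes.

    available:  resource -> number of free units
    allocation: process  -> {resource: units currently held}
    request:    process  -> {resource: units requested but not yet granted}
    """
    free = dict(available)
    remaining = set(allocation)
    progress = True
    while progress:
        progress = False
        for p in sorted(remaining):
            wants = request.get(p, {})
            if all(free.get(r, 0) >= n for r, n in wants.items()):
                # Pretend p terminates: erase its arcs and return its units.
                for r, n in allocation[p].items():
                    free[r] = free.get(r, 0) + n
                remaining.remove(p)
                progress = True
                break          # start the search again from the beginning
    return remaining

# Hypothetical system: R1 has one unit, R2 has two units, none free.
allocation = {"P1": {"R1": 1}, "P2": {"R2": 1}, "P3": {"R2": 1}}
request = {"P1": {"R2": 1}, "P3": {"R1": 1}}      # P2 requests nothing
print(deadlocked_processes({"R1": 0, "R2": 0}, allocation, request))
```

In this example P1 waits for R2 and P3 waits for R1, so the graph contains a directed cycle, yet nothing is deadlocked: P2 can finish and free a unit of R2, after which P1 and then P3 can finish. If P2 also requested R1, no process could terminate and all three would be reported as deadlocked.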