================ Start Lecture #10 ================

Note: Typo on lab 2.

The last input set has a process with a zero value for IO, which is an error. Specifically, you should replace

5 (0 3 200 3) (0 9 500 3) (0 20 500 3) (100 1 100 0) (100 100 500 3)

with

5 (0 3 200 3) (0 9 500 3) (0 20 500 3) (100 1 100 3) (100 100 500 3)

End of Note.

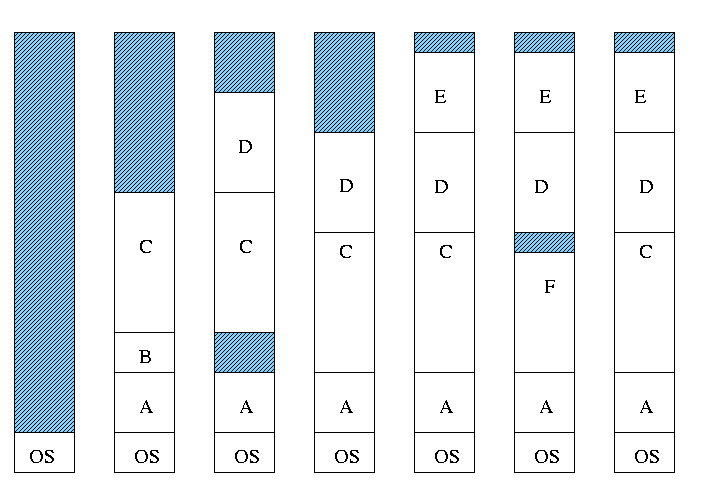

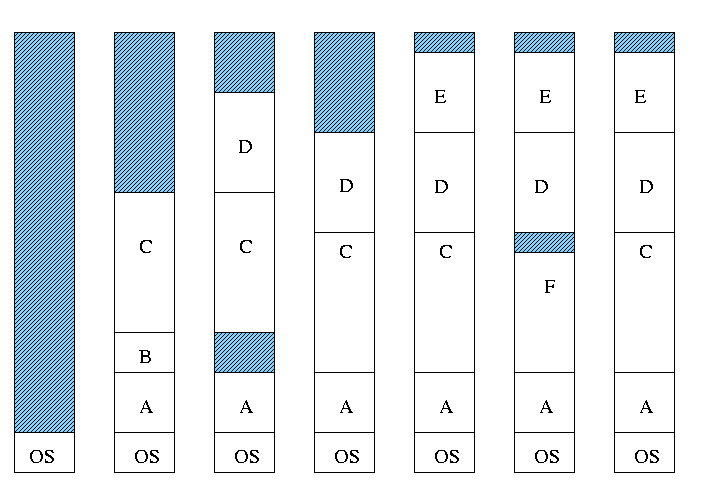

3.2: Swapping

Moving entire processes between disk and memory is called swapping.

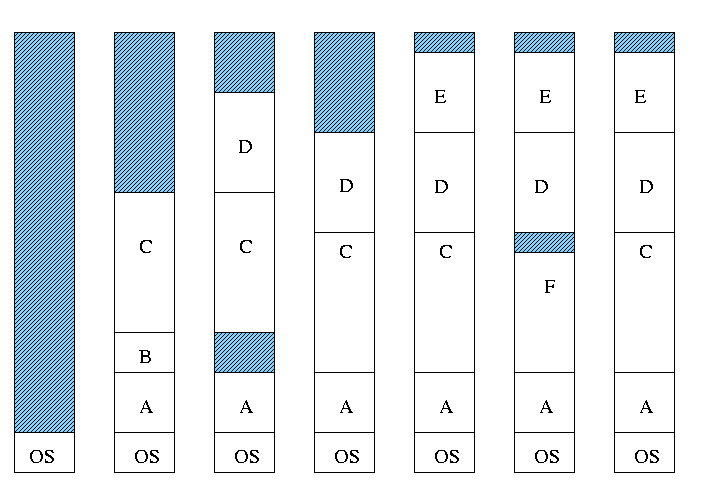

3.2.1: Multiprogramming with variable partitions

- Both the number and size of the partitions change with time.

- IBM OS/MVT (multiprogramming with a varying number of tasks).

- Also early PDP-10 OS.

- A job still has only one segment (as with MFT), but the segment can now be of any size up to the size of the machine, and its size can change with time.

- A single ready list.

- Job can move (might be swapped back in a different place).

- This is dynamic address translation (during run time).

- Must perform an addition on every memory reference (i.e., on every address translation) to add the start address of the partition.

- Called a DAT (dynamic address translation) box by IBM.

- Eliminates internal fragmentation.

- Find a region of exactly the right size (leave a hole for the remainder).

- Not quite true: you can't get a piece with exactly 10A755 bytes; you would get, say, 10A760. But internal fragmentation is much reduced compared to MFT, so we say that internal fragmentation has been eliminated.

- Introduces external fragmentation, i.e., holes outside any region.

- What do you do if no hole is big enough for the request?

- Can compactify

- Transition from bar 3 to bar 4 in diagram below.

- This is expensive.

- Not suitable for real time (MIT ping pong).

- Can swap out one process to bring in another

- Bars 5-6 and 6-7 in diagram
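Compaction can be sketched as sliding every allocated region toward low memory so that all the free space merges into one hole. The following is a minimal Python sketch, not any particular system's implementation. It assumes memory is modeled as a list of `(name, start, length)` tuples; the function name `compact` and the job layout are made up for illustration.

```python
def compact(regions, memory_size):
    """Slide every allocated region down to the lowest free address.

    regions: list of (name, start, length) tuples (hypothetical model).
    Returns (moved_regions, hole), where hole is the single (start, length)
    free area left at the top of memory, or None if memory is full.
    """
    next_free = 0
    moved = []
    for name, _old_start, length in sorted(regions, key=lambda r: r[1]):
        # A real system must copy `length` bytes of the job here,
        # which is why compaction is expensive.
        moved.append((name, next_free, length))
        next_free += length
    hole = (next_free, memory_size - next_free) if next_free < memory_size else None
    return moved, hole


# Hypothetical layout: three jobs separated by holes in a 100-unit memory.
regions = [("A", 0, 20), ("B", 30, 10), ("C", 60, 25)]
moved, hole = compact(regions, 100)
print(moved)  # [('A', 0, 20), ('B', 20, 10), ('C', 30, 25)]
print(hole)   # (55, 45)
```

Every job that moves gets a new start address, so whatever relocation information the hardware uses for that job must be updated as well.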

Homework: 4

- There are more processes than holes. Why?

- Because next to a process there might be a process or a hole, but next to a hole there must be a process (two adjacent holes would simply be one larger hole).

- So we can have ``runs'' of processes but not of holes.

- If a process is equally likely to be followed by a process or by a hole, you get about twice as many processes as holes.

- Base and limit registers are used.

- Storage keys are not good since compactifying would require changing many keys.

- Storage keys might need a fine granularity to permit the boundaries to move by small amounts. Hence many keys would need to be changed.
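The base and limit scheme can be sketched in a few lines: every reference is checked against the limit and then has the base added. This is a minimal Python sketch under stated assumptions: the limit register is taken to hold the size of the partition (some machines hold the highest legal address instead), and the names `translate_base_limit` and `AddressError` are hypothetical.

```python
class AddressError(Exception):
    """Raised when a virtual address falls outside the job's partition."""


def translate_base_limit(virtual_address, base, limit):
    """Map a virtual address to a physical address.

    base:  physical start address of the partition.
    limit: size of the partition (assumed); legal addresses are 0..limit-1.
    """
    if not 0 <= virtual_address < limit:
        raise AddressError(f"address {virtual_address:#x} outside limit {limit:#x}")
    # This addition happens on every memory reference.
    return base + virtual_address


# Hypothetical job of size 0x4000 loaded at 0x10000.
print(hex(translate_base_limit(0x1234, base=0x10000, limit=0x4000)))  # 0x11234

# After a swap or compaction the job may land elsewhere; only base changes.
print(hex(translate_base_limit(0x1234, base=0x28000, limit=0x4000)))  # 0x29234
```

Moving a job changes one register value, in contrast to storage keys, where many keys would need to be rewritten.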

MVT introduces the ``Placement Question'': which hole (partition) to choose?

- Best fit, worst fit, first fit, circular first fit, quick fit, Buddy

- Best fit doesn't waste big holes, but does leave slivers and is expensive to run.

- Worst fit avoids slivers, but eliminates all big holes, so a big job will require compaction. It is even more expensive than best fit (best fit stops if it finds a perfect fit).

- Quick fit keeps lists of some common sizes (but has other problems, see Tanenbaum).

- Buddy system

- Round the request up to the next power of two (causes internal fragmentation).

- Look in the list of blocks of this size (as with quick fit).

- If the list is empty, go higher and split into buddies.

- When returning a block, coalesce it with its buddy.

- Do splitting and coalescing recursively, i.e., keep coalescing until you can't and keep splitting until successful.

- See Tanenbaum for more details (or an algorithms book).

- A current favorite is circular first fit (also known as next fit).

- Use the first hole that is big enough (first fit), but start looking where you left off last time.

- Doesn't waste time constantly trying to use small holes that have failed before, but does tend to use many of the big holes, which can be a problem.

- Buddy comes with its own implementation. How about the others?

- Bit map

- The only question is how much memory one bit represents.

- Big: Serious internal fragmentation

- Small: Many bits to store and process

- Linked list

- Each item on the list says whether it is a hole or a process, and gives its length and starting location.

- The items on the list are not taken from the memory to be used by processes.

- Keep the list in order of starting address.

- Doubly linked.

- Boundary tag

- Knuth

- Use the same memory for list items as for processes.

- Don't need an entry in the linked list for blocks in use; only the available blocks are linked.

- For the blocks currently in use, just need a hole/process bit at each end and the length. Keep this in the block itself.

- See Knuth, The Art of Computer Programming, vol. 1.
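The hole-selection policies above differ only in which fitting hole they pick. Here is a minimal Python sketch, assuming the holes are kept as a list of `(start, length)` pairs in address order. The function names and the hole list are illustrative, not taken from any real allocator. Next fit is expressed as first fit with a starting index.

```python
def first_fit(holes, size, start=0):
    """Index of the first hole of at least `size`, scanning circularly
    from index `start`; None if no hole fits. With start=0 this is plain
    first fit; passing the index where the last search ended gives
    circular first fit (next fit)."""
    n = len(holes)
    for step in range(n):
        i = (start + step) % n
        if holes[i][1] >= size:
            return i
    return None


def best_fit(holes, size):
    """Index of the smallest hole that fits; None if none does."""
    fits = [i for i, (_, length) in enumerate(holes) if length >= size]
    return min(fits, key=lambda i: holes[i][1]) if fits else None


def worst_fit(holes, size):
    """Index of the largest hole, provided it fits; None otherwise."""
    fits = [i for i, (_, length) in enumerate(holes) if length >= size]
    return max(fits, key=lambda i: holes[i][1]) if fits else None


# Hypothetical hole list and request size.
holes = [(0, 50), (100, 200), (400, 60), (600, 500)]
request = 55

print(first_fit(holes, request))           # 1 (the 200-unit hole)
print(best_fit(holes, request))            # 2 (the 60-unit hole, leaving a sliver of 5)
print(worst_fit(holes, request))           # 3 (the 500-unit hole)
print(first_fit(holes, request, start=2))  # 2 (search resumes at index 2)
```

This simple version of best fit and worst fit examines every hole, whereas first fit can stop at the first hole that is big enough.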

Homework: 2, 5.
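The buddy system described earlier can be sketched as follows. This is a minimal Python sketch, not the version in Tanenbaum or Knuth. It assumes the total memory size is a power of two and keeps one set of free start addresses per block size; the class name `BuddyAllocator` and its methods are hypothetical. The buddy of a block of size s at address a is at address a XOR s.

```python
class BuddyAllocator:
    def __init__(self, total_size):
        # Assumption: total_size is a power of two.
        assert total_size > 0 and total_size & (total_size - 1) == 0
        self.total_size = total_size
        self.free = {total_size: {0}}  # block size -> free start addresses
        self.allocated = {}            # start address -> block size

    def alloc(self, request):
        """Return the start address of a block, or None if none is free."""
        size = 1
        while size < request:          # round up to a power of two
            size *= 2
        candidate = size
        while candidate <= self.total_size and not self.free.get(candidate):
            candidate *= 2             # list empty, go higher
        if candidate > self.total_size:
            return None
        start = min(self.free[candidate])
        self.free[candidate].remove(start)
        while candidate > size:        # split, freeing the upper buddy
            candidate //= 2
            self.free.setdefault(candidate, set()).add(start + candidate)
        self.allocated[start] = size
        return start

    def release(self, start):
        """Free a block and coalesce with its buddy as long as possible."""
        size = self.allocated.pop(start)
        while size < self.total_size:
            buddy = start ^ size
            if buddy not in self.free.get(size, set()):
                break
            self.free[size].remove(buddy)
            start = min(start, buddy)
            size *= 2
        self.free.setdefault(size, set()).add(start)


mem = BuddyAllocator(1024)
print(mem.alloc(100))  # 0   (rounded up to 128: internal fragmentation of 28)
print(mem.alloc(200))  # 256 (rounded up to 256)
print(mem.alloc(60))   # 128 (rounded up to 64)
```

Releasing all three blocks coalesces the pieces back into the single 1024-unit block.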

MVT also introduces the ``Replacement Question'': which victim to swap out?

We will study this question more when we discuss demand paging.

Considerations in choosing a victim

- Cannot replace a job that is pinned, i.e., whose memory is tied down. For example, if Direct Memory Access (DMA) I/O is scheduled for this process, the job is pinned until the DMA is complete.

- Victim selection is a medium-term scheduling decision.

- A job that has been in a wait state for a long time is a good candidate.

- Often choose as a victim a job that has been in memory for a long time.

- Another point is how long a job should stay swapped out.

- For demand paging, where swapping out a page is not as drastic as swapping out a job, choosing the victim is an important memory management decision and we shall study several policies.
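The considerations above can be combined into a simple victim chooser. This is a minimal Python sketch of one possible policy, not one prescribed by these notes: it never picks a pinned job, prefers the job that has waited longest, and otherwise falls back to the job that has been resident longest. The ranking of the two heuristics, the `Job` fields, and the function name `choose_victim` are all assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Job:
    name: str
    pinned: bool                    # memory tied down, e.g. by pending DMA
    waiting_since: Optional[float]  # time the job began waiting; None if runnable
    loaded_at: float                # time the job was last swapped in


def choose_victim(jobs):
    """Return the job to swap out, or None if every job is pinned."""
    candidates = [j for j in jobs if not j.pinned]
    if not candidates:
        return None
    waiting = [j for j in candidates if j.waiting_since is not None]
    if waiting:
        # Earliest waiting_since means the longest time in a wait state.
        return min(waiting, key=lambda j: j.waiting_since)
    # Otherwise the earliest loaded_at means the longest time in memory.
    return min(candidates, key=lambda j: j.loaded_at)


# Hypothetical jobs; times are arbitrary clock values.
jobs = [
    Job("A", pinned=True, waiting_since=0.0, loaded_at=0.0),
    Job("B", pinned=False, waiting_since=5.0, loaded_at=1.0),
    Job("C", pinned=False, waiting_since=2.0, loaded_at=3.0),
    Job("D", pinned=False, waiting_since=None, loaded_at=0.5),
]
print(choose_victim(jobs).name)  # C
```

Job A has waited longest but is pinned, so the longest-waiting unpinned job, C, is chosen.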

Notes:

- So far the schemes presented have had two properties:

- Each job is stored contiguously in memory. That is, the job is contiguous in physical addresses.

- Each job cannot use more memory than exists in the system. That is, the virtual address space cannot exceed the physical address space.

- Tanenbaum now attacks the second item. I wish to do both and start with the first.

- Tanenbaum (and most of the world) uses the term ``paging'' to mean what I call demand paging. This is unfortunate as it mixes together two concepts.

- Paging (dicing the address space) to solve the placement problem and essentially eliminate external fragmentation.

- Demand fetching, to permit the total memory requirements of all loaded jobs to exceed the size of physical memory.

- Tanenbaum (and most of the world) uses the term virtual memory as a synonym for demand paging. Again I consider this unfortunate.

- Demand paging is a fine term and is quite descriptive.

- Virtual memory ``should'' be used in contrast with physical memory to describe any virtual to physical address translation.

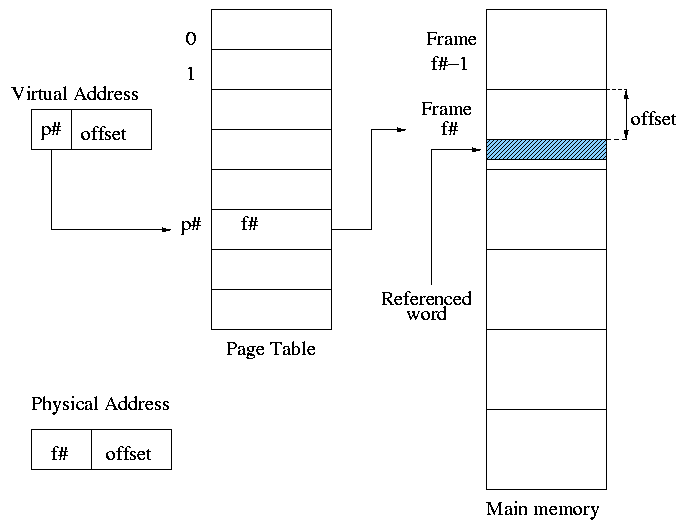

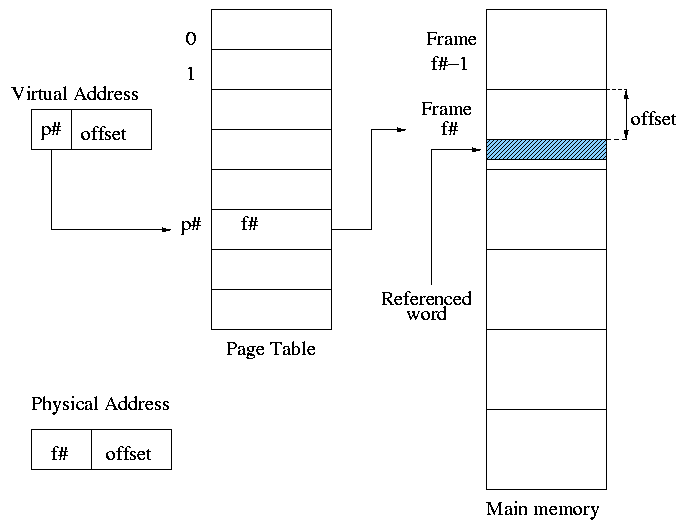

** (non-demand) Paging

Simplest scheme to remove the requirement of contiguous physical memory.

- Chop the program into fixed size pieces called pages (invisible to the programmer).

- Chop the real memory into fixed size pieces called page frames or simply frames.

- Size of a page (the page size) = size of a frame (the frame size).

- Sprinkle the pages into the frames.

- Keep a table (called the page table) having an entry for each page. The page table entry or PTE for page p contains the number of the frame f that contains page p.
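Given such a page table, a virtual address is translated by splitting it into a page number p and an offset within the page, looking up the frame f in the PTE for p, and combining f with the same offset. The following is a minimal Python sketch. The page size of 4096, the sample page table, and the name `translate_paged` are assumptions for illustration.

```python
PAGE_SIZE = 4096  # assumed; page size equals frame size


def translate_paged(virtual_address, page_table):
    """Map a virtual address to a physical address via the page table.

    page_table[p] holds the frame number f that contains page p.
    """
    page, offset = divmod(virtual_address, PAGE_SIZE)
    frame = page_table[page]           # the PTE for page p gives frame f
    return frame * PAGE_SIZE + offset  # same offset within the frame


# Hypothetical three-page program sprinkled into frames 7, 2, and 9.
page_table = [7, 2, 9]

# Virtual address 4100 is page 1, offset 4; page 1 lives in frame 2.
print(translate_paged(4100, page_table))  # 8196
```

The pages are contiguous in the virtual address space, but frames 7, 2, and 9 are not contiguous in physical memory.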