Homework: 6

Assumed knowledge

Homework: 9.

I must note that Tanenbaum is a big advocate of the so-called microkernel approach, in which as much as possible is moved out of the (supervisor mode) microkernel into separate processes.

In the early 90s this was popular. Digital Unix (now called Tru64) and Windows NT are examples. Digital Unix is based on Mach, a research OS from Carnegie Mellon University. Lately, the growing popularity of Linux has called into question the belief that ``all new operating systems will be microkernel based''.

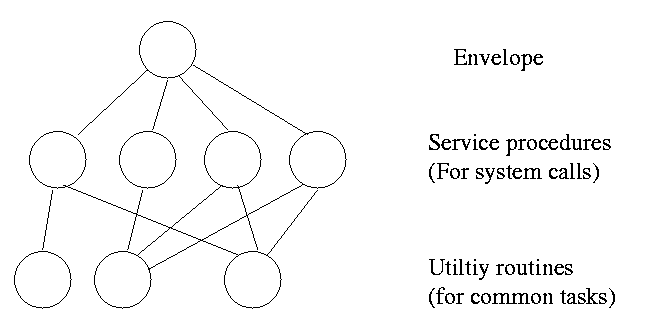

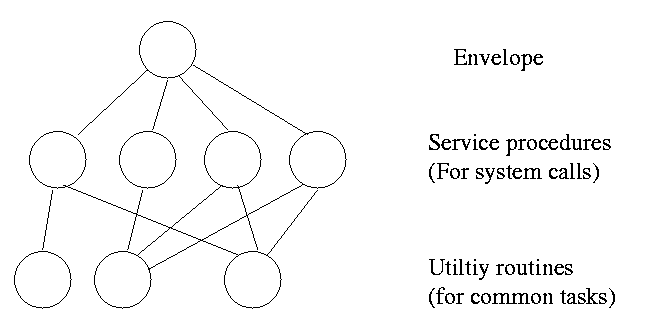

The previous picture: one big program

The system switches from user mode to kernel mode during the poof and then back when the OS does a ``return''.

But of course we can structure the system better, which brings us to layered systems.

Some systems have more layers and are more strictly structured.

An early layered system was ``THE'' operating system by Dijkstra. The layers were.

The layering was done by convention, i.e. there was no enforcement by hardware and the entire OS was linked together as one program. This is true of many modern operating systems as well (e.g., Linux).

The Multics system was layered in a more formal manner. The hardware provided several protection layers and the OS used them. That is, arbitrary code could not jump to or access data in a more protected layer.

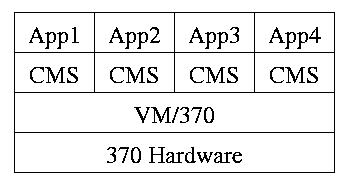

Use a ``hypervisor'' (beyond supervisor, i.e. beyond a normal OS) to switch between multiple operating systems.

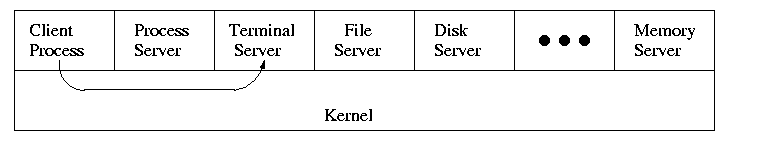

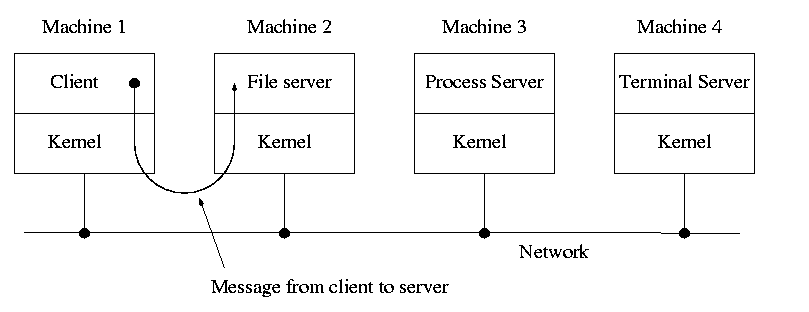

When implemented on one computer, a client-server OS is the microkernel approach in which the microkernel just supplies interprocess communication and the main OS functions are provided by a number of separate processes.

This does have advantages. For example, an error in the file server cannot corrupt memory in the process server. This makes errors easier to track down.

But it does mean that when a (real) user process makes a system call there are more process switches. These are not free.
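To make the trade-off concrete, here is a minimal sketch of the client-server structure. It is only an illustration under stated assumptions: the `Microkernel` class, the `file_server` function, and the file name `notes.txt` are all hypothetical, and Python threads stand in for what would be separate processes in a real system. The "kernel" does nothing except deliver messages, and the counter shows that even one simple request costs several message deliveries (each of which would involve a process switch).

```python
import queue
import threading


class Microkernel:
    """Hypothetical microkernel: its only job is to deliver messages."""

    def __init__(self):
        self.mailboxes = {}
        self.messages_delivered = 0

    def register(self, name):
        # Give each "process" a mailbox it can receive messages on.
        self.mailboxes[name] = queue.Queue()
        return self.mailboxes[name]

    def send(self, dest, message):
        # Every delivery stands in for a trip through the kernel.
        self.messages_delivered += 1
        self.mailboxes[dest].put(message)


def file_server(kernel, inbox, files):
    """Hypothetical file server running outside the kernel."""
    while True:
        sender, request = inbox.get()
        if request is None:  # shutdown message
            break
        kernel.send(sender, files.get(request, ""))


def read_file(kernel, inbox, name):
    """What a 'system call' becomes: send a request, wait for the reply."""
    kernel.send("file_server", ("user", name))
    return inbox.get()


kernel = Microkernel()
server_inbox = kernel.register("file_server")
user_inbox = kernel.register("user")

# A thread plays the role of the separate server process (an assumption).
server = threading.Thread(
    target=file_server,
    args=(kernel, server_inbox, {"notes.txt": "hello"}),
)
server.start()

contents = read_file(kernel, user_inbox, "notes.txt")
kernel.send("file_server", ("user", None))  # ask the server to stop
server.join()

print(contents, kernel.messages_delivered)
```

The file server's dictionary is private to it, so a bug there cannot touch another server's data; the price is the extra message traffic counted in `messages_delivered`.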

A distributed system can be thought of as an extension of the client-server concept where the servers are remote.

Homework: 11

Tanenbaum's chapter title is ``processes''. I prefer process management. The subject matter is processes, process scheduling, interrupt handling, and IPC (Interprocess communication--and coordination).

Definition: A process is a program in execution.

Even though in actuality there are many processes running at once, the OS gives each process the illusion that it is running alone.

Think of the individual modules that are input to the linker. Each numbers its addresses from zero; the linker eventually translates these relative addresses into absolute addresses. That is, the linker provides to the assembler a virtual memory in which addresses start at zero.
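The linker analogy can be sketched in a few lines. This is a toy model, not how any particular linker is implemented: the `link` function, the module names, and the sizes are all made up for illustration. Each module lists addresses relative to its own zero, and the "linker" places the modules one after another and adds each module's base to its relative addresses.

```python
def link(modules):
    """Lay modules out one after another and relocate their addresses.

    Each module is (name, size, references), where references are
    module-relative addresses that start at zero.
    """
    base = 0
    layout = {}
    for name, size, references in modules:
        layout[name] = {
            "base": base,
            # absolute address = module base + relative address
            "absolute_refs": [base + rel for rel in references],
        }
        base += size  # the next module starts where this one ends
    return layout


# Hypothetical modules: both believe their addresses start at zero.
modules = [
    ("main", 100, [0, 40]),
    ("util", 50, [0, 10]),
]

layout = link(modules)
for name, info in layout.items():
    print(name, info["base"], info["absolute_refs"])
```

Here `util` still refers to its own addresses 0 and 10, but after linking they land at 100 and 110 because `main` occupies the first 100 locations.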

Virtual time and virtual memory are examples of abstractions provided by the operating system to the user processes so that the latter ``sees'' a more pleasant virtual machine than actually exists.

Modern general purpose operating systems permit a user to create and destroy processes.

Old or primitive operating systems like MS-DOS are not multiprogrammed, so when one process starts another, the first process is automatically blocked and waits until the second is finished.
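The difference can be seen from a user program. The following sketch uses Python's standard `subprocess` module; the child commands (a short sleep and a long sleep) are arbitrary choices for illustration. The first part imitates the MS-DOS behavior by having the parent wait for the child, the second lets parent and child run at the same time, and the third creates a process and then destroys it.

```python
import subprocess
import sys
import time

# Hypothetical child: a Python process that just sleeps briefly.
CHILD = [sys.executable, "-c", "import time; time.sleep(0.2)"]

# MS-DOS style: the parent is blocked until the child finishes.
start = time.monotonic()
subprocess.run(CHILD, check=True)
blocked_for = time.monotonic() - start

# Multiprogrammed style: the parent keeps running while the child runs.
child = subprocess.Popen(CHILD)
parent_work = sum(range(1000))  # the parent does its own work meanwhile
child.wait()                    # later, collect the child's exit status

# Destroying a process: start a long-running child, then terminate it.
victim = subprocess.Popen(
    [sys.executable, "-c", "import time; time.sleep(60)"]
)
victim.terminate()
victim.wait()

print(f"parent was blocked for about {blocked_for:.2f} s")
print("concurrent child exit status:", child.returncode)
print("terminated child exit status:", victim.returncode)
```

The terminated child reports a nonzero exit status because it did not finish on its own; the exact value depends on the operating system.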

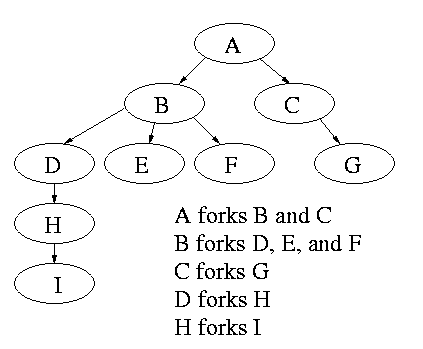

The above diagram contains a great deal of information.