Lecture #1

Chapter -1: Administrivia

Contact Information

- gottlieb@nyu.edu (best method)

- http://allan.ultra.nyu.edu/~gottlieb

- 715 Broadway, Room 1001

Web Page

There is a web page for the course. You can find it from my home page.

- these notes are there.

Let me know if you can't find it.

- They will be updated as bugs are found.

- I will also make each lecture available as a separate page, which I will produce after the lecture is given. These individual pages might not get updated.

Textbook

Text is Tanenbaum, "Modern Operating Systems".

- Available in bookstore.

- We will do part 1, starting with chapter 1.

Computer Accounts and Mailman mailing list

- You are entitled to a computer account, get it.

- Sign up for a Mailman mailing list for the course.

http://www.cs.nyu.edu/mailman/listinfo/v22_0202_002_sp01

- If you want to send mail to just me, use gottlieb@nyu.edu not

the mailing list.

- Questions on the labs should go to the mailing list.

You may answer questions on the list as well.

I will respond to all questions; if another student answers the

question before I get to it, I will confirm if the answer given is correct.

Grades

Assuming 3 labs, which is likely, the grades will be computed as

.3*MidtermExam + .3*LabAverage + .4*FinalExam

Midterm

We will have a midterm. As the time approaches we will vote in

class for the exact date. Please do not schedule any trips during

days when the class meets until the midterm date is scheduled.

Homeworks and Labs

I make a distinction between homeworks and labs.

Labs are

- Required

- Due several lectures later (date given on assignment).

- Graded and form part of your final grade.

- Penalized for lateness.

- Computer programs you must write.

Homeworks are

- Optional.

- Due at the beginning of the next lecture.

- Not accepted late.

- Mostly from the book.

- Collected and returned.

- Can help, but not hurt, your grade.

You may do lab assignments on any system you wish, but ...

- You are responsible for the machine. I extend deadlines if

the nyu machines are down, not if yours are.

- Be sure to upload your assignments to the

nyu systems.

- If somehow your assignment is misplaced by me or a grader,

we need to have a copy ON AN NYU SYSTEM

that can be used to verify the date the lab was completed.

- When you complete a lab (and have it on an nyu system), do

not edit those files. Indeed, put the lab in a separate

directory and keep out of the directory. You do not want to

alter the dates.

Upper left board for lab/homework assignments and announcements.

A Grade of ``Incomplete''

It is university policy that a student's request for an incomplete

be granted only in exceptional circumstances and only if applied for

in advance. Of course the application must be before the final exam.

Dates (From Robin Simon)

Dear Instructors -

Below please find dates to keep in mind as the spring semester gets

underway. You may also want to post some or all of this info on

your course homepages. Please let students know the date of the final

exam on the FIRST day of class and keep reminding them that we will

not honor travel plans for travel before the day of the exam.

- Robin

First Day of Class: Tuesday, January 16

Add Dates: Monday, January 29 - Last day to add WITHOUT

instructor's permission

January 30 to February 5 - Add WITH

instructor's permission only

Drop Date: Monday, February 5 - Last day to drop courses

President's Day: Holiday Monday, February 19 - no classes

Pass/Fail Option

Deadline: Tuesday, February 20

Spring Recess: Monday, March 12 - Saturday March 17

Midterm Grading

Deadline: Friday, March 9 - Midterms must be GIVEN, GRADED

and RETURNED to students

Withdraw

Deadline: MONDAY, MARCH 26 - Last day for students to withdraw

with a "W". All remaining students will receive a grade.

Last Day

of Classes: Monday, April 30

Final Exams: Wednesday, May 2 to Wednesday, May 9

Group Final Exams: FRIDAY MAY 4, 2:00 - 3:50pm

V22.0002.001 - 004

V22.0004.001 - 004

V22.0102.001 - 002

V22.0202.001 - 002

Chapter 0: Interlude on Linkers (covered in recitation)

Originally called linkage editors by IBM.

This is an example of a utility program included with an

operating system distribution. Like a compiler, it is not part of the

operating system per se, i.e. it does not run in supervisor mode.

Unlike a compiler it is OS dependent (what object/load file format is

used) and is not (normally) language dependent.

What does a Linker Do?

Link of course.

When the assembler has finished it produces an object

module that is almost runnable. There are two primary

problems that must be solved for the object module to be runnable.

Both are involved with linking (that word, again) together multiple

object modules. They are relocating relative

addresses and resolving external references.

- Relocating relative addresses.

- Each module (mistakenly) believes it will be loaded at

location zero (or some other fixed location). We will

use zero.

- So when there is an internal jump in the program, say jump to

location 100, this means jump to location 100 of the current

module.

- To convert this relative address to an absolute

address, the linker adds the base address of the module

to the relative address. The base address is the address at which

this module will be loaded.

- Example: Module A is to be loaded starting at location 2300 and

contains the instruction

jump 120

The linker changes this instruction to

jump 2420

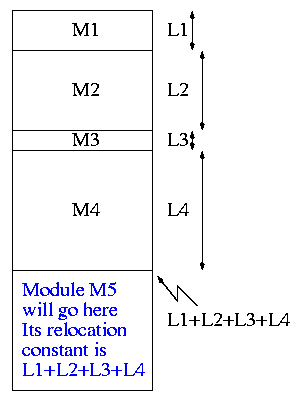

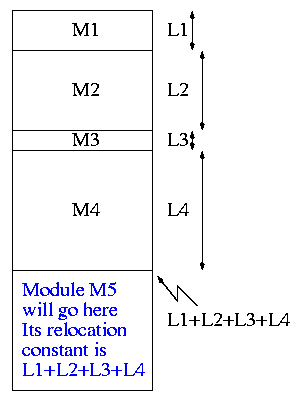
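The relocation step in the example above can be sketched in a few lines of Python. This is only an illustration: representing an instruction as an (operation, address) pair is an assumption made here, not the format used in the lab.

```python
def relocate(relative_address, base_address):
    """Convert a module-relative address to an absolute address."""
    return base_address + relative_address

# Hypothetical representation of "jump 120" inside module A.
instruction = ("jump", 120)
base_of_a = 2300  # module A is to be loaded starting at location 2300

relocated = (instruction[0], relocate(instruction[1], base_of_a))
print(relocated)  # ('jump', 2420)
```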

- How does the linker know that Module A is to be loaded starting at

location 2300?

- It processes the modules one at a time. The first module is

to be loaded at location zero. So the relocating is trivial

(adding zero). We say the relocation constant is zero.

- When finished with the first module (say M1), the linker knows

the length of M1 (say that length is L1).

- Hence the next module is to be loaded starting at L1, i.e.,

the relocation constant is L1.

- In general the linker keeps track of the sum of the lengths of

all the modules it has already processed and this is the location

at which the next module is to be loaded.

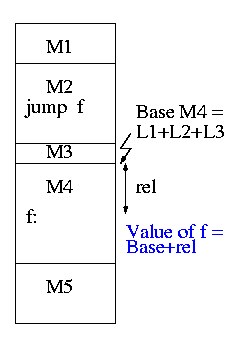

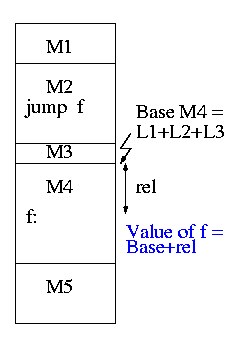
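The running-sum bookkeeping described above might look like the following sketch. The function name is made up for illustration; the lengths 5, 6, 2, 3 are those of the four modules in input set #1 later in these notes.

```python
def base_addresses(lengths):
    """Return the relocation constant for each module, in order.

    The constant for a module is the sum of the lengths of all the
    modules processed before it; the first module gets zero.
    """
    bases = []
    next_base = 0
    for length in lengths:
        bases.append(next_base)
        next_base += length
    return bases

print(base_addresses([5, 6, 2, 3]))  # [0, 5, 11, 13]
```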

- Resolving external references.

- If a C (or Java, or Pascal) program contains a function call

f(x)

to a function f() that is compiled separately, the resulting

object module will contain some kind of jump to the beginning of

f.

- But this is impossible!

- When the C program is compiled, the compiler (and assembler) do not know the location of f(), so there is no way they can supply the starting address.

- Instead a dummy address is supplied and a notation made that

this address needs to be filled in with the location of

f(). This is called a use of f.

- The object module containing the definition of f() indicates

that f is being defined and gives its relative address (which the

linker will convert to an absolute address). This is called a

definition of f.
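A minimal sketch of how uses and definitions fit together, under simplifying assumptions: the dictionary layout, the DUMMY value, and the instruction names are all hypothetical, and relocation of internal addresses is left out so that only the external reference is visible.

```python
DUMMY = 0  # placeholder address left by the compiler (an assumption)

# Hypothetical object modules: text, definitions (symbol -> relative
# address), and uses (symbol -> indices of words needing the address).
main_module = {
    "text": [("call", DUMMY), ("halt", 0)],
    "defs": {},
    "uses": {"f": [0]},
}
f_module = {
    "text": [("load", 0), ("ret", 0)],
    "defs": {"f": 0},
    "uses": {},
}

def link(modules):
    # First collect the absolute address of every defined symbol.
    symbols = {}
    base = 0
    for module in modules:
        for symbol, relative in module["defs"].items():
            symbols[symbol] = base + relative
        base += len(module["text"])
    # Then copy the text, filling in each use with the symbol's address.
    load_module = []
    for module in modules:
        text = list(module["text"])
        for symbol, locations in module["uses"].items():
            for loc in locations:
                operation, _dummy = text[loc]
                text[loc] = (operation, symbols[symbol])
        load_module.extend(text)
    return load_module

print(link([main_module, f_module])[0])  # ('call', 2)
```

Here f is defined at relative address 0 of the second module, which is loaded at location 2, so the dummy address in the call is replaced by 2.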

The output of a linker is called a load module

because it is now ready to be loaded and run.

To see how a linker works let's consider the following example,

which is the first dataset from lab #1. The description in lab1 is

more detailed.

The target machine is word addressable and has a memory of 1000 words,

each consisting of 4 decimal digits. The first (leftmost) digit is

the opcode and the remaining three digits form an address.

Each object module contains three parts: a definition list, a use list, and the program text itself. Each definition is a pair (sym, loc). Each use is a pair (sym, loc), where loc is the location of a word that uses sym. The address field of that word points to the next use or is 888 to end the chain.

For those text entries that do not form part of a use chain, a fifth (leftmost) digit is added giving the type of the address field.

- If the type is 1, the address field is an immediate operand.

- If it is 2, the field is an absolute address.

- If 3, the field is a local relative address.
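As a rough sketch, a text entry can be taken apart with integer division. The function names are made up for illustration, and treating any entry below 10000 as a use-chain entry is an assumption based on the description above; the lab handout is the authority on the format.

```python
def decode(word):
    """Split a text entry into (type, opcode, address).

    Entries with only four digits belong to a use chain and carry no
    type digit, so their type is reported as None.
    """
    if word < 10000:
        kind = None
    else:
        kind, word = divmod(word, 10000)
    opcode, address = divmod(word, 1000)
    return kind, opcode, address

def resolve_typed(word, base):
    """Return the final 4-digit word for an entry with a type digit."""
    kind, opcode, address = decode(word)
    if kind == 3:            # local relative address: relocate it
        address += base
    elif kind not in (1, 2): # immediate and absolute are left alone
        raise ValueError("entry has no valid type digit")
    return opcode * 1000 + address

print(decode(31004))            # (3, 1, 4)
print(resolve_typed(31002, 5))  # 1007
```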

Input set #1

1 xy 2

1 z 4

5 31004 15678 2888 38002 7002

0

1 z 3

6 38001 1888 1001 3002 31002 21010

0

1 z 1

2 35001 4888

1 z 2

1 xy 2

3 28000 1888 2001

The first pass simply

produces the symbol table giving the values for xy and z (2 and 15

respectively). The second pass does the real work (using the values

in the symbol table).
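The first pass might be sketched as follows. The parsing assumes the input is a stream of whitespace-separated tokens laid out exactly as in input set #1 (a count followed by that many items, for each of the three parts); the function names are hypothetical and error handling is omitted.

```python
INPUT_SET_1 = """
1 xy 2
1 z 4
5 31004 15678 2888 38002 7002
0
1 z 3
6 38001 1888 1001 3002 31002 21010
0
1 z 1
2 35001 4888
1 z 2
1 xy 2
3 28000 1888 2001
"""

def parse(text):
    """Return a list of (definitions, uses, words), one per module."""
    tokens = iter(text.split())
    modules = []
    for count in tokens:  # each module starts with its definition count
        defs = [(next(tokens), int(next(tokens)))
                for _ in range(int(count))]
        uses = [(next(tokens), int(next(tokens)))
                for _ in range(int(next(tokens)))]
        words = [int(next(tokens)) for _ in range(int(next(tokens)))]
        modules.append((defs, uses, words))
    return modules

def pass_one(modules):
    """Build the symbol table: absolute address of each defined symbol."""
    symbols = {}
    base = 0
    for defs, _uses, words in modules:
        for symbol, loc in defs:
            symbols[symbol] = base + loc
        base += len(words)
    return symbols

print(pass_one(parse(INPUT_SET_1)))  # {'xy': 2, 'z': 15}
```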

(Unofficial) Remark:

It is faster (less I/O) to do a one pass approach, but is harder

since you need ``fix-up code'' whenever a use occurs in a module that

precedes the module with the definition.

Symbol Table

xy=2

z=15

+0

0: 31004 1004+0 = 1004

1: 15678 5678

2: xy: 2888 ->z 2015

3: 38002 8002+0 = 8002

4: ->z 7002 7015

+5

0: 38001 8001+5 = 8006

1: 1888 ->z 1015

2: 1001 ->z 1015

3: ->z 3002 3015

4: 31002 1002+5 = 1007

5: 21010 1010

+11

0: 35001 5001+11 = 5012

1: ->z 4888 4015

+13

0: 28000 8000

1: 1888 ->xy 1002

2: z: ->xy 2001 2002
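The second pass that produces the table above can be sketched as follows. To keep the block self-contained, the symbol table and the modules are written out as Python literals (definition lists are dropped since the first pass has already consumed them). The names are hypothetical and no error checking is done, so treat this as an illustration rather than a lab solution.

```python
SYMBOLS = {"xy": 2, "z": 15}  # produced by the first pass
END_OF_CHAIN = 888

# (use list, text words) for each module of input set #1
MODULES = [
    ([("z", 4)], [31004, 15678, 2888, 38002, 7002]),
    ([("z", 3)], [38001, 1888, 1001, 3002, 31002, 21010]),
    ([("z", 1)], [35001, 4888]),
    ([("xy", 2)], [28000, 1888, 2001]),
]

def pass_two(modules, symbols):
    output = []
    base = 0
    for uses, words in modules:
        resolved = {}
        # Follow each use chain; the address field of each word on the
        # chain is the location of the next use, and is replaced by the
        # value of the symbol.
        for symbol, loc in uses:
            while loc != END_OF_CHAIN:
                opcode, next_loc = divmod(words[loc], 1000)
                resolved[loc] = opcode * 1000 + symbols[symbol]
                loc = next_loc
        for i, word in enumerate(words):
            if i in resolved:
                output.append(resolved[i])
                continue
            kind, rest = divmod(word, 10000)
            # Type 3 is relative and gets the base added; types 1
            # (immediate) and 2 (absolute) just lose the type digit.
            output.append(rest + base if kind == 3 else rest)
        base += len(words)
    return output

print(pass_two(MODULES, SYMBOLS))
```

The printed list is the right-hand column of the table, read top to bottom.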

The linker on Unix is mistakenly called ld (for loader), which is

unfortunate since it links but does not load.

Lab #1:

Implement a linker. The specific assignment is detailed on the sheet

handed out in class and is due 7 February. The

content of the handout is available on the web as well (see the class

home page).

End of Interlude on Linkers

Chapter 1: Introduction

Homework: Read Chapter 1 (Introduction)

Levels of abstraction (virtual machines)

- Software (and hardware, but that is not this course) is often

implemented in layers.

- The higher layers use the facilities provided by lower layers.

- Alternatively said, the upper layers are written using a more

powerful and more abstract virtual machine than the lower layers.

- Alternatively said, each layer is written as though it runs on the

virtual machine supplied by the lower layer and in turn provides a more

abstract (pleasant) virtual machine for the higher layer to run on.

- Using a broad brush, the layers are:

- Scripts (e.g. shell scripts)

- Applications and utilities

- Libraries

- The OS proper (the kernel)

- Hardware

- The kernel itself is normally layered, e.g.

- ...

- Filesystems

- Machine independent I/O

- Machine dependent device drivers

- The machine independent I/O part is written assuming ``virtual

(i.e. idealized) hardware''. For example, the machine independent I/O

portion simply reads a block from a ``disk''. But

in reality one must deal with the specific disk controller.

- Often the machine independent part is more than one layer.

- The term OS is not well defined. Is it just the kernel? How

about the libraries? The utilities? All these are certainly

system software, but it is not clear how much is part of the OS.
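To make the layering inside the kernel concrete, here is a toy sketch of machine independent I/O sitting on top of two different "device drivers". Everything in it (class names, block sizes, the read_raw method) is invented for illustration; real drivers talk to a disk controller rather than to Python lists.

```python
class RamDiskDriver:
    """Hypothetical machine-dependent driver: blocks are slices of bytes."""
    BLOCK_SIZE = 4

    def __init__(self, data):
        self._data = data

    def read_raw(self, block_number):
        start = block_number * self.BLOCK_SIZE
        return self._data[start:start + self.BLOCK_SIZE]

class TrackSectorDriver:
    """Hypothetical driver for a device addressed by (track, sector)."""
    SECTORS_PER_TRACK = 2

    def __init__(self, tracks):
        self._tracks = tracks

    def read_raw(self, block_number):
        track, sector = divmod(block_number, self.SECTORS_PER_TRACK)
        return self._tracks[track][sector]

def read_block(disk, block_number):
    """Machine independent I/O: sees only a 'disk' of numbered blocks."""
    return disk.read_raw(block_number)

def read_file(disk, block_numbers):
    """Filesystem layer: here a file is just a list of block numbers."""
    return b"".join(read_block(disk, n) for n in block_numbers)

ram = RamDiskDriver(b"abcdefgh")
platter = TrackSectorDriver([[b"abcd", b"efgh"]])
print(read_file(ram, [0, 1]))      # b'abcdefgh'
print(read_file(platter, [0, 1]))  # b'abcdefgh'
```

The upper two functions never mention tracks or sectors; only the driver classes know how the device is really addressed.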

1.1: What is an operating system?

The kernel itself raises the level of abstraction and hides details.

For example a user (of the kernel) can write to a file (a concept not

present in hardware) and ignore whether the file resides on a floppy,

a CD-ROM, or a hard magnetic disk.

The kernel is a resource manager (so users don't conflict).

How is an OS fundamentally different from a compiler (say)?

Answer: Concurrency! Per Brinch Hansen, in Operating Systems Principles (Prentice Hall, 1973), writes:

The main difficulty of multiprogramming is that concurrent activities

can interact in a time-dependent manner, which makes it practically

impossible to locate programming errors by systematic testing.

Perhaps, more than anything else, this explains the difficulty of

making operating systems reliable.
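A small sketch of what "time-dependent" means. Two activities each increment a shared counter by doing a load followed by a store. Instead of using real threads, whose timing cannot be controlled, the code below enumerates every possible interleaving of the four steps and runs each one. The model (two steps per increment, the names A and B) is an assumption chosen to keep the example tiny.

```python
def interleavings(a, b):
    """Yield every merge of lists a and b that keeps each list's order."""
    if not a or not b:
        yield list(a) + list(b)
        return
    for rest in interleavings(a[1:], b):
        yield [a[0]] + rest
    for rest in interleavings(a, b[1:]):
        yield [b[0]] + rest

def run(schedule):
    """Execute the load/store steps in the given order; return the counter."""
    shared = 0
    local = {}
    for who, step in schedule:
        if step == "load":
            local[who] = shared
        else:  # "store": write back the loaded value plus one
            shared = local[who] + 1
    return shared

A = [("A", "load"), ("A", "store")]
B = [("B", "load"), ("B", "store")]
results = [run(schedule) for schedule in interleavings(A, B)]
print(sorted(results))  # [1, 1, 1, 1, 2, 2]
```

Of the six interleavings only two give the intended answer 2. A test that happens to exercise one of those two would pass, which is the difficulty the quotation describes.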

Homework: 1. (Unless otherwise stated, problem numbers are from the end of the chapter in Tanenbaum.)

1.2 History of Operating Systems

- Single user (no OS).

- Batch, uniprogrammed, run to completion.

- The OS now must be protected from the user program so that it is

capable of starting (and assisting) the next program in the batch.

- Multiprogrammed

- The purpose was to overlap CPU and I/O

- Multiple batches

- IBM OS/MFT (Multiprogramming with a Fixed number of Tasks)

- The (real) memory is partitioned and a batch is

assigned to a fixed partition.

- The memory assigned to a

partition does not change

- IBM OS/MVT (Multiprogramming with a Variable number of Tasks)

(then other names)

- Each job gets just the amount of memory it needs. That

is, the partitioning of memory changes as jobs enter and leave

- MVT is a more ``efficient'' user of resources but is more difficult to implement.

- When we study memory management, we will see that with

varying size partitions questions like compaction and

``holes'' arise.

- Time sharing

- This is multiprogramming with rapid switching between jobs

(processes). Deciding when to switch and which process to

switch to is called scheduling.

- We will study scheduling when we do processor management

- Multiple computers

- Multiprocessors: These have existed almost from the beginning of the computer age, but are no longer exotic.

- Network OS: Make use of the multiple PCs/workstations on a LAN.

- Distributed OS: A ``seamless'' version of above.

- Not part of this course (but often in G22.2251).

- Real time systems

- Often in embedded systems

- Soft vs hard real time. In the latter missing a deadline is a

fatal error--sometimes literally.

- Very important commercially, but not covered much in this course.
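Returning to the MVT item above, the ``holes'' that arise with variable-size partitions can be seen in a toy allocator. This is a sketch only: first fit is one possible placement policy picked here for illustration (the notes do not say what MVT used), the function names are invented, and neighbouring holes are not merged.

```python
def allocate(holes, size):
    """First fit: take `size` words from the first hole big enough.

    `holes` is a list of (start, length) pairs sorted by start.
    Returns the start address, or None if no single hole is big enough.
    """
    for i, (start, length) in enumerate(holes):
        if length >= size:
            if length == size:
                del holes[i]
            else:
                holes[i] = (start + size, length - size)
            return start
    return None

def release(holes, start, size):
    """Give a job's memory back when the job leaves."""
    holes.append((start, size))
    holes.sort()

holes = [(0, 90)]            # 90 words of memory, all free
a = allocate(holes, 30)      # job A at 0
b = allocate(holes, 30)      # job B at 30
c = allocate(holes, 30)      # job C at 60
release(holes, a, 30)        # A leaves
release(holes, c, 30)        # C leaves

print(holes)                 # [(0, 30), (60, 30)]
print(allocate(holes, 40))   # None
```

Sixty words are free, yet a 40-word job cannot be placed because no single hole is large enough. Moving job B down to location 0 (compaction) would turn the two holes into one hole of 60 words.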