Homework: Read Chapter 1 (Introduction)

The kernel itself raises the level of abstraction and hides details. For example, a user (of the kernel) can write to a file (a concept not present in hardware) and ignore whether the file resides on a floppy, a CD-ROM, or a hard magnetic disk.

The kernel is a resource manager (so users don't conflict).

How is an OS fundamentally different from a compiler (say)?

Answer: Concurrency! Per Brinch Hansen, in Operating Systems Principles (Prentice Hall, 1973), writes:

The main difficulty of multiprogramming is that concurrent activities can interact in a time-dependent manner, which makes it practically impossible to locate programming errors by systematic testing. Perhaps, more than anything else, this explains the difficulty of making operating systems reliable.

Homework: 1. (Unless otherwise stated, problem numbers are from the end of the chapter in Tanenbaum.)

Note:

There was a tiny typo in the lab. The line

1 1888 ->x 1002

should have been

1 1888 ->xy 1002

I cleaned up the lecture notes on the linker interlude. I suggest that those of you who heard the recitation on Wednesday reread the lecture notes.

This will be very brief. Much of the rest of the course will consist in ``filling in the details''.

A process is a program in execution. If you run the same program twice, you have created two processes. For example, if you have two editors running in two windows, each instance of the editor is a separate process.

Often one distinguishes the state or context (memory image, open files) from the thread of control. Then if one has many threads running in the same task, the result is a ``multithreaded process''.

The OS keeps information about all processes in the process table. Indeed, the OS views the process as the entry. This is an example of an active entity being viewed as a data structure (cf. discrete event simulations), an observation made by Finkel in his (out of print) OS textbook.
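To make the ``process as a table entry'' view concrete, here is a toy sketch in Python. It is not how any real kernel lays out its process table; the class name ProcessTableEntry, its fields, and the helper functions are all made up for illustration.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class ProcessTableEntry:
    """Hypothetical per-process record; the fields are illustrative only."""
    pid: int
    parent_pid: Optional[int]
    state: str = "ready"
    open_files: List[str] = field(default_factory=list)


# The "process table": one entry per process, indexed by pid.
process_table: Dict[int, ProcessTableEntry] = {}


def create_process(pid, parent_pid=None):
    """Record a new process by adding its entry to the table."""
    entry = ProcessTableEntry(pid=pid, parent_pid=parent_pid)
    process_table[pid] = entry
    return entry


def children_of(pid):
    """Return the pids whose recorded parent is the given pid."""
    return sorted(e.pid for e in process_table.values() if e.parent_pid == pid)


create_process(1)
create_process(2, parent_pid=1)
create_process(3, parent_pid=1)
print(children_of(1))
```

From the table's point of view, the active entity (the process) is just a record that the OS reads and updates.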

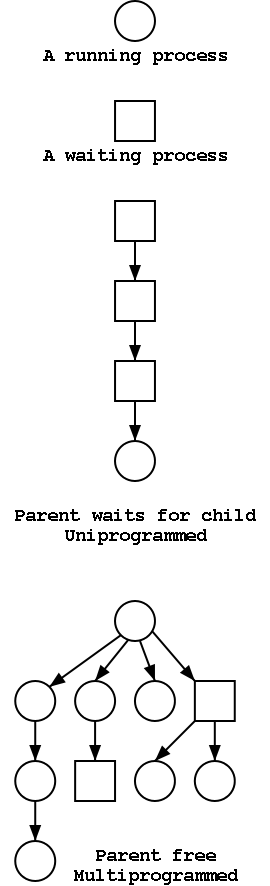

The set of processes forms a tree via the fork system call. The forker is the parent of the forkee. If the parent stops running until the child finishes, the ``tree'' is quite simple, just a line. But the parent (in many OSes) is free to continue executing and in particular is free to fork again producing another child.
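Here is a minimal sketch of a parent that keeps running and forks several children, using Python's os.fork (a thin wrapper around the Unix fork system call). It assumes a Unix-like system; the function name fork_children and the choice to have child i exit with status i are just for illustration.

```python
import os


def fork_children(n):
    """Fork n children from this process; return {child pid: exit status}."""
    child_pids = []
    for i in range(n):
        pid = os.fork()
        if pid == 0:
            # Child (the forkee): terminate at once with status i,
            # without running any more of the parent's code.
            os._exit(i)
        # Parent (the forker): remember the child and continue,
        # which leaves it free to fork again.
        child_pids.append(pid)

    statuses = {}
    for pid in child_pids:
        _, status = os.waitpid(pid, 0)  # wait for this child to finish
        statuses[pid] = os.WEXITSTATUS(status)
    return statuses


result = fork_children(3)
print(sorted(result.values()))
```

Because the parent does not wait between forks, all three children are siblings under one parent, so the ``tree'' is wider than a line.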

A process can send a signal to another process to cause the latter to execute a predefined function (the signal handler). This can be tricky to program since the programmer does not know when in his ``main'' program the signal handler will be invoked.
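A small sketch of the idea, assuming a Unix-like system (SIGUSR1 does not exist on Windows) and that the code runs in the main thread. For simplicity the process sends the signal to itself; the names handler, received, and send_signal_to_self are made up for this example.

```python
import os
import signal
import time

received = []  # signal numbers recorded by the handler


def handler(signum, frame):
    # Invoked asynchronously: the main program does not know
    # at which point in its execution this will run.
    received.append(signum)


def send_signal_to_self(timeout=1.0):
    """Install the handler, signal this process, wait for the handler to run."""
    signal.signal(signal.SIGUSR1, handler)
    os.kill(os.getpid(), signal.SIGUSR1)
    deadline = time.monotonic() + timeout
    # The "main" program carries on; the handler interrupts it at some point.
    while not received and time.monotonic() < deadline:
        time.sleep(0.01)
    return list(received)


print(send_signal_to_self() == [signal.SIGUSR1])
```

The handler only records the signal number and returns. Keeping handlers this small is one common way to limit the trickiness mentioned above.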

Modern systems have a hierarchy of files, i.e., a file system tree.

Files and directories normally have permissions.
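As a quick illustration on a Unix-like system, the sketch below creates a temporary file, sets its permission bits, and prints them in the familiar ls -l style. The helper names permission_string and make_file_with_mode are invented for this example; os.stat, os.chmod, and stat.filemode are standard Python library calls.

```python
import os
import stat
import tempfile


def permission_string(path):
    """Return the type and permission bits of path in ls -l style."""
    return stat.filemode(os.stat(path).st_mode)


def make_file_with_mode(mode):
    """Create an empty temporary file with the given permission bits."""
    fd, path = tempfile.mkstemp()
    os.close(fd)
    os.chmod(path, mode)
    return path


# Owner may read and write, group may read, others get nothing.
path = make_file_with_mode(0o640)
print(permission_string(path))
os.remove(path)
```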

Devices (mouse, tape drive, CD-ROM) are often viewed as ``special files''. In a Unix system these are normally found in the /dev directory. Often utilities that are normally applied to (ordinary) files can be applied as well to some special files. For example, when you are accessing a Unix system using a mouse and do not have anything serious going on (e.g., right after you log in), type the following command

cat /dev/mouse

and then move the mouse. You kill the cat by typing ctrl-C. I tried this on my Linux box and no damage occurred. Your mileage may vary.

Many systems have standard files that are automatically made available to a process upon startup. These (initial) file descriptors are fixed.
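On Unix-like systems the convention is that descriptor 0 is standard input, 1 is standard output, and 2 is standard error. The sketch below writes to descriptors 1 and 2 without opening anything first; the constant names and the helper write_line are made up for illustration.

```python
import os

# Conventional Unix numbering of the standard file descriptors.
STDIN_FD, STDOUT_FD, STDERR_FD = 0, 1, 2


def write_line(fd, text):
    """Write text plus a newline to an already-open file descriptor."""
    return os.write(fd, (text + "\n").encode())


# No open() is needed: these descriptors exist when the process starts.
write_line(STDOUT_FD, "normal output goes to descriptor 1")
write_line(STDERR_FD, "diagnostics go to descriptor 2")
```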

A convenience offered by some command interpreters is a pipe or pipeline. The pipeline

ls | wc

will give the number of files in the directory (plus other info).
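Roughly speaking, a pipeline connects the standard output of one process to the standard input of the next. The sketch below imitates this on a Unix-like system with os.pipe and os.fork. The child plays the role of ls by writing one name per line, and the parent plays the role of wc by counting lines. The function name and the sample names are invented; a real shell would exec the actual ls and wc programs.

```python
import os


def count_lines_through_pipe(names):
    """Send names through a pipe from a child process; count lines in the parent."""
    read_fd, write_fd = os.pipe()
    pid = os.fork()
    if pid == 0:
        # Child: the "ls" side. It only writes, so close the read end.
        try:
            os.close(read_fd)
            with os.fdopen(write_fd, "wb") as writer:
                for name in names:
                    writer.write((name + "\n").encode())
        finally:
            os._exit(0)

    # Parent: the "wc" side. It only reads, so close the write end;
    # otherwise the reader would never see end-of-file.
    os.close(write_fd)
    count = 0
    with os.fdopen(read_fd, "rb") as reader:
        for _ in reader:
            count += 1
    os.waitpid(pid, 0)
    return count


print(count_lines_through_pipe(["notes.txt", "lab1.c", "Makefile"]))
```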

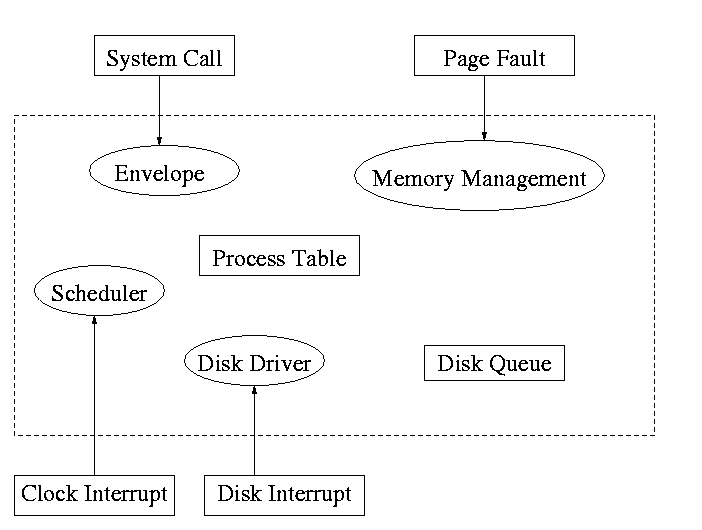

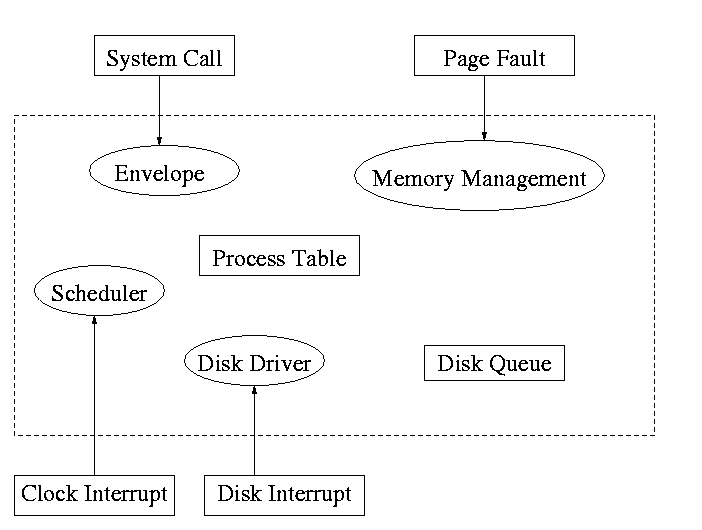

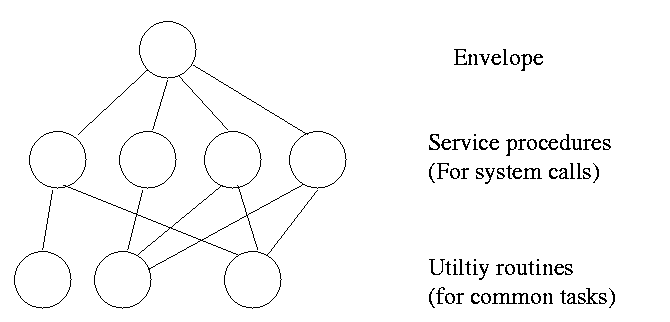

System calls are the way a user (i.e. a program) directly interfaces with the OS. Some textbooks use the term envelope for the component of the OS responsible for fielding system calls and dispatching them. Here is a picture showing some of the OS components and the external events for which they are the interface.

Note that the OS serves two masters. The hardware (below) asynchronously sends interrupts and the user makes system calls and generates page faults.
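To see a user program making system calls fairly directly, the following sketch uses Python's os.open, os.read, and os.close, which are thin wrappers around the corresponding Unix system calls. The function name read_with_syscalls and the chunk size are arbitrary choices for this example.

```python
import os
import tempfile


def read_with_syscalls(path, chunk_size=4096):
    """Read a whole file using the low-level open/read/close calls."""
    fd = os.open(path, os.O_RDONLY)  # returns a small integer file descriptor
    try:
        chunks = []
        while True:
            chunk = os.read(fd, chunk_size)  # one read() call per iteration
            if not chunk:                    # an empty result means end of file
                break
            chunks.append(chunk)
        return b"".join(chunks)
    finally:
        os.close(fd)


# Small demonstration on a temporary file.
fd, path = tempfile.mkstemp()
os.write(fd, b"hello from user mode\n")
os.close(fd)
print(read_with_syscalls(path))
os.remove(path)
```

Each call switches to the OS and returns when the OS has done the work; the program never touches the disk itself.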

What happens when a user executes a system call such as read()? We discuss this in much more detail later but briefly what happens is

Homework: 6

Assumed knowledge

Homework: 9.

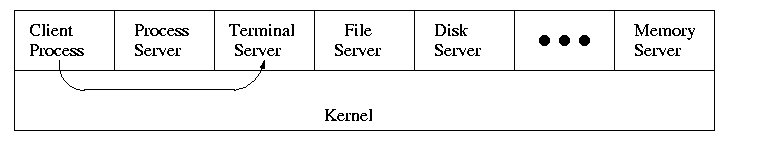

I must note that Tanenbaum is a big advocate of the so-called microkernel approach in which as much as possible is moved out of the (supervisor mode) microkernel into separate processes.

In the early 90s this was popular. Digital Unix (now called Tru64) and Windows NT are examples. Digital Unix is based on Mach, a research OS from Carnegie Mellon University. Lately, the growing popularity of Linux has called into question the belief that ``all new operating systems will be microkernel based''.

The previous picture: one big program

The system switches from user mode to kernel mode during the poof and then back when the OS does a ``return''.

But of course we can structure the system better, which brings us to layered systems.

Some systems have more layers and are more strictly structured.

An early layered system was ``THE'' operating system by Dijkstra. The layers were.

The layering was done by convention, i.e., there was no enforcement by hardware and the entire OS was linked together as one program. This is true of many modern operating systems as well (e.g., Linux).

The Multics system was layered in a more formal manner. The hardware provided several protection layers and the OS used them. That is, arbitrary code could not jump to or access data in a more protected layer.

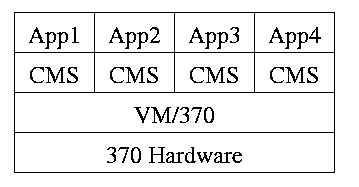

Use a ``hypervisor'' (beyond supervisor, i.e., beyond a normal OS) to switch between multiple operating systems.

When implemented on one computer, a client-server OS is the microkernel approach in which the microkernel just supplies interprocess communication and the main OS functions are provided by a number of separate processes.

This does have advantages. For example an error in the file server cannot corrupt memory in the process server. This makes errors easier to track down.

But it does mean that when a (real) user process makes a system call there are more process switches. These are not free.

A distributed system can be thought of as an extension of the client-server concept where the servers are remote.

Homework: 11