Computer Architecture

1999-2000 Fall

MW 3:30-4:45

Ciww 109

Allan Gottlieb

gottlieb@nyu.edu

http://allan.ultra.nyu.edu/~gottlieb

715 Broadway, Room 1001

212-998-3344

609-951-2707

email is best

======== START LECTURE #18

========

What is the MIPS rating for a computer and how useful is it?

-

MIPS stands for Millions of Instructions Per Second.

- It is a unit of rate or speed (like MHz), not of time (like ns.).

-

It is not the same as the MIPS computer (but the name

similarity is not a coincidence).

- The number of seconds required to execute a given (machine

language) program is

the number of instructions executed / the number executed per second.

- The number of microseconds required to execute a given (machine

language) program is

the number of instructions executed / MIPS.

-

BUT ... .

- The same program in C (or Java, or Ada, etc) might need

different number of instructions

on different computers.

For example, one VAX instruction might require 2

instructions on a power-PC and 3 instructions on an X86).

- The same program in C when compiled by two different compilers

for the same computer architecture, might need to executed

different numbers of instructions.

-

Different programs may achieve different MIPS ratings on the

same architecture.

- Some programs execute more long instructions

than do other programs.

- Some programs have more cache misses and hence cause

more waiting for memory.

- Some programs inhibit full pipelining

(e.g., they may have more mispredicted branches).

- Some programs inhibit full superscalar behavior

(e.g., they may have unhideable data dependencies).

-

One can often raise the MIPS rating by adding NOPs, despite

increasing execution time. How?

Ans. MIPS doesn't require useful instructions and

NOPs, while perhaps useless, are nonetheless very fast.

- So, unlike MHz, MIPS is not a value that be defined for a specific

computer; it depends on other factors, e.g., language/compiler used,

problem solved, and algorithm employed.

Homework:

Carefully go through and understand the example on pages 61-3

How about MFLOPS (Million of FLoating point OPerations per Second)?

For numerical calculations floating point operations are the

ones you are interested in; the others are ``overhead'' (a

very rough approximation to reality).

It has similar problems to MIPS.

- The same program needs different numbers of floating point operations

on different machines (e.g., is sqrt one instruction or several?).

- Compilers effect the MFLOPS rating.

- MFLOPS is Not as bad as MIPS since adding NOPs lowers the MFLOPs

rating.

- But you can insert unnecessary floating point ADD instructions

and this will probably raise the MFLOPS rating. Why?

Because it will lower the percentage of ``overhead'' (i.e.,

non-floating point) instructions.

Benchmarks are better than MIPS or MFLOPS, but still have difficulties.

- It is hard to find benchmarks that represent your future

usage.

- Compilers can be ``tuned'' for important benchmarks.

- Benchmarks can be chosen to favor certain architectures.

- If your processor has 256KB of cache memory and

your competitor's has 128MB, you try to find a benchmark that

frequently accesses a region of memory having size between 128MB

and 256MB.

- If your 128MB cache is 2 way set associative (defined later this

month) while your competitors 256MB cache is direct mapped, then

you build/choose a benchmark that frequently accesses exactly two

10K arrays separated by an exact multiple of 256KB.

Homework:

Carefully go through and understand 2.7 ``fallacies and pitfalls''.

Chapter 7 Memory

Homework:

Read Chapter 7

Ideal memory is

-

Big (in capacity; not physical size).

-

Fast.

-

Cheap.

-

Impossible.

So we use a memory hierarchy ...

-

Registers

-

Cache (really L1, L2, and maybe L3)

-

Memory

-

Disk

-

Archive

... and try to catch most references

in the small fast memories near the top of the hierarchy.

There is a capacity/performance/price gap between each pair of

adjacent levels.

We will study the cache <---> memory gap

-

In modern systems there are many levels of caches.

-

Similar considerations apply to the other gaps (e.g.,

memory<--->disk, where virtual memory techniques are applied).

This is the gap studied in 202.

-

But the terminology is often different, e.g., in architecture we

evict cache blocks or lines whereas in OS we evict pages.

-

In fall 97 my OS class was studying ``the same thing'' at this

exact point (memory management). That isn't true this year since

the OS text changed and memory management is earlier.

We observe empirically (and teach in 202).

-

Temporal Locality: The word referenced now is likely to be

referenced again soon. Hence it is wise to keep the currently

accessed word handy (high in the memory hierarchy) for a while.

-

Spatial Locality: Words near the currently referenced

word are likely to be referenced soon. Hence it is wise to

prefetch words near the currently referenced word and keep them

handy (high in the memory hierarchy) for a while.

A cache is a small fast memory between the

processor and the main memory. It contains a subset of the contents

of the main memory.

A Cache is organized in units of blocks.

Common block sizes are 16, 32, and 64 bytes.

This is the smallest unit we can move to/from a cache.

-

We view memory as organized in blocks as well. If the block

size is 16, then bytes 0-15 of memory are in block 0, bytes 16-31

are in block 1, etc.

-

Transfers from memory to cache and back are one block.

-

Big blocks make good use of spatial locality.

-

If you remember memory management in OS, think of pages and page

frames.

A hit occurs when a memory reference is found in

the upper level of memory hierarchy.

-

We will be interested in cache hits (OS courses

study page hits), when the reference is found in the cache (OS:

when found in main memory).

-

A miss is a non-hit.

-

The hit rate is the fraction of memory references

that are hits.

-

The miss rate is 1 - hit rate, which is the

fraction of references that are misses.

-

The hit time is the time required for a hit.

-

The miss time is the time required for a miss.

-

The miss penalty is Miss time - Hit time.

We start with a very simple cache organization.

-

Assume all referencess are for one word (not too bad).

-

Assume cache blocks are one word.

-

This does not take advantage of spatial locality so is not

done in modern machines.

-

We will drop this assumption soon.

-

Assume each memory block can only go in one specific cache block

-

This is called a Direct Mapped organization.

-

The location of the memory block in the cache (i.e., the block

number in the cache) is the memory block number modulo the

number of blocks in the cache.

-

For example, if the cache contains 100 blocks, then memory

block 34452 is stored in cache block 52. Memory block 352 is

also stored in cache block 52 (but not at the same time, of

course).

-

In real systems the number of blocks in the cache is a power

of 2 so taking modulo is just extracting low order bits.

-

Example: if the cache has 16 blocks, the location of a block in

the cache is the low order 4 bits of block number.

-

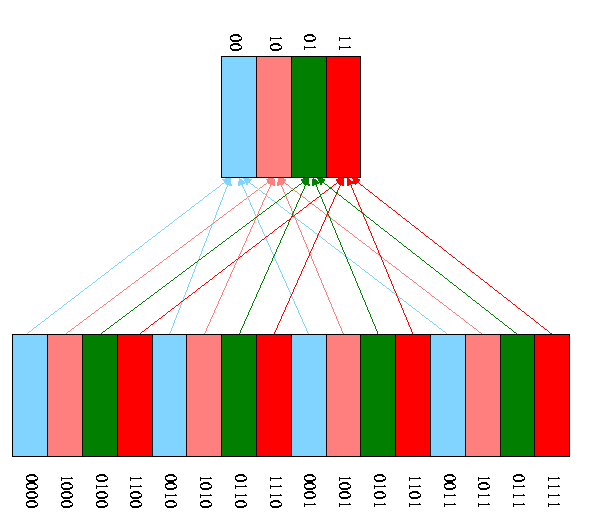

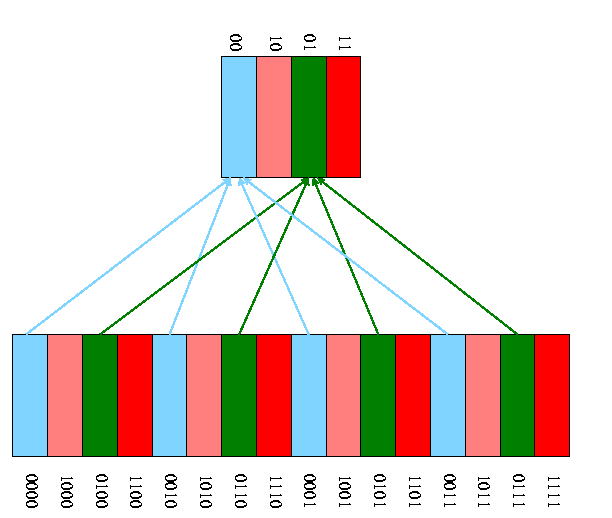

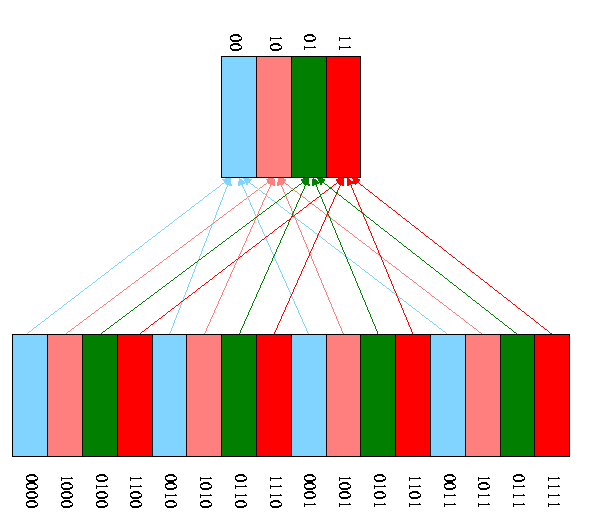

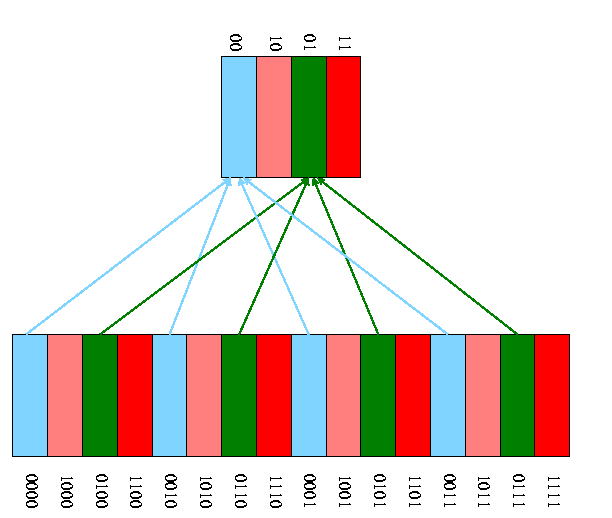

A pictorial example for a cache with only 4 blocks and a memory

with only 16 blocks.