Computer Architecture

1999-2000 Fall

MW 3:30-4:45

Ciww 109

Allan Gottlieb

gottlieb@nyu.edu

http://allan.ultra.nyu.edu/~gottlieb

715 Broadway, Room 1001

212-998-3344

609-951-2707

email is best

0: Administrivia

Web Pages

There is a web page for the course. You can find it from my home

page, which is http://allan.ultra.nyu.edu/~gottlieb

- I will soon mirror my home page on the CS web site

- You can find these notes there on the course home page.

Please let me know if you can't find it.

- The notes will be updated as bugs are found.

- I will also produce a separate page for each lecture after the

lecture is given. These individual pages

might not get updated as quickly as the large page.

Textbook

Text is Hennessy and Patterson ``Computer Organization and Design

The Hardware/Software Interface'', 2nd edition.

- Available in the bookstore.

- Used last year so probably used copies exist

- The main body of the book assumes you know logic design.

- I do NOT make that assumption.

- We will start with appendix B, which is logic design review.

- A more extensive treatment of logic design is M. Morris Mano

``Computer System Architecture'', Prentice Hall.

- We will not need as much as Mano covers and it is not a cheap book so

I am not requiring you to get it. I will have it put into the library.

- My treatment will follow H&P, not Mano.

- Most of the figures in these notes are based on figures from the

course textbook. The following copyright notice applies.

``All figures from Computer Organization and Design:

The Hardware/Software Approach, Second Edition, by

David Patterson and John Hennessy, are copyrighted

material (COPYRIGHT 1998 MORGAN KAUFMANN

PUBLISHERS, INC. ALL RIGHTS RESERVED).

Figures may be reproduced only for classroom or

personal educational use in conjunction with the book

and only when the above copyright line is included. They

may not be otherwise reproduced, distributed, or

incorporated into other works without the prior written

consent of the publisher.''

Computer Accounts and mailman mailing list

- You are entitled to a computer account, get it.

- Sign up for the course mailman mailing list.

http://www.cs.nyu.edu/mailman/listinfo/v22_0436_001_fl00

- If you want to send mail to me, use gottlieb@nyu.edu not

the mailing list.

- You may do assignments on any system you wish, but ...

- You are responsible for the machine. I extend deadlines if

the nyu machines are down, not if yours is.

- Be sure to upload your assignments to the

nyu systems.

- If somehow your assignment is misplaced by me or a grader,

we need to have a copy ON AN NYU SYSTEM

that can be used to verify the date the lab was completed.

- When you complete a lab (and have it on an nyu system), do

not edit those files. Indeed, put the lab in a separate

directory and keep out of the directory. You do not want to

alter the dates.

Homeworks and Labs

I make a distinction between homework and labs.

Labs are

- Required

- Due several lectures later (date given on assignment)

- Graded and form part of your final grade

- Penalized for lateness

Homeworks are

- Optional

- Due beginning of Next lecture

- Not accepted late

- Mostly from the book

- Collected and returned

- Can help, but not hurt, your grade

Upper left board for assignments and announcements

Appendix B: Logic Design

Homework: Read B1

B.2: Gates, Truth Tables and Logic Equations

Homework: Read B2

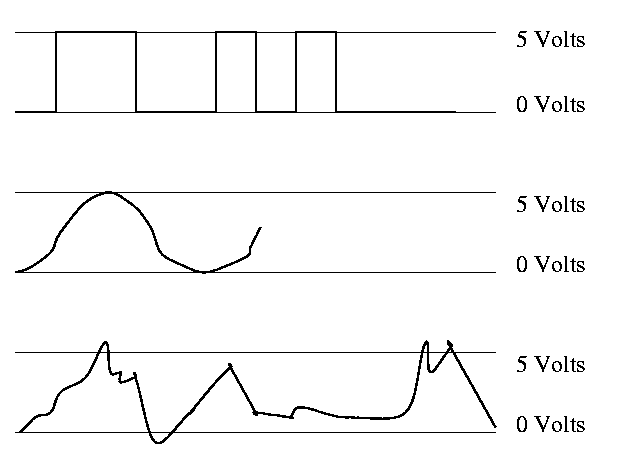

Digital ==> Discrete

Primarily (but NOT exclusively) binary at the hardware level

Use only two voltages--high and low.

- This hides a great deal of engineering.

- Must make sure not to sample the signal when not in one of these two states.

- Sometimes it is just a matter of waiting long enough

(determines the clock rate i.e. how many megahertz).

- Other times it is worse and you must avoid glitches.

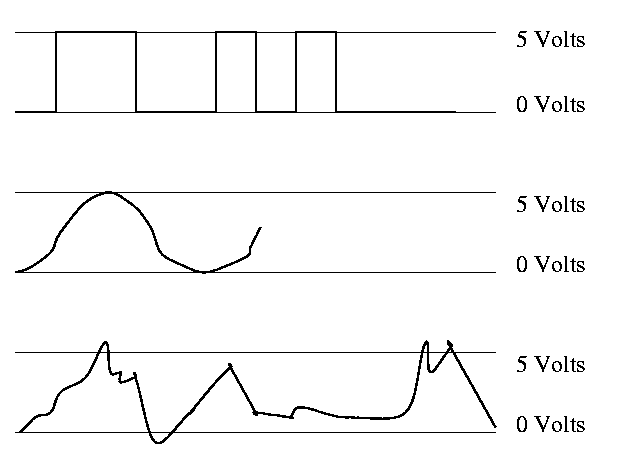

- Oscilloscope traces shown below.

- Vertical axis is voltage; horizontal axis is time.

- Square wave--the ideal. How we think of circuits

- (Poorly drawn) Sine wave

- Actual wave

- Non-zero rise times and fall times

- Overshoots and undershoots

- Glitches

Since this is not an engineering course, we will ignore these

issues and assume square waves.

In English digital implies 10 (based on digit, i.e. finger),

but not in computers.

Bit = Binary digIT

Instead of saying high voltage and low voltage, we say true and false

or 1 and 0 or asserted and deasserted.

0 and 1 are called complements of each other.

A logic block can be thought of as a black box that takes signals in

and produces signals out. There are two kinds of blocks

- Combinational (or combinatorial)

- Does NOT have memory elements.

- Is simpler than circuits with memory since the outputs are a

function of the inputs. That is, if the same inputs are presented on

Monday and Tuesday, the same outputs will result.

- Sequential

- Contains memory.

- The current value in the memory is called the state of the block.

- The output depends on the input AND the state.

We are doing combinational blocks now. Will do sequential blocks

later (in a few lectures).

TRUTH TABLES

Since combinatorial logic has no memory, it is simply a function from

its inputs to its outputs. A

Truth Table has as columns all inputs

and all outputs. It has one row for each possible set of input values

and the output columns have the output for that input. Let's start

with a really simple case: a logic block with one input and one output.

There are two columns (1 + 1) and two rows (2**1).

In Out

0 ?

1 ?

How many are there?

How many different truth tables are there for a ``one in one out''

logic block?

Just 4: The constant functions 1 and 0, the identity, and an inverter

(pictures in a few minutes). There were two `?'s in the above table;

each can be a 0 or 1, so there are 2**2 possibilities.

OK. Now how about two inputs and 1 output.

Three columns (2+1) and 4 rows (2**2).

In1 In2 Out

0 0 ?

0 1 ?

1 0 ?

1 1 ?

How many are there? It is just the number of ways you can fill in the

output entries, i.e. the question marks. There are 4 output entries,

so the answer is 2**4=16.

How about 2 in and 8 out?

- 10 cols

- 4 rows

- 2**(4*8)=4 billion possible

3 in and 8 out?

- 11 cols

- 8 rows

- 2**(8*8)=2**64 possible

n in and k out?

- n+k cols

- 2**n rows

- 2**([2**n]*k) possible

Gets big fast!
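The counting argument above can be checked mechanically. A minimal Python sketch (the function name `num_truth_tables` is ours, chosen for illustration): a table with n inputs and k outputs has 2**n rows and k output columns, hence (2**n)*k output entries, each independently 0 or 1.

```python
def num_truth_tables(n: int, k: int) -> int:
    """Number of distinct truth tables with n inputs and k outputs."""
    rows = 2 ** n          # one row per combination of input values
    entries = rows * k     # output entries to fill in (the ?'s)
    return 2 ** entries    # each entry is independently 0 or 1

for n, k in [(1, 1), (2, 1), (2, 8), (3, 8)]:
    print(n, "in,", k, "out:", num_truth_tables(n, k))
```

The last two cases print 2**32 (about 4 billion) and 2**64, matching the counts above.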

Boolean algebra

Certain logic functions (i.e. truth tables) are quite common and

familiar.

We use a notation that looks like algebra to express logic functions and

expressions involving them.

The notation is called Boolean algebra in honor of

George Boole.

A Boolean value is a 1 or a 0.

A Boolean variable takes on Boolean values.

A Boolean function takes in boolean variables and produces boolean values.

- The (inclusive) OR Boolean function of two variables. Draw its

truth table. This is written + (e.g. X+Y where X and Y are Boolean

variables) and often called the logical sum. (Three out of four

output values in the truth table look right!)

- AND. Draw TT. Called logical product and written as a centered dot

(like product in regular algebra). All four values look right.

- NOT. Draw TT. This is a unary operator (One argument, not two

as above; functions with two inputs are called binary). Written A with a bar

over it. I will use ' instead of a bar as it is easier for me to type

in html.

- Exclusive OR (XOR). Written as + with a circle around it. True if

exactly one input is true (i.e., true XOR true = false). Draw TT.
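A small Python sketch that prints the truth tables just described, assuming the Boolean values are modeled as the integers 0 and 1 (so Python's `|`, `&`, and `^` act as OR, AND, and XOR on single bits; the helper names are illustrative):

```python
from itertools import product

def OR(a, b):
    return a | b

def AND(a, b):
    return a & b

def NOT(a):
    return 1 - a

def XOR(a, b):
    return a ^ b

print("A B | A+B  A.B  A XOR B")
for a, b in product((0, 1), repeat=2):
    print(a, b, "|", OR(a, b), "   ", AND(a, b), "   ", XOR(a, b))
print("A | A'")
for a in (0, 1):
    print(a, "|", NOT(a))
```

Note the OR row for 1,1 gives 1 (not 2), which is the one entry that does not "look right" compared with ordinary addition.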

Homework:

Consider the Boolean function of 3 boolean variables that is true

if and only if exactly 1 of the three variables is true. Draw the TT.

Some manipulation laws. Remember this is Boolean ALGEBRA.

Identity:

- A+0 = 0+A = A

- A.1 = 1.A = A

- (using . for and)

Inverse:

- A+A' = A'+A = 1

- A.A' = A'.A = 0

- (using ' for not)

Both + and . are commutative so my identity and inverse examples

contained redundancy.

The name inverse law is somewhat funny since you

Add the inverse and get the identity for Product

or Multiply by the inverse and get the identity for Sum.

Associative:

- A+(B+C) = (A+B)+C

- A.(B.C)=(A.B).C

Due to the associative law we can write A.B.C since either order of

evaluation gives the same answer. Similarly we can write A+B+C.

We often elide the . so the product associative law is A(BC)=(AB)C.

So we better not have three variables A, B, and AB. In fact, we

normally use one letter variables.

Distributive:

- A(B+C)=AB+AC

- A+(BC)=(A+B)(A+C)

- Note that BOTH distributive laws hold UNLIKE ordinary arithmetic.

How does one prove these laws??

- Simple (but long). Write the TTs for each and see that the outputs

are the same.

- Prove the first distributive law on the board.
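The "write the TTs and compare" proof method is easy to automate. A minimal sketch, assuming 0/1 integers for Boolean values; `equivalent` is an illustrative helper name, not anything from the book. It checks the first distributive law and an associative law, plus a non-law for contrast.

```python
from itertools import product

def equivalent(f, g, nvars):
    """True if f and g agree on every row of the truth table."""
    return all(f(*row) == g(*row) for row in product((0, 1), repeat=nvars))

# First distributive law: A(B+C) = AB + AC
print(equivalent(lambda a, b, c: a & (b | c),
                 lambda a, b, c: (a & b) | (a & c), 3))   # True

# Associative law for +: A+(B+C) = (A+B)+C
print(equivalent(lambda a, b, c: a | (b | c),
                 lambda a, b, c: (a | b) | c, 3))         # True

# Not a law: A+B = AB (fails, e.g., for A=1, B=0)
print(equivalent(lambda a, b: a | b,
                 lambda a, b: a & b, 2))                  # False
```

Since n variables give only 2**n rows, this brute-force check is a complete proof for any fixed identity.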

Homework: Prove the second distributive law.

Let's do (on the board) the examples on pages B-5 and B-6.

Consider a logic function with three inputs A, B, and C; and three

outputs D, E, and F defined as follows: D is true if at least one

input is true, E if exactly two are true, and F if all three are true.

(Note that by ``if'' we mean ``if and only if''.)

Draw the truth table.

Show the logic equations.

- For E first use the obvious method of writing one condition

for each 1-value in the E column i.e.

(A'BC) + (AB'C) + (ABC')

- Observe that E is true if at least two (but not all three) inputs are true,

i.e.,

(AB+AC+BC) (ABC)' (using . higher precedence than +)
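A short Python sketch of this example, with 0/1 integers for Boolean values and illustrative function names. It prints the D, E, F truth table and checks that both expressions for E agree with "exactly two inputs are true" on every row.

```python
from itertools import product

def E_minterms(a, b, c):
    # A'BC + AB'C + ABC'
    na, nb, nc = 1 - a, 1 - b, 1 - c
    return (na & b & c) | (a & nb & c) | (a & b & nc)

def E_two_not_three(a, b, c):
    # (AB + AC + BC)(ABC)'
    return ((a & b) | (a & c) | (b & c)) & (1 - (a & b & c))

print("A B C || D E F")
for a, b, c in product((0, 1), repeat=3):
    d = a | b | c                  # D: at least one input true
    e = E_minterms(a, b, c)        # E: exactly two inputs true
    f = a & b & c                  # F: all three true
    assert e == E_two_not_three(a, b, c) == int(a + b + c == 2)
    print(a, b, c, "||", d, e, f)
```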

The first way we solved part E shows that any logic function

can be written using just AND, OR, and NOT. Indeed, it is in a nice

form. Called two levels of logic, i.e. it is a sum of products of

just inputs and their complements.
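The "one product term per 1-row" construction is purely mechanical. A minimal sketch, assuming single-letter variable names and the ' notation used in these notes (`sum_of_products` is an illustrative name):

```python
from itertools import product

def sum_of_products(names, f):
    """Two-level (sum of products) expression for f.

    One product term (minterm) per row where f is 1; a variable
    appears complemented (with ') when it is 0 in that row.
    """
    terms = []
    for row in product((0, 1), repeat=len(names)):
        if f(*row):
            terms.append("".join(n if v else n + "'" for n, v in zip(names, row)))
    return " + ".join(terms) if terms else "0"

# E from the example: true iff exactly two of A, B, C are true.
print(sum_of_products("ABC", lambda a, b, c: int(a + b + c == 2)))
# A'BC + AB'C + ABC'
```

The output reproduces the first expression for E. Note that the result is correct but not necessarily minimal.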

DeMorgan's laws:

- (A+B)' = A'B'

- (AB)' = A'+B'

You prove DM laws with TTs. Indeed that is ...

Homework: B.6 on page B-45.

Do beginning of HW on the board.

======== START LECTURE #2 ========

With DM (DeMorgan's Laws) we can do quite a bit without resorting to

TTs. For example one can show that the two expressions for E in the

example above (page B-6) are equal. Indeed that is

Homework: B.7 on page B-45

Do beginning of HW on board.

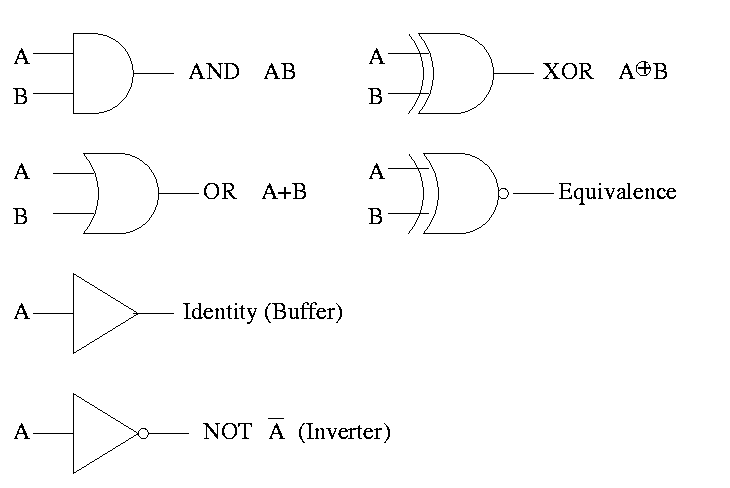

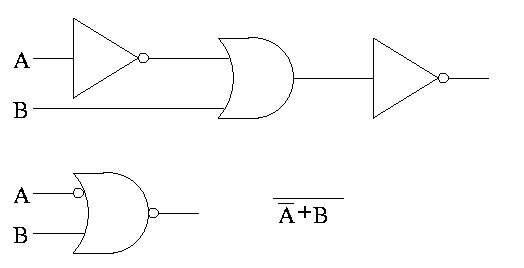

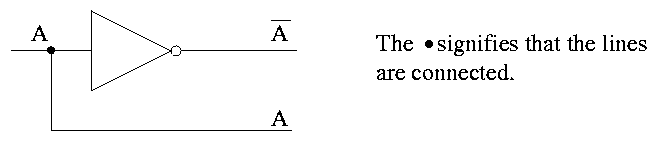

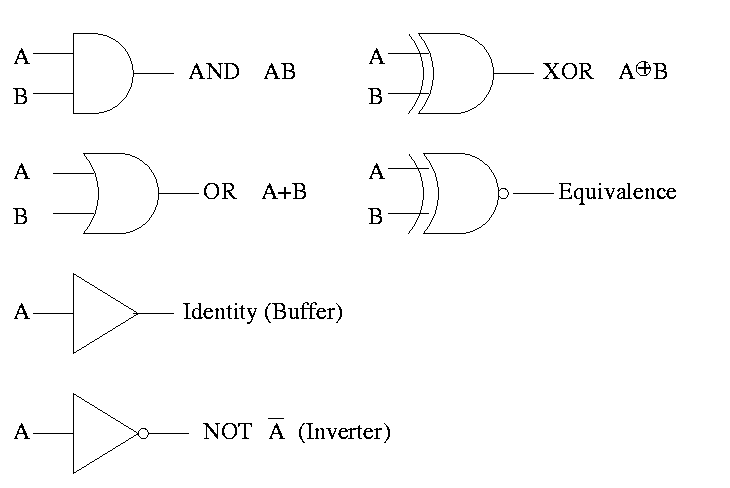

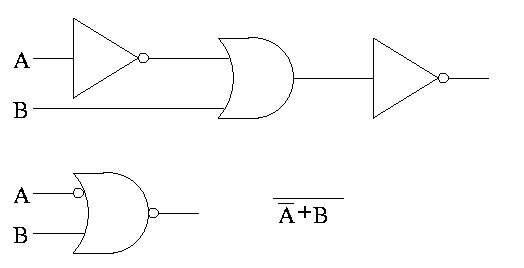

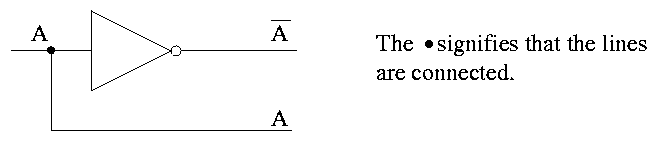

GATES

Gates implement basic logic functions: AND OR NOT XOR Equivalence

Often omit the inverters and draw the little circles at the input or

output of the other gates (AND OR). These little circles are

sometimes called bubbles.

This explains why the inverter is drawn as a buffer with a bubble.

Show why the picture for equivalence is the negation of XOR, i.e., (A

XOR B)' is AB + A'B':

(A XOR B)' =

(A'B+AB')' =

(A'B)' (AB')' =

(A''+B') (A'+B'') =

(A + B') (A' + B) =

AA' + AB + B'A' + B'B =

0 + AB + B'A' + 0 =

AB + A'B'

Homework: B.2 on page B-45 (I previously did the first part

of this homework).

Homework: Consider the Boolean function of 3 boolean vars

(i.e. a three input function) that is true if and only if exactly 1 of

the three variables is true. Draw the TT. Draw the logic diagram

with AND OR NOT. Draw the logic diagram with AND OR and bubbles.

A set of gates is called universal if these gates are

sufficient to generate all logic functions.

- We have seen that any logic function can be constructed from AND, OR, and NOT. So this triple is universal.

- Are there any pairs that are universal?

Ans: Sure, A+B = (A'B')', so we can get OR from AND and NOT. Hence the pair AND NOT is universal.

Similarly, we can get AND from OR and NOT (AB = (A'+B')'), and hence the pair OR NOT is universal.

- Could there possibly be a single function that is universal all by itself?

AND won't work as you can't get NOT from just AND.

OR won't work as you can't get NOT from just OR.

NOT won't work as you can't get AND from just NOT.

- But there indeed is a universal function! In fact there are two.

NOR (NOT OR) is true when OR is false. Do TT.

NAND (NOT AND) is true when AND is false. Do TT.

Draw two logic diagrams for each, one from the definition and an

equivalent one with bubbles.

Theorem

A 2-input NOR is universal and

a 2-input NAND is universal.

Proof

We must show that you can get A', A+B, and AB using just a two input

NOR.

- A' = A NOR A

- A+B = (A NOR B)' (we can use ' by above)

- AB = (A'+B')' = A' NOR B' (DeMorgan; we get A' and B' as above)
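The three constructions in the proof can be checked exhaustively. A minimal Python sketch (0/1 integers for Boolean values; function names are illustrative) that builds NOT, OR, and AND from a 2-input NOR and nothing else:

```python
from itertools import product

def NOR(a, b):
    return 1 - (a | b)

# Everything below uses only NOR.
def NOT(a):
    return NOR(a, a)              # A' = A NOR A

def OR(a, b):
    return NOT(NOR(a, b))         # A+B = (A NOR B)'

def AND(a, b):
    return NOR(NOT(a), NOT(b))    # AB = A' NOR B'

for a, b in product((0, 1), repeat=2):
    assert OR(a, b) == (a | b) and AND(a, b) == (a & b)
for a in (0, 1):
    assert NOT(a) == 1 - a
print("NOT, OR, and AND all built from 2-input NOR")
```

Since AND, OR, NOT is universal, this finishes the NOR half of the theorem; the NAND half is left as the homework.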

Homework: Show that a 2-input NAND is universal.

Can draw NAND and NOR each two ways (because (AB)' = A' + B' and (A+B)' = A'B')

We have seen how to get a logic function from a TT. Indeed we can

get one that is just two levels of logic. But it might not be the

simplest possible. That is, we may have more gates than are necessary.

Trying to minimize the number of gates is NOT trivial. Mano covers

the topic of gate minimization in detail. We will not cover it in

this course. It is not in H&P. I actually like it but must admit

that it takes a few lectures to cover well, and it is not used much in

practice by hand since it is algorithmic and is done automatically by CAD

tools.

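Minimization is not unique, i.e. there can be two or more ways to

simplify an expression, and even two or more minimal forms.

Given A'BC + ABC + ABC'

Combine first two to get BC + ABC'

Combine last two to get A'BC + AB

(Both of these simplify further, to AB + BC. A function with two genuinely different minimal forms is A'B' + BC' + AC, which also equals A'C' + B'C + AB.)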
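Checking these simplifications by truth table (a sketch; `same`, `form1`, `form2`, and `smaller` are illustrative names, with 0/1 integers for Boolean values) confirms that they all agree with the original expression, and also with the still smaller AB + BC:

```python
from itertools import product

def same(f, g):
    """True if the 3-input functions f and g agree on all 8 rows."""
    return all(f(*r) == g(*r) for r in product((0, 1), repeat=3))

def original(a, b, c):   # A'BC + ABC + ABC'
    return ((1 - a) & b & c) | (a & b & c) | (a & b & (1 - c))

def form1(a, b, c):      # BC + ABC'
    return (b & c) | (a & b & (1 - c))

def form2(a, b, c):      # A'BC + AB
    return ((1 - a) & b & c) | (a & b)

def smaller(a, b, c):    # AB + BC
    return (a & b) | (b & c)

print(same(original, form1), same(original, form2), same(original, smaller))
# True True True
```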

Sometimes when building a circuit, you don't care what the output is

for certain input values. For example, that input combination might

be known not to occur. Another example occurs when, for some

combination of input values, a later part of the circuit will ignore

the output of this part. These are called don't care

output situations. Making use of don't cares can reduce the

number of gates needed.

Can also have don't care inputs

when, for certain values of a subset of the inputs, the output is

already determined and you don't have to look at the remaining

inputs. We will see a case of this in the very next topic, multiplexors.

An aside on theory

Putting a circuit in disjunctive normal form (i.e. two levels of

logic) means that every path from the input to the output goes through

very few gates. In fact only two, an AND and an OR. Maybe we should

say three since the AND can have a NOT (bubble). Theoreticians call

this number (2 or 3 in our case) the depth of the circuit.

So we see that every logic function can be implemented with small

depth. But what about the width, i.e., the number of gates?

The news is bad. The parity function takes n inputs

and gives TRUE if and only if the number of TRUE inputs is odd.

If the depth is fixed (say limited to 3), the number of gates needed

for parity is exponential in n.
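The two-level (depth 2) case is easy to see concretely: flipping any single input of parity flips the output, so no two minterms can be merged, and the sum of products form needs one AND gate for every odd row, i.e. 2**(n-1) of them. A small Python sketch counting those minterms (it illustrates only the sum of products case; the depth-3 claim above is a much deeper theorem not demonstrated here):

```python
from itertools import product

def parity(bits):
    return sum(bits) % 2

def parity_minterms(n):
    """Number of minterms (AND gates) in the sum of products form of n-input parity."""
    return sum(parity(row) for row in product((0, 1), repeat=n))

for n in range(1, 9):
    print(n, parity_minterms(n))   # doubles each time: 2**(n-1)
```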

B.3 COMBINATIONAL LOGIC

Homework:

Read B.3.

Generic Homework:

Read sections in book corresponding to the lectures.

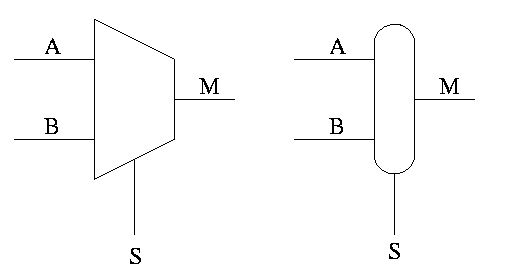

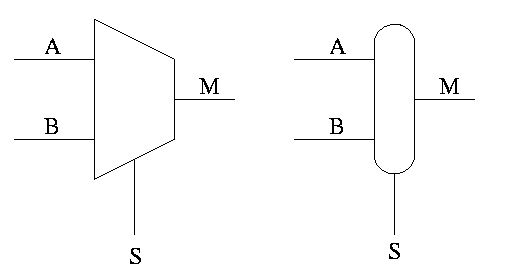

Multiplexor

Often called a mux or a selector

Show equiv circuit with AND OR

Hardware if-then-else

if S=0

M=A

else

M=B

endif
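The equivalent AND/OR circuit is M = S'A + SB. A minimal Python sketch (0/1 integers for Boolean values; `mux2` is an illustrative name) checking that this two-level form agrees with the if-then-else above for all 8 input combinations:

```python
from itertools import product

def mux2(s, a, b):
    """2-way mux as two-level logic: M = S'A + SB."""
    return ((1 - s) & a) | (s & b)

for s, a, b in product((0, 1), repeat=3):
    assert mux2(s, a, b) == (a if s == 0 else b)
```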

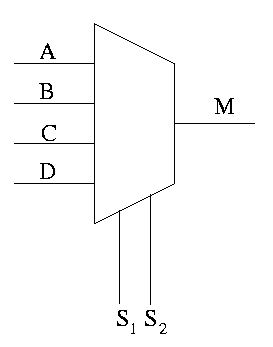

Can have 4 way mux (2 selector lines)

This is an if-then-elif-elif-else

if S1=0 and S2=0

M=A

elif S1=0 and S2=1

M=B

elif S1=1 and S2=0

M=C

else -- S1=1 and S2=1

M=D

endif
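The 4-way mux is again a sum of products, with one AND term per selector value. A Python sketch under the same 0/1 assumption (names are illustrative), compared against the if-elif chain on all 64 input combinations:

```python
from itertools import product

def mux4(s1, s2, a, b, c, d):
    """4-way mux: S1'S2'A + S1'S2B + S1S2'C + S1S2D."""
    n1, n2 = 1 - s1, 1 - s2
    return (n1 & n2 & a) | (n1 & s2 & b) | (s1 & n2 & c) | (s1 & s2 & d)

def mux4_if(s1, s2, a, b, c, d):
    if s1 == 0 and s2 == 0:
        return a
    elif s1 == 0 and s2 == 1:
        return b
    elif s1 == 1 and s2 == 0:
        return c
    else:  # S1=1 and S2=1
        return d

assert all(mux4(*v) == mux4_if(*v) for v in product((0, 1), repeat=6))
```

For any fixed selector value only one data input matters; the other three are exactly the input don't cares in the TT.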

Do a TT for 2 way mux. Redo it with don't care values.

Do a TT for 4 way mux with don't care values.

Homework:

B.12.

B.5 (Assume you have constant signals 1 and 0 as well.)

======== START LECTURE #3 ========

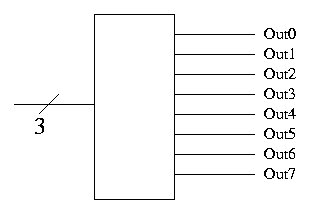

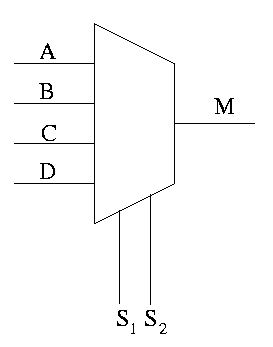

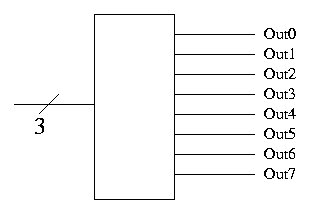

Decoder

- Note the ``3'' with a slash, which signifies a three bit input.

This notation represents three (1-bit) wires.

- A decoder with n input bits produces 2^n output bits.

- View the input as ``k written as an n-bit binary number'' and

view the output as 2^n bits with the k-th bit set and all the

other bits clear.

- Implement on board with AND/OR.

- Why do we use decoders and encoders?

- The encoded form takes (MANY) fewer bits so is better for

communication.

- The decoded form is easier to work with in hardware since

there is no direct way to test if 3 wires represent a 5

(101); you would have to test each wire. But it is easy to see

if the decoded form is a five (00100000): just look at wire 5.

Encoder

- Reverse "function" of decoder.

- Not defined for all inputs (exactly one must be 1)
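A behavioral Python sketch of a decoder and encoder (illustrative names; output wires are held in a list indexed from wire 0). The encoder raises an error on the inputs for which it is not defined.

```python
def decoder(k, n):
    """n-bit input k -> list of 2**n output wires with only wire k asserted."""
    return [1 if i == k else 0 for i in range(2 ** n)]

def encoder(wires):
    """Reverse of decoder; defined only when exactly one wire is 1."""
    if sum(wires) != 1:
        raise ValueError("encoder input must have exactly one wire asserted")
    return wires.index(1)

outs = decoder(5, 3)
print(outs)                                  # [0, 0, 0, 0, 0, 1, 0, 0]
print("".join(map(str, reversed(outs))))     # 00100000 (wire 7 on the left)
print(encoder(outs))                         # 5
```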

Sneaky way to see that NAND is universal.

- First show that you can get NOT from NAND. Hence we can build

inverters.

- Now imagine that you are asked to do a circuit for some function

with N inputs. Assume you have only one output.

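- Using inverters you can get 2N signals: the N original and N

complemented.

- Recall that the natural sum of products form is a bunch of ANDs

feeding into one OR.

- Naturally you can add pairs of bubbles since they ``cancel'': put one at the output of each AND and one at the corresponding input of the OR.

- But these are all NANDs!! (An OR with bubbled inputs is a NAND, by DeMorgan.)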
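As a concrete instance of this argument, here is XOR = A'B + AB' built from NANDs only. It is a Python sketch with 0/1 integers; the multi-input `NAND` helper is an illustrative name.

```python
from itertools import product

def NAND(*xs):
    """Multi-input NAND: 0 only when every input is 1."""
    return 1 - int(all(xs))

def NOT(a):
    return NAND(a, a)          # inverter from a NAND

def xor_nand_only(a, b):
    # XOR = A'B + AB'.  Each AND gets a bubble on its output (a NAND);
    # the OR gets bubbles on its inputs, which is also a NAND.
    return NAND(NAND(NOT(a), b), NAND(a, NOT(b)))

assert all(xor_nand_only(a, b) == a ^ b for a, b in product((0, 1), repeat=2))
```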

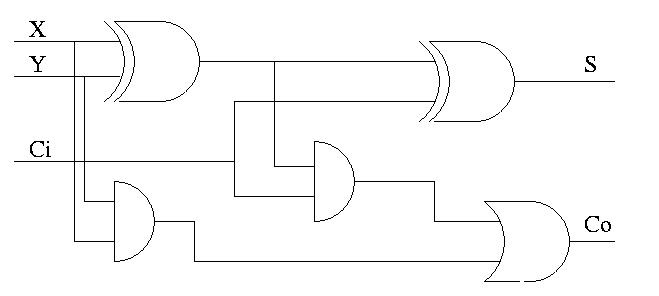

Half Adder

- Two 1-bit inputs: X and Y

- Two 1-bit outputs S and Co (carry out)

- No carry in

- Draw TT
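A minimal Python sketch of the half adder's truth table (0/1 integers; `half_adder` is an illustrative name). The check makes the key point explicit: read as a 2-bit number, Co S equals X + Y.

```python
from itertools import product

def half_adder(x, y):
    """Return (S, Co) for two 1-bit inputs."""
    s = x ^ y      # sum bit: 1 when exactly one input is 1
    co = x & y     # carry out: 1 only when both inputs are 1
    return s, co

print("X Y || S Co")
for x, y in product((0, 1), repeat=2):
    s, co = half_adder(x, y)
    assert 2 * co + s == x + y
    print(x, y, "||", s, co)
```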

Homework: Draw logic diagram

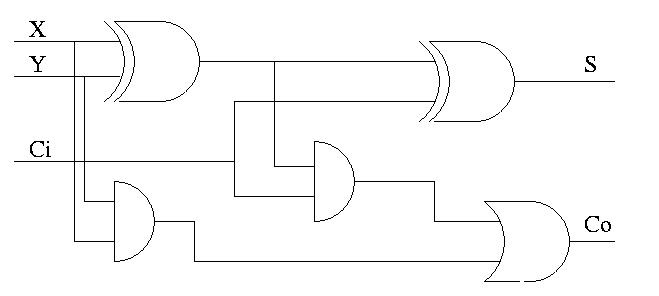

Full Adder

- Three 1-bit inputs: X, Y and Ci.

- Two 1-bit outputs: S and Co

- S = ``the total number of 1s in X, Y, and Ci is odd''

- Co = ``the total number of 1s is at least 2''

Homework:

- Draw TT (8 rows)

- Show S = X XOR Y XOR Ci

- Show Co = XY + (X XOR Y)Ci

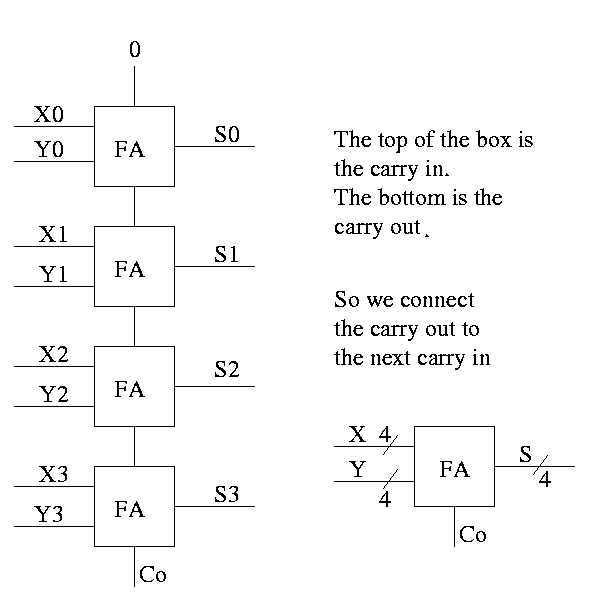

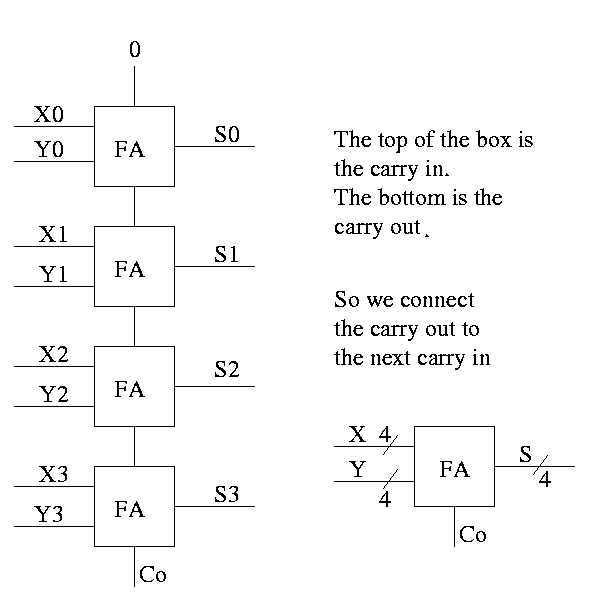

How about 4 bit adder ?

How about an n-bit adder ?

- Linear complexity, i.e. the time for a 64-bit add is twice

that for a 32-bit add.

- Called ripple carry since the carry ripples down the circuit

from the low order bit to the high order bit. This is why the

circuit has linear complexity.

- Faster methods exist. Indeed we will learn one soon.
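A behavioral sketch of an n-bit ripple-carry adder in Python, built from full adders that use the formulas S = X XOR Y XOR Ci and Co = XY + (X XOR Y)Ci from the homework above. Operands are taken as unsigned Python ints (an assumption for illustration). The loop makes the linear complexity visible: bit i cannot finish until the carry from bit i-1 arrives.

```python
def full_adder(x, y, ci):
    s = x ^ y ^ ci
    co = (x & y) | ((x ^ y) & ci)
    return s, co

def ripple_add(a, b, nbits):
    """Add two nbits-wide unsigned integers with a chain of full adders.

    The carry out of bit i is the carry in of bit i+1, so the carry
    ripples from the low-order bit to the high-order bit.
    Returns (sum mod 2**nbits, final carry out).
    """
    carry = 0
    result = 0
    for i in range(nbits):
        x = (a >> i) & 1
        y = (b >> i) & 1
        s, carry = full_adder(x, y, carry)
        result |= s << i
    return result, carry

print(ripple_add(11, 6, 4))   # (1, 1): 11 + 6 = 17 = 16 + 1
```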

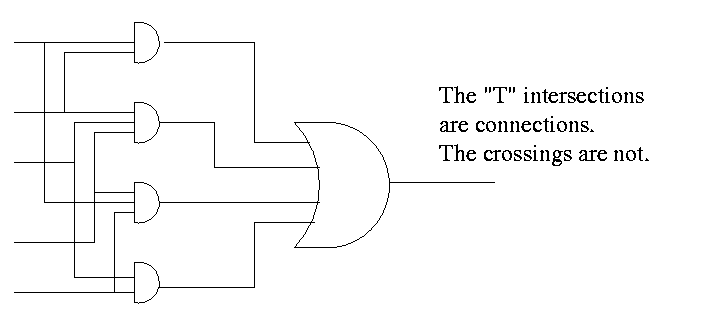

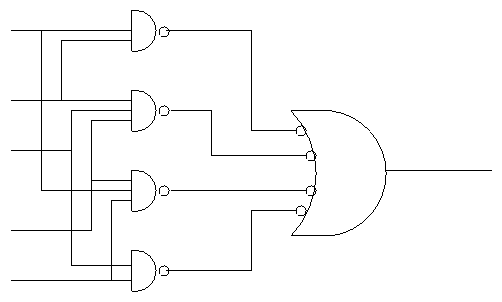

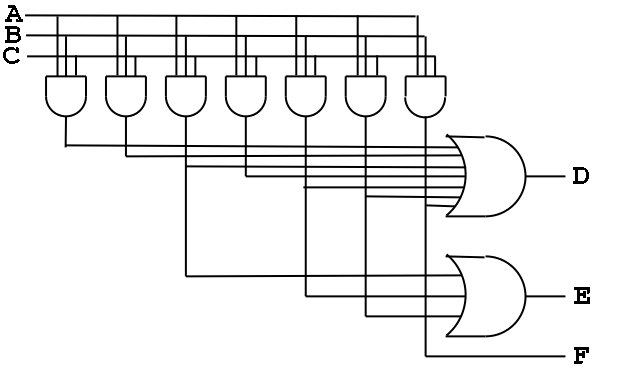

PLAs--Programmable Logic Arrays

Idea is to make use of the algorithmic way you can look at a TT and

produce a circuit diagram in the sum of products form.

Consider the following TT from the book (page B-13)

A | B | C || D | E | F

--+---+---++---+---+--

0 | 0 | 0 || 0 | 0 | 0

0 | 0 | 1 || 1 | 0 | 0

0 | 1 | 0 || 1 | 0 | 0

0 | 1 | 1 || 1 | 1 | 0

1 | 0 | 0 || 1 | 0 | 0

1 | 0 | 1 || 1 | 1 | 0

1 | 1 | 0 || 1 | 1 | 0

1 | 1 | 1 || 1 | 0 | 1

- Recall how we construct a circuit from a truth table.

- The circuit is in sum of products form.

- There is a big OR for each output. The OR has one

input for each row that the output is true.

- Since there are 7 rows for which at least one output is true,

there are 7 product terms that will be used in one

or more of the ORs (in fact all seven will be used in D, but that is

special to this example)

- Each of these product terms is called a Minterm

- So we need a bunch of ANDs (in fact, seven, one for each minterm)

taking A, B, C, A', B', and C' as inputs.

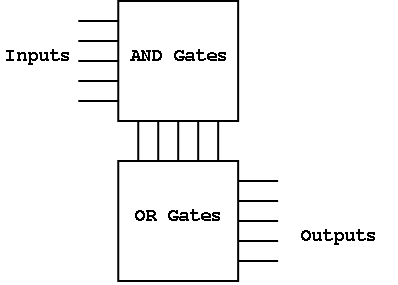

- This is called the AND plane and the collection of

ORs mentioned above is called the OR plane.
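The two planes can be modeled directly. In this Python sketch (the names `MINTERMS`, `OR_PLANE`, `minterm`, and `pla` are illustrative), the AND plane is a list of seven minterm patterns for the B-13 truth table, and the OR plane says which minterms feed each output; the "dots" are exactly the entries of these two tables.

```python
from itertools import product

# AND plane: the (A, B, C) values that make each minterm true.
MINTERMS = [(0, 0, 1), (0, 1, 0), (0, 1, 1), (1, 0, 0),
            (1, 0, 1), (1, 1, 0), (1, 1, 1)]

# OR plane: for each output, the indices of the minterms it ORs together.
OR_PLANE = {
    "D": [0, 1, 2, 3, 4, 5, 6],   # all seven
    "E": [2, 4, 5],               # A'BC, AB'C, ABC'
    "F": [6],                     # ABC
}

def minterm(inputs, pattern):
    # AND of the inputs, each taken true or complemented per the pattern.
    result = 1
    for x, p in zip(inputs, pattern):
        result &= x if p else 1 - x
    return result

def pla(a, b, c):
    m = [minterm((a, b, c), t) for t in MINTERMS]          # AND plane
    return {out: int(any(m[i] for i in idx))               # OR plane
            for out, idx in OR_PLANE.items()}

for a, b, c in product((0, 1), repeat=3):
    print(a, b, c, pla(a, b, c))
```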

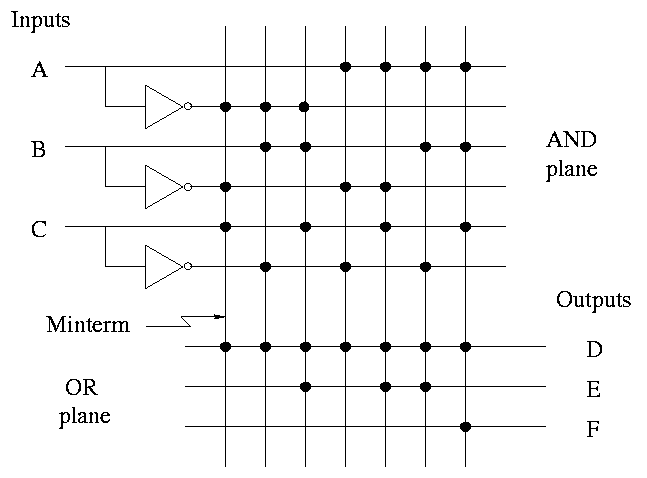

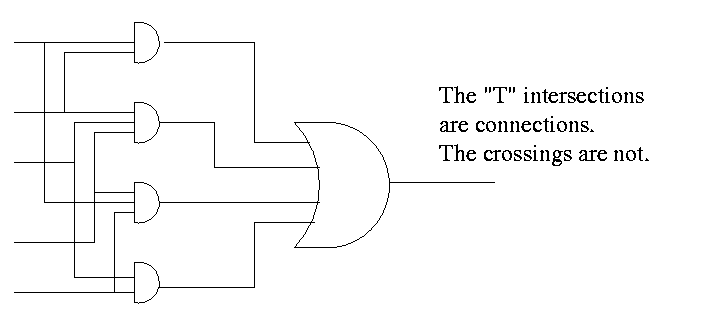

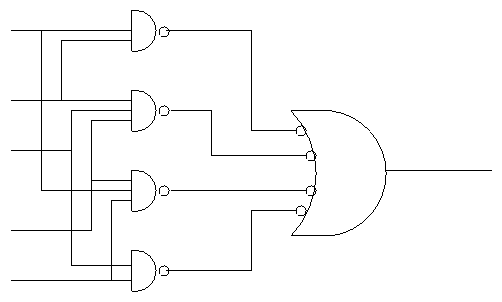

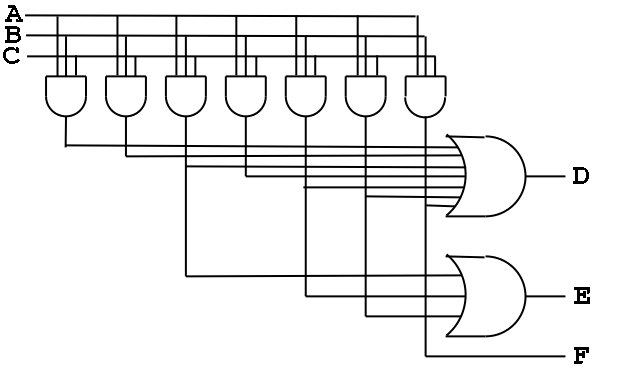

Here is the circuit diagram for this truth table.

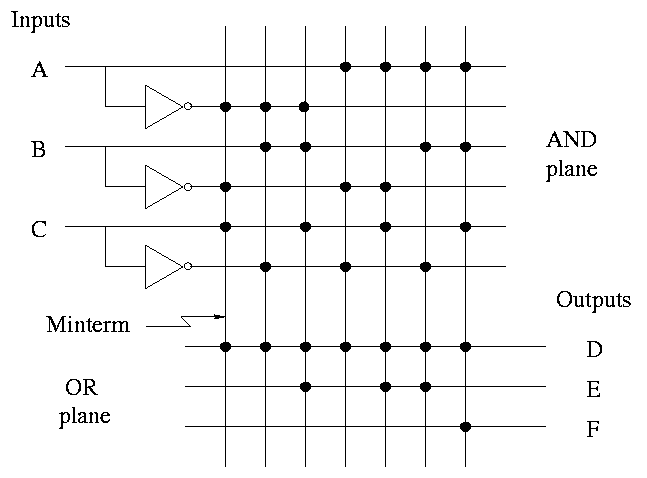

Here it is redrawn in a more schematic style.

- This figure shows more clearly the AND plane, the OR plane, and

the minterms.

- Rather than having bubbles (i.e., custom AND gates that invert

certain inputs), we

simply invert each input once and send the inverted signal all the way

across.

- AND gates are shown as vertical lines; ORs as horizontal.

- Note the dots to represent connections.

- Imagine building a bunch of these but not yet specifying where the

dots go. This would be a generic precursor to a PLA.

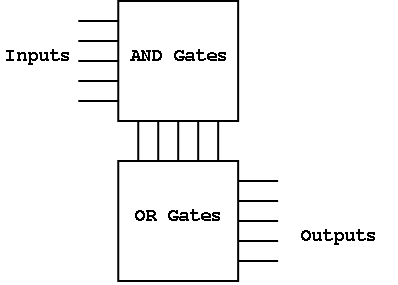

Finally, it can be redrawn in a more abstract form.

Before a PLA is manufactured all the connections are specified.

That is, a PLA is specific for a given circuit. It is somewhat of a

misnomer since it is not programmable by the user.

Homework: B.10 and B.11

Can also have a PAL or Programmable array logic

in which the final dots are specified

by the user. The manufacturer produces a ``sea of gates''; the user

programs it to the desired logic function by adding the dots.

======== START LECTURE #4 ========

ROMs

One way to implement a mathematical (or C) function (without side

effects) is to perform a table lookup.

A ROM (Read Only Memory) is the analogous way to implement a logic

function.

- For a math function f we start with x and get f(x).

- For a ROM we start with the address and get the value stored at

that address.

- Normally math functions are defined for an infinite number of

values, for example f(x) = 3x for all real numbers x

- We can't build an infinite ROM (sorry), so we are only interested

in functions defined for a finite number of values. Today a million

is OK a billion is too big.

- How do we create a ROM for the function f(3)=4, f(6)=20 all other

values don't care?

Simply have the ROM store 4 in address 3 and 20 in address 6.

- Consider a function defined for all n-bit numbers (say n=20) and

having a k-bit output for each input.

- View an n-bit input as n 1-bit inputs.

- View a k-bit output as k 1-bit outputs.

- Since there are 2^n possible inputs and each requires k 1-bit outputs,

there are a total of (2^n)k bits of output, i.e. the ROM must hold

(2^n)k bits.

- Now consider a truth table with n inputs and k outputs.

The total number of output bits is again (2^n)k (2^n rows and k output

columns).

- Thus the ROM implements a truth table, i.e. is a logic function.
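A small Python sketch of "a ROM is a truth table": precompute one k-bit word for every n-bit address, then every evaluation is just a lookup. As an illustrative assumption, the address bits are A (high), B, C (low), and the stored 3-bit word packs the D, E, F outputs of the B-13 example; `make_rom` and `abc_to_def` are hypothetical names.

```python
def make_rom(f, n):
    """ROM contents: the word at address x holds f(x), for every n-bit x."""
    return [f(addr) for addr in range(2 ** n)]

def abc_to_def(addr):
    a, b, c = (addr >> 2) & 1, (addr >> 1) & 1, addr & 1
    d = a | b | c                # at least one input true
    e = int(a + b + c == 2)      # exactly two true
    f = a & b & c                # all three true
    return (d << 2) | (e << 1) | f

rom = make_rom(abc_to_def, 3)    # 2**3 words of k = 3 bits: (2**n)k = 24 bits
print([format(w, "03b") for w in rom])
# ['000', '100', '100', '110', '100', '110', '110', '101']
```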

Important: A ROM does not have state. It is

another combinational circuit. That is, it does not represent

``memory''. The reason is that once a ROM is manufactured, the output

depends only on the input.

A PROM

is a programmable ROM. That is you buy the ROM with ``nothing'' in

its memory and then before

it is placed in the circuit you load the memory, and never change it.

This is like a CD-R.

An EPROM is an erasable PROM. It costs more

but if you decide to change its memory this is possible (but is slow).

This is like a CD-RW.

``Normal'' EPROMs are erased by some ultraviolet light process. But

EEPROMs (electrically erasable PROMs) are faster and

are erased electrically.

All these EPROMS are erasable not writable, i.e. you can't just change

one bit.

A ROM is similar to PLA

- Both can implement any truth table, in principle.

- A 2Mx8 ROM can really implement any truth table with 21 inputs

(2^21=2M) and 8 outputs.

- It stores 2M bytes

- In ROM-speak, it has 21 address pins and 8 data pins

- A PLA with 21 inputs and 8 outputs might need to have 2M minterms

(AND gates).

- The number of minterms depends on the truth table itself.

- For normal TTs with 21 inputs the number of minterms is MUCH

less than 2^21.

- The PLA is manufactured with the number of minterms needed

- Compare a PAL with a PROM

- Both can in principle implement any TT

- Both are user programmable

- A PROM with n inputs and k outputs can implement any TT with n

inputs and k outputs.

- A PAL that you buy does not have enough gates for all

possibilities since most TTs with n inputs and k outputs don't

require nearly (2^n)k gates.

Don't Cares

- Sometimes not all the input and output entries in a TT are

needed. We indicate this with an X and it can result in a smaller

truth table.

- Input don't cares.

- The output doesn't depend on all inputs, i.e. the output has

the same value no matter what value this input has.

- We saw this when we did muxes

- Output don't cares

- For some input values, either output is OK.

- This input combination is impossible.

- For this input combination, the given output is not used

(perhaps it is ``muxed out'' downstream)

Example (from the book):

- If A or C is true, then D is true (independent of B).

- If A or B is true, then E is true.

- F is true if exactly one of the inputs is true, but we don't care

about the value of F if both D and E are true

Full truth table

A B C || D E F

----------++----------

0 0 0 || 0 0 0

0 0 1 || 1 0 1

0 1 0 || 0 1 1

0 1 1 || 1 1 0

1 0 0 || 1 1 1

1 0 1 || 1 1 0

1 1 0 || 1 1 0

1 1 1 || 1 1 0

This has 7 minterms.

Put in the output don't cares

A B C || D E F

----------++----------

0 0 0 || 0 0 0

0 0 1 || 1 0 1

0 1 0 || 0 1 1

0 1 1 || 1 1 X

1 0 0 || 1 1 X

1 0 1 || 1 1 X

1 1 0 || 1 1 X

1 1 1 || 1 1 X

Now do the input don't cares

- B=C=1 ==> D=E=11 ==> F=X ==> A=X

- A=1 ==> D=E=11 ==> F=X ==> B=C=X

A B C || D E F

----------++----------

0 0 0 || 0 0 0

0 0 1 || 1 0 1

0 1 0 || 0 1 1

X 1 1 || 1 1 X

1 X X || 1 1 X

These don't cares are important for logic minimization. Compare the

number of gates needed for the full TT and the reduced TT. There are

techniques for minimizing logic, but we will not cover them.
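The reduced table can be checked against the full one. In this Python sketch, `X = None` stands for a don't care (an illustrative choice): in an input column it matches either value, and in an output column either value is acceptable. Every one of the 8 input rows is covered by some reduced row, and every specified output agrees with the full truth table.

```python
from itertools import product

X = None  # don't care

def full_table(a, b, c):
    d = a | c                   # D: A or C
    e = a | b                   # E: A or B
    f = int(a + b + c == 1)     # F: exactly one input true
    return d, e, f

REDUCED = [
    ((0, 0, 0), (0, 0, 0)),
    ((0, 0, 1), (1, 0, 1)),
    ((0, 1, 0), (0, 1, 1)),
    ((X, 1, 1), (1, 1, X)),
    ((1, X, X), (1, 1, X)),
]

def lookup(row):
    """First reduced-table row whose input pattern matches row."""
    for pattern, outs in REDUCED:
        if all(p is X or p == v for p, v in zip(pattern, row)):
            return outs
    raise KeyError(row)

for row in product((0, 1), repeat=3):
    want, got = full_table(*row), lookup(row)
    assert all(g is X or g == w for g, w in zip(got, want)), row
print("reduced table is consistent with the full table")
```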

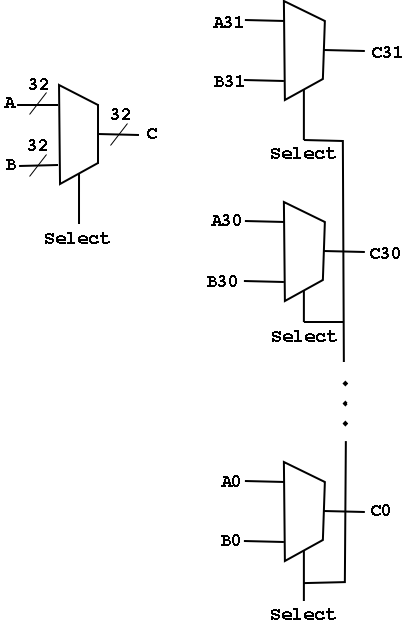

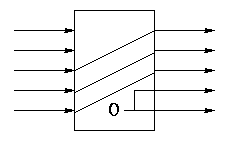

Arrays of Logic Elements

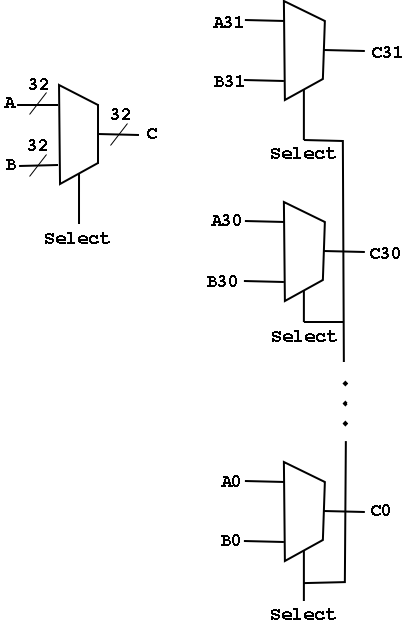

- Do the same thing to many signals

- Draw thicker lines and use the ``by n'' notation.

- Diagram below shows a 32-bit 2-way mux and an implementation with 32

1-bit, 2-way muxes.

- A Bus is a collection of data lines treated

as a single logical (n-bit) value.

- Use an array of logic elements to process a bus.

For example, the above mux switches between 2 32-bit buses.
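An array of logic elements in Python: a 32-bit 2-way mux made from 32 copies of the 1-bit mux, all sharing one select line. Buses are modeled as Python ints (an assumption for illustration); the function names are hypothetical.

```python
def mux2_bit(s, a, b):
    return ((1 - s) & a) | (s & b)

def mux2_bus(s, a, b, width=32):
    """width-bit 2-way mux: an array of width 1-bit muxes, common select s."""
    out = 0
    for i in range(width):
        out |= mux2_bit(s, (a >> i) & 1, (b >> i) & 1) << i
    return out

A, B = 0xDEADBEEF, 0x12345678
print(hex(mux2_bus(0, A, B)), hex(mux2_bus(1, A, B)))   # 0xdeadbeef 0x12345678
```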

*** Big Change Coming ***

Sequential Circuits, Memory, and State

Why do we want to have state?

- Memory (i.e. ram not just rom or prom)

- Counters

- Reducing gate count

- Multiplier would be quadratic in comb logic.

- With sequential logic (state) can do in linear.

- What follows is unofficial (i.e. too fast to

understand)

- Shift register holds partial sum

- Real slick is to share this shift reg with

multiplier

- We will do this circuit later in the course

Assume you have a real OR gate. Assume the two inputs are both

zero for an hour. At time t one input becomes 1. The output will

OSCILLATE for a while before settling on exactly 1. We want to be

sure we don't look at the answer before it's ready.

B.4: Clocks

Frequency and period

- Hertz (Hz), Megahertz, Gigahertz vs. Seconds, Microseconds,

Nanoseconds

- Old (descriptive) name for Hz is cycles per second (CPS)

- Rate vs. Time
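Rate and time are reciprocals: period = 1 / frequency. A small Python sketch of the conversion (the 500 MHz figure is just an illustrative value):

```python
def period_ns(freq_hz):
    """Clock period in nanoseconds for a clock rate in hertz."""
    return 1e9 / freq_hz

def freq_mhz(period_in_ns):
    """Clock rate in megahertz for a period in nanoseconds."""
    return 1e3 / period_in_ns

print(period_ns(500e6))   # 2.0   (500 MHz -> 2 ns)
print(freq_mhz(10))       # 100.0 (a 10 ns cycle -> 100 MHz)
```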

Edges

- Rising Edge; falling edge

- We use edge-triggered logic

- State changes occur only on a clock edge

- Will explain later what this really means

- One edge is called the Active edge

- The edge (rising or falling) on which changes occur

- Choice is technology dependent

- Sometimes trigger on both edges (e.g., RAMBUS or DDR memory)

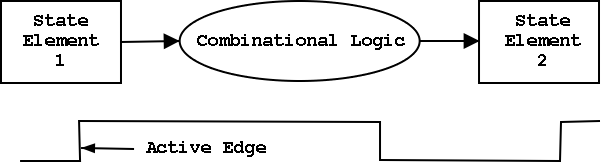

Synchronous system

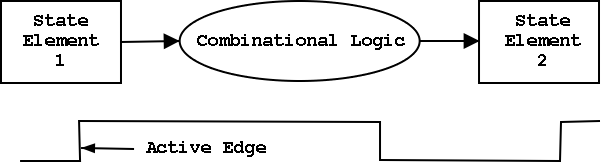

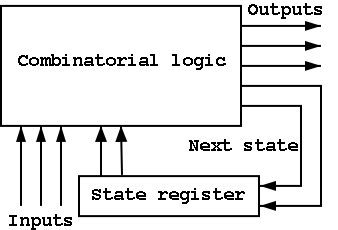

Now we are going to add state elements to the combinational

circuits we have been using previously.

Remember that a combinational/combinatorial circuit has its outputs

determined by its inputs, i.e. combinatorial circuits do not contain

state.

State elements include state (naturally).

- i.e., memory

- state-elements have clock as an input

- can change state only at active edge

- produce output Always; based on current state

- all signals that are written to state elements must be valid at

the time of the active edge.

- For example, if cycle time is 10ns make sure combinational circuit

used to compute new state values completes in 10ns

- So state elements change on active edge, comb circuit

stabilizes between active edges.

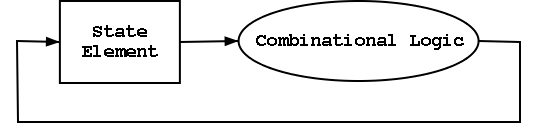

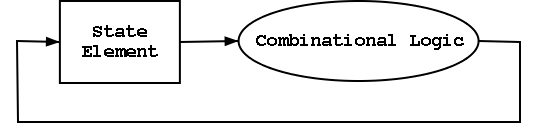

- Think of registers or memory as state elements.

- Can have loops like at the right.

- A loop like this is a cycle of the computer.

B.5: Memory Elements

We want edge-triggered clocked memory and will only use

edge-triggered clocked memory in our designs. However we get

there by stages. We first show how to build unclocked

memory; then using unclocked memory we build

level-sensitive clocked memory; finally from

level-sensitive clocked memory we build edge-triggered

clocked memory.

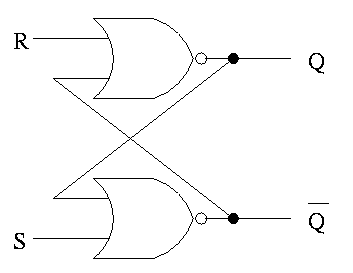

Unclocked Memory

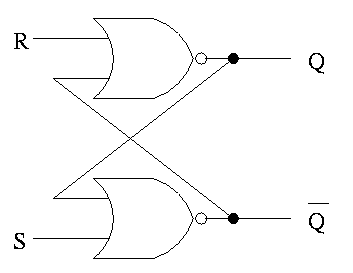

S-R latch (set-reset)

- ``Cross-coupled'' nor gates

- Don't assert both S and R at once

- When S is asserted (i.e., S=1 and R=0)

- the latch is Set (that's why it is called S)

- Q becomes true (Q is the output of the latch)

- Q' becomes false (Q' is the complemented output)

- When R is asserted

- the latch is Reset

- Q becomes false

- Q' becomes true

- When neither one is asserted

- The latch remains the same, i.e. Q and Q' stay as they

were

- This is the memory aspect
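A crude Python model of the cross-coupled NOR latch: Q = (R + Q')' and Q' = (S + Q)'. Starting from the previous outputs, both gates are re-evaluated until the outputs stop changing. The simultaneous-update rule and the 10-step limit are simplifying assumptions, not a model of real gate delays.

```python
def nor(a, b):
    return 1 - (a | b)

def sr_latch(s, r, q, qbar):
    """Settle the NOR latch from its previous outputs (q, qbar)."""
    for _ in range(10):
        new_q, new_qbar = nor(r, qbar), nor(s, q)
        if (new_q, new_qbar) == (q, qbar):
            return q, qbar
        q, qbar = new_q, new_qbar
    raise RuntimeError("latch did not settle")

state = (0, 1)                    # start reset: Q=0, Q'=1
state = sr_latch(1, 0, *state)    # set   -> (1, 0)
state = sr_latch(0, 0, *state)    # hold  -> (1, 0): the memory aspect
state = sr_latch(0, 1, *state)    # reset -> (0, 1)
print(state)
```

In this model, asserting S and R together forces Q = Q' = 0 (the outputs are no longer complements), and releasing both at once from that state makes the model oscillate, one way to see why both must not be asserted.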

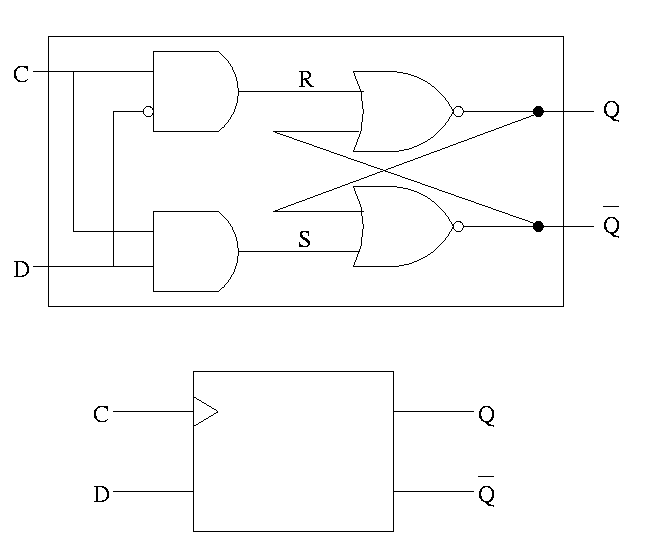

Clocked Memory: Flip-flops and latches

The S-R latch defined above is not clocked memory. Unfortunately the

terminology is not perfect.

For both flip-flops and

latches the output equals the value stored in the

structure. Both have an input and an output (and the complemented

output) and a clock input as well. The clock determines when the

internal value is set to the current input. For a latch, the change

occurs whenever the clock is asserted (level sensitive). For a

flip-flop, the change occurs at the active edge.

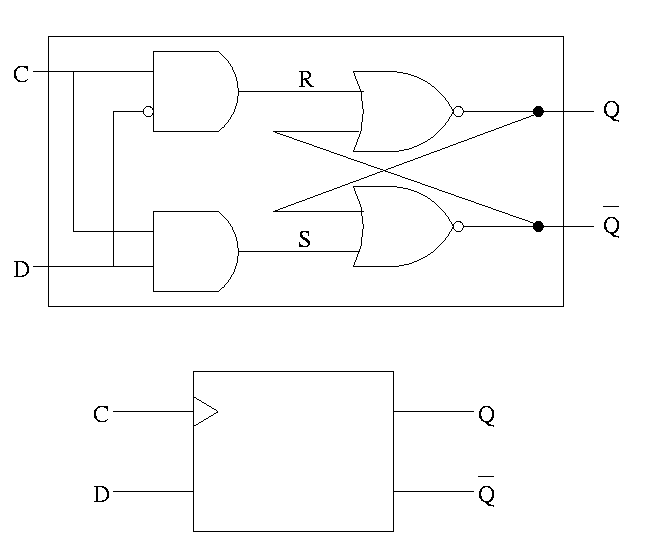

D latch

The D is for data

- The left part uses the clock.

- When the clock is low, both R and S are forced low.

- When the clock is high, S=D and R=D' so the value stored is D.

- Output changes when input changes and the clock is asserted.

- Level sensitive rather than edge triggered.

- Sometimes called a transparent latch.

- We won't use these in designs.

- The right hand part of the circuit is the S-R (unclocked) latch we

just constructed.

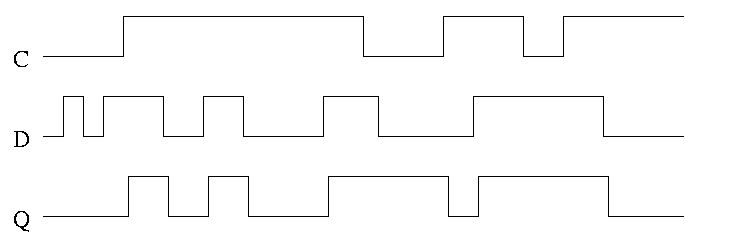

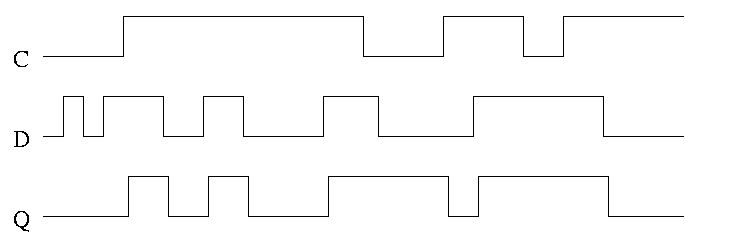

In the traces below notice how the output follows the input when the

clock is high and remains constant when the clock is low. We assume

the stored value is initially low.
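A sample-by-sample Python sketch of the transparent D latch (the clock and data sequences are made-up illustrative traces, and time is discretized into samples): while the clock is high the output follows D; while it is low the output holds.

```python
def d_latch_trace(clk, d, q0=0):
    """Output of a transparent D latch at each sample time."""
    q, out = q0, []
    for c, x in zip(clk, d):
        if c:          # clock high: transparent, Q follows D
            q = x
        out.append(q)  # clock low: Q holds its stored value
    return out

clk = [0, 1, 1, 1, 0, 0, 1, 1, 0, 0]
d = [1, 1, 0, 1, 0, 1, 1, 0, 1, 0]
print(d_latch_trace(clk, d))   # [0, 1, 0, 1, 1, 1, 1, 0, 0, 0]
```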

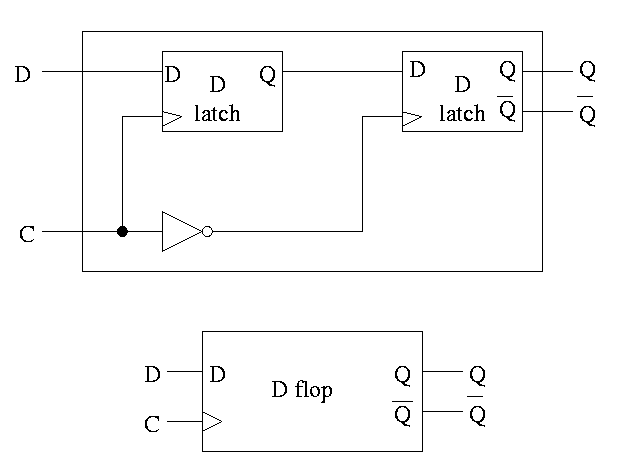

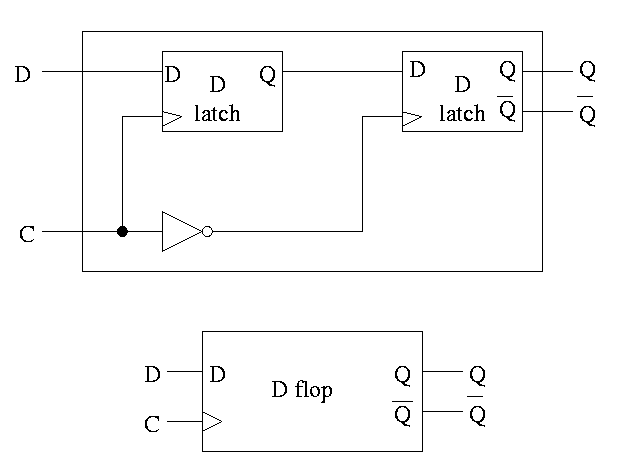

D or Master-Slave Flip-flop

This was our goal. We now have an edge-triggered, clocked memory.

- Built from D latches, which are transparent

- The result is Not transparent

- Changes on the active edge

- This one has the falling edge as active edge

- Sometimes called a master-slave flip-flop

- Note substructures with letters reused

having different meaning (block structure a la algol)

- Master latch (the left one) is set during the time clock is

asserted.

Remember that the latch is transparent, i.e. follows

its input when its clock is asserted. But the second

latch is ignoring its input at this time. When the

clock falls, the 2nd latch pays attention and the

first latch keeps producing whatever D was at

fall-time.
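The same discretized style of trace model, extended to two latches: the master is transparent while the clock is high, and the slave (fed the inverted clock) is transparent while it is low. This Python sketch uses hypothetical names and illustrative traces. With the same inputs as a single transparent latch, the output now changes only after a falling edge, to the value D had just before the fall.

```python
def master_slave_trace(clk, d, q0=0):
    """Falling-edge D flip-flop built from two D latches."""
    master = slave = q0
    out = []
    for c, x in zip(clk, d):
        if c:                # clock high: master follows D, slave holds
            master = x
        else:                # clock low: master holds, slave copies master
            slave = master
        out.append(slave)
    return out

clk = [0, 1, 1, 1, 0, 0, 1, 1, 0, 0]
d = [1, 1, 0, 1, 0, 1, 1, 0, 1, 0]
print(master_slave_trace(clk, d))   # [0, 0, 0, 0, 1, 1, 1, 1, 0, 0]
```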

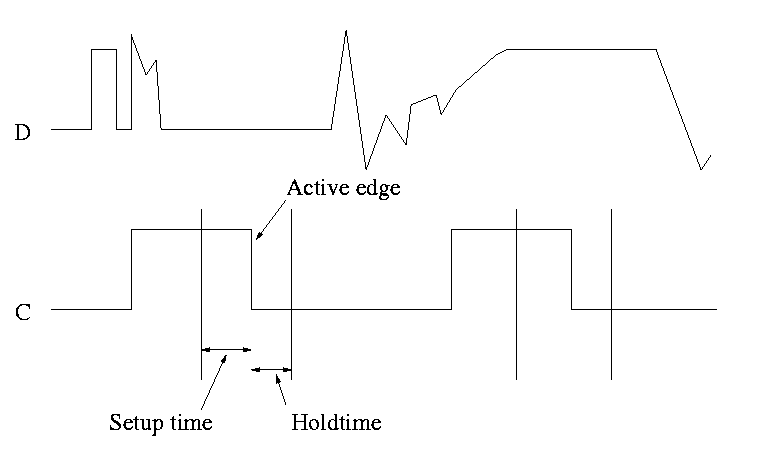

- Actually D must remain constant for some time around

the active edge.

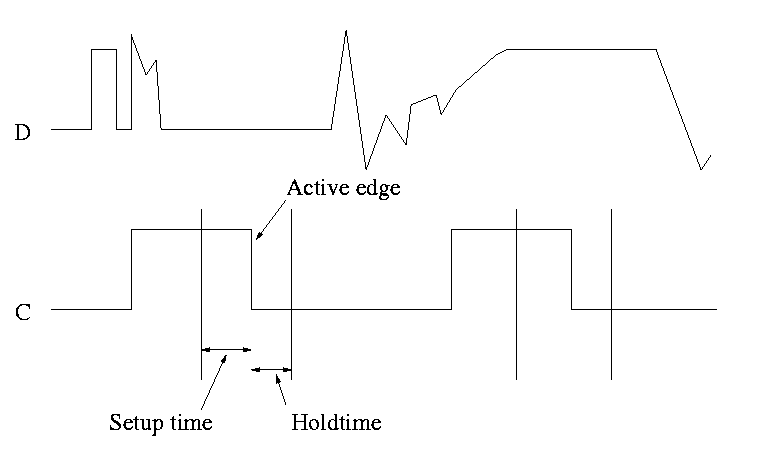

- The set-up time before the edge

- The hold time after the edge

- See diagram below

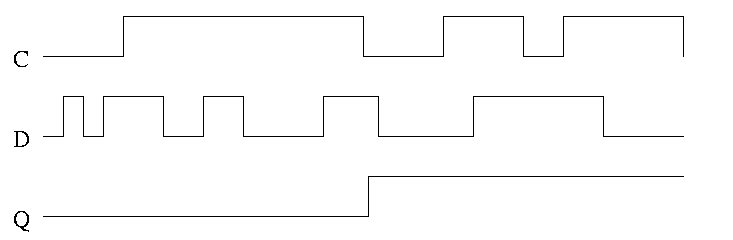

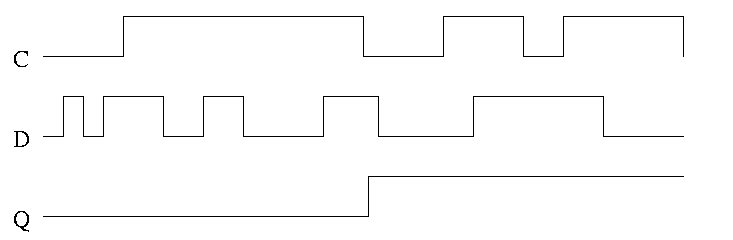

Note how much less wiggly the output is with the master-slave flop

than before with the transparent latch. As before we are assuming the

output is initially low.

Homework:

Try moving the inverter to the other latch

What has changed?

======== START LECTURE #5 ========

- This picture shows the setup and hold times discussed above.

- It is crucial when building circuits with flip flops that D is

stable during the interval between the setup and hold times.

- Note that D is wild outside the critical interval, but that is OK.

Homework:

B.18

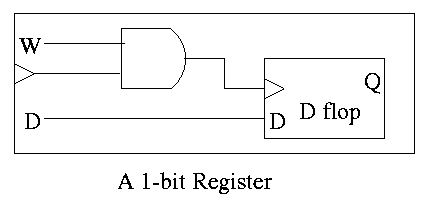

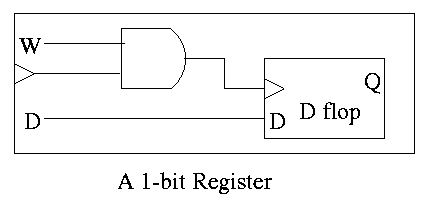

Registers

- Basically just an array of D flip-flops

- But what if you don't want to change the register during a

particular cycle?

- Introduce another input, the write line

- The write line is used to ``gate the clock''

- The book forgot the write line.

- Clearly if the write line is high forever, the clock input to

the register is passed right along to the D flop and hence the

input to the register is stored in the D flop when the active edge

occurs (for us the falling edge).

- Also clear is that if the write line is low forever, the clock

to the D flop is always low so has no edges and no writing occurs.

- But what about changing the write line?

- Assert or deassert the write line while the clock is low and

keep it at this value until the clock is low again.

- Not so good! Must have the write line correct quite a while

before the active edge. That is you must know whether you are

writing quite a while in advance.

- Better to do things so the write line must be correct when the

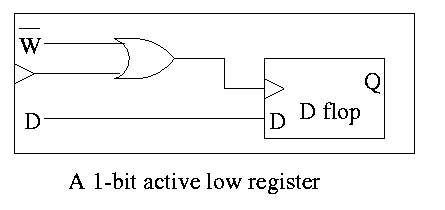

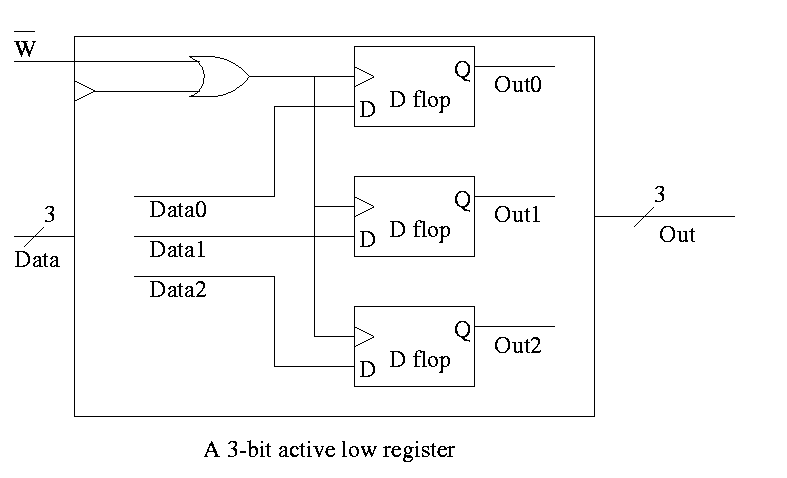

clock is high (i.e., just before the active edge

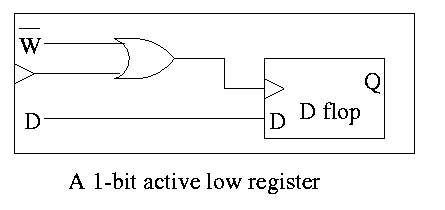

- An alternative is to use an active low write line,

i.e. have a W' input.

- Must have write line and data line valid during setup and hold

times

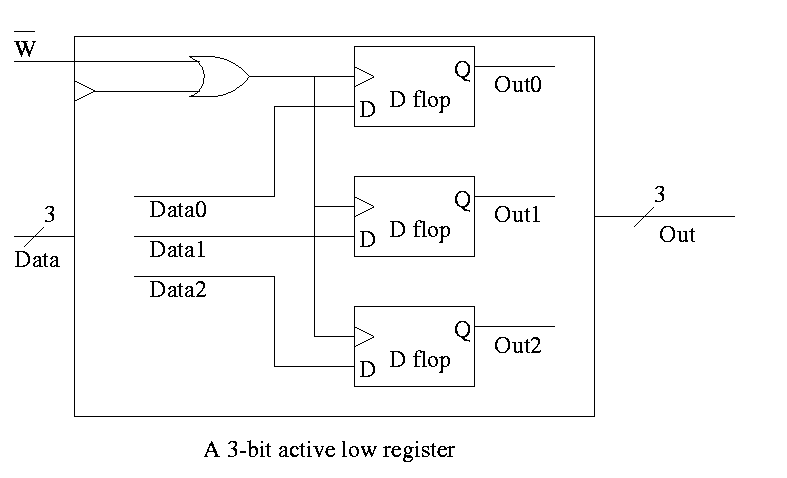

- To do a multibit register, just use multiple D flops.

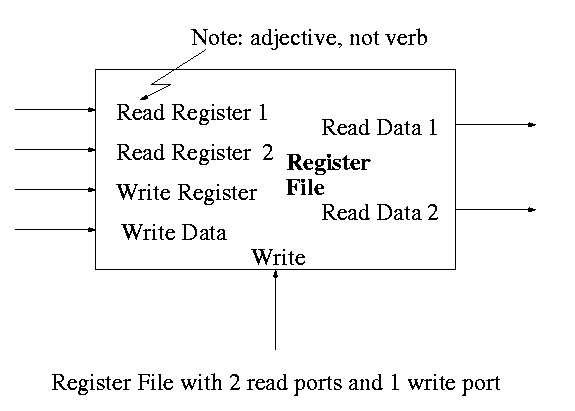

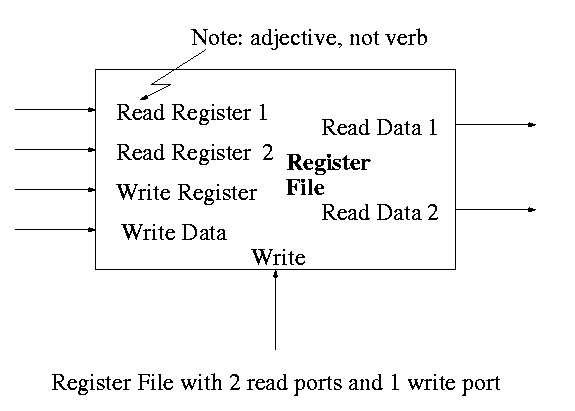

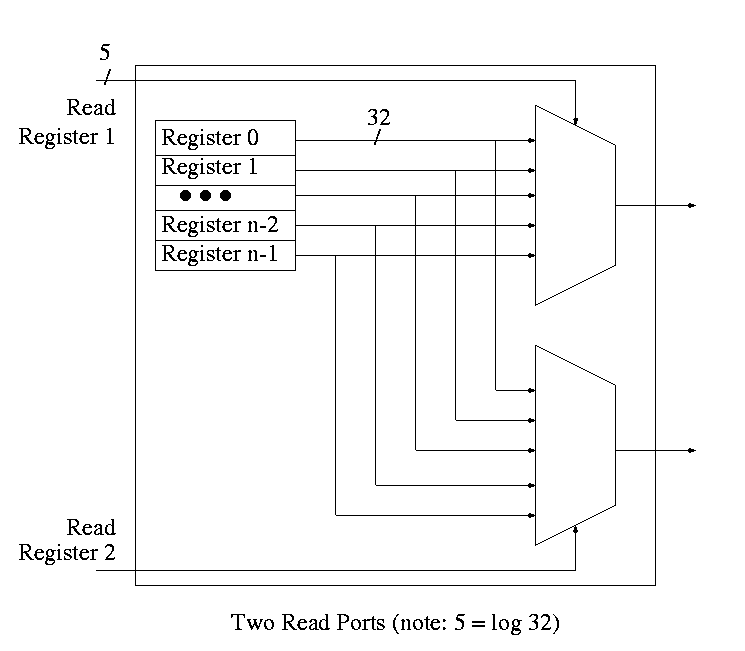

Register File

A set of registers, each numbered.

- Supply reg#, write line, and data (if a write)

- Can read and write the same register in the same cycle. You read the
old value, and then the written value replaces this old value for
subsequent cycles.

- Often have several read and write ports so that several

registers can be read and written during one cycle.

- We will do 2 read ports and one write port since that is
needed for ALU ops. This is NOT adequate for superscalar (or
EPIC) or any other system where more than one operation is to be
calculated each cycle.

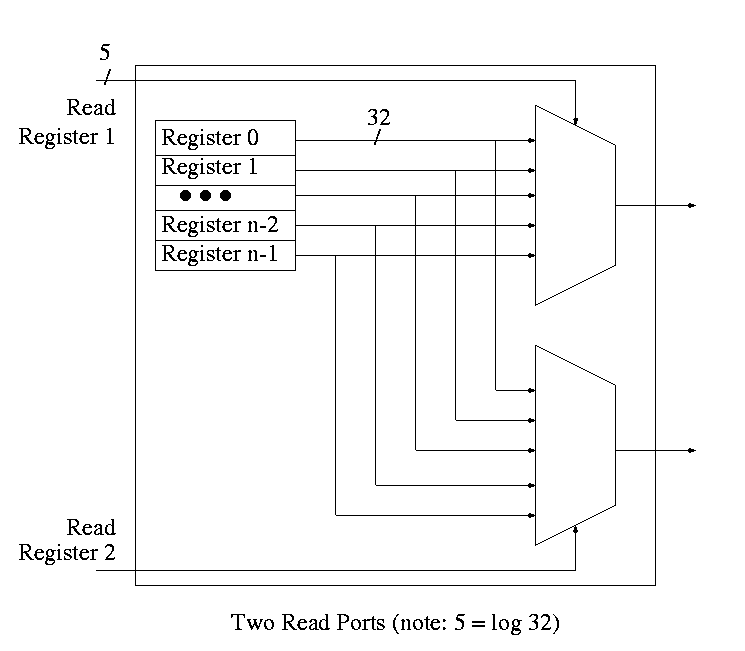

To read, we just need a mux to select the correct register from the
register file.

- Have one of these for each read port

- Each is an n to 1 mux, b bits wide; where

- n is the number of registers (32 for MIPS)

- b is the width of each register (32 for MIPS)

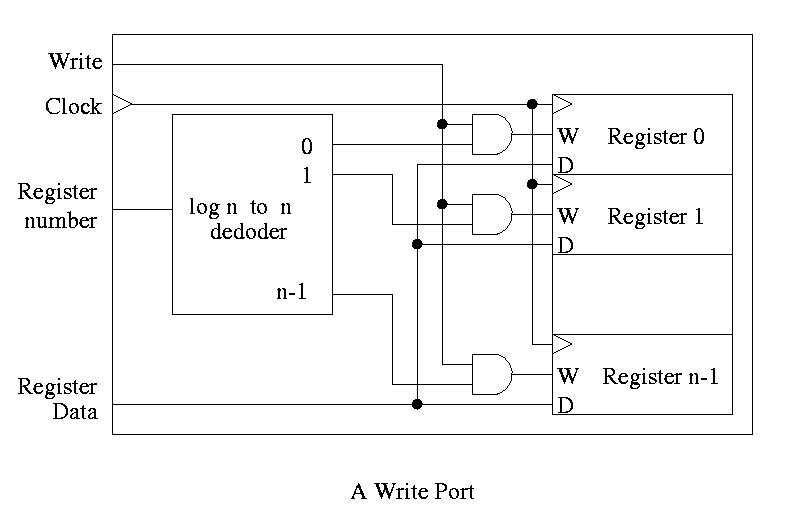

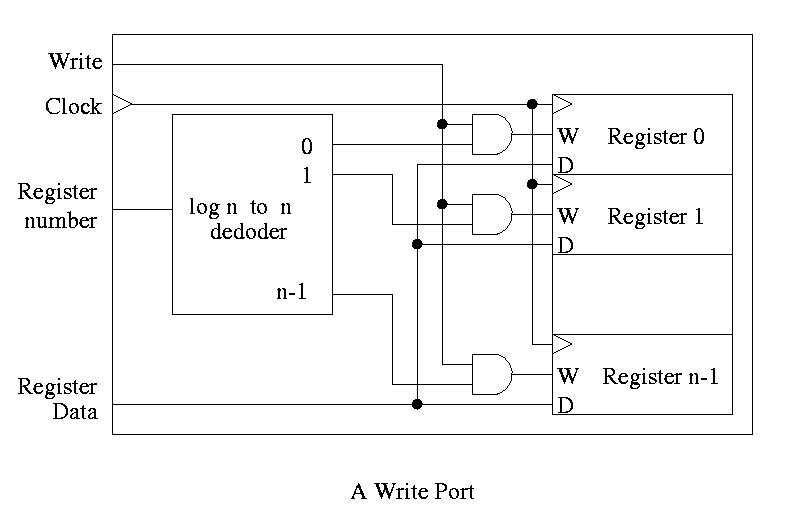

For writes, use a decoder on the register number to determine which
register to write. Note that 3 errors in the book's figure were fixed:

- decoder is log n to n

- decoder outputs numbered 0 to n-1 (NOT n)

- clock is needed

The idea is to gate the write line with the output of the decoder. In
particular, we should perform a write to register r this cycle provided
that the write line is asserted and r is the specified register. In
more detail:

- Recall that the inputs to a register are W, the write line; D, the
data to write (if the write line is asserted); and the clock.

- The clock to each register is simply the clock input to the

register file.

- The data to each register is simply the write data to the register file.

- The write line to each register is unique

- The register number is fed to a decoder.

- The rth output of the decoder is asserted if r is the

specified register.

- Hence we wish to write register r if

- The write line to the register file is asserted

- The rth output of the decoder is asserted

- Bingo! We just need an and gate.
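The read and write paths just described can be sketched behaviorally in C. The sketch assumes both reads happen before the write within a cycle, so a read of the register being written returns its old value. RegFile and rf_cycle are hypothetical names. Register 0 is an ordinary register here; MIPS hard-wires it to zero.

```c
#include <stdint.h>

#define NREGS 32  /* n = 32 registers (as for MIPS), each b = 32 bits wide */

typedef struct {
    uint32_t r[NREGS];
} RegFile;

/* One clock cycle of a register file with two read ports and one write port. */
void rf_cycle(RegFile *rf,
              unsigned read1, unsigned read2,  /* read register numbers */
              unsigned wreg, uint32_t wdata,   /* write register number and data */
              int write,                       /* the register file's write line */
              uint32_t *out1, uint32_t *out2) {
    /* Read ports: each is an n-to-1 mux, modeled here as an array index. */
    *out1 = rf->r[read1 % NREGS];
    *out2 = rf->r[read2 % NREGS];

    /* Write port: decoder output i is 1 only for i == wreg.  Register i's
       own write line is (decoder output i) AND (write line).  A 32-bit mask
       then picks either the new data or the old contents, mux style. */
    for (unsigned i = 0; i < NREGS; i++) {
        uint32_t decoder_out = (i == wreg % NREGS);
        uint32_t w = decoder_out & (uint32_t)(write != 0);  /* the and gate */
        uint32_t mask = (uint32_t)(0u - w);                  /* all ones iff w */
        rf->r[i] = (wdata & mask) | (rf->r[i] & ~mask);
    }
}
```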

Homework: 20

======== START LECTURE #6

========

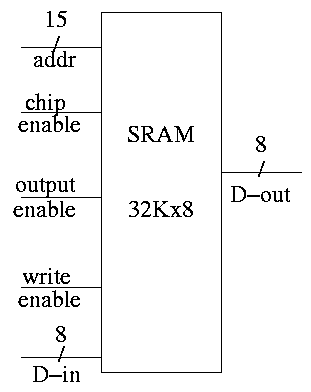

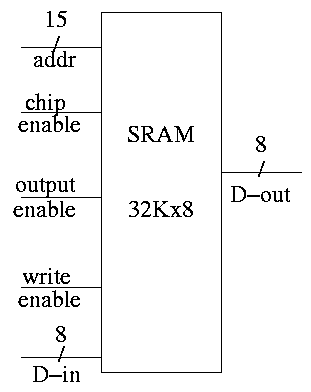

SRAMS and DRAMS

- External interface is on the right.

- 32Kx8 means it holds 32K words, each 8 bits.

- Addr, D-in, and D-out are the same as for registers. Addr is 15
bits since 2^15 = 32K. D-out is 8 bits since we have a by-8
SRAM.

- Write enable is similar to the write line (unofficial: it
is a pulse; there is no clock).

- Output enable is for the three state (tri-state) drivers

discussed just below (unofficial).

- Ignore chip enable (prefer not to have all chips enabled
for electrical reasons).

- (Sadly) we will not look inside officially. Following is

unofficial

- Conceptually, an SRAM is like a register file but we can't

use the register file implementation for a large SRAM because

there would be too many wires and the muxes would be too big.

- Two stage decode.

- A 32Kx8 SRAM would need a 15-to-32K decoder.

- Instead package the SRAM as eight 512x64 SRAMS.

- Pass 9 bits of the address through a 9-512 decoder and

use these 512 wires to select the appropriate 64-bit word

from each of the sub SRAMS. Use the remaining 6 bits to

select the appropriate bit from each 64-bit word.

- Tri-state buffers (drivers) used instead of a mux.

- I was fibbing when I said that signals always have a 1 or 0.

- However, we will not use tristate logic; we will use muxes.

- DRAM uses a version of the above two stage decode.

- View the memory as an array.

- First select (and save in a ``faster'' memory) an

entire row.

- Then select and output only one (or a few) column(s).

- So accesses to elements in the same row can be sped up.

- SRAM and ``logic'' are made from similar technologies but

DRAM technology is quite different.

- So easy to merge SRAM and CPU on one chip (SRAM

cache).

- Merging DRAM and CPU is more difficult but is now

being done.

- Error Correction (Omitted)
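The (unofficial) two-stage decode for the 32Kx8 SRAM can be sketched in C. It assumes address bits 14..6 go to the 9-to-512 decoder and bits 5..0 pick the bit within each 64-bit word; the notes do not say which address bits are used. Sram32Kx8, sram_read, and sram_write are hypothetical names. The loops over k stand in for the eight sub-SRAMs working in parallel.

```c
#include <stdint.h>

#define ROWS 512  /* 2^9 words of 64 bits in each sub-SRAM */

/* Eight 512x64 sub-SRAMs; sub[k] supplies bit k of every 8-bit word. */
typedef struct {
    uint64_t sub[8][ROWS];
} Sram32Kx8;

/* Read the 8-bit word at a 15-bit address. */
uint8_t sram_read(const Sram32Kx8 *m, uint16_t addr) {
    unsigned row = (addr >> 6) & 0x1FFu;  /* 9 bits: the 9-to-512 decode */
    unsigned col = addr & 0x3Fu;          /* 6 bits: 1 of the 64 bits   */
    uint8_t out = 0;
    for (int k = 0; k < 8; k++)
        out |= (uint8_t)(((m->sub[k][row] >> col) & 1u) << k);
    return out;
}

/* Write the 8-bit word at a 15-bit address, one bit into each sub-SRAM. */
void sram_write(Sram32Kx8 *m, uint16_t addr, uint8_t data) {
    unsigned row = (addr >> 6) & 0x1FFu;
    unsigned col = addr & 0x3Fu;
    for (int k = 0; k < 8; k++) {
        uint64_t bit = (uint64_t)((data >> k) & 1u) << col;
        m->sub[k][row] = (m->sub[k][row] & ~((uint64_t)1 << col)) | bit;
    }
}
```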

Note:

There are other kinds of flip-flops: T and J-K. Also one could learn
about excitation tables for each. We will not cover this
material (H&P doesn't either). If interested, see Mano.

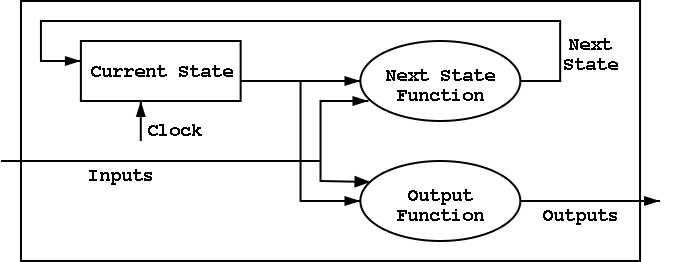

B.6: Finite State Machines

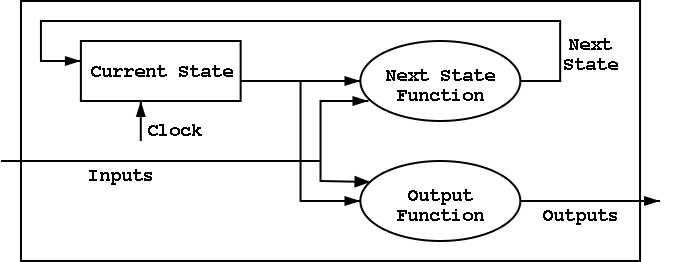

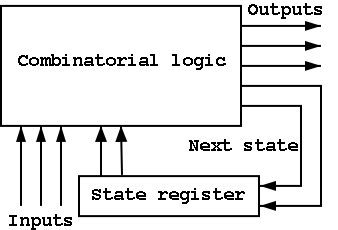

I do a different example from the book (counters instead of traffic

lights). The ideas are the same and the two generic pictures (below)

apply to both examples.

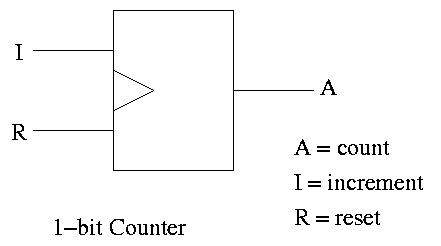

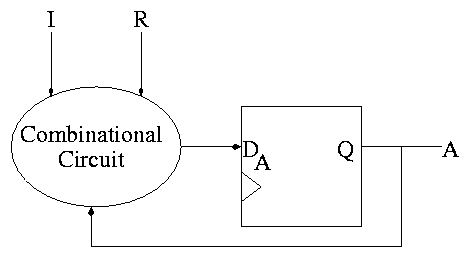

Counters

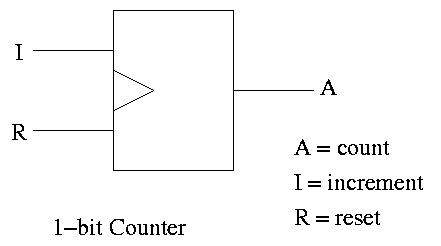

A counter counts (naturally).

- The counting is done in binary.

- Increments (i.e., counts) on clock ticks (active edge).

- Actually only on those clock ticks when the ``increment'' line is
asserted.

- If reset is asserted at a clock tick, the counter is reset to zero.

- What if both reset and increment are asserted?

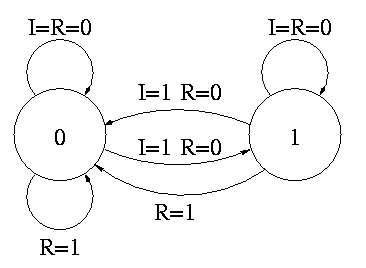

Ans: Shouldn't do that. Will accept any answer (i.e., don't care).

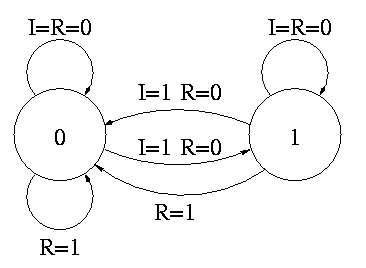

The state transition diagram

- The figure shows the state transition diagram for A, the output of

a 1-bit counter.

- In this implementation, if R=I=1 we choose to set A to zero. That

is, if Reset and Increment are both asserted, we do the Reset.

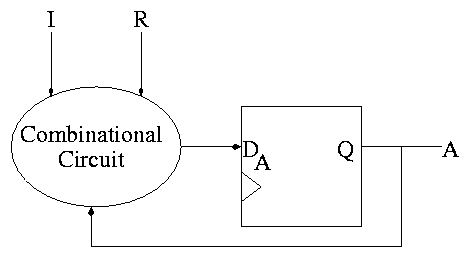

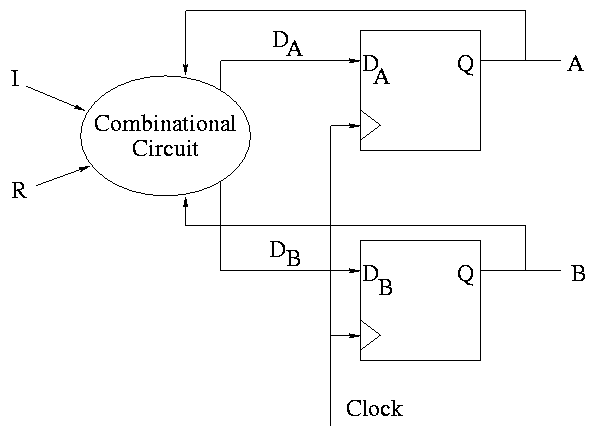

The circuit diagram.

- Uses one flop and a combinatorial circuit.

- The (combinatorial) circuit is determined by the transition diagram.

- The circuit must calculate the next value of A from the current

value and I and R.

- The flop producing A is often itself called A and the D input to this

flop is called DA (really D sub A).

How do we determine the combinatorial circuit?

- This circuit has three inputs, I, R, and the current A.

- It has one output, DA, which is the desired next A.

- So we draw a truth table, as before.

- For convenience I added the label Next A to the DA column

    Current  || Next A
    A I R    || DA      <-- i.e., to what must I set DA
    ---------++---          in order to get the desired
    0 0 0    || 0           Next A for the next cycle.
    1 0 0    || 1
    0 1 0    || 1
    1 1 0    || 0
    x x 1    || 0

But this table is simply the truth table for the combinatorial

circuit.

    A I R    || DA
    ---------++---
    0 0 0    || 0
    1 0 0    || 1
    0 1 0    || 1
    1 1 0    || 0
    x x 1    || 0

DA = R' (A XOR I)
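A quick way to confirm that DA = R' (A XOR I) matches the truth table is to evaluate it exhaustively in C. The sketch uses only gate operators, with ! for NOT, which anticipates the simulation rules given later in these notes. next_a is a hypothetical name.

```c
/* Next-state logic of the 1-bit counter: DA = R' (A XOR I). */
int next_a(int a, int i, int r) {
    int not_r = !r;      /* NOT gate */
    int t = a ^ i;       /* XOR gate */
    int da = not_r & t;  /* AND gate */
    return da;
}
```

Each of the four rows with R = 0 comes out as in the table, and every row with R = 1 gives 0.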

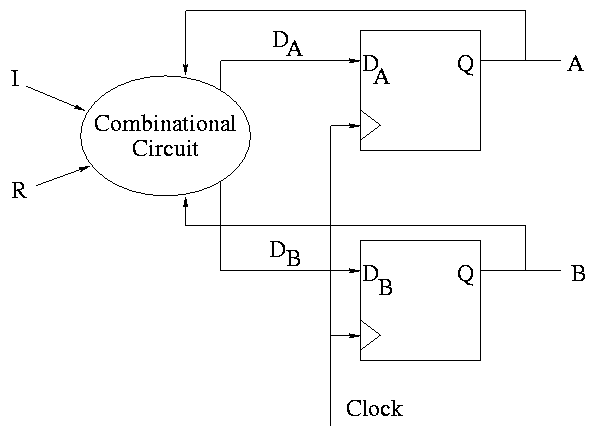

How about a two-bit counter?

- State diagram has 4 states 00, 01, 10, 11 and transitions from one

to another

- The circuit diagram has 2 D flops

To determine the combinatorial circuit we could proceed as before:

    Current  ||
    A B I R  || DA DB
    ---------++------

This would work, but we can instead think about how a counter works
(A is the low-order bit and B the high-order bit) and see that

DA = R'(A XOR I)

DB = R'(B XOR AI)
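These two equations can be exercised with a small C sketch, where A is the low-order bit and B the high-order bit. Both next-state values are computed from the current state before either flop changes, because both flops load on the same active edge. counter2_tick and counter2_value are hypothetical names.

```c
/* One clock tick of the 2-bit counter:
   DA = R'(A XOR I), DB = R'(B XOR AI). */
void counter2_tick(int *a, int *b, int i, int r) {
    int da = !r & (*a ^ i);
    int db = !r & (*b ^ (*a & i));
    *a = da;  /* both flops load together */
    *b = db;
}

/* The counter's value as a number 0..3. */
int counter2_value(int a, int b) {
    return 2 * b + a;
}
```

Starting from 0 with I = 1 and R = 0, repeated ticks give 1, 2, 3, 0, 1, ... . With I = 0 the value holds, and R = 1 forces 0 even when I = 1.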

Homework: B.23

B.7 Timing Methodologies

Skipped

======== START LECTURE #7

========

Simulating Combinatorial Circuits at the Gate Level

The idea is, given a circuit diagram, write a program that behaves the

way the circuit does. This means more than getting the same answer.

The program is to work the way the circuit does.

For each logic box, you write a procedure with the following properties.

- A parameter is defined for each input and output wire.

- A (local) variable is defined for each internal wire.

This really means a variable is defined for each signal. If a signal
is sent from one gate to, say, 3 others, you might not call all those
connections one wire, but it is one signal and is represented by
one variable.

- The only operations used are AND OR XOR NOT

- In the C language: & | ^ ! (do NOT use ~, which complements every
bit of an int, so ~1 is -2, not 0, on a two's complement machine)

- Other languages similar.

- Best is a language with variables and constants of type Boolean.

- An assignment statement (with an operator) corresponds to a

gate.

For example A = B & C; would mean that there is an AND gate with

input wires B and C and output wire A.

- NO conditional assignment.

- NO if then else statements.

Implement a mux using ANDs, ORs, and NOTs.

- Single assignment to each variable.

Multiple assignments would correspond to a cycle or to two outputs

connected to the same wire.

- A bus (i.e., a set of signals) is represented by an array.

- Testing

- Exhaustive possible for 1-bit cases.

- Cleverness for n-bit cases (n=32, say).
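Following these rules, the mux that the notes ask you to build from ANDs, ORs, and NOTs might look like the sketch below. Each signal has one variable, each assignment is one gate, every variable is assigned once, and there is no if/else. mux2 is a hypothetical name; select 0 picks a and select 1 picks b.

```c
/* 2-to-1 mux built from gates only: out = a when s is 0, b when s is 1. */
int mux2(int a, int b, int s) {
    int s_not = !s;      /* NOT gate */
    int t0 = a & s_not;  /* AND gate */
    int t1 = b & s;      /* AND gate */
    int out = t0 | t1;   /* OR gate  */
    return out;
}
```

With only three 1-bit inputs, exhaustive testing (all 8 cases) is easy.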

Simulating a Full Adder

Remember that a full adder has three inputs and two outputs. Hand

out hard copies of

FullAdder.c.

Simulating a 4-bit Adder

This implementation uses the full adder code above. Hand out hard

copies of FourBitAdder.c.
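FullAdder.c and FourBitAdder.c are not reproduced in these notes. The following is an independent sketch in the same style (one variable per signal, gate operators only, outputs passed back through parameters), not the handout code. The names are hypothetical, and index 0 of each bus array is the low-order bit.

```c
/* Full adder: sum = a XOR b XOR cin, cout = ab + cin(a XOR b). */
void full_adder(int a, int b, int cin, int *sum, int *cout) {
    int axb = a ^ b;
    int ab = a & b;
    int t = cin & axb;
    *sum = axb ^ cin;
    *cout = ab | t;
}

/* 4-bit ripple-carry adder from four full adders; buses are arrays. */
void four_bit_adder(const int a[4], const int b[4], int cin,
                    int s[4], int *cout) {
    int c1, c2, c3;
    full_adder(a[0], b[0], cin, &s[0], &c1);
    full_adder(a[1], b[1], c1, &s[1], &c2);
    full_adder(a[2], b[2], c2, &s[2], &c3);
    full_adder(a[3], b[3], c3, &s[3], cout);
}
```

A 4-bit adder has only 2^9 input combinations, so it can still be tested exhaustively.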

Lab 1: Simulating A 1-bit ALU

Hand out Lab 1, which is available in

text (without the diagram),

pdf, and

postscript.

Chapter 1: Computer Abstractions and Technologies

Homework:

READ chapter 1. Do 1.1 -- 1.26 (really one matching question)

Do 1.27 to 1.44 (another matching question),

1.45 (and do 10,000 RPM),

1.46, 1.50

Chapter 3: Instructions: Language of the Machine

Homework:

Read sections 3.1 3.2 3.3

3.4 Representing instructions in the Computer (MIPS)

Register file

- We just learned how to build this

- 32 Registers each 32 bits

- Register 0 is always 0 when read and stores to register 0 are ignored

Homework:

3.2.

The fields of a MIPS instruction are quite consistent

    op   rs   rt   rd   shamt   funct   <-- name of field
    6    5    5    5    5       6       <-- number of bits

- op is the opcode

- rs,rt are source operands

- rd is destination

- shamt is the shift amount

- funct is used for op=0 to distinguish alu ops

- alu is arithmetic and logic unit

- add/sub/and/or/not etc.

- We will see there are other formats (but similar to this one).

R-type instruction (R for register)

Example: add $1,$2,$3

- R-type instructions use the format above

- The example given has for its 6 fields

0--2--3--1--0--32

- op=0, alu op

- funct=32 specifies add

- reg1 <-- reg2 + reg3

- The regs can all be the same (doubles the value in the reg).

- Do sub by just changing the funct

- If the regs are the same for subtract, the instruction clears the register.

I-type (why I?)

    op   rs   rt   address
    6    5    5    16

- rs is a source reg.

- rt is the destination reg.

Examples: lw/sw $1,1000($2)

- $1 <-- Mem[$2+1000]

$1 --> Mem[$2+1000]

- Transfers to/from memory, normally in words (32-bits)

- But the machine is byte addressable!

- Then why have load/store word instead of byte?

Ans: It has load/store byte as well, but we don't cover it.

- What if the address is not a multiple of 4?

Ans: An error (MIPS requires aligned accesses).

- machine format is: 35/43 $2 $1 1000
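To see how the fields pack into one 32-bit word, here is a sketch that assembles the examples above: add $1,$2,$3 with fields 0, 2, 3, 1, 0, 32, and lw/sw $1,1000($2) with fields 35/43, 2, 1, 1000. encode_r and encode_i are hypothetical helper names. The hex values in the comments follow directly from those field values.

```c
#include <stdint.h>

/* Pack R-type fields: op(6) rs(5) rt(5) rd(5) shamt(5) funct(6). */
uint32_t encode_r(unsigned op, unsigned rs, unsigned rt, unsigned rd,
                  unsigned shamt, unsigned funct) {
    return ((uint32_t)(op & 0x3Fu) << 26) | ((uint32_t)(rs & 0x1Fu) << 21) |
           ((uint32_t)(rt & 0x1Fu) << 16) | ((uint32_t)(rd & 0x1Fu) << 11) |
           ((uint32_t)(shamt & 0x1Fu) << 6) | (uint32_t)(funct & 0x3Fu);
}

/* Pack I-type fields: op(6) rs(5) rt(5) address/immediate(16). */
uint32_t encode_i(unsigned op, unsigned rs, unsigned rt, uint16_t imm) {
    return ((uint32_t)(op & 0x3Fu) << 26) | ((uint32_t)(rs & 0x1Fu) << 21) |
           ((uint32_t)(rt & 0x1Fu) << 16) | (uint32_t)imm;
}
```

For example, encode_r(0, 2, 3, 1, 0, 32) gives 0x00430820 (add $1,$2,$3), and encode_i(35, 2, 1, 1000) gives 0x8C4103E8 (lw $1,1000($2)).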

RISC-like properties of the MIPS architecture.

- All instructions are the same length (32 bits).

- Field sizes of R-type and I-type correspond.

- The type (R-type, I-type, etc.) is determined by the opcode.

- rs is the reference to memory for both load and store.

- These properties will prove helpful when we construct a MIPS processor.

Branching instruction

slt (set less-than)

Example: slt $3,$8,$2

- R-type

- reg3 <-- (if reg8 < reg2 then 1 else 0)

- Like other R-types: read 2nd and 3rd reg, write 1st

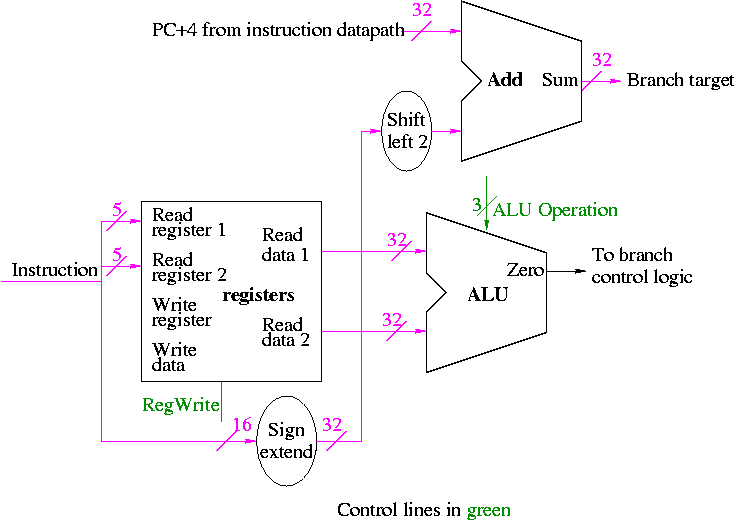

beq and bne (branch (not) equal)

Examples: beq/bne $1,$2,123

- I-type

- beq: if reg1=reg2 then go to the 124th instruction after this one.

- bne: if reg1!=reg2 then go to the 124th instruction after this one.

- Why 124 not 123?

Ans: We will see that the CPU adds 4 to the program counter (for

the no branch case) and then adds (4 times) the third operand.

- Normally one writes a label for the third operand and the

assembler calculates the offset needed.
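Here is a sketch of that branch-target arithmetic. It assumes, as on MIPS, that the 16-bit offset field is a signed count of instructions and is sign-extended before use. branch_target is a hypothetical name. With offset 123 the target is 4 + 4*123 = 4*124 bytes past the branch, i.e., 124 instructions ahead.

```c
#include <stdint.h>

/* Target address of a beq/bne located at address pc. */
uint32_t branch_target(uint32_t pc, int16_t offset) {
    uint32_t next = pc + 4u;                          /* the no-branch PC  */
    uint32_t disp = (uint32_t)((int32_t)offset * 4);  /* 4 times the offset */
    return next + disp;                               /* wraps mod 2^32    */
}
```

An offset of 0 branches to the next instruction, and an offset of -1 branches to the branch itself.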

======== START LECTURE #8

========

blt (branch if less than)

Examples: blt $5,$8,123

- I-type

- if reg5 < reg8 then go to the 124th instruction after this one.

- *** WRONG ***

- There is no blt instruction.

- Instead use

slt $1,$5,$8

bne $1,$0,123

ble (branch if less than or equal)

- There is no ``ble $5,$8,L'' instruction.

- There is also no ``sle $1,$5,$8'' set $1 if $5 less or equal $8.

- Note that $5<=$8 <==> NOT ($8<$5).

- Hence we test for $8<$5 and branch if false.

slt $1,$8,$5

beq $1,$0,L

bgt (branch if greater than)

- There is no ``bgt $5,$8,L'' instruction.

- There is also no ``sgt $1,$5,$8'' set $1 if $5 greater than $8.

- Note that $5>$8 <==> $8<$5.

- Hence we test for $8<$5 and branch if true.

slt $1,$8,$5

bne $1,$0,L

bge (branch if greater than or equal)

- There is no ``bge $5,$8,L'' instruction.

- There is also no ``sge $1,$5,$8'' set $1 if $5 greater or equal $8.

- Note that $5>=$8 <==> NOT ($5<$8).

- Hence we test for $5<$8 and branch if false.

slt $1,$5,$8

beq $1,$0,L

Note:

Please do not make the mistake of thinking that

slt $1,$5,$8

beq $1,$0,L

is the same as

slt $1,$8,$5

bne $1,$0,L

The negation of X < Y is not Y < X; it is X >= Y.

End of Note
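The four synthesized branches can be checked exhaustively over a small range in C. slt models the instruction, and each take_* function models one slt/beq or slt/bne pair from above; all of these names are hypothetical. The last two assertions show the incorrect pair from the note failing when the operands are equal.

```c
/* slt: 1 if x < y (signed), else 0. */
int slt(int x, int y) {
    return x < y;
}

/* Branch-taken conditions for the instruction pairs above (a is $5, b is $8). */
int take_blt(int a, int b) { return slt(a, b) != 0; }  /* slt $1,$5,$8; bne */
int take_ble(int a, int b) { return slt(b, a) == 0; }  /* slt $1,$8,$5; beq */
int take_bgt(int a, int b) { return slt(b, a) != 0; }  /* slt $1,$8,$5; bne */
int take_bge(int a, int b) { return slt(a, b) == 0; }  /* slt $1,$5,$8; beq */
```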

Homework:

3.12

J-type instructions (J for jump)

op address

6 26

j (jump)

Example: j 10000

- Jump to instruction (not byte) 10000.

- Branches are PC relative, jumps are absolute.

- J type

- Range is 2^26 words = 2^28 bytes = 1/4 GB

jr (jump register)

Example: jr $10

- Jump to the location in register 10.

- R type, but uses only one register.

- Will it use one of the source registers or the destination register?

Ans: This will be obvious when we construct the processor.

jal (jump and link)

Example: jal 10000

- Jump to instruction 10000 and store the return address (the

address of the instruction after the jal).

- Used for subroutine calls.

- J type.

- Return address is stored in register 31. By using a fixed

register, jal avoids the need for a second register field and hence

can have 26 bits for the instruction address (i.e., can be a J type).

I type instructions (revisited)

- The I is for immediate.

- These instructions have an immediate third operand,

i.e., the third operand is contained in the instruction itself.

- This means the operand itself, and not just its address or register

number, is contained in the instruction.

- Two registers and one immediate operand.

- Compare I and R types: Since there is no shamt and no funct, the

immediate field can be larger than the field for a register.

- Recall that lw and sw were I type. They had an immediate operand,

the offset added to the register to specify the memory address.

addi (add immediate)

Example: addi $1,$2,100

- $1 = $2 + 100

- Why is there no subi?

Ans: Make the immediate operand negative.

slti (set less-than immediate)

Example: slti $1,$2,50

- Set $1 to 1 if $2 less than 50; set $1 to 0 otherwise.

lui (load upper immediate)

Example: lui $4,123

- Loads 123 into the upper 16 bits of register 4 and clears the

lower 16 bits of the register.

- What is the use of this instruction?

- How can we get a 32-bit constant into a register since we can't

have a 32 bit immediate?

- Load the word

- Have the constant placed in the program text (via some

assembler directive).

- Issue lw to load the register.

- But memory accesses are slow and this uses a cache entry.

- Load shift add

- Load immediate the high order 16 bits (into the low order

of the register).

- Shift the register left 16 bits (filling low order with

zero)

- Add immediate the low order 16 bits

- Three instructions, three words of memory

- load-upper add

- Use lui to load immediate the desired 16-bit value into

the high order 16 bits of the register and clear the low

order bits.

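- Add immediate the desired low order 16 bits. (Since addi
sign-extends its immediate, this works as shown only when the low half
is less than 0x8000; ori, which zero-extends, works for any low half.)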

- lui $4,123 -- puts 123 into top half of register 4.

addi $4,$4,456 -- puts 456 into bottom half of register 4.
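The lui sequence can be sketched by modeling each instruction as a C function; lui, addi, and ori here are hypothetical model functions, not a real API. addi sign-extends its 16-bit immediate and ori zero-extends it. That difference only matters when bit 15 of the low half is set.

```c
#include <stdint.h>

/* lui: immediate into the upper 16 bits; lower 16 bits cleared. */
uint32_t lui(uint16_t imm) {
    return (uint32_t)imm << 16;
}

/* addi: sign-extend the 16-bit immediate, then add (overflow trap not modeled). */
uint32_t addi(uint32_t rs, uint16_t imm) {
    uint32_t ext = (uint32_t)imm | ((imm & 0x8000u) ? 0xFFFF0000u : 0u);
    return rs + ext;
}

/* ori: zero-extend the 16-bit immediate, then OR it in. */
uint32_t ori(uint32_t rs, uint16_t imm) {
    return rs | (uint32_t)imm;
}
```

addi(lui(123), 456) and ori(lui(123), 456) both give 123*65536 + 456. addi(lui(1), 0x8000), however, gives 0x00008000 rather than 0x00018000, because the sign-extended immediate subtracts 0x10000.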

Homework:

3.1, 3.3, 3.4, and 3.5.

Chapter 4

Homework:

Read 4.1-4.4

4.2: Signed and Unsigned Numbers

MIPS uses 2s complement (just like 8086)

To form the 2s complement (of 0000 1111 0000 1010 0000 0000 1111 1100)

- Take the 1s complement.

- That is, complement each bit (1111 0000 1111 0101 1111 1111 0000 0011)

- Then add 1 (1111 0000 1111 0101 1111 1111 0000 0100)
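The same two steps in C, applied to the example above (0x0F0A00FC in hex). twos_complement is a hypothetical name. Unsigned arithmetic in C wraps modulo 2^32, which matches what the hardware does.

```c
#include <stdint.h>

/* Two's complement: complement every bit (1s complement), then add 1. */
uint32_t twos_complement(uint32_t x) {
    uint32_t ones = ~x;  /* 1s complement */
    return ones + 1u;    /* wraps modulo 2^32 */
}
```

twos_complement(0x0F0A00FC) is 0xF0F5FF04, which is the bit pattern shown above. Adding the two values gives 0 once the carry out is discarded.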

Need comparisons for signed and unsigned.

- For signed a leading 1 is smaller (negative) than a leading 0

- For unsigned a leading 1 is larger than a leading 0

sltu and sltiu

Just like slt and slti but the comparison is unsigned.
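The two comparisons can be sketched on raw 32-bit patterns. sltu compares them as unsigned numbers. The signed version applies the leading-bit rule above and falls back to an unsigned comparison when the leading bits agree, which is correct for two's complement. Both names are hypothetical model functions.

```c
#include <stdint.h>

/* sltu: 1 if a < b with both patterns read as unsigned. */
uint32_t sltu(uint32_t a, uint32_t b) {
    return a < b;
}

/* slt: 1 if a < b with both patterns read as two's complement. */
uint32_t slt(uint32_t a, uint32_t b) {
    uint32_t sa = a >> 31, sb = b >> 31;
    if (sa != sb)
        return sa;  /* leading 1 (negative) is smaller than leading 0 */
    return a < b;   /* same sign: unsigned order equals signed order */
}
```

The pattern 0xFFFFFFFF is -1 as a signed number but 4294967295 as an unsigned one. So slt reports it less than 1, while sltu does not.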

Homework:

4.1-4.9

4.3: Addition and subtraction

To add two (signed) numbers just add them. That is, don't treat
the sign bit specially.

To subtract A-B, just take the 2s complement of B and add.

Overflows

An overflow occurs when the result of an operation cannot be

represented with the available hardware. For MIPS this means when the

result does not fit in a 32-bit word.

- We have 31 bits plus a sign bit.

- The result would definitely fit in 33 bits (32 plus sign)

- The hardware simply discards the carry out of the top (sign) bit

- This is not wrong; consider -1 + -1

       11111111111111111111111111111111     (32 ones is -1)
     + 11111111111111111111111111111111
     ----------------------------------
      111111111111111111111111111111110     Now discard the carry out
       11111111111111111111111111111110     this is -2

- The bottom 31 bits are always correct.

Overflow occurs when the 32nd (sign) bit is set to a value bit of the
result rather than to its sign.

- Here are the conditions for overflow

    Operation   Operand A   Operand B   Result
    A+B         >= 0        >= 0        < 0
    A+B         < 0         < 0         >= 0
    A-B         >= 0        < 0         < 0
    A-B         < 0         >= 0        >= 0

- These conditions are the same as

Carry-In to sign position != Carry-Out
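The sketch below computes overflow for a 32-bit addition two ways, once with the sign table and once with the carry rule, and checks on sample values that they agree. This is a spot check, not a proof. The function names are hypothetical.

```c
#include <stdint.h>

/* Sign rule from the table: operands of the same sign, result of the other. */
int add_overflow_signs(uint32_t a, uint32_t b) {
    uint32_t r = a + b;  /* carry out of bit 31 is discarded */
    uint32_t sa = a >> 31, sb = b >> 31, sr = r >> 31;
    return sa == sb && sr != sa;
}

/* Carry rule: carry into the sign position differs from the carry out. */
int add_overflow_carries(uint32_t a, uint32_t b) {
    /* Adding bits 0..30 alone leaves the carry into bit 31 in bit 31. */
    uint32_t low = (a & 0x7FFFFFFFu) + (b & 0x7FFFFFFFu);
    uint32_t cin31 = low >> 31;
    uint32_t cout31 = ((a >> 31) + (b >> 31) + cin31) >> 1;
    return cin31 != cout31;
}
```

For example, 0x7FFFFFFF + 1 overflows under both rules, and -1 + -1 overflows under neither.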

Homework:

Prove this last statement (4.29)

(for fun only, do not hand in).

addu, subu, addiu

These add and subtract the same as add and sub,
but do not signal overflow.

4.4: Logical Operations

Shifts: sll, srl

- R type, with shamt used and rs not used.

- sll $1,$2,5

reg1 gets reg2 shifted left 5 bits.

- Why do we need both sll and srl,
i.e., why not just have one of them and use a negative
shift amt for the other?

Ans: The shift amt is only 5 bits and need shifts from 0 to 31

bits. Hence not enough bits for negative shifts.

- These are shifts not rotates.

- Op is 0 (these are ALU ops, will understand why in a few weeks).

Bitwise AND and OR: and, or, andi, ori

No surprises.

- and $r1,$r2,$r3

or $r1,$r2,$r3

- standard R-type instruction

- andi $r1,$r2,100

ori $r1,$r2,100

- standard I-type

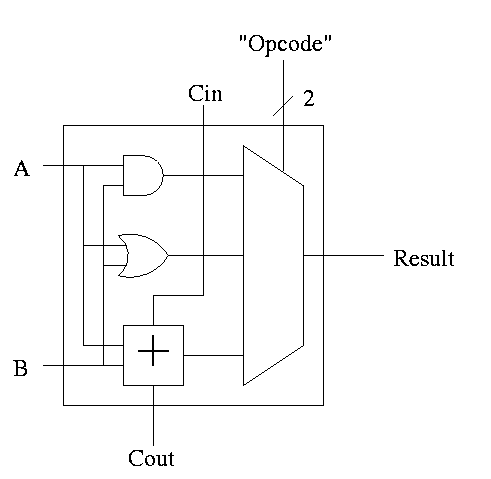

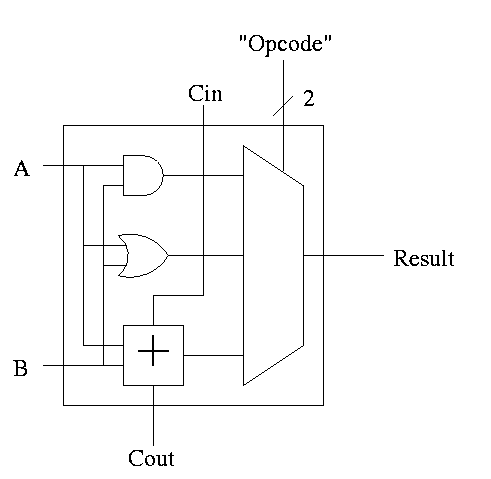

4.5: Constructing an ALU--the fun begins

First goal is 32-bit AND, OR, and addition

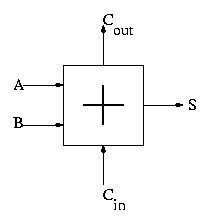

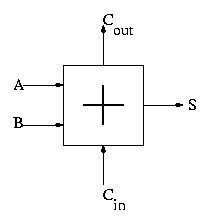

Recall we know how to build a full adder. We will draw it as shown on

the right.

With this adder, the ALU is easy.

- Just choose the correct operation (ADD, AND, OR)

- Note the principle that if you want a logic box that sometimes

computes X and sometimes computes Y, what you do is

- Always compute X.

- Always compute Y.

- Put both X and Y into a mux.

- Use the ``sometimes'' condition as the select line to the mux.

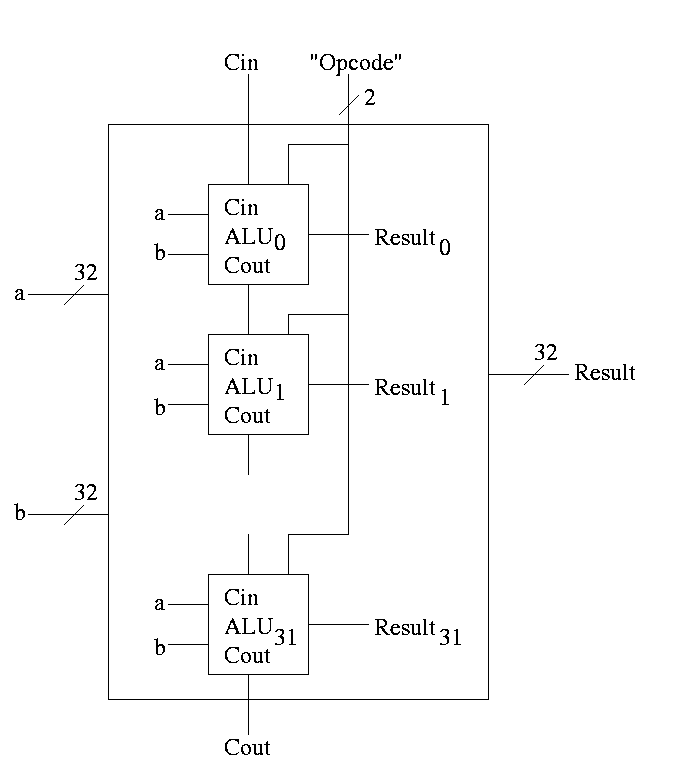

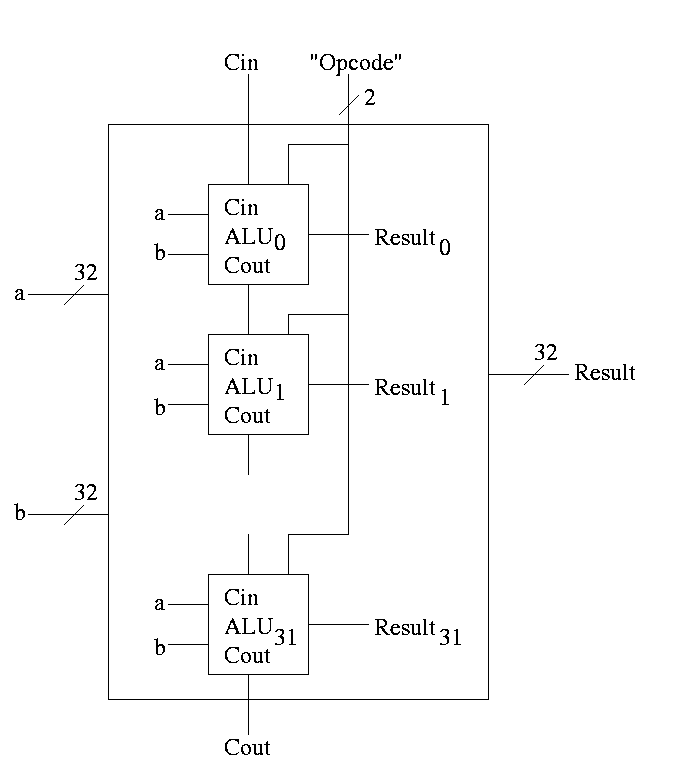

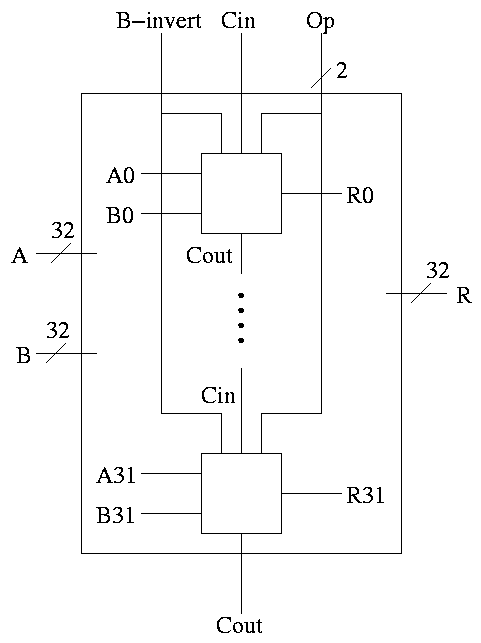

With this 1-bit ALU, constructing a 32-bit version is simple.

- Use an array of logic elements for the logic. The logic element

is the 1-bit ALU

- Use buses for A, B, and Result.

- ``Broadcast'' Opcode to all of the internal 1-bit ALUs. This

means wire the external Opcode to the Opcode input of each of the

internal 1-bit ALUs

First goal accomplished.

======== START LECTURE #9

========

Now we augment the ALU so that we can perform subtraction (as well

as addition, AND, and OR).

- The big deal about 2's complement is that

A - B = A + (2's comp B) = A + (B' + 1).

- Get B' from an inverter (naturally).

- Get +1 from the Carry-In.

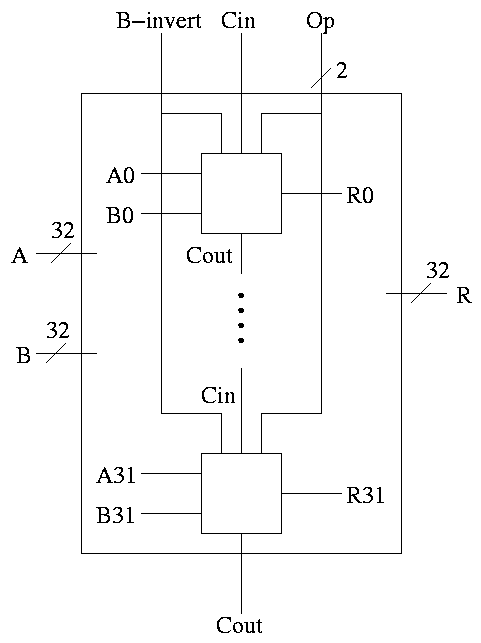

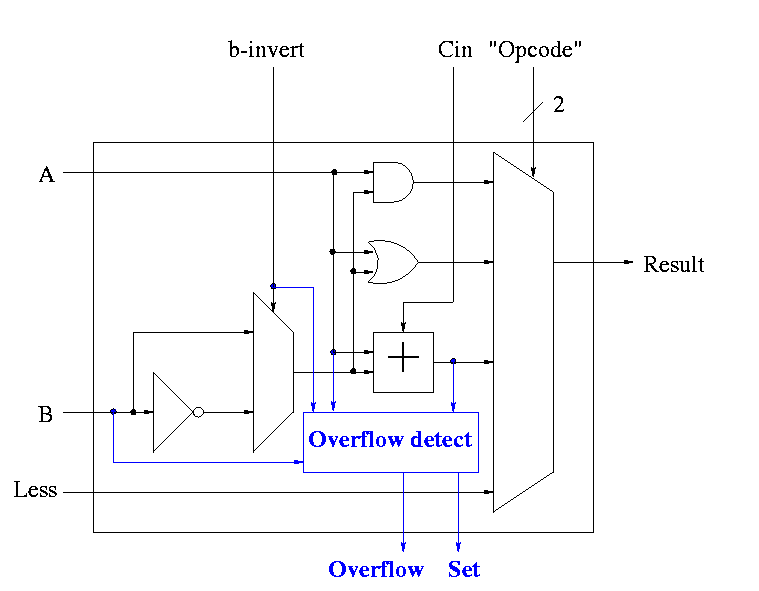

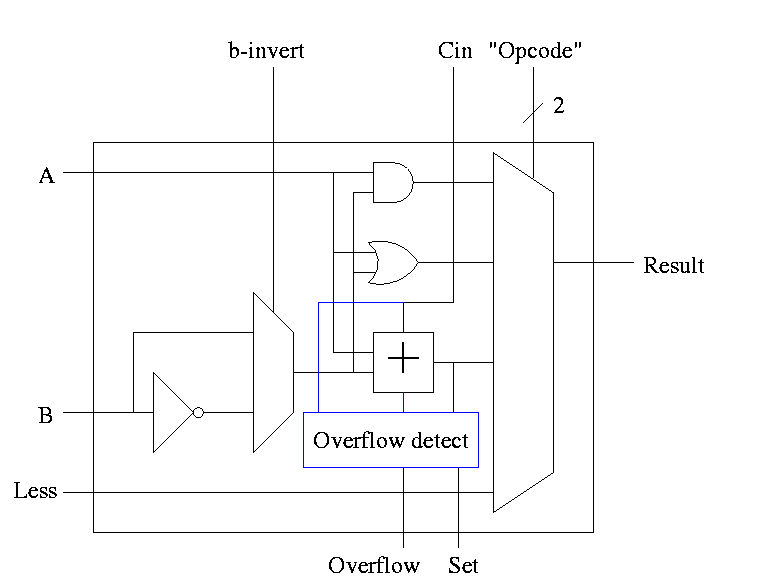

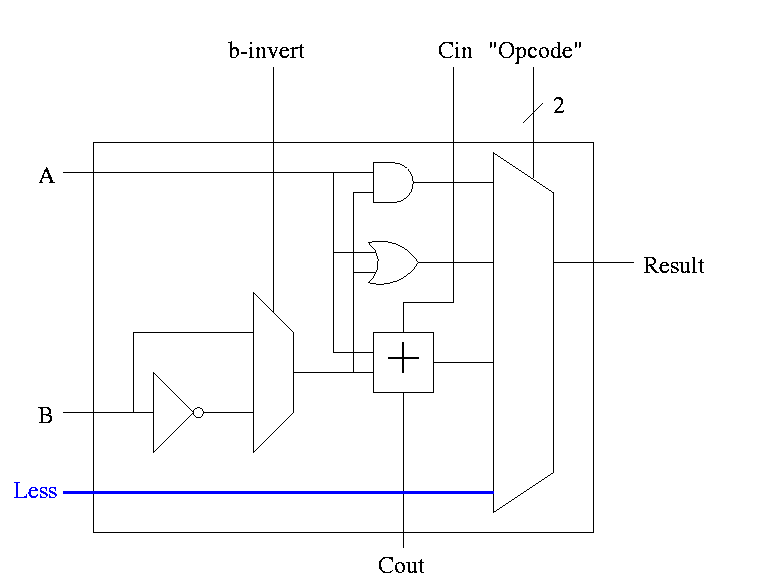

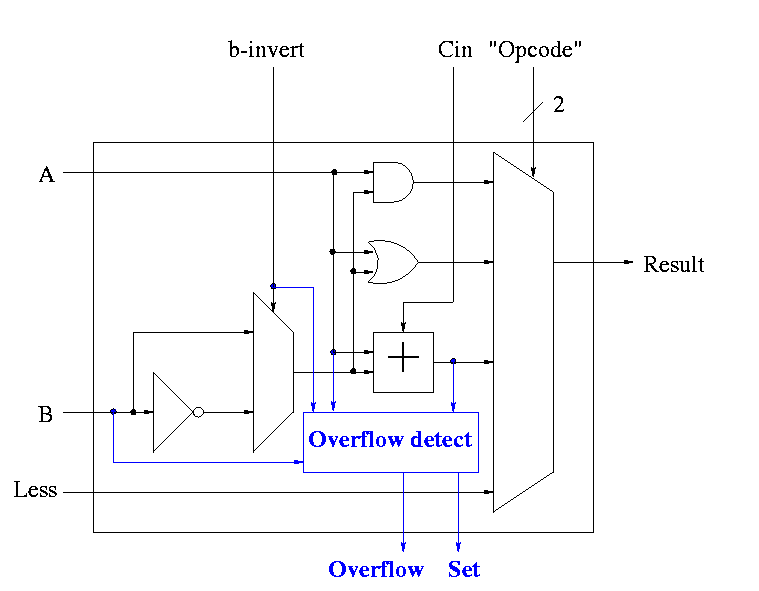

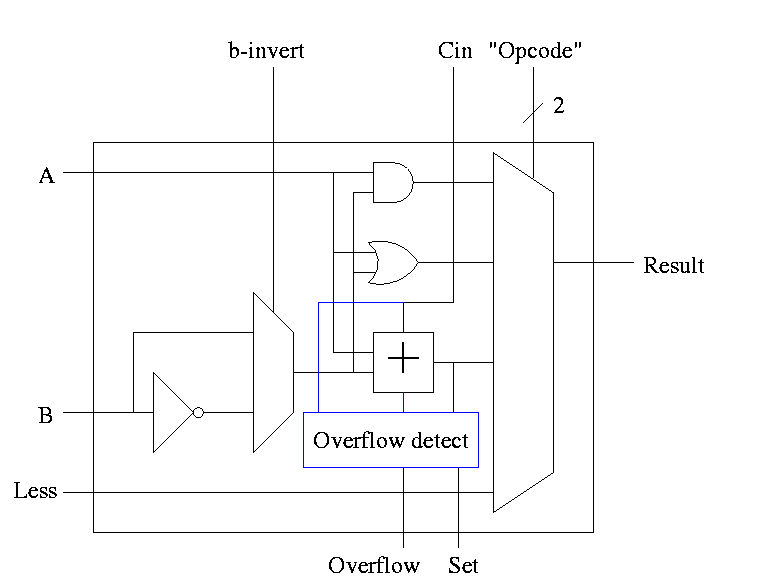

1-bit ALU with ADD, SUB, AND, OR is

Implementing addition and subtraction

- To implement addition we use opcode 10 as before and de-assert

both b-invert and Cin.

- To implement subtraction we still use opcode 10 but we assert

both b-invert and Cin.

32-bit version is simply a bunch of these.

- For subtraction assert both B-invert and Cin.

- For addition de-assert both B-invert and Cin.

- For AND and OR de-assert B-invert. Cin is a don't care.

- We get for free A+B'

If we let A=0, this gives B', i.e. the NOT operation

However, we will not use it. Indeed we will soon give it away.
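Here is a gate-level style sketch of this 1-bit ALU with B-invert, plus a 32-bit ripple version. It follows the principle from earlier: compute AND, OR, and the sum every time, then let the operation code drive a mux. For simplicity it ties the carry into bit 0 to B-invert. That gives the right Cin for add and sub, and Cin is a don't care for AND and OR. The names and argument order are hypothetical. The operation codes are 00 AND, 01 OR, 10 add/sub.

```c
#include <stdint.h>

/* 1-bit ALU.  op1 op0: 00 AND, 01 OR, 10 add/sub (11 not modeled here). */
int alu1(int a, int b, int binvert, int cin, int op1, int op0, int *cout) {
    int bb = (b & !binvert) | (!b & binvert);  /* 2-to-1 mux: B or B' */
    int and_out = a & bb;                      /* always compute AND */
    int or_out = a | bb;                       /* always compute OR  */
    int axb = a ^ bb;
    int sum = axb ^ cin;                       /* always compute the sum */
    *cout = (a & bb) | (cin & axb);
    int sel_and = !op1 & !op0;                 /* decode op for the mux */
    int sel_or = !op1 & op0;
    int sel_add = op1 & !op0;
    return (and_out & sel_and) | (or_out & sel_or) | (sum & sel_add);
}

/* 32-bit ALU: 32 copies of alu1 with the carry rippling from bit 0 up.
   binvert and op go to every bit; binvert is also the carry into bit 0. */
uint32_t alu32(uint32_t a, uint32_t b, int binvert, int op1, int op0) {
    uint32_t result = 0;
    int carry = binvert;
    for (int i = 0; i < 32; i++) {
        int next_carry;
        int r = alu1((int)((a >> i) & 1u), (int)((b >> i) & 1u),
                     binvert, carry, op1, op0, &next_carry);
        result |= (uint32_t)r << i;
        carry = next_carry;
    }
    return result;
}
```

For example, with A = 12 and B = 10, the four operations give 8 (AND), 14 (OR), 22 (add), and 2 (sub).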

(More or less) all ALUs do AND, OR, ADD, SUB.

Now we want to customize our ALU for the MIPS architecture.

Extra requirements for MIPS ALU:

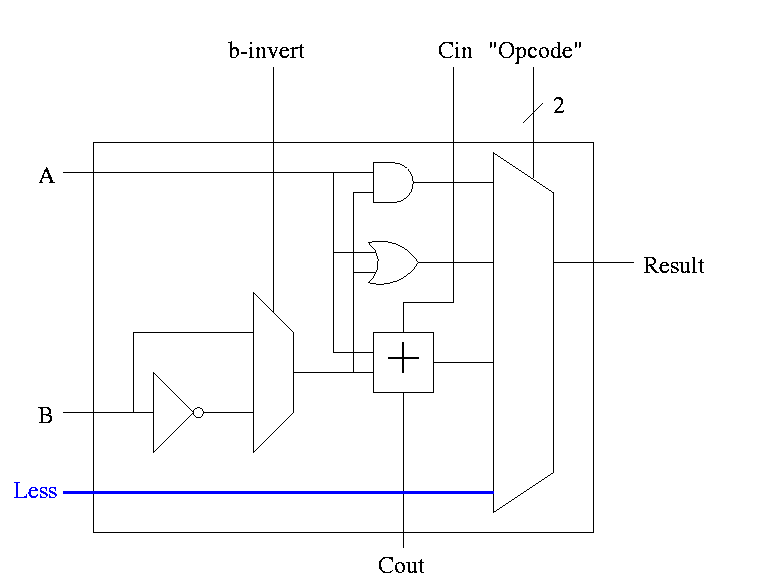


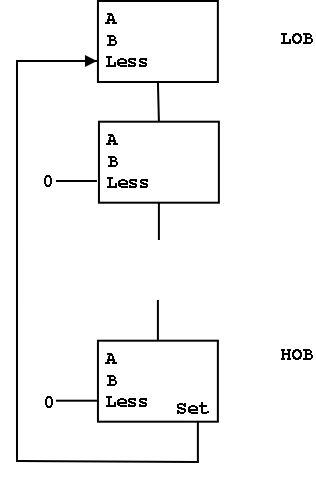

slt set-less-than

- Result reg is 1 if a < b

- Result reg is 0 if a >= b

-

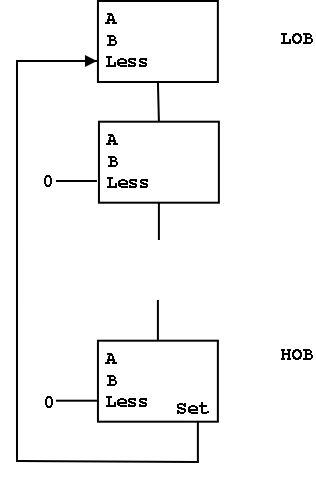

So need to set the LOB (low order bit, aka least significant bit)

of the result equal to the sign bit of a subtraction, and set the

rest of the result bits to zero.

- Idea #1. Give the mux another input, called LESS.
This input is brought in from outside the bit cell.
That is, if the opcode is slt we make the select line to the
mux equal to 11 (three) so that the output is this new
input. For all the bits except the LOB, the LESS input is
zero. For the LOB we must figure out how to set LESS.

-

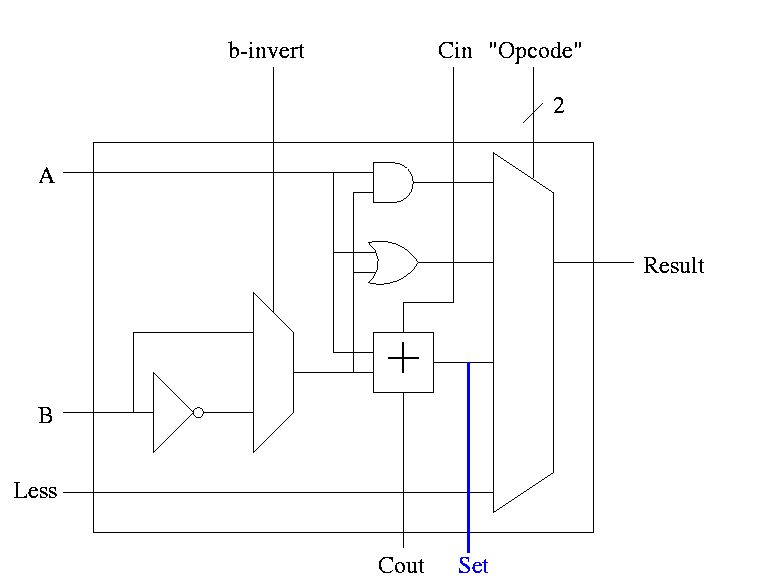

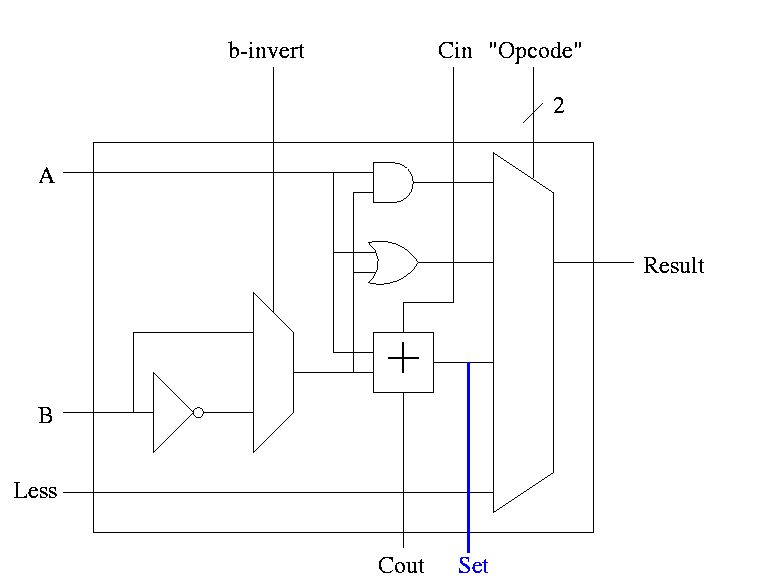

Idea #2. Bring out the result of the adder (BEFORE the mux)

Only needed for the HOB (high order bit, i.e. sign) Take this

new output from the HOB, call it SET and connect it to the

LESS input in idea #1 for the LOB. The LESS input for other

bits are set to zero.

- Why isn't this method used?

- Ans: It is wrong!

- Example using 3-bit numbers (i.e. -4 .. 3).

- Try slt on -3 and +2.

- True subtraction (-3 - +2) gives -5.

- The negative sign in -5 indicates (correctly) that -3 < +2.

- But three-bit subtraction -3 - +2 gives +3 !

- Hence we will incorrectly conclude that -3 is NOT less than +2.

- (Really, the subtraction signals an overflow,
unless doing unsigned.)

- Solution: Need the correct rule for less than (not just the sign of
the subtraction).

Homework: figure out the correct rule, i.e. prob 4.23.

Hint: when an overflow occurs the sign bit is definitely wrong (so the
complement of the sign bit is right).

- Overflows

- The HOB ALU is already unique (outputs SET).

- Need to enhance it some more to produce the overflow output.

- Recall that we gave the rule for overflow. You need to examine:

- Whether the operation is add or sub (binvert).

- The sign of A.

- The sign of B.

- The sign of the result.

- Since this is the HOB we have all the sign bits.

- The book also uses Cout, but this appears to be an error.

- Simpler overflow detection.

- An overflow occurs if and only if the carry in to the HOB

differs from the carry out of the HOB.


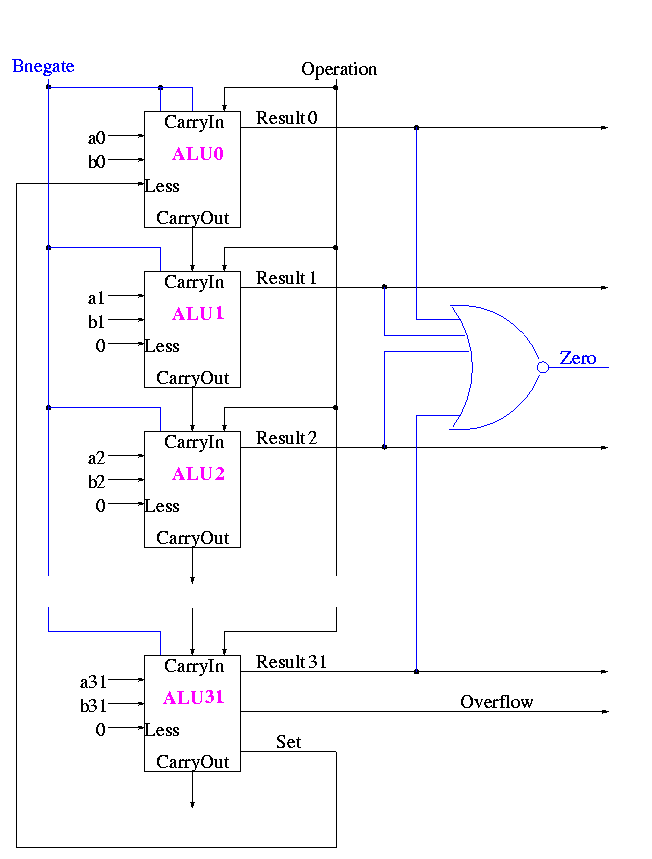

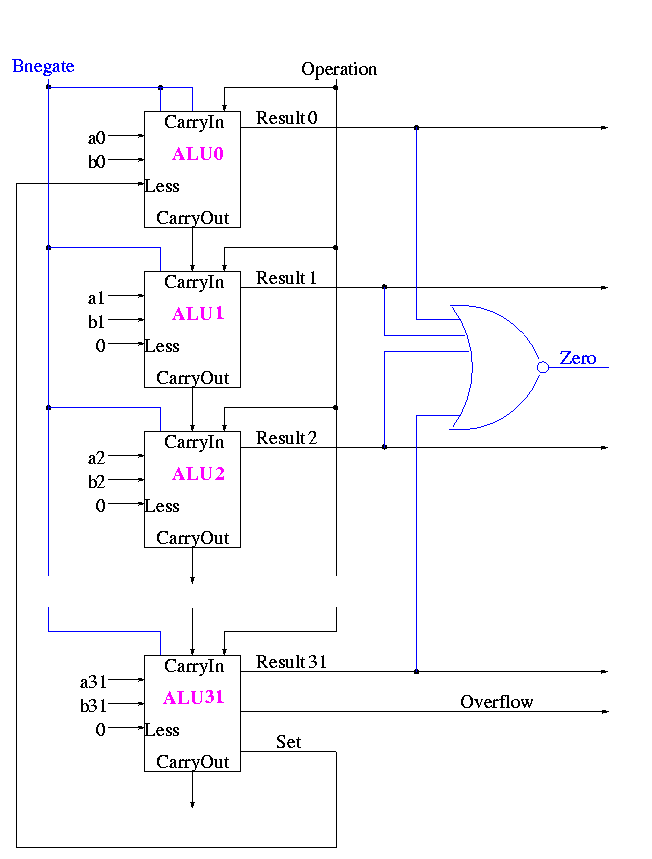
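The 3-bit slt example from the discussion of Idea #2 can be reproduced in a few lines of C. This sketch only demonstrates that Idea #2 fails. It does not give the corrected rule, which is homework 4.23. The helper names are hypothetical.

```c
/* Value of the low 3 bits of x read as a 3-bit two's complement number. */
int as_signed3(unsigned x) {
    x &= 7u;
    return (x & 4u) ? (int)x - 8 : (int)x;
}

/* 3-bit subtraction done the hardware way: a + b' + 1, keeping 3 bits. */
unsigned sub3(unsigned a, unsigned b) {
    return (a + (~b & 7u) + 1u) & 7u;
}

/* Idea #2: use the sign bit of the 3-bit difference as "less than". */
int naive_slt3(unsigned a, unsigned b) {
    return (int)((sub3(a, b) >> 2) & 1u);
}
```

With a = 101 (-3) and b = 010 (+2), sub3 produces 011 (+3), so naive_slt3 returns 0 even though -3 < +2. When nothing overflows, as in 1 - 2, the sign bit gives the right answer.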

Zero Detect

- To see if all bits are zero just need NOR of all the bits

- Conceptually trivial but does require some wiring

- Observation: The CarryIn to the LOB and Binvert
to all the 1-bit ALUs are always the same.
So the 32-bit ALU has just one input called Bnegate, which is sent
to the appropriate inputs in the 1-bit ALUs.

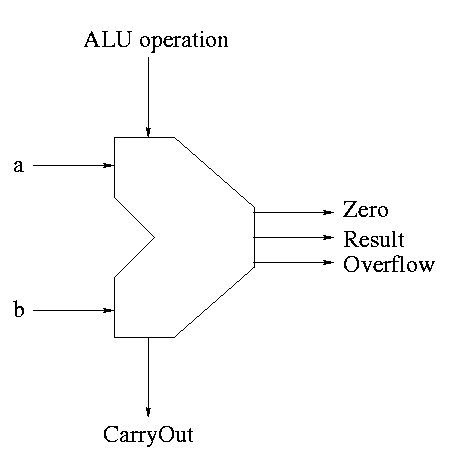

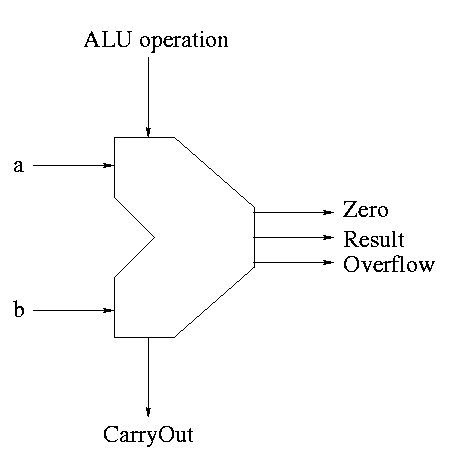

The Final Result is

The symbol used for an ALU is on the right

What are the control lines?

- Bnegate (1 bit)

- OP (2 bits)

What functions can we perform?

- and

- or

- add

- sub

- set on less than

What (3-bit) values for the control lines do we need for each
function? The control lines are Bnegate (1 bit) and Operation (2 bits).

    | Function | Bnegate | Operation |
    | and      | 0       | 00        |
    | or       | 0       | 01        |
    | add      | 0       | 10        |
    | sub      | 1       | 10        |
    | slt      | 1       | 11        |

======== START LECTURE #10

========

Fast Adders

- We have done what is called a ripple carry adder.

- The carry ``ripples'' from one bit to the next (LOB to HOB).

- So the time required is proportional to the wordlength.

- Each carry can be computed with two levels of logic (any function
can be so computed), hence the number of gate delays for an n-bit
adder is 2n.

- For a 4-bit adder 8 gate delays are required.

- For a 16-bit adder 32 gate delays are required.

- For a 32-bit adder 64 gate delays are required.

- For a 64-bit adder 128 gate delays are required.

- What about doing the entire 32 (or 64) bit adder with 2 levels of
logic?

- Such a circuit clearly exists. Why?

Ans: A two-level logic circuit exists for any function.

- But it would be very expensive: many gates and wires.

- The big problem: When expressed with two levels of logic, the AND
and OR gates have high fan-in, i.e., they have a large number of
inputs. It is not true that a 64-input AND takes the same time as a
2-input AND.

- Unless you are doing full custom VLSI, you get a toolbox of
primitive functions (say 4-input NAND) and must build from that.

- There are faster adders, e.g. carry lookahead and carry save. We
will study carry lookahead adders.

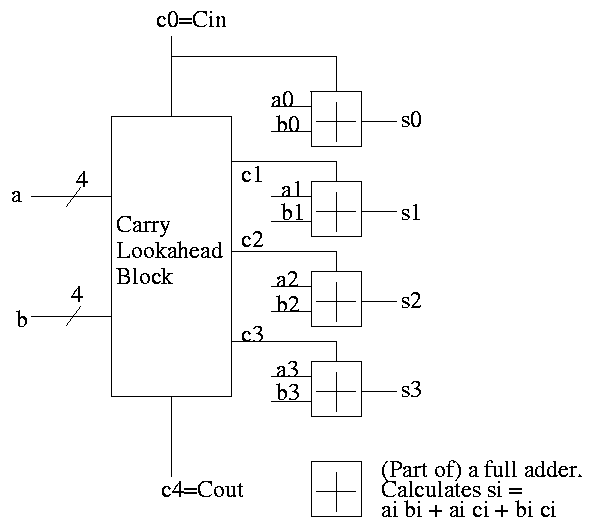

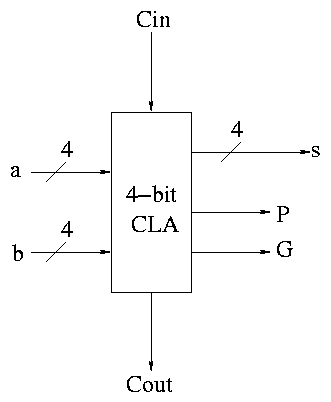

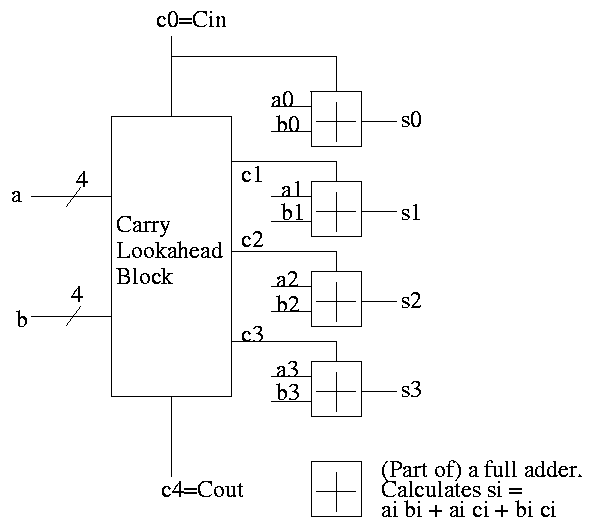

Carry Lookahead Adder (CLA)

This adder is much faster than the ripple adder we did before,

especially for wide (i.e., many bit) addition.

- For each bit position we have two input bits, a and b (really

should say ai and bi as I will do below).

- We can, in one gate delay, calculate two other bits

called generate g and propagate p, defined as follows:

- The idea for propagate is that p is true if the

current bit will propagate a carry from its input to its output.

- It is easy to see that p = (a OR b), i.e.

if and only if (a OR b)

then if there is a carry in

then there is a carry out

- The idea for generate is that g is true if the

current bit will generate a carry out (independent of the carry in).

- It is easy to see that g = (a AND b), i.e.

if and only if (a AND b)

then there must be a carry-out independent of the carry-in

To summarize, using a subscript i to represent the bit number,

to generate a carry: gi = ai bi

to propagate a carry: pi = ai+bi

H&P give a plumbing analogue

for generate and propagate.

Given the generates and propagates, we can calculate all the carries

for a 4-bit addition (recall that c0=Cin is an input) as follows (this

is the formula version of the plumbing):

c1 = g0 + p0 c0

c2 = g1 + p1 c1 = g1 + p1 g0 + p1 p0 c0

c3 = g2 + p2 c2 = g2 + p2 g1 + p2 p1 g0 + p2 p1 p0 c0

c4 = g3 + p3 c3 = g3 + p3 g2 + p3 p2 g1 + p3 p2 p1 g0 + p3 p2 p1 p0 c0

Thus we can calculate c1 ... c4 in just two additional gate delays
(where we assume one gate can accept up to 5 inputs). Since we get gi
and pi after one gate delay, the total delay for calculating all the
carries is 3 (this includes c4=Carry-Out).

Each bit of the sum si can be calculated in 2 gate delays given ai,

bi, and ci. Thus, for 4-bit addition, 5 gate delays after we are

given a, b and Carry-In, we have calculated s and Carry-Out.

So, for 4-bit addition, the faster adder takes time 5 and the slower

adder time 8.
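The carry formulas above translate directly into C. This sketch computes c1 through c4 from the g's and p's, each as one sum of products rather than by rippling, and forms the sum bits from ai XOR bi XOR ci. It models the logic, not the gate delays. cla4_carries and cla4_sum are hypothetical names.

```c
/* Carries of a 4-bit CLA: c[0] = carry in, c[4] = carry out. */
void cla4_carries(unsigned a, unsigned b, int c0, int c[5]) {
    int g[4], p[4];
    for (int i = 0; i < 4; i++) {
        int ai = (a >> i) & 1, bi = (b >> i) & 1;
        g[i] = ai & bi;  /* generate  */
        p[i] = ai | bi;  /* propagate */
    }
    c[0] = c0;
    c[1] = g[0] | (p[0] & c0);
    c[2] = g[1] | (p[1] & g[0]) | (p[1] & p[0] & c0);
    c[3] = g[2] | (p[2] & g[1]) | (p[2] & p[1] & g[0]) | (p[2] & p[1] & p[0] & c0);
    c[4] = g[3] | (p[3] & g[2]) | (p[3] & p[2] & g[1]) | (p[3] & p[2] & p[1] & g[0])
         | (p[3] & p[2] & p[1] & p[0] & c0);
}

/* 4-bit sum using the lookahead carries; *cout gets c4. */
unsigned cla4_sum(unsigned a, unsigned b, int c0, int *cout) {
    int c[5];
    cla4_carries(a, b, c0, c);
    unsigned s = 0;
    for (int i = 0; i < 4; i++)
        s |= ((((a >> i) ^ (b >> i)) & 1u) ^ (unsigned)c[i]) << i;
    *cout = c[4];
    return s;
}
```

Checking the result against ordinary addition for all 4-bit inputs and both carry-in values confirms the formulas.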

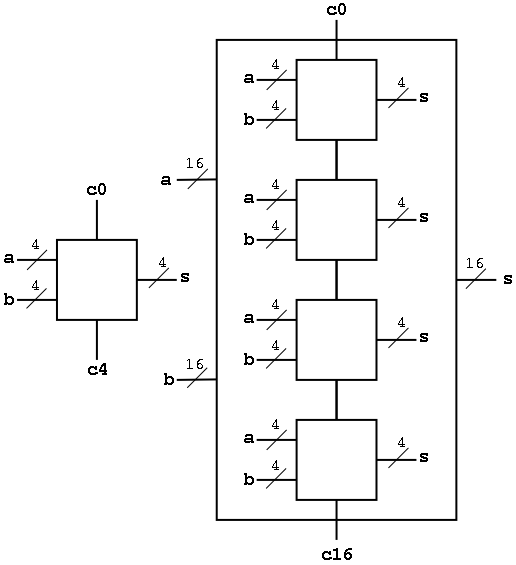

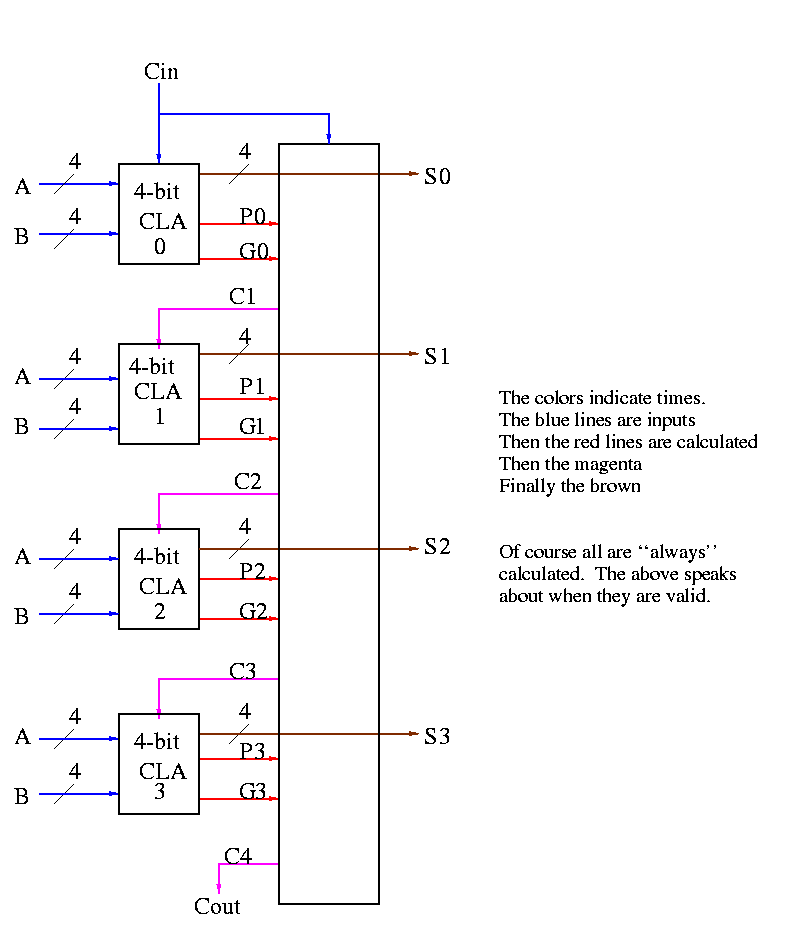

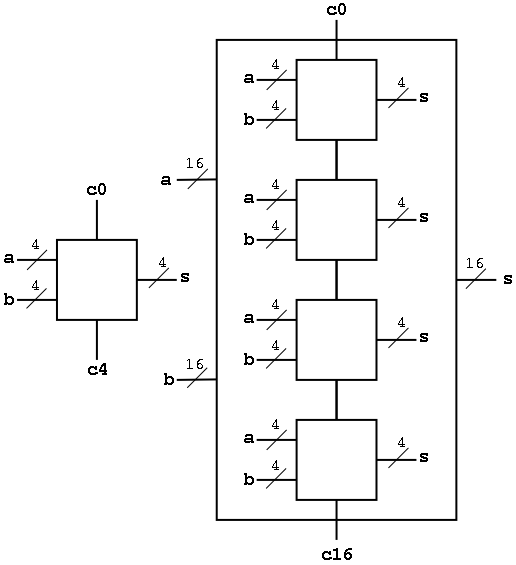

Now we want to put four of these together to get a fast 16-bit

adder.

As black boxes, both ripple-carry adders and carry-lookahead adders

(CLAs) look the same.

We could simply put four CLAs together and let the Carry-Out from

one be the Carry-In of the next. That is, we could put these CLAs

together in a ripple-carry manner to get a hybrid 16-bit adder.

- Since the Carry-Out is calculated in 3 gate delays, the Carry-In to

the high order 4-bit adder is calculated in 3*3=9 delays.

- Hence the overall Carry-Out takes time 9+3=12 and the high order

four bits of the sum take 9+5=14. The other bits take less time.

- So this mixed 16-bit adder takes 14 gate delays compared with

2*16=32 for a straight ripple-carry 16-bit adder.

We want to do better so we will put the 4-bit carry-lookahead

adders together in a carry-lookahead manner. Thus the diagram above

is not what we are going to do.

- We have 33 inputs a0,...,a15; b0,...,b15; c0=Carry-In.

- We want 17 outputs s0,...,s15; c16=Carry-Out.

- Again we are assuming a gate can accept up to 5 inputs.

- It is important that the number of inputs per gate does not grow
with the number of bits in each number.

- If the technology available supplies only 4-input gates (instead
of the 5-input gates we are assuming),
we would use groups of three bits rather than four.

We start by determining ``super generate'' and ``super propagate''

bits.

- The super generate indicates whether the 4-bit

adder constructed above generates a Carry-Out.

- The super propagate indicates whether the 4-bit

adder constructed above propagates a

Carry-In to a Carry-Out.

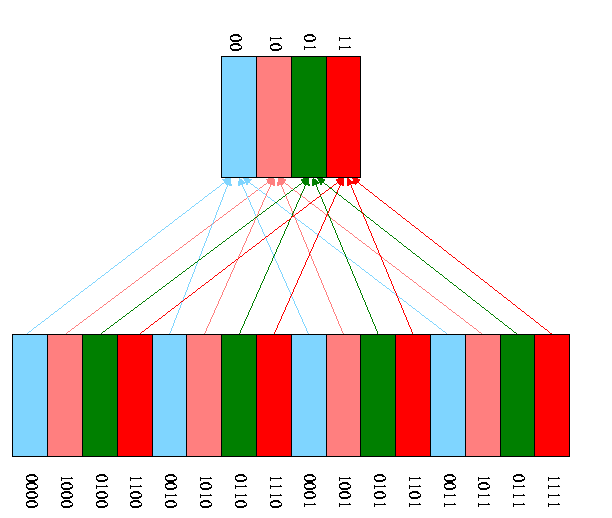

    P0 = p3 p2 p1 p0          Does the low order 4-bit adder propagate a carry?
    P1 = p7 p6 p5 p4
    P2 = p11 p10 p9 p8
    P3 = p15 p14 p13 p12      Does the high order 4-bit adder propagate a carry?

    G0 = g3 + p3 g2 + p3 p2 g1 + p3 p2 p1 g0           Does the low order 4-bit adder generate a carry?
    G1 = g7 + p7 g6 + p7 p6 g5 + p7 p6 p5 g4
    G2 = g11 + p11 g10 + p11 p10 g9 + p11 p10 p9 g8
    G3 = g15 + p15 g14 + p15 p14 g13 + p15 p14 p13 g12

From these super generates and super propagates, we can calculate the

super carries, i.e. the carries for the four 4-bit adders.

- The first super carry

C0, the Carry-In to the low-order 4-bit adder, is just c0 the input

Carry-In.

- The second super carry C1 is the Carry-Out of the low-order 4-bit
adder (which is also the Carry-In to the 2nd 4-bit adder).

- The last super carry C4 is the Carry-out of the high-order 4-bit

adder (which is also the overall Carry-out of the entire 16-bit adder).

C1 = G0 + P0 c0

C2 = G1 + P1 C1 = G1 + P1 G0 + P1 P0 c0

C3 = G2 + P2 C2 = G2 + P2 G1 + P2 P1 G0 + P2 P1 P0 c0

C4 = G3 + P3 C3 = G3 + P3 G2 + P3 P2 G1 + P3 P2 P1 G0 + P3 P2 P1 P0 c0

Now these C's (together with the original inputs a and b) are just

what the 4-bit CLAs need.

How long does this take, again assuming 5 input gates?

- We calculate the p's and g's (lower case) in 1 gate delay (as with

the 4-bit CLA).

- We calculate the P's one gate delay after we have the p's or

2 gate delays after we start.

- The G's are determined 2 gate delays after we have the g's and

p's. So the G's are done 3 gate delays after we start.

- The C's are determined 2 gate delays after the P's and G's. So

the C's are done 5 gate delays after we start.

- Now the C's are sent back to the 4-bit CLAs, which have already
calculated the p's and g's. The c's (the carries inside each 4-bit
CLA) are calculated in 2 more gate delays (7 total) and the s's 2 more
after that (9 total).

In summary, a 16-bit CLA takes 9 gate delays instead of 32 for a ripple carry

adder and 14 for the mixed adder.
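Putting the pieces together, this sketch computes the super propagates and super generates for each 4-bit group, then the super carries C1..C4 from them, and then the carries inside each group from its super carry. It models the logic only, not the gate delays. cla16_sum is a hypothetical name.

```c
/* 16-bit two-level carry-lookahead addition; *cout gets C4. */
unsigned cla16_sum(unsigned a, unsigned b, int c0, int *cout) {
    int p[16], g[16], P[4], G[4], C[5], c[16];
    for (int i = 0; i < 16; i++) {
        int ai = (a >> i) & 1, bi = (b >> i) & 1;
        g[i] = ai & bi;
        p[i] = ai | bi;
    }
    /* Super propagate and super generate for each 4-bit group. */
    for (int k = 0; k < 4; k++) {
        int j = 4 * k;  /* low bit of group k */
        P[k] = p[j+3] & p[j+2] & p[j+1] & p[j];
        G[k] = g[j+3] | (p[j+3] & g[j+2]) | (p[j+3] & p[j+2] & g[j+1])
             | (p[j+3] & p[j+2] & p[j+1] & g[j]);
    }
    /* Super carries: the same formulas as c1..c4, one level up. */
    C[0] = c0;
    C[1] = G[0] | (P[0] & c0);
    C[2] = G[1] | (P[1] & G[0]) | (P[1] & P[0] & c0);
    C[3] = G[2] | (P[2] & G[1]) | (P[2] & P[1] & G[0]) | (P[2] & P[1] & P[0] & c0);
    C[4] = G[3] | (P[3] & G[2]) | (P[3] & P[2] & G[1]) | (P[3] & P[2] & P[1] & G[0])
         | (P[3] & P[2] & P[1] & P[0] & c0);
    /* Each 4-bit CLA uses its super carry C[k] as its carry in. */
    for (int k = 0; k < 4; k++) {
        int j = 4 * k;
        c[j]   = C[k];
        c[j+1] = g[j] | (p[j] & c[j]);
        c[j+2] = g[j+1] | (p[j+1] & g[j]) | (p[j+1] & p[j] & c[j]);
        c[j+3] = g[j+2] | (p[j+2] & g[j+1]) | (p[j+2] & p[j+1] & g[j])
               | (p[j+2] & p[j+1] & p[j] & c[j]);
    }
    unsigned s = 0;
    for (int i = 0; i < 16; i++)
        s |= (((a >> i) ^ (b >> i) ^ (unsigned)c[i]) & 1u) << i;
    *cout = C[4];
    return s;
}
```

The result can be compared with ordinary addition on sample values such as 0x7FFF + 1 and 0xFFFF + 0xFFFF.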

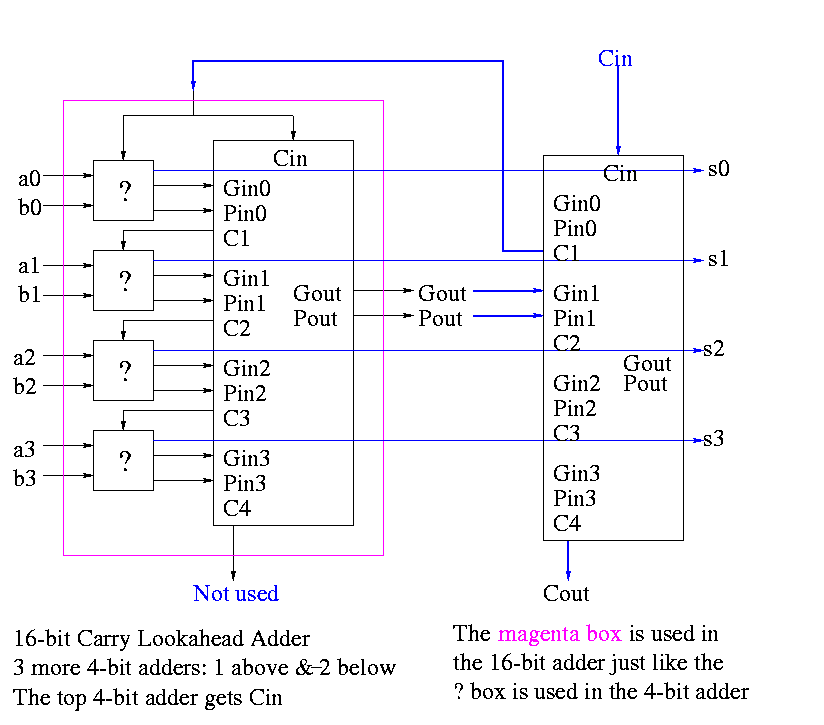

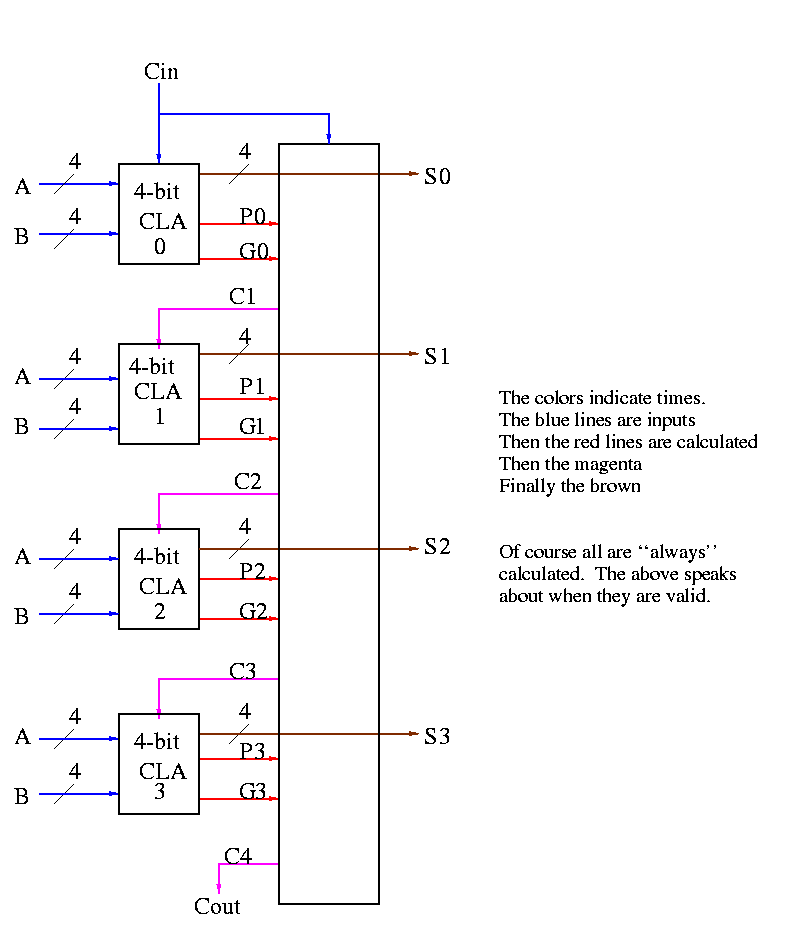

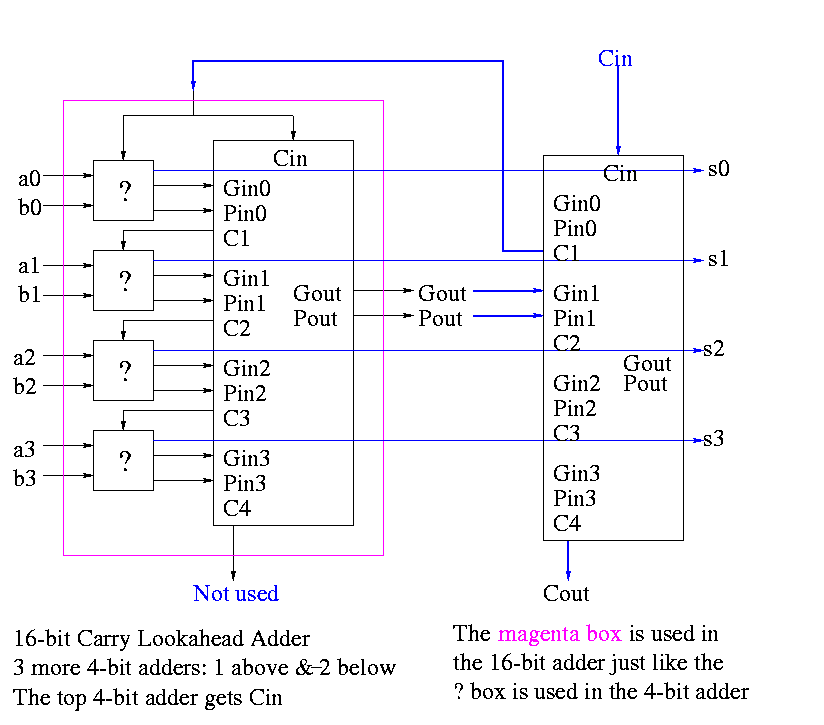

Some pictures follow.

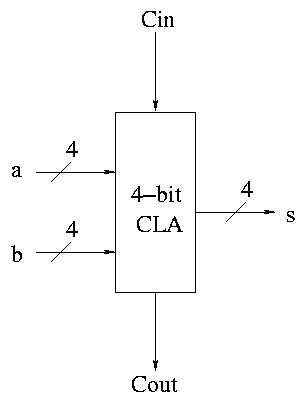

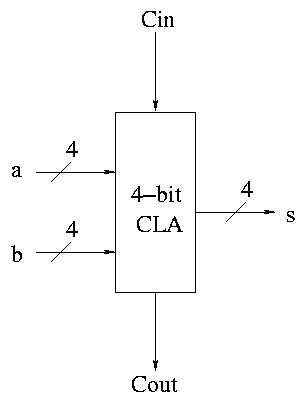

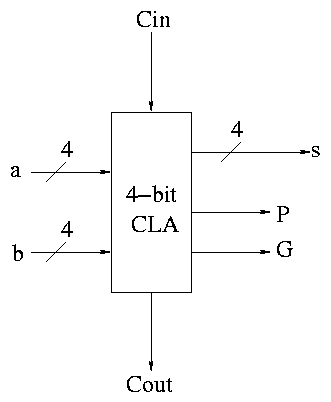

Take our original picture of the 4-bit CLA and collapse
the details so it looks like this.

Next include the logic to calculate P and G.

Now put four of these with a CLA block (to calculate C's from P's,

G's and Cin) and we get a 16-bit CLA. Note that we do not use the Cout

from the 4-bit CLAs.

Note that the tall skinny box is general. It takes 4 Ps, 4 Gs, and

Cin and calculates 4 Cs. The Ps can be propagates, superpropagates,

superduperpropagates, etc. That is, you take 4 of these 16-bit CLAs

and the same tall skinny box and you get a 64-bit CLA.

Homework:

4.44, 4.45

As noted just above the tall skinny box is useful for all size

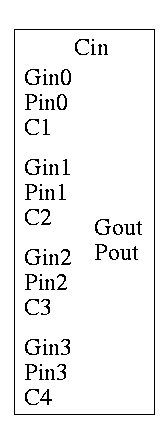

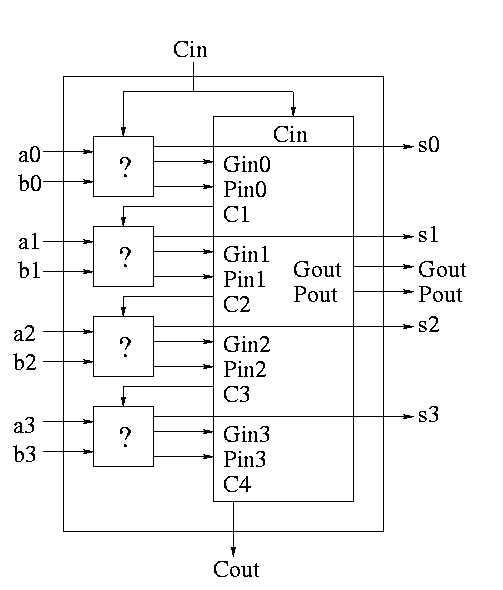

CLAs. To expand on that point and to review CLAs, let's redo CLAs with

the general box.

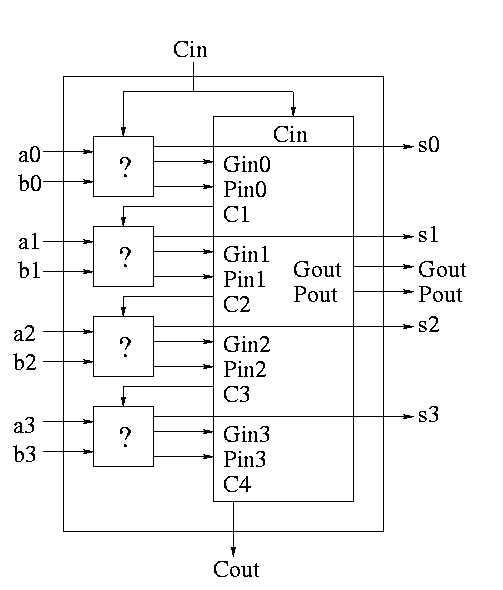

Since we are doing 4-bits at a time, the box takes 9=2*4+1 input bits

and produces 6=4+2 outputs

A 4-bit adder is now

What does the ``?'' box do?

- Calculates Gi and Pi based on ai and bi

- Calculate si based on ai, bi, and Ci=Cin (normal full adder)

- Do not bother calculating Cout

Now take four of these 4-bit adders and use the identical CLA box to get a 16-bit adder.

Four of these 16-bit adders with the identical CLA box give a 64-bit adder.
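The claim that one identical box serves every level can be checked in software. Below is a minimal Python sketch, assuming (as in H&P) a bit propagate pi = ai OR bi and a bit generate gi = ai AND bi. The function `lookahead` plays the role of the CLA box; the helper names `split4`, `block_pg`, `cla_sum`, and `cla_add` are hypothetical. It models the logic only, not the timing: the carry loop is algebraically equal to the flattened two-level equations but is evaluated step by step.

```python
def lookahead(P, G, cin):
    """The CLA ("tall skinny") box: 4 P's, 4 G's and a carry-in in;
    carries C0..C3 plus a group P and G out (9 inputs, 6 outputs).
    The same box works for propagates, superpropagates, and so on."""
    C = [cin]
    for i in range(3):
        # C(i+1) = G(i) + P(i)C(i); expanding gives the usual two-level formula
        C.append(G[i] | (P[i] & C[i]))
    group_P = P[0] & P[1] & P[2] & P[3]
    group_G = G[0]
    for i in range(1, 4):
        # builds G3 + P3 G2 + P3 P2 G1 + P3 P2 P1 G0
        group_G = G[i] | (P[i] & group_G)
    return C, group_P, group_G


def split4(x, w):
    """Split a value into 4 pieces of w bits each, low-order piece first."""
    mask = (1 << w) - 1
    return [(x >> (k * w)) & mask for k in range(4)]


def block_pg(a, b, width):
    """Group P and G of a width-bit block (width = 1, 4, 16, 64, ...)."""
    if width == 1:
        return a | b, a & b            # the "?" box: pi = ai + bi, gi = ai bi
    w = width // 4
    pgs = [block_pg(x, y, w) for x, y in zip(split4(a, w), split4(b, w))]
    _, P, G = lookahead([p for p, _ in pgs], [g for _, g in pgs], 0)
    return P, G


def cla_sum(a, b, cin, width):
    """Sum bits of a width-bit block; no carry-out is computed here."""
    if width == 1:
        return a ^ b ^ cin             # the "?" box's full-adder sum
    w = width // 4
    a_parts, b_parts = split4(a, w), split4(b, w)
    pgs = [block_pg(x, y, w) for x, y in zip(a_parts, b_parts)]
    C, _, _ = lookahead([p for p, _ in pgs], [g for _, g in pgs], cin)
    s = 0
    for k in range(4):
        s |= cla_sum(a_parts[k], b_parts[k], C[k], w) << (k * w)
    return s


def cla_add(a, b, cin=0, width=16):
    """Return (sum, carry_out); carry_out = G + P*Cin of the whole adder."""
    P, G = block_pg(a, b, width)
    return cla_sum(a, b, cin, width), G | (P & cin)


print(cla_add(0xFFFF, 0x0001))   # (0, 1)
print(cla_add(12, 13, width=4))  # (9, 1)
```

Only `width` changes between the 4-, 16-, and 64-bit adders; `lookahead` is reused unchanged at every level, which is the point of the general box.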

======== START LECTURE #11 ========

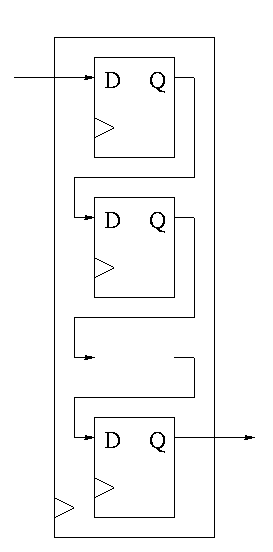

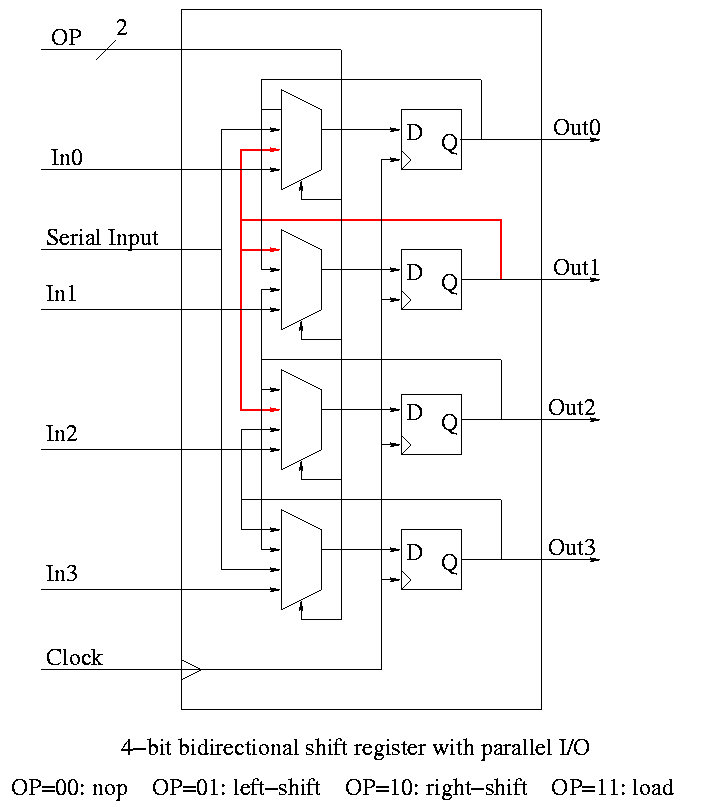

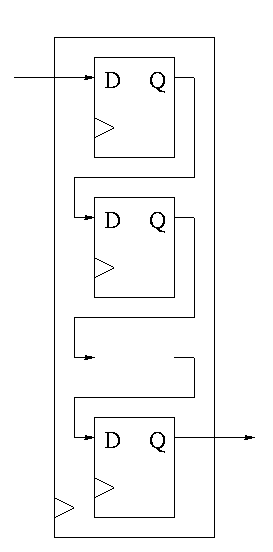

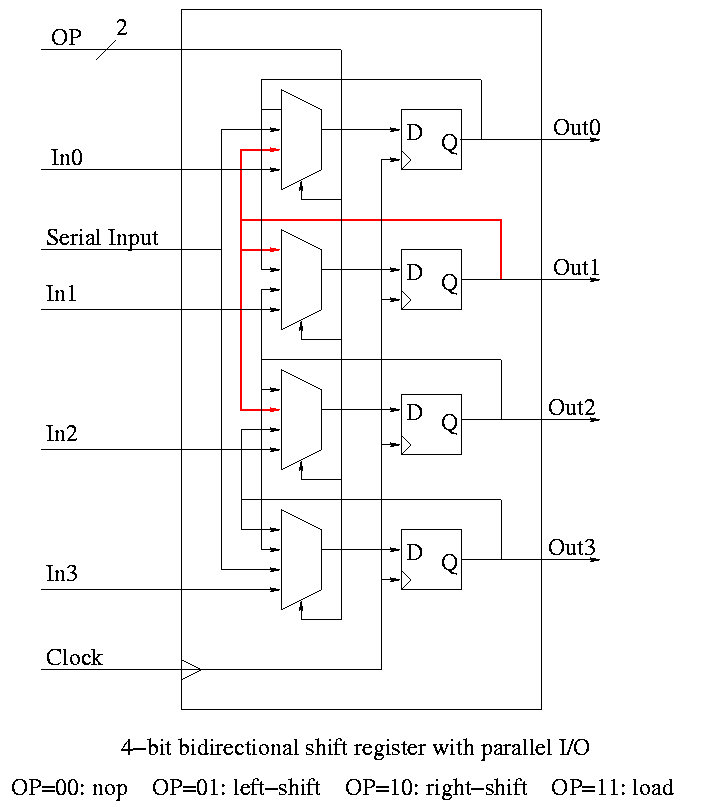

Shifter

This is a sequential circuit.

- Just a string of D-flops; output of one is input of next.

- Input to first is the serial input.

- Output of last is the serial output.

- We want more.

  - Left and right shifting (with serial input/output)

  - Parallel load

  - Parallel output

  - Don't shift every cycle

- Parallel output is just wires.

- Shifter has 4 modes (left-shift, right-shift, nop, load) so

  - 4-1 mux inside

  - 2 control lines must come in

- We could modify our registers to be shifters (bigger mux), but ...

  - Our shifters are slow for big shifts; ``barrel shifters'' are better and kept separate from the processor registers.
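A small software model of the four-mode shift register may help when working the homework below. This is a sketch under stated assumptions: the register is a string of '0'/'1' characters with the high-order bit on the left, a right shift brings the serial input in at the high-order end, and a left shift brings it in at the low-order end. The function name `shift_register_step` is hypothetical.

```python
def shift_register_step(reg, mode, serial_in="0", load_value=None):
    """One clock cycle of a shift register held as a bit string
    (leftmost character = high-order bit). `mode` is one of the four
    mux selections: "left", "right", "nop", or "load"."""
    if mode == "left":     # each flip-flop takes its right neighbor's bit
        return reg[1:] + serial_in
    if mode == "right":    # each flip-flop takes its left neighbor's bit
        return serial_in + reg[:-1]
    if mode == "nop":      # each flip-flop takes back its own output
        return reg
    if mode == "load":     # parallel load
        if load_value is None or len(load_value) != len(reg):
            raise ValueError("load needs a value of the register's width")
        return load_value
    raise ValueError(f"unknown mode {mode!r}")


reg = "0110"
for mode, bit in [("right", "1"), ("left", "0"), ("nop", "1")]:
    reg = shift_register_step(reg, mode, bit)
    print(mode, reg)   # right 1011, then left 0110, then nop 0110
```

The bit that falls off the end (reg[-1] on a right shift, reg[0] on a left shift) is the serial output; the sketch does not return it.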

Homework:

A 4-bit shift register initially contains 1101. It is shifted six times to the right with the serial input being 101101. What are the contents of the register after each shift?

Homework:

Same register, same initial condition. For the first 6 cycles the opcodes are left, left, right, nop, left, right and the serial input is 101101. The next cycle the register is loaded (in parallel) with 1011. The final 6 cycles are the same as the first 6. What are the contents of the register after each cycle?

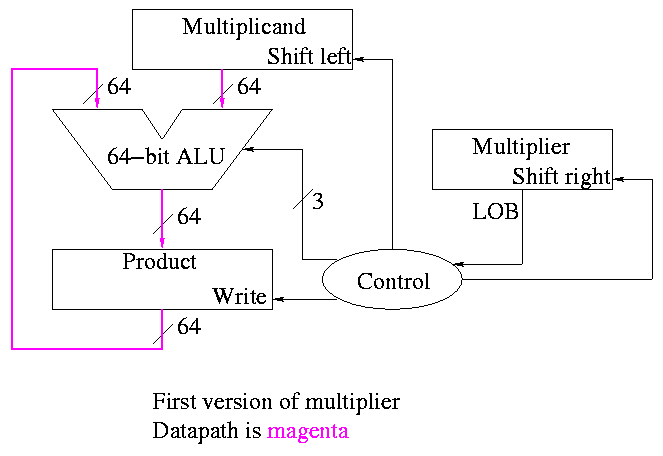

4.6: Multiplication

- Of course we can do this with two levels of logic since multiplication is just a function of its inputs.

- But, just as with addition, that would give a very big circuit with large fan-in. Instead we use a sequential circuit that mimics the algorithm we all learned in grade school.

- Recall how to do multiplication.

  - Multiplicand times multiplier gives product.

  - Multiply multiplicand by each digit of multiplier.

  - Put the result in the correct column.

  - Then add the partial products just produced.

- We will do it the same way ... but differently.

  - We are doing binary arithmetic so each ``digit'' of the multiplier is 1 or zero.

  - Hence ``multiplying'' the multiplicand by a digit of the multiplier means either

    - Getting the multiplicand

    - Getting zero

  - Use an ``if appropriate bit of multiplier is 1'' stmt.

  - To get the ``appropriate bit''

    - Start with the LOB of the multiplier.

    - Shift the multiplier right (so the next bit is the LOB).

  - Putting it in the correct column means putting it one column further left than the last time.

  - This is done by shifting the multiplicand left one bit each time (even if the multiplier bit is zero).

  - Instead of adding partial products at the end, we keep a running sum.

    - If the multiplier bit is one, add the (shifted) multiplicand to the running sum.

    - If the bit is zero, simply skip the addition.

This results in the following algorithm:

product <- 0
for i = 0 to 31
    if LOB of multiplier = 1
        product <- product + multiplicand
    shift multiplicand left 1 bit
    shift multiplier right 1 bit

Do on the board 4-bit multiplication (8-bit registers) 1100 x 1101. Since the result has (up to) 8 bits, this is often called a 4x4->8 multiply.
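The algorithm can be transcribed into Python as a sketch, with the register widths passed in as `n` so the same code does the 4x4->8 board example and the 32x32->64 case. The function name `multiply_v1` is our own; masking to 2n bits stands in for the fixed-width registers and ALU.

```python
def multiply_v1(multiplicand, multiplier, n=32):
    """First shift-and-add multiplier: n x n -> 2n bits.
    The multiplicand sits in a 2n-bit register and shifts left;
    the multiplier shifts right; the product register and ALU are 2n bits."""
    mask_2n = (1 << (2 * n)) - 1
    product = 0
    mcand = multiplicand & mask_2n
    mplier = multiplier & ((1 << n) - 1)
    for _ in range(n):
        if mplier & 1:                              # LOB of multiplier
            product = (product + mcand) & mask_2n   # 2n-bit ALU add
        mcand = (mcand << 1) & mask_2n              # shift multiplicand left
        mplier >>= 1                                # shift multiplier right
    return product


print(format(multiply_v1(0b1100, 0b1101, n=4), "08b"))   # 10011100
```

The result is 12 x 13 = 156 = 10011100, the 8-bit product of the board example.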

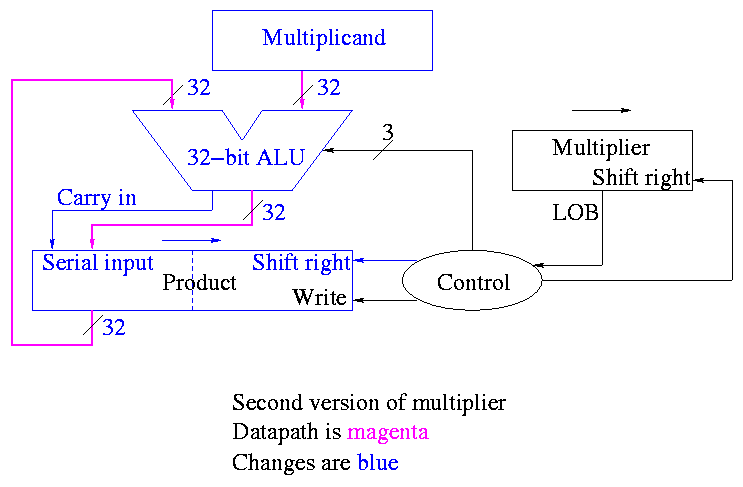

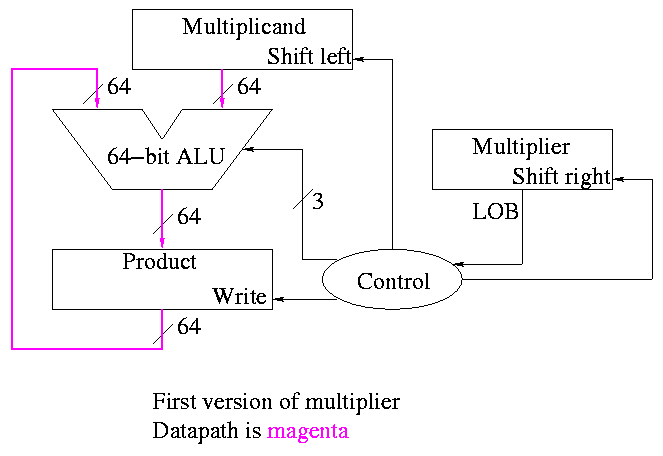

The diagrams below are for a 32x32-->64 multiplier.

What about the control?

- Always give the ALU the ADD operation.

- Always send a 1 to the multiplicand to shift left.

- Always send a 1 to the multiplier to shift right.

- Pretty boring so far but

  - Send a 1 to the write line in product if and only if the LOB of the multiplier is a 1.

  - I.e., send the LOB to the write line.

  - I.e., it really is pretty boring.

This works!

But, when compared to the better solutions to come, it is wasteful of resources and hence is

- slower

- hotter

- bigger

- all these are bad

The product register must be 64 bits since the product can contain 64 bits.

Why is multiplicand register 64 bits?

- So that we can shift it left.

- I.e., for our convenience.

By this I mean it is not required by the problem specification, but only by the solution method chosen.

Why is the ALU 64 bits?

- Because the product is 64 bits.

- But we are only adding a 32-bit quantity to the product at any one step.

- Hmmm.

- Maybe we can just pull out the correct bits from the product.

- Would be tricky to pull out bits in the middle because which bits to pull changes each step.

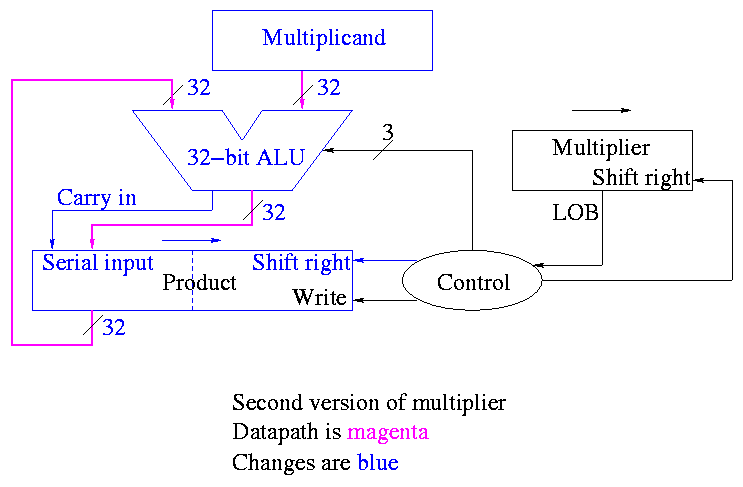

POOF!! ... as the smoke clears we see an idea.

We can solve both problems at once.

- DON'T shift the multiplicand left.

  - Hence the register is 32 bits.

  - Also the register need not be a shifter.

- Instead shift the product right!

- Add the high-order (HO) 32 bits of the product register to the multiplicand and place the result back into the HO 32 bits.

- Only do this if the current multiplier bit is one.

- Use the Carry Out of the sum as the new bit to shift in.

- The book forgot the last point but their example used numbers too small to generate a carry.

This results in the following algorithm:

product <- 0
for i = 0 to 31
    if LOB of multiplier = 1
        (serial_in, product[32-63]) <- product[32-63] + multiplicand
    else
        serial_in <- 0
    shift product right 1 bit, shifting serial_in into the HOB
    shift multiplier right 1 bit

What about control?

- Just as boring as before.

- Send (ADD, 1, 1) to (ALU, multiplier (shift right), Product (shift right)).

- Send LOB to Product (write).

Redo the same example on the board.
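Here is a Python sketch of this second version, assuming the same n-bit parameterization as before (the name `multiply_v2` is ours). Only the HO half of the product goes through the n-bit add, and the carry out is the bit shifted in at the top.

```python
def multiply_v2(multiplicand, multiplier, n=32):
    """Second version: n-bit multiplicand register (no shifting), n-bit ALU,
    and a 2n-bit product register that shifts right."""
    mask_n = (1 << n) - 1
    mcand = multiplicand & mask_n
    mplier = multiplier & mask_n
    product = 0
    for _ in range(n):
        serial_in = 0
        if mplier & 1:                                    # LOB of multiplier
            total = (product >> n) + mcand                # add to the HO half
            serial_in = total >> n                        # carry out of the add
            product = ((total & mask_n) << n) | (product & mask_n)
        product = (serial_in << (2 * n - 1)) | (product >> 1)  # shift right
        mplier >>= 1                                      # shift multiplier right
    return product


print(format(multiply_v2(0b1100, 0b1101, n=4), "08b"))   # 10011100
```

In the 1100 x 1101 example the carry does matter: the last addition is 0111 + 1100 = 1 0011, and shifting that carry in gives 10011100; ignoring it would give 00011100.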

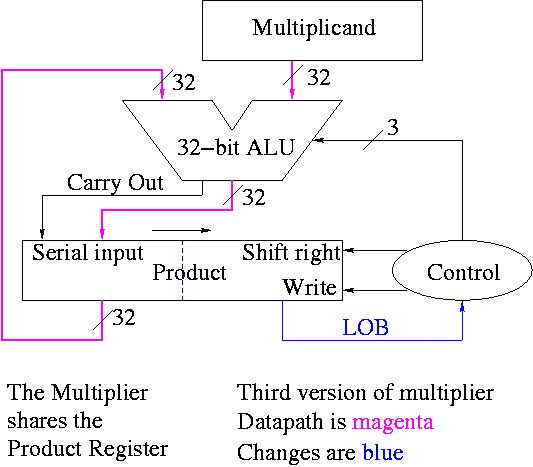

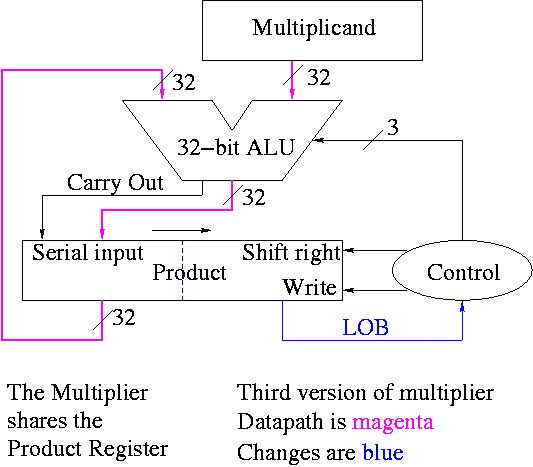

A final trick (``gate bumming'', like the code bumming of the 60s).

- There is a waste of registers, i.e., not full utilization.

  - The multiplicand is fully utilized since we always need all 32 bits.

  - But once we use a multiplier bit, we can toss it, so we need less and less of the multiplier as we go along.

  - And the product is half unused at the beginning and only slowly ...

- POOF!!

- ``Timeshare'' the LO half of the ``product register''.

  - In the beginning the LO half contains the multiplier.

  - Each step we shift right and more goes to the product, less to the multiplier.

- The algorithm changes to:

product[32-63] <- 0
product[0-31] <- multiplier
for i = 0 to 31
    if LOB of product = 1
        (serial_in, product[32-63]) <- product[32-63] + multiplicand
    else
        serial_in <- 0
    shift product right 1 bit, shifting serial_in into the HOB

Control again boring.

- Send (ADD, 1) to (ALU, Product (shift right)).

- Send LOB to Product (write).

Redo the same example on the board.
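A Python sketch of this final version follows, again parameterized by `n` (the name `multiply_v3` is ours). The only change from the previous version is that the multiplier starts in the LO half of the product register, so the bit tested each step is the LOB of the product itself.

```python
def multiply_v3(multiplicand, multiplier, n=32):
    """Final version: the LO half of the 2n-bit product register starts out
    holding the multiplier, so no separate multiplier register is needed."""
    mask_n = (1 << n) - 1
    mcand = multiplicand & mask_n
    product = multiplier & mask_n              # HO half 0, LO half = multiplier
    for _ in range(n):
        serial_in = 0
        if product & 1:                        # LOB of product = current multiplier bit
            total = (product >> n) + mcand     # add to the HO half
            serial_in = total >> n             # carry out of the add
            product = ((total & mask_n) << n) | (product & mask_n)
        product = (serial_in << (2 * n - 1)) | (product >> 1)  # shift right
    return product


print(format(multiply_v3(0b1100, 0b1101, n=4), "08b"))   # 10011100
```

On the board example the register goes 0000 1101, then 0110 0110, 0011 0011, 0111 1001, and finally 1001 1100.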

The above was for unsigned 32-bit multiplication.

What about signed multiplication?

- Save the signs of the multiplier and multiplicand.

- Convert the multiplier and multiplicand to non-negative numbers.

- Use the above algorithm.

- Only use 31 steps, not 32, since there are only 31 multiplier bits (the HOB of the multiplier is the sign bit, not a bit used for multiplying).

- Complement (i.e., negate) the product if the original signs were different.
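The sign-magnitude recipe can be sketched in Python as follows. To keep the 31-step count exact, the sketch uses the simple shift-the-multiplicand-left loop rather than the timeshared register, and it returns an ordinary Python integer instead of a 64-bit register pattern. It also assumes the most negative value, -2^(n-1), is excluded, since its magnitude does not fit in the n-1 non-sign bits. The function name `multiply_signed` is hypothetical.

```python
def multiply_signed(multiplicand, multiplier, n=32):
    """Signed n x n multiply by the sign-magnitude method in the notes."""
    limit = (1 << (n - 1)) - 1
    # Assumption: -2**(n-1) is excluded; its magnitude needs n bits.
    for x in (multiplicand, multiplier):
        if not -limit <= x <= limit:
            raise ValueError("operand out of range")
    negative = (multiplicand < 0) != (multiplier < 0)   # save the signs
    mcand, mplier = abs(multiplicand), abs(multiplier)   # make them non-negative
    product = 0
    for i in range(n - 1):            # n-1 steps: the HOB is only the sign bit
        if (mplier >> i) & 1:
            product += mcand << i     # unsigned shift-and-add
    return -product if negative else product   # negate if signs differed


print(multiply_signed(-3, 5, n=4))                        # -15
print(format(multiply_signed(-3, 5, n=4) & 0xFF, "08b"))  # 11110001
```

The second line shows the 8-bit two's complement pattern a 4x4->8 hardware multiplier would leave in the product register.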

There are faster multipliers, but we are not covering them.

4.7: Division

We are skipping division.

4.8: Floating Point

We are skipping floating point.

4.9: Real Stuff: Floating Point in the PowerPC and 80x86

We are skipping floating point.

Homework:

Read 4.10 ``Fallacies and Pitfalls'', 4.11 ``Conclusion'', and 4.12 ``Historical Perspective''.

======== START LECTURE #12 ========

Notes:

Midterm exam 25 Oct.

Lab 2. Due 1 November. Extend lab 1 to a 32-bit ALU that in addition handles sub, slt, zero detect, and overflow. That is, produce a gate-level simulation of Figure 4.19. This figure is also in the class notes; it is the penultimate figure before ``Fast Adders''.

It is NOW DEFINITE that on Monday 23 Oct my office hours will have to move from 2:30-3:30 to 1:30-2:30 due to a departmental committee meeting.

Don't forget the mirror site. My main website will be going down for an OS upgrade at some point. Start at http://cs.nyu.edu

End of Notes:

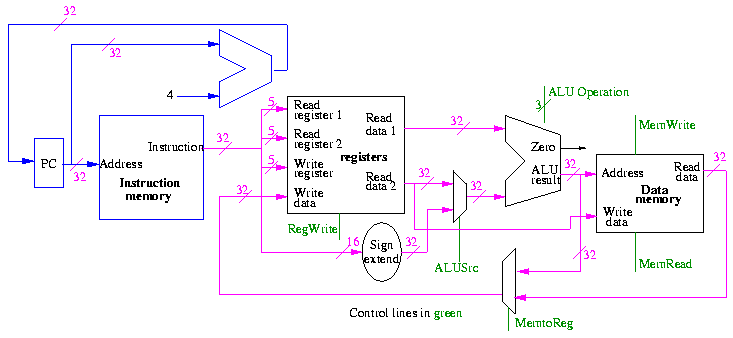

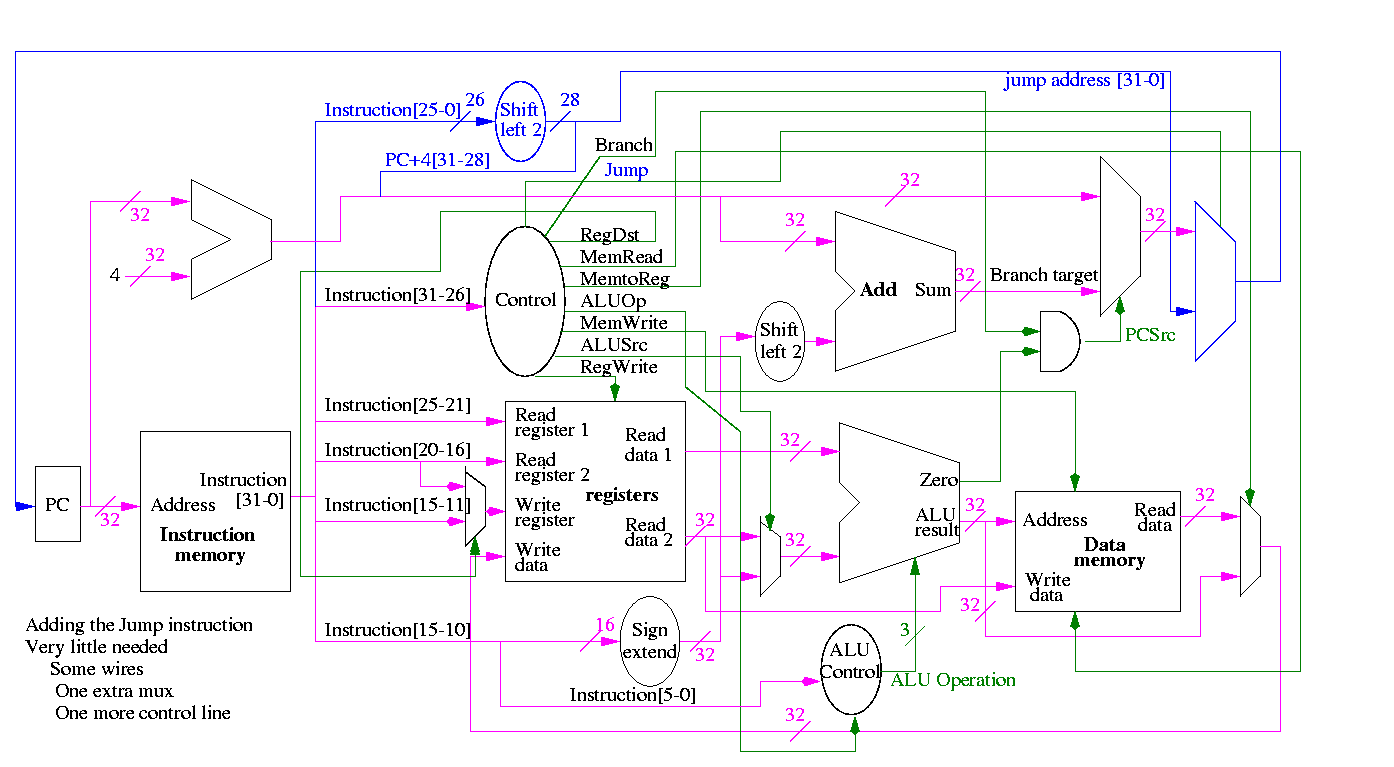

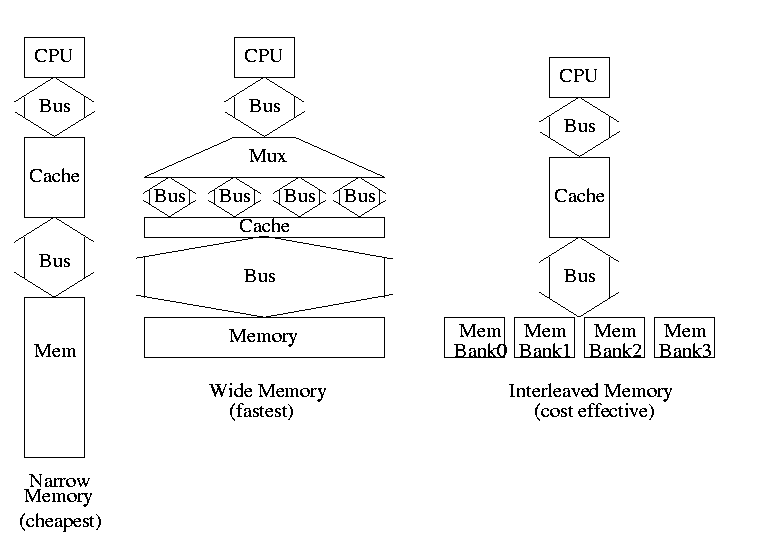

Chapter 5: The processor: datapath and control

Homework:

Start Reading Chapter 5.

5.1: Introduction

We are going to build the MIPS processor.

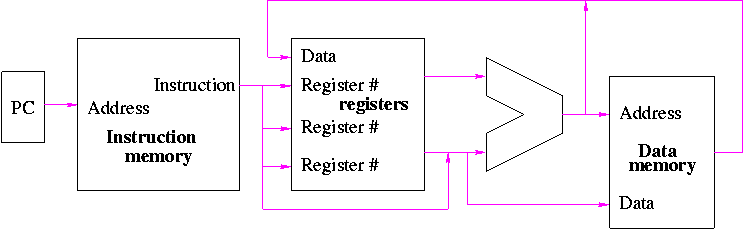

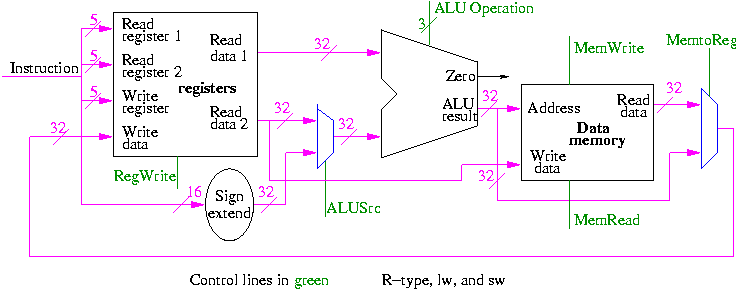

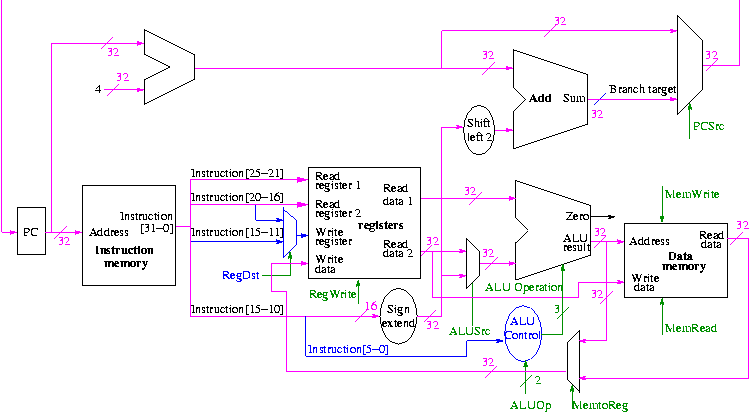

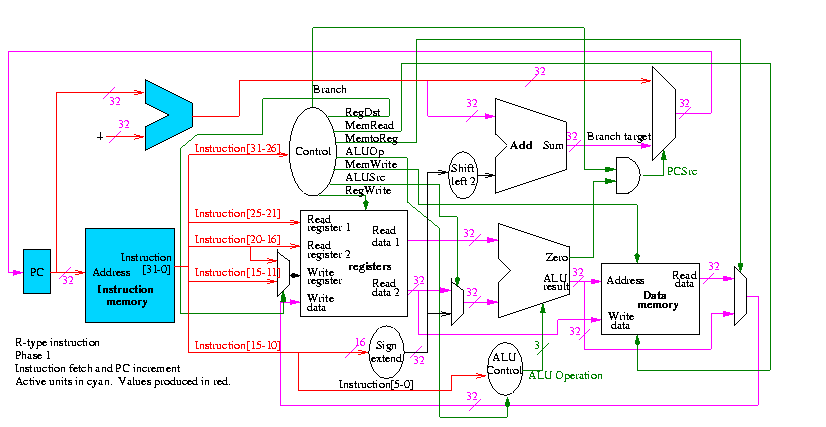

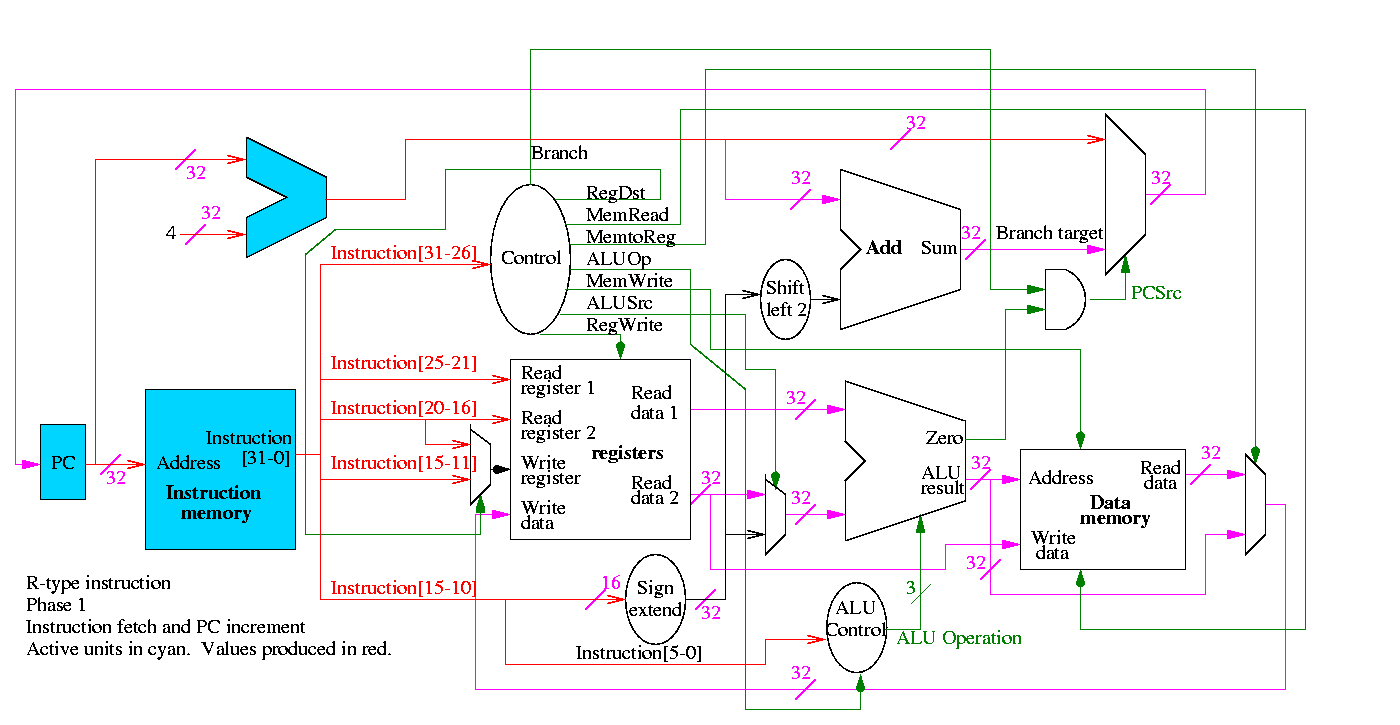

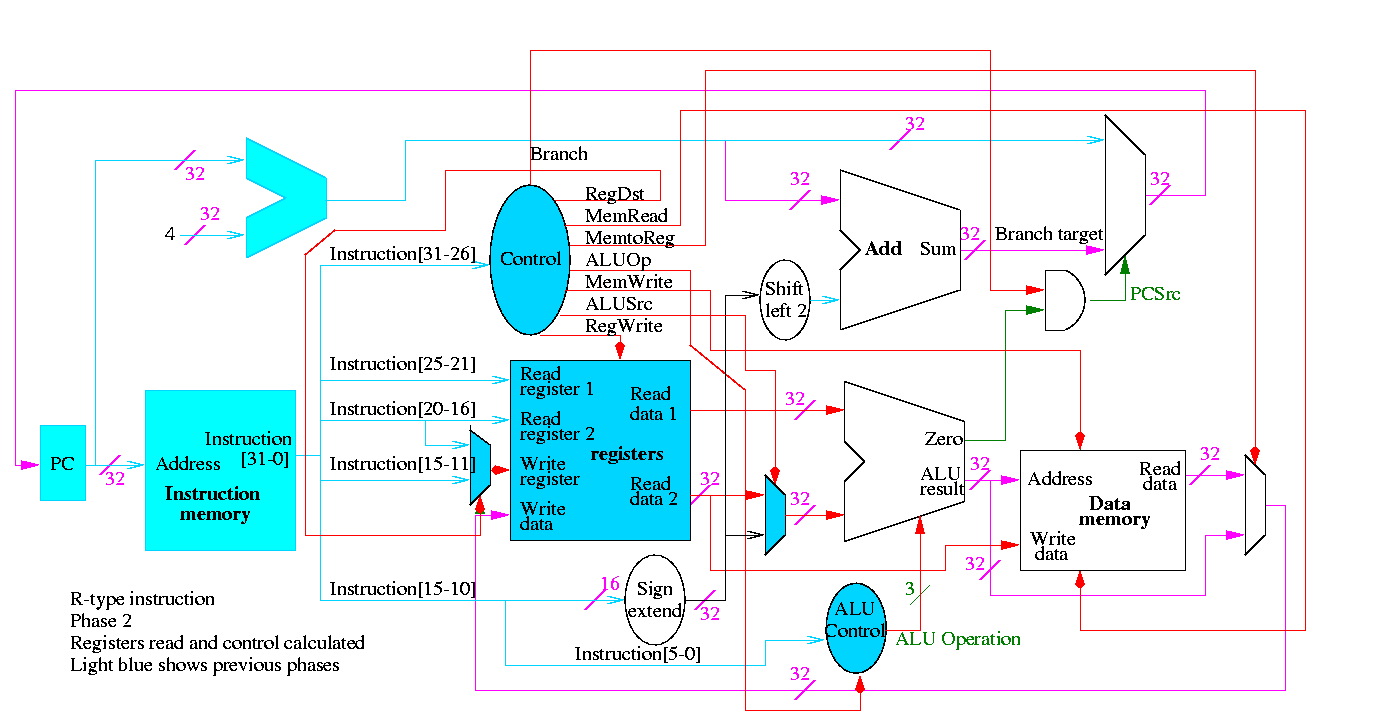

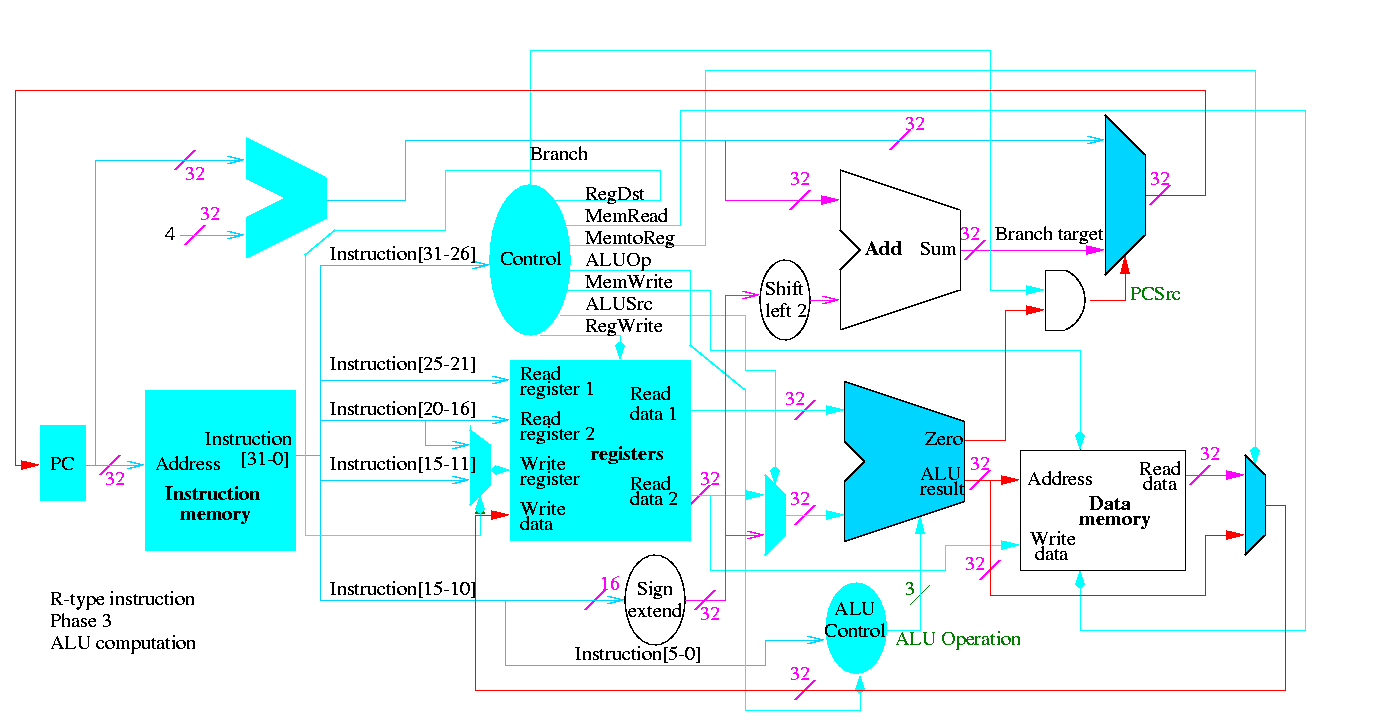

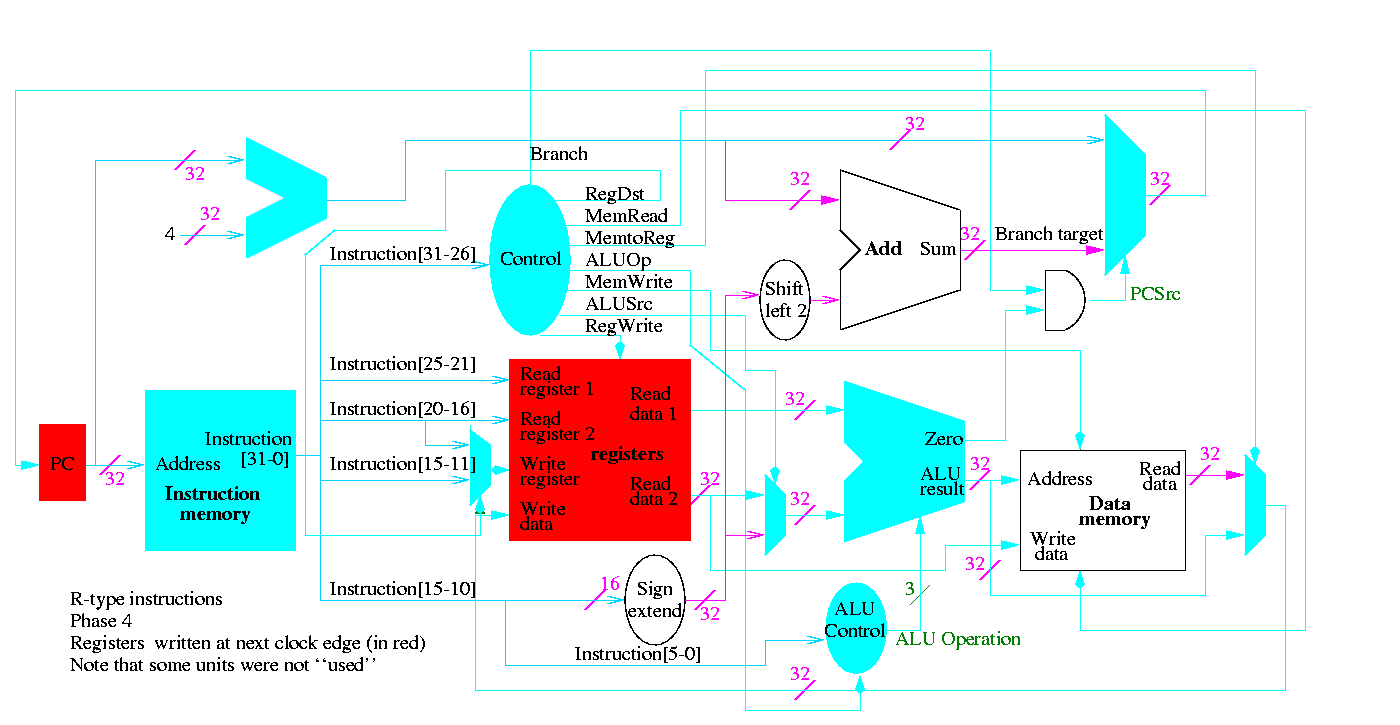

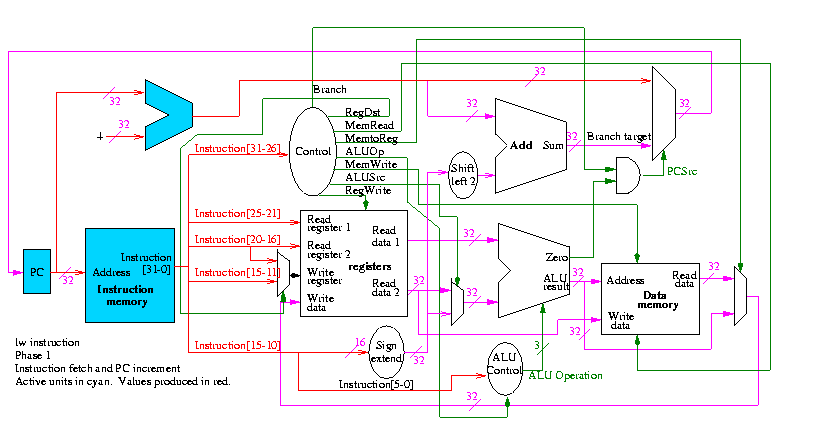

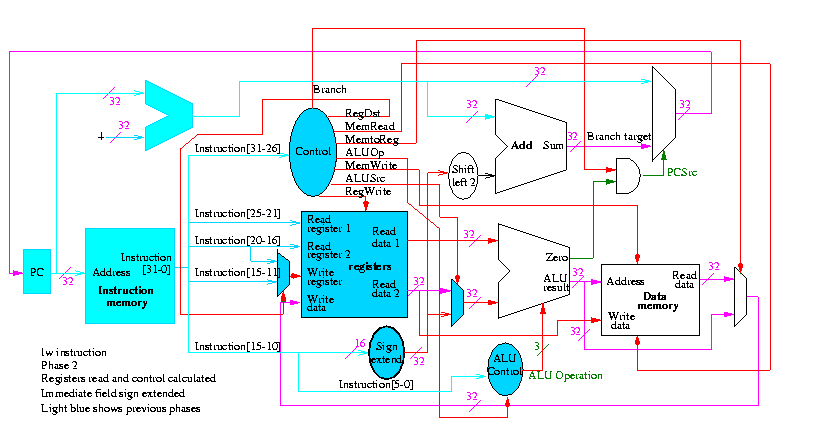

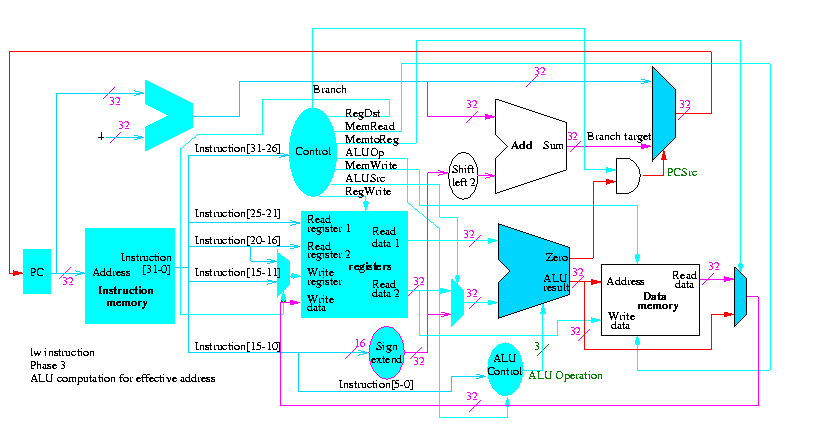

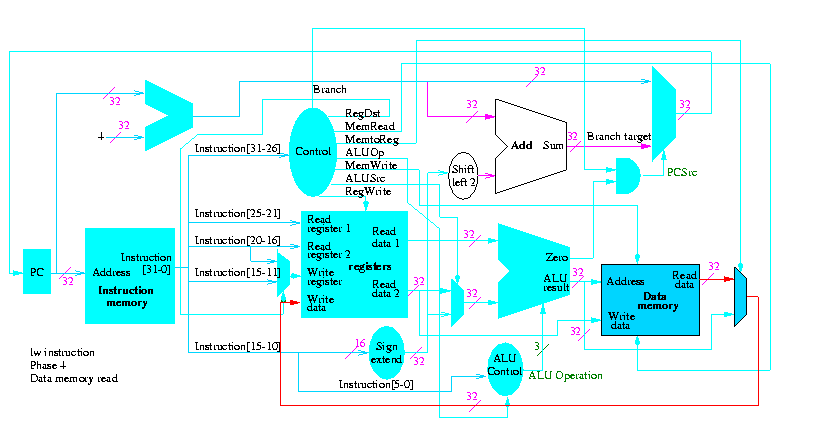

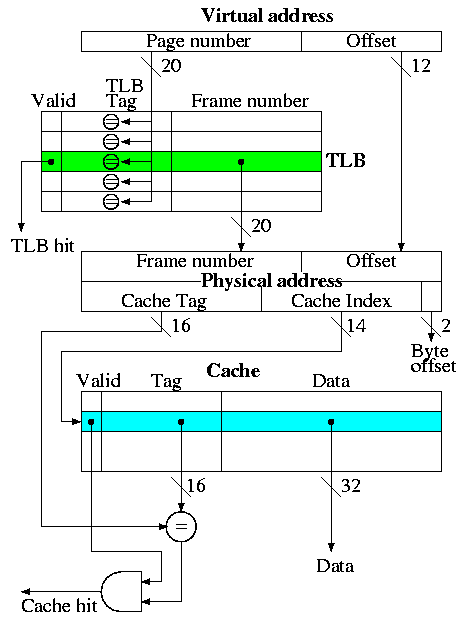

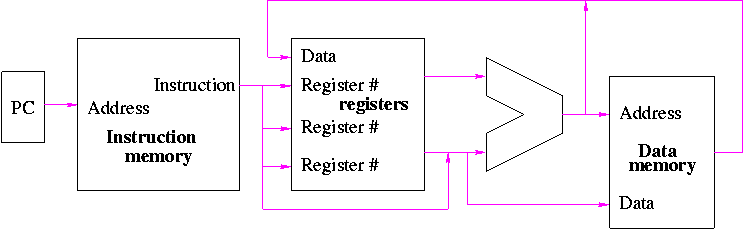

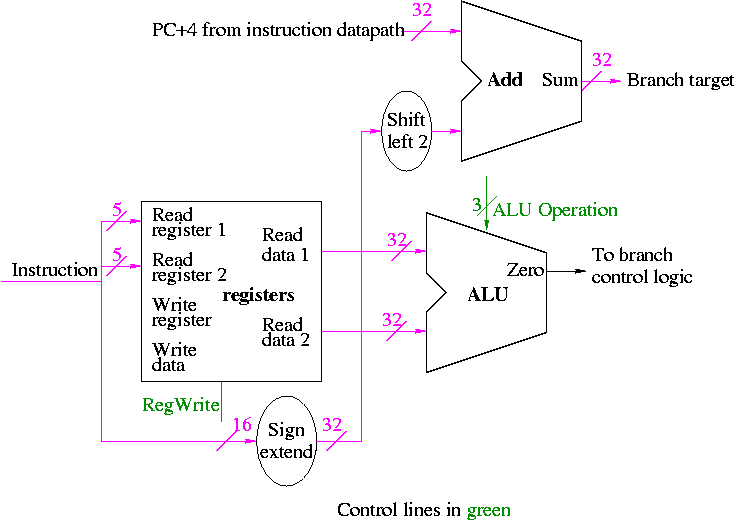

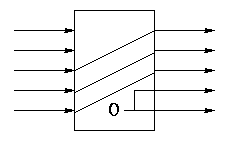

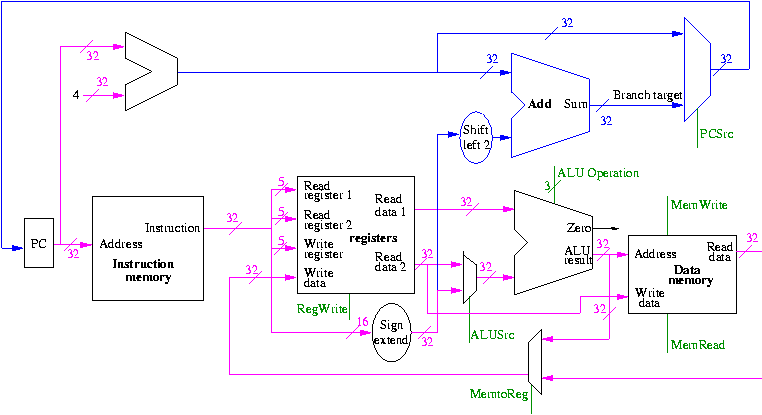

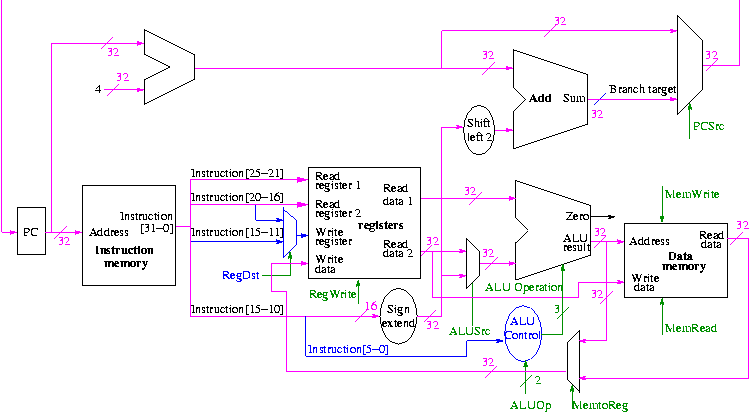

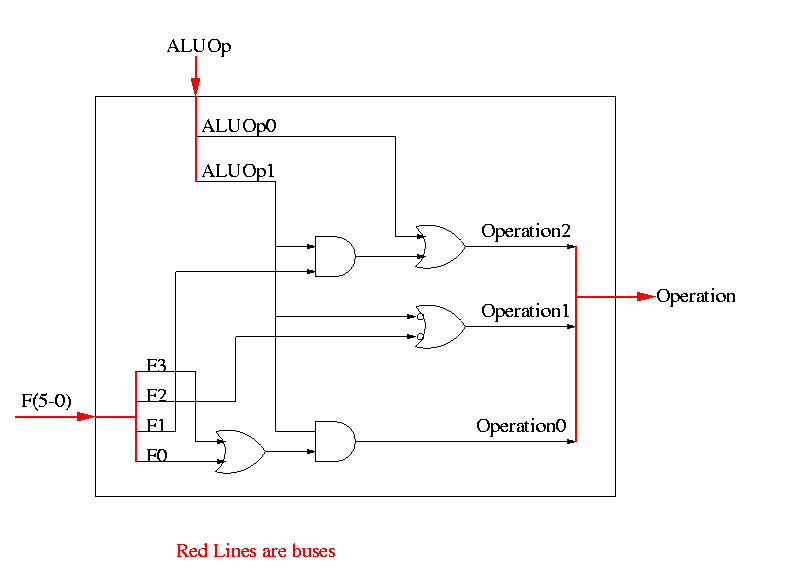

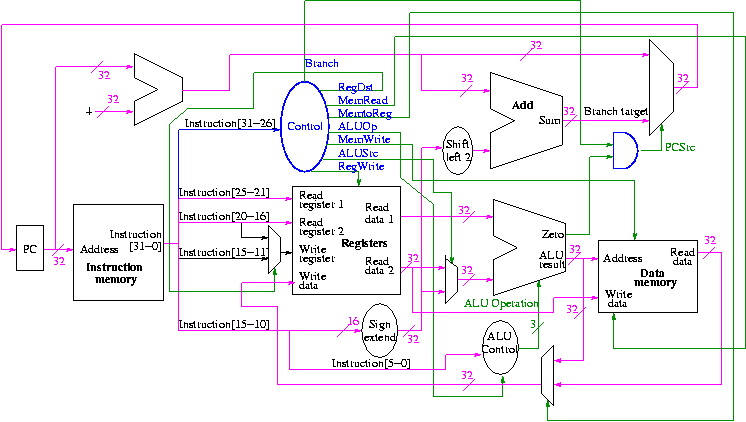

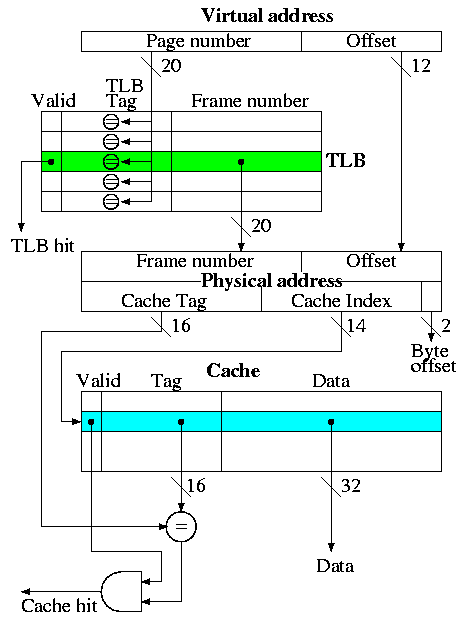

Figure 5.1, redrawn below, shows the main idea.

Note that the instruction gives the three register numbers as well as an immediate value to be added.

- No instruction actually does all this.

- We have datapaths for all possibilities.

- We will see how we arrange for only certain datapaths to be used for each instruction type.

- For example, R-type uses all three registers but not the immediate field.

- The I-type uses the immediate but not all three registers.

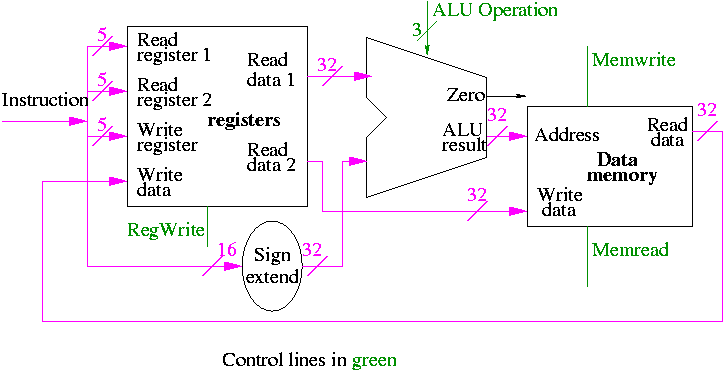

- The memory address for a load or store is the sum of a register and an immediate.

- The data value to be stored comes from a register.
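The address computation for a load or store can be sketched in a few lines of Python. This assumes, as in MIPS lw and sw, that the immediate is the instruction's 16-bit offset field and is sign-extended to 32 bits before being added to the register; the function names are hypothetical.

```python
def sign_extend16(imm):
    """Interpret the low 16 bits of imm as a two's complement number."""
    imm &= 0xFFFF
    return imm - 0x10000 if imm & 0x8000 else imm


def load_store_address(base, imm):
    """Register value plus sign-extended 16-bit immediate, kept to 32 bits."""
    return (base + sign_extend16(imm)) & 0xFFFFFFFF


print(hex(load_store_address(0x10008000, 0xFFFC)))   # 0x10007ffc (offset -4)
```

Sign extension is what lets a single adder handle both positive and negative offsets from the base register.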

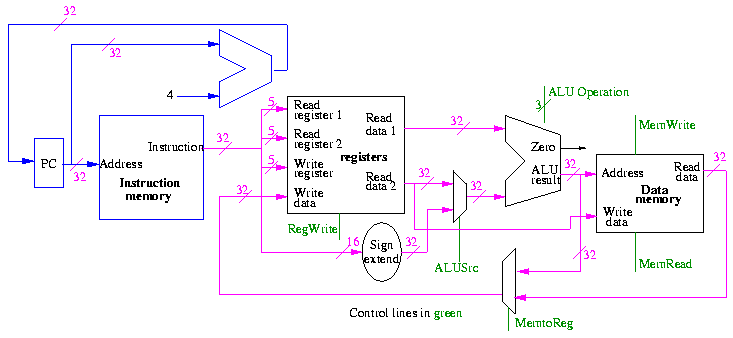

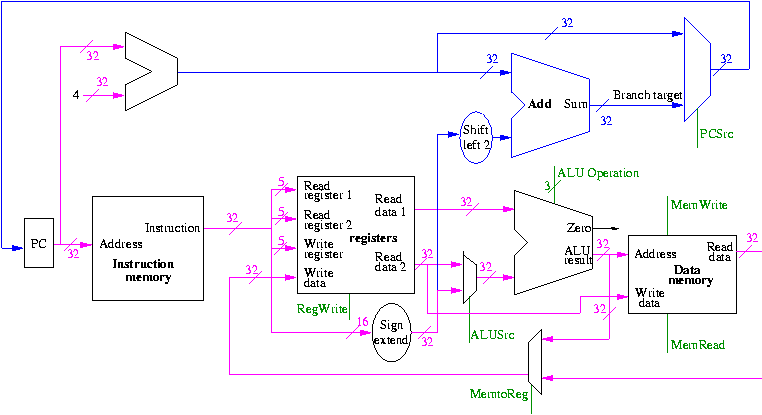

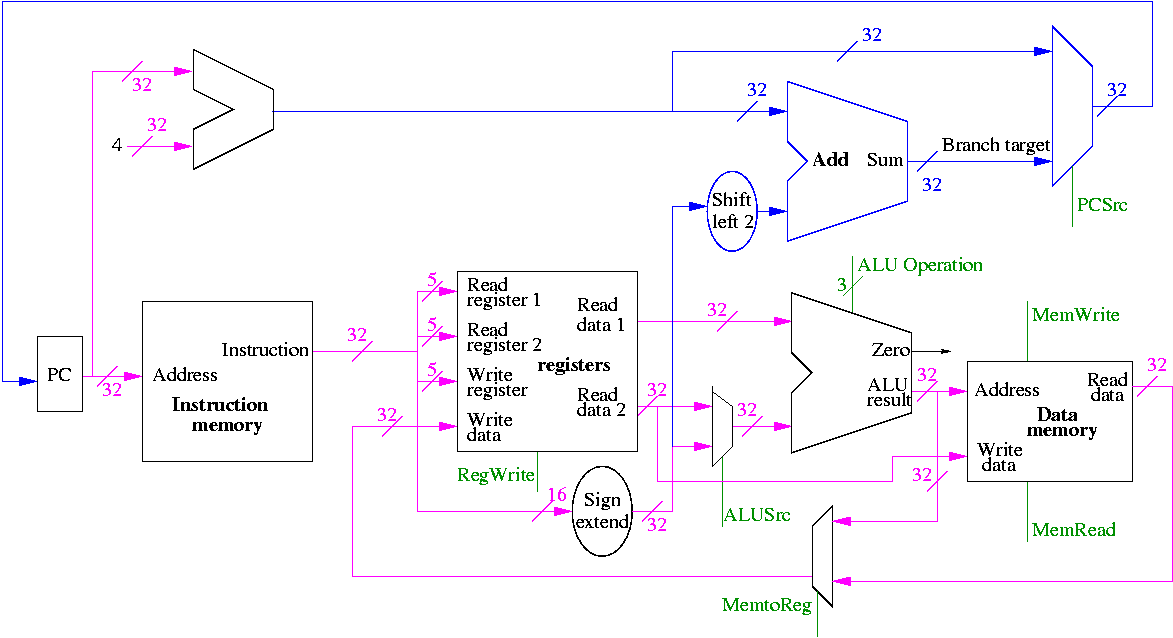

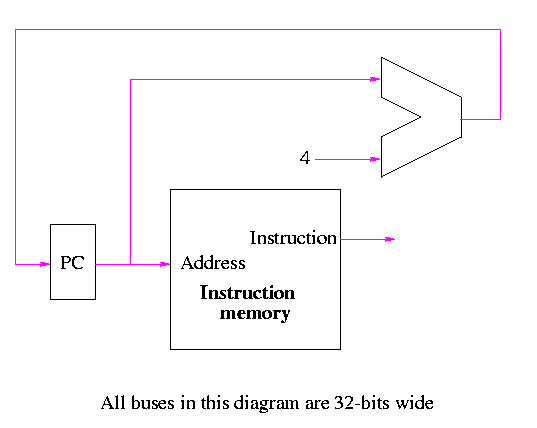

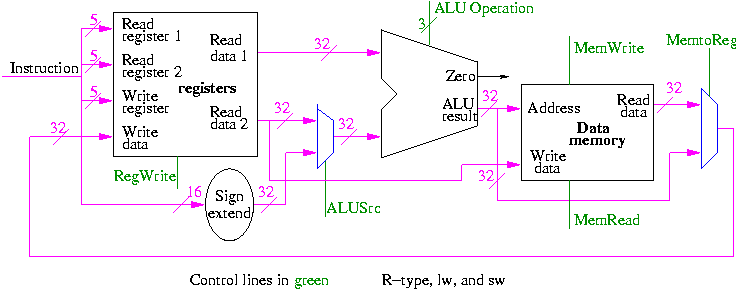

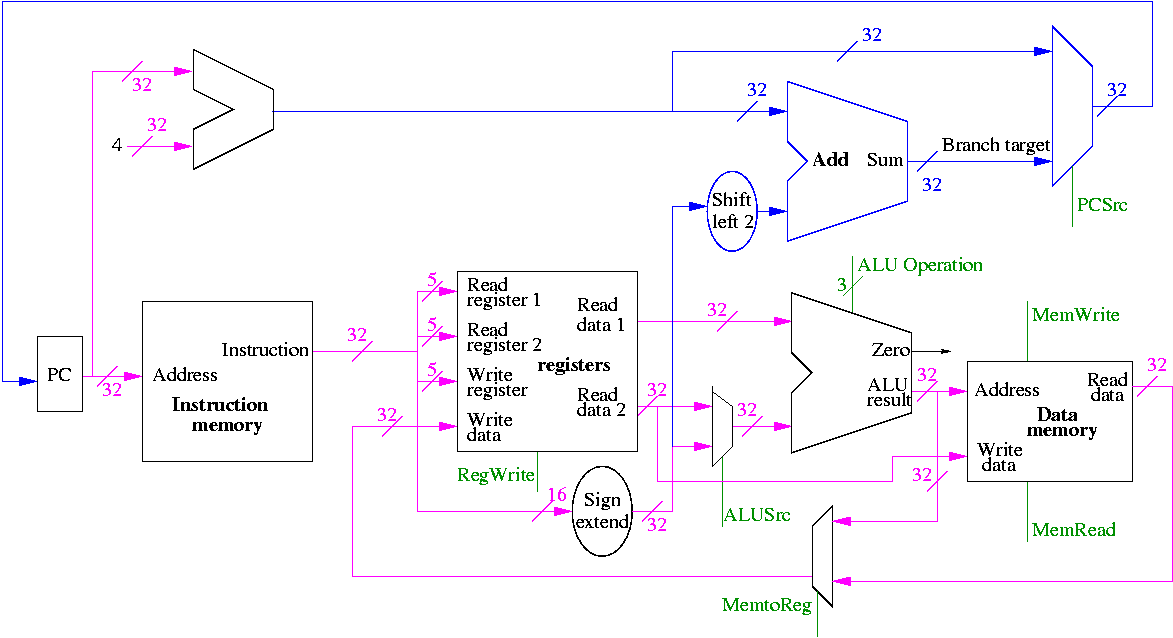

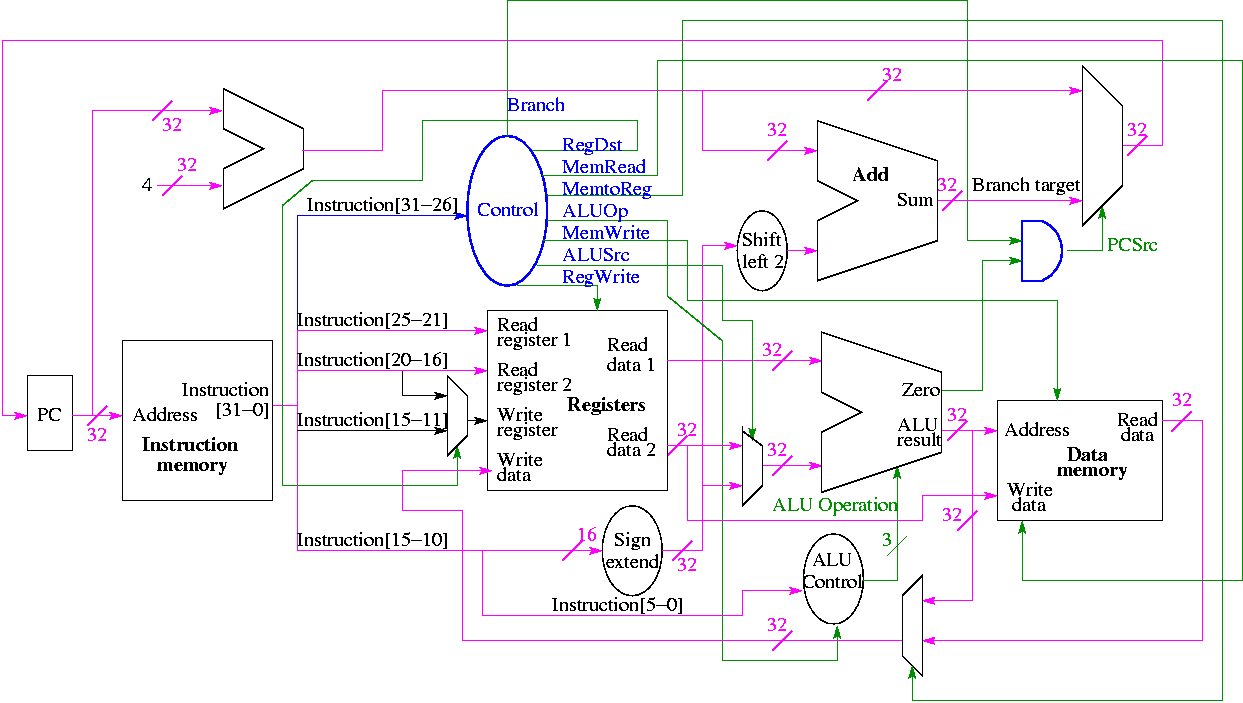

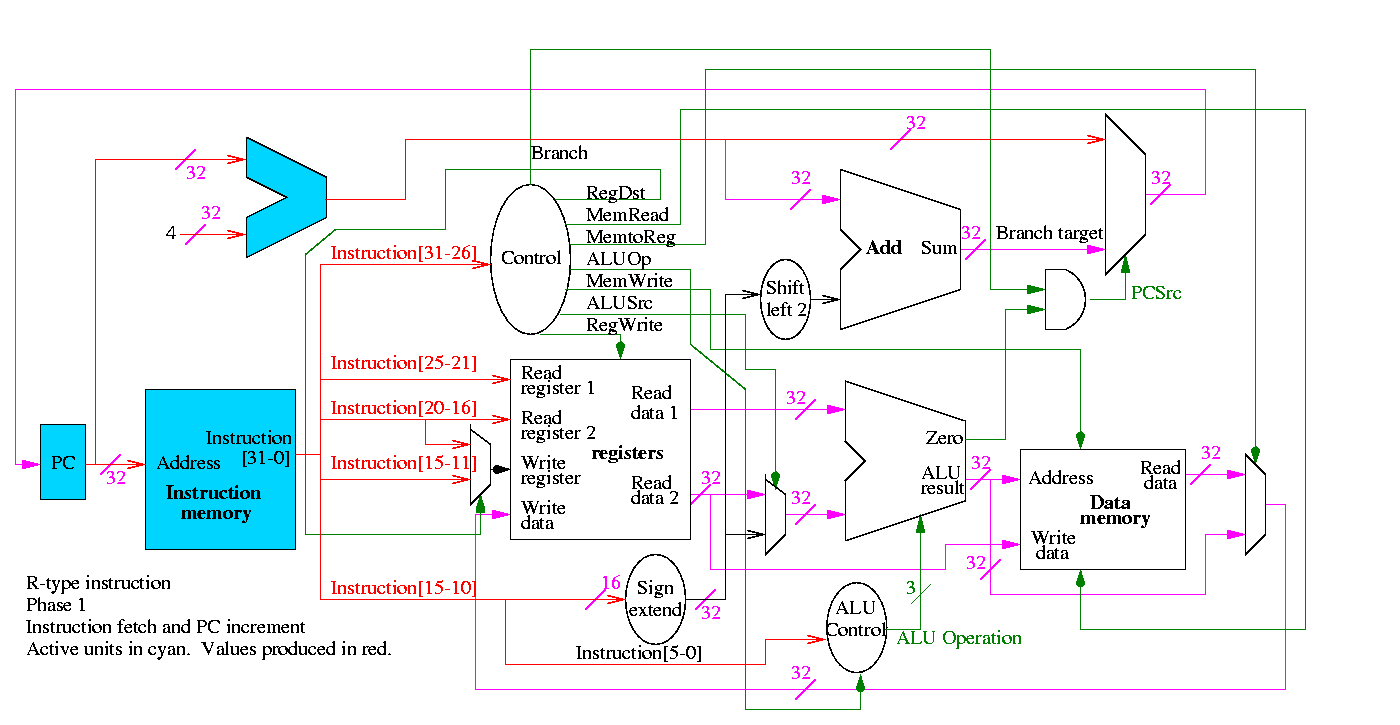

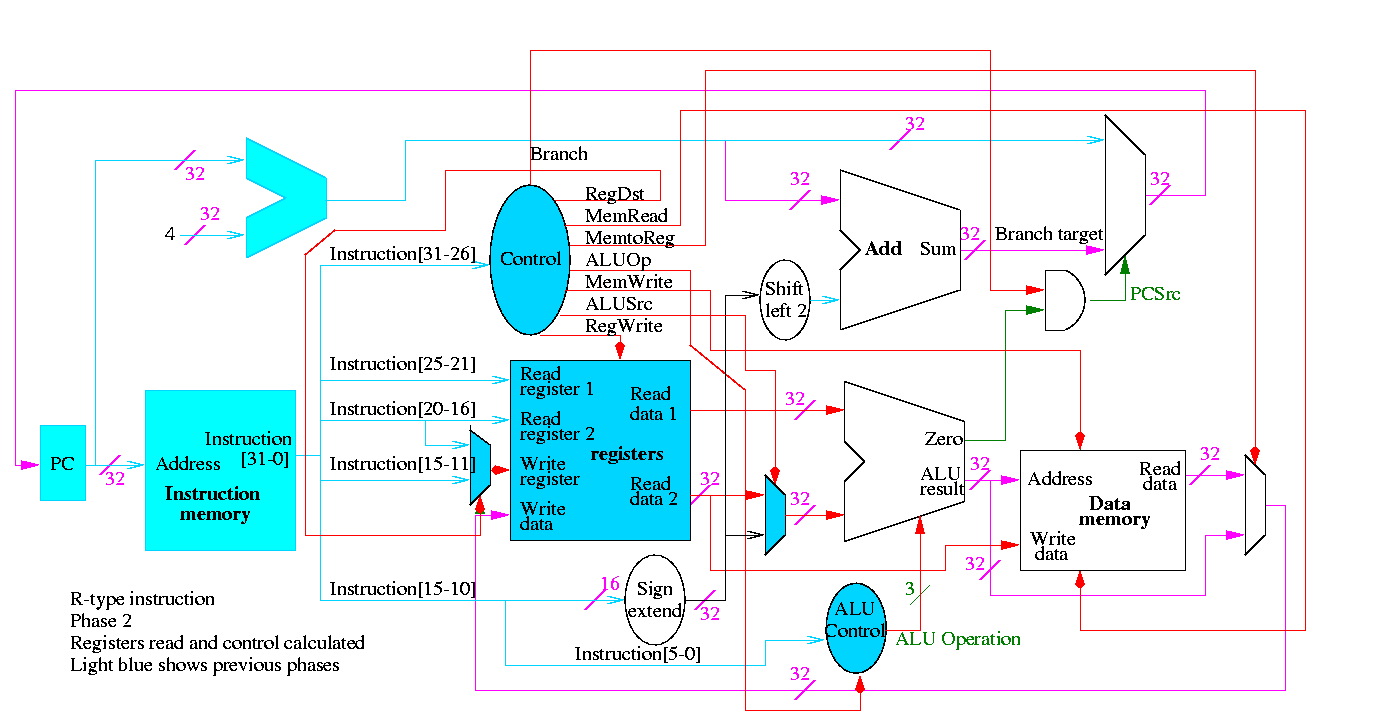

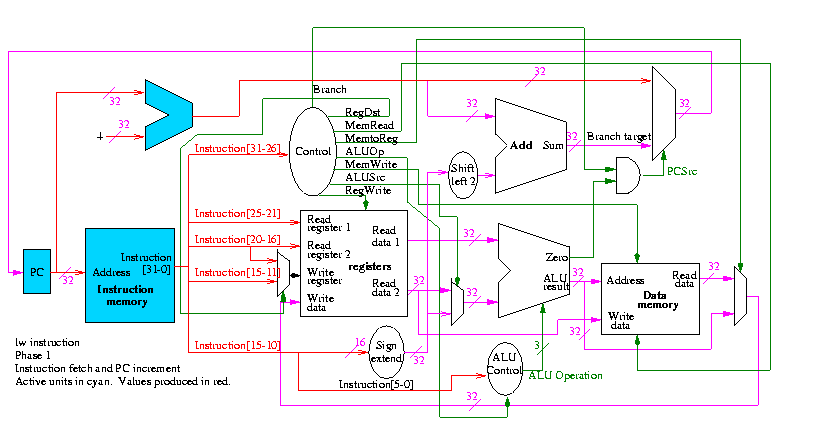

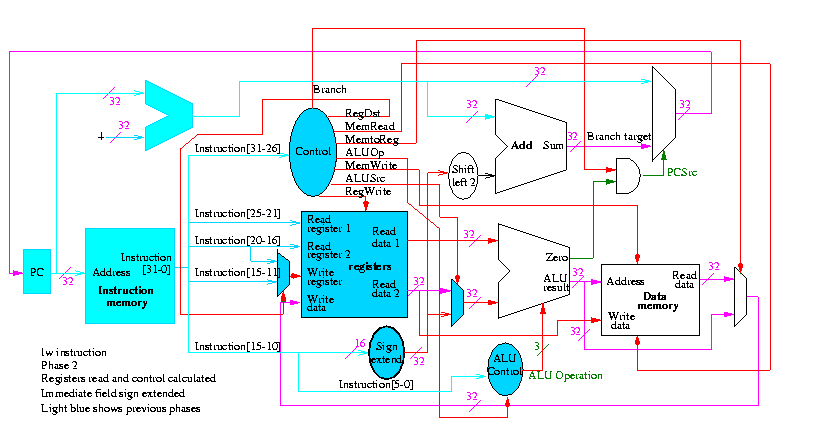

5.2: Building a datapath

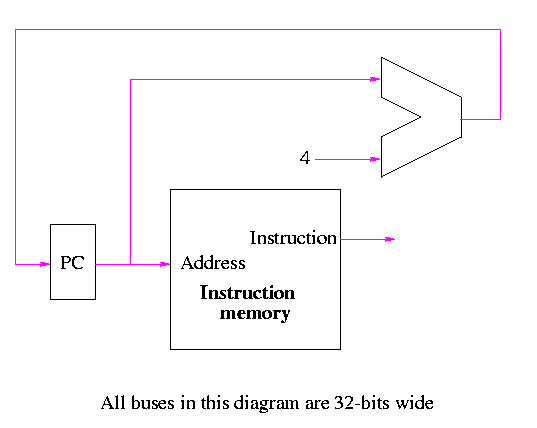

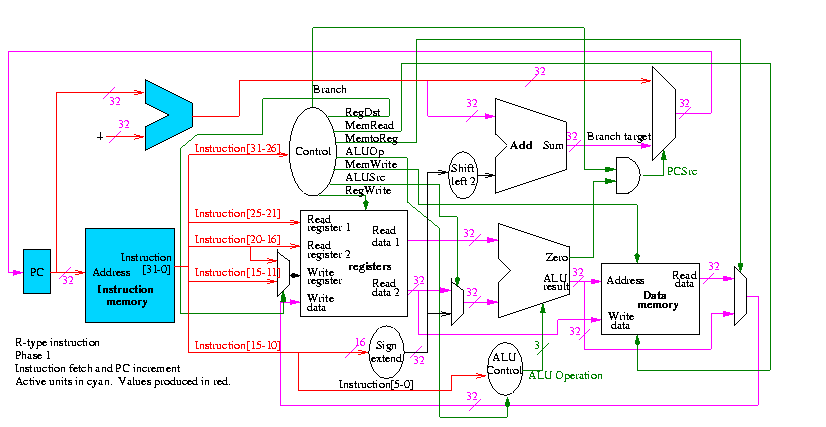

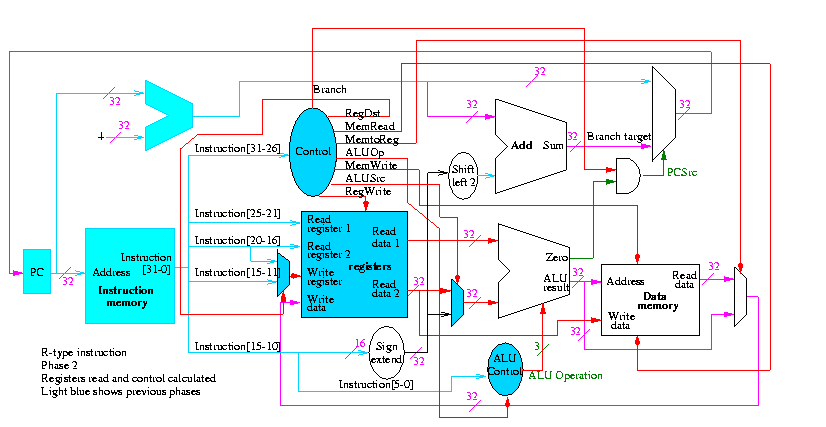

Let's begin doing the pieces in more detail.

Instruction fetch

We are ignoring branches for now.

- How come no write line for the PC register?

- Ans: We write it every cycle.

- How come no control for the ALU?

- Ans: This one always adds.

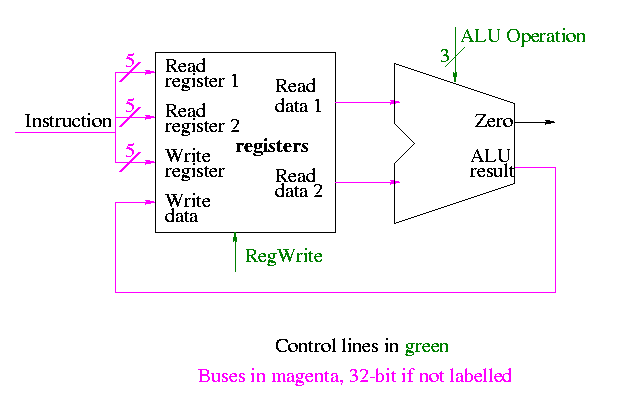

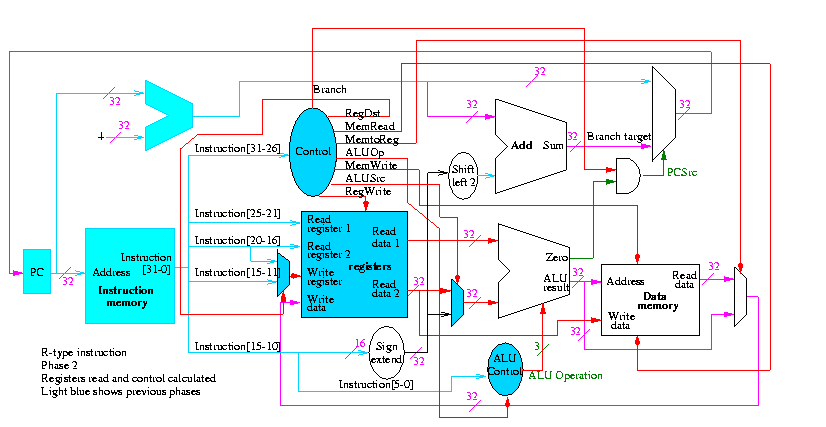

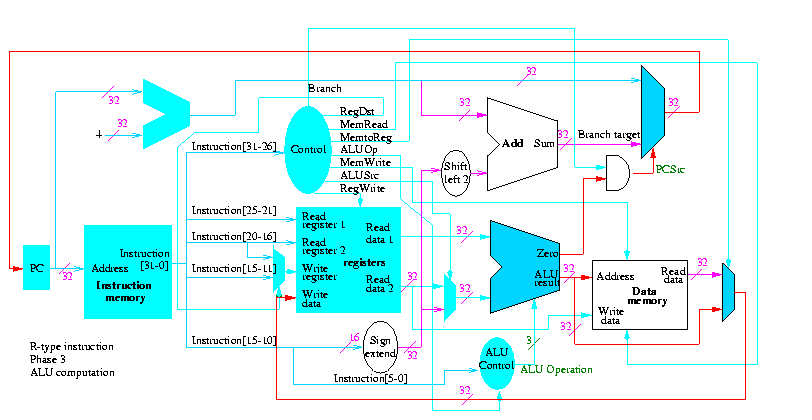

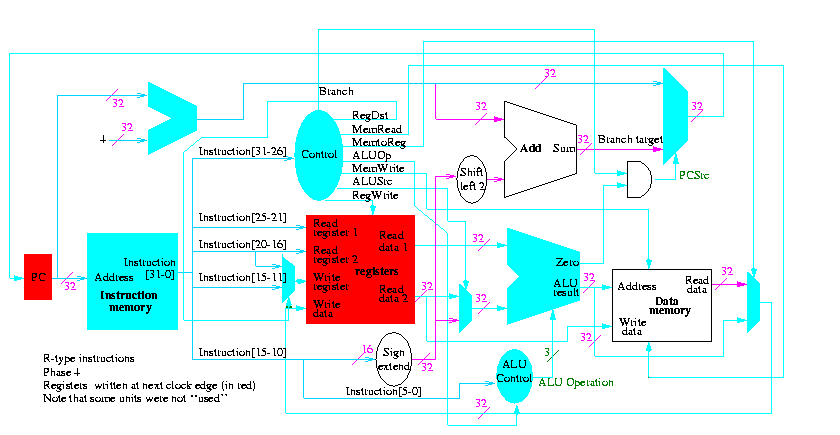

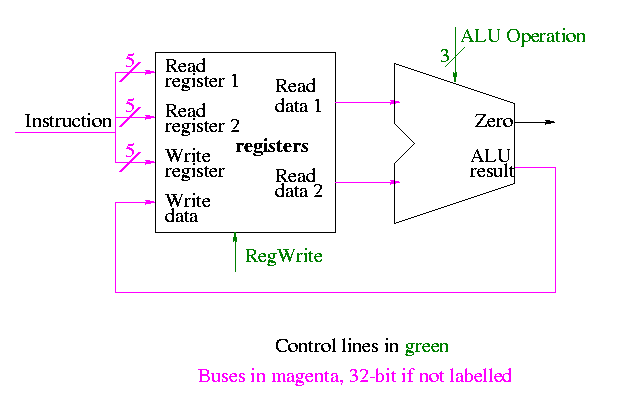

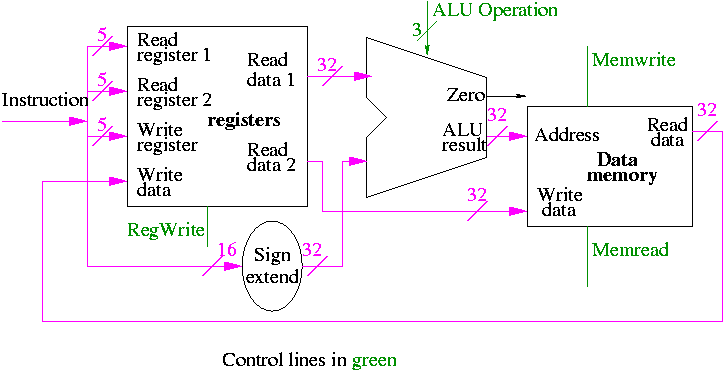

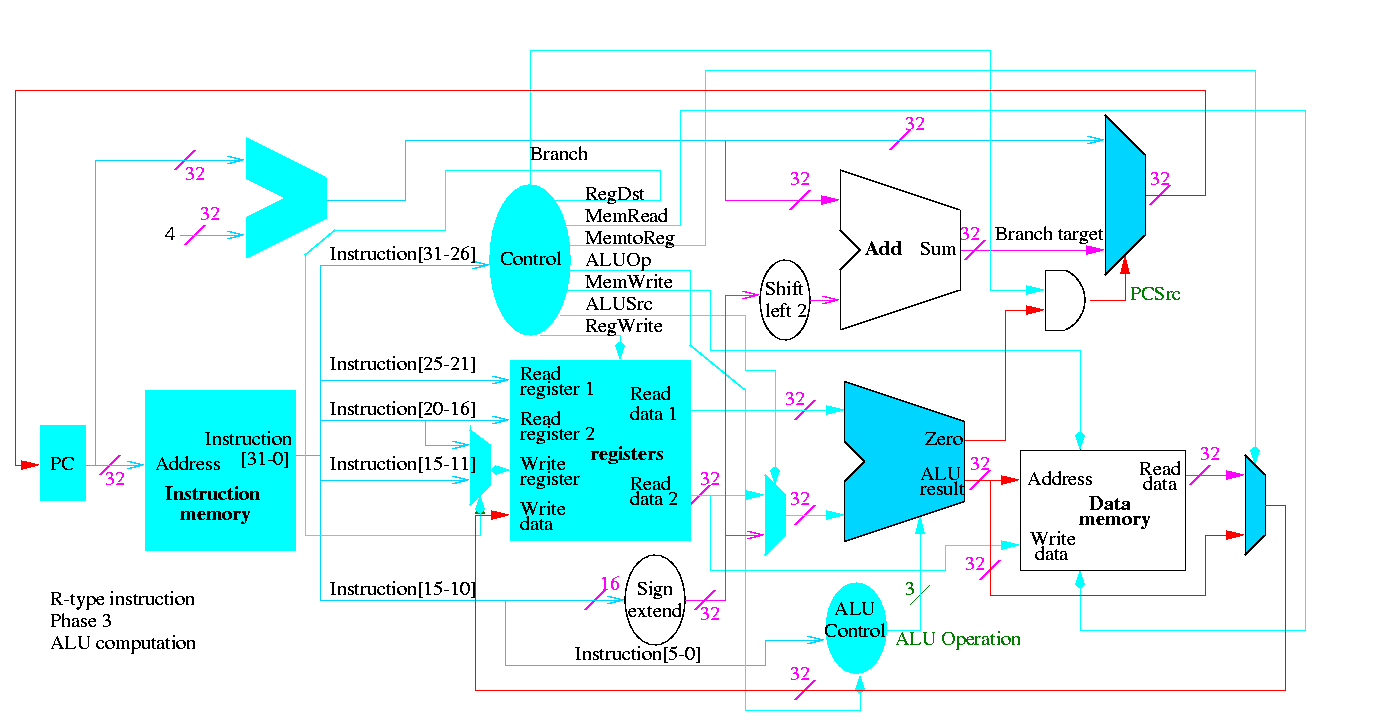

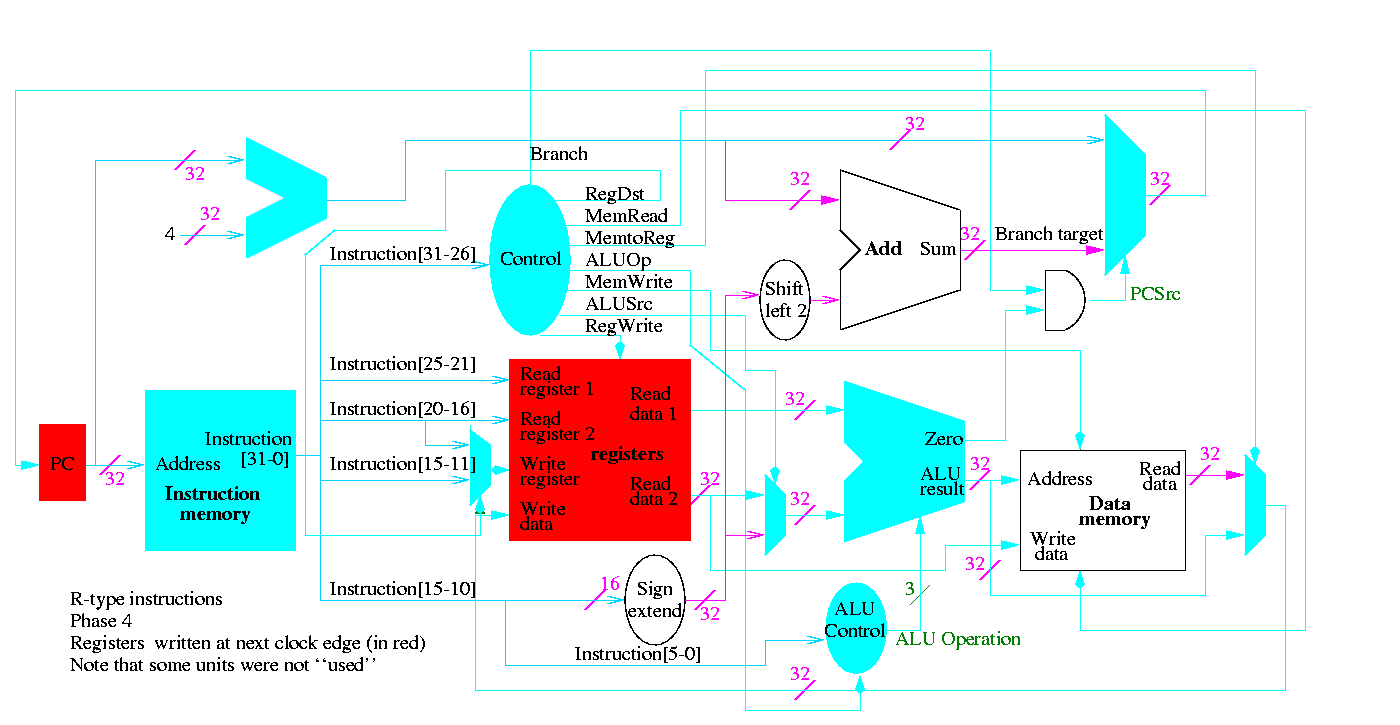

R-type instructions

- ``Read'' and ``Write'' in the diagram are adjectives, not verbs.

- The 32-bit bus with the instruction is divided into three 5-bit buses, one for each register number (plus other wires not shown).

- Two read ports and one write port, just as we learned in chapter 4.

- The 3-bit control consists of Bnegate and Op from chapter 4.

- The RegWrite control line is always asserted for R-type instructions.
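To make the field split concrete, here is a Python sketch of decoding an R-type instruction and running it through a two-read-port, one-write-port register file. It assumes the standard MIPS R-format layout (op, rs, rt, rd, shamt, funct) and that register $0 always reads as zero. The names `decode_rtype` and `rtype_step` and the `alu` callback are hypothetical.

```python
def decode_rtype(instr):
    """Split a 32-bit MIPS R-type instruction into its fields."""
    return {
        "op":    (instr >> 26) & 0x3F,   # bits 31-26
        "rs":    (instr >> 21) & 0x1F,   # bits 25-21: first read register
        "rt":    (instr >> 16) & 0x1F,   # bits 20-16: second read register
        "rd":    (instr >> 11) & 0x1F,   # bits 15-11: write register
        "shamt": (instr >> 6) & 0x1F,    # bits 10-6
        "funct": instr & 0x3F,           # bits 5-0
    }


def rtype_step(regs, instr, alu, reg_write=True):
    """Read two registers, apply `alu`, write rd if RegWrite is asserted."""
    f = decode_rtype(instr)
    a, b = regs[f["rs"]], regs[f["rt"]]  # the two read ports
    result = alu(a, b)
    if reg_write and f["rd"] != 0:       # the one write port; $0 stays zero
        regs[f["rd"]] = result


regs = [0] * 32
regs[17], regs[18] = 5, 7                # $s1 = 5, $s2 = 7
rtype_step(regs, 0x02324020, lambda a, b: (a + b) & 0xFFFFFFFF)  # add $t0,$s1,$s2
print(regs[8])                           # 12
```

Here 0x02324020 decodes to rs=17, rt=18, rd=8, funct=32, i.e. add $t0, $s1, $s2.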

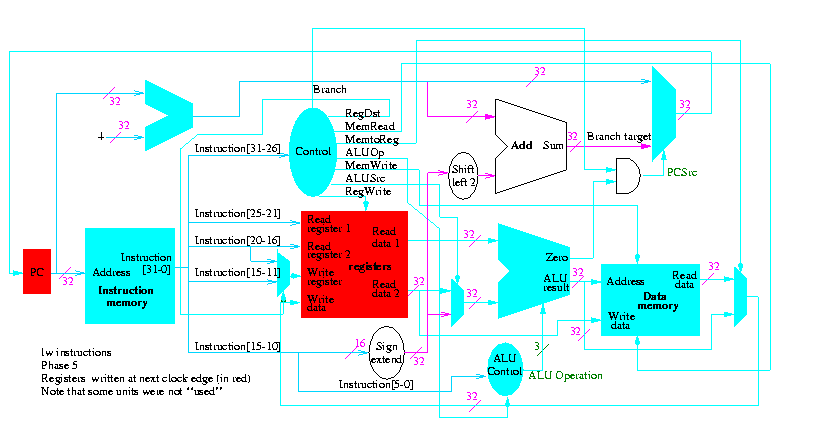

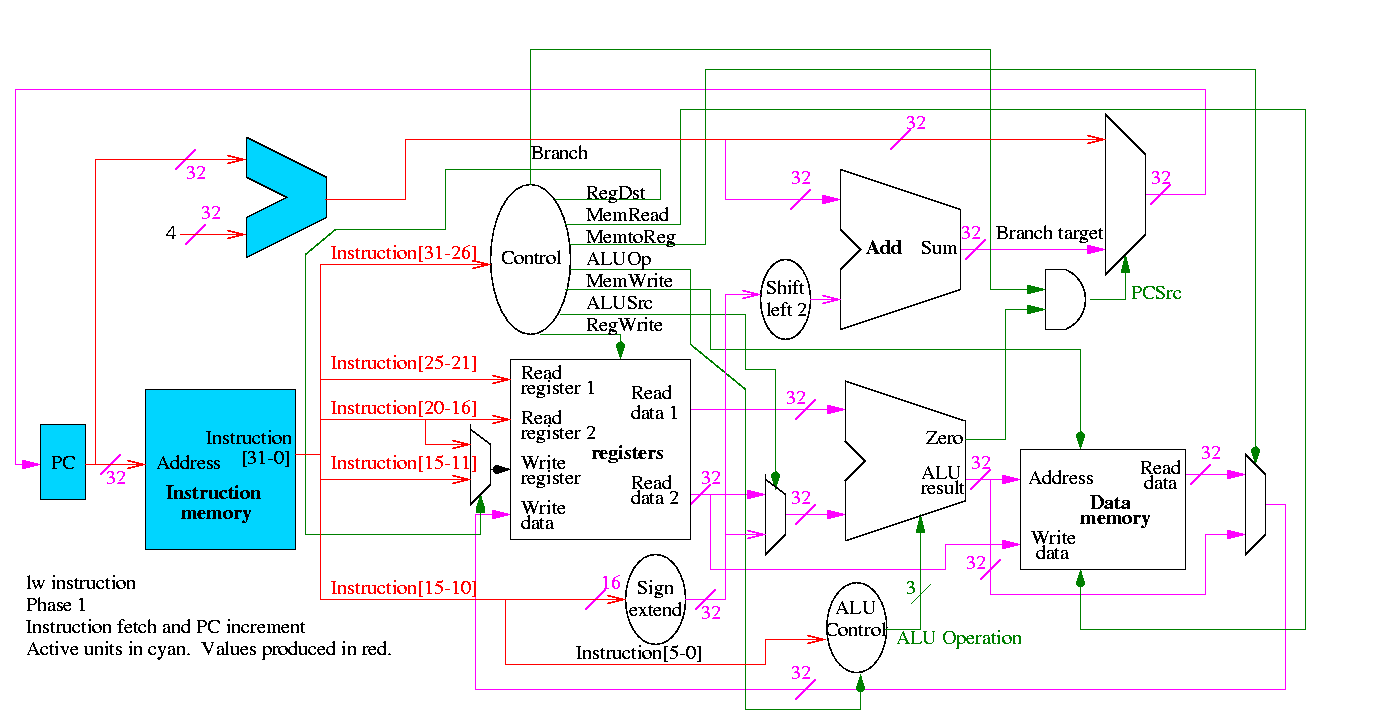

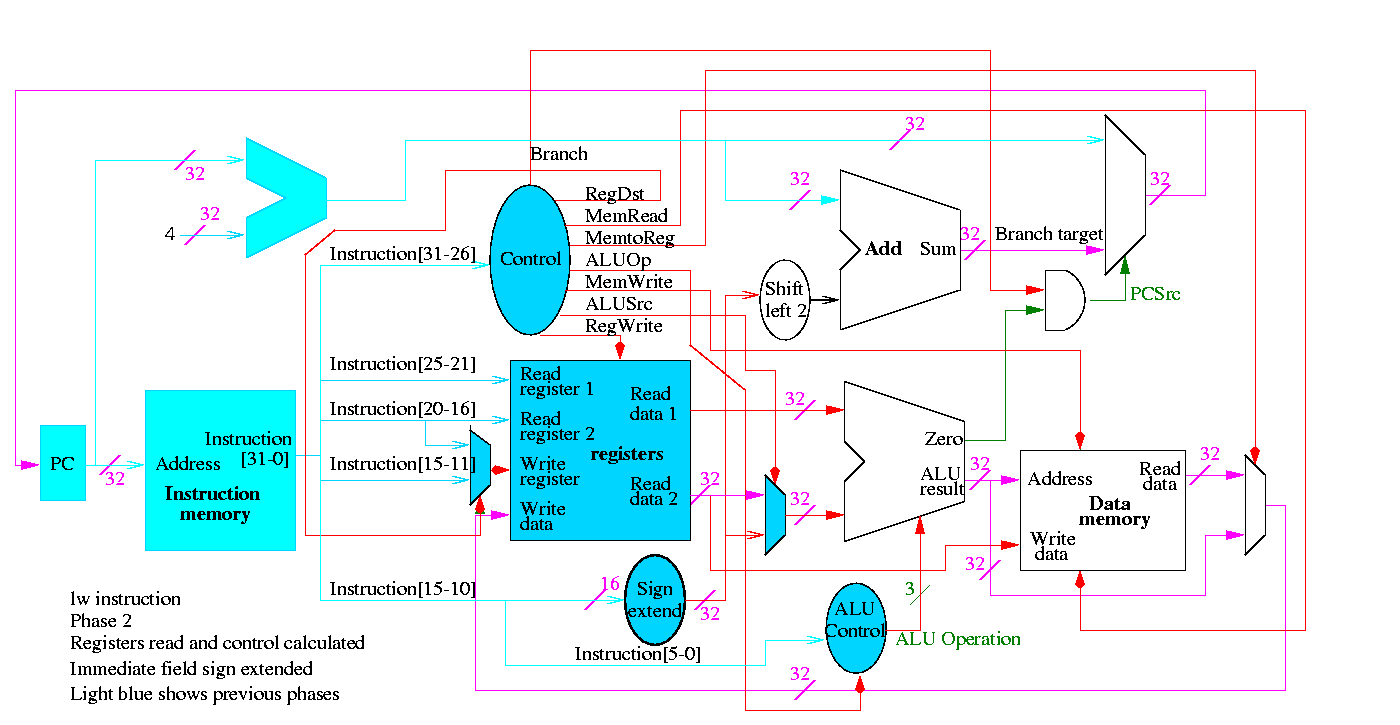

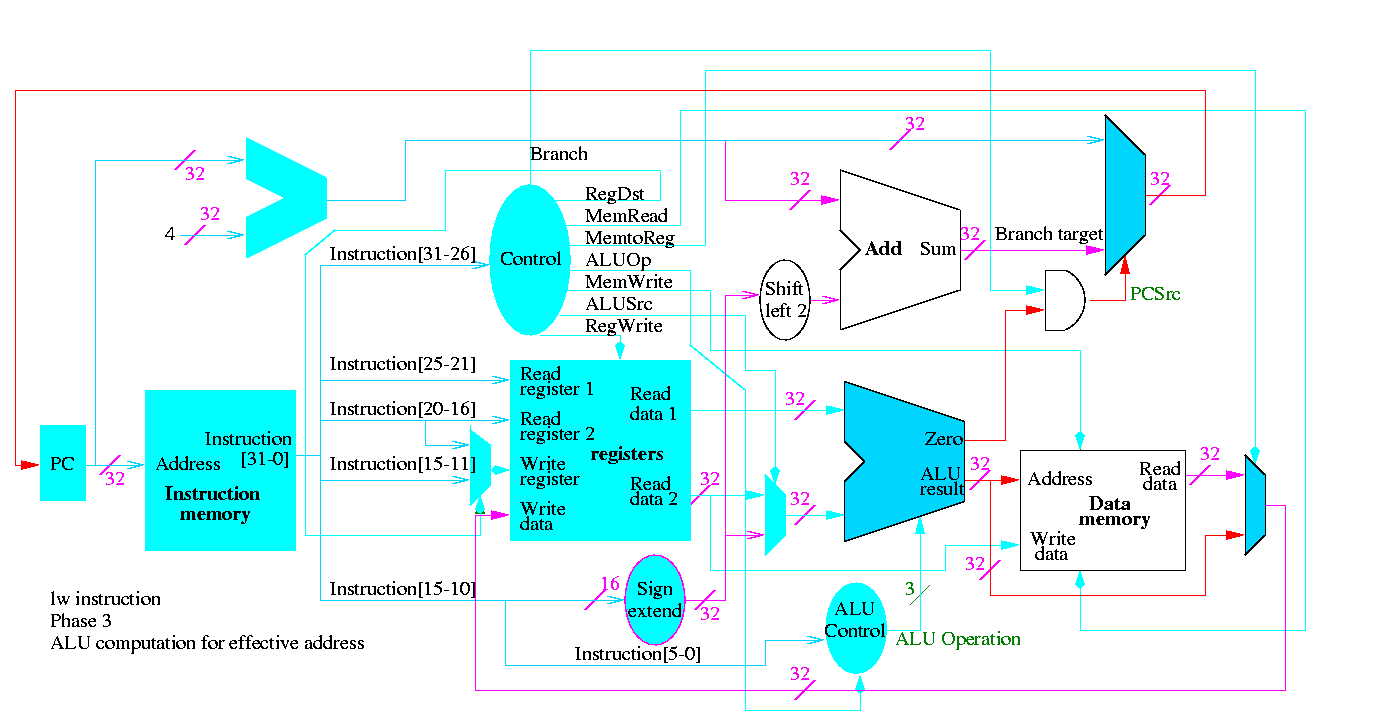

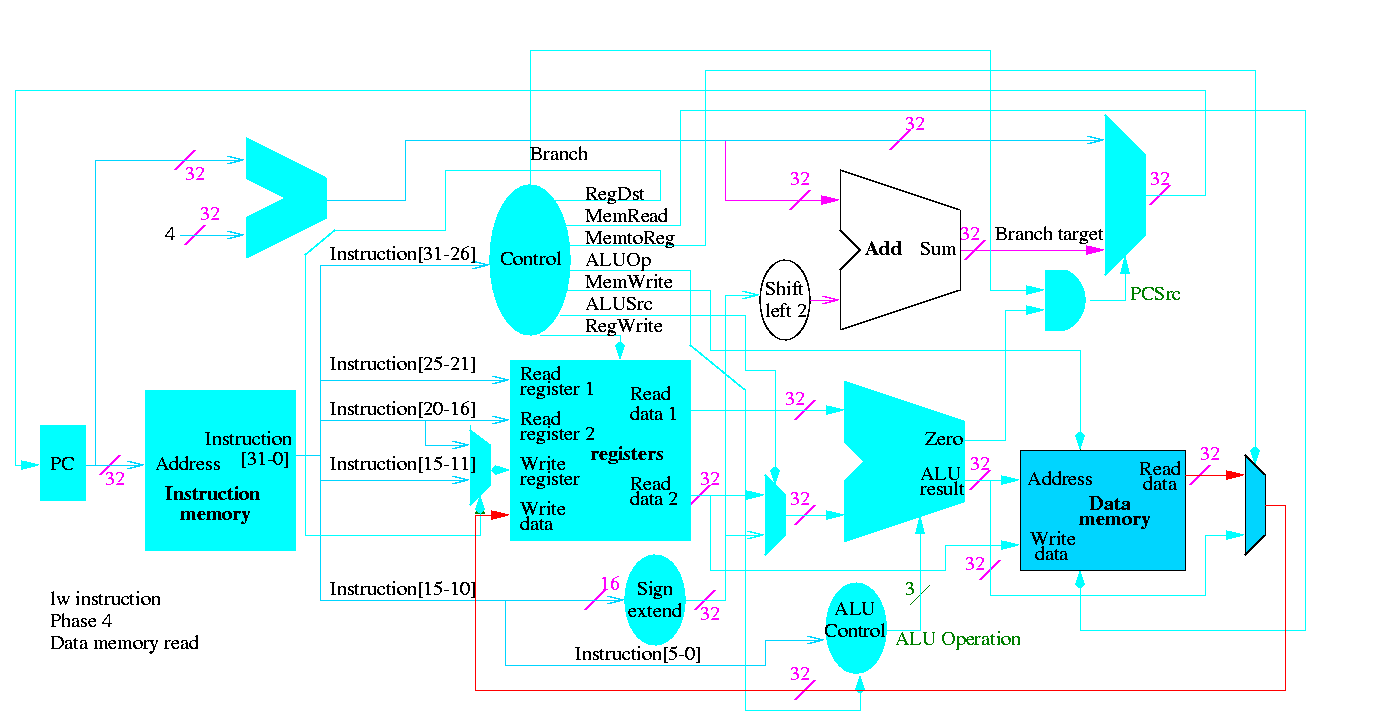

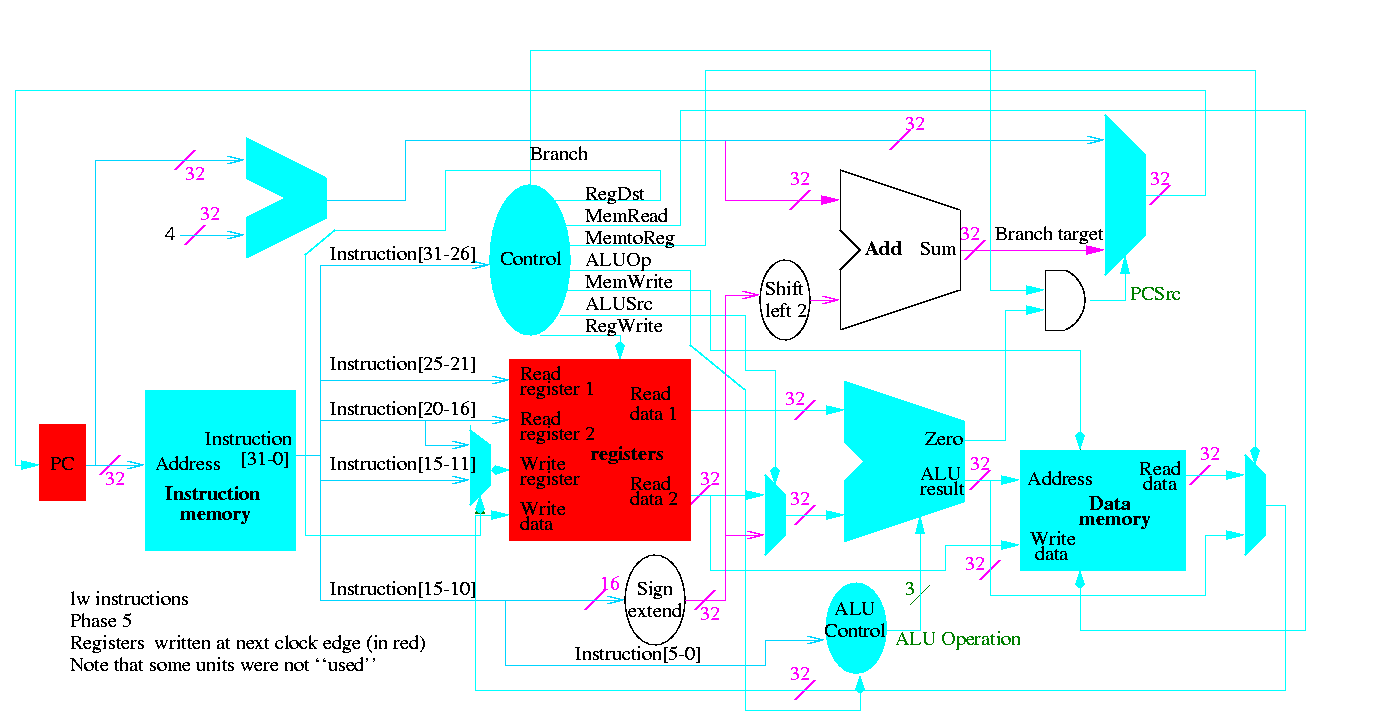

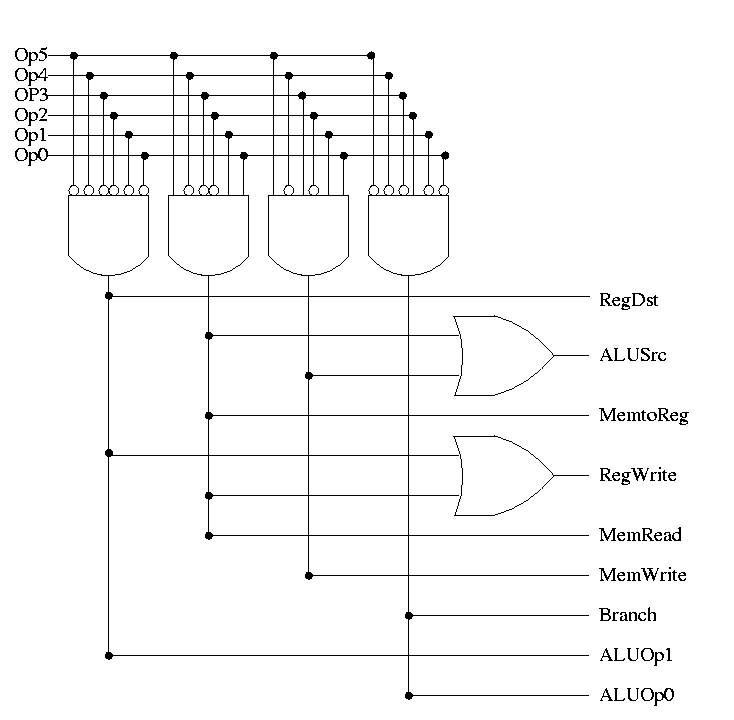

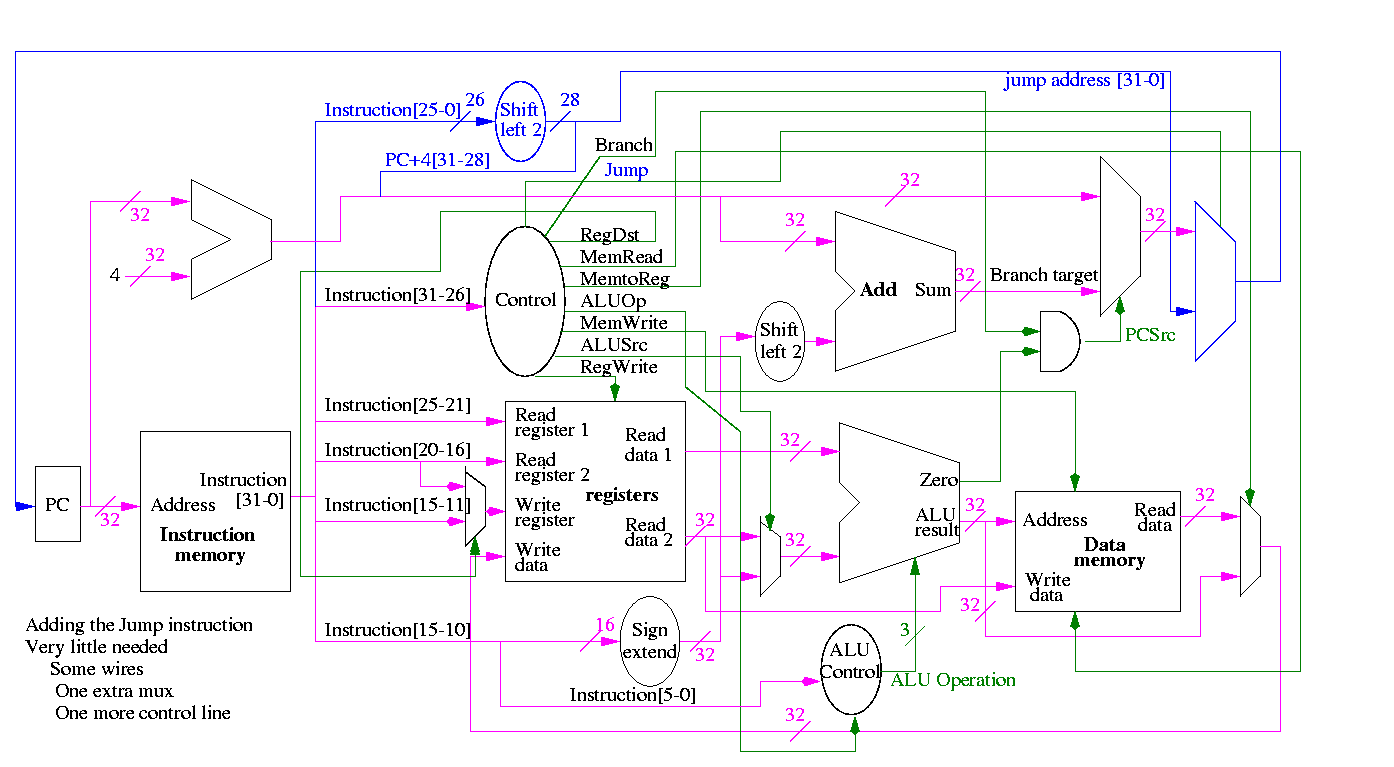

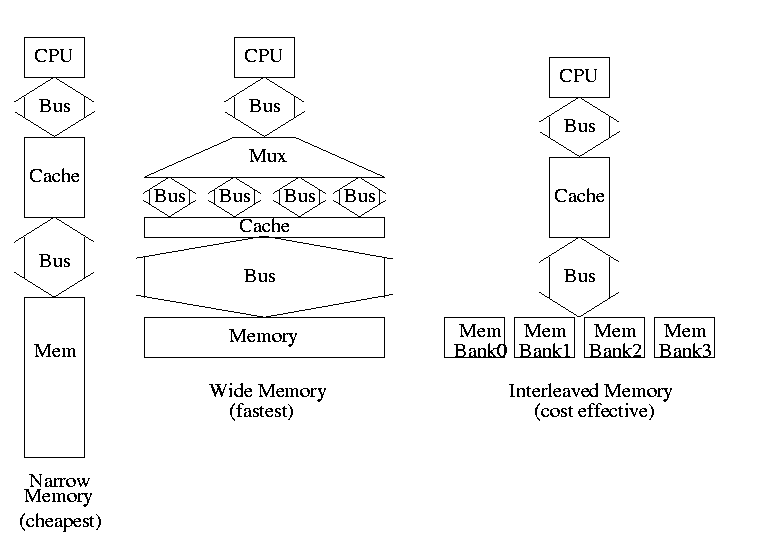

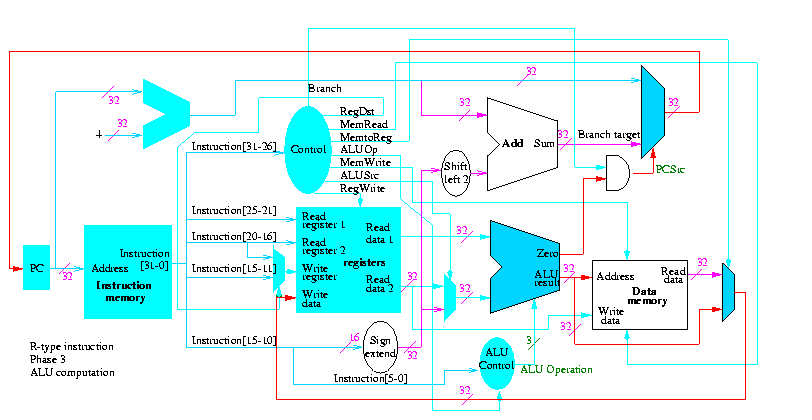

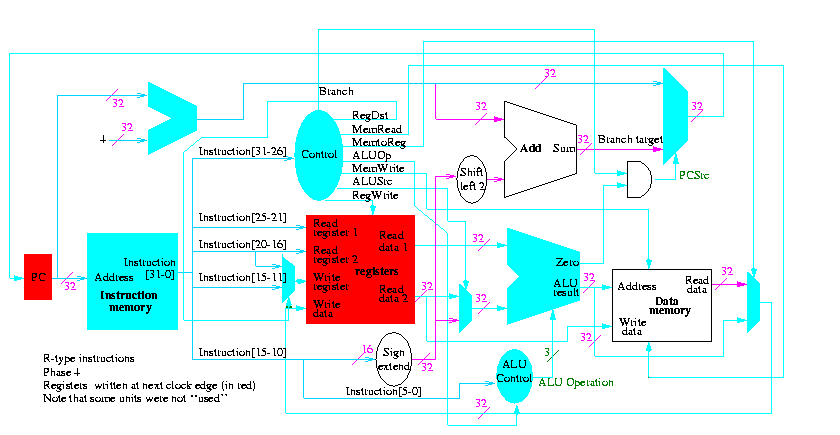

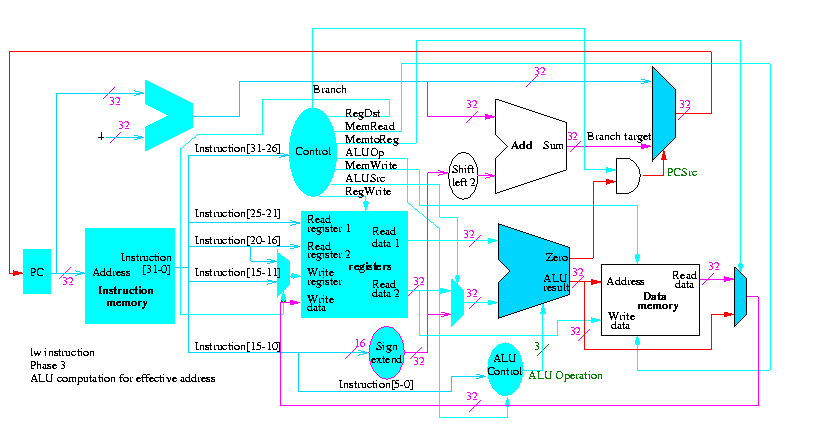

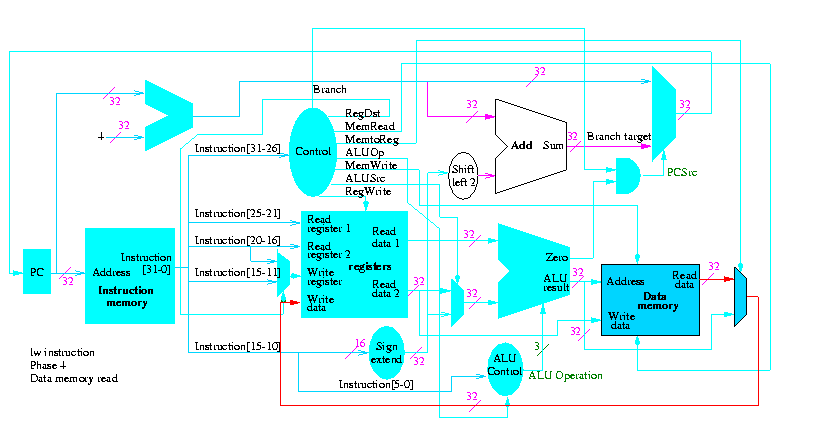

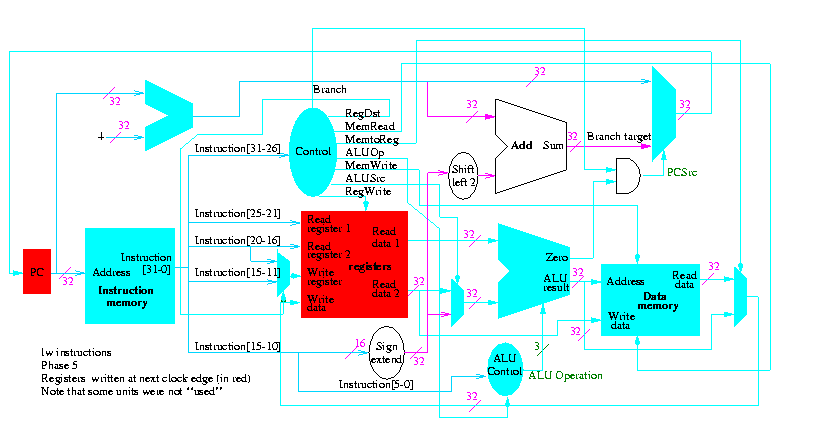

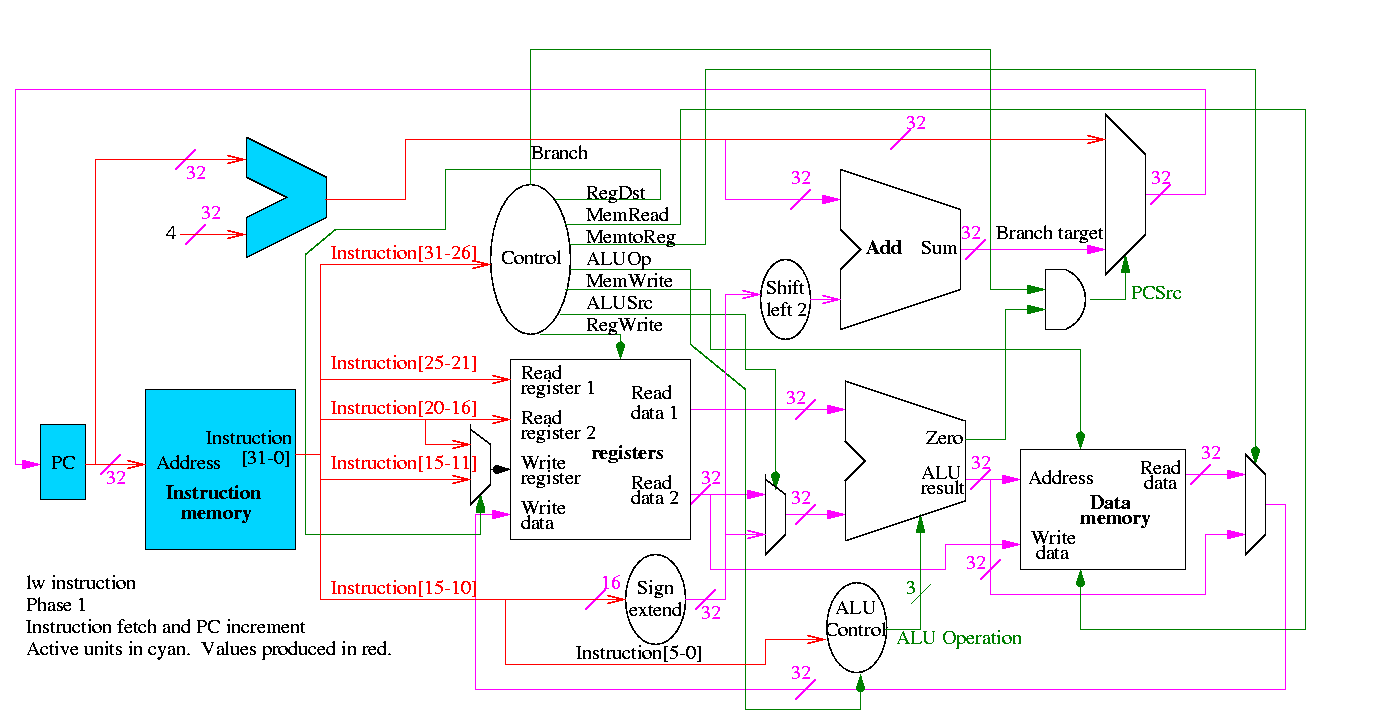

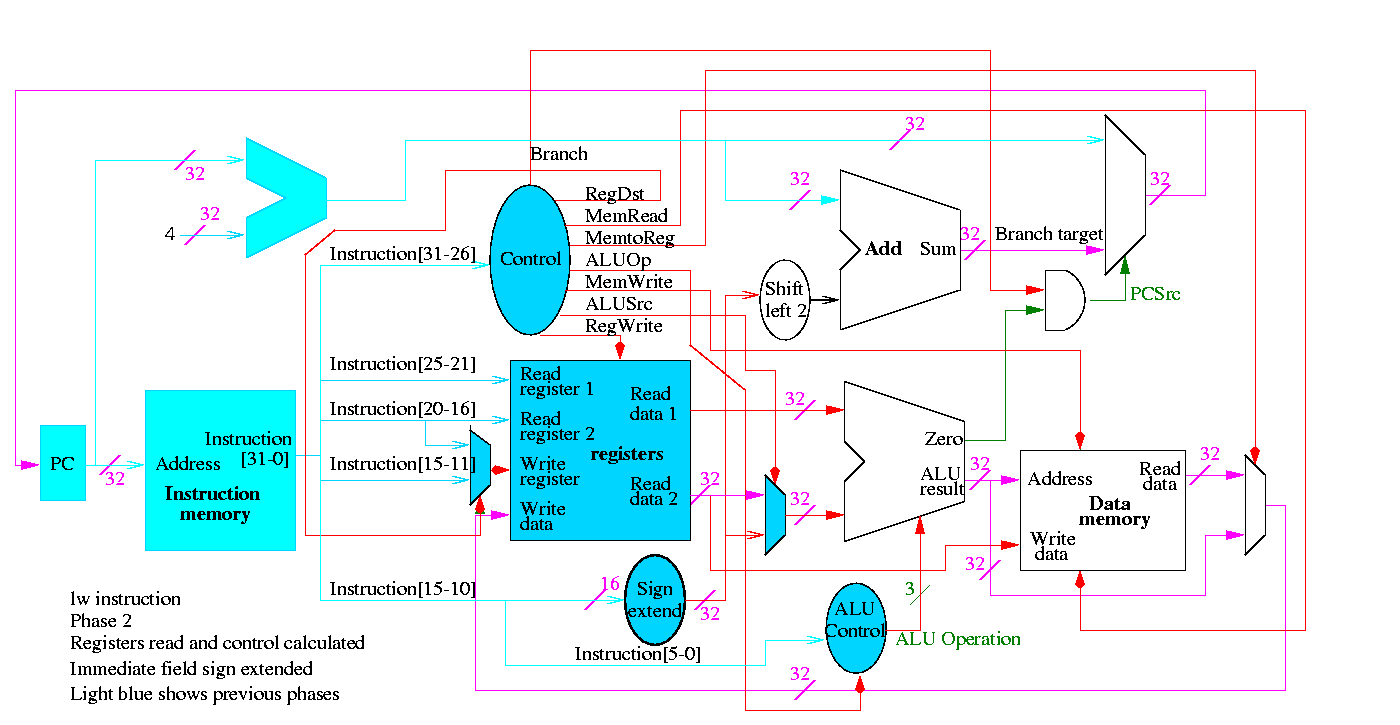

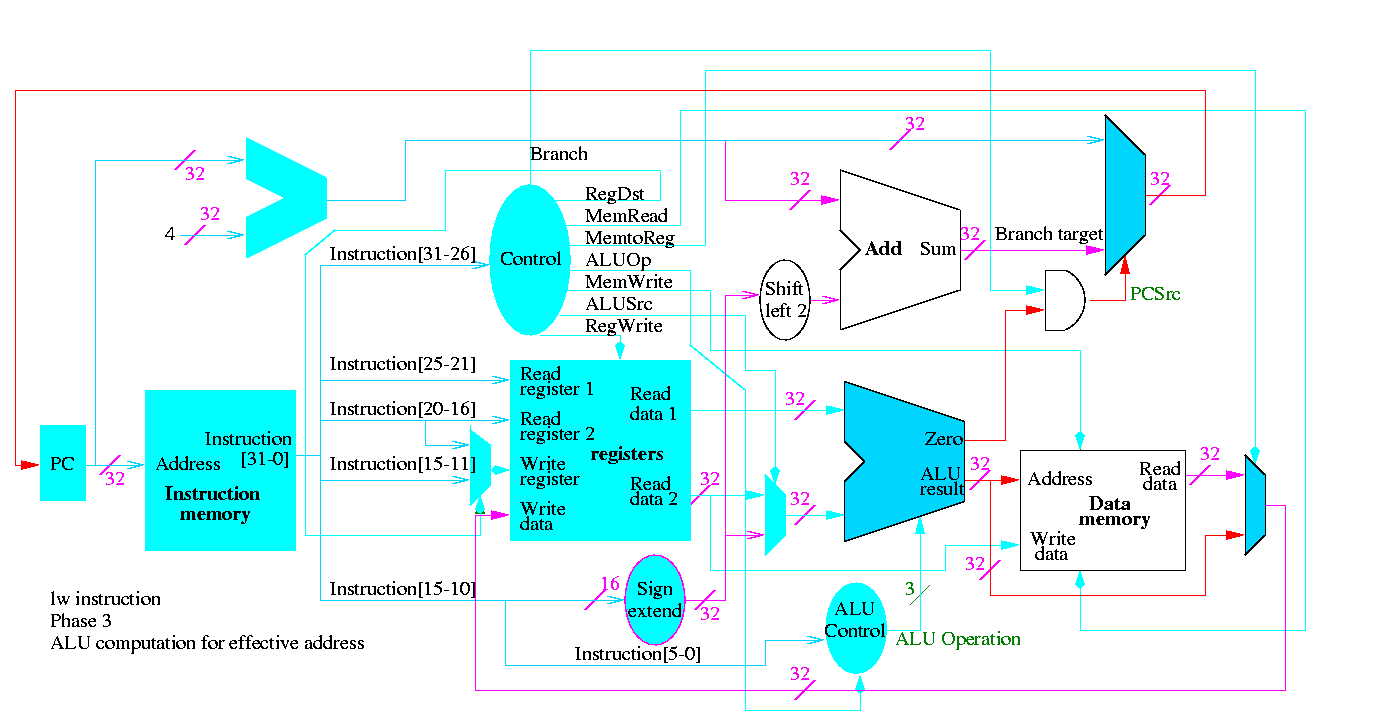

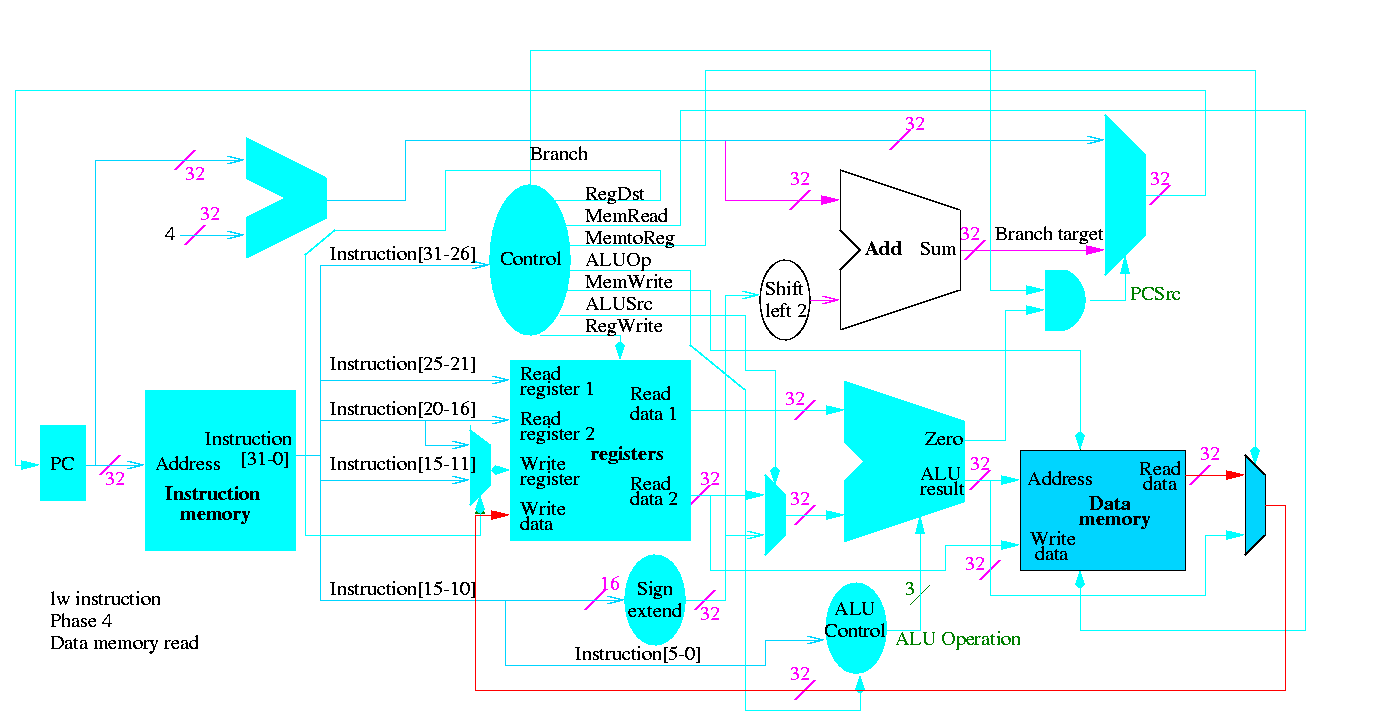

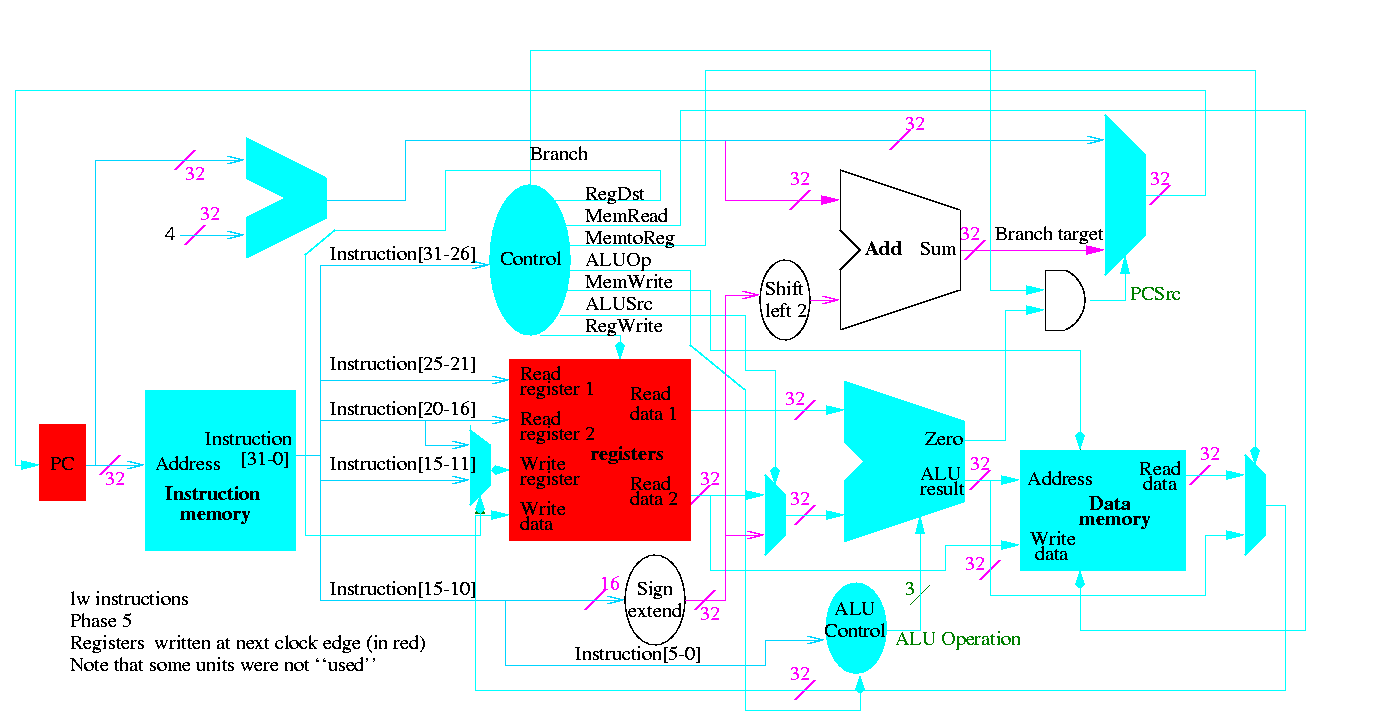

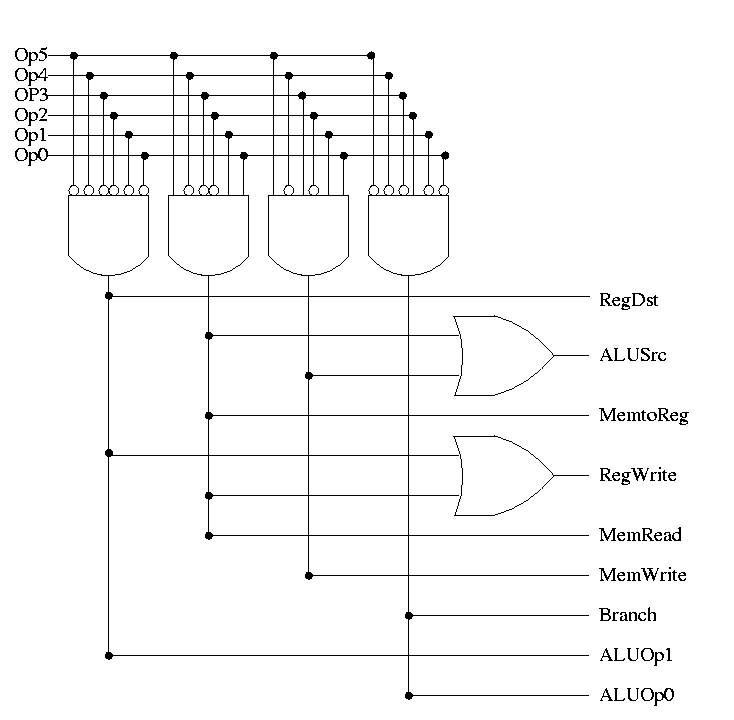

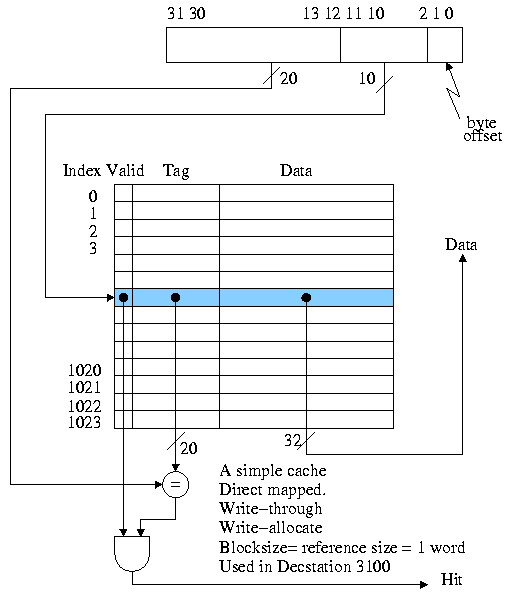

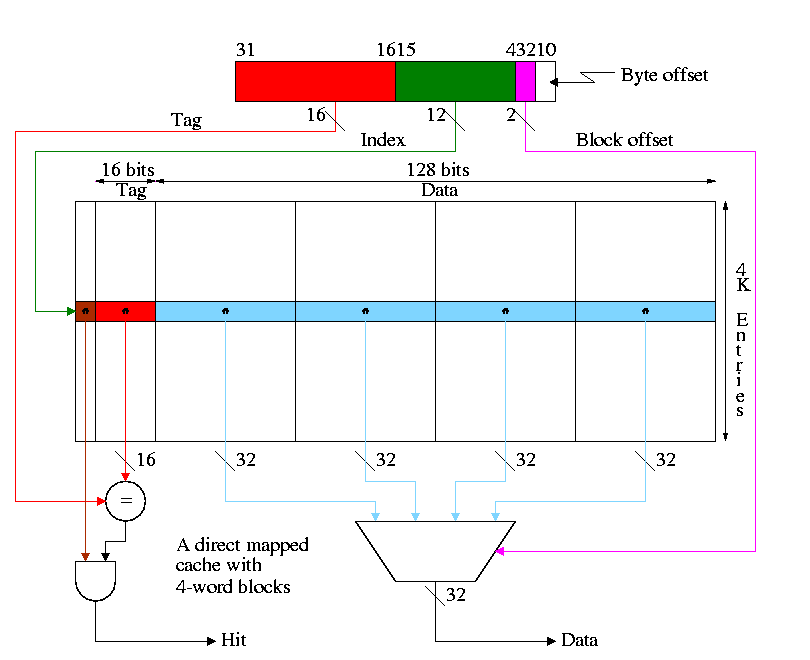

Homework: What would happen if the RegWrite line