Modern systems organize files in a hierarchy, i.e., a file system tree.

Files and directories normally have permissions.

Devices (mouse, tape drive, cdrom) are often viewed as ``special files''. In a Unix system these are normally found in the /dev directory. Some utilities that are normally applied to (ordinary) files can also be applied to some special files. For example, when you are accessing a Unix system and do not have anything serious going on (e.g., right after you log in), type the following command

cat /dev/mouse

and then move the mouse. You kill the cat by typing Ctrl-C. I tried this on my Linux box and no damage occurred. Your mileage may vary.
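The same point can be sketched in a program: the ordinary open/read/write interface works on a special file. This is a minimal sketch, assuming a Linux-like system where `/dev/zero` and `/dev/null` exist (they are used here instead of the mouse device because their behavior is predictable). The helper names are hypothetical.

```python
import os
import stat

def is_char_device(path):
    """Return True if path names a character special file."""
    return stat.S_ISCHR(os.stat(path).st_mode)

def read_some(path, nbytes):
    """Read up to nbytes from path using the ordinary file interface."""
    with open(path, "rb") as f:
        return f.read(nbytes)

def write_some(path, data):
    """Write data to path using the ordinary file interface."""
    with open(path, "wb") as f:
        return f.write(data)

if __name__ == "__main__":
    # Assumption: these device names exist on this system.
    print(is_char_device("/dev/null"))
    print(read_some("/dev/zero", 8))         # /dev/zero supplies zero bytes
    print(write_some("/dev/null", b"gone"))  # /dev/null discards what is written
```

Nothing in `read_some` or `write_some` knows that the path is a device; the OS hides that behind the file abstraction.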

Many systems have standard files that are automatically made available to a process upon startup. Their (initial) file descriptors are fixed.
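The descriptor numbers are not listed here. On Unix the convention is 0 for standard input, 1 for standard output, and 2 for standard error. The sketch below checks that convention by starting a fresh child process and asking it to report the descriptors it was given. The function name and the child command string are hypothetical.

```python
import subprocess
import sys

# One-line program for the child: report the descriptor behind each standard file.
CHILD = "import sys; print(sys.stdin.fileno(), sys.stdout.fileno(), sys.stderr.fileno())"

def standard_descriptors():
    """Start a new Python process and return the fds of its three standard files."""
    result = subprocess.run(
        [sys.executable, "-c", CHILD],
        stdin=subprocess.DEVNULL,   # give the child some standard input
        capture_output=True,        # collect what it writes to stdout/stderr
        text=True,
        check=True,
    )
    return [int(n) for n in result.stdout.split()]

if __name__ == "__main__":
    print(standard_descriptors())
```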

A convenience offered by some command interpreters is a pipe. The pipeline

ls | wc

will give the number of files in the directory (plus other info).
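What the command interpreter does for this pipeline can be sketched as follows: start both programs and connect the standard output of the first to the standard input of the second. This is a rough sketch, assuming `ls` and `wc` are installed; the function name is hypothetical.

```python
import subprocess

def ls_pipe_wc(directory="."):
    """Run the equivalent of `ls directory | wc` and return wc's counts."""
    ls = subprocess.Popen(["ls", directory], stdout=subprocess.PIPE)
    wc = subprocess.Popen(["wc"], stdin=ls.stdout, stdout=subprocess.PIPE)
    ls.stdout.close()          # the parent keeps no end of the pipe open
    out, _ = wc.communicate()  # wait for wc and collect its output
    ls.wait()
    # wc prints three numbers: lines, words, bytes
    return tuple(int(n) for n in out.split())

if __name__ == "__main__":
    lines, words, nbytes = ls_pipe_wc(".")
    print("entries:", lines)
```

The first number is the count of directory entries, since `ls` writes one name per line when its output is a pipe. The other two numbers are the "other info".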

Homework: 3

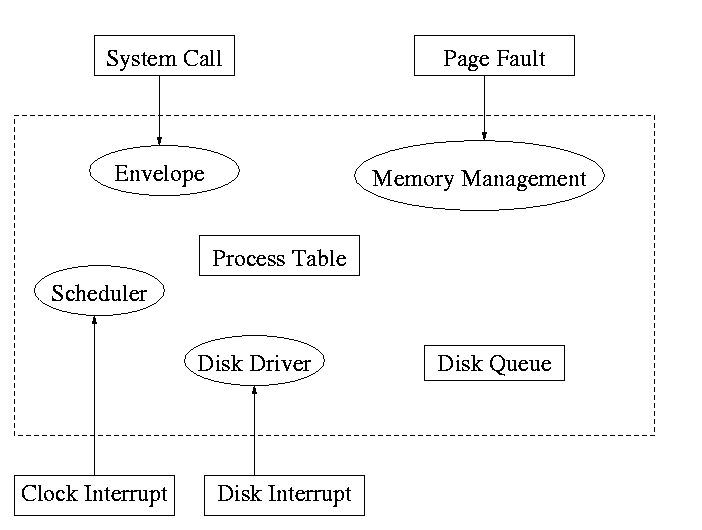

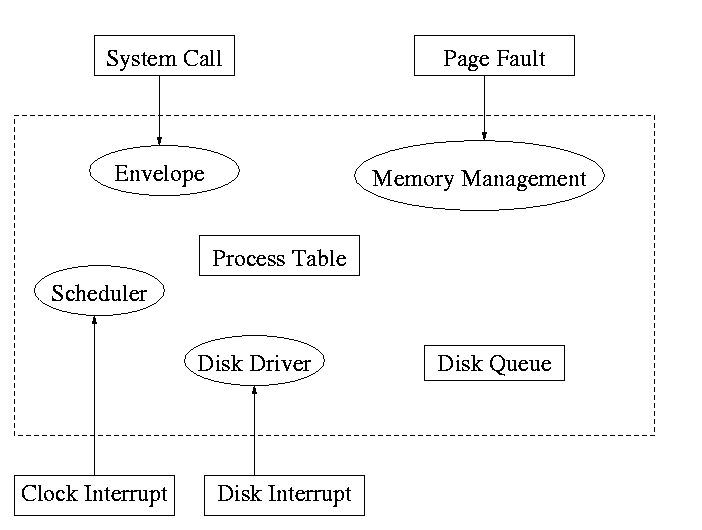

System calls are the way a user (i.e. program) directly interfaces with the OS. Some textbooks use the term envelope for the component of the OS responsible for fielding system calls and dispatching them. Here is a picture showing some of the components and the external events for which they are the interface.

What happens when a user writes a function call such as read()?
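As a concrete instance of such a call, here is a minimal sketch using Python's `os` module, whose `os.open`, `os.read`, and `os.close` functions are thin wrappers over the corresponding system calls. It shows only the user's side of the call, not the steps the OS performs; the function name is hypothetical.

```python
import os

def read_with_syscalls(path, nbytes):
    """Read at most nbytes from the start of path using low-level calls."""
    fd = os.open(path, os.O_RDONLY)  # ask the OS for a file descriptor
    try:
        return os.read(fd, nbytes)   # control enters the OS here and returns with the data
    finally:
        os.close(fd)                 # give the descriptor back

if __name__ == "__main__":
    print(read_with_syscalls(__file__, 20))
```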

Homework: 6

Assumed knowledge

Homework: 9.

I must note that Tanenbaum is a big advocate of the so-called microkernel approach, in which as much as possible is moved out of the (protected) microkernel into user-mode components.

In the early 90s this was popular. Digital Unix and Windows NT were examples. Digital Unix was based on Mach, a research OS from Carnegie Mellon University. Lately, the growing popularity of Linux, which has a monolithic kernel, has called the microkernel approach into question.

The previous picture: one big program

The system switches from user mode to kernel mode during the poof and then back when the OS does a ``return''.

But of course we can structure the system better, which brings us to layered systems.

Some systems have more layers and are more strictly structured.

An early layered system was ``THE'' operating system by Dijkstra. The layers were.

The layering was done by convention, i.e., there was no enforcement by hardware and the entire OS was linked together as one program. This is true of many modern operating systems as well (e.g., Linux).

The Multics system was layered in a more formal manner. The hardware provided several protection layers and the OS used them. That is, arbitrary code could not jump to or access data in a more protected layer.

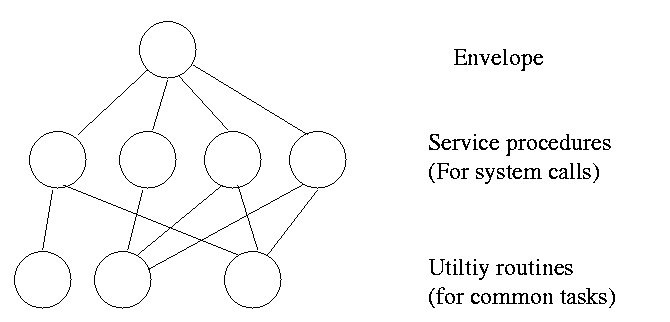

Use a ``hypervisor'' (beyond supervisor) to switch between multiple operating systems.

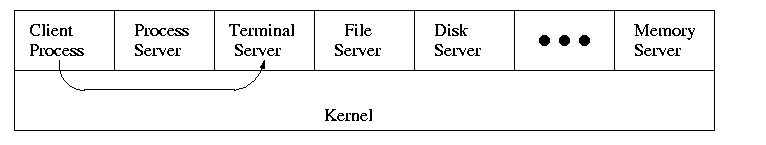

When implemented on one computer, a client-server OS is the microkernel approach in which the microkernel just supplies interprocess communication and the main OS functions are provided by a number of user-mode processes.

This does have advantages. For example, an error in the file server cannot corrupt memory in the process server. This makes errors easier to track down.

But it does mean that when a (real) user process makes a system call there are more switches from user to kernel mode and back. These are not free.
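The client-server arrangement can be imitated in miniature on a Unix system. In the sketch below a toy "file server" runs as a separate process with its own memory, and a client reaches it only by sending messages. Ordinary pipes stand in for the microkernel's interprocess communication. All names and the one-line-per-message protocol are hypothetical, and the sketch assumes `os.fork` is available.

```python
import os

def file_server(request_fd, reply_fd, files):
    """Toy user-mode server: each request line names a file; reply with its contents."""
    with os.fdopen(request_fd, "r") as requests, os.fdopen(reply_fd, "w") as replies:
        for line in requests:
            replies.write(files.get(line.strip(), "<no such file>") + "\n")
            replies.flush()

def start_server(files):
    """Fork the server; return its pid and the client's ends of the two pipes."""
    req_r, req_w = os.pipe()   # client -> server
    rep_r, rep_w = os.pipe()   # server -> client
    pid = os.fork()
    if pid == 0:               # child: becomes the server
        os.close(req_w)
        os.close(rep_r)
        try:
            file_server(req_r, rep_w, files)
        finally:
            os._exit(0)        # never fall back into the parent's code
    os.close(req_r)
    os.close(rep_w)
    return pid, os.fdopen(req_w, "w"), os.fdopen(rep_r, "r")

def client_read(requests, replies, name):
    """Send one request message and wait for the reply message."""
    requests.write(name + "\n")
    requests.flush()
    return replies.readline().rstrip("\n")

if __name__ == "__main__":
    pid, req, rep = start_server({"motd": "hello"})
    print(client_read(req, rep, "motd"))
    req.close()                # end of requests: the server sees EOF and exits
    os.waitpid(pid, 0)
    rep.close()
```

Each `client_read` involves a write and a read by the client plus a read and a write by the server, and every one of those enters and leaves the kernel. A single call into a monolithic OS would cross that boundary only once in each direction.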

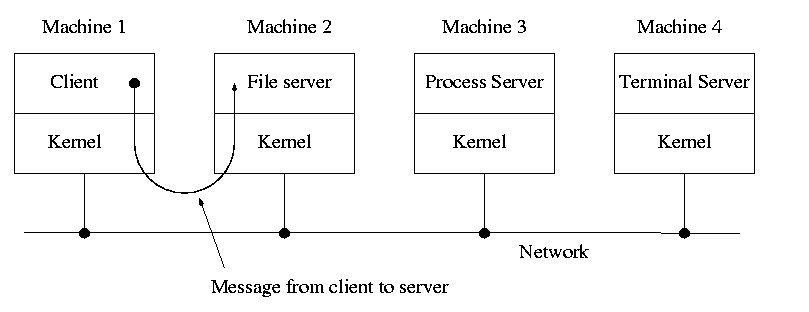

A distributed system can be thought of as an extension of the client-server concept where the servers are remote.

Homework: 11

Tanenbaum's chapter title is ``processes''. I prefer process management. The subject matter is processes, process scheduling, interrupt handling, and IPC (interprocess communication and coordination).

Definition: A process is a program in execution.

We are assuming a multiprogramming OS that automatically switches from one process to another. Sometimes this is called pseudoparallelism since one has the illusion of a parallel processor. The other possibility is real parallelism, in which two or more processes are actually running at once because the computer system is a parallel processor, i.e., has more than one processor. We do not study real parallelism (parallel processing, distributed systems, multiprocessors, etc.) in this course.
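Pseudoparallelism can be illustrated with a toy model: one "processor" runs a single step of one process, then switches to the next, so that all of them appear to advance together. This is only a sketch. Generators stand in for processes and the switch happens at every `yield`, whereas a real OS switches on events such as timer interrupts. The names are hypothetical.

```python
from collections import deque

def process(name, steps):
    """A toy 'process': each yield is a point where the OS may switch away."""
    for i in range(steps):
        yield f"{name}{i}"

def round_robin(processes):
    """Run one step of each ready process in turn until all have finished."""
    ready = deque(processes)
    trace = []
    while ready:
        current = ready.popleft()
        try:
            trace.append(next(current))  # the single processor runs one step
        except StopIteration:
            continue                     # process finished; do not requeue it
        ready.append(current)            # switch to the next ready process
    return trace

if __name__ == "__main__":
    print(round_robin([process("A", 3), process("B", 2)]))
```

Only one process runs at any instant, yet the trace interleaves steps of A and B, which is the illusion of a parallel processor.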