==== Start Lecture #4 ====

Notes on lab1

- If several processes are waiting on I/O, you may assume noninterference. For example, assume that on cycle 100 process A flips a coin and decides its wait is 6 units, and on the next cycle (101) process B flips a coin and decides its wait is 3 units. You do NOT have to alter process A. That is, process A becomes ready after cycle 106 (100+6) and so enters the ready list on cycle 107, while process B becomes ready after cycle 104 (101+3) and enters the ready list on cycle 105.

- For processor sharing (PS), which is part of the extra credit:

Every cycle, see how many jobs are in the ready queue (RQ). Say there are 7. Then during this cycle (with one exception, described below) each process gets 1/7 of a cycle.

EXCEPTION: Assume there are exactly 2 jobs in the RQ, one needing 1/3 cycle and one needing 1/2 cycle. Each gets half the CPU, so the process needing only 1/3 cycle gets only 1/3, i.e. it is finished after 2/3 cycle. The other process also gets 1/3 cycle during those first 2/3 cycle and then gets all of the CPU. Since it still needs 1/2 - 1/3 = 1/6 cycle, it finishes after 2/3 + 1/6 = 5/6 cycle. The last 1/6 cycle is not used by any process.
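The PS rule above, including the exception, can be simulated exactly with rational arithmetic. This is a hypothetical helper: the name `ps_cycle` and the dict-of-needs input are illustrative choices, not part of the lab specification, and it assumes no job enters the ready queue during the cycle (jobs only leave by finishing).

```python
from fractions import Fraction


def ps_cycle(needs):
    """Simulate one cycle of processor sharing.

    needs: job name -> remaining CPU time (in cycles) at the start of the cycle.
    Returns (finish, remaining, idle): the time within the cycle at which each
    finishing job completes, the leftover need of unfinished jobs, and the
    part of the cycle that no job used.
    """
    remaining = {job: Fraction(n) for job, n in needs.items()}
    finish = {}
    t = Fraction(0)
    while remaining and t < 1:
        k = len(remaining)                  # jobs currently sharing the CPU
        smallest = min(remaining.values())
        # At rate 1/k, the smallest job needs smallest * k of wall-clock time.
        if t + smallest * k <= 1:
            t += smallest * k
            for job in list(remaining):
                remaining[job] -= smallest
                if remaining[job] == 0:
                    finish[job] = t
                    del remaining[job]
        else:
            share = (1 - t) / k             # each job's part of the rest of the cycle
            for job in remaining:
                remaining[job] -= share
            t = Fraction(1)
    return finish, remaining, 1 - t


# The EXCEPTION example: A finishes at 2/3, B at 5/6, and 1/6 is idle.
finish, left, idle = ps_cycle({"A": Fraction(1, 3), "B": Fraction(1, 2)})
print(finish, idle)
```

Each pass of the loop advances time to the next job completion, which is exactly when the number of sharers (and hence everyone's rate) changes.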

Chapter 3: Memory Management

Also called storage management or space management.

Memory management must deal with the storage hierarchy present in modern machines.

- Registers, cache, central memory, disk, tape (backup)

- Move data from level to level of the hierarchy.

- How do we decide when to move data up?

- Fetch on demand (e.g. demand paging, which is popular now)

- prefetch

- Readahead for file I/O

- Large cache lines and pages

- Extreme example. Entire job present whenever running.
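To see why prefetching (e.g. readahead) can pay off, the hypothetical helper below counts how often a reader must go to the slower level of the hierarchy, with and without readahead. It assumes an unbounded cache and counts fetches only; it ignores transfer cost and the space wasted on prefetched blocks that are never used.

```python
def count_fetches(accesses, readahead=0):
    """Count fetches from the slower level for a sequence of block reads.

    accesses:  block numbers in the order they are read.
    readahead: extra consecutive blocks brought in with each fetch
               (0 means pure fetch on demand).
    """
    cached = set()            # assumed unbounded: nothing is ever evicted
    fetches = 0
    for block in accesses:
        if block not in cached:
            fetches += 1
            # Bring in the requested block plus the next `readahead` blocks.
            cached.update(range(block, block + readahead + 1))
    return fetches


sequential = range(100)
print(count_fetches(sequential))                    # 100: one fetch per block
print(count_fetches(sequential, readahead=7))       # 13: eight blocks per fetch
print(count_fetches([0, 50, 10, 90], readahead=7))  # 4: scattered reads gain nothing
```

Readahead helps only when the guess about the next access is right, which is why it suits sequential file I/O.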

We will see in the next few lectures that there are three independent decisions:

- Segmentation (or no segmentation)

- Paging (or no paging)

- Fetch on demand (or no fetching on demand)

Memory management implements address translations.

- Convert virtual addresses to physical addresses

- aka logical to real

- Virtual address is the address expressed in the program

- Physical address is the address understood by the computer

- The translation from virtual to physical addresses is performed by the Memory Management Unit (MMU).

- There is also the question of absolute vs. relocatable/relative addresses.

- The translation might be trivial (e.g. identity), but it is not in a modern general-purpose OS.

- The translation might be difficult (i.e., slow)

- Often includes addition/shifts/mask--not too bad

- Often includes memory references

- VERY serious

- Solution is to cache translations in a Translation Lookaside Buffer (TLB), sometimes called a translation buffer (TB).
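A minimal sketch of how a TLB hides slow translations. It assumes a paged translation (covered later in these notes) with a 4096-byte page, uses a dictionary to stand in for the in-memory translation tables, and uses LRU replacement in the TLB. All names are hypothetical; a real TLB is associative hardware, not a software cache.

```python
from collections import OrderedDict

PAGE_SIZE = 4096  # assumed page size for this sketch


class ToyMMU:
    """Slow table lookup fronted by a small LRU-managed TLB (hypothetical)."""

    def __init__(self, page_table, tlb_entries=4):
        self.page_table = page_table   # virtual page -> physical frame
        self.tlb = OrderedDict()       # cached translations, least recent first
        self.tlb_entries = tlb_entries
        self.hits = 0
        self.misses = 0

    def translate(self, vaddr):
        page, offset = divmod(vaddr, PAGE_SIZE)   # a shift and a mask in hardware
        if page in self.tlb:
            self.hits += 1
            self.tlb.move_to_end(page)            # now the most recently used
            frame = self.tlb[page]
        else:
            self.misses += 1
            frame = self.page_table[page]         # stands in for the memory reference(s)
            self.tlb[page] = frame
            if len(self.tlb) > self.tlb_entries:
                self.tlb.popitem(last=False)      # evict the least recently used entry
        return frame * PAGE_SIZE + offset


mmu = ToyMMU({0: 5, 1: 9, 2: 3}, tlb_entries=2)
for vaddr in (10, 4096 + 4, 20, 8192):
    print(vaddr, "->", mmu.translate(vaddr))
print("hits:", mmu.hits, "misses:", mmu.misses)   # hits: 1 misses: 3
```

Only misses pay for the table lookup, so the scheme works well when programs reuse a small set of pages.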

Homework: 7.

When is the address translation performed?

- At compile time

- Primitive

- Compiler generates physical addresses

- Requires knowledge of where compilation unit will be loaded

- Rarely used (MSDOS .COM files)

- At link-edit time

- Compiler

- generates relocatable addresses for each compilation unit

- references external addresses

- Linkage editor

- Converts the relocatable addr to absolute

- resolves external references

- Misnamed ld by Unix

- Also converts virtual to physical addresses by knowing where the linked program will be loaded. Unix ld does not do this.

- Loader is simple

- Hardware requirements are small

- A program can be loaded only where specified and cannot move once loaded.

- Not used much any more.

- At load time

- Same as linkage editor but does not fix the starting address

- Program can be loaded anywhere

- Program can move but cannot be split

- Need modest hardware: base/limit regs

- At execution time

- Dynamically during execution

- Hardware to perform the virtual to physical address translation quickly

- Currently dominates

- Much more information later
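Binding at link-edit or load time boils down to one fix-up pass over the program. The sketch below assumes a made-up object format (a list of integer words plus a table saying which words hold module-relative addresses); it is not the format of any real linker. Once fixed up, the absolute image only works at that address, which is why such a program cannot move. Execution-time binding instead leaves the image alone and has hardware add a base on every reference.

```python
def relocate(words, reloc_offsets, load_address):
    """Produce an absolute image from a relocatable one.

    words:         the module's image; some words hold addresses that are
                   relative to the start of the module (address 0).
    reloc_offsets: indices of exactly those address-holding words.
    load_address:  where the image will be placed in physical memory.
    """
    image = list(words)                # leave the relocatable copy intact
    for i in reloc_offsets:
        image[i] += load_address       # relative address -> absolute address
    return image


# Hypothetical 5-word module: words 1 and 3 hold addresses (3 and 0) inside it.
module = [11, 3, 12, 0, 99]
print(relocate(module, [1, 3], 1000))  # [11, 1003, 12, 1000, 99]
print(relocate(module, [1, 3], 5000))  # same module, different load address
```

Keeping the relocation table is what lets a load-time scheme place the same module anywhere.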

Extensions

- Dynamic Loading

- When executing a call check if loaded.

- If not loaded, call the linking loader to load it and update tables.

- Slows down calls (indirection) unless you rewrite code dynamically

- Dynamic Linking

- The traditional linking described above is now called static linking

- Frequently used routines are not linked into the program. Instead, just a stub is linked.

- If/when a routine is called, the stub checks to see if the real routine is loaded (it may have been loaded by another program).

- If not loaded, load it.

- If already loaded, share it. This needs some OS help so that different jobs sharing the library don't overwrite each other's private memory.

- Advantages of dynamic linking

- Saves space: the routine is in memory only once even when used many times

- A bug fix to a dynamically linked library fixes all applications that use that library, without having to relink the application.

- Disadvantages of dynamic linking

- New bugs in a dynamically linked library infect all applications that use it

- Applications ``change'' even when they haven't changed.
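The stub mechanism described above (and the "check if loaded" step of dynamic loading) can be sketched as follows. Everything here is hypothetical: `LOADED` plays the role of memory shared between jobs, `load_from_library` pretends to read code from a library, and the per-job private data that the OS must keep separate is not modeled. Real dynamic linkers differ considerably in detail.

```python
# Stands in for memory shared by all jobs: routine name -> loaded code.
LOADED = {}
library_reads = []  # names actually read from the library, in order


def load_from_library(name):
    """Hypothetical loader: pretend to read `name` from a shared library file."""
    library_reads.append(name)
    routines = {"square": lambda x: x * x, "cube": lambda x: x ** 3}
    return routines[name]


def make_stub(name):
    """Build the small stub that is linked into a program in place of `name`."""
    target = None

    def stub(*args):
        nonlocal target
        if target is None:                 # first call through this stub
            if name not in LOADED:         # no program has loaded it yet
                LOADED[name] = load_from_library(name)
            target = LOADED[name]          # share the single loaded copy
        return target(*args)

    return stub


# Two "programs", each with its own stub for the same library routine.
square_in_a = make_stub("square")
square_in_b = make_stub("square")
print(square_in_a(3), square_in_b(4))  # 9 16
print(library_reads)                   # ['square']: loaded once, shared
```

After the first call, each stub remembers the target, so later calls pay only for the extra level of indirection.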

Note: I will place ** before each memory management scheme.

3.1: Memory management without swapping or paging

Job remains in memory from start to finish

Sum of memory requirements of jobs in system cannot exceed size of physical memory.

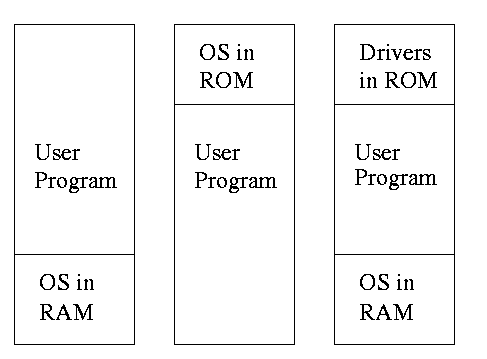

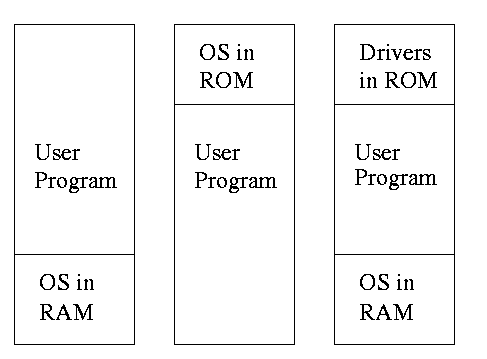

** 3.1.1: Monoprogramming without swapping or paging (Single User)

The ``good old days'' when everything was easy.

- No address translation done by OS (i.e. not done dynamically)

- Either reload the OS for each job (or don't have an OS, which is almost the same) or protect the OS from the job.

- One way to protect (part of) the OS is to have it in ROM.

- Of course, the OS's data must still be in RAM

- Can have a separate OS address space only accessed in supervisor mode.

- Use overlays (managed by the user) if job memory exceeds physical memory.

- Programmer breaks program into pieces.

- A ``root'' piece is always memory resident.

- The root contains calls to load and unload various pieces.

- It is the programmer's responsibility to ensure that a piece is already loaded when it is called.

- No longer used, but we couldn't have gotten to the moon in the 60s without it (I think).

- Overlays were replaced by dynamic address translation and other features (e.g. demand paging) that let the system support logical address sizes greater than physical address sizes.

- Fred Brooks (leader of IBM's OS/360 project, author of ``The Mythical Man-Month'') remarked that the OS/360 linkage editor was terrific, especially in its support for overlays, but by the time it came out, overlays were no longer used.
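A toy model of the overlay scheme, assuming a single overlay region shared by all non-root pieces (real overlay structures could be trees with several regions). The class `OverlayRoot` and the piece names are hypothetical. The point is that the system does nothing to prevent a call into a piece that is not resident, so the check below can only report the programmer's mistake.

```python
class OverlayRoot:
    """The always-resident root piece, owning a single overlay region."""

    def __init__(self, pieces):
        self.pieces = pieces     # piece name -> {routine name: function}, "on disk"
        self.resident = None     # piece currently occupying the overlay region
        self.loads = 0

    def load(self, name):
        # Loading a piece overwrites whichever piece was in the region.
        if self.resident != name:
            self.resident = name
            self.loads += 1

    def call(self, name, routine, *args):
        # Programmer's responsibility: the piece must already be loaded.
        if self.resident != name:
            raise RuntimeError(f"overlay {name!r} is not loaded")
        return self.pieces[name][routine](*args)


root = OverlayRoot({
    "pass1": {"parse": lambda text: text.split()},
    "pass2": {"count": lambda tokens: len(tokens)},
})
root.load("pass1")
tokens = root.call("pass1", "parse", "a b c")
root.load("pass2")                          # pass1 is overwritten here
print(root.call("pass2", "count", tokens))  # 3
```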

3.1.2: Multiprogramming

Goal is to improve CPU utilization by overlapping CPU and I/O.

- Let p be the fraction of time a job spends waiting for I/O when run alone.

- Then for monoprogramming the CPU utilization is 1-p.

- Note that p is often > 0.5, so CPU utilization is poor.

- But with a multiprogramming level (MPL) of n, the CPU utilization is approximately 1-p^n.

- The idea is that if the probability that a job is waiting for I/O is p and n jobs are in memory, then the probability that all n are waiting for I/O is p^n (assuming the jobs wait independently).

- This is a crude model, but it is correct that increasing the MPL does increase CPU utilization.

- The limitation is memory, which is why we discuss it here instead of under process management.

- Some of the CPU utilization is time spent in the OS executing context switches.
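Plugging numbers into the 1-p^n model shows how quickly utilization rises with the MPL. This small sketch keeps the model's independence assumption; `mpl_needed` is a hypothetical helper that simply inverts the formula and is an illustration, not a sizing tool. With p = 0.8, one job keeps the CPU 20% busy, four jobs about 59%, and 11 jobs are needed to pass 90%.

```python
import math


def cpu_utilization(p, n):
    """Crude model: the CPU idles only when all n jobs wait for I/O at once."""
    return 1 - p ** n


def mpl_needed(p, target):
    """Smallest n with 1 - p**n >= target (requires 0 < p < 1, 0 <= target < 1)."""
    return math.ceil(math.log(1 - target) / math.log(p))


for n in (1, 2, 4, 8):
    print(f"n={n}: utilization {cpu_utilization(0.8, n):.2f}")
print("MPL for 90% utilization:", mpl_needed(0.8, 0.9))
```

Each extra job helps less than the one before, since it can only fill idle time that the others have left.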

Homework: 1, 3.

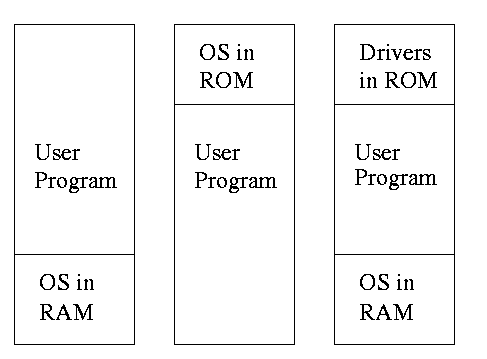

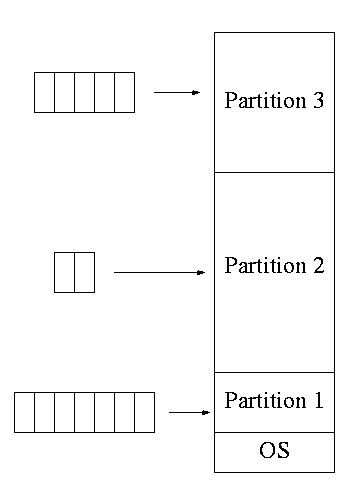

3.1.3: Multiprogramming with fixed partitions

- This was used by IBM for System/360 OS/MFT (multiprogramming with a fixed number of tasks)

- Can have a single input queue instead of one for each partition

- So that if there are no big jobs, the big partition can be used for little jobs

- But I don't think IBM did this

- Can think of the input queue(s) as the ready list(s)

- The partition boundaries are not movable (reboot to move)

- Each job has a single ``segment'' (we will discuss segments later)

- No sharing between jobs

- No dynamic address translation

- At load time must ``establish addressability''

- i.e. must get a base register set to the location at which the job was loaded (the bottom of the partition).

- The base register is part of the programmer-visible register set.

- This is address translation during load time

- Also called relocation

- Storage keys are adequate for protection (IBM method)

- Alternative protection method is base/limit registers

- An advantage of base/limit is that it is easier to move a job

- But MFT didn't move jobs

- Tanenbaum says jobs were ``run to completion''. This must be wrong.

- He probably meant that jobs were not swapped out and each queue is FCFS without preemption.

- MFT can have large internal fragmentation, i.e. wasted space inside a region
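The cost of internal fragmentation is easy to see in a small simulation. This is a hypothetical sketch: the smallest-fitting-partition policy and the rule that a blocked job does not hold up the jobs behind it are assumptions for illustration, not a description of how OS/MFT actually scheduled. In the example, D must wait even though 290 units sit unused inside the occupied partitions.

```python
def place_jobs(partitions, jobs):
    """Load jobs into fixed partitions from a single input queue.

    partitions: partition sizes, fixed when the system is booted.
    jobs:       (name, size) pairs in arrival order.
    Each job goes into the smallest free partition that can hold it; a job
    that fits nowhere keeps waiting while later jobs are still considered.
    Returns (placement, waiting, internal_fragmentation).
    """
    free = sorted(range(len(partitions)), key=lambda i: partitions[i])
    placement, waiting, wasted = {}, [], 0
    for name, size in jobs:
        fit = next((i for i in free if partitions[i] >= size), None)
        if fit is None:
            waiting.append(name)           # stays in the input queue
            continue
        free.remove(fit)
        placement[name] = fit
        wasted += partitions[fit] - size   # unused space inside the partition
    return placement, waiting, wasted


placement, waiting, wasted = place_jobs(
    [100, 200, 400], [("A", 50), ("B", 60), ("C", 300), ("D", 90)])
print(placement, waiting, wasted)  # {'A': 0, 'B': 1, 'C': 2} ['D'] 290
```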

3.2: Swapping

Moving entire jobs between disk and memory is called swapping.

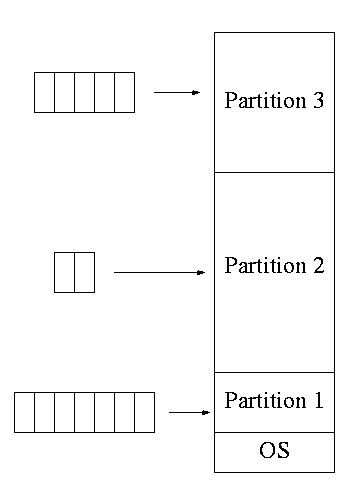

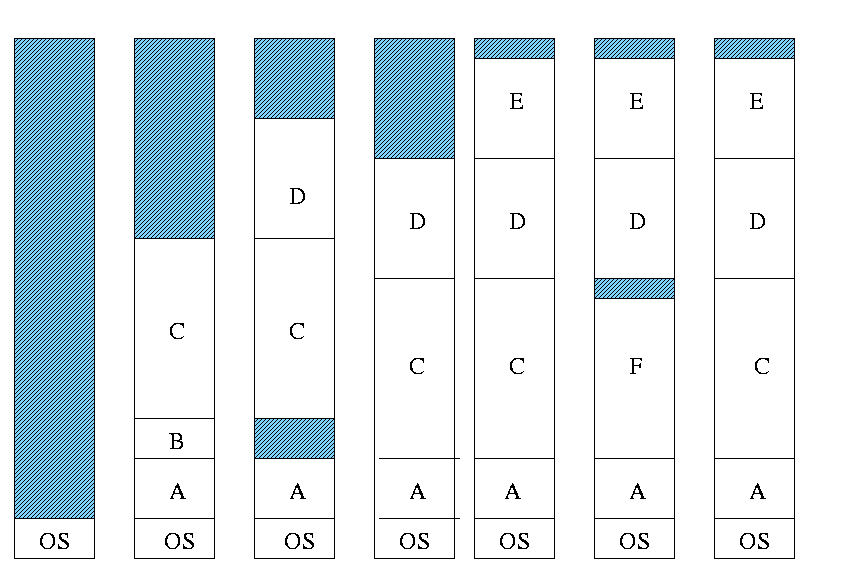

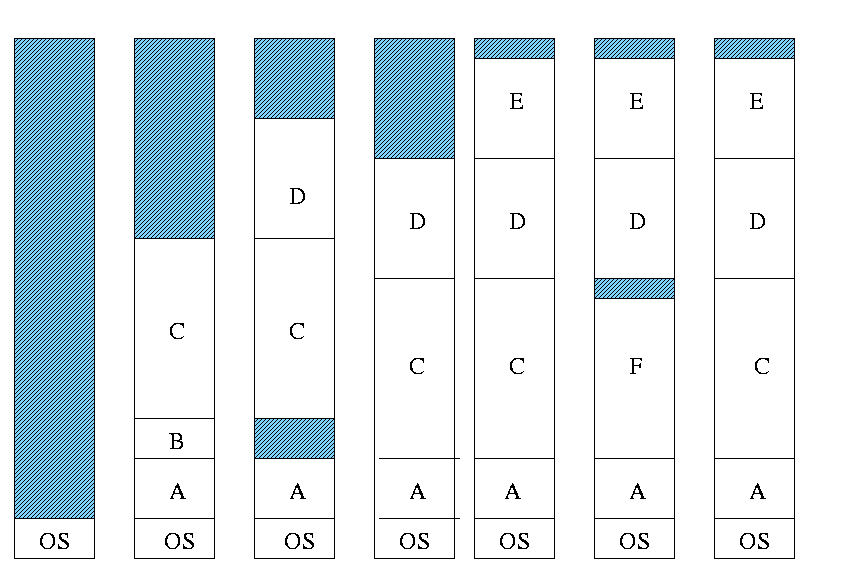

3.2.1: Multiprogramming with variable partitions

- Both the number and size of the partitions change with time.

- IBM OS/MVT (multiprogramming with a varying number of tasks)

- Also early PDP-10 OS

- Job still has only one segment (as with MFT) but now can be of any size

- Single ready list

- Job can move (might be swapped back in a different place)

- This is dynamic address translation (during run time).

- Perform an addition on every memory reference (i.e. on every address translation)

- Called a DAT box by IBM

- Eliminates internal fragmentation

- Find a region of exactly the right size (leave a hole for the remainder)

- Not quite true: you can't get a piece with exactly 10A755 (hex) bytes; you would get, say, 10A760. But internal fragmentation is much reduced compared to MFT.

- Introduces external fragmentation, i.e. holes outside any region.

- What do you do if no hole is big enough for request?

- Can compactify

- Transition from bar 3 to bar 4 in the diagram above

- This is expensive

- Not suitable for real time (MIT ping pong)

- Can swap out one process to bring in another

- Bars 5-6 and 6-7 in diagram

- There are more processes than holes. Why?

- Because next to a process there might be a process or a hole, but next to a hole there must be a process

- So can have ``runs'' of processes but not of holes

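- The argument above only shows that holes cannot outnumber processes (by more than one). The stronger claim, that there are about twice as many processes as holes, is Knuth's fifty-percent rule, which holds on average in equilibrium.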

- Base and limit registers are used

- Storage keys are not good, since compactifying would require changing many keys

- Storage keys might need a fine granularity to permit the boundaries to move by small amounts. Hence many keys would need to be changed
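The MVT ideas above (holes, first fit, external fragmentation, compaction, and base/limit translation) fit in one toy model. This is a hypothetical sketch: first fit is only one possible placement policy, and `compact` here merely updates each job's base, whereas a real system must also copy every job's memory, which is what makes compaction expensive.

```python
class ProtectionFault(Exception):
    """Raised when a job references an address beyond its limit."""


class VariablePartitions:
    """Toy MVT-style memory: one contiguous region per job, first-fit holes."""

    def __init__(self, size):
        self.size = size
        self.jobs = {}                          # name -> (base, limit)

    def holes(self):
        """Free gaps between allocated regions, as (start, length) pairs."""
        gaps, cursor = [], 0
        for base, limit in sorted(self.jobs.values()):
            if base > cursor:
                gaps.append((cursor, base - cursor))
            cursor = base + limit
        if cursor < self.size:
            gaps.append((cursor, self.size - cursor))
        return gaps

    def allocate(self, name, size):
        """First fit: carve the job from the front of the first big-enough hole."""
        for start, length in self.holes():
            if length >= size:
                self.jobs[name] = (start, size)  # any remainder stays a hole
                return start
        return None                              # no hole is big enough

    def free(self, name):
        del self.jobs[name]

    def compact(self):
        """Slide every job toward address 0 so the free space forms one hole."""
        cursor = 0
        for name, (base, limit) in sorted(self.jobs.items(), key=lambda kv: kv[1][0]):
            self.jobs[name] = (cursor, limit)    # moving a job changes only its base
            cursor += limit

    def translate(self, name, vaddr):
        """What the DAT box does on every reference: check the limit, add the base."""
        base, limit = self.jobs[name]
        if not 0 <= vaddr < limit:
            raise ProtectionFault(f"address {vaddr} outside job {name!r}")
        return base + vaddr


mem = VariablePartitions(1000)
for job, size in (("A", 300), ("B", 200), ("C", 300)):
    mem.allocate(job, size)
mem.free("B")
print(mem.holes())              # [(300, 200), (800, 200)]
print(mem.allocate("D", 350))   # None: 400 free, but no single hole fits
mem.compact()
print(mem.allocate("D", 350))   # 600
print(mem.translate("C", 10))   # 310, since C now starts at 300
```

Because every reference goes through `translate`, moving a job only requires changing its base; with storage keys, the keys for every moved block would have to change instead.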